How to Use Multithreading in FastAPI?

This article will explore the usage of multi-threading in the FastAPI framework, covering common use cases, problem-solving approaches, and practical examples.

In modern web applications, high performance and fast responsiveness are crucial. Python's FastAPI framework has become the preferred choice for many developers due to its outstanding performance and user-friendly features.

In Python, a thread is a separate flow of execution that can run concurrently with other threads. Each thread operates independently, and multiple threads can be created and executed within a single program. This is in contrast to the traditional sequential execution, where tasks are executed one after the other.
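The sketch below shows this with nothing but the standard library `threading` module. The `worker` function, the thread names, and the delays are illustrative assumptions. Both threads wait at the same time, so thread-B usually finishes first even though it was started second.

```python
import threading
import time

finished = []  # records the order in which the threads complete


def worker(name: str, delay: float) -> None:
    print(f"{name} started")
    time.sleep(delay)  # stand-in for real work, e.g. waiting on a network reply
    finished.append(name)
    print(f"{name} finished")


# Creating a Thread object does not run it; start() does
threads = [
    threading.Thread(target=worker, args=("thread-A", 0.2)),
    threading.Thread(target=worker, args=("thread-B", 0.1)),
]
for t in threads:
    t.start()  # both threads now run concurrently with the main thread
for t in threads:
    t.join()  # block until each thread has finished

print(finished)
```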

In some scenarios, single-threaded execution is not enough, and multithreading can be used to increase an application's concurrency.

Concurrency, Parallelism and Multithreading in Python

Concurrency, parallelism, and multithreading are related but distinct concepts in Python programming. Here's an overview of each:

Concurrency: Concurrency refers to the ability of a program to have multiple tasks in progress during overlapping periods of time, interleaving their execution instead of finishing one task before starting the next. It concerns the design and structure of programs that handle multiple tasks or events at once, whether or not those tasks literally execute at the same instant. Concurrency is the broader concept; parallelism and multithreading are both ways of achieving it.

Parallelism: Parallelism refers to the simultaneous execution of multiple tasks or operations. In a truly parallel system, multiple processors or cores physically execute multiple tasks at the same instant. However, in CPython (the standard Python interpreter), the Global Interpreter Lock (GIL) allows only one thread to execute Python bytecode at a time, so pure-Python code cannot run in parallel across threads within a single process. Extension code that releases the GIL, such as many NumPy operations, is an exception.

Multithreading: Multithreading is a way of achieving concurrency within a single process. A thread is a lightweight unit of execution within a process, and by creating multiple threads, a program can split its workload and perform different tasks concurrently. Although Python's GIL prevents true parallelism within a single process, multithreading can still provide performance benefits for certain types of tasks, such as I/O operations, network requests, or database queries.
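The I/O-bound case can be demonstrated with the standard library's `concurrent.futures.ThreadPoolExecutor`. In this sketch, `fake_io_call` is a hypothetical stand-in for a network or database call, simulated with `time.sleep`, which releases the GIL while it waits. Five sequential calls should take roughly one second, while the threaded version overlaps the waits and should finish in roughly 0.2 seconds.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_io_call(i: int) -> int:
    # Hypothetical stand-in for a network or database round-trip;
    # time.sleep releases the GIL just as real blocking I/O does
    time.sleep(0.2)
    return i * 2


def run_sequential(n: int) -> list[int]:
    return [fake_io_call(i) for i in range(n)]


def run_threaded(n: int) -> list[int]:
    # One worker thread per call, so all the waits overlap
    with ThreadPoolExecutor(max_workers=n) as pool:
        return list(pool.map(fake_io_call, range(n)))  # map preserves input order


start = time.perf_counter()
seq = run_sequential(5)
seq_time = time.perf_counter() - start

start = time.perf_counter()
thr = run_threaded(5)
thr_time = time.perf_counter() - start

print(f"sequential: {seq_time:.2f}s, threaded: {thr_time:.2f}s")
```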

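In the context of FastAPI, multithreading is useful for offloading blocking operations (e.g., file I/O, network requests, synchronous database drivers) so that they do not stall other requests. FastAPI already does part of this for you: path operation functions declared with a plain def are run in an external threadpool, while async def functions run directly on the event loop, so a blocking call inside an async def function holds up every other request until it finishes. Moving such calls to separate threads keeps the event loop free to serve other requests, which improves the overall responsiveness of the application.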

However, it's important to note that multithreading in Python is not a silver bullet for performance optimization. For CPU-bound tasks that involve heavy computations or number crunching, multithreading may not provide significant performance benefits due to the GIL. In such cases, other approaches like multiprocessing or using libraries like NumPy or Numba, which can leverage parallel computation on multi-core systems, may be more appropriate.
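For the CPU-bound case, the standard library's `concurrent.futures.ProcessPoolExecutor` is one way to apply multiprocessing. This is a sketch: `count_primes` is a hypothetical, deliberately slow workload. Each call runs in a separate process with its own interpreter and GIL, so the calls can occupy several CPU cores at once.

```python
from concurrent.futures import ProcessPoolExecutor


def count_primes(limit: int) -> int:
    """CPU-bound: count the primes below `limit` by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def count_primes_in_parallel(limits: list[int]) -> list[int]:
    # Each task runs in its own worker process, sidestepping the GIL
    with ProcessPoolExecutor() as pool:
        return list(pool.map(count_primes, limits))


if __name__ == "__main__":
    # The guard is required where worker processes re-import this module
    # (e.g. the "spawn" start method, the default on Windows and macOS)
    print(count_primes_in_parallel([20_000, 30_000, 40_000, 50_000]))
```

Inside an async def endpoint, a function like this could be handed to a process pool with asyncio's `loop.run_in_executor(pool, count_primes, n)` so that the event loop is not blocked while it runs.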

How to Use Multithreading in FastAPI?

Step 1. Creating Threads in Path Operation Functions

You can directly create threads using the threading module within path operation functions.

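```python
import threading
import time

from fastapi import FastAPI

app = FastAPI()


def do_work():
    # Perform blocking (e.g., I/O-bound) work here; time.sleep is a placeholder
    time.sleep(5)


@app.get("/resource")
def get_resource():
    # Start the work in a separate thread and return without waiting for it
    t = threading.Thread(target=do_work)
    t.start()
    return {"message": "Thread started"}
```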

Step 2. Utilizing Background Tasks

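FastAPI's @app.on_event("startup") decorator registers a function that runs once when the application starts, which you can use to launch a long-running background thread. (In recent FastAPI versions, on_event is deprecated in favor of lifespan handlers.)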

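```python
import threading
import time

from fastapi import FastAPI

app = FastAPI()


def do_work():
    while True:
        # Perform background work here; time.sleep is a placeholder
        time.sleep(1)


@app.on_event("startup")
def startup_event():
    # daemon=True lets the process exit without waiting for this infinite loop
    threading.Thread(target=do_work, daemon=True).start()
```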

Step 3. Using Third-Party Background Task Libraries

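You can use third-party libraries such as APScheduler to run periodic background tasks. Its BackgroundScheduler runs scheduled jobs in its own pool of worker threads, separate from the code handling requests.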

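```python
# Uses the APScheduler 3.x API
from apscheduler.schedulers.background import BackgroundScheduler
from fastapi import FastAPI

app = FastAPI()
scheduler = BackgroundScheduler()


def do_work():
    # Perform periodic work here
    print("Running periodic job")


@app.on_event("startup")
def start_background_processes():
    # Run do_work every 5 seconds in the scheduler's worker threads
    scheduler.add_job(do_work, "interval", seconds=5)
    scheduler.start()


@app.on_event("shutdown")
def stop_background_processes():
    # Stop the scheduler so its threads do not outlive the application
    scheduler.shutdown()
```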

Practice Example

Installing Required Tools

First, ensure that you have Python and pip installed. Then, use the following command to install FastAPI and uvicorn:

```bash
pip install fastapi uvicorn
```

Writing a Multi-threaded Application

Let's create a simple FastAPI application:

```python
from fastapi import FastAPI
import threading
import time

app = FastAPI()


# Time-consuming task function
def long_running_task(task_id: int):
    print(f"Starting Task {task_id}")
    time.sleep(5)  # Simulate task execution time
    print(f"Finished Task {task_id}")


# Background task function
def run_background_task(task_id: int):
    thread = threading.Thread(target=long_running_task, args=(task_id,))
    thread.start()


@app.get("/")
async def read_root():
    return {"Hello": "World"}


@app.get("/task/{task_id}")
async def run_task_in_background(task_id: int):
    # Create and start a background thread to run the task
    run_background_task(task_id)
    return {"message": f"Task {task_id} is running in the background."}


if __name__ == "__main__":
    import uvicorn

    uvicorn.run(app, host="127.0.0.1", port=8000)
```

In this example, we use the threading.Thread class to create a background thread for running the time-consuming task. Inside the run_background_task function, we create a thread object thread with long_running_task as its target function, passing task_id as an argument. Then, we call thread.start() to launch a new thread and run long_running_task in the background.

Running the Application

Assuming the code is saved as main.py, use the following command to run the FastAPI application:

```bash
uvicorn main:app --reload
```

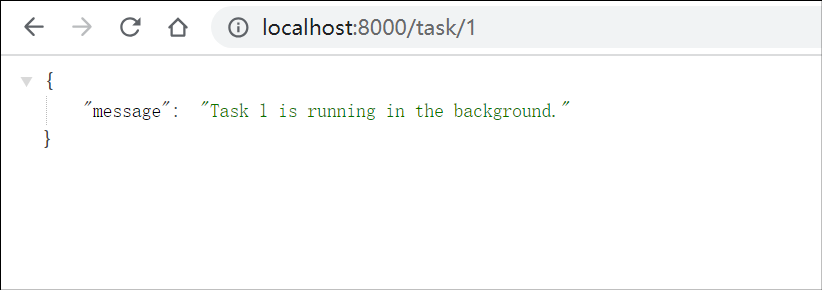

Now you can test the application using a browser or a tool like curl. For instance, open a browser, type http://localhost:8000/task/1, and hit Enter. You will immediately receive the response {"message": "Task 1 is running in the background."}. Meanwhile, the terminal running uvicorn prints "Starting Task 1" right away and "Finished Task 1" about 5 seconds later. While the task runs, you can keep sending other task requests.

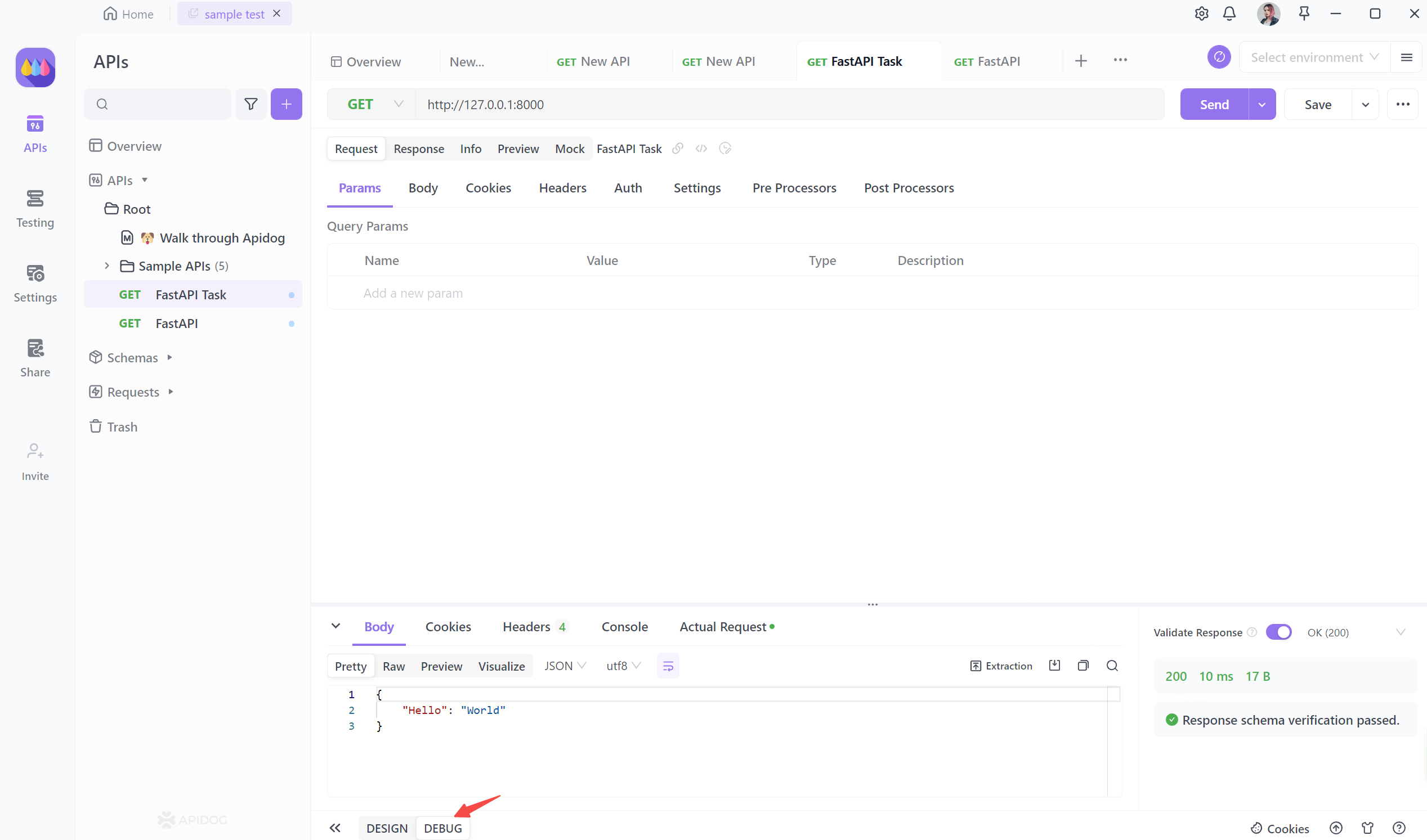

Test FastAPI API with Apidog

Apidog is an all-in-one API collaboration platform that integrates API documentation, API debugging, API mocking, and API automation testing. With Apidog, we can easily debug FastAPI interfaces.

To quickly debug an API endpoint, create a new project and select "DEBUG". Fill in the request address, and you can send the request and view the response, as shown in the screenshot above.

Tips for FastAPI Multithreading

Multi-threading can be a powerful tool for optimizing code execution, but it also comes with its own set of challenges and considerations.

- Identify CPU-bound vs I/O-bound tasks: Before implementing multi-threading, it is important to identify the nature of the tasks in your FastAPI application. CPU-bound tasks are those that heavily rely on computational power, while I/O-bound tasks involve waiting for external resources like databases or APIs. Multi-threading is most effective for I/O-bound tasks, as it allows the application to perform other operations while waiting for the I/O operations to complete.

- Use an appropriate number of threads: The number of threads used in your FastAPI application should be carefully chosen. While it may be tempting to use a large number of threads, excessive thread creation can lead to resource contention and decreased performance. It is recommended to perform load testing and experimentation to determine the optimal number of threads for your specific application.

- Avoid shared mutable state: Multi-threading introduces the potential for race conditions and data inconsistencies when multiple threads access shared mutable state. To avoid such issues, it is important to design your FastAPI application in a way that minimizes shared mutable state. Use thread-safe data structures and synchronization mechanisms like locks or semaphores when necessary.

- Consider the Global Interpreter Lock (GIL): Python's Global Interpreter Lock (GIL) ensures that only one thread can execute Python bytecode at a time. This means that multi-threading in Python may not provide true parallelism for CPU-bound tasks. However, the GIL is released during I/O operations, allowing multi-threading to be effective for I/O-bound tasks. Consider using multiprocessing instead of multi-threading for CPU-bound tasks if parallelism is crucial.

- Implement error handling and graceful shutdown: When using multi-threading in FastAPI, handle errors explicitly and make sure threads are shut down properly. An unhandled exception in a worker thread never reaches the request handler: a plain threading.Thread simply dies and prints a traceback to stderr, and a task submitted to concurrent.futures.ThreadPoolExecutor stores its exception in the Future until you call result(), so failures can go unnoticed. Catch and log exceptions inside thread targets (or check future results), and use tools like concurrent.futures.ThreadPoolExecutor to gracefully shut down threads when they are no longer needed.

- Monitor and optimize thread performance: It is crucial to monitor the performance of your multi-threaded FastAPI application and identify any bottlenecks or areas for improvement. Use tools like profilers and performance monitoring libraries to identify areas of high CPU usage or excessive thread contention. Optimize your code and thread usage based on the insights gained from monitoring.
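Several of these tips can be combined in one standard-library sketch: a bounded `ThreadPoolExecutor`, a `threading.Lock` protecting shared mutable state, and exceptions surfaced through `Future.result()` instead of being lost. The names `counter`, `increment`, `risky_job`, and `run_jobs` are hypothetical, and the failing job is contrived for illustration.

```python
import logging
import threading
from concurrent.futures import ThreadPoolExecutor, as_completed

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)

# Shared mutable state guarded by a lock
counter = 0
counter_lock = threading.Lock()


def increment(times: int) -> None:
    global counter
    for _ in range(times):
        with counter_lock:  # the read-modify-write must not interleave between threads
            counter += 1


def risky_job(n: int) -> int:
    if n == 3:
        raise ValueError(f"job {n} failed")  # contrived failure
    return n * n


def run_jobs(values):
    results, errors = {}, {}
    # Leaving the with-block calls executor.shutdown(wait=True), so no worker outlives it
    with ThreadPoolExecutor(max_workers=4) as executor:
        futures = {executor.submit(risky_job, v): v for v in values}
        for future in as_completed(futures):
            v = futures[future]
            try:
                results[v] = future.result()  # re-raises the worker's exception here
            except Exception as exc:
                logger.error("job %s failed: %s", v, exc)
                errors[v] = exc
    return results, errors


# Four threads updating the same counter
with ThreadPoolExecutor(max_workers=4) as pool:
    for _ in range(4):
        pool.submit(increment, 10_000)

results, errors = run_jobs(range(5))
print(counter, results, list(errors))
```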