Software developers and quality assurance teams constantly seek ways to streamline their processes, and AI emerges as a powerful ally in this effort. Engineers create test cases to verify that applications function as expected, but traditional methods often consume significant time and resources. AI tools address this challenge by automating the generation of comprehensive test cases, drawing from code, specifications, or API definitions to produce scenarios that cover edge cases, normal operations, and potential failures.

This approach not only accelerates development cycles but can also improve accuracy. For instance, AI models can analyze code patterns and flag likely failure points or vulnerabilities that humans might overlook. As teams adopt these technologies, they can achieve higher test coverage with less manual intervention.

However, selecting the right tool matters. This article explores two effective options: Claude Code and Apidog. Each provides unique features for generating test cases, and we outline step-by-step instructions for both. Additionally, we discuss benefits, challenges, and advanced techniques to help you implement AI effectively in your projects.

Understanding Test Cases in Software Development

Developers define test cases as detailed sets of conditions or variables under which testers determine whether a system satisfies requirements or works correctly. These include inputs, execution steps, and expected outputs. Teams use test cases to identify defects early, ensure reliability, and maintain quality throughout the software lifecycle.
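As a rough illustration of those three parts, the sketch below models a single test case as a Python dataclass. The `TestCaseSpec` class, its field names, and the `apply_discount` function under test are hypothetical, chosen only to show the structure.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class TestCaseSpec:
    """A test case: inputs, execution steps, and the expected output."""
    name: str
    inputs: dict[str, Any]
    steps: list[str] = field(default_factory=list)  # human-readable execution steps
    expected: Any = None

    def run(self, func: Callable[..., Any]) -> bool:
        """Call the system under test with the inputs and compare against expected."""
        return func(**self.inputs) == self.expected


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical system under test: apply a percentage discount."""
    return round(price * (1 - percent / 100), 2)


case = TestCaseSpec(
    name="10% discount on 50.00",
    inputs={"price": 50.0, "percent": 10.0},
    steps=["Call apply_discount with the inputs", "Compare the result to expected"],
    expected=45.0,
)
print(case.name, "->", "passed" if case.run(apply_discount) else "failed")
```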

Traditional test case creation involves manual analysis of requirements, which proves time-intensive and prone to human error. Testers review specifications, brainstorm scenarios, and document each case meticulously. Consequently, coverage gaps arise, especially in complex systems with numerous interactions.

AI transforms this process by employing machine learning algorithms to parse code or docs and generate diverse test cases automatically. Tools process natural language descriptions or structured data, producing outputs that align with best practices. Therefore, integrating AI reduces workload while enhancing thoroughness.

Benefits of Using AI to Write Test Cases

AI brings several advantages to test case generation. First, it increases efficiency; algorithms produce hundreds of test cases in minutes, a task that might take humans days. Developers focus on high-level strategy instead of rote documentation.

Second, AI improves coverage. Machine learning models identify edge cases, such as boundary values or rare combinations, that manual methods often miss. This leads to more robust testing and fewer post-release bugs.
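To see why combinations get missed, it helps to enumerate a parameter space. This minimal sketch uses `itertools.product` over a hypothetical checkout configuration (the parameter names and values are invented for illustration) and then filters for one rarely exercised corner.

```python
from itertools import product

# Hypothetical configuration space for a checkout flow.
parameters = {
    "payment": ["card", "paypal", "gift_card"],
    "shipping": ["standard", "express"],
    "user": ["guest", "member"],
    "coupon": [None, "SAVE10"],
}


def all_combinations(params: dict[str, list]) -> list[dict]:
    """Return every combination of parameter values, one dict per combination."""
    names = list(params)
    return [dict(zip(names, values)) for values in product(*params.values())]


combos = all_combinations(parameters)
print(len(combos))  # 3 * 2 * 2 * 2 = 24

# A rarely exercised corner: a guest paying by gift card with a coupon applied.
rare = [
    c for c in combos
    if c["user"] == "guest" and c["payment"] == "gift_card" and c["coupon"]
]
for c in rare:
    print(c)
```

Even four small parameters give 24 combinations, and each new parameter multiplies the total. That growth is why generators, human or AI, usually sample the space or use strategies such as pairwise testing rather than enumerating everything.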

Third, AI promotes consistency. Generated test cases follow standardized formats, reducing variability across team members. Teams maintain uniform quality, which simplifies reviews and integrations.

Moreover, AI adapts to changes. When code or requirements update, tools regenerate test cases quickly, keeping tests current. This agility supports agile methodologies and continuous integration pipelines.

Finally, cost savings accumulate. By automating repetitive tasks, organizations can allocate resources to innovation rather than maintenance. Reductions of up to 30% in defect detection costs are sometimes cited for AI-driven testing, though actual savings depend heavily on the project and team.

Challenges in Traditional Test Case Writing and How AI Addresses Them

Manual test case creation faces hurdles like poor scalability in large projects. As applications grow, the number of possible scenarios grows combinatorially, overwhelming teams. AI counters this by generating large numbers of cases quickly and without fatigue.

Another challenge involves expertise dependency. Junior testers might struggle with complex domains, leading to incomplete coverage. AI lowers this barrier, allowing even less experienced testers to produce solid first drafts of test cases through intuitive interfaces.

Furthermore, keeping test cases aligned with evolving code proves difficult. Manual updates lag behind development, leaving tests outdated. Some AI tools integrate with version control or CI pipelines, regenerating cases on demand to keep tests in sync with the code.
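A simple way to decide which tests need regenerating is to fingerprint each source file and compare it with the fingerprint recorded when its tests were last generated. This is a generic sketch, not how any particular tool tracks changes; the manifest (a dict from file path to SHA-256 digest) and the function names are assumptions.

```python
import hashlib
import tempfile
from pathlib import Path


def fingerprint(path: Path) -> str:
    """Return the SHA-256 hex digest of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def stale_sources(sources: list[Path], manifest: dict[str, str]) -> list[Path]:
    """Return sources whose current hash differs from the recorded one.

    Files missing from the manifest have never had tests generated, so they
    count as stale too.
    """
    return [p for p in sources if manifest.get(str(p)) != fingerprint(p)]


def update_manifest(sources: list[Path], manifest: dict[str, str]) -> None:
    """Record each source's current hash after its tests are regenerated."""
    for p in sources:
        manifest[str(p)] = fingerprint(p)


# Usage: simulate a source file changing between generation runs.
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "pricing.py"
    src.write_text("def total(x):\n    return x\n")
    manifest: dict[str, str] = {}
    before = stale_sources([src], manifest)      # never generated: stale
    update_manifest([src], manifest)             # tests regenerated
    unchanged = stale_sources([src], manifest)   # nothing to do
    src.write_text("def total(x):\n    return x * 2\n")
    after_edit = stale_sources([src], manifest)  # source changed: stale again

print(len(before), len(unchanged), len(after_edit))  # 1 0 1
```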

Despite these benefits, AI is not infallible. It requires quality inputs to produce reliable outputs. Poorly defined specifications yield suboptimal test cases. Therefore, teams must refine inputs and review AI-generated results.

Option 1: Using Claude Code to Generate Test Cases

Claude Code, powered by Anthropic's advanced AI models, excels in code-related tasks, including test case generation. Developers prompt Claude Code with code snippets, requirements, or descriptions, and it outputs structured test cases in various formats. This option suits general software testing beyond APIs, such as unit, integration, or functional tests.

Claude Code leverages natural language processing to understand context and generate relevant scenarios. Users run it from the terminal, and it also integrates with IDEs such as VS Code and JetBrains. Its agentic coding capabilities allow iterative refinement, where Claude Code suggests improvements based on feedback.

Step-by-Step Guide to Generating Test Cases with Claude Code

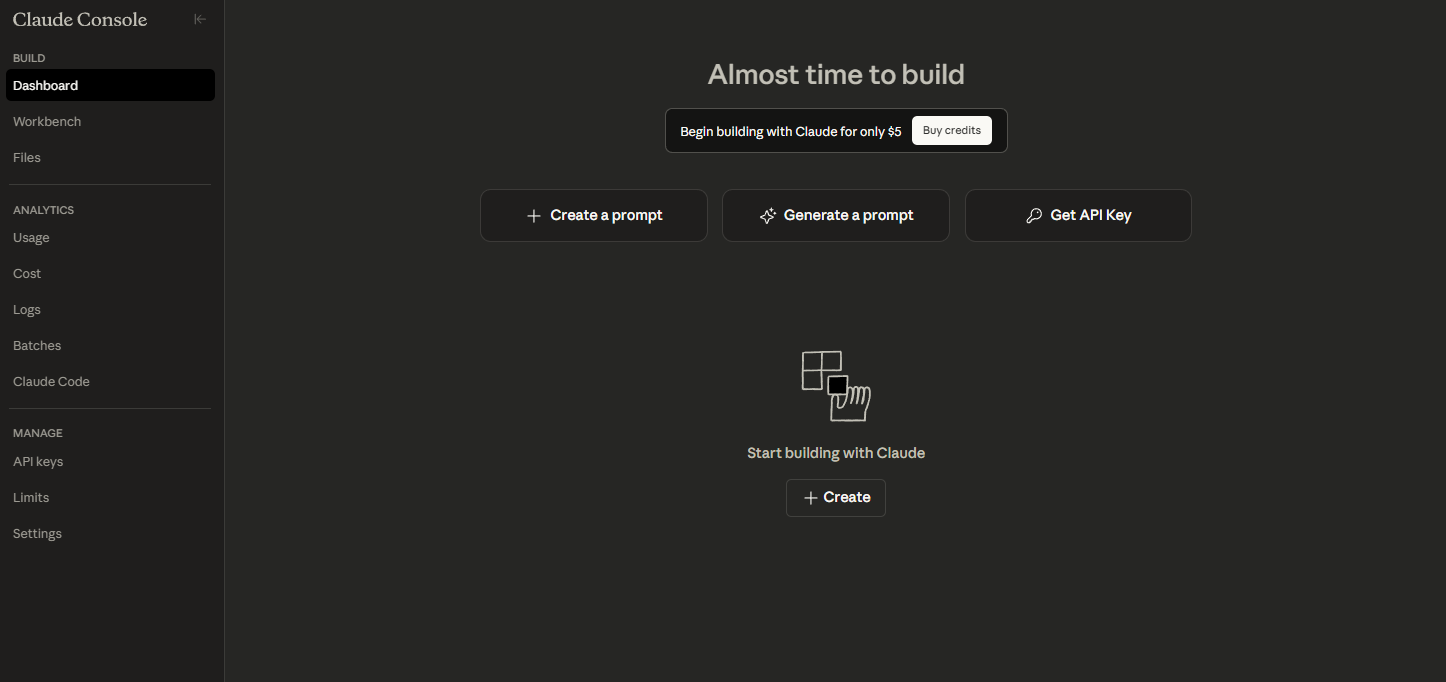

Step 1: Set Up Your Claude Account and Access Claude Code.

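Visit anthropic.com and create an account if you lack one; Claude Code works with a paid Claude plan or an Anthropic Console account with API billing. Claude Code runs in your terminal, so install it (it requires Node.js 18 or later) with `npm install -g @anthropic-ai/claude-code`, then open a terminal in your project directory and run `claude`. On first launch it prompts you to log in. The whole setup takes only a few minutes.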

Step 2: Prepare Your Input Materials.

Gather requirements, code snippets, or user stories. For example, if you are testing a function that calculates a factorial, include the code along with specifications such as valid input ranges and expected behaviors. Organize this information clearly to guide Claude Code effectively.

Step 3: Craft a Detailed Prompt.

Write a prompt that describes the task precisely, for example: "Generate unit test cases for this Python function: def factorial(n): if n == 0: return 1 else: return n * factorial(n-1). Include positive, negative, and edge cases." When you paste real code, keep its original line breaks and indentation so it remains valid Python. Specify the testing framework, such as pytest or unittest, to tailor the output.
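For reference, here is the function from the prompt with its indentation restored, followed by the kind of `unittest` suite you might expect back. This is a hand-written illustration of typical output, not a captured Claude Code response, and real output will vary. Note that the function has no input validation, so a negative argument recurses until Python raises `RecursionError`; a good generated suite should surface exactly that kind of gap.

```python
import math
import unittest


def factorial(n):
    """Recursive factorial exactly as given in the prompt (no input validation)."""
    if n == 0:
        return 1
    else:
        return n * factorial(n - 1)


class TestFactorial(unittest.TestCase):
    # Positive cases: ordinary inputs with known results.
    def test_small_values(self):
        self.assertEqual(factorial(1), 1)
        self.assertEqual(factorial(5), 120)

    def test_agrees_with_math_factorial(self):
        for n in range(20):
            with self.subTest(n=n):
                self.assertEqual(factorial(n), math.factorial(n))

    # Edge case: the base case of the recursion.
    def test_zero_returns_one(self):
        self.assertEqual(factorial(0), 1)

    # Negative case: documents current behavior. The recursion never reaches
    # the base case and hits Python's recursion limit; a validated version
    # would more sensibly raise ValueError.
    def test_negative_input_exceeds_recursion_limit(self):
        with self.assertRaises(RecursionError):
            factorial(-1)
```

Save it as `test_factorial.py` and run `python -m unittest test_factorial`. pytest also collects `unittest.TestCase` classes, so `pytest test_factorial.py` works as well.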

Step 4: Submit the Prompt to Claude Code.

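Enter the prompt in your Claude Code session. Claude Code processes it and generates test cases; it can also write them directly into a test file in your project once you approve the edit. Review the output, which typically includes code for each test case, assertions, and explanations.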

Step 5: Refine and Iterate.

If the results need adjustment, provide feedback: "Add more edge cases for negative inputs." Claude Code refines the output iteratively. This step helps close remaining coverage gaps.

Step 6: Integrate Generated Test Cases into Your Project.

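If Claude Code has not already written the tests to disk, copy the code into your test files. Run the tests with your framework to confirm that they pass, or that any failures point to real defects. Document any modifications for team reference.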

A few prompting tips: use specific language to avoid ambiguity, include examples of the desired output format, work in small batches to manage complexity, and update prompts as requirements change.
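These tips can be captured in a small template so every prompt carries the same information. The `PromptInputs` class and `build_test_prompt` helper below are hypothetical (they are not part of Claude Code); they simply format the code, the specification, and the desired output format into one string that you could paste into a Claude Code session or pass to `claude -p` for a one-off, non-interactive run.

```python
from dataclasses import dataclass, field


@dataclass
class PromptInputs:
    """Materials gathered before prompting (field names are illustrative)."""
    code: str
    framework: str
    valid_inputs: str
    expected_behaviors: list[str] = field(default_factory=list)
    output_format: str = "one test method per case"


def build_test_prompt(inputs: PromptInputs) -> str:
    """Format the gathered materials into one specific, unambiguous prompt."""
    behaviors = "\n".join(f"- {b}" for b in inputs.expected_behaviors)
    return (
        f"Generate {inputs.framework} test cases for the following code.\n\n"
        f"Code:\n{inputs.code}\n\n"
        f"Valid inputs: {inputs.valid_inputs}\n"
        f"Expected behaviors:\n{behaviors}\n\n"
        "Include positive, negative, and edge cases.\n"
        f"Output format: {inputs.output_format}\n"
    )


prompt = build_test_prompt(
    PromptInputs(
        code="def factorial(n):\n    if n == 0:\n        return 1\n    return n * factorial(n - 1)",
        framework="unittest",
        valid_inputs="non-negative integers",
        expected_behaviors=["factorial(0) == 1", "factorial(5) == 120"],
        output_format="one test method per case, each with a one-line docstring",
    )
)
print(prompt)
```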

By following these steps, developers produce high-quality test cases efficiently. Claude Code's flexibility makes it ideal for diverse projects, from web apps to algorithms.

Advanced Techniques with Claude Code for Test Cases

Beyond the basics, Claude Code supports test-driven development (TDD). Prompt it to generate tests before the code exists: "Create test cases for a user authentication system that handles login, logout, and password reset." This enforces a test-first discipline.
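A test-first prompt like this usually yields tests against an interface that does not exist yet. The sketch below shows that shape in Python: the tests come first, then a minimal implementation written only to make them pass. `AuthService`, its method names, and its in-memory storage are hypothetical; a real system would persist users and sessions and deliver reset tokens out of band (for example by email).

```python
import hashlib
import secrets
import unittest
from typing import Optional


# Step 1 (red): tests written first, against an interface that does not exist yet.
class TestAuthService(unittest.TestCase):
    def setUp(self):
        self.auth = AuthService()
        self.auth.register("alice", "correct horse")

    def test_login_with_valid_credentials_returns_token(self):
        token = self.auth.login("alice", "correct horse")
        self.assertIsNotNone(token)
        self.assertTrue(self.auth.is_logged_in(token))

    def test_login_with_wrong_password_fails(self):
        self.assertIsNone(self.auth.login("alice", "wrong"))

    def test_logout_ends_session(self):
        token = self.auth.login("alice", "correct horse")
        self.auth.logout(token)
        self.assertFalse(self.auth.is_logged_in(token))

    def test_password_reset_replaces_old_password(self):
        reset = self.auth.request_reset("alice")
        self.assertTrue(self.auth.reset_password(reset, "new secret"))
        self.assertIsNone(self.auth.login("alice", "correct horse"))
        self.assertIsNotNone(self.auth.login("alice", "new secret"))

    def test_reset_token_is_single_use(self):
        reset = self.auth.request_reset("alice")
        self.auth.reset_password(reset, "new secret")
        self.assertFalse(self.auth.reset_password(reset, "another"))


# Step 2 (green): the minimal implementation that makes the tests pass.
class AuthService:
    def __init__(self):
        self._users = {}         # username -> (salt, password hash)
        self._sessions = {}      # session token -> username
        self._reset_tokens = {}  # reset token -> username

    @staticmethod
    def _hash(password: str, salt: bytes) -> bytes:
        return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)

    def register(self, username: str, password: str) -> None:
        salt = secrets.token_bytes(16)
        self._users[username] = (salt, self._hash(password, salt))

    def login(self, username: str, password: str) -> Optional[str]:
        """Return a session token on success, or None for bad credentials."""
        record = self._users.get(username)
        if record is None:
            return None
        salt, stored = record
        if not secrets.compare_digest(stored, self._hash(password, salt)):
            return None
        token = secrets.token_hex(16)
        self._sessions[token] = username
        return token

    def logout(self, token: str) -> None:
        self._sessions.pop(token, None)

    def is_logged_in(self, token: str) -> bool:
        return token in self._sessions

    def request_reset(self, username: str) -> Optional[str]:
        if username not in self._users:
            return None
        token = secrets.token_hex(16)
        self._reset_tokens[token] = username
        return token

    def reset_password(self, reset_token: str, new_password: str) -> bool:
        username = self._reset_tokens.pop(reset_token, None)  # single use
        if username is None:
            return False
        self.register(username, new_password)
        return True
```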

Additionally, integrate Claude Code with IDEs via extensions. This allows real-time generation within your workflow. For instance, highlight code and prompt directly.

Claude Code also works across programming languages. Specify a language such as JavaScript or Java, and it adapts its output accordingly. This versatility helps polyglot teams.

Moreover, use Claude Code for debugging test failures. Provide failing test output, and it suggests fixes or additional cases.

Case Study: A development team used Claude Code to generate tests for a machine learning model. They prompted it with the model's specifications, obtained more than 50 cases covering data variations, and reported 20% fewer bugs in production.

Option 2: Using Apidog to Generate Test Cases

Apidog stands out as an all-in-one API platform that incorporates AI to generate test cases directly from API definitions. It targets API testing, making it well suited to backend developers and QA engineers. Apidog analyzes OpenAPI specs or similar formats to create scenarios encompassing positive, negative, and boundary conditions.

The tool's visual interface simplifies setup, and its AI engine aims for broad coverage. Users can also integrate it with CI/CD pipelines for automated execution.
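To illustrate the kind of positive, negative, and boundary scenarios described above, the sketch below derives inputs for a single integer query parameter from an OpenAPI-style parameter definition. It shows the general technique only; the source does not describe Apidog's generation algorithm, and the `limit` parameter and its bounds are made-up examples. Only the `type`, `minimum`, and `maximum` keywords come from the OpenAPI schema vocabulary.

```python
def cases_for_integer_param(name: str, schema: dict) -> dict[str, list]:
    """Derive categorized test inputs for one integer parameter.

    Reads the OpenAPI/JSON Schema keywords "type", "minimum", and "maximum".
    """
    if schema.get("type") != "integer":
        raise ValueError(f"{name}: this sketch only handles integer parameters")
    lo, hi = schema["minimum"], schema["maximum"]
    edges = dict.fromkeys([lo, lo + 1, hi - 1, hi])  # de-duplicate, keep order
    return {
        "positive": [(lo + hi) // 2],                     # a typical valid value
        "boundary": [v for v in edges if lo <= v <= hi],  # valid values at the edges
        "negative": [lo - 1, hi + 1, "abc", None],        # out of range, wrong type, missing
    }


# Hypothetical parameter definition, shaped like an OpenAPI Parameter Object.
limit_param = {
    "name": "limit",
    "in": "query",
    "required": True,
    "schema": {"type": "integer", "minimum": 1, "maximum": 100},
}

cases = cases_for_integer_param(limit_param["name"], limit_param["schema"])
for category, values in cases.items():
    for value in values:
        print(f"{category:<9} limit={value!r}")
```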

Step-by-Step Guide to Generating Test Cases with Apidog

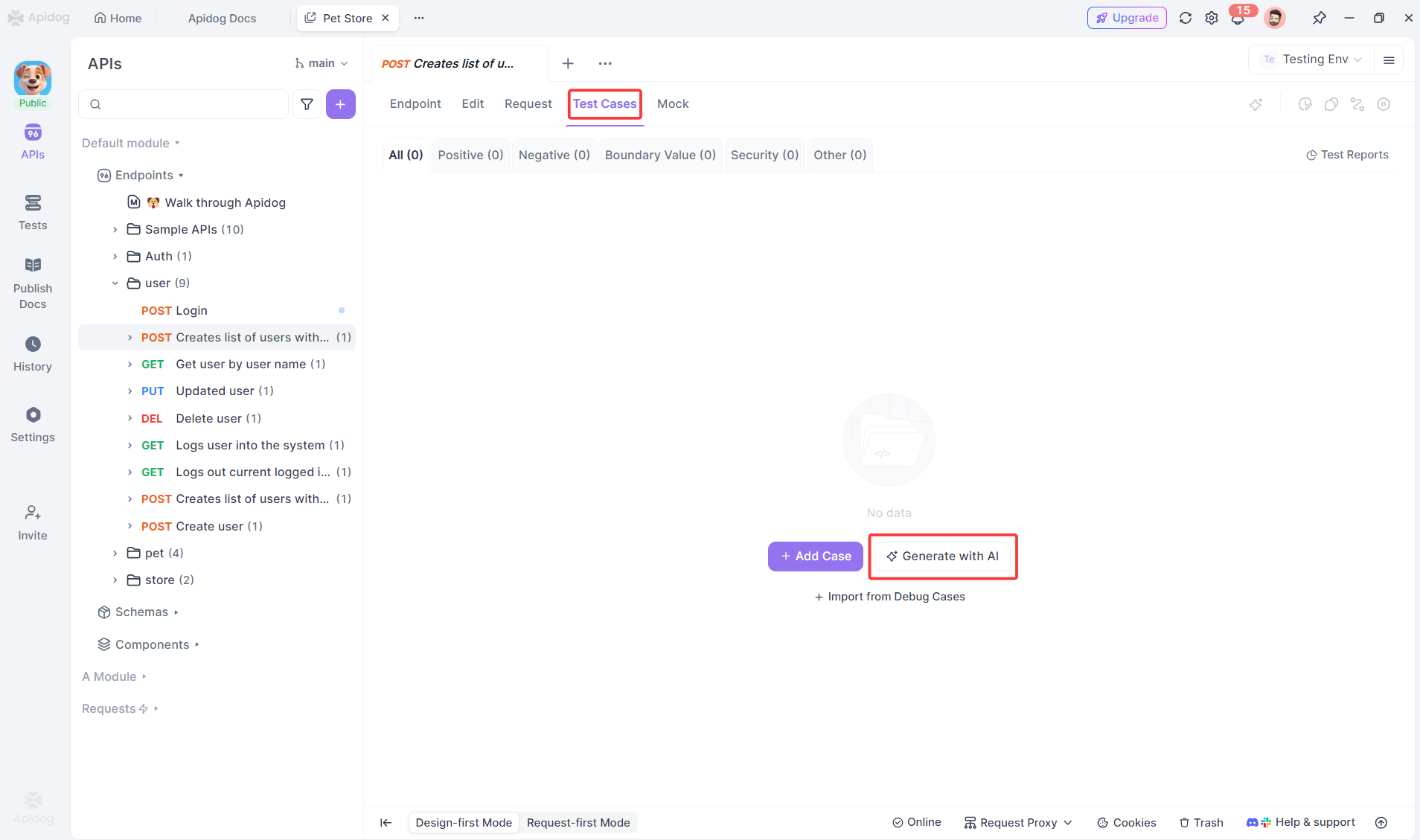

Step 1: Access the Endpoint Documentation and Switch to Test Cases Tab.

Navigate to any endpoint documentation page within Apidog. Locate and switch to the Test Cases tab. There, identify the Generate with AI button and click it to initiate the process. This action opens the AI generation interface directly tied to your API specs.

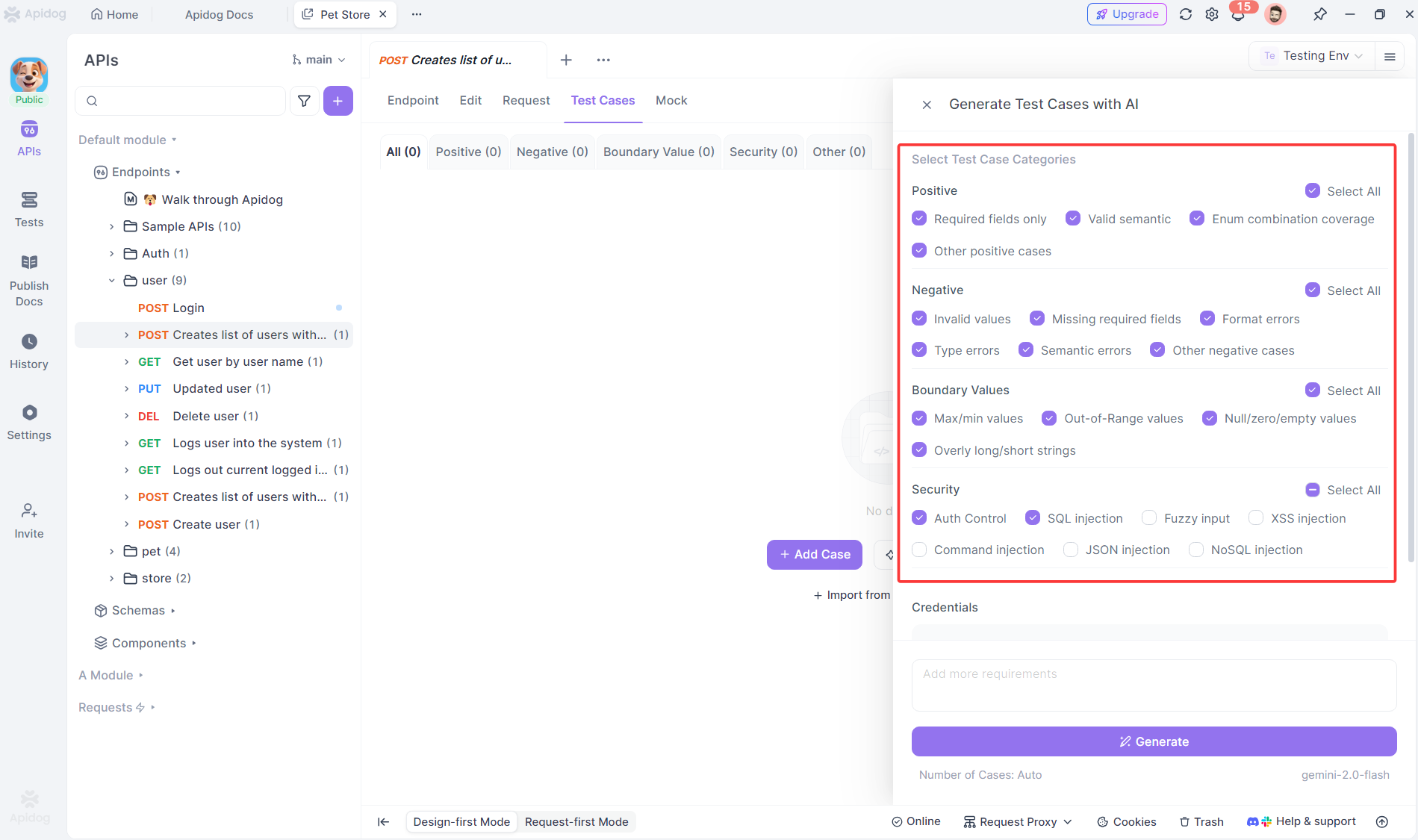

Step 2: Select Test Case Categories.

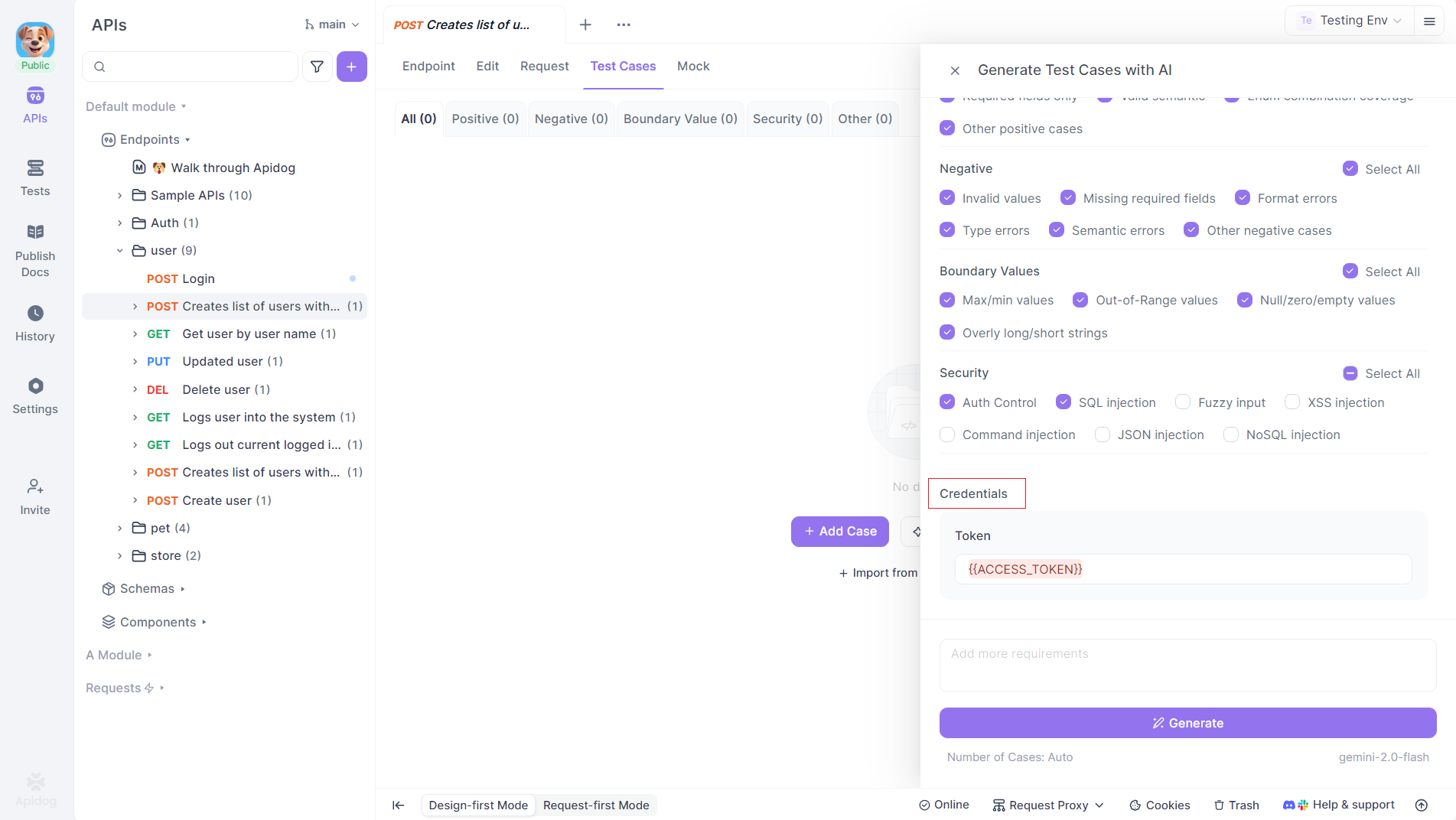

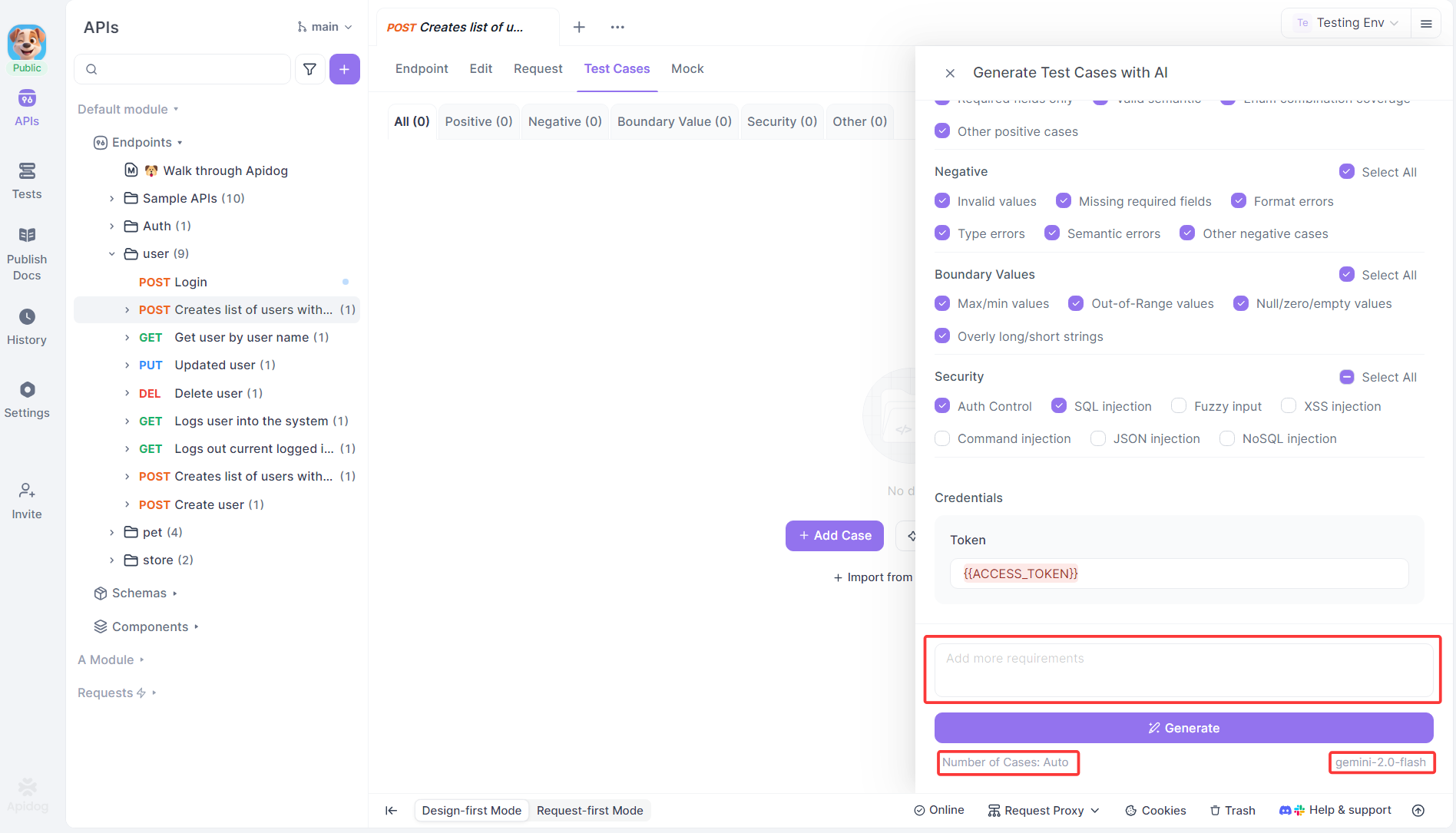

After clicking Generate with AI, observe a settings panel that slides out on the right side. Choose the types of test cases you want to generate, such as positive, negative, boundary, security, and others. This selection ensures the AI focuses on relevant scenarios, tailoring the output to your testing needs.

Step 3: Configure Credentials if Required.

Check whether the endpoint requires credentials. If it does, the configuration references them automatically; modify the credential values as needed for your testing environment. Apidog encrypts keys locally before sending them to the LLM provider and decrypts them automatically after generation, so you can validate quickly without exposing secrets in plain text.

Step 4: Add Additional Requirements and Customize Generation Settings.

Provide extra requirements in the text box at the bottom of the panel to enhance accuracy and specificity. In the lower-left corner, configure the number of test cases to generate, with a maximum of 80 cases per run. In the lower-right corner, switch between different large language models and providers to optimize results. These adjustments allow fine-tuning before proceeding.

Step 5: Generate the Test Cases.

Click the Generate button. The AI begins creating test cases based on your API specifications and the configured settings. Monitor the progress as Apidog processes the request. Once complete, the generated test cases appear for review.

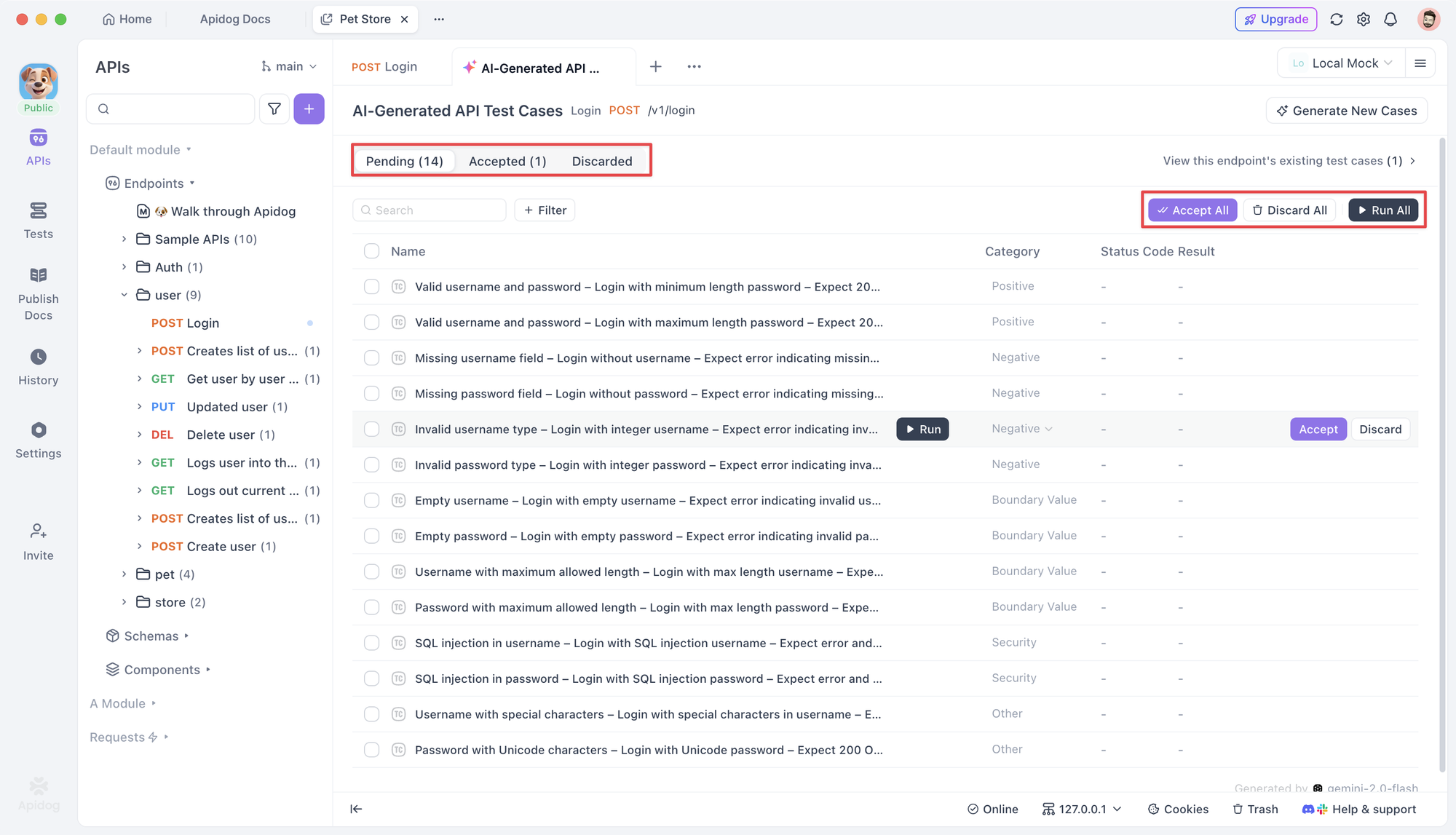

Step 6: Review and Manage Generated Test Cases.

Click a specific test case to view its request parameters, rename it, or change its category. Click Run to execute the test case and check whether the response matches expectations. Click Accept to save the test case under the Test Cases tab in your documentation, or Discard to remove test cases you do not need. For efficiency, select multiple test cases at once to perform bulk actions such as running or discarding.
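Running a case boils down to sending the request and comparing the response with what the case expects. The helper below is a generic, hypothetical sketch of that comparison (status code plus required JSON fields); it does not describe Apidog's internals, and the expectation format is an assumption.

```python
import json


def check_response(expected: dict, status: int, body: str) -> list[str]:
    """Compare an HTTP response with a test case's expectations.

    `expected` may contain "status" (an int) and "required_fields" (keys that
    must appear in the JSON object body). Returns failure messages; an empty
    list means the case passed.
    """
    failures = []
    if "status" in expected and status != expected["status"]:
        failures.append(f"status: expected {expected['status']}, got {status}")
    required = expected.get("required_fields", [])
    if not required:
        return failures
    try:
        data = json.loads(body)
    except json.JSONDecodeError:
        return failures + ["body is not valid JSON"]
    if not isinstance(data, dict):
        return failures + ["body is not a JSON object"]
    failures += [f"missing field: {key}" for key in required if key not in data]
    return failures


expectation = {"status": 200, "required_fields": ["id", "name"]}
print(check_response(expectation, 200, '{"id": 7, "name": "Widget"}'))  # []
print(check_response(expectation, 404, '{"error": "not found"}'))
# ['status: expected 200, got 404', 'missing field: id', 'missing field: name']
```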

By following these steps, teams produce and manage AI-generated test cases seamlessly within Apidog. The platform's intuitive controls make it accessible for both individual developers and collaborative groups.

Advanced Features in Apidog for Test Case Management

Apidog supports data-driven testing. Import datasets to parameterize cases, enabling bulk execution with varied inputs.
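The same pattern is easy to reproduce in code: load rows from a dataset and run one parameterized check per row. This sketch reads an inline CSV with Python's `csv` module and uses `unittest`'s `subTest`; the `shipping_cost` function and the column names are hypothetical, and it is not Apidog's data-driven feature.

```python
import csv
import io
import unittest


def shipping_cost(weight_kg: float, express: bool) -> float:
    """Hypothetical function under test: base fee plus per-kg rate, doubled for express."""
    base = 5.0 + 2.0 * weight_kg
    return base * 2 if express else base


# In practice this would be a file exported from a spreadsheet or test-data tool.
DATASET = """weight_kg,express,expected
0,false,5.0
1.5,false,8.0
1.5,true,16.0
10,true,50.0
"""


class TestShippingCostFromDataset(unittest.TestCase):
    def test_rows(self):
        # One subTest per dataset row, so a failing row is reported individually.
        for row in csv.DictReader(io.StringIO(DATASET)):
            with self.subTest(row=row):
                actual = shipping_cost(float(row["weight_kg"]), row["express"] == "true")
                self.assertAlmostEqual(actual, float(row["expected"]))
```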

Furthermore, it offers performance testing. Generate load test cases to simulate traffic and measure response times.
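At its core, a load test fires many requests concurrently and summarizes their latencies. The sketch below shows that idea with `concurrent.futures`; `fake_endpoint` is a hypothetical stand-in for a real HTTP call, and the nearest-rank percentile is one common choice among several. Dedicated load-testing tools add ramp-up, pacing, and reporting on top of this.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def fake_endpoint() -> None:
    """Hypothetical stand-in for an HTTP request; replace with a real client call."""
    time.sleep(0.01)


def timed_call(fn) -> float:
    """Run fn once and return its wall-clock duration in milliseconds."""
    start = time.perf_counter()
    fn()
    return (time.perf_counter() - start) * 1000


def percentile(sorted_values: list[float], pct: float) -> float:
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(pct / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def load_test(fn, total_requests: int = 100, concurrency: int = 10) -> dict:
    """Call fn total_requests times using a pool of `concurrency` threads."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = sorted(pool.map(lambda _: timed_call(fn), range(total_requests)))
    return {
        "requests": len(latencies),
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "max_ms": latencies[-1],
    }


print(load_test(fake_endpoint))
```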

Collaboration features allow teams to share scenarios, ensuring consistency.

Case Study: An e-commerce platform used Apidog to generate API test cases, covering 95% of endpoints automatically. This reduced manual testing time by 40%, accelerating deployments.

Comparing Claude Code and Apidog for Test Case Generation

Claude Code offers broad applicability, ideal for non-API code, while Apidog specializes in APIs with built-in execution. Claude Code requires prompting skills, whereas Apidog provides a GUI for ease.

In terms of cost, Apidog offers a free tier, with paid plans adding features for scale, while Claude Code requires a paid Claude plan or pay-as-you-go API usage. Choose based on project needs: Claude Code for general coding, Apidog for API-focused work.

Best Practices for AI-Generated Test Cases

Always validate outputs manually. AI might miss domain-specific nuances, so review for accuracy.

Combine AI with human insight. Use generated cases as a starting point and refine them.

Maintain version control. Track changes to test cases alongside code.

Monitor for biases. AI trained on certain data might overlook unique scenarios; diversify inputs.

Integrate into workflows. Automate generation in pipelines for continuous testing.

Common Pitfalls and How to Avoid Them

One pitfall involves over-reliance on AI, leading to untested assumptions. Counter this by running exploratory tests.

Another concerns input quality. Garbage in yields garbage out; ensure specs are detailed.

Scalability issues arise with large projects. Break them into modules for manageable generation.

Security considerations matter; avoid exposing sensitive data in prompts.
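One way to keep secrets out of prompts is to swap them for placeholders before sending text to a model and restore them afterward. This is a generic redact-and-restore sketch, not the local encryption Apidog describes; the `{{SECRET_n}}` placeholder format and the function names are assumptions.

```python
def mask_secrets(text: str, secret_values: list[str]) -> tuple[str, dict[str, str]]:
    """Replace each secret with a placeholder; return masked text and the mapping."""
    mapping: dict[str, str] = {}
    # Longest first, so a secret that contains another is replaced whole.
    ordered = sorted((s for s in secret_values if s), key=len, reverse=True)
    for i, secret in enumerate(ordered):
        placeholder = f"{{{{SECRET_{i}}}}}"  # renders as {{SECRET_0}}, {{SECRET_1}}, ...
        mapping[placeholder] = secret
        text = text.replace(secret, placeholder)
    return text, mapping


def unmask_secrets(text: str, mapping: dict[str, str]) -> str:
    """Put the original secrets back in place of their placeholders."""
    for placeholder, secret in mapping.items():
        text = text.replace(placeholder, secret)
    return text


prompt = "Call GET /orders with header Authorization: Bearer sk-test-123"
masked, mapping = mask_secrets(prompt, ["sk-test-123"])
print(masked)  # Call GET /orders with header Authorization: Bearer {{SECRET_0}}

# Send `masked` to the model; generated output may reference the placeholder.
generated = 'headers = {"Authorization": "Bearer {{SECRET_0}}"}'
print(unmask_secrets(generated, mapping))
```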

Real-World Applications and Case Studies

In fintech, teams use AI to generate compliance test cases, ensuring regulatory adherence.

Healthcare apps employ Apidog for API tests on patient data endpoints, prioritizing privacy.

Claude Code aids game developers in testing algorithms for fairness.

A startup reported 50% faster onboarding using AI-generated tests.

Future Trends in AI for Test Case Writing

AI is likely to evolve toward multimodal inputs, analyzing code, docs, and visuals together.

Self-healing tests that adapt to code changes are likely to become more common.

Integration with VR for immersive testing simulations may follow.

Ethical AI use will gain focus, with an emphasis on transparency.

Conclusion: Embracing AI for Superior Test Cases

AI revolutionizes how teams write test cases, offering speed, coverage, and efficiency. By mastering tools like Claude Code and Apidog, developers elevate their practices. Start implementing these strategies today to reap the benefits.