The phrase "AI-powered testing" has been buzzing around the industry for years, however, most tools still require humans to write test cases, define scenarios, and interpret results. Agentic AI Testing represents a fundamental shift, where autonomous AI agents can plan, execute, and adapt testing strategies without constant human direction/intervention. These aren't just smart assistants; they're digital testers that behave like experienced Quality Assurance engineers, making decisions, learning from failures, and prioritizing risks based on real-time analysis.

This guide explores Agentic AI Testing from the ground up: what makes it different, how it enhances the entire software development lifecycle, and why it's becoming essential for modern development teams drowning in complexity.

What Exactly Is Agentic AI Testing?

Agentic AI Testing uses autonomous AI agents—systems that can perceive their environment, make decisions, and take actions to achieve specific goals—to perform software testing. Unlike traditional test automation that follows rigid scripts, these agents:

- Plan dynamically: Analyze code changes, historical bugs, and usage patterns to decide what to test

- Execute intelligently: Interact with applications like humans, exploring edge cases and adapting to UI changes

- Learn continuously: Remember which tests found bugs and adjust future strategies accordingly

- Self-heal: When the UI changes, agents update their own locators and test steps

Think of it as hiring a senior QA engineer who never sleeps, writes tests at machine speed, and gets smarter with every release.

How Agentic AI Enhances the Software Development Lifecycle

Agentic AI Testing doesn’t just automate testing—it fundamentally improves how teams build and ship software across every SDLC phase:

Requirements Analysis

Agents analyze user stories and automatically generate acceptance criteria in Gherkin syntax:

# Auto-generated by AI agent from story: "User can reset password"

Scenario: Password reset with valid email

Given the user is on the login page

When they enter "user@example.com" in the reset form

And click "Send Reset Link"

Then they should see "Check your email"

And receive an email within 2 minutes

Test Design

Agents create comprehensive test suites by combining:

- Code coverage analysis

- Historical bug patterns

- API contract definitions

- User journey analytics

Test Execution

Agents run tests 24/7, prioritizing high-risk areas based on:

- Recent code changes

- Complexity metrics

- Past defect density

- Production usage patterns

Defect Analysis

When tests fail, agents don’t just report—they investigate:

- Isolate root cause (API vs UI vs database)

- Suggest potential fixes based on similar past issues

- Predict which other features might be affected

Continuous Improvement

Agents analyze test effectiveness and retire low-value tests while creating new ones for uncovered areas.

Agentic AI Testing vs Manual Testing: A Clear Comparison

| Aspect | Manual Testing | Agentic AI Testing |

|---|---|---|

| Speed | Hours/days for regression | Minutes for full suite |

| Consistency | Prone to human error | Deterministic execution |

| Coverage | Limited by time | Comprehensive, adaptive |

| Exploration | Ad-hoc, experience-based | Data-driven, intelligent |

| Learning | Individual knowledge loss | Institutional memory |

| Cost | High (salary × time) | Low after setup |

| Scalability | Linear (add people) | Exponential (add compute) |

| Adaptability | Manual updates required | Self-healing locators |

The key insight: Agentic AI Testing doesn’t replace human testers—it elevates them. Testers become test architects, focusing on strategy while agents handle repetitive execution.

Tools and Frameworks for Agentic AI Testing

Commercial Platforms

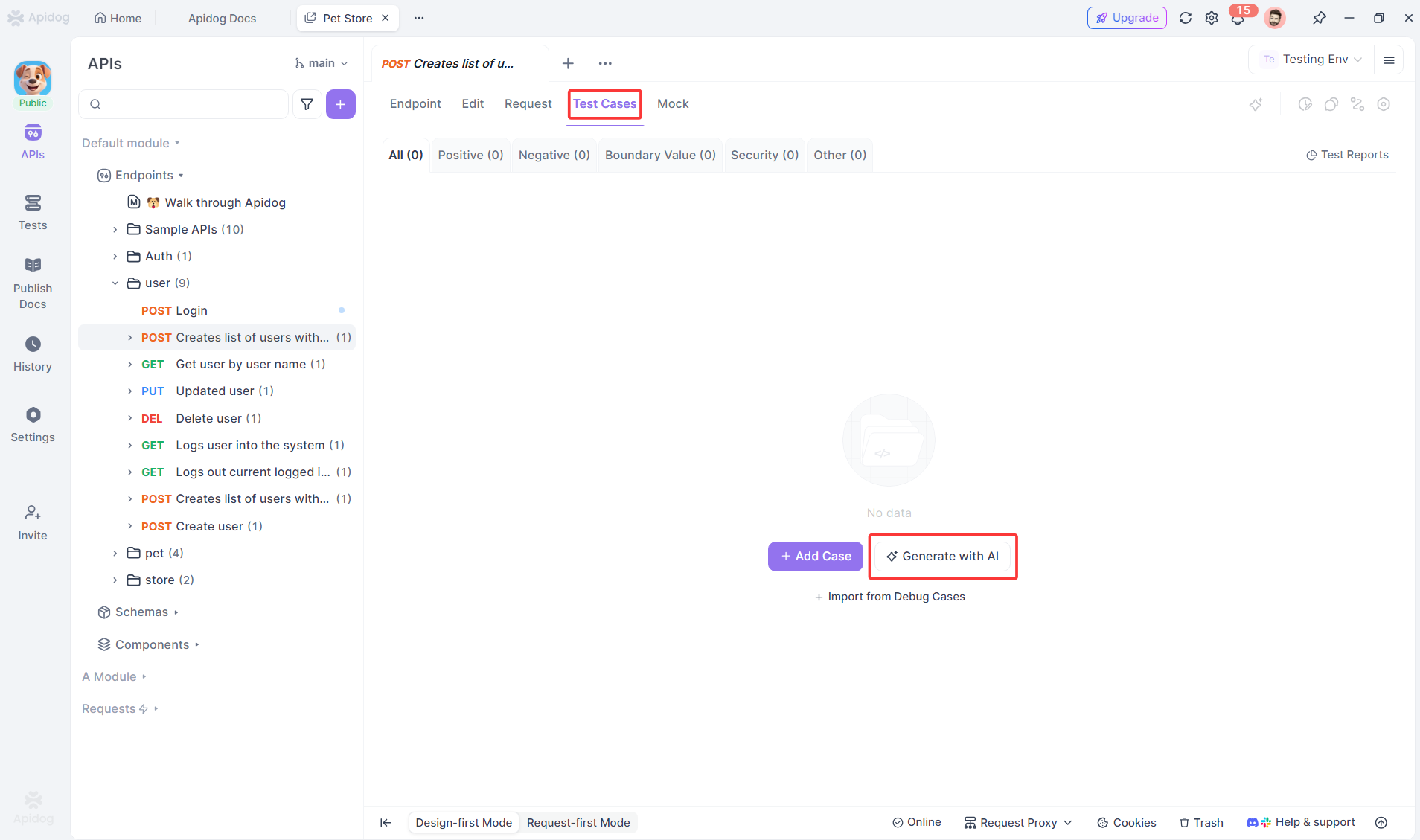

- Apidog: Specializes in API testing agents that generate and execute tests from specifications using AI

- Mabl: Low-code platform with self-healing UI tests

- Functionize: Cloud-based autonomous testing with NLP test creation

Open-Source Frameworks

- Playwright + AI Models: Custom agents using GPT-5 / Sonnet 4.5 to generate and maintain tests

- Cypress with ML Plugins: Community-driven self-healing extensions

Specialized Tools

- Apidog: API-first agentic testing that generates test cases from OpenAPI specs and runs them autonomously

// Example: Apidog AI agent generating API tests

const apidog = require('apidog-ai');

// Agent reads API spec and generates comprehensive tests

const testSuite = await apidog.agent.analyzeSpec('openapi.yaml');

// Agent prioritizes based on risk

const prioritizedTests = await apidog.agent.rankByRisk(testSuite);

// Agent executes and adapts

const results = await apidog.agent.run(prioritizedTests, {

selfHeal: true,

parallel: 10,

maxRetries: 3

});

How Agentic AI Testing is Performed: The Workflow

Step 1: Agent Ingests Application Context

The agent scans your:

- OpenAPI specifications

- Database schemas

- Frontend code (React components, forms)

- Historical test results

- Production logs

Step 2: Agent Generates Test Strategy

Using connected LLM (Claude, GPT-4), the agent creates:

- Happy path tests: 40% of suite

- Edge case tests: 35% of suite (boundary values, invalid inputs)

- Negative tests: 15% (security, error handling)

- Exploratory tests: 10% (unusual user flows)

Step 3: Agent Executes Tests Autonomously

The agent:

- Manages test data lifecycle

- Handles authentication

- Adapts to UI changes (self-healing selectors)

- Retries flaky tests with exponential backoff

- Captures detailed logs and traces

Step 4: Agent Analyzes Results

Beyond pass/fail, the agent:

- Generates bug reports with reproduction steps

- Creates video recordings of failures

- Suggests root cause based on stack traces

- Identifies test coverage gaps

Step 5: Agent Updates Strategy

Based on results, the agent:

- Removes ineffective tests

- Creates new tests for missed paths

- Adjusts risk scores for future prioritization

Pros and Cons of Agentic AI Testing

| Pros | Cons |

|---|---|

| Massive Coverage: Tests thousands of scenarios impossible manually | Initial Setup: Requires API keys, environment configuration |

| Self-Healing: Adapts to UI changes automatically | Learning Curve: Teams need to understand agent behavior |

| Speed: Runs 1000x more tests in same time | Cost: Compute and LLM API costs can add up |

| Consistency: No human error or fatigue | Complex Scenarios: May struggle with highly creative test design |

| Documentation: Generates living test specs | Debugging: Agent decisions can be opaque without good logging |

| 24/7 Operation: Continuous testing without supervision | Security: Agents need access to test environments and data |

How Apidog Enables Agentic AI Testing

While general agentic platforms exist, Apidog specializes in API testing agents that deliver immediate value without complex setup.

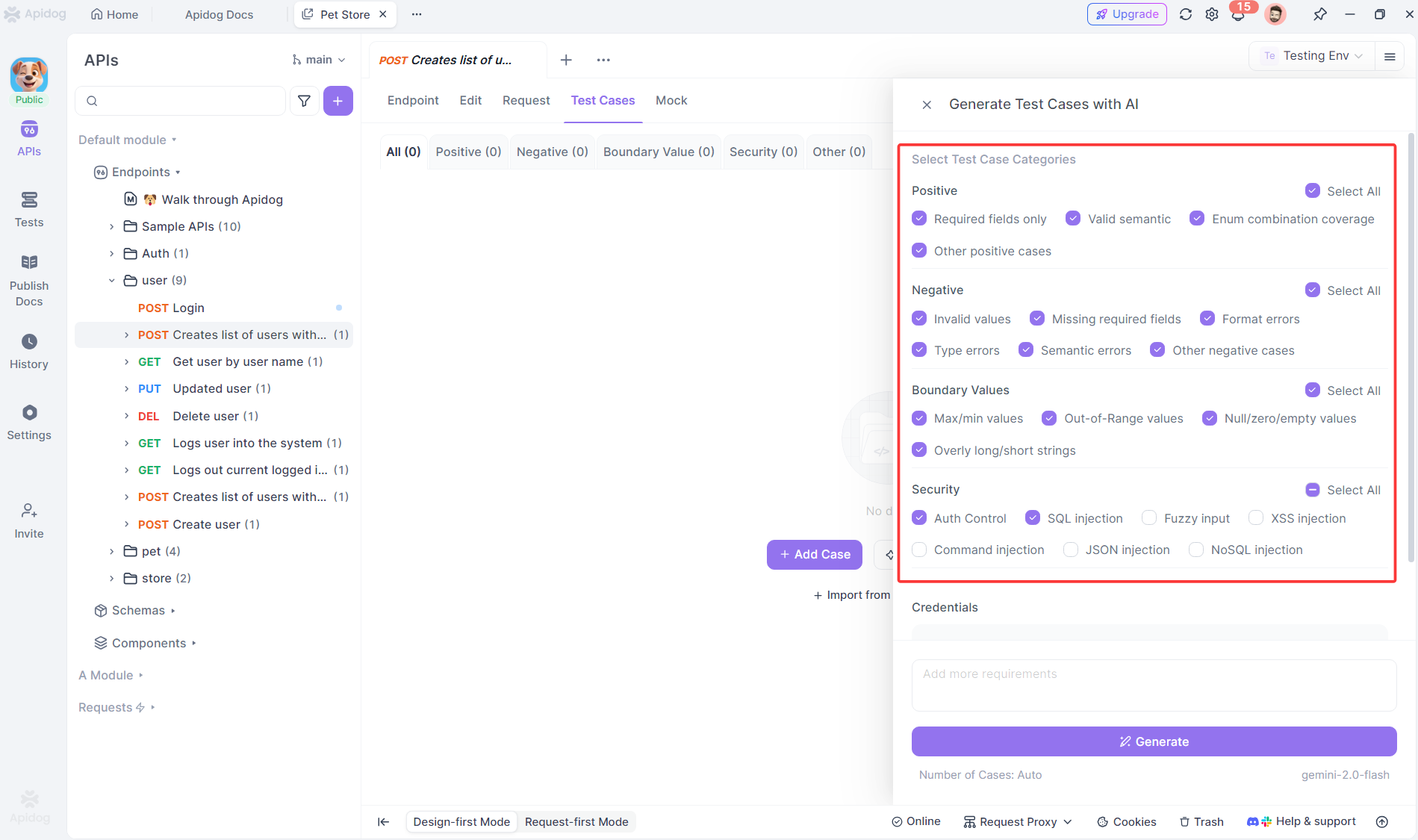

Automatic Test Case Generation

Apidog’s AI agent reads your OpenAPI spec and creates comprehensive tests:

# API Spec

paths:

/api/users:

post:

requestBody:

required: true

content:

application/json:

schema:

type: object

properties:

email:

type: string

format: email

name:

type: string

minLength: 1

Intelligent Test Execution

Apidog agents don’t just run tests—they optimize execution:

// Agent prioritizes based on risk

const executionPlan = {

runOrder: ['critical-path', 'high-risk', 'medium-risk', 'low-risk'],

parallelism: 10,

selfHeal: true,

retryFlaky: {

enabled: true,

maxAttempts: 3,

backoff: 'exponential'

}

};

Adaptive Maintenance

When your API changes, Apidog agent detects it and updates tests:

- New endpoint? Agent generates tests automatically

- Removed field? Agent removes dependent tests

- Changed type? Agent adjusts validation assertions

Frequently Asked Questions

Q1: Will Agentic AI Testing replace QA teams?

Ans: No—it elevates them. QA engineers become test strategists who train agents, review their findings, and focus on exploratory testing. Agents handle repetitive execution, humans handle creative risk analysis.

Q2: How do I trust what the agent is testing?

Ans: Apidog provides complete audit logs of agent decisions: why it chose tests, what it observed, how it adapted. You can review and approve agent-generated test suites before execution.

Q3: Can agents test complex business logic?

Ans: Yes, but they need rich context. Feed them user stories, acceptance criteria, and business rules. The more context, the better the agent’s test design.

Q4: What if the agent misses critical bugs?

Ans: Start with agent-generated tests as a baseline, then add human-designed tests for known risky areas. Over time, the agent learns from missed bugs and improves coverage.

Q5: How does Apidog handle authentication in agentic tests?

Ans: Apidog agents manage auth automatically—handling token refresh, OAuth flows, and credential rotation. You define auth once, and the agent uses it across all tests.

Conclusion

Agentic AI Testing represents the next evolution of quality assurance—moving from scripted automation to intelligent, autonomous validation. By delegating repetitive test execution to agents, teams achieve coverage levels impossible with manual approaches while freeing human testers to focus on strategic quality risks.

The technology is here today. Tools like Apidog make it accessible without massive infrastructure investment. Start small: let an agent generate tests for one API endpoint, review its work, and see the results. As confidence grows, expand agentic testing across your application.

The future of testing isn’t more humans writing more scripts—it’s smarter agents collaborating with humans to build software that actually works in the wild. That future is Agentic AI Testing, and it’s already transforming how modern teams ship quality code.