Google Gemini changes how developers and enthusiasts approach coding by supporting vibe coding, a method that uses advanced AI to turn natural language ideas into working applications. Developers generate code through conversational prompts, which allows rapid prototyping without many of the traditional barriers.

Engineers recognize that small, iterative adjustments can yield significant improvements in code readability and maintainability. Google Gemini supports this by automating complex setup, so you can focus on core logic. The system also incorporates multimodal capabilities, enabling apps that handle text, images, and more. You access it through Google AI Studio, where Gemini models process your prompts.

What Is Vibe Coding and How Does Google Gemini Enable It?

Vibe coding represents a paradigm shift in software development. Developers describe application ideas in natural language, and AI systems like Google Gemini interpret those descriptions to produce executable code. This approach, first popularized in early 2025, emphasizes collaboration between human intuition and AI precision. Google Gemini, as the core model, analyzes prompts to identify required components, such as user interfaces, backend logic, and integrations.

Google Gemini powers vibe coding through its multimodal reasoning abilities. The model reads context from the prompt and selects appropriate APIs and features automatically. For example, if a prompt requests an image-editing app, Google Gemini wires in tools like Imagen for generation tasks. This removes much of the manual configuration and can reduce development time from hours to minutes.

Moreover, vibe coding differs from traditional coding by prioritizing iteration over perfection. You refine outputs through successive prompts until the final product matches your vision. Google Gemini works well here because it keeps the conversation context across turns and builds on previous generations.
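The iterative loop described above can be pictured as a growing conversation history. The following is a minimal sketch, assuming the `contents` layout of the Gemini API with `user` and `model` roles; the helper `add_turn` and the placeholder reply are hypothetical and only illustrate the idea.

```python
from typing import Dict, List

Turn = Dict[str, object]


def add_turn(history: List[Turn], role: str, text: str) -> List[Turn]:
    """Return a new history with one more turn appended.

    The role is "user" for prompts and "model" for generated replies.
    """
    if role not in ("user", "model"):
        raise ValueError(f"unexpected role: {role!r}")
    return history + [{"role": role, "parts": [{"text": text}]}]


# Each refinement is sent together with everything that came before it,
# so the model can build on the previous generation.
history: List[Turn] = []
history = add_turn(history, "user", "Build a to-do list app.")
history = add_turn(history, "model", "<generated app code, placeholder>")
history = add_turn(history, "user", "Add a dark mode toggle.")

print(len(history))
```

Because every request carries the earlier turns, a short follow-up such as "Add a dark mode toggle." is enough for the model to know which app it applies to.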

Google Gemini employs transformer-based architectures to process inputs. It tokenizes prompts, applies attention mechanisms to weigh relevance, and generates code in languages like JavaScript or Python. The system's integration with Google AI Studio provides a user-friendly interface, where developers select models like Gemini 2.5 Pro for advanced reasoning.
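To make "attention mechanisms weigh relevance" more concrete, here is a toy sketch of scaled dot-product attention weights for a single query. The 2-dimensional vectors are made-up stand-ins for token embeddings, and nothing here reflects Gemini's actual internals or dimensions.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query: List[float], keys: List[List[float]]) -> List[float]:
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


# Toy 2-dimensional vectors standing in for token embeddings.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
query = [1.0, 0.0]
weights = attention_weights(query, keys)
print(weights)
```

Keys that point in a similar direction to the query receive larger weights, which is the sense in which the model "weighs relevance" between tokens.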

However, vibe coding requires responsible use. Engineers review AI-generated code for security vulnerabilities and efficiency. Google Gemini supports this by offering explanations alongside outputs, fostering transparency.
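Part of that review can be automated. As a minimal sketch, assuming the generated code is Python, the standard `ast` module can flag direct calls to builtins that often deserve a closer look. The `RISKY_CALLS` set is an illustrative choice, not a complete security policy.

```python
import ast
from typing import List, Tuple

# Illustrative list only; a real review would cover far more than this.
RISKY_CALLS = {"eval", "exec", "compile", "__import__"}


def find_risky_calls(source: str) -> List[Tuple[int, str]]:
    """Return (line number, name) for each direct call to a risky builtin."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in RISKY_CALLS:
                findings.append((node.lineno, node.func.id))
    return sorted(findings)


generated = "x = input()\nresult = eval(x)\nprint(result)\n"
print(find_risky_calls(generated))
```

A check like this complements, but does not replace, reading the generated code yourself.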

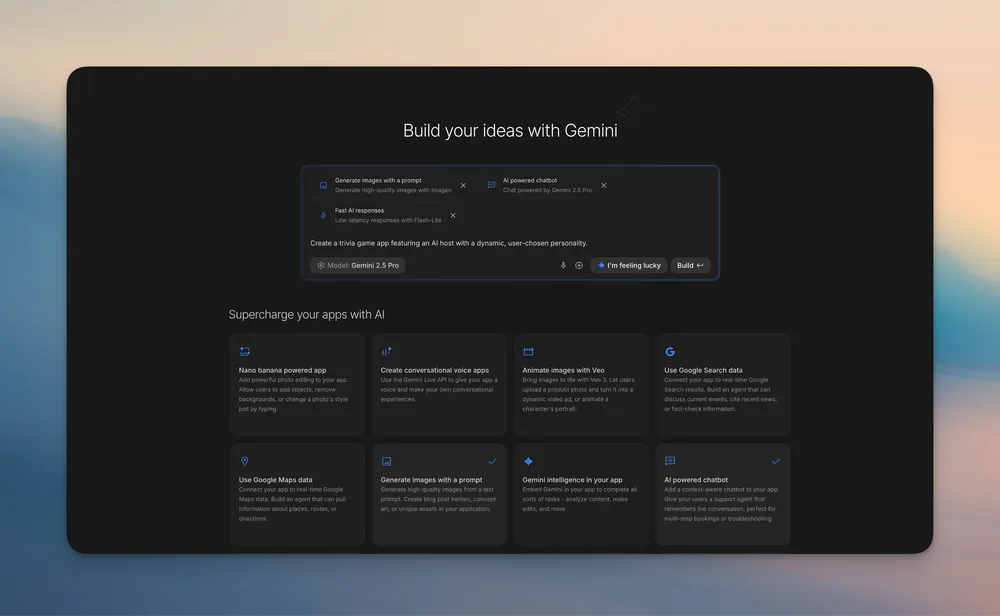

This visual illustrates the streamlined setup, where you input ideas directly.

The Technical Architecture Behind Google Gemini's Vibe Coding

Google Gemini operates on a sophisticated stack that includes large language models trained on vast datasets. The architecture features multiple layers: input processing, reasoning engines, and output generation. When you submit a prompt, Google Gemini splits it into tokens and maps them to embeddings, which capture semantic meaning.

Subsequently, the model applies chain-of-thought reasoning to break down complex requests. For instance, a prompt like "Build a photo transformer app" triggers Google Gemini to identify needs for camera access, image processing, and UI elements. It then assembles code using predefined templates and APIs.

Key components include:

- Multimodal Integration: Google Gemini handles text, images, and video via unified embeddings.

- API Auto-Wiring: The system connects to services like Veo for video generation or Google Search for data validation.

- Quota Management: Google Gemini monitors usage, switching to user-provided API keys when free limits are exhausted.
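As an illustration of the multimodal component listed above, the sketch below builds a request body that mixes a text part with an inline image. It assumes the `inline_data` part layout of the Gemini API (base64 data plus a MIME type); the function name and the placeholder bytes are hypothetical.

```python
import base64
import json
from typing import Dict


def build_multimodal_request(prompt: str, image_bytes: bytes, mime_type: str) -> Dict:
    """Build a request body with one text part and one inline image part."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "contents": [
            {
                "role": "user",
                "parts": [
                    {"text": prompt},
                    {"inline_data": {"mime_type": mime_type, "data": encoded}},
                ],
            }
        ]
    }


# Placeholder bytes; a real app would read an actual image file.
body = build_multimodal_request("Describe this photo.", b"\x89PNG placeholder", "image/png")
print(json.dumps(body)[:80])
```

Text and image travel in the same `parts` list, so the model receives them as one combined input.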

Furthermore, the brainstorming feature shown during loading uses Google Gemini to suggest enhancements in real time. It runs in parallel with the main build, so generating ideas does not delay it.
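The idea of producing suggestions without holding up the build can be sketched with two concurrent tasks. This is only an illustration of the pattern using Python's `concurrent.futures`; `build_app` and `brainstorm` are hypothetical stand-ins, and the source does not describe how AI Studio implements this internally.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def build_app(prompt: str) -> str:
    """Stand-in for the main code generation step (hypothetical)."""
    return f"app for: {prompt}"


def brainstorm(prompt: str) -> List[str]:
    """Stand-in for the suggestion step (hypothetical)."""
    return [f"{prompt} + voice commands", f"{prompt} + dark mode"]


def build_with_suggestions(prompt: str) -> Tuple[str, List[str]]:
    # Submit both tasks so neither waits for the other to finish.
    with ThreadPoolExecutor(max_workers=2) as pool:
        app_future = pool.submit(build_app, prompt)
        ideas_future = pool.submit(brainstorm, prompt)
        return app_future.result(), ideas_future.result()


app, ideas = build_with_suggestions("photo editor")
print(app, ideas)
```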

Engineers appreciate how Google Gemini optimizes for performance. It minimizes latency by caching common patterns, ensuring responsive interactions. However, limitations exist; highly specialized domains may require manual tweaks.
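Caching of common patterns can be illustrated with a memoized function. The sketch below uses `functools.lru_cache` from the standard library; `generate_snippet` is a hypothetical stand-in for an expensive generation step, and the actual caching strategy used by Gemini is not documented in this article.

```python
from functools import lru_cache

call_count = 0


@lru_cache(maxsize=128)
def generate_snippet(pattern: str) -> str:
    """Pretend to generate code for a common pattern (hypothetical stand-in)."""
    global call_count
    call_count += 1  # incremented only on cache misses
    return f"// boilerplate for {pattern}"


generate_snippet("login form")
generate_snippet("login form")  # served from the cache, no new work
generate_snippet("image upload")
print(call_count)
```

Repeated requests for the same pattern skip the expensive step, which is the general reason caching lowers latency.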

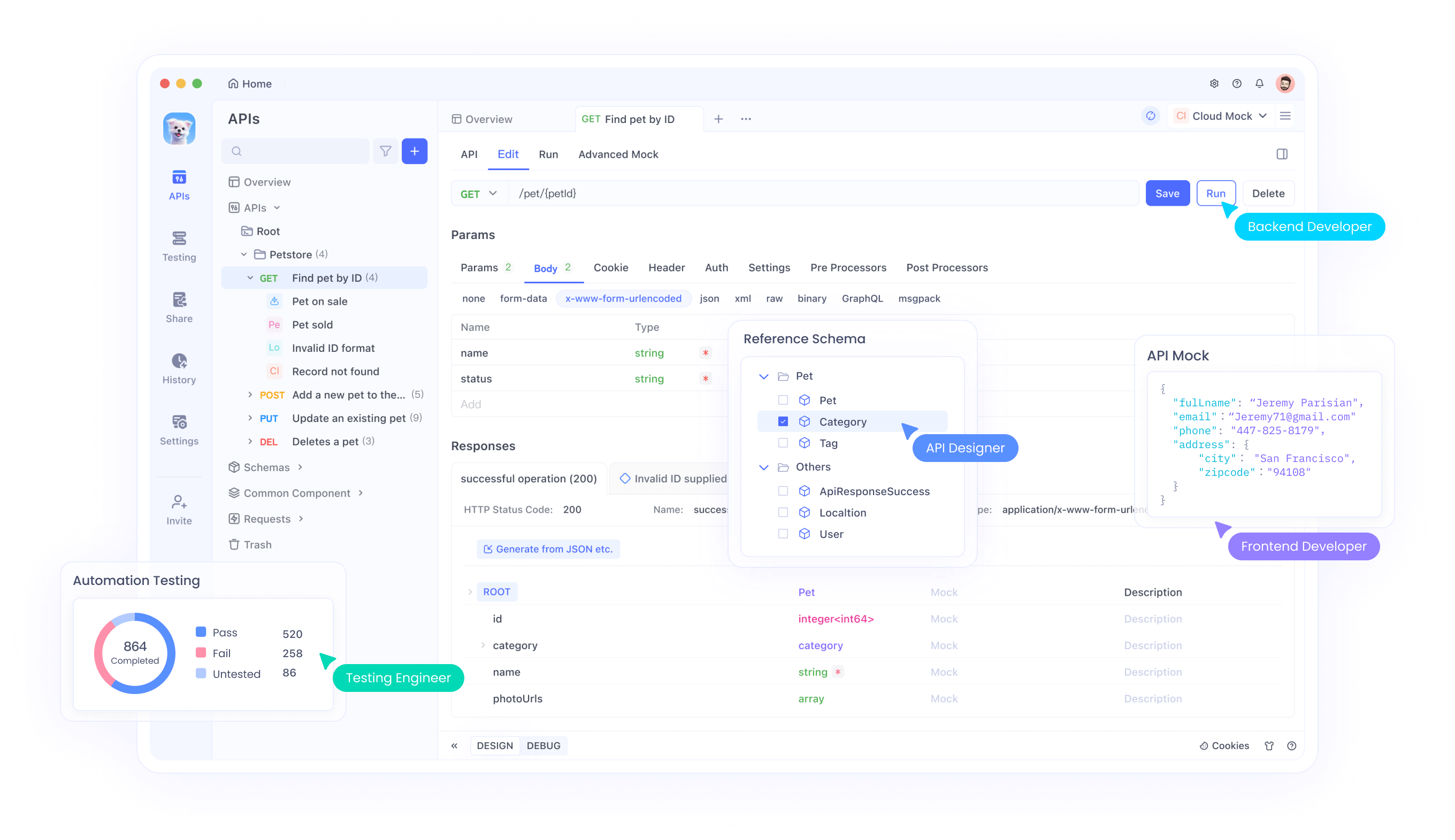

To extend capabilities, integrate with external tools. Apidog, for example, tests APIs that Google Gemini incorporates, verifying endpoints for reliability.

Getting Started with Vibe Coding in Google AI Studio

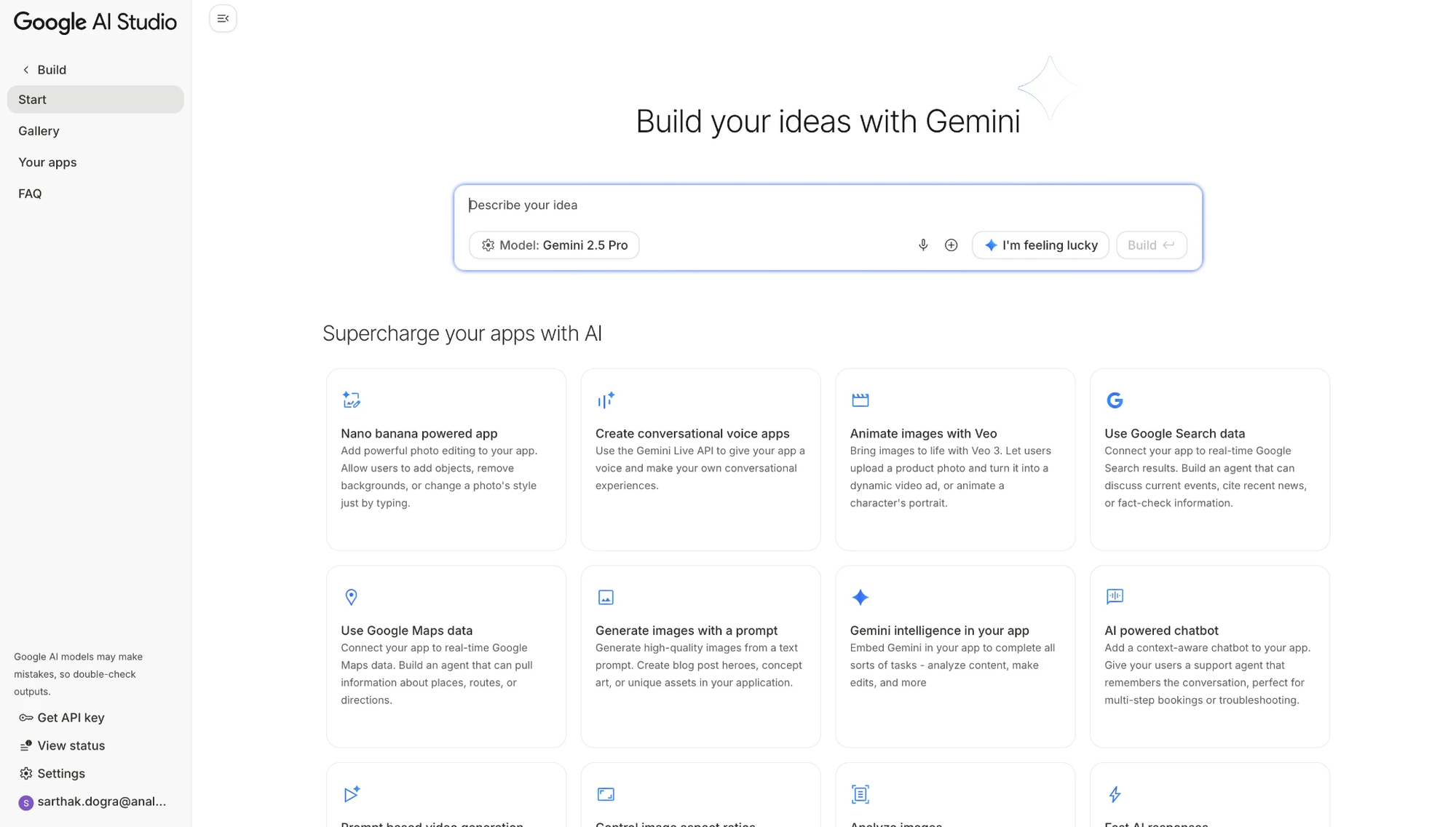

You begin vibe coding by navigating to Google AI Studio. Create an account if necessary, then access the Build tab. Here, Google Gemini presents options for model selection and feature activation.

First, choose a model, such as Gemini 2.5 Flash for quick iterations or Gemini 2.5 Pro for deeper reasoning. Next, enable features like Nano Banana for photo editing or Veo for animation.

Then, craft your prompt. Effective prompts specify functionality, such as "Develop an interactive chatbot for garden design with image generation." Google Gemini processes this, generating the app skeleton.
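One way to keep prompts specific is to assemble them from a goal, a feature list, and a list of constraints. The helper below is a hypothetical sketch of that habit, not a format that Google AI Studio requires.

```python
from typing import List


def build_prompt(goal: str, features: List[str], constraints: List[str]) -> str:
    """Assemble a structured prompt from a goal, features, and constraints."""
    lines = [f"Build {goal}."]
    if features:
        lines.append("Features:")
        lines.extend(f"- {item}" for item in features)
    if constraints:
        lines.append("Constraints:")
        lines.extend(f"- {item}" for item in constraints)
    return "\n".join(lines)


prompt = build_prompt(
    "an interactive chatbot for garden design",
    ["image generation for planting layouts", "chat history"],
    ["mobile-friendly layout"],
)
print(prompt)
```

Listing features and constraints separately makes it easier to change one of them in a later iteration without rewriting the whole prompt.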

Additionally, use the "I'm Feeling Lucky" button for random ideas. This leverages Google Gemini's creativity to propose concepts, complete with wired features.

Once built, the app appears in an editable interface. You test it directly within the studio, observing behaviors.

For API-heavy apps, Apidog proves invaluable. It allows you to mock and test calls that Google Gemini embeds, ensuring seamless operation.

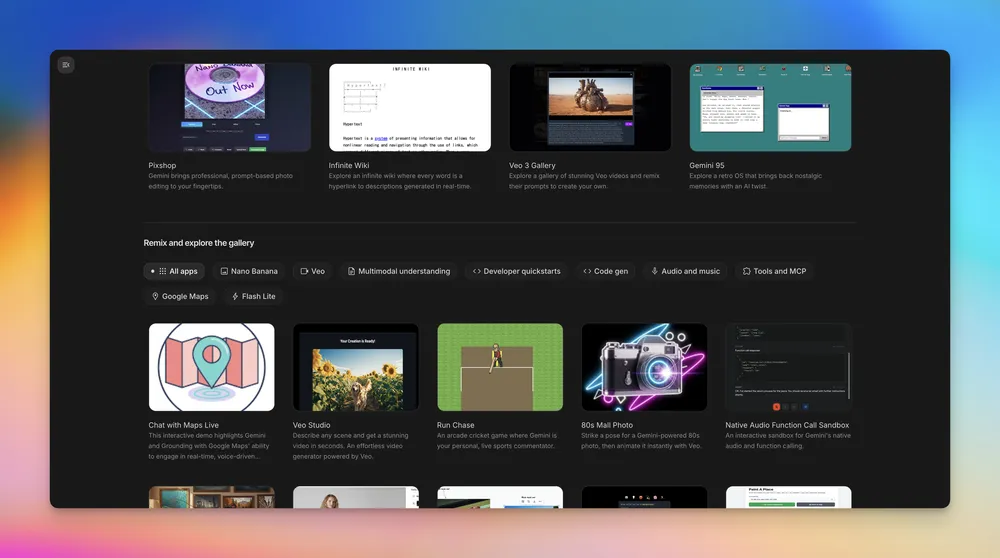

The gallery helps you discover existing projects that you can use as a starting point.

Step-by-Step Guide to Vibe Coding Your First App with Google Gemini

You start by logging into Google AI Studio and selecting the vibe coding mode. Enter a descriptive prompt, like "Create an app using nano banana where I can upload a picture of an object, drag it into a scene, and then generate that object in the scene - I want to use this to test furniture ideas".

Google Gemini analyzes the prompt, identifying requirements for camera input and image manipulation. It then assembles the code, integrating APIs automatically.

While building, the brainstorming screen displays Google Gemini-generated ideas, such as adding voice commands.

Upon completion, review the app. Activate Annotation Mode by highlighting elements, then instruct Google Gemini: "Change this button to blue and animate it."

Exploring Advanced Features in Google Gemini Vibe Coding

Google Gemini offers Annotation Mode for precise modifications. You select UI parts and provide natural language directives, which Google Gemini translates into code updates.

For instance, "Animate the image from left" triggers CSS animations via Google Gemini's understanding.
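To give a sense of what such an update might contain, the sketch below generates the kind of CSS a slide-in-from-left animation typically needs. The function name, selector, and timing values are assumptions for illustration; the code Gemini actually produces may look different.

```python
def slide_in_from_left_css(selector: str, duration_s: float = 0.5) -> str:
    """Return CSS that slides the selected element in from the left."""
    return (
        "@keyframes slide-in-left {\n"
        "  from { transform: translateX(-100%); opacity: 0; }\n"
        "  to { transform: translateX(0); opacity: 1; }\n"
        "}\n"
        f"{selector} {{\n"
        f"  animation: slide-in-left {duration_s}s ease-out both;\n"
        "}\n"
    )


css = slide_in_from_left_css("img.hero")
print(css)
```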

Moreover, the App Gallery serves as a repository. You browse, remix, and learn from existing projects, accelerating development.

Quota handling ensures continuity. Google Gemini notifies when limits approach, prompting API key addition.
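In your own code, the same behavior can be approximated with a fallback: try the free quota first, then retry with a personal key. This is a sketch under stated assumptions; `QuotaExceeded`, `call_with_fallback`, and `fake_send` are hypothetical names, and a real client would map the API's quota error onto this exception.

```python
from typing import Callable, Optional


class QuotaExceeded(Exception):
    """Raised by the caller-supplied function when a quota is exhausted."""


def call_with_fallback(
    send: Callable[[Optional[str]], str], user_key: Optional[str]
) -> str:
    """Try the free tier first, then retry once with the user's own key."""
    try:
        return send(None)  # None means "use the built-in free quota"
    except QuotaExceeded:
        if user_key is None:
            raise  # nothing to fall back to; surface the error
        return send(user_key)


def fake_send(api_key: Optional[str]) -> str:
    # Simulates a backend whose free quota is already used up.
    if api_key is None:
        raise QuotaExceeded("free limit reached")
    return f"ok with key ending {api_key[-4:]}"


print(call_with_fallback(fake_send, "my-secret-1234"))
```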

Integration with other Google services extends what you can build. Veo generates videos and Imagen handles images, both wired in by Google Gemini.

However, for custom APIs, Apidog facilitates design and testing, complementing Google Gemini's outputs.

Integrating Apidog with Google Gemini for Enhanced API Management

Apidog excels in API design and testing, perfectly suiting vibe-coded apps from Google Gemini.

First obtain a Gemini API key, then create a project in Apidog to call the endpoints.
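Before entering a call into a testing tool, it helps to see what the request looks like. The sketch below prepares, but does not send, a `generateContent` request with the standard library. The endpoint path and the `x-goog-api-key` header follow the public Gemini REST API as commonly documented; treat them as assumptions to verify against the current reference. The `GEMINI_API_KEY` variable name and the `YOUR_API_KEY` fallback are placeholders.

```python
import json
import os
import urllib.request

BASE_URL = "https://generativelanguage.googleapis.com/v1beta"


def build_generate_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) a generateContent request."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url=f"{BASE_URL}/models/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


# The key is read from an environment variable; the fallback is a placeholder.
api_key = os.environ.get("GEMINI_API_KEY", "YOUR_API_KEY")
request = build_generate_request("gemini-2.5-flash", "Say hello.", api_key)
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request)
```

The same URL, header, and JSON body are what you would enter when setting up the call in an API client.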

For instance, if your app uses Veo via API, Apidog mocks requests, validating parameters.

Apidog's interface allows importing OpenAPI specs, aligning with Google Gemini's generations.

Furthermore, debug sessions in Apidog reveal issues that Google Gemini might overlook.

Engineers use Apidog to chain calls, ensuring multimodal apps function cohesively.
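The logic of a chained test can be sketched in a few lines: each step receives the previous step's output, and the chain stops at the first error. The step functions below are hypothetical stand-ins for image and video generation calls, not real API clients.

```python
from typing import Callable, Dict, List

Step = Callable[[Dict], Dict]


def run_chain(steps: List[Step], initial: Dict) -> Dict:
    """Run steps in order, feeding each step's output into the next."""
    data = initial
    for step in steps:
        data = step(data)
        if "error" in data:
            break  # stop early so later steps never see bad input
    return data


def generate_image(data: Dict) -> Dict:
    # Hypothetical stand-in for an image generation call.
    return {**data, "image_id": f"img-for-{data['prompt']}"}


def animate_image(data: Dict) -> Dict:
    # Hypothetical stand-in for a video generation call.
    if "image_id" not in data:
        return {**data, "error": "missing image_id"}
    return {**data, "video_id": f"vid-from-{data['image_id']}"}


result = run_chain([generate_image, animate_image], {"prompt": "sofa"})
print(result["video_id"])
```

Running the steps in the wrong order surfaces the missing `image_id` immediately, which is the kind of dependency problem chained tests are meant to catch.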

Download Apidog free to experience this synergy.

Best Practices for Vibe Coding with Google Gemini

- Craft clear prompts: Specify the languages and features you want.

- Review code: Scan for inefficiencies, since Google Gemini optimizes well in general but not always perfectly.

- Test incrementally: Build something small, then expand.

- Handle quotas: Monitor usage and add a personal API key when needed.

- Document iterations: Track prompts for reproducibility.

- Use Apidog for API layers: Automate tests for the endpoints your app calls.

- Avoid over-reliance: Understand the output so that you own the code.
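Tracking prompts for reproducibility can be as simple as appending each one to a log file. The sketch below writes one JSON record per line; the file name, field names, and helper functions are illustrative assumptions rather than a feature of Google AI Studio.

```python
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path
from typing import Dict, List


def log_prompt(log_path: Path, prompt: str, model: str) -> None:
    """Append one prompt record as a JSON line."""
    record = {
        "time": datetime.now(timezone.utc).isoformat(),
        "model": model,
        "prompt": prompt,
    }
    with log_path.open("a", encoding="utf-8") as handle:
        handle.write(json.dumps(record) + "\n")


def read_log(log_path: Path) -> List[Dict]:
    """Read all records back in the order they were written."""
    with log_path.open(encoding="utf-8") as handle:
        return [json.loads(line) for line in handle if line.strip()]


# A temporary directory keeps the example self-contained.
log_file = Path(tempfile.mkdtemp()) / "prompts.jsonl"
log_prompt(log_file, "Build a to-do list app.", "gemini-2.5-flash")
log_prompt(log_file, "Add a dark mode toggle.", "gemini-2.5-flash")
print([entry["prompt"] for entry in read_log(log_file)])
```

Replaying the logged prompts in order gives you a record of how an app reached its current state.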

The Future of Vibe Coding Powered by Google Gemini

Google Gemini evolves, promising deeper integrations and faster generations.

Future updates may include real-time collaboration or advanced debugging.

As AI advances, vibe coding blurs lines between novices and experts.

However, ethical considerations persist: Ensure bias-free outputs.

With tools like Apidog, the ecosystem strengthens, supporting complex deployments.

In summary, Google Gemini democratizes development through vibe coding. You now possess the knowledge to implement it effectively. Experiment, iterate, and create innovative apps today.