If you enjoy the smooth workflow of Claude Code, Anthropic’s command-line coding tool, you probably know that by default it expects the Anthropic API format. But what if you want to use a different model, or try new models that aren’t available through Anthropic’s API? That’s where OpenRouter comes in: it provides a unified, OpenAI-compatible API to hundreds of models from many different providers.

By bridging the two systems, you get the best of both worlds: Claude Code’s developer-friendly interface and OpenRouter’s wide model catalog. That combination is what we mean by “Claude Code with OpenRouter.”

The trick is to use a router (or proxy) that translates between the two API formats. Once it’s set up, you run Claude Code as usual, but with OpenRouter powering the backend.

Want an integrated, All-in-One platform for your Developer Team to work together with maximum productivity?

Apidog delivers all your demands, and replaces Postman at a much more affordable price!

Key Advantages of Using Claude Code with OpenRouter

Combining Claude Code and OpenRouter unlocks several benefits:

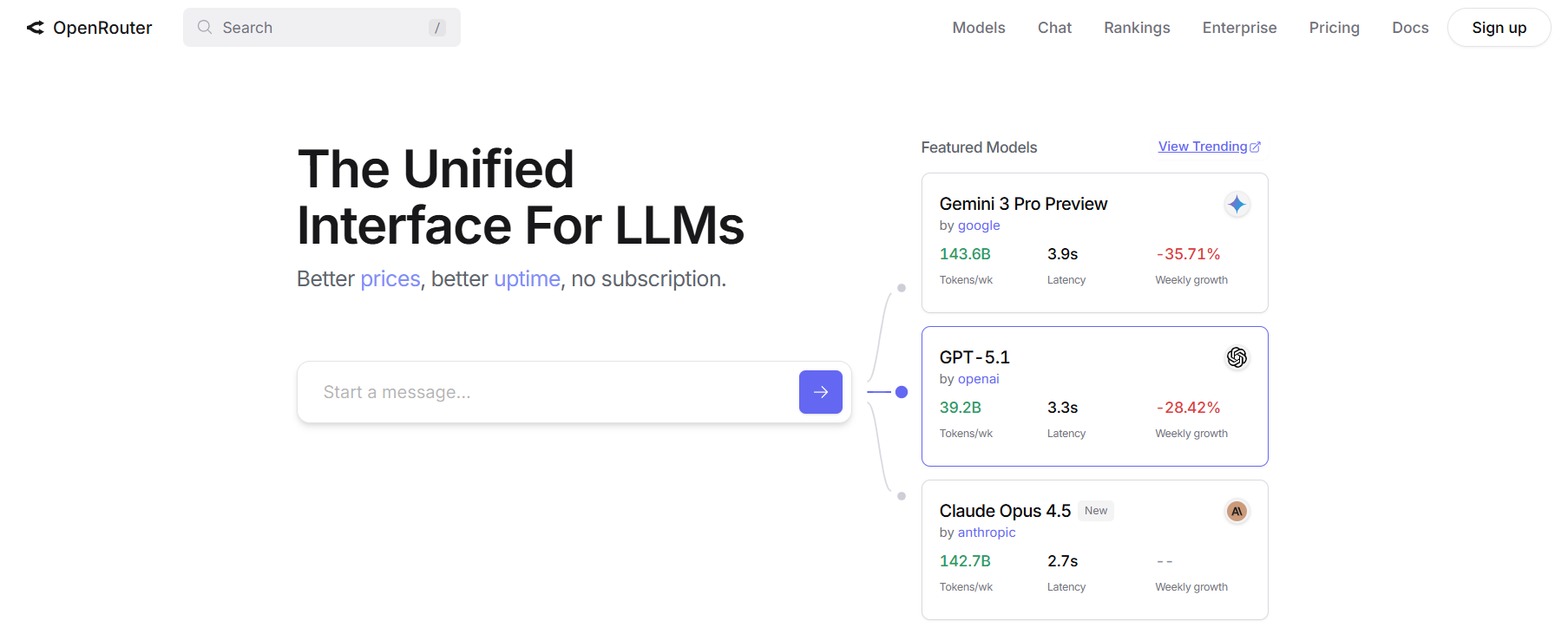

- Access to 400+ models — including Claude variants, GPT-style models, open-source LLMs, and more.

- No need for Anthropic subscription — you pay only for what you use via Openrouter’s pay-as-you-go pricing.

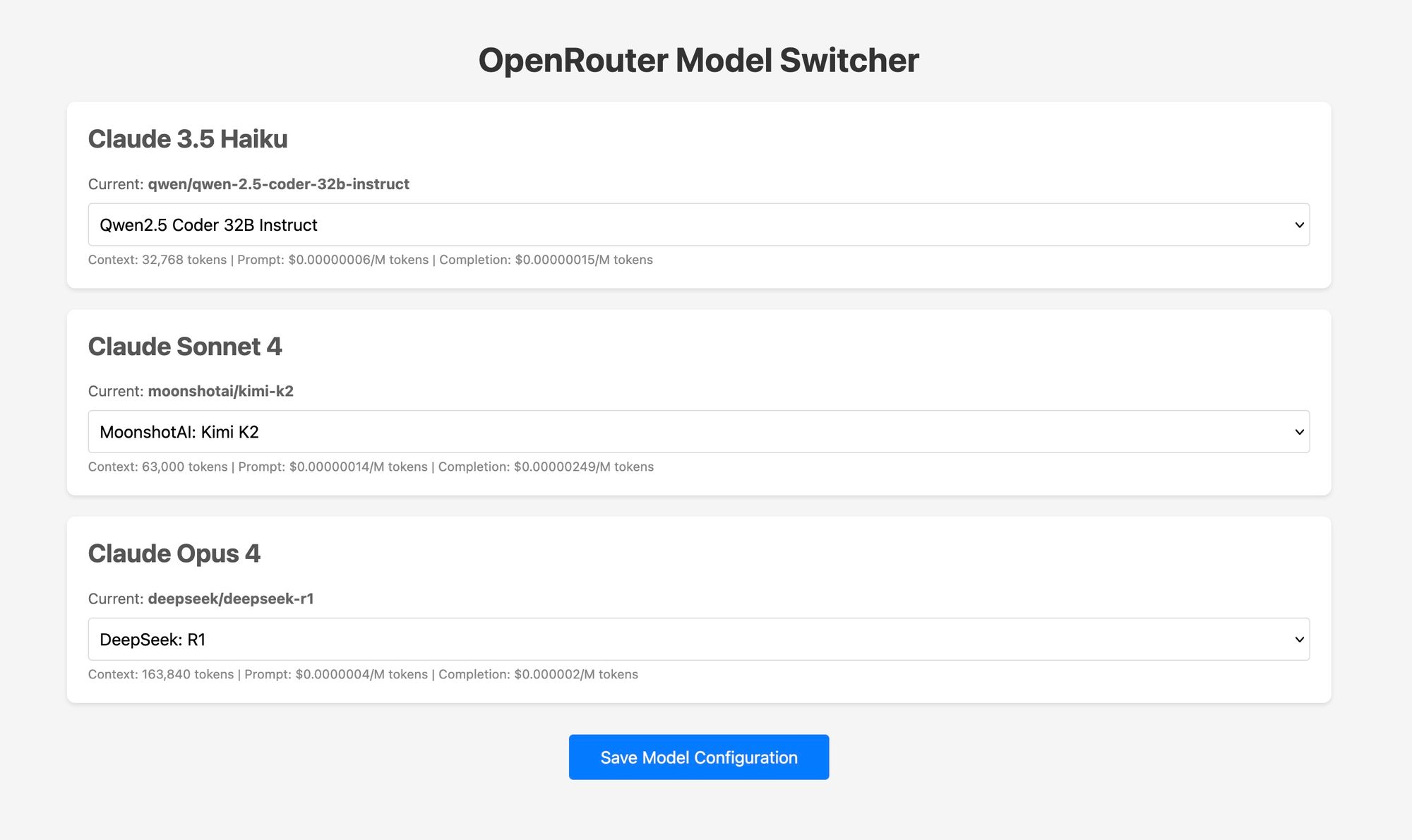

- Flexible model switching — change models mid-session in Claude Code with

/model, or route based on task type (lightweight vs reasoning, cost vs performance). - Cost optimization — low-cost or free models for routine tasks, powerful models only when needed: ideal for budget-conscious developers.

- Local or cloud-based routing — run everything locally for privacy, or host your router for shared team use, CI/CD, or cloud automation.

- Tooling & flexibility — some routers support advanced features like streaming, model fallback, multiple provider multiplexing, and integration with broader dev workflows.

Prerequisites

Before you begin, make sure you have:

1. Claude Code installed globally (for example via npm install -g @anthropic-ai/claude-code).

2. An OpenRouter account with a valid API key (it starts with sk-or-); you can create an account at openrouter.ai.

3. A router or proxy to handle the format conversion (a Docker-based router is easiest, but Node.js-based routers also work).

4. Basic comfort with environment variables and the command line.
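A quick preflight check can confirm these prerequisites before you wire anything together. This is a minimal sketch: `check_prereqs` and `looks_like_openrouter_key` are hypothetical helper names made up for this example. The first only checks that the tools are on your PATH, and the second only checks the sk-or- prefix; neither verifies that your key actually works with OpenRouter.

```shell
# Hypothetical helper: succeeds if the string starts with the sk-or- prefix
# used by OpenRouter keys. It does NOT validate the key with OpenRouter.
looks_like_openrouter_key() {
  case "$1" in
    sk-or-?*) return 0 ;;
    *) return 1 ;;
  esac
}

# Hypothetical helper: report missing prerequisite commands.
# Fails if claude is missing, or if neither docker nor node is installed.
check_prereqs() {
  status=0
  if ! command -v claude >/dev/null 2>&1; then
    echo "missing: claude (npm install -g @anthropic-ai/claude-code)"
    status=1
  fi
  if ! command -v docker >/dev/null 2>&1 && ! command -v node >/dev/null 2>&1; then
    echo "missing: docker or node (needed to run a router)"
    status=1
  fi
  return "$status"
}
```

For example, `check_prereqs && looks_like_openrouter_key "$OPENROUTER_API_KEY"` (assuming you keep your key in an `OPENROUTER_API_KEY` variable) succeeds only when both checks pass.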

With this in place, you point Claude Code at the router, which forwards requests to OpenRouter and passes the responses back.

Method 1: y-router (Simplest and Recommended)

One of the most widely used routers for this setup is y-router. It translates between the Anthropic API format that Claude Code expects and OpenRouter’s OpenAI-style API (source: github.com/luohy15/y-router). Here’s how to set it up step by step:

1. Deploy y-router locally (Docker recommended):

```shell
git clone https://github.com/luohy15/y-router.git
cd y-router
docker compose up -d
```

This brings up a local router service listening (by default) on http://localhost:8787.

2. Configure your environment variables so that Claude Code sends its requests to y-router, not directly to Anthropic:

```shell
export ANTHROPIC_BASE_URL="http://localhost:8787"
export ANTHROPIC_AUTH_TOKEN="sk-or-<your-openrouter-key>"
export ANTHROPIC_MODEL="z-ai/glm-4.5-air"   # a fast, lightweight model
# or: export ANTHROPIC_MODEL="z-ai/glm-4.5" # a more powerful model
```

3. Run Claude Code:

```shell
claude
```

The interface starts up as usual. If you type /model, you’ll see the OpenRouter model you configured. Congrats: you’re now using Claude Code with OpenRouter. (ishan.rs)

This method keeps routing local, simple, and under your control — ideal for developers who like privacy and minimal overhead.
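If you switch between OpenRouter models often, you can wrap the step 2 exports in a shell function and add it to your ~/.bashrc or ~/.zshrc. This is a minimal sketch: `use_openrouter` is a hypothetical name, and it assumes y-router is listening on its default http://localhost:8787 and that your key is stored in an `OPENROUTER_API_KEY` variable (a convention for this example, not something y-router requires).

```shell
# Hypothetical helper: point Claude Code at a local y-router instance.
# Usage: use_openrouter [model]   (defaults to the lightweight model)
use_openrouter() {
  if [ -z "${OPENROUTER_API_KEY:-}" ]; then
    echo "OPENROUTER_API_KEY is not set" >&2
    return 1
  fi
  export ANTHROPIC_BASE_URL="http://localhost:8787"
  export ANTHROPIC_AUTH_TOKEN="$OPENROUTER_API_KEY"
  export ANTHROPIC_MODEL="${1:-z-ai/glm-4.5-air}"
}
```

With that in place, `use_openrouter z-ai/glm-4.5 && claude` starts a session on the more powerful model.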

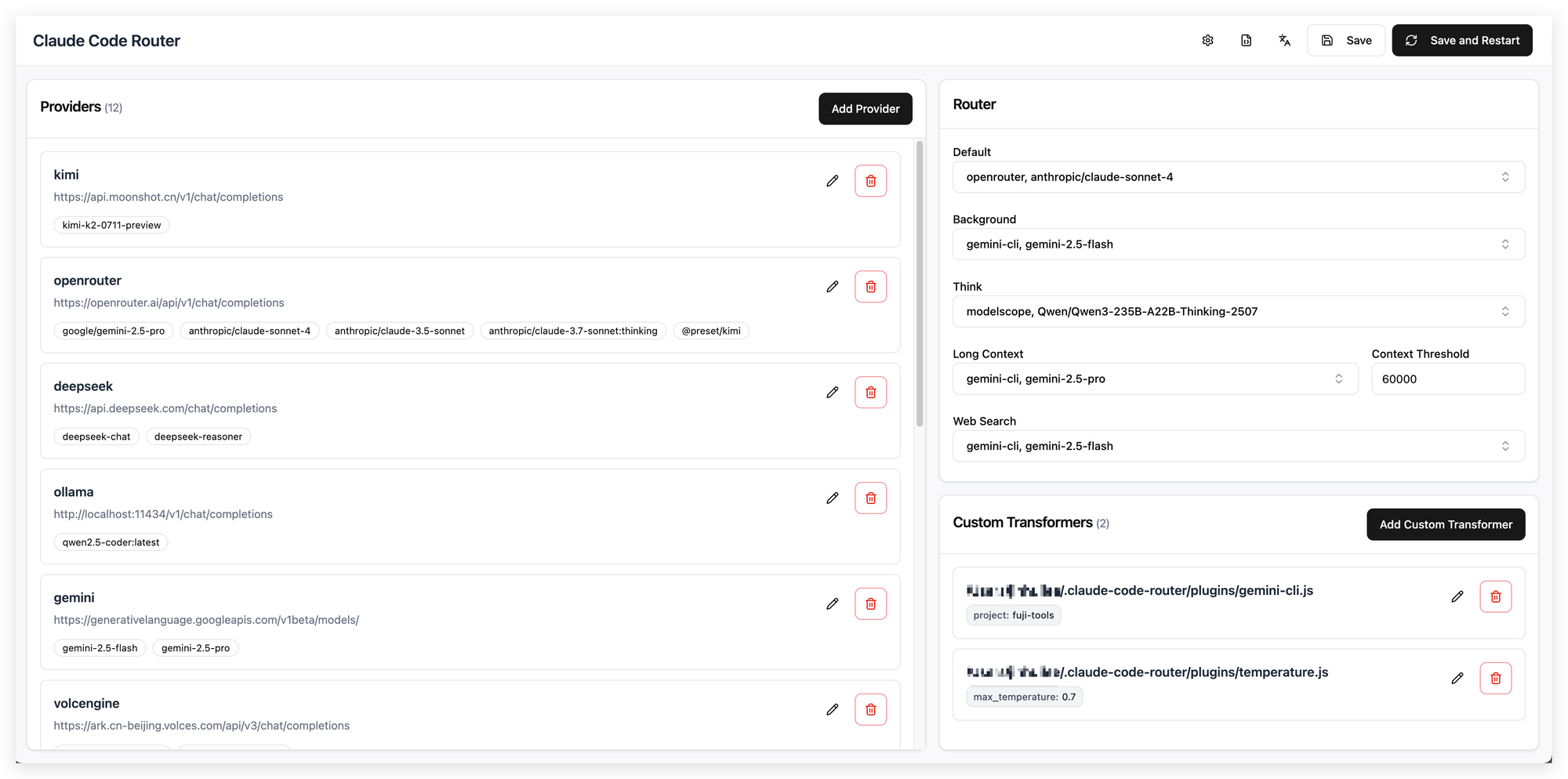

Method 2: Claude Code Router (npm-based, Feature-Rich)

If you’d rather not use Docker, another robust option is Claude Code Router, a Node.js-based tool that gives Claude Code access to external providers (source: github.com/musistudio/claude-code-router). Here’s how to set it up:

1. Install globally:

```shell
npm install -g @musistudio/claude-code-router
```

Then create a config file (e.g. ~/.claude-code-router/config.json) with your preferred providers and models. A common setup adds OpenRouter as a provider, with your API key and the list of models you want to use (more details on this method at lgallardo.com).

2. Start the router:

```shell
ccr start
```

Once it’s running, set ANTHROPIC_BASE_URL to the router’s URL and run claude as usual. The router translates requests and lets you switch between models dynamically, with support for fallback behaviour, routing rules, and more.

This method is powerful if you want more control over model routing, fallback policies, or integration in larger toolchains (like CI/CD, automated scripts, or multi-model experiments).
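To make both steps concrete, here is a sketch that writes a config using OpenRouter as the only provider and then points Claude Code at the running router. Treat all of it as an assumption to check against the Claude Code Router README: the field names (`Providers`, `api_base_url`, `transformer`, `Router`), the default port 3456, and the placeholder token. The helper names `write_ccr_config` and `use_ccr` are hypothetical, the model IDs are simply the ones from Method 1, and `OPENROUTER_API_KEY` is an assumed variable holding your key.

```shell
# Hypothetical helper: write a minimal Claude Code Router config that uses
# OpenRouter as its only provider. Field names follow the project's README
# at the time of writing; verify them against the current version.
# Usage: write_ccr_config [config_dir]   (default: ~/.claude-code-router)
write_ccr_config() {
  config_dir="${1:-$HOME/.claude-code-router}"
  if [ -z "${OPENROUTER_API_KEY:-}" ]; then
    echo "OPENROUTER_API_KEY is not set" >&2
    return 1
  fi
  mkdir -p "$config_dir" || return 1
  # Unquoted EOF delimiter, so ${OPENROUTER_API_KEY} is expanded into the file.
  cat > "$config_dir/config.json" <<EOF
{
  "Providers": [
    {
      "name": "openrouter",
      "api_base_url": "https://openrouter.ai/api/v1/chat/completions",
      "api_key": "${OPENROUTER_API_KEY}",
      "models": ["z-ai/glm-4.5", "z-ai/glm-4.5-air"],
      "transformer": { "use": ["openrouter"] }
    }
  ],
  "Router": {
    "default": "openrouter,z-ai/glm-4.5",
    "background": "openrouter,z-ai/glm-4.5-air"
  }
}
EOF
  # The file now holds your API key, so make it readable only by you.
  chmod 600 "$config_dir/config.json"
}

# Hypothetical helper: point Claude Code at a running router.
# Assumes the default port 3456; pass another URL if yours differs.
use_ccr() {
  export ANTHROPIC_BASE_URL="${1:-http://127.0.0.1:3456}"
  # The real OpenRouter key lives in config.json; this placeholder token is an
  # assumption that only holds if you have not enabled auth on the router.
  export ANTHROPIC_AUTH_TOKEN="${ANTHROPIC_AUTH_TOKEN:-ccr-placeholder}"
}
```

A typical sequence would be `write_ccr_config`, then `ccr start`, then `use_ccr && claude`. The project README also documents a `ccr code` command that launches Claude Code already pointed at the router, which may make `use_ccr` unnecessary.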

Method 3: Direct Openrouter Proxy (Minimal Setup for Quick Testing)

If you just want a quick test without running a full router, you can point Claude Code directly at an OpenRouter-compatible proxy or minimal adapter. Several community projects on GitHub aim to make this easier.

For example, you might set:

```shell
export ANTHROPIC_BASE_URL="https://proxy-your-choice.com"
export ANTHROPIC_AUTH_TOKEN="sk-or-<your-key>"
export ANTHROPIC_MODEL="openrouter/model-name"
```

Then run Claude Code. This approach is useful for ephemeral tests or quick experiments. However, it may lack robustness (e.g. for streaming, tool-calling, or long-term sessions), depending on the proxy implementation.
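Because the proxy receives your OpenRouter key, a quick check before launching is cheap insurance. The hypothetical `check_claude_env` helper below only verifies that the three variables above are set and that the base URL uses HTTPS unless it points at localhost; it is a sanity check, not a security guarantee.

```shell
# Hypothetical helper: sanity-check the variables Claude Code will use.
# Returns non-zero (and says why on stderr) if anything looks wrong.
check_claude_env() {
  for var in ANTHROPIC_BASE_URL ANTHROPIC_AUTH_TOKEN ANTHROPIC_MODEL; do
    # Indirect lookup of the variable named in $var (names are fixed above).
    eval "value=\${$var:-}"
    if [ -z "$value" ]; then
      echo "$var is not set" >&2
      return 1
    fi
  done
  case "$ANTHROPIC_BASE_URL" in
    https://*) return 0 ;;
    http://localhost|http://localhost:*|http://localhost/*) return 0 ;;
    http://127.0.0.1|http://127.0.0.1:*|http://127.0.0.1/*) return 0 ;;
    *)
      echo "refusing non-HTTPS remote URL: $ANTHROPIC_BASE_URL" >&2
      return 1
      ;;
  esac
}
```

Run it as `check_claude_env && claude` so Claude Code only starts when the settings look sane.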

Best Practices & Tips for a Smooth Experience

- Check model compatibility: not all models support advanced features like tool calls or long context windows. Use lighter models for simple tasks and more capable ones for heavy work like reasoning or coding.

- Secure your API key: treat your OpenRouter API key as a secret; store it securely and never expose it client-side.

- Manage costs: monitor token usage with large models, and consider prompt caching, fallback models, or task-based routing to balance cost and performance.

- Test your routing setup: after configuration, run a simple command (e.g. claude --model <model>) to confirm that requests route correctly.

- Use fallback routing for reliability: in multi-model setups, configure fallbacks so that if one model is unavailable, the router can switch to another automatically.
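On managing costs: OpenRouter lists model prices per million input and output tokens, so a rough per-request estimate is (input_tokens × input_price + output_tokens × output_price) / 1,000,000. The hypothetical `estimate_cost` helper below does that arithmetic with awk. The prices in the example are placeholders, not real OpenRouter rates; look up current numbers on each model’s page.

```shell
# Hypothetical helper: estimate the cost of a request in US dollars.
# Arguments: input_tokens output_tokens input_price_per_M output_price_per_M
estimate_cost() {
  awk -v itok="$1" -v otok="$2" -v iprice="$3" -v oprice="$4" \
    'BEGIN { printf "%.4f\n", (itok * iprice + otok * oprice) / 1000000 }'
}
```

For example, `estimate_cost 200000 20000 0.20 1.10` prints 0.0620, about six cents for 200k input and 20k output tokens at those placeholder rates.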
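Router-side fallback is configured in the router itself, but you can approximate the idea from the shell for one-off, non-interactive runs. Note that this is a different, client-side technique: the sketch tries each model in turn with Claude Code’s print mode (`claude -p`) and stops at the first run that exits successfully. `run_with_fallback` and the `CLAUDE_BIN` override are hypothetical names; the override exists only so the function can be tested without calling a real model.

```shell
# Hypothetical helper: client-side fallback across models.
# Usage: run_with_fallback "prompt" model1 [model2 ...]
# CLAUDE_BIN lets you substitute another command (e.g. for testing).
run_with_fallback() {
  prompt="$1"
  shift
  for model in "$@"; do
    if "${CLAUDE_BIN:-claude}" -p --model "$model" "$prompt"; then
      return 0
    fi
    echo "model $model failed, trying next" >&2
  done
  echo "all models failed" >&2
  return 1
}
```

For example, `run_with_fallback "Explain the build script" z-ai/glm-4.5 z-ai/glm-4.5-air`. Unlike router-side fallback, this restarts the whole request on the next model, so it suits one-shot prompts rather than interactive sessions.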

Frequently Asked Questions

Q1. Do I need to pay Anthropic to use Claude Code with OpenRouter?

No. When configured with OpenRouter (via a router or proxy), Claude Code sends requests using your OpenRouter API key, so you don’t need a paid Anthropic subscription.

Q2. Can I switch models on the fly within the same Claude Code session?

Yes. Many routers (and Claude Code itself) allow you to switch models using /model <model_name>. This works mid-conversation in most cases.

Q3. Are all OpenRouter models compatible with Claude Code features (like tool execution and streaming)?

Not always. Some “text-only” or lighter models might not support tool calling, long context, or streaming. For complex workflows, use models known to support those features.

Q4. Is a local Docker router more secure than a hosted one?

Generally yes. Running a router locally gives you full control and avoids exposing your API key to external services. Hosted routers are convenient but may pose security or reliability trade-offs.

Q5. Can I integrate this setup into CI/CD or automated workflows?

Absolutely. Tools like Claude Code Router support configuration files and environment variables, which makes them easy to integrate into automation pipelines (GitHub Actions, scripts, etc.).
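In a pipeline, the same environment variables apply; the main difference is running Claude Code non-interactively with print mode (`-p`). A minimal sketch of a CI step, assuming your OpenRouter key is injected as a secret named `OPENROUTER_API_KEY` and that a router is reachable at `ROUTER_URL`. Those names, the `CLAUDE_CI_MODEL` override, the `ci_claude` function, and the `CLAUDE_BIN` test hook are all hypothetical.

```shell
# Hypothetical CI step: run one non-interactive Claude Code prompt via a router.
# Expects OPENROUTER_API_KEY (CI secret); ROUTER_URL and CLAUDE_CI_MODEL are optional.
ci_claude() {
  if [ -z "${OPENROUTER_API_KEY:-}" ]; then
    echo "OPENROUTER_API_KEY secret is missing" >&2
    return 1
  fi
  # Prefix assignments apply only to this one invocation.
  ANTHROPIC_BASE_URL="${ROUTER_URL:-http://localhost:8787}" \
  ANTHROPIC_AUTH_TOKEN="$OPENROUTER_API_KEY" \
    "${CLAUDE_BIN:-claude}" -p --model "${CLAUDE_CI_MODEL:-z-ai/glm-4.5-air}" "$1"
}
```

A CI job could then call something like `ci_claude "Summarize the changes in this diff"` and save the output as a build artifact.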

Conclusion

Using Claude Code with OpenRouter is an elegant way to avoid single-provider lock-in while keeping a familiar, streamlined developer interface. Whether you run a local router via Docker, use a Node.js-based router, or test through a minimal proxy, you open the door to a large catalog of models, flexible cost options, and tailored workflows.

For individual developers, side projects, or teams looking to optimize AI-assisted coding without high overhead, this setup offers a compelling balance of control, flexibility, and scalability. With a few configuration steps, you can greatly expand what your AI assistant can do, then switch or scale as your needs evolve.

Give it a try: your next code session may soon be powered by a model you never thought possible — all under the familiar Claude Code interface.
