Ever wondered how modern apps scale effortlessly without you managing a single server? That's the magic of serverless APIs—a game-changer in cloud computing that's reshaping how we build and deploy backend services. If you're a developer tired of server provisioning or a business owner seeking cost-efficient scaling, serverless APIs might just be your new best friend. In this deep dive, we'll unpack the infrastructure behind serverless APIs, weigh their benefits and drawbacks, spotlight popular tools, compare them to traditional serverful backends, explore testing with Apidog, and answer the big question: when should you go serverless? Drawing from expert insights, let's break it down technically and see why serverless APIs are exploding in popularity in 2025.


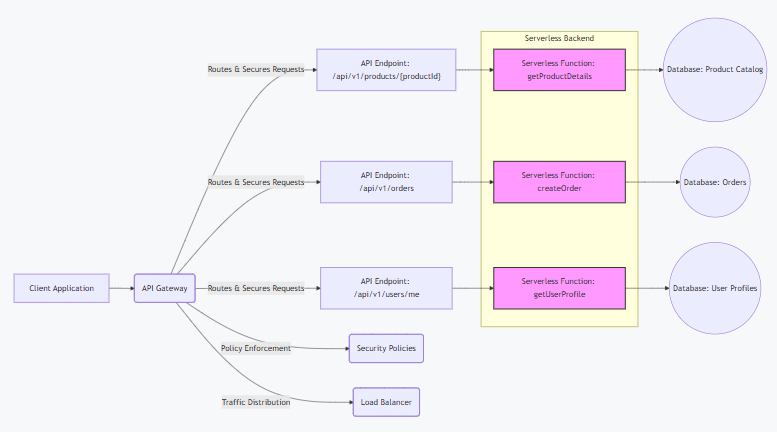

Understanding the Infrastructure and Architecture of Serverless APIs

At its core, a serverless API is an API built on serverless computing, where cloud providers handle the backend infrastructure, allowing developers to focus solely on code. Unlike traditional setups, serverless APIs run on Function as a Service (FaaS) platforms, executing code in stateless containers triggered by events like HTTP requests.

Technically, the architecture revolves around event-driven computing. When a request hits your serverless API endpoint, the provider (e.g., AWS Lambda) spins up a container, runs your function, and scales it automatically based on demand. This uses a pay-per-use model—no idle servers mean no wasted costs. Key elements include:

- API Gateway: Acts as the entry point, handling routing, authentication (e.g., JWT or OAuth), rate limiting, and request transformation. For instance, Amazon API Gateway integrates with Lambda and can cache responses for lower latency.

- FaaS Layer: Your code lives here as functions. Each function is isolated, with execution times limited (e.g., 15 minutes on Lambda) to encourage microservices design.

- Backend Services: Serverless APIs connect to managed databases like DynamoDB (NoSQL) or Aurora Serverless (SQL), storage like S3, and queues like SQS for asynchronous processing.

- Scaling Mechanics: The provider handles load balancing and instance placement internally. Under high traffic, function instances are replicated across availability zones, and that redundancy underpins the provider's availability SLA (99.95% monthly for AWS Lambda).
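To make the gateway-to-function flow concrete, here is a minimal sketch of a function behind an API gateway. It assumes the Lambda proxy-integration event shape (`httpMethod`, `path`, `queryStringParameters`) and response shape (`statusCode`, `headers`, `body`); the `/hello` route and the `_response` helper are hypothetical names chosen for illustration.

```python
import json


def _response(status, payload):
    """Build a proxy-integration style response (hypothetical helper)."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def handler(event, context):
    """Entry point invoked once per HTTP request forwarded by the gateway."""
    method = event.get("httpMethod", "GET")
    path = event.get("path", "/")

    # Illustrative route: GET /hello?name=...
    if method == "GET" and path == "/hello":
        # The gateway passes None when there is no query string.
        params = event.get("queryStringParameters") or {}
        name = params.get("name", "world")
        return _response(200, {"message": f"Hello, {name}!"})

    return _response(404, {"error": f"No route for {method} {path}"})
```

Because the function keeps no state between calls, it can be run locally by passing a hand-written event dictionary, with no cloud account involved.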

Compared to monolithic architectures, serverless APIs decompose into granular functions, enabling independent scaling. However, this introduces cold starts: initial latency (typically 50-500ms, and longer for large packages or heavy runtimes) when functions boot from idle. Mitigation strategies include provisioned concurrency (keeping instances pre-initialized) or scheduled warm-up pings using community tools such as lambda-warmer.
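A common complement to those mitigations is to do expensive setup once and reuse it while the container stays warm. The sketch below simulates that pattern locally; `_load_config` and its 50 ms sleep are stand-ins for real initialization work, and the returned fields are hypothetical.

```python
import time

# Module-level state survives between invocations served by the same warm
# container, so work cached here is paid for only on a cold start.
_CONFIG = None
_INVOCATIONS = 0


def _load_config():
    """Stand-in for expensive setup such as opening database connections."""
    time.sleep(0.05)  # simulate 50 ms of initialization
    return {"table": "example-table"}  # hypothetical setting


def handler(event, context):
    global _CONFIG, _INVOCATIONS
    cold = _CONFIG is None
    if cold:
        _CONFIG = _load_config()  # only the first call in this process pays
    _INVOCATIONS += 1
    return {"cold_start": cold, "invocations": _INVOCATIONS}
```

Calling `handler` twice in the same process reports a cold start only on the first call, which mirrors what happens when a provider reuses a container for back-to-back requests.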

In essence, serverless API architecture abstracts away OS, networking, and provisioning, letting you deploy code as functions that respond to triggers. It's event-driven, stateless, and highly resilient, but requires careful design to avoid vendor lock-in.

Benefits and Drawbacks of Serverless APIs

Serverless APIs aren't a silver bullet, but their pros often outweigh the cons for many use cases. Let's break it down technically.

Benefits

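- Cost Efficiency: Pay only for execution time (e.g., Lambda charges $0.20 per million requests plus $0.0000166667 per GB-second). There is no charge for idle time, which suits variable traffic. For mostly idle workloads the savings over always-on EC2 instances can be large (figures as high as 90% are often quoted, though the result depends on utilization).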

- Auto-Scaling: Handles spikes without manual provisioning. Lambda's default account quota is 1,000 concurrent executions per region, which can be raised on request; how quickly a function ramps toward that quota is governed by the provider's burst rules.

- Faster Time-to-Market: Focus on code, not infrastructure. Deploy functions in seconds via CLI (e.g., aws lambda update-function-code), accelerating CI/CD pipelines.

- Built-in Resilience: Providers offer multi-AZ deployment, automatic retries, and dead-letter queues for failed events.

- Integration Ecosystem: Easy hooks into services like S3 (for file triggers) or DynamoDB (for data streams), enabling event-driven architectures.
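The pay-per-use arithmetic from the cost bullet can be sketched as a small estimator. It uses only the two Lambda rates quoted above and ignores the free tier, data transfer, and gateway charges; the function name and the sample workload are assumptions for illustration.

```python
REQUEST_PRICE_PER_MILLION = 0.20  # USD, rate quoted in the text
GB_SECOND_PRICE = 0.0000166667    # USD per GB-second, rate quoted in the text


def monthly_lambda_cost(requests, avg_duration_ms, memory_mb):
    """Estimate monthly cost from request count, duration, and memory size."""
    # Compute is billed as (seconds run) x (GB of memory configured).
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = requests / 1_000_000 * REQUEST_PRICE_PER_MILLION
    compute_cost = gb_seconds * GB_SECOND_PRICE
    return round(request_cost + compute_cost, 2)


# 5 million requests a month, 200 ms each, 512 MB of memory:
# 500,000 GB-seconds of compute (about $8.33) plus $1.00 in request fees.
print(monthly_lambda_cost(5_000_000, 200, 512))  # 9.33
```

With zero requests the estimate is zero, which is the point of the model: an idle function costs nothing.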

Drawbacks

- Cold Starts: Latency spikes (up to 10s for complex functions) when scaling from zero. Workarounds like provisioned concurrency add a per-GB-second charge for as long as they are enabled, whether or not requests arrive.

- Vendor Lock-In: Provider-specific features (e.g., Lambda Layers) make migration tough. Open-source frameworks like OpenFaaS can improve portability.

- Execution Limits: Timeouts (15 min max), memory (10GB), and payload sizes (6MB sync) restrict long-running tasks—use Step Functions for orchestration.

- Debugging Challenges: The distributed nature makes tracing hard; tools like AWS X-Ray (billed per trace recorded) help, but add complexity.

- State Management: Stateless functions require external storage (e.g., Redis), increasing latency and costs for stateful apps.
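The state-management point can be illustrated with a function that keeps nothing in memory and reads and writes every value through an external store. In this sketch `KeyValueStore`, `InMemoryStore`, and `count_visit` are hypothetical names; the in-memory class is only a local stand-in for a real service such as Redis or DynamoDB.

```python
from typing import Optional, Protocol


class KeyValueStore(Protocol):
    """Minimal interface the function needs from an external store."""

    def get(self, key: str) -> Optional[int]: ...
    def set(self, key: str, value: int) -> None: ...


class InMemoryStore:
    """Local test double; production code would wrap a managed store."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        return self._data.get(key)

    def set(self, key, value):
        self._data[key] = value


def count_visit(event, store: KeyValueStore):
    """Stateless handler: all state lives in the injected store."""
    user = event["user_id"]
    visits = (store.get(user) or 0) + 1
    store.set(user, visits)
    return {"user_id": user, "visits": visits}
```

The read-then-write here is not atomic, so concurrent invocations could lose updates. A real store would typically use its own atomic increment (for example, Redis `INCR`), and every call adds a network round trip, which is the latency cost the bullet describes.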

Overall, serverless APIs excel for bursty, event-driven workloads but may not suit constant high-throughput apps.

Popular Tools and Platforms for Serverless APIs

Building serverless APIs is easier with these platforms and tools, each offering unique features for different needs.

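- AWS Lambda + API Gateway: The OG of serverless. Lambda offers managed runtimes for Node.js, Python, Java, .NET, and Ruby, plus custom runtimes for other languages such as Go and Rust, with Gateway handling routing. Pricing: $0.20/M requests. Pros: Deep AWS integration. Cons: Cold starts.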

- Google Cloud Functions + API Gateway: Event-driven, supports Node.js/Python/Go among other languages. Pricing: $0.40/M invocations. Pros: Close integration with Firebase and Firestore triggers. Cons: Tied to the Google Cloud ecosystem.

- Azure Functions + API Management: HTTP-, timer-, and event-triggered functions in C#/Java/JS, among other languages. Pricing: $0.20/M executions. Pros: Hybrid cloud support. Cons: Steeper learning curve.

- Vercel Serverless Functions: Edge functions for Next.js apps. Pricing: Free tier (100GB-hours/month). Pros: Global edge network. Cons: Tied to Vercel hosting.

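- Cloudflare Workers: Runs code in V8 isolates at the edge, with Workers KV for state. Pricing: $0.30/M requests on the paid plan. Pros: Near-zero cold starts. Cons: Tight CPU-time limits (10ms per request on the free plan).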

Tools like Serverless Framework (for multi-cloud deploys) or SAM (AWS-specific) simplify orchestration. For GraphQL, Apollo Server on Lambda is popular.

Serverless vs Serverful Backends: A Technical Comparison

Serverless (FaaS) and serverful (traditional VMs/containers) differ in management, scaling, and cost. Here's a breakdown:

- Management: Serverless abstracts infrastructure—no OS patching or load balancing. Serverful requires full control (e.g., Kubernetes orchestration).

- Scaling: Serverless auto-scales per request (zero to thousands in seconds). Serverful needs manual/auto-scaling groups, with provisioning lag.

- Cost Model: Serverless: Pay-per-use (e.g., Lambda's GB-seconds). Serverful: Fixed costs for always-on instances (e.g., EC2's $0.10/hour).

- Performance: Serverless risks cold starts; serverful offers consistent latency but wastes resources during low traffic.

- State Handling: Serverless is stateless (use external DBs); serverful supports stateful apps natively.

- Use Cases: Serverless for microservices/APIs; serverful for monolithic or compute-intensive apps.

As a rough illustration, serverless can be around 50% cheaper for intermittent loads but around 20% slower once cold starts are counted; actual figures depend heavily on the workload. Choose based on traffic patterns, and note that hybrid approaches blend both.
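One way to ground the cost comparison is to compute the request volume at which the two models cost the same. This sketch uses the Lambda rates and the $0.10/hour instance price quoted in this article; the 730-hour month, the function names, and the sample workload are assumptions, and it ignores free tiers, gateway fees, and whether one instance could actually serve the load.

```python
REQUEST_PRICE = 0.20 / 1_000_000  # USD per request
GB_SECOND_PRICE = 0.0000166667    # USD per GB-second
HOURS_PER_MONTH = 730             # assumed average month length


def lambda_monthly_cost(requests, avg_ms, memory_mb):
    """Pay-per-use: cost grows linearly with request volume."""
    gb_seconds_per_request = (avg_ms / 1000) * (memory_mb / 1024)
    return requests * (REQUEST_PRICE + gb_seconds_per_request * GB_SECOND_PRICE)


def server_monthly_cost(hourly_rate=0.10, instances=1):
    """Always-on: cost is fixed regardless of traffic."""
    return hourly_rate * HOURS_PER_MONTH * instances


def break_even_requests(avg_ms, memory_mb, hourly_rate=0.10):
    """Monthly requests at which the function costs as much as one server."""
    per_request = lambda_monthly_cost(1, avg_ms, memory_mb)
    return int(server_monthly_cost(hourly_rate) / per_request)
```

For a 200 ms, 512 MB function, `break_even_requests(200, 512)` comes out at roughly 39 million requests a month. Below that volume the pay-per-use model is cheaper under these assumptions; above it the fixed-price instance wins.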

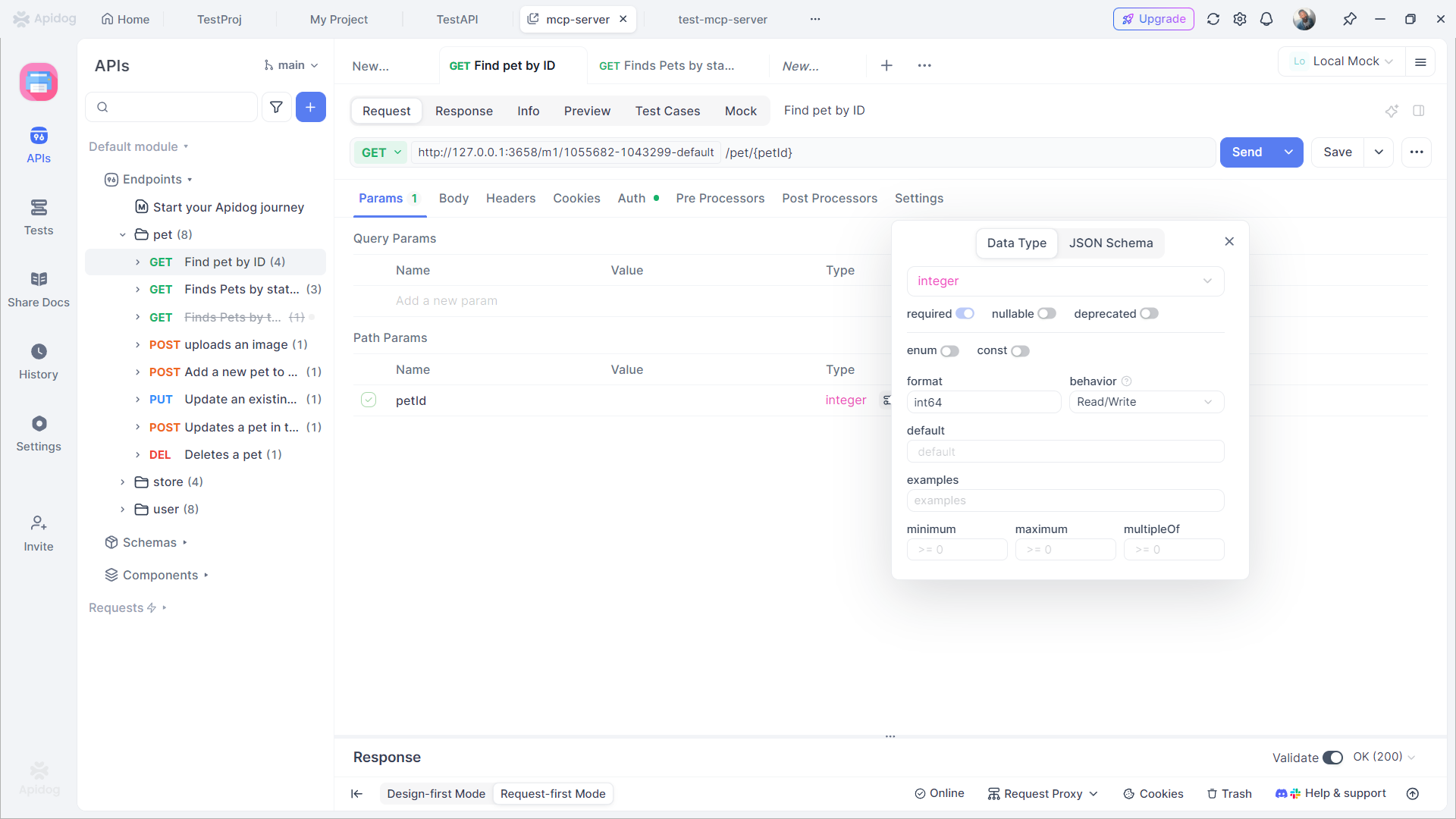

Testing Serverless APIs with Apidog

Testing serverless APIs is crucial to ensure reliability, and Apidog is a top tool for it. This all-in-one platform supports visual design, automated testing, and Mock servers.

How Apidog Helps Test Serverless APIs

- Visual Assertions: Define expected values (such as enum fields) and validate responses visually, with no code needed.

- Unlimited Runs: Free unlimited collection runs, unlike Postman’s 25/month limit.

- CI/CD Integration: Hook into pipelines like Jenkins for auto-testing on deploys.

- Mocking: Generate schema-compliant mock data, including valid enum values, for offline testing.

- AI Assistance: Auto-generate tests from prompts, e.g., "Test enum for user_status."

Benefits: Apidog’s real-time sync catches issues early, and its database connections test stateful flows. Pricing starts free, with Pro at $9/month—cheaper than Postman.
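For readers who want to see what such an assertion amounts to, the check below expresses the same idea in plain Python. It is not Apidog's API; `check_response` and the `ALLOWED_USER_STATUS` values are hypothetical, and the response shape assumes a Lambda-style dictionary with `statusCode` and a JSON `body`.

```python
import json

# Hypothetical enum for the user_status field mentioned above.
ALLOWED_USER_STATUS = {"active", "suspended", "deleted"}


def check_response(response):
    """Return a list of problems; an empty list means the response passed."""
    problems = []
    if response.get("statusCode") != 200:
        problems.append(f"unexpected status {response.get('statusCode')}")

    try:
        body = json.loads(response.get("body") or "")
    except json.JSONDecodeError:
        return problems + ["body is not valid JSON"]

    if not isinstance(body, dict):
        return problems + ["body is not a JSON object"]

    status = body.get("user_status")
    if status not in ALLOWED_USER_STATUS:
        problems.append(f"user_status {status!r} is not an allowed value")
    return problems
```

A check like this can run against a locally invoked function before deployment, while a tool such as Apidog covers the deployed endpoint.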

When Should You Use Serverless APIs?

Serverless APIs offer a modern approach to building and deploying applications, but they are not a one-size-fits-all solution. Understanding their strengths and limitations is key to leveraging them effectively. Here’s a breakdown of when to consider serverless APIs, with detailed explanations:

- Traffic Is Variable: Serverless is ideal for applications with unpredictable or spiky traffic patterns. For example, e-commerce platforms during flash sales or event registration sites experiencing sudden surges. Serverless functions automatically scale up to handle demand and scale down to zero when idle, ensuring you only pay for actual usage rather than provisioning expensive, always-on infrastructure.

- Rapid Prototyping and MVPs: If you need to quickly validate an idea or build a minimum viable product (MVP), serverless allows you to deploy functions in seconds. This agility accelerates experimentation, reduces time-to-market, and lets teams iterate based on real user feedback without committing to complex infrastructure setups.

- Event-Driven Applications: Serverless excels in event-driven architectures. Use cases include IoT data processing (e.g., handling sensor triggers), managing webhooks (e.g., responding to GitHub or Stripe events), and orchestrating microservices. Functions are triggered precisely when events occur, ensuring efficient resource utilization and simplifying event-based workflows.

- Cost Optimization for Intermittent Workloads: If your application spends significant time idle (e.g., 80% or more), serverless can drastically reduce costs. Traditional servers incur expenses even when inactive, but serverless follows a pay-per-execution model. This makes it economical for low-traffic apps, batch jobs, or background tasks that run intermittently.

- DevOps-Light Teams: Organizations with limited DevOps resources benefit from serverless’s managed infrastructure. Cloud providers handle scaling, patching, and maintenance, allowing developers to focus solely on code. This reduces operational overhead and accelerates development cycles, making it easier for small teams to deliver features faster.
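As an example of the event-driven case above, here is a sketch of a webhook receiver that verifies a GitHub-style signature before processing the event. GitHub sends an `X-Hub-Signature-256` header containing `sha256=` followed by the hex HMAC-SHA256 of the raw body; the event shape, the `secret` parameter, and the response fields are assumptions for illustration.

```python
import hashlib
import hmac
import json


def verify_signature(secret: bytes, body: bytes, signature_header: str) -> bool:
    """Recompute the HMAC of the raw body and compare in constant time."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signature_header)


def webhook_handler(event, context, secret=b"change-me"):
    """Reject unsigned or tampered deliveries, then acknowledge the event."""
    body = (event.get("body") or "").encode()
    # Header names are case-insensitive, so normalize before lookup.
    headers = {k.lower(): v for k, v in (event.get("headers") or {}).items()}
    signature = headers.get("x-hub-signature-256", "")

    if not verify_signature(secret, body, signature):
        return {"statusCode": 401, "body": json.dumps({"error": "bad signature"})}

    payload = json.loads(body)
    return {"statusCode": 200, "body": json.dumps({"received": payload.get("action")})}
```

In a real deployment the secret would come from a secrets manager or environment variable rather than a default argument; it is a parameter here only so the sketch can be exercised locally.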

When to Avoid Serverless APIs:

Serverless may not be suitable for:

- Long-running processes: Functions typically have time limits (e.g., 15 minutes on AWS Lambda), making them ill-suited for tasks like video encoding or large data exports.

- Stateful applications: Serverless is stateless by design; avoid it for apps requiring persistent connections or in-memory state (e.g., WebSocket servers).

- Ultra-low latency requirements: Cold starts (delays when initializing functions) can introduce latency, so avoid serverless for real-time systems demanding consistent response times under 50ms.
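The three warning signs above can be written down as a simple checklist. This is a sketch of the article's rules of thumb, not a formal decision procedure; the `Workload` fields and the thresholds (15 minutes, 50 ms) are taken from the bullets, and the names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    max_task_minutes: float       # longest single task the service runs
    needs_in_memory_state: bool   # e.g. persistent connections, local caches
    latency_budget_ms: float      # response time the service must hold


def serverless_concerns(workload: Workload) -> list:
    """Return the reasons, if any, to think twice about going serverless."""
    concerns = []
    if workload.max_task_minutes > 15:
        concerns.append("tasks exceed typical function time limits")
    if workload.needs_in_memory_state:
        concerns.append("requires persistent connections or in-memory state")
    if workload.latency_budget_ms < 50:
        concerns.append("latency budget leaves no room for cold starts")
    return concerns
```

An empty list does not prove serverless is the right choice; it only means none of the three red flags applies.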

Final Verdict

Start small by prototyping a single microservice or API endpoint. Measure performance, costs, and scalability in your specific context. Serverless is a powerful tool for the right use cases: embrace it for agile development, variable workloads, and event-driven needs, but pair it with traditional infrastructure for stateful or high-performance requirements. By aligning serverless with your architectural goals and testing your APIs with Apidog, you can maximize efficiency and innovation.