OpenAI’s o3-pro model is drawing attention in the AI community for its reasoning performance, precision, and reliability. Whether you’re building advanced chatbots, integrating multi-modal AI into enterprise systems, or optimizing your API stack, understanding o3-pro’s benchmarks, pricing, and API limits is critical for strategic adoption. This guide breaks down o3-pro’s strengths, API costs, and usage details for technical teams.

💡 Looking for an API testing tool that generates beautiful API documentation and streamlines collaboration for developer teams? Apidog offers an all-in-one workspace that supports productive API development, with features designed for teams that need to move fast and scale efficiently. Many teams are switching to Apidog as a more affordable Postman alternative.

What Is OpenAI o3-pro?

The o3-pro model is a version of o3, OpenAI’s most capable reasoning model at launch, designed to think longer and deliver more reliable answers across a broad range of tasks. It advances natural language understanding and code generation, and it handles multi-modal input (processing both text and images).

“Extremely cheaper, faster, and way more precise than o1-pro (and coding with o3 vs o3-pro is night and day)...”

— Flavio Adamo, June 10, 2025

Key Features:

- Human-like text generation, even in complex or technical domains

- Multi-modal input support (text and images)

- High performance in tasks like summarization, translation, Q&A, narrative writing, and code generation

- Useful across industries, including education, healthcare, finance, and entertainment

o3-pro is rolling out to ChatGPT users (starting with Pro and Team plans) and to API customers, making advanced AI more accessible to technical teams.

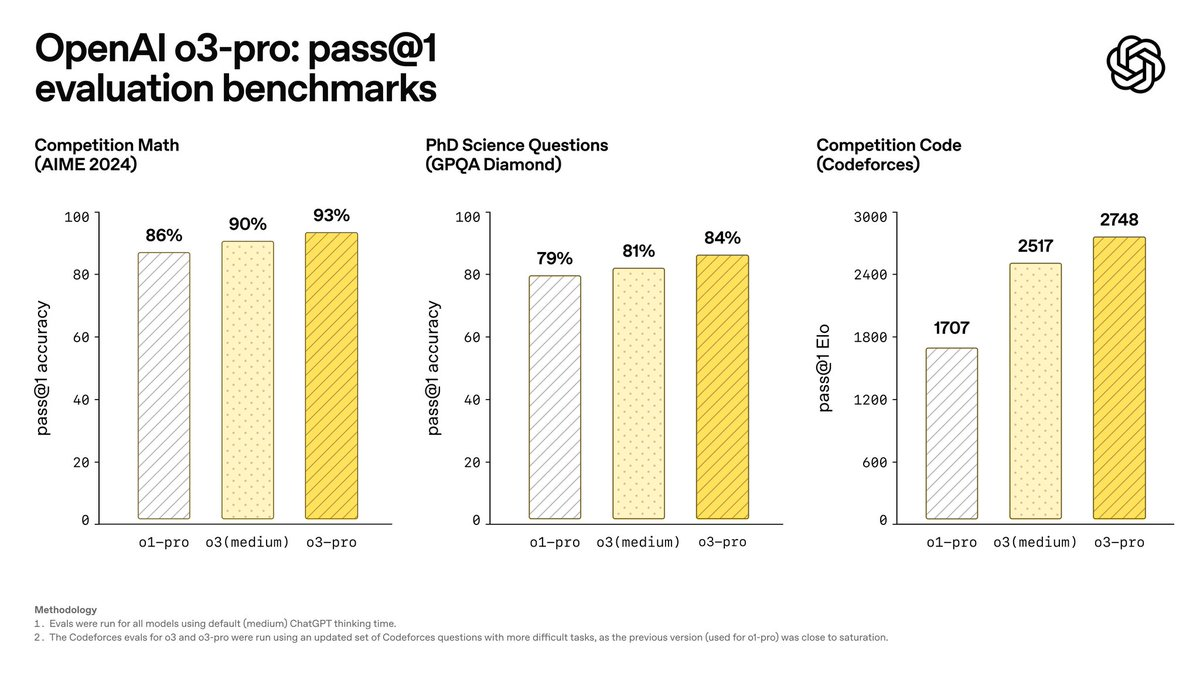

o3-pro Benchmarks: How Does It Compare?

Why do benchmarks matter?

For API developers and backend engineers, benchmarks provide concrete evidence of a model’s capabilities. According to OpenAI’s reported results, o3-pro outperforms leading competitors on two industry-standard tests:

- AIME 2024 (Mathematics):

o3-pro scores higher than Google Gemini 2.5 Pro, solving complex equations and applying robust mathematical reasoning.

- GPQA Diamond (PhD-level Science):

o3-pro surpasses Anthropic Claude 4 Opus, demonstrating deep scientific knowledge and reliable technical analysis.

Additional Strengths:

- Exceptional natural language understanding and context retention

- Higher reliability on hard problems, traded for longer response times: OpenAI recommends o3-pro for challenging questions where reliability matters more than speed

- Designed for consistency: because o3-pro thinks longer before answering, it aims to give dependable results across repeated runs, an important property for production-scale deployment

For teams building mission-critical API products, these results suggest o3-pro is a top-tier choice for demanding workloads.

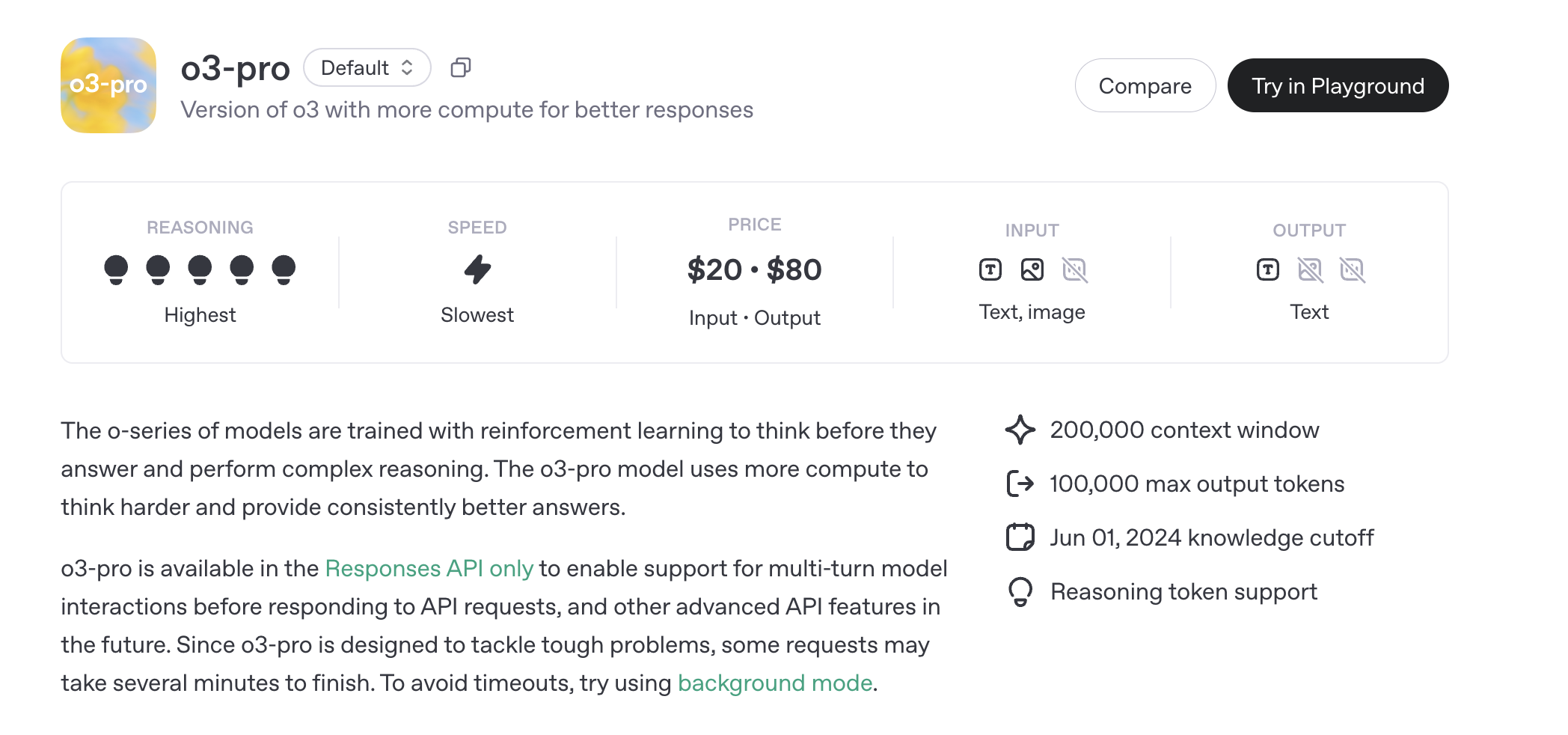

OpenAI o3-pro Pricing Explained

In the API, OpenAI bills o3-pro by token usage, so costs scale directly with the compute you consume. (In ChatGPT, o3-pro is included with eligible subscription plans rather than billed per token.)

o3-pro API Pricing

- Input Tokens: $20.00 per 1 million tokens

- Output Tokens: $80.00 per 1 million tokens

1 million tokens ≈ 750,000 words of English text (roughly the length of several long novels)

How does this compare?

- o3 (standard): $2.00 (input), $8.00 (output) per 1M tokens

- o3-mini: $1.10 (input), $4.40 (output) per 1M tokens

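o3-pro’s higher price reflects its advanced reasoning and greater compute demands. Output tokens cost more because generating them takes more processing than reading input, and for reasoning models the hidden reasoning tokens produced before the final answer are billed as output tokens too.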
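To make the price gap concrete, here is a minimal cost-estimation sketch. It hard-codes the per-1M-token prices quoted in this article (verify them against OpenAI’s pricing page) and adds reasoning tokens to the output count, since reasoning models bill them at the output rate. The `estimate_cost` helper and the sample token counts are hypothetical.

```python
# Per-1M-token prices in USD (input, output), as quoted in this article.
# Check OpenAI's pricing page before relying on these numbers.
PRICES_PER_MILLION = {
    "o3-pro": (20.00, 80.00),
    "o3": (2.00, 8.00),
    "o3-mini": (1.10, 4.40),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int,
                  reasoning_tokens: int = 0) -> float:
    """Return the estimated USD cost of a single request.

    Reasoning tokens are billed at the output rate, so they are added to the
    visible output tokens before pricing.
    """
    input_price, output_price = PRICES_PER_MILLION[model]
    billed_output = output_tokens + reasoning_tokens
    return (input_tokens * input_price + billed_output * output_price) / 1_000_000


if __name__ == "__main__":
    # Hypothetical request: 10k input, 2k visible output, 8k reasoning tokens.
    for name in PRICES_PER_MILLION:
        cost = estimate_cost(name, 10_000, 2_000, reasoning_tokens=8_000)
        print(f"{name:8s} ${cost:.4f}")
```

With these identical hypothetical token counts, the request costs $1.00 on o3-pro versus $0.10 on o3. In practice, different models will not emit the same number of reasoning tokens for the same prompt, so treat this as an order-of-magnitude comparison.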

Additional Charges:

Some tools (e.g., file search, image generation) carry extra per-call fees. If you call these tools through the API, factor them into your total spend, especially when planning large-scale deployments.

Batch API Pricing & Enterprise Options

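For high-volume or asynchronous workloads, the Batch API processes requests within a 24-hour window. OpenAI generally bills Batch API requests at 50% of synchronous rates, so check the pricing page for current o3-pro batch rates. This is ideal for batch processing, analytics, or bulk content generation.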
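A minimal sketch of preparing a batch job, assuming the Batch API’s JSONL input format (one request object per line with `custom_id`, `method`, `url`, and `body`) targeting the `/v1/responses` endpoint; confirm the supported endpoints in OpenAI’s Batch guide. The prompts and the `build_batch_jsonl` and `batch_cost` helpers are hypothetical, and the 50% default discount should be checked against current pricing.

```python
import json

# Hypothetical prompts keyed by the custom_id used to match results later.
PROMPTS = {
    "ticket-001": "Summarize this support ticket in one sentence.",
    "ticket-002": "Classify the sentiment of this product review.",
}


def build_batch_jsonl(prompts: dict[str, str], model: str = "o3-pro") -> str:
    """Serialize prompts into Batch API input lines, one JSON object per line."""
    lines = []
    for custom_id, prompt in prompts.items():
        request = {
            "custom_id": custom_id,      # echoed back in the batch output file
            "method": "POST",
            "url": "/v1/responses",      # assumed batch target for o3-pro
            "body": {"model": model, "input": prompt},
        }
        lines.append(json.dumps(request))
    return "\n".join(lines) + "\n"


def batch_cost(synchronous_cost: float, discount: float = 0.5) -> float:
    """Apply a batch discount to a synchronous cost estimate (default assumed 50%)."""
    return synchronous_cost * (1 - discount)


if __name__ == "__main__":
    print(build_batch_jsonl(PROMPTS), end="")
```

You would upload the resulting file and create a batch job that references it; `custom_id` is what lets you join each result back to its original prompt.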

Enterprise/Custom Pricing:

OpenAI offers volume discounts and tailored agreements for large API consumers, including custom support and enhanced SLAs. Note that fine-tuning is not offered for o3-pro.

Discounts:

Educational and non-profit organizations may access special rates. Periodic promotions also help lower costs for new or high-usage accounts.

o3-pro Rate Limits: What Developers Need to Know

Effective API management requires understanding your rate limits, which OpenAI applies at the organization and project level rather than per user. o3-pro’s limits scale by usage tier:

| Tier | Requests/min (RPM) | Tokens/min (TPM) | Batch queue limit (tokens) |
|---|---|---|---|
| 1 | 500 | 30,000 | 90,000 |
| 2 | 5,000 | 450,000 | 1,350,000 |
| 3 | 5,000 | 800,000 | 50,000,000 |
| 4 | 10,000 | 2,000,000 | 200,000,000 |
| 5 | 10,000 | 30,000,000 | 5,000,000,000 |
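Because RPM and TPM apply at the same time, whichever one you hit first sets your real throughput. This sketch copies the tier values from the table above and assumes a roughly constant token count per request; `max_requests_per_minute` and `fits_batch_queue` are hypothetical helpers for capacity planning, not part of any SDK.

```python
# (requests/min, tokens/min, batch queue tokens) per tier, from the table above.
TIER_LIMITS = {
    1: (500, 30_000, 90_000),
    2: (5_000, 450_000, 1_350_000),
    3: (5_000, 800_000, 50_000_000),
    4: (10_000, 2_000_000, 200_000_000),
    5: (10_000, 30_000_000, 5_000_000_000),
}


def max_requests_per_minute(tier: int, tokens_per_request: int) -> int:
    """Sustainable requests/min when each request uses about tokens_per_request.

    Throughput is capped by whichever limit is reached first: RPM or TPM.
    """
    rpm, tpm, _ = TIER_LIMITS[tier]
    return min(rpm, tpm // tokens_per_request)


def fits_batch_queue(tier: int, queued_tokens: list[int]) -> bool:
    """True if the total enqueued tokens stay within the tier's batch queue limit."""
    _, _, queue_limit = TIER_LIMITS[tier]
    return sum(queued_tokens) <= queue_limit


if __name__ == "__main__":
    for tier in TIER_LIMITS:
        print(tier, max_requests_per_minute(tier, 5_000))
```

For example, at Tier 1, requests averaging 5,000 tokens exhaust the 30,000 TPM budget after 6 requests per minute, far below the 500 RPM ceiling. For a long-reasoning model like o3-pro, TPM is usually the binding constraint.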

- No Free Tier: o3-pro is not available on OpenAI’s free plan.

- Batch Queue: Caps how many tokens can be queued at once for asynchronous (batch) processing, which matters for bulk tasks.

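- Scaling: Requests that exceed your limits are rejected with HTTP 429 errors. Your organization moves to a higher tier automatically as your cumulative API spend grows, which raises these limits.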
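Since over-limit requests fail with HTTP 429 rather than being queued for you, clients typically retry with exponential backoff. This is a minimal, library-agnostic sketch: `RateLimitError` is a hypothetical stand-in for whatever exception your HTTP client raises on a 429, and the delay parameters are illustrative defaults, not OpenAI recommendations.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical stand-in for the exception your client raises on HTTP 429."""


def call_with_backoff(fn: Callable[[], T], max_retries: int = 5,
                      base_delay: float = 1.0, max_delay: float = 60.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying on RateLimitError with exponential backoff and full jitter."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_retries:
                raise  # Out of retries: surface the 429 to the caller.
            # The delay ceiling doubles each attempt (1s, 2s, 4s, ...) up to max_delay;
            # a random wait below it spreads out retries from concurrent workers.
            ceiling = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, ceiling))
    raise AssertionError("unreachable")
```

The `sleep` parameter is injectable so the retry logic can be tested without real waiting. Full jitter keeps many clients that were throttled together from retrying in lockstep.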

o3-pro API Capabilities and Limitations

Supported API Endpoints:

- Responses (o3-pro is served only through the Responses API, not Chat Completions)

- Batch, for asynchronous bulk jobs
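A minimal sketch of a raw Responses API call with text and image input, using only the standard library. It builds the request without sending it; the `build_o3_pro_request` helper and the example image URL are hypothetical, and the API key is read from the conventional `OPENAI_API_KEY` environment variable.

```python
import json
import os
import urllib.request
from typing import Optional

RESPONSES_URL = "https://api.openai.com/v1/responses"


def build_o3_pro_request(prompt: str, image_url: Optional[str] = None,
                         model: str = "o3-pro") -> urllib.request.Request:
    """Build (but do not send) a Responses API request for o3-pro."""
    content = [{"type": "input_text", "text": prompt}]
    if image_url is not None:
        # o3-pro accepts image input alongside text.
        content.append({"type": "input_image", "image_url": image_url})
    payload = {
        "model": model,
        "input": [{"role": "user", "content": content}],
    }
    return urllib.request.Request(
        RESPONSES_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {os.environ.get('OPENAI_API_KEY', '')}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_o3_pro_request("Describe this chart.", "https://example.com/chart.png")
    print(req.get_method(), req.full_url)
```

To send it, pass the request to `urllib.request.urlopen` with a generous timeout (for example `timeout=600`, an arbitrary value). o3-pro returns the full response only when it finishes, and hard prompts can take a long time.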

Key Features:

- Function Calling: Integrate external tools or workflows

- Structured Outputs: Consistent response formats for easy parsing

- Tools: File search, image generation, and Model Context Protocol (MCP) servers via the Responses API
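Function calling and Structured Outputs can be combined in one Responses API request. This sketch follows our reading of the Responses API reference (flat function tools with `name` and `parameters`, and a JSON Schema under `text.format`); verify the field names against the current docs. The `get_order_status` function, its schema, and the fake response used for parsing are all hypothetical.

```python
import json

# Hypothetical function the model may call; name and schema are illustrative.
GET_ORDER_STATUS_TOOL = {
    "type": "function",
    "name": "get_order_status",
    "description": "Look up the shipping status of an order by its ID.",
    "parameters": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
        "additionalProperties": False,
    },
    "strict": True,
}

# Structured Outputs: the final reply must match this JSON Schema.
REPLY_FORMAT = {
    "type": "json_schema",
    "name": "support_reply",
    "schema": {
        "type": "object",
        "properties": {
            "answer": {"type": "string"},
            "needs_human": {"type": "boolean"},
        },
        "required": ["answer", "needs_human"],
        "additionalProperties": False,
    },
    "strict": True,
}


def build_payload(question: str, model: str = "o3-pro") -> dict:
    """Responses API body combining a function tool with a JSON Schema output format."""
    return {
        "model": model,
        "input": question,
        "tools": [GET_ORDER_STATUS_TOOL],
        "text": {"format": REPLY_FORMAT},
    }


def extract_function_calls(response: dict) -> list[tuple[str, dict]]:
    """Return (name, parsed arguments) for each function_call item in the output."""
    calls = []
    for item in response.get("output", []):
        if item.get("type") == "function_call":
            # Arguments arrive as a JSON-encoded string.
            calls.append((item["name"], json.loads(item["arguments"])))
    return calls
```

Your code runs the requested function, then sends its result back in a follow-up request so the model can produce the schema-conforming final answer.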

Not Supported:

- Realtime and legacy Completions endpoints

- Streaming (responses are not streamed, but returned as full outputs)

- Audio input (only text and image input allowed)

- Web search, code interpreter, and computer use tools

- Distillation and predicted outputs
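To catch unsupported options before paying for a slow round trip, a client can run a pre-flight check. This is a hypothetical sketch based on the "Not Supported" list above; the content-type and tool-type strings (`input_audio`, `web_search_preview`, `code_interpreter`, `computer_use_preview`) are assumptions to confirm against the Responses API reference.

```python
from typing import Any

# Assumed type strings for the unsupported features listed above.
UNSUPPORTED_CONTENT_TYPES = {"input_audio"}
UNSUPPORTED_TOOL_TYPES = {"web_search_preview", "code_interpreter", "computer_use_preview"}


def check_o3_pro_payload(payload: dict[str, Any]) -> list[str]:
    """Return human-readable problems that would make a request unsuitable for o3-pro."""
    problems = []
    if payload.get("stream"):
        problems.append("streaming is not supported; omit 'stream'")
    # Input may be a plain string or a list of message items with content parts.
    items = payload.get("input")
    if isinstance(items, list):
        for item in items:
            parts = item.get("content") if isinstance(item, dict) else None
            if isinstance(parts, list):
                for part in parts:
                    part_type = part.get("type") if isinstance(part, dict) else None
                    if part_type in UNSUPPORTED_CONTENT_TYPES:
                        problems.append(f"unsupported input type: {part_type}")
    for tool in payload.get("tools", []):
        tool_type = tool.get("type")
        if tool_type in UNSUPPORTED_TOOL_TYPES:
            problems.append(f"unsupported tool: {tool_type}")
    return problems
```

Returning a list of problems, rather than raising on the first one, lets a test suite or request builder report every issue at once.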

Model Snapshots:

You can lock to specific o3-pro versions (e.g., o3-pro-2025-06-10) for stable production performance.
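A minimal sketch of pinning the snapshot in configuration while still allowing a deliberate override. The environment variable name `O3_PRO_MODEL` and the `resolve_model` helper are hypothetical; the default is the dated snapshot named above.

```python
import os
import re
from collections.abc import Mapping

# Pin the dated snapshot so model behavior doesn't shift between deployments.
PINNED_MODEL = "o3-pro-2025-06-10"

# Accept only dated o3-pro snapshots, not the floating "o3-pro" alias.
SNAPSHOT_PATTERN = re.compile(r"o3-pro-\d{4}-\d{2}-\d{2}")


def resolve_model(env: Mapping[str, str] = os.environ) -> str:
    """Return the pinned snapshot, or an override from the hypothetical O3_PRO_MODEL variable."""
    model = env.get("O3_PRO_MODEL", PINNED_MODEL)
    if not SNAPSHOT_PATTERN.fullmatch(model):
        raise ValueError(f"expected a dated o3-pro snapshot, got {model!r}")
    return model
```

Rejecting the bare alias makes upgrades an explicit config change that you can test before rollout.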

How Apidog Streamlines o3-pro API Integration

For API-focused teams, integrating and testing advanced AI endpoints can be challenging. Apidog simplifies this with features tailored for developer workflows:

- Generate live API documentation that updates as you iterate

- Collaborate with your team in a unified workspace for maximum productivity

- Automate API testing for both synchronous and batch endpoints

- Switch from Postman at a lower cost, with seamless import and migration tools

Apidog helps you design, test, and document complex OpenAI API workflows—making it easier to ship reliable AI-powered features.

Conclusion

OpenAI’s o3-pro delivers top-tier reasoning and multi-modal AI capabilities, backed by strong benchmark results and robust API features. With transparent, usage-based pricing and scalable rate limits, it’s a strong fit for technical teams building intelligent systems at scale. While o3-pro costs ten times as much per token as o3, its reliability can justify the investment for demanding applications.

For teams building, testing, and managing APIs—including OpenAI endpoints—Apidog provides the collaboration, automation, and documentation tools needed to accelerate development and ensure quality.