The OpenAI API is a powerful tool that lets developers and businesses use advanced language models, automate content generation, and build cutting-edge artificial intelligence into their products. To keep usage fair and efficient across millions of users and varied applications, the API enforces rate limits. These limits are designed to share available capacity fairly, keep the system stable, and prevent abuse of the service.

In this article, we will explore what the API rate limits are, how they work, and how they affect your applications. We will also provide a table comparing illustrative thresholds for several API endpoints and present strategies to bypass or mitigate these limits while staying compliant with OpenAI’s terms of service.

Understanding API Rate Limits

At its core, an API rate limit restricts the number of requests, or the volume of data measured in tokens, that a user can process over a given period, such as one minute. This practice is common across many APIs, and OpenAI tailors its limits to the models it serves. Rate limits are typically enforced in two dimensions:

- Request-based limits: These specify the number of API calls a user is allowed to make in a given time window.

- Token-based limits: These cap the total number of tokens (input plus generated output) processed per minute or over another period, reflecting the computational cost of longer prompts and responses.
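To make the two dimensions concrete, here is a minimal client-side sketch that refuses a call if it would push either the request count or the token count over its per-minute budget. The class name `DualLimiter`, the 60-second sliding window, and the limit values are illustrative assumptions, not OpenAI's server-side algorithm.

```python
import time
from collections import deque
from typing import Callable, Deque, Tuple


class DualLimiter:
    """Sliding-window limiter enforcing a request budget and a token budget together."""

    def __init__(self, max_requests: int, max_tokens: int, window: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_requests = max_requests   # requests allowed per window
        self.max_tokens = max_tokens       # tokens allowed per window
        self.window = window               # window length in seconds (assumed: 60)
        self.clock = clock
        self.events: Deque[Tuple[float, int]] = deque()  # (timestamp, tokens) per accepted call

    def _prune(self, now: float) -> None:
        # Forget calls that have slid out of the window.
        while self.events and now - self.events[0][0] >= self.window:
            self.events.popleft()

    def try_acquire(self, tokens: int) -> bool:
        """Record the call and return True only if it fits within both budgets."""
        now = self.clock()
        self._prune(now)
        if len(self.events) >= self.max_requests:
            return False                   # request-based limit reached
        if sum(t for _, t in self.events) + tokens > self.max_tokens:
            return False                   # token-based limit would be exceeded
        self.events.append((now, tokens))
        return True


# Demo with a fake clock so the window can be advanced instantly.
fake_now = [0.0]
limiter = DualLimiter(max_requests=3, max_tokens=1_000, clock=lambda: fake_now[0])
print(limiter.try_acquire(400))  # True
print(limiter.try_acquire(400))  # True
print(limiter.try_acquire(400))  # False: 1,200 tokens would exceed 1,000
print(limiter.try_acquire(100))  # True: 900 tokens across 3 requests
print(limiter.try_acquire(10))   # False: request budget exhausted
fake_now[0] = 61.0
print(limiter.try_acquire(400))  # True: earlier calls have left the window
```

Checking both budgets before sending means a few very long prompts can exhaust the token budget well before the request budget, which is exactly the distinction the two bullets draw.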

When a user sends more requests or tokens than their limit allows, the API rejects the excess with an error, most often HTTP status code 429 ("Too Many Requests"). This error indicates that you have reached your limit: you will need either to wait until the counter resets or to adopt strategies that manage your usage better.

The Mechanics Behind Rate Limits

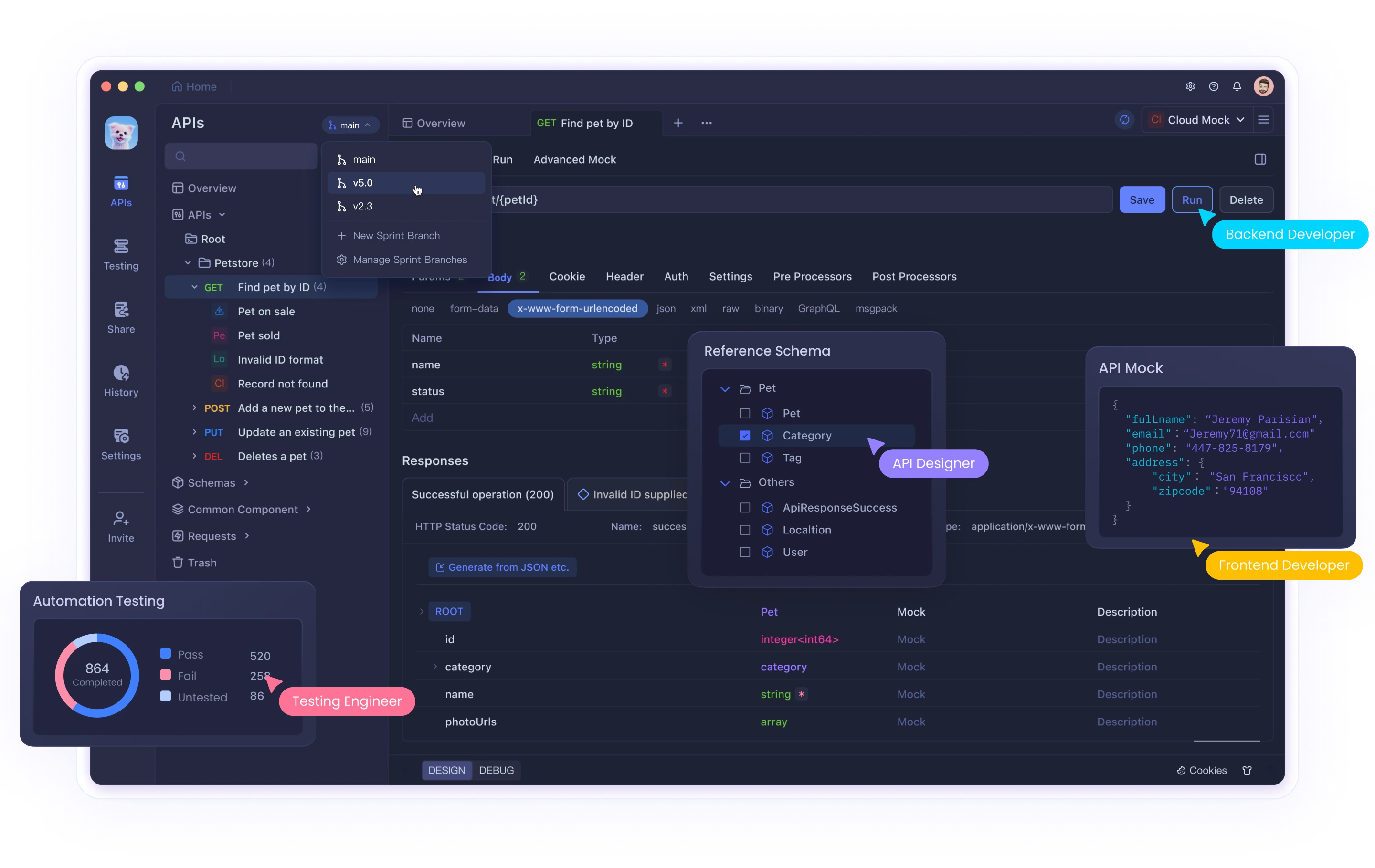

Rate limiting involves both sides of the connection. On the client side, developers are encouraged to build applications that handle limit errors automatically, for example with retries and exponential back-off. By reading the response headers that report your remaining quota and reset time, you can design logic that delays or redistributes calls before they are rejected.
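The retry-with-back-off pattern can be sketched as follows. This is a minimal sketch assuming a hypothetical `RateLimitError` class standing in for whatever exception your client raises on a 429 (in the v1 OpenAI Python SDK, that is `openai.RateLimitError`). It uses "full jitter": each wait is a random delay up to a ceiling that doubles with every attempt.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical stand-in for the exception your client raises on HTTP 429."""


def with_backoff(call: Callable[[], T], max_retries: int = 5, base_delay: float = 1.0,
                 max_delay: float = 60.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `call`, retrying on RateLimitError with exponential back-off and full jitter."""
    attempt = 0
    while True:
        try:
            return call()
        except RateLimitError:
            if attempt >= max_retries:
                raise                                   # out of retries: surface the error
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, ceiling))           # random delay in [0, ceiling]
            attempt += 1


# Demo: a call that is rate-limited twice before succeeding.
attempts = {"count": 0}


def flaky() -> str:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RateLimitError("429 Too Many Requests")
    return "ok"


recorded_delays: list = []
print(with_backoff(flaky, sleep=recorded_delays.append))  # ok
print(len(recorded_delays))                               # 2
```

The random component matters when many clients hit the limit at once: without it, they would all retry at the same moments and collide again.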

On the server side, the API continuously tracks incoming requests and processing load (measured in tokens) against each user's quota. The limits are designed to accommodate short bursts of high activity while regulating sustained, long-term usage. These controls protect server integrity and ensure that no single user monopolizes shared computational resources.
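The interplay between bursts and sustained load is often modeled with a token bucket. The bucket's capacity sets how large a burst may be, and its refill rate sets the sustainable throughput. The sketch below is a generic illustration of that idea, not a description of OpenAI's internal implementation; `TokenBucket` and its parameters are hypothetical.

```python
import time
from typing import Callable


class TokenBucket:
    """Permits bursts up to `capacity` units and a sustained rate of `rate` units per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.level = capacity            # start full, so an initial burst is allowed
        self.last = clock()

    def consume(self, amount: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.level = min(self.capacity, self.level + (now - self.last) * self.rate)
        self.last = now
        if self.level >= amount:
            self.level -= amount
            return True
        return False


# Demo: a burst of 5 is allowed, then throughput settles at 1 call per second.
fake_now = [0.0]
bucket = TokenBucket(capacity=5, rate=1.0, clock=lambda: fake_now[0])
print([bucket.consume() for _ in range(6)])  # [True, True, True, True, True, False]
fake_now[0] = 2.0
print([bucket.consume() for _ in range(3)])  # [True, True, False]
```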

Together, these mechanisms leave room for legitimate peaks in activity while maintaining quality of service for everyone. The system promotes fairness by monitoring peak versus sustained usage and returning clear feedback, such as 429 errors and rate-limit headers, so that developers can retry, adjust, or moderate their request frequency.

Comparison Table of API Rate Limits

Below is an illustrative table of hypothetical rate limits for several OpenAI API endpoints. These numbers are examples chosen for clarity; actual limits vary with your account's usage tier, the model you call, changes OpenAI makes over time, and any custom arrangement you have with OpenAI.

| Endpoint | Requests per Minute | Tokens per Minute | Description and Notes |
|---|---|---|---|
| Completions | 60 | 90,000 | Suitable for generating text; higher volume during spikes |
| Chat Completions | 80 | 100,000 | Optimized for conversational context and interactive use |
| Embeddings | 120 | 150,000 | Designed for processing and analyzing large text portions |
| Moderation | 100 | 120,000 | Used for content filtering and determining text appropriateness |
| Fine-tuning & Training | 30 | 50,000 | Reserved for training additional models or refining output |

This table serves as a quick reference for tailoring your application’s design to its requirements. By working out whether a workload is constrained mainly by tokens (long prompts and responses) or by request count (many short calls), you can spread out and balance your usage more effectively.
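As a worked example of that reasoning, the effective ceiling on calls per minute is the smaller of the request limit and the token limit divided by the average tokens per call. The sketch below applies this to the illustrative figures from the table (not real account limits); `Limits` and `max_calls_per_minute` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Limits:
    requests_per_min: int
    tokens_per_min: int


# Hypothetical figures taken from the illustrative table; not real account limits.
LIMITS: Dict[str, Limits] = {
    "completions": Limits(60, 90_000),
    "chat_completions": Limits(80, 100_000),
    "embeddings": Limits(120, 150_000),
    "moderation": Limits(100, 120_000),
}


def max_calls_per_minute(limits: Limits, avg_tokens_per_call: int) -> Tuple[int, str]:
    """Return the sustainable calls per minute and which limit is the binding one."""
    calls_allowed_by_tokens = limits.tokens_per_min // avg_tokens_per_call
    if calls_allowed_by_tokens < limits.requests_per_min:
        return calls_allowed_by_tokens, "tokens"
    return limits.requests_per_min, "requests"


# Long prompts: 100,000 // 2,500 = 40 calls/min, below the 80 RPM cap.
print(max_calls_per_minute(LIMITS["chat_completions"], 2_500))  # (40, 'tokens')
# Short prompts: 100,000 // 500 = 200 calls/min, so the 80 RPM cap binds.
print(max_calls_per_minute(LIMITS["chat_completions"], 500))    # (80, 'requests')
```

When tokens bind, shortening prompts or outputs raises throughput; when requests bind, batching several inputs per call is the more effective lever.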

How Rate Limits Affect Your Applications

For any application that relies on the OpenAI API, hitting the limits can delay processing, degrade the user experience, and halt workflows. Consider a customer service chatbot built on the Chat Completions endpoint. During peak hours, a traffic spike may exceed the rate limit, causing lag or temporary outages. These interruptions disrupt real-time conversations and can damage the service's reputation.

Similarly, back-end operations such as content creation engines or data analytics pipelines can hit performance bottlenecks when API requests are throttled. A well-designed system uses load balancing, background queuing, and request batching to avoid interruptions. By planning load distribution carefully, developers can build resilient applications that maintain high throughput and responsiveness even as traffic approaches the limits.
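Background queuing can be sketched with `asyncio`: jobs go into a queue and a fixed number of workers drain it, which caps how many API calls are in flight at once. This is a minimal sketch that assumes a hypothetical async `call` function wrapping your API client. It illustrates queuing only, not batching; in practice you would combine it with a rate limiter and retries.

```python
import asyncio
from typing import Awaitable, Callable, List, Tuple


async def process_queue(prompts: List[str], call: Callable[[str], Awaitable[str]],
                        workers: int = 4) -> List[str]:
    """Run `call` on every prompt using a fixed pool of workers; results keep input order."""
    queue: "asyncio.Queue[Tuple[int, str]]" = asyncio.Queue()
    for index, prompt in enumerate(prompts):
        queue.put_nowait((index, prompt))
    results: List[str] = [""] * len(prompts)

    async def worker() -> None:
        # Each worker pulls jobs until the queue is empty, so at most
        # `workers` calls are in flight at any moment.
        while True:
            try:
                index, prompt = queue.get_nowait()
            except asyncio.QueueEmpty:
                return
            results[index] = await call(prompt)

    await asyncio.gather(*(worker() for _ in range(workers)))
    return results


# Demo with a hypothetical stand-in for an API call that tracks concurrency.
stats = {"active": 0, "peak": 0}


async def fake_call(prompt: str) -> str:
    stats["active"] += 1
    stats["peak"] = max(stats["peak"], stats["active"])
    await asyncio.sleep(0.01)            # simulated network latency
    stats["active"] -= 1
    return prompt.upper()


print(asyncio.run(process_queue(["a", "b", "c", "d", "e"], fake_call, workers=2)))
# ['A', 'B', 'C', 'D', 'E']
print(stats["peak"])  # 2
```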

Strategies to Manage and Bypass Rate Limits

While “bypassing” rate limits might sound like breaking the rules, here it means avoiding the thresholds when you do not need to hit them and working within them more efficiently. These techniques manage request quotas smartly so that your application stays robust, without violating OpenAI’s limits or terms.

Below are three effective options:

1. Aggregating and Caching Responses

Instead of sending a new API call for every user query, you can aggregate similar requests and cache the responses. For example, if multiple users request similar information or if certain static data is frequently needed, store the response locally (or in a distributed cache) for a predetermined period. This reduces the number of API calls required and saves on both request-based and token-based limits.

Benefits:

- Reduces redundant calls by efficiently reusing previous results.

- Lowers the latency associated with making external API calls.

- Supports scalability during periods of high traffic by decreasing overall load.
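A minimal in-memory version of such a cache might look like the following. It assumes a hypothetical `fetch` function that performs the real API call, keys entries on a lightly normalized prompt, and expires them after a time-to-live. It suits only cases where returning an earlier answer to a repeated question is acceptable, and a multi-process deployment would need a shared store such as a distributed cache instead.

```python
import hashlib
import time
from typing import Callable, Dict, Tuple


class ResponseCache:
    """Single-process TTL cache in front of a function that calls the API."""

    def __init__(self, fetch: Callable[[str], str], ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.fetch = fetch                 # hypothetical: performs the real API call
        self.ttl = ttl_seconds
        self.clock = clock
        self.store: Dict[str, Tuple[float, str]] = {}   # key -> (stored_at, answer)

    @staticmethod
    def _key(prompt: str) -> str:
        # Normalize case and whitespace so trivially different prompts share an entry.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get(self, prompt: str) -> str:
        key = self._key(prompt)
        now = self.clock()
        entry = self.store.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1]                # fresh hit: no API call, no quota consumed
        answer = self.fetch(prompt)
        self.store[key] = (now, answer)
        return answer


# Demo with a fake fetch function and a fake clock.
api_calls: list = []


def fake_fetch(prompt: str) -> str:
    api_calls.append(prompt)
    return f"answer to {prompt!r}"


fake_now = [0.0]
cache = ResponseCache(fake_fetch, ttl_seconds=60, clock=lambda: fake_now[0])
cache.get("What are your opening hours?")
cache.get("what are your  opening hours?")   # normalizes to the same key
print(len(api_calls))  # 1
fake_now[0] = 61.0
cache.get("What are your opening hours?")
print(len(api_calls))  # 2: the entry expired
```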

2. Distributed Request Handling with Multiple API Keys

If your application has grown significantly, consider distributing its workload across multiple worker processes and, where it suits your deployment, multiple API keys (provided this complies with OpenAI's terms of service). Keep in mind that OpenAI applies rate limits at the organization and project level rather than per key, so additional keys created under the same organization generally draw on the same quota. Creating extra accounts purely to multiply capacity may conflict with OpenAI's terms, so confirm that any such arrangement is permitted before relying on it.

Benefits:

- Lets you isolate workloads, for example by project, so that one component exhausting its share does not starve the others.

- Facilitates load balancing across distributed systems.

- Prevents a single point of failure if one process or key runs into trouble.

3. Negotiating for Higher Rate Limits

If your application's requirements consistently push against the default thresholds, a proactive approach is to seek higher limits. OpenAI places accounts in usage tiers whose limits rise as your spending and account history grow, and you can also contact OpenAI to request a higher limit tailored to your needs. Such requests are more persuasive when you can describe your use case in detail and demonstrate a consistent pattern of responsible usage.

Benefits:

- Provides a long-term solution for scaling applications.

- May open up opportunities for customized support and priority services.

- Reduces interruptions caused by rate limit errors.

Best Practices to Avoid Rate Limit Problems

Beyond these tactics, following best practices in API design and usage can safeguard against unexpected rate limit issues:

- Design for Scalability: Build your application to handle both bursts of activity and sustained use. Focus on load distribution and latency reduction throughout the system architecture.

- Implement Robust Error Handling: Whenever a rate limit error occurs, your system should log the event, notify the user if necessary, and retry with exponential back-off, ideally with random jitter so that many clients do not retry in lockstep. This keeps a burst of failed requests from cascading into further failures.

- Monitor Usage Proactively: Utilize analytics and logging tools to track the number of requests and tokens used over time. Regular monitoring allows you to predict and adjust for upcoming peaks before they become problematic.

- Test Under High-Load Conditions: Stress testing your API integrations helps identify bottlenecks. Simulated load testing provides insights into potential weak points in your request scheduling, informing improvements in throughput and delay management.

- Educate Your Team: Ensure that all team members involved in development and maintenance are well-versed in the rate limit policies and understand the best practices. This transparency facilitates faster troubleshooting and more efficient responses when issues arise.
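For proactive monitoring, one option is to record the `usage` object returned with each chat completion (which reports `prompt_tokens`, `completion_tokens`, and `total_tokens`) and warn before a rolling one-minute total approaches your limit. The `UsageMonitor` class, the 80% warning threshold, and the limit value in the demo are assumptions for illustration.

```python
import time
from collections import deque
from typing import Callable, Deque, Dict, Tuple


class UsageMonitor:
    """Rolling one-minute totals of requests and tokens, with an early-warning threshold."""

    def __init__(self, tokens_per_min: int, warn_fraction: float = 0.8,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.tokens_per_min = tokens_per_min   # your actual limit goes here
        self.warn_fraction = warn_fraction     # warn once this share of the limit is used
        self.clock = clock
        self.samples: Deque[Tuple[float, int]] = deque()

    def record(self, usage: Dict[str, int]) -> None:
        # `usage` has the shape of a chat completion's usage field.
        self.samples.append((self.clock(), usage["total_tokens"]))

    def last_minute(self) -> Tuple[int, int]:
        """Return (requests, tokens) recorded in the past 60 seconds."""
        now = self.clock()
        while self.samples and now - self.samples[0][0] >= 60:
            self.samples.popleft()
        return len(self.samples), sum(t for _, t in self.samples)

    def near_limit(self) -> bool:
        _, tokens = self.last_minute()
        return tokens >= self.warn_fraction * self.tokens_per_min


# Demo with a fake clock; the usage numbers are made up.
fake_now = [0.0]
monitor = UsageMonitor(tokens_per_min=10_000, clock=lambda: fake_now[0])
for _ in range(4):
    monitor.record({"prompt_tokens": 2_000, "completion_tokens": 500, "total_tokens": 2_500})
    fake_now[0] += 5.0
print(monitor.last_minute())  # (4, 10000)
print(monitor.near_limit())   # True
```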

Additional Considerations for Scaling Your API Usage

When planning for future growth, continuously refine your approach to API usage. Here are additional points to keep in mind:

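- Token Counting Precision: Not all API calls are equal. A simple query might use a few tokens, while complex interactions can consume many more. Tracking token usage per request is essential for understanding both your costs and how close you are to token-per-minute limits. Setting the `max_tokens` parameter close to the expected response length can also help, since OpenAI's documentation notes that the requested maximum can count toward the limit.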

- Balancing Endpoint Usage: Different endpoints have different limits. If your application leverages multiple endpoints, analyze the load distribution and prioritize requests to less constrained endpoints when possible.

- Integration of Asynchronous Processing: By shifting some real-time requests to asynchronous processing, you allow your system to process other tasks while waiting for the token or request counter to reset. This creates a smoother user experience and prevents bottlenecks during peak usage.

- Fallback Mechanisms: When the API is unavailable because of rate limits, a standby plan, such as serving a cached response or routing the request to an alternative service, can keep your application running without interruption.
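A fallback chain along these lines could be sketched as follows. It assumes hypothetical `primary`, `cached`, and `secondary` callables and a stand-in `RateLimitError`. The order shown (live model, cache, alternative service, canned reply) is one reasonable choice rather than a prescribed one.

```python
from typing import Callable, Optional


class RateLimitError(Exception):
    """Hypothetical stand-in for the exception your client raises on HTTP 429."""


def answer_with_fallback(prompt: str,
                         primary: Callable[[str], str],
                         cached: Callable[[str], Optional[str]],
                         secondary: Optional[Callable[[str], str]] = None,
                         default: str = "We're busy right now; please try again shortly.") -> str:
    """Try the primary model, then a cached answer, then a secondary service, then a canned reply."""
    try:
        return primary(prompt)
    except RateLimitError:
        pass                                # primary is throttled; fall through
    hit = cached(prompt)
    if hit is not None:
        return hit
    if secondary is not None:
        try:
            return secondary(prompt)
        except RateLimitError:
            pass                            # secondary is throttled too
    return default


# Demo: the primary service is always rate-limited.
def rate_limited(prompt: str) -> str:
    raise RateLimitError("429 Too Many Requests")


print(answer_with_fallback("Where is my order?", rate_limited, cached=lambda p: None))
# We're busy right now; please try again shortly.
print(answer_with_fallback("Where is my order?", rate_limited, cached=lambda p: "cached answer"))
# cached answer
```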

FAQs and Troubleshooting Tips

Here are answers to some frequently asked questions and tips that can help troubleshoot and prevent rate limit issues:

• What exactly does a 429 error mean?

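This error occurs when you exceed the allowed rate. It signals that you need to slow down your requests or re-architect your request pattern. Note that OpenAI also returns 429 when your account has exhausted its billing quota; the error message distinguishes the two cases, and in the billing case retrying will not help until you add credit or raise your spending limit.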

• How can I effectively track my remaining quota?

OpenAI's API responses include `x-ratelimit-*` headers that report your remaining requests and tokens and the time until each counter resets. Building a monitoring system that reads these values in real time lets you slow down before requests start failing.
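As an illustration, the function below reads the `x-ratelimit-remaining-requests`, `x-ratelimit-remaining-tokens`, `x-ratelimit-reset-requests`, and `x-ratelimit-reset-tokens` headers from a response's header mapping. The assumption that reset values are duration strings such as `1s`, `6m0s`, or `20ms` should be checked against the responses you actually receive, and the sample values are made up.

```python
import re
from typing import Dict, Mapping

# Assumed duration format: one or more <number><unit> parts, e.g. "6m0s" or "20ms".
_UNIT_SECONDS = {"h": 3600.0, "m": 60.0, "s": 1.0, "ms": 0.001}
_PART = re.compile(r"(\d+(?:\.\d+)?)(ms|h|m|s)")   # "ms" listed before "m" so it wins


def parse_reset(value: str) -> float:
    """Convert a duration string such as '6m0s' or '20ms' to seconds."""
    return sum(float(num) * _UNIT_SECONDS[unit] for num, unit in _PART.findall(value))


def read_rate_limit_headers(headers: Mapping[str, str]) -> Dict[str, float]:
    """Extract remaining quota and seconds until reset from x-ratelimit-* headers."""
    lowered = {k.lower(): v for k, v in headers.items()}   # header names are case-insensitive
    return {
        "remaining_requests": int(lowered["x-ratelimit-remaining-requests"]),
        "remaining_tokens": int(lowered["x-ratelimit-remaining-tokens"]),
        "reset_requests_s": parse_reset(lowered["x-ratelimit-reset-requests"]),
        "reset_tokens_s": parse_reset(lowered["x-ratelimit-reset-tokens"]),
    }


sample_headers = {  # made-up values for illustration
    "x-ratelimit-remaining-requests": "59",
    "x-ratelimit-remaining-tokens": "149984",
    "x-ratelimit-reset-requests": "1s",
    "x-ratelimit-reset-tokens": "6m0s",
}
info = read_rate_limit_headers(sample_headers)
print(info["remaining_tokens"], info["reset_tokens_s"])  # 149984 360.0
```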

• What should I do when confronted with continuous rate limit errors?

Review your logs to identify patterns. Then adjust your load distribution strategy, whether through caching, spreading requests over time, or requesting a higher limit.

• Are there better ways to optimize token usage?

Yes. Analyze your prompts to minimize token count where possible. Trimming unnecessary context, tightening instructions, and capping output length can often reduce token consumption without compromising the quality of results.
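One concrete way to trim prompts is to drop the oldest conversation turns once a budget is reached. The sketch below estimates tokens with the common rule of thumb of roughly four characters per token for English text; for exact counts, use a tokenizer such as OpenAI's `tiktoken` library. `trim_history`, `estimate_tokens`, and the budget value are hypothetical.

```python
from typing import Dict, List

Message = Dict[str, str]


def estimate_tokens(text: str) -> int:
    """Rough estimate: about four characters per token for English text."""
    return max(1, len(text) // 4)


def trim_history(messages: List[Message], budget: int) -> List[Message]:
    """Keep system messages plus the most recent turns that fit within `budget` estimated tokens."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    used = sum(estimate_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    for message in reversed(rest):            # walk from newest to oldest
        cost = estimate_tokens(message["content"])
        if used + cost > budget:
            break                             # older turns would exceed the budget
        kept.append(message)
        used += cost
    return system + list(reversed(kept))      # restore chronological order


history = [
    {"role": "system", "content": "You are a helpful support agent."},  # ~8 tokens
    {"role": "user", "content": "x" * 400},                              # ~100 tokens
    {"role": "assistant", "content": "y" * 400},                         # ~100 tokens
    {"role": "user", "content": "Where is my order?"},                   # ~4 tokens
]
print([m["role"] for m in trim_history(history, budget=120)])
# ['system', 'assistant', 'user']
```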

Conclusion

OpenAI API rate limits are designed not to stifle innovation but to ensure that resources are used fairly and efficiently across a diverse user base. Understanding the mechanics behind rate limits, comparing different endpoints, and adopting best practices are key to designing resilient applications. Whether you are working on a simple tool or a large-scale application, being proactive with load balancing, utilizing caching mechanisms, and even considering multiple API keys or negotiating higher thresholds can make all the difference.

By leveraging the strategies outlined in this article, you can optimize API usage to create a seamless experience, even during periods of high demand. Remember, rate limits are not obstacles but integral parameters that help maintain system stability. With thoughtful planning and effective management strategies, you can confidently scale your application while ensuring that performance and user experience remain top priorities.