Imagine having a supercomputer in your pocket, capable of understanding and generating human-like text on any topic. Sounds like science fiction, right? Well, thanks to recent breakthroughs in artificial intelligence, this dream is now a reality – and it's called Ollama.

Ollama is like a Swiss Army knife for Local LLMs. It's an open-source tool that lets you run powerful language models right on your own computer. No need for fancy cloud services or a degree in computer science. But here's where it gets really exciting: Ollama has a hidden superpower called an API. Think of it as a secret language that lets your programs talk directly to these AI brains.

Now, I know what you're thinking. "APIs? That sounds complicated!"

Don't worry – that's where Apidog comes in. It's like a friendly translator that helps you communicate with Ollama's API without breaking a sweat. In this article, we're going to embark on a journey together. I'll be your guide as we explore how to unleash the full potential of Ollama using Apidog. By the end, you'll be crafting AI-powered applications like a pro, all from the comfort of your own machine. So, buckle up – it's time to dive into the fascinating world of local AI and discover just how easy it can be!

What is Ollama and How to Use Ollama to Run LLMs Locally?

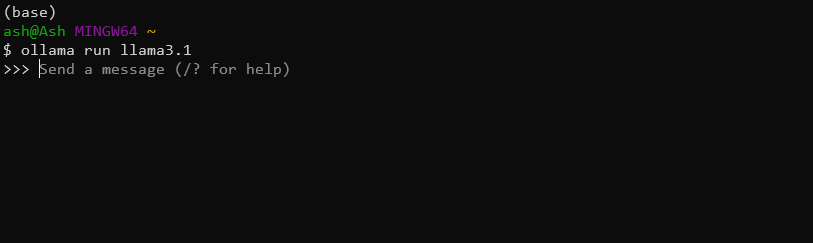

Ollama is a convenient tool that allows users to run large language models on their local machines. It simplifies downloading, running, and customizing various LLMs, making advanced AI capabilities accessible to a wider audience. For example, this single command downloads the Llama 3.1 8B model (if it isn't already on your machine) and starts an interactive session with it:

ollama run llama3.1

Does Ollama have an API?

Yes. You can use the Ollama API to integrate these local LLMs into your applications.

Key features of the Ollama API include:

- Model management (pulling, listing, and deleting models)

- Text generation (completions and chat interactions)

- Embeddings generation

- Model creation and customization (via Modelfiles)

Using the Ollama API, you can harness the power of LLMs in your applications without complex infrastructure or cloud dependencies.

Using Apidog for Testing Ollama APIs

Apidog is a versatile API development and documentation platform designed to streamline the entire API lifecycle. It offers a user-friendly interface for designing, testing, and documenting APIs, making it an ideal tool for working with the Ollama API.

Some of Apidog's standout features include:

- Intuitive API design interface

- Automatic documentation generation

- API testing and debugging tools

- Collaboration features for team projects

- Support for various API protocols and formats

With Apidog, developers can easily create, manage, and share API documentation, ensuring that their Ollama API integrations are well-documented and maintainable.

Prerequisites

Before diving into making Ollama API calls with Apidog, ensure that you have the following prerequisites in place:

Ollama Installation: Download and install Ollama on your local machine. Follow the official Ollama documentation for installation instructions specific to your operating system.

Apidog Account: Sign up for an Apidog account if you haven't already. You can use either the web-based version or download the desktop application, depending on your preference.

Ollama Model: Pull a model using Ollama's command-line interface. For example, to download the "llama3.1" model, you would run:

ollama pull llama3.1

API Testing Tool: While Apidog provides built-in testing capabilities, you may also want to have a tool like cURL or Postman handy for additional testing and verification.

With these elements in place, you're ready to start exploring the Ollama API using Apidog.

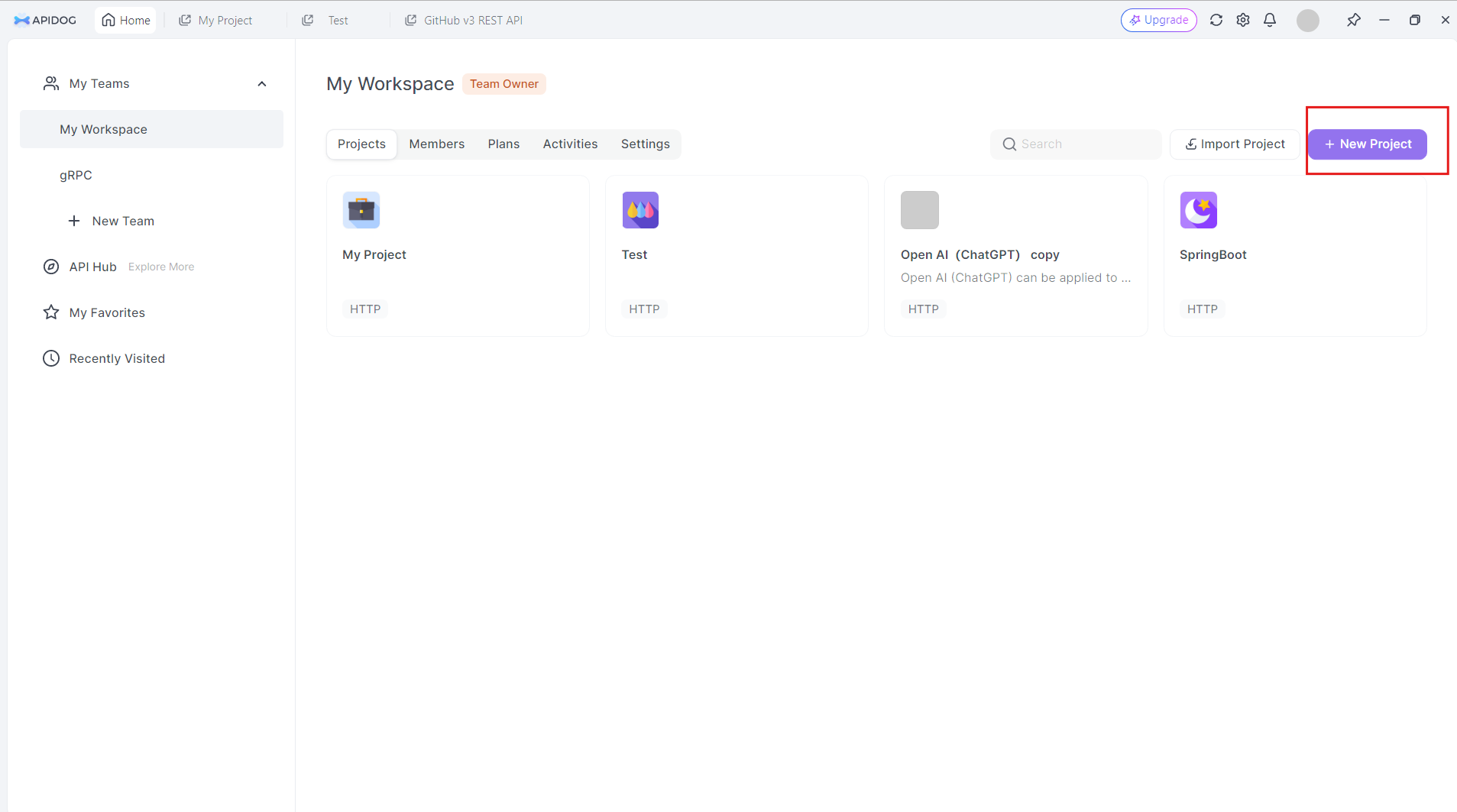

Creating an Ollama API Project in Apidog

To begin working with the Ollama API in Apidog, follow these steps:

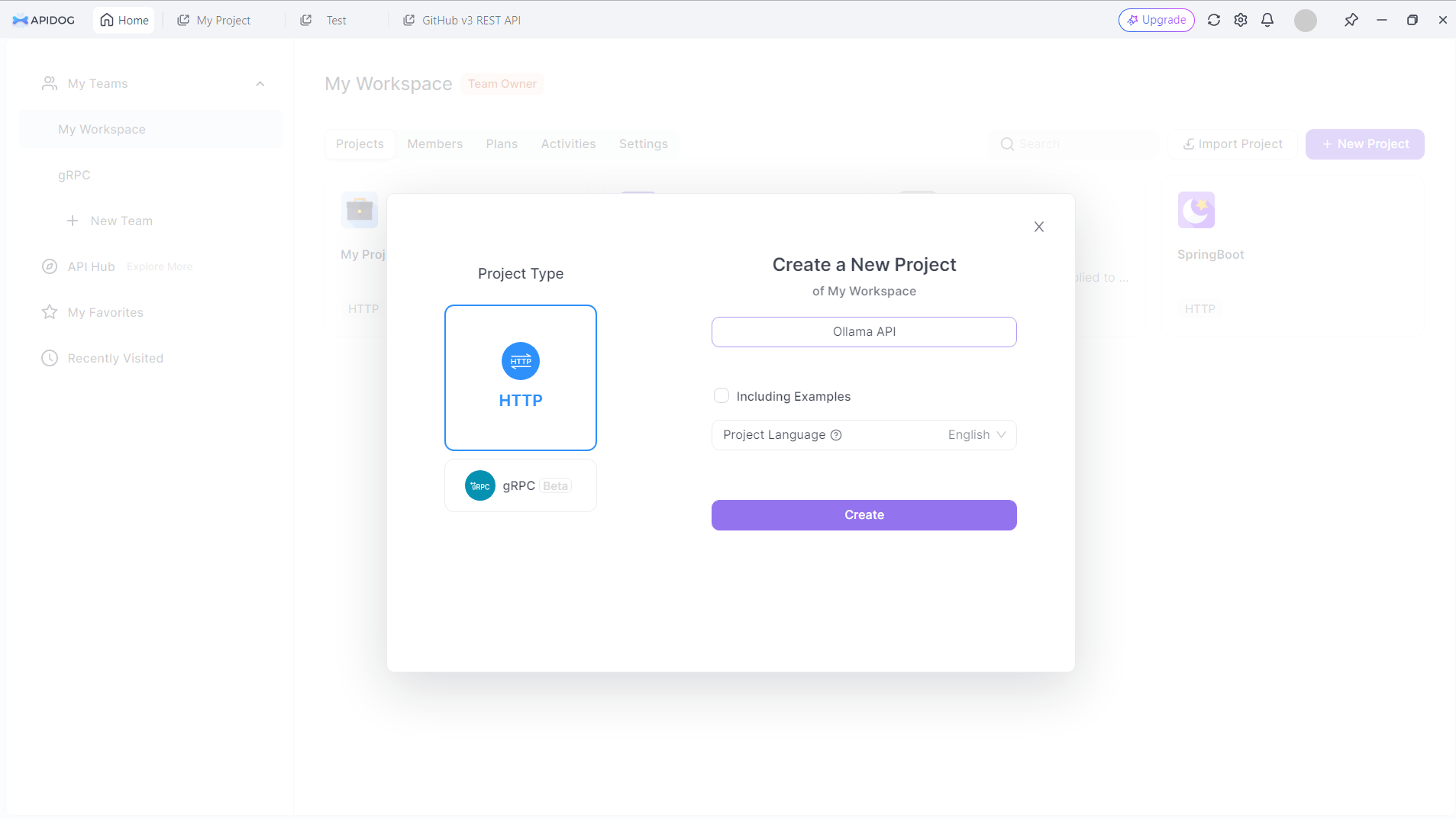

Create a New Project: Log into Apidog and create a new project specifically for your Ollama API work. Click on the New Project button in the top right corner.

Give your new project a name, for example "Ollama API".

Click on the Create button to proceed.

Set Up the Base URL: In your project settings, set the base URL to http://localhost:11434/api. This is the default address where Ollama exposes its API endpoints.

Add the Ollama API Specification: Ollama doesn't provide an official OpenAPI specification to import, so create a basic structure for the API endpoints manually in Apidog.

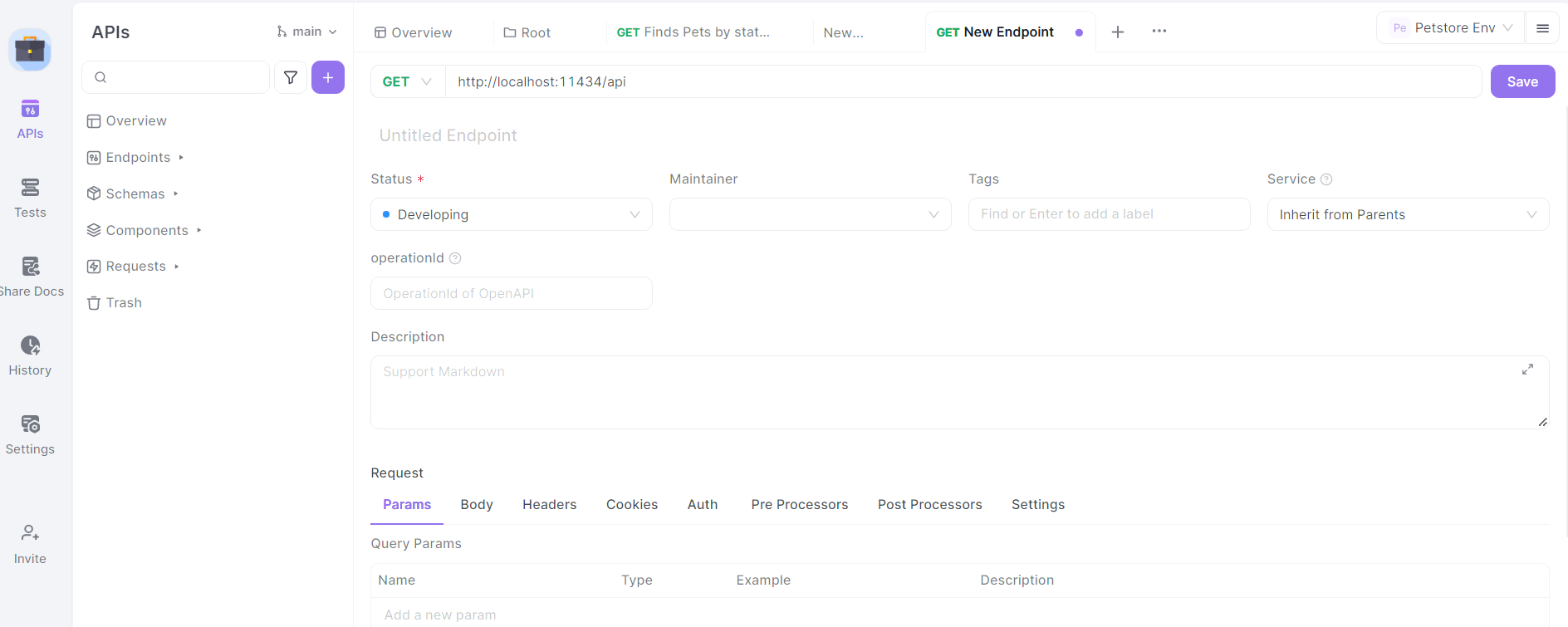

Define Endpoints: Start by defining the main Ollama API endpoints in Apidog. Some key endpoints to include are:

- /generate (POST): For text generation

- /chat (POST): For chat-based interactions

- /embeddings (POST): For generating embeddings

- /pull (POST): For pulling new models

- /tags (GET): For listing available models

For each endpoint, specify the HTTP method, request parameters, and expected response format based on the Ollama API documentation.
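Before filling in the details in Apidog, it can help to write the endpoint list down as data. The following is a minimal Python sketch, not part of Apidog or Ollama: the names `ENDPOINTS` and `build_request` are made up for illustration, and it assumes the default base URL mentioned above. Note that Ollama's documented route for listing local models is `/tags`.

```python
import urllib.request

# Base URL from the project settings described above (Ollama's default address).
BASE_URL = "http://localhost:11434/api"

# Hypothetical catalog: path -> (HTTP method, purpose).
ENDPOINTS = {
    "/generate": ("POST", "text generation"),
    "/chat": ("POST", "chat-based interactions"),
    "/embeddings": ("POST", "generating embeddings"),
    "/pull": ("POST", "pulling new models"),
    "/tags": ("GET", "listing available models"),
}


def build_request(path):
    """Return an unsent urllib Request for one catalogued endpoint."""
    method, _purpose = ENDPOINTS[path]
    return urllib.request.Request(BASE_URL + path, method=method)


# Print a quick overview; nothing is sent over the network here.
for path, (method, purpose) in ENDPOINTS.items():
    print(f"{method:4} {BASE_URL + path}  # {purpose}")
```

Keeping the catalog in one place makes it easy to compare against what you have entered in Apidog.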

Designing Ollama API Calls in Apidog

Let's walk through the process of designing API calls for some of the most commonly used Ollama endpoints using Apidog:

Text Generation Endpoint (/generate)

In Apidog, create a new API endpoint with the path /generate and set the HTTP method to POST.

Define the request body schema:

    {
      "model": "string",
      "prompt": "string",
      "system": "string",
      "template": "string",
      "context": "array",
      "options": {
        "temperature": "number",
        "top_k": "integer",
        "top_p": "number",
        "num_predict": "integer",
        "stop": "array"
      }
    }

Provide a description for each parameter, explaining its purpose and any constraints.

Define the response schema based on the Ollama API documentation, including fields like response, context, and total_duration.
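To see how this schema maps onto a real call, here is a minimal Python sketch using only the standard library. The helper names `build_generate_payload` and `generate` are hypothetical, and the `"stream": false` field is an assumption taken from Ollama's API documentation rather than from the schema above: without it, Ollama streams the answer as a series of JSON objects instead of one.

```python
import json
import urllib.request

BASE_URL = "http://localhost:11434/api"  # default local address


def build_generate_payload(model, prompt, system=None, **options):
    """Build a /generate request body; keyword options go under 'options'."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    if system is not None:
        payload["system"] = system
    if options:
        payload["options"] = options
    return payload


def generate(model, prompt, **kwargs):
    """POST to /generate and return the parsed JSON response."""
    body = json.dumps(build_generate_payload(model, prompt, **kwargs)).encode("utf-8")
    request = urllib.request.Request(
        BASE_URL + "/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

With Ollama running and the model pulled, `generate("llama3.1", "Why is the sky blue?", temperature=0.2)["response"]` should hold the generated text.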

Chat Endpoint (/chat)

Create a new endpoint with the path /chat and set the HTTP method to POST.

Define the request body schema:

    {
      "model": "string",
      "messages": [
        {
          "role": "string",
          "content": "string"
        }
      ],
      "format": "string",
      "options": {
        "temperature": "number",
        "top_k": "integer",
        "top_p": "number",
        "num_predict": "integer",
        "stop": "array"
      }
    }

Provide detailed descriptions for the messages array, explaining the structure of chat messages with roles (system, user, assistant) and content.

Define the response schema, including the message object with role and content fields.
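The chat schema can be exercised the same way. The sketch below is illustrative only: `make_message`, `build_chat_payload`, and `chat` are hypothetical helper names, and `"stream": false` is again an assumption from Ollama's API documentation so that a single JSON object comes back.

```python
import json
import urllib.request

BASE_URL = "http://localhost:11434/api"  # default local address
VALID_ROLES = {"system", "user", "assistant"}


def make_message(role, content):
    """Create one chat message, rejecting roles outside the three listed above."""
    if role not in VALID_ROLES:
        raise ValueError(f"unknown role: {role!r}")
    return {"role": role, "content": content}


def build_chat_payload(model, messages, **options):
    """Build a /chat request body from a list of messages."""
    payload = {"model": model, "messages": list(messages), "stream": False}
    if options:
        payload["options"] = options
    return payload


def chat(model, messages, **options):
    """POST to /chat and return the history extended with the assistant's reply."""
    body = json.dumps(build_chat_payload(model, messages, **options)).encode("utf-8")
    request = urllib.request.Request(
        BASE_URL + "/chat",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        reply = json.loads(response.read().decode("utf-8"))
    return list(messages) + [reply["message"]]
```

Because `chat` returns the extended history, you can append a new user message to the result and call it again to continue the conversation.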

Model Management Endpoints

Create endpoints for model management operations:

- Pull Model (/pull): POST request with a name parameter for the model to download.

- List Models (/tags): GET request to retrieve available models.

- Delete Model (/delete): DELETE request with a name parameter for the model to remove.

For each of these endpoints, define appropriate request and response schemas based on the Ollama API documentation.
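As a rough illustration of the three model management calls, the Python sketch below only constructs the requests; sending them is left to `urllib.request.urlopen`. The function names are hypothetical. The `name` field follows the description above, and some Ollama versions document this field as `model`, so check the documentation for the version you run.

```python
import json
import urllib.request

BASE_URL = "http://localhost:11434/api"  # default local address


def _json_request(path, method, payload=None):
    """Build an unsent request, attaching a JSON body when a payload is given."""
    data = json.dumps(payload).encode("utf-8") if payload is not None else None
    headers = {"Content-Type": "application/json"} if payload is not None else {}
    return urllib.request.Request(BASE_URL + path, data=data, headers=headers, method=method)


def pull_request(name):
    """POST /pull: download the named model."""
    return _json_request("/pull", "POST", {"name": name})


def list_request():
    """GET /tags: list the models available locally."""
    return _json_request("/tags", "GET")


def delete_request(name):
    """DELETE /delete: remove the named model."""
    return _json_request("/delete", "DELETE", {"name": name})
```

Passing any of these to `urllib.request.urlopen` sends it to the local Ollama instance.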

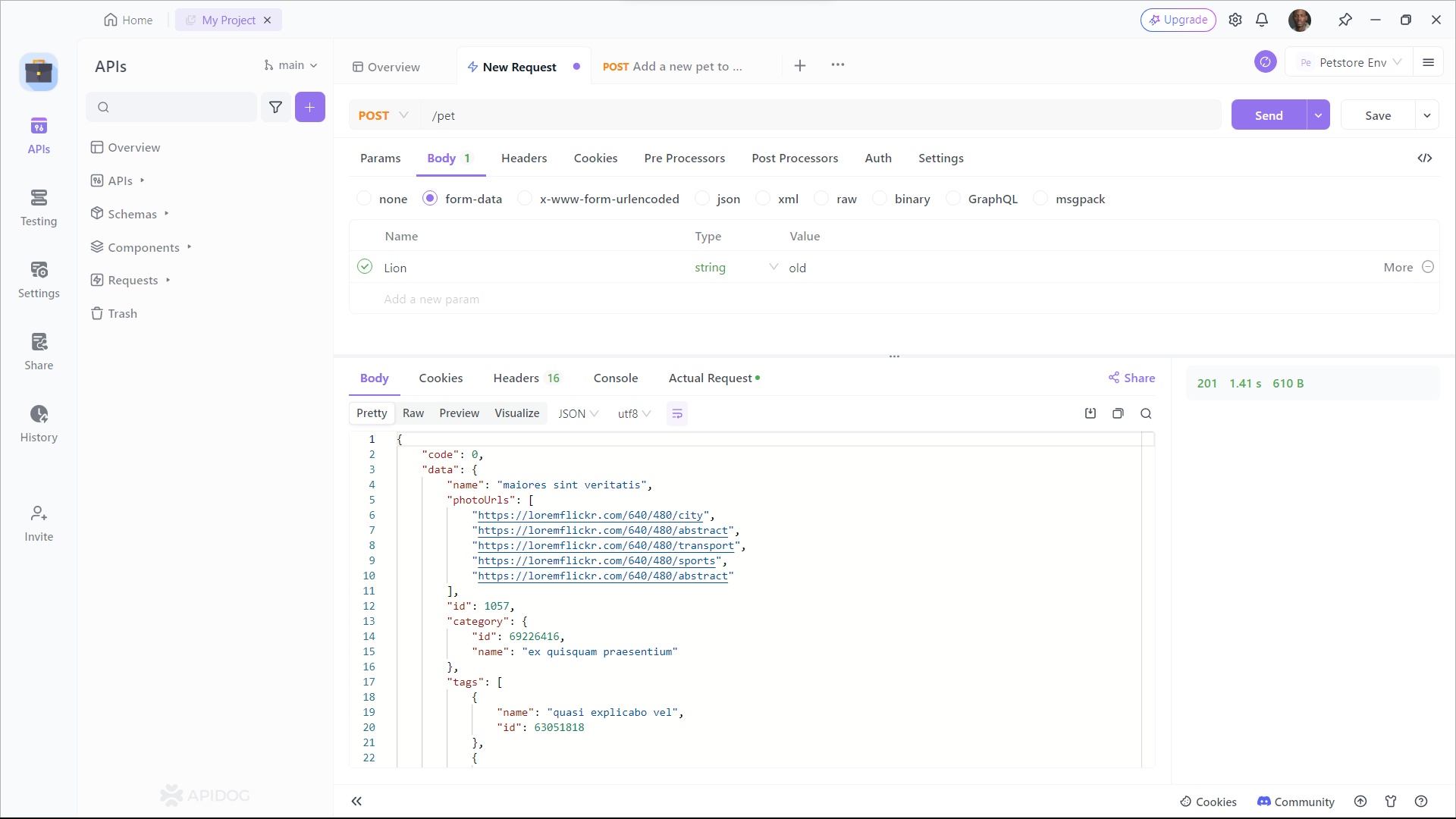

Testing Ollama API Calls in Apidog

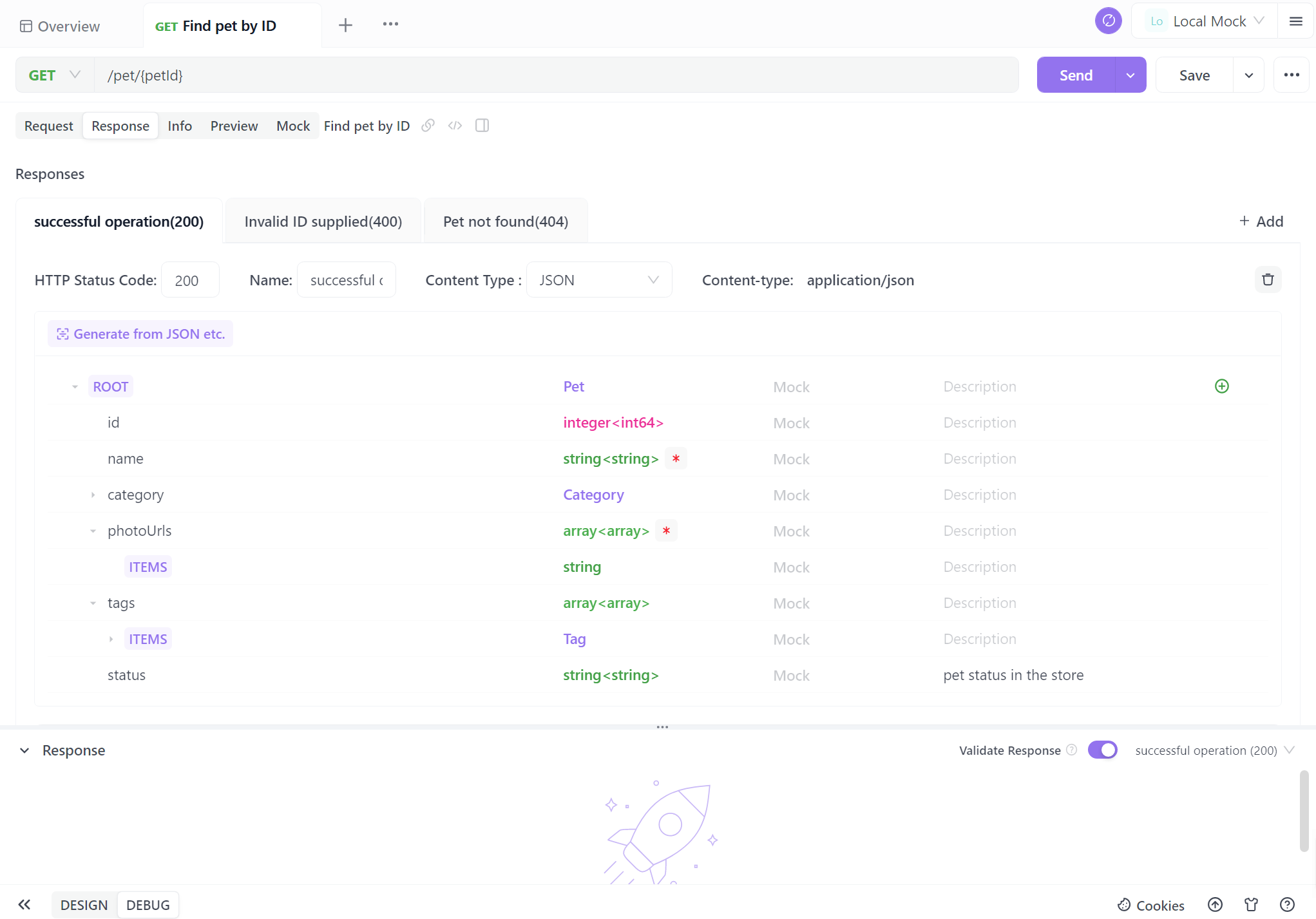

Apidog provides a built-in testing environment that allows you to send requests to your Ollama API and verify the responses. Here's how to use it:

Select an Endpoint: Choose one of the Ollama API endpoints you've defined in Apidog.

Configure Request Parameters: Fill in the required parameters for the selected endpoint. For example, for the /generate endpoint, you might set:

    {
      "model": "llama3.1",
      "prompt": "Explain the concept of artificial intelligence in simple terms."
    }

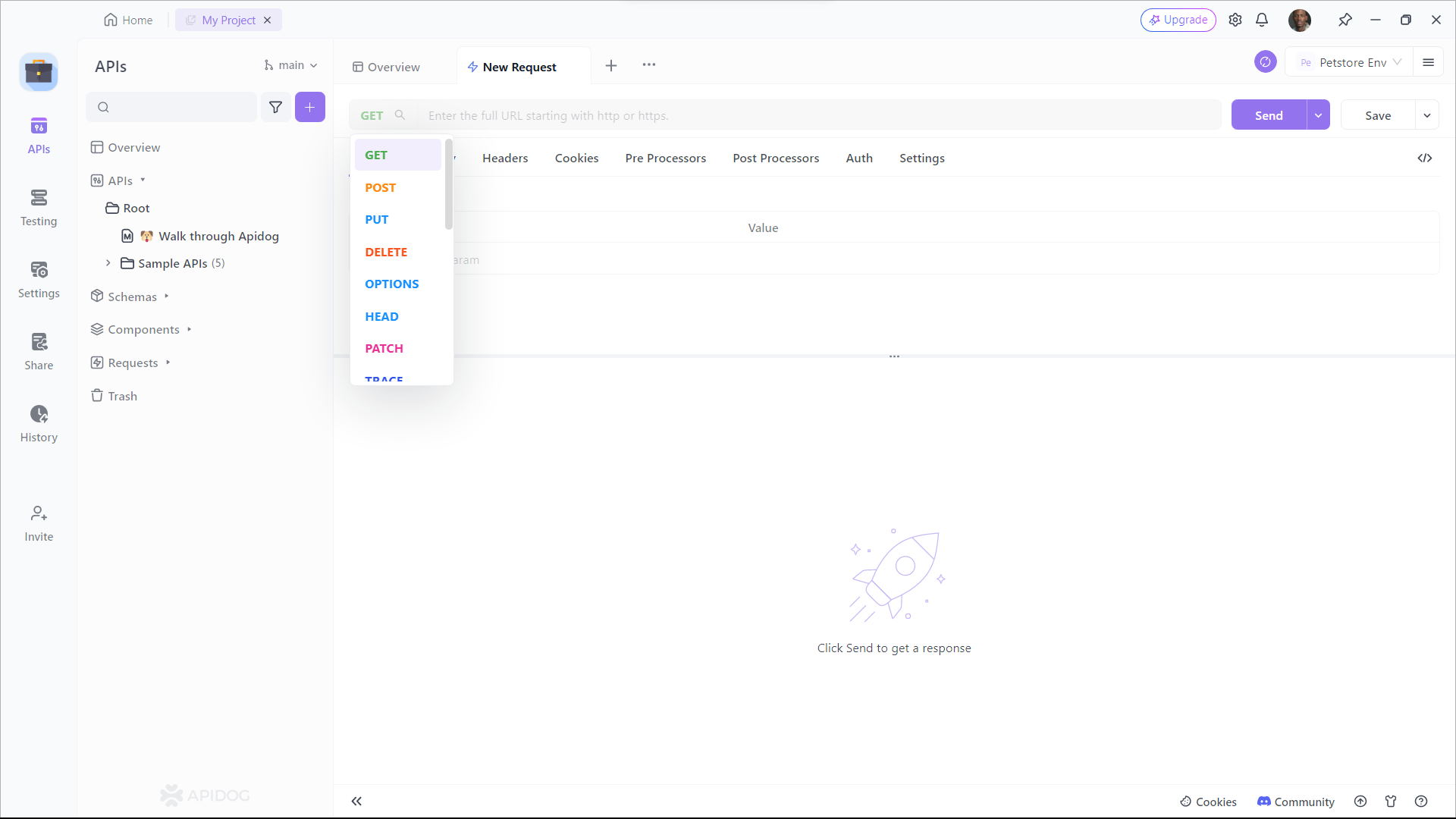

Send the Request: Use Apidog's "Send" button to execute the API call to your local Ollama instance.

Examine the response from Ollama, verifying that it matches the expected format and contains the generated text or other relevant information. If you encounter any issues, use Apidog's debugging tools to inspect the request and response headers, body, and any error messages.
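The same check can be scripted outside Apidog. Below is a small Python sketch that inspects a parsed /generate response for the fields mentioned earlier (response, context, total_duration). The function name and the sample data are made up for illustration; a real response contains more fields.

```python
def check_generate_response(data):
    """Return a list of problems found in a parsed /generate response."""
    problems = []
    if not isinstance(data.get("response"), str):
        problems.append("missing or non-string 'response'")
    if "context" in data and not isinstance(data["context"], list):
        problems.append("'context' should be an array")
    if "total_duration" in data and not isinstance(data["total_duration"], (int, float)):
        problems.append("'total_duration' should be a number")
    return problems


# Hypothetical sample standing in for what Ollama might return.
sample = {"response": "AI is ...", "context": [1, 2, 3], "total_duration": 123456}
print(check_generate_response(sample))  # prints []
```

An empty list means the response has the expected shape; anything else tells you which field to inspect in Apidog's response view.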

Generating API Documentation

One of Apidog's most powerful features is its ability to automatically generate comprehensive API documentation. To create documentation for your Ollama API project:

Step 1: Sign up to Apidog

To start using Apidog for API documentation generation, you'll need to sign up for an account if you haven't already. Once you're logged in, you'll be greeted by Apidog's user-friendly interface.

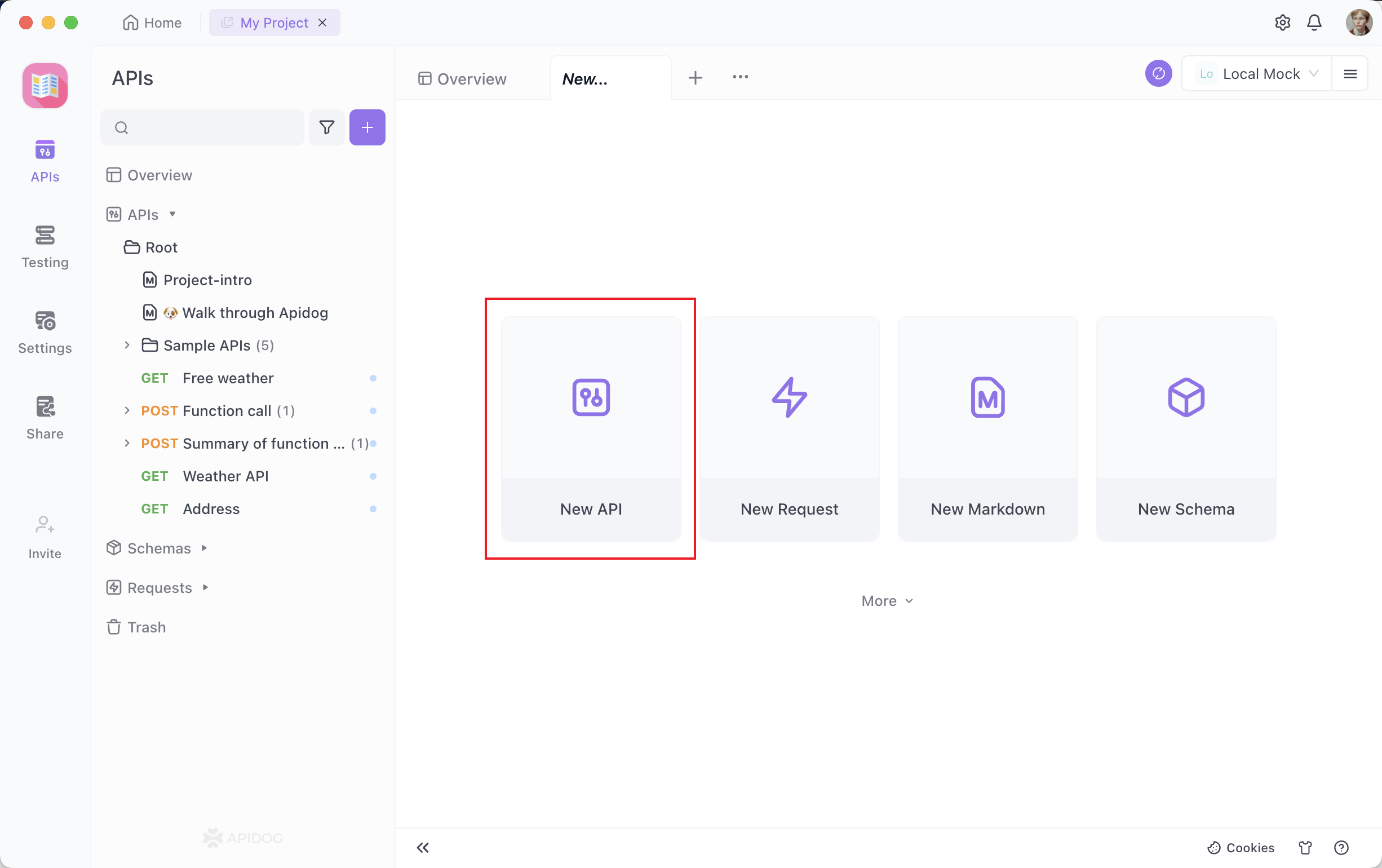

Step 2: Creating Your API Request

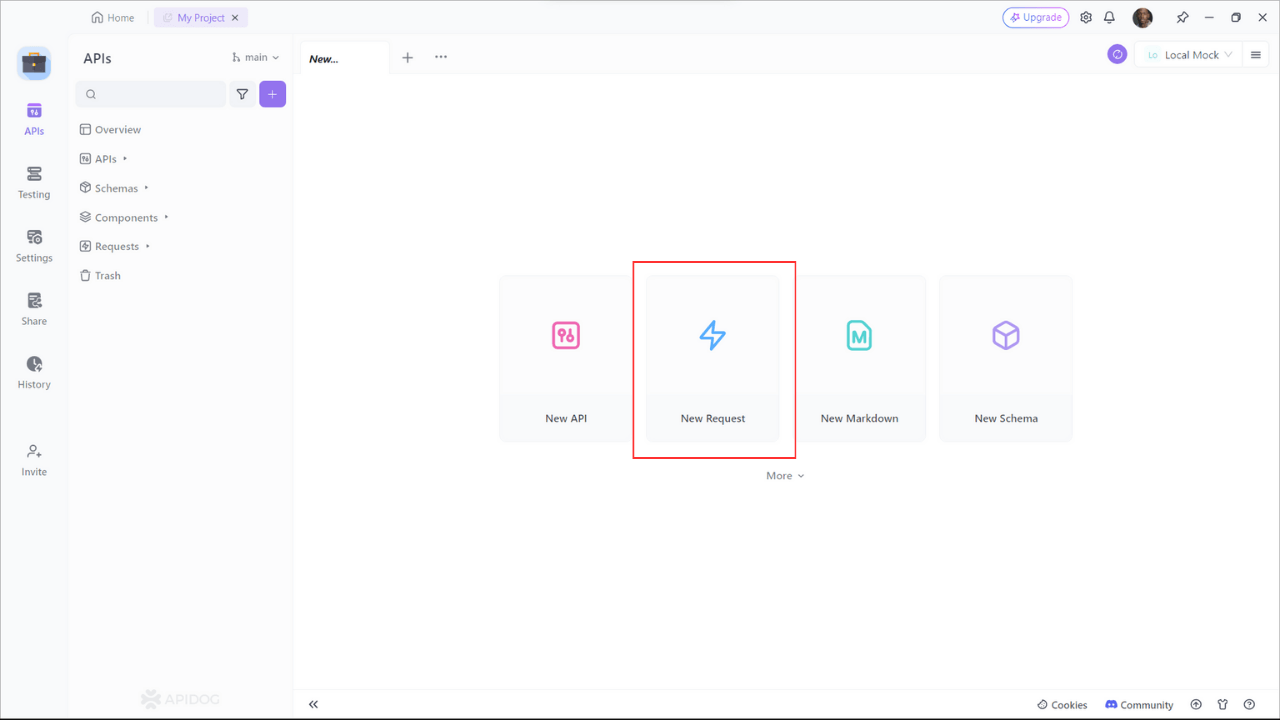

An API documentation project is composed of various endpoints, each representing a specific API route or functionality. To add an endpoint, click on the "+" button or "New API" within your project.

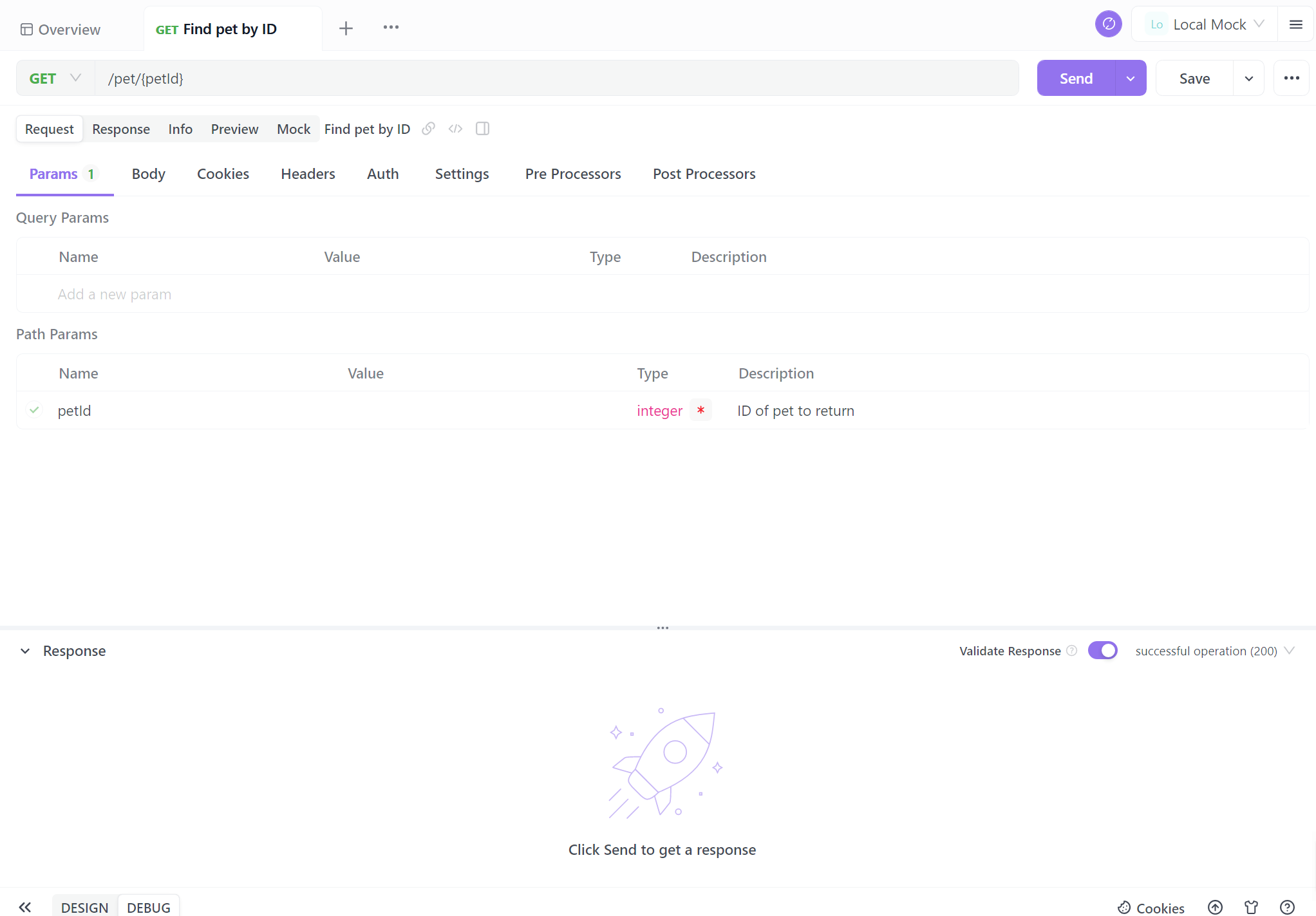

Step 3: Set up the Request Parameters

You'll need to provide details such as the endpoint's URL, description, and request/response details. Now comes the critical part – documenting your endpoints. Apidog makes this process incredibly straightforward. For each endpoint, you can:

- Specify the HTTP method (GET, POST, PUT, DELETE, etc.).

- Define request parameters, including their names, types, and descriptions.

- Describe the expected response, including status codes, response formats (JSON, XML, etc.), and example responses.

Many developers are not fond of writing API documentation and often find it tedious. With Apidog, you can complete it in a few clicks. Apidog's visual interface is beginner-friendly, which makes it simpler than generating API documentation from code.

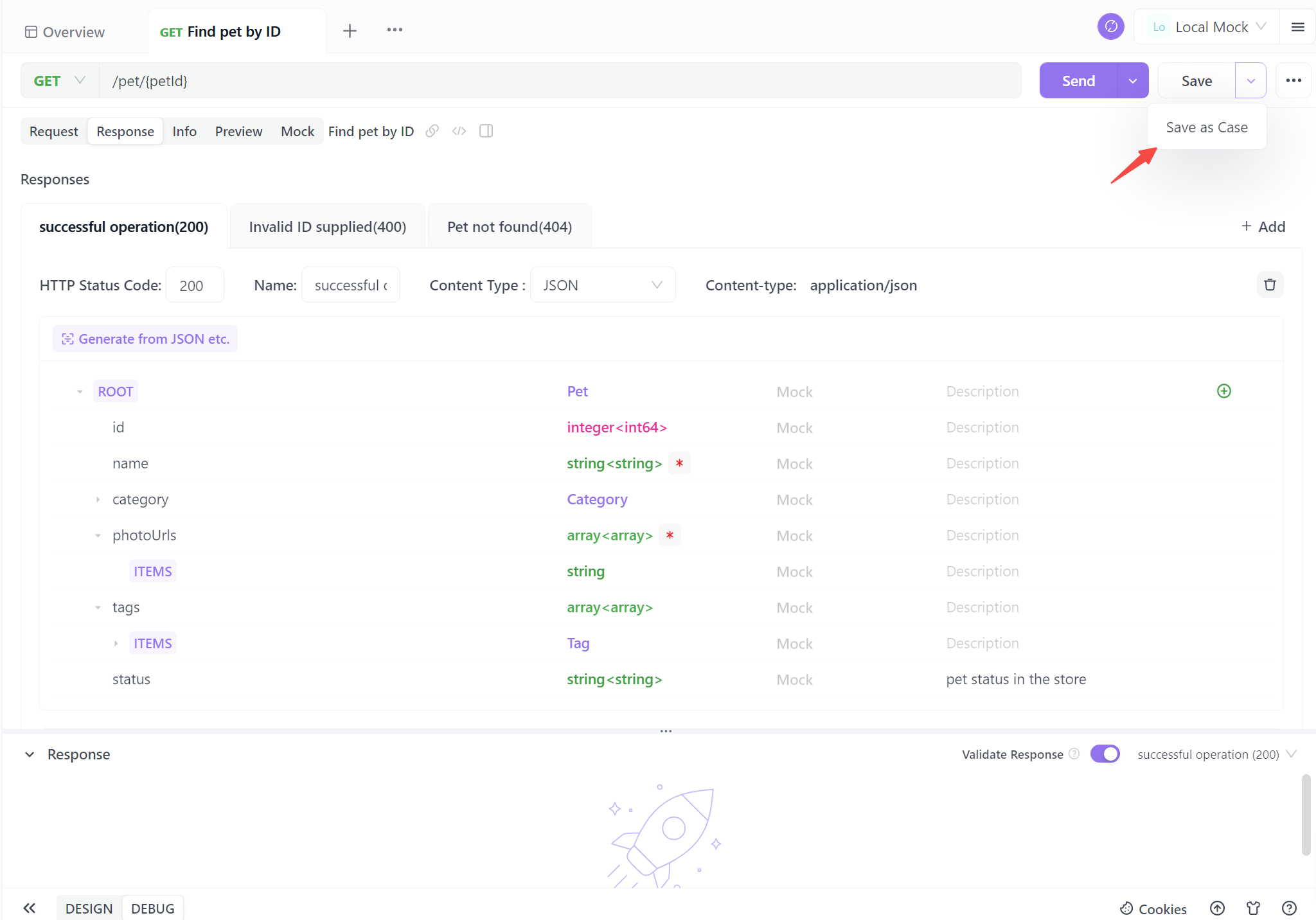

Step 4: Generate Your API Documentation

Once you have filled in the basic API information, save it as a test case with one click. You can also save the request directly, but saving it as a test case makes it easier to find and rerun later.

Following these four steps empowers you to effortlessly generate standardized API documentation. This streamlined process not only ensures clarity and consistency but also saves valuable time. With automated documentation, you're well-equipped to enhance collaboration, simplify user interaction, and propel your projects forward with confidence.

Best Practices for Ollama API Integration

As you work with the Ollama API through Apidog, keep these best practices in mind:

- Version Control: Use Apidog's version control features to track changes to your API definitions over time.

- Error Handling: Document common error responses and include examples of how to handle them in your API documentation.

- Rate Limiting: Ollama's throughput is bounded by your hardware rather than by a cloud quota, so concurrent requests may queue or slow down on resource-constrained systems. Document the request rates your setup can sustain.

- Security Considerations: While Ollama typically runs locally, consider implementing authentication if exposing the API over a network.

- Performance Optimization: Document best practices for optimizing requests, such as reusing context for related queries to improve response times.
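Two of these practices, error handling and context reuse, can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a prescribed pattern: `with_context` and `describe_error` are hypothetical helpers, and the `context` array is the one returned by the /generate endpoint described earlier.

```python
import urllib.error


def with_context(payload, previous_response):
    """Copy the 'context' array from an earlier /generate response into a new payload."""
    new_payload = dict(payload)
    context = previous_response.get("context")
    if context:
        new_payload["context"] = context
    return new_payload


def describe_error(exc):
    """Turn a failed urllib call into a short message suitable for logs or docs."""
    # HTTPError is a subclass of URLError, so it has to be checked first.
    if isinstance(exc, urllib.error.HTTPError):
        return f"HTTP {exc.code}: {exc.reason}"
    if isinstance(exc, urllib.error.URLError):
        return f"connection failed: {exc.reason}"
    return f"unexpected error: {exc}"
```

A typical use is to wrap the request in `try`/`except urllib.error.URLError as exc` and log `describe_error(exc)`; a "connection failed" message usually means Ollama is not running on the expected address.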

Conclusion

Integrating the Ollama API into your projects opens up a world of possibilities for leveraging powerful language models in your applications. By using Apidog to design, test, and document your Ollama API calls, you create a robust and maintainable integration that can evolve with your project's needs.

The combination of Ollama's local LLM capabilities and Apidog's comprehensive API management features provides developers with a powerful toolkit for building AI-enhanced applications. As you continue to explore the potential of Ollama and refine your API integrations, remember that clear documentation and thorough testing are key to successful implementation.

By following the steps and best practices outlined in this article, you'll be well-equipped to use Ollama's API to build AI-powered applications with confidence, all on your own machine.