Developers and researchers increasingly seek LLMs with no restrictions to push the boundaries of artificial intelligence applications. These uncensored large language models operate without built-in content filters, enabling unrestricted responses across diverse queries. As the AI landscape evolves, uncensored LLMs empower users to explore complex topics, from ethical dilemmas to creative storytelling, without predefined moral or safety constraints.

In this article, experts examine the technical underpinnings of uncensored LLMs, their architectures, and real-world deployments. Users must handle these models responsibly, as their lack of filters can generate sensitive content.

Understanding Uncensored LLMs: Technical Foundations

Engineers design uncensored LLMs by fine-tuning base models on datasets that omit alignment instructions, which typically enforce ethical guidelines in standard variants. Base models like Llama 2 or Mistral undergo this process, where developers remove denial mechanisms—such as refusing queries on violence or bias—and adjust system prompts to encourage comprehensive responses. For instance, techniques like Reinforcement Learning from Human Feedback (RLHF) get bypassed or reversed to prioritize utility over safety.

Moreover, uncensored LLMs leverage transformer architectures, predicting next tokens in sequences with billions of parameters. Open-source communities contribute by sharing fine-tuned versions on platforms like Hugging Face, where models achieve uncensorship through "abliteration"—a method that erodes safety alignments via targeted fine-tuning. This approach ensures models respond to any prompt, but it demands robust hardware for inference, often requiring GPUs with ample VRAM.

These models differ from proprietary ones like GPT-4, which embed strict filters to comply with regulations. Uncensored variants, however, foster innovation in fields like research and simulation, where unrestricted outputs reveal raw capabilities. Nevertheless, developers mitigate risks by implementing custom safeguards in applications.

Benefits and Risks of LLMs with No Restrictions

Users gain significant advantages from LLMs with no restrictions, as these models deliver unfiltered insights that enhance problem-solving. For example, researchers utilize them for hypothesis testing in sensitive domains, where standard models might withhold information. Additionally, coders benefit from unrestricted code generation, accelerating development cycles without ethical interruptions.

Furthermore, these LLMs promote transparency in AI, allowing engineers to inspect and modify behaviors directly. Communities build upon them, creating specialized variants for tasks like multilingual processing or long-context reasoning. However, risks emerge from potential misuse, such as generating harmful content, which necessitates ethical oversight from deployers.

Platforms like Ollama enable local runs, minimizing data privacy concerns while maximizing control. Yet, high computational demands pose barriers, though optimizations like quantization address this by reducing model size without sacrificing much performance.

Criteria for Ranking the Top 10 Uncensored LLMs

Analysts rank these models based on parameter count, inference speed, community support, and benchmark scores from sources like Hugging Face leaderboards. Versatility across tasks—such as coding, role-playing, and reasoning—also factors in, alongside ease of local deployment. Moreover, recent 2026 updates prioritize models with extended context windows and mixture-of-experts (MoE) designs for efficiency.

1. Dolphin 3.0: Precision-Driven Uncensored Powerhouse

Cognitive Computations develops Dolphin 3.0 on the Llama 3.1 8B base, fine-tuning it for exceptional reasoning and steerability via system prompts. This model excels in logic-intensive tasks, delivering precise, unfiltered outputs without verbose fluff. Engineers appreciate its 8 billion parameters, which balance performance and resource needs, requiring about 16GB VRAM for optimal inference.

Key features include a hybrid architecture that enhances prompt adherence, making it ideal for custom AI assistants. Additionally, Dolphin 3.0 supports function calling, enabling integration with external tools. Pros encompass unmatched control over personas and rapid problem-solving in coding or math, while cons involve its direct prose style, which suits technical but not narrative applications.

Developers run Dolphin 3.0 locally using Ollama: install the tool, pull the model with ollama pull dolphin-llama3, and query via API or CLI. Benchmarks show it outperforming peers in structured reasoning, with scores above 80% on MMLU tests. Furthermore, its uncensorship stems from dataset curation that avoids alignment biases, allowing exploration of edge cases in research.

In deployment scenarios, teams integrate it into pipelines for automated analysis, where its efficiency shines. However, users calibrate prompts carefully to avoid unintended biases.

2. Nous Hermes 3: Creativity-Focused Uncensored Model

NousResearch crafts Nous Hermes 3 on the Llama 3.2 8B foundation, emphasizing creative writing and role-playing with coherent long-form outputs. With 8 billion parameters, it maintains character consistency in dialogues, leveraging ChatML for structured conversations. This makes it a top choice for narrative generation without restrictions.

Features highlight its nuanced understanding of prompts, supporting extended contexts up to 8k tokens. Pros include superior fiction crafting and engaging interactions, whereas cons note occasional verbosity in concise queries. Community-driven updates ensure ongoing improvements.

To deploy, users leverage Hugging Face: download the model, load it with Transformers library via from transformers import AutoModelForCausalLM; model = AutoModelForCausalLM.from_pretrained('NousResearch/Hermes-3-Llama-3.2-8B'), and generate text. Benchmarks indicate high scores in creative benchmarks, often exceeding 85% in role-play evaluations.

Moreover, its uncensorship arises from fine-tuning on diverse, unfiltered datasets, enabling deep explorations in storytelling. Developers apply it in game design, where unrestricted creativity accelerates prototyping.

3. LLaMA-3.2 Dark Champion Abliterated: Long-Context Uncensored Beast

DavidAU fine-tunes LLaMA-3.2 Dark Champion on an 8x3B MoE architecture, ablating safety layers for unaligned outputs. Boasting a 128k context window, it processes vast documents efficiently, ideal for data analysis.

This model's MoE design activates subsets of parameters, reducing compute while maintaining power. Pros feature rapid inference and deep reasoning, but cons include potential negative biases and high VRAM demands (around 40GB).

Installation involves Hugging Face downloads, with inference via pipeline('text-generation', model='DavidAU/Llama-3.2-8X3B-MOE-Dark-Champion-Instruct-uncensored-abliterated-18.4B'). It scores highly on long-context benchmarks, surpassing 90% accuracy in retrieval tasks.

Additionally, abliteration ensures no restrictions, suiting advanced research. Teams use it for automating reports, where its scale handles complex datasets seamlessly.

4. Llama 2 Uncensored: Versatile Entry-Level Uncensored LLM

Meta's Llama 2 serves as the base for this uncensored variant, fine-tuned by George Sung to eliminate moral filters. With 7-13 billion parameters, it runs on consumer hardware, supporting role-playing and general tasks.

Features include multiple quantization options like GGUF for CPU/GPU balance. Pros: accessibility and community plugins; cons: weaker reasoning than Llama 3.

Run it via Ollama: ollama run llama2-uncensored. Popular with 234K pulls, it benchmarks well for lightweight use.

Furthermore, its design fosters experimentation, making it a staple for beginners in uncensored AI.

5. WizardLM Uncensored: Reliable All-Rounder

TheBloke packages WizardLM Uncensored on Llama 2 13B, removing alignments for broad applications. It excels in chat and writing, with balanced capabilities.

Key aspects: strong community, easy deployment. Pros: predictability; cons: outdated base.

Deploy with ollama run wizardlm-uncensored. It garners 23K pulls, suitable for creative workflows.

6. Dolphin 2.7 Mixtral 8x7B: Coding-Specialized Uncensored Model

Eric Hartford builds this on Mixtral's MoE, fine-tuned for coding without filters. 8x7B parameters ensure efficiency in specialized tasks.

Features: quantization formats, high coding performance. Pros: speed; cons: hardware needs.

Use Ollama: ollama run dolphin-mixtral:8x7b. Benchmarks highlight its prowess in programming.

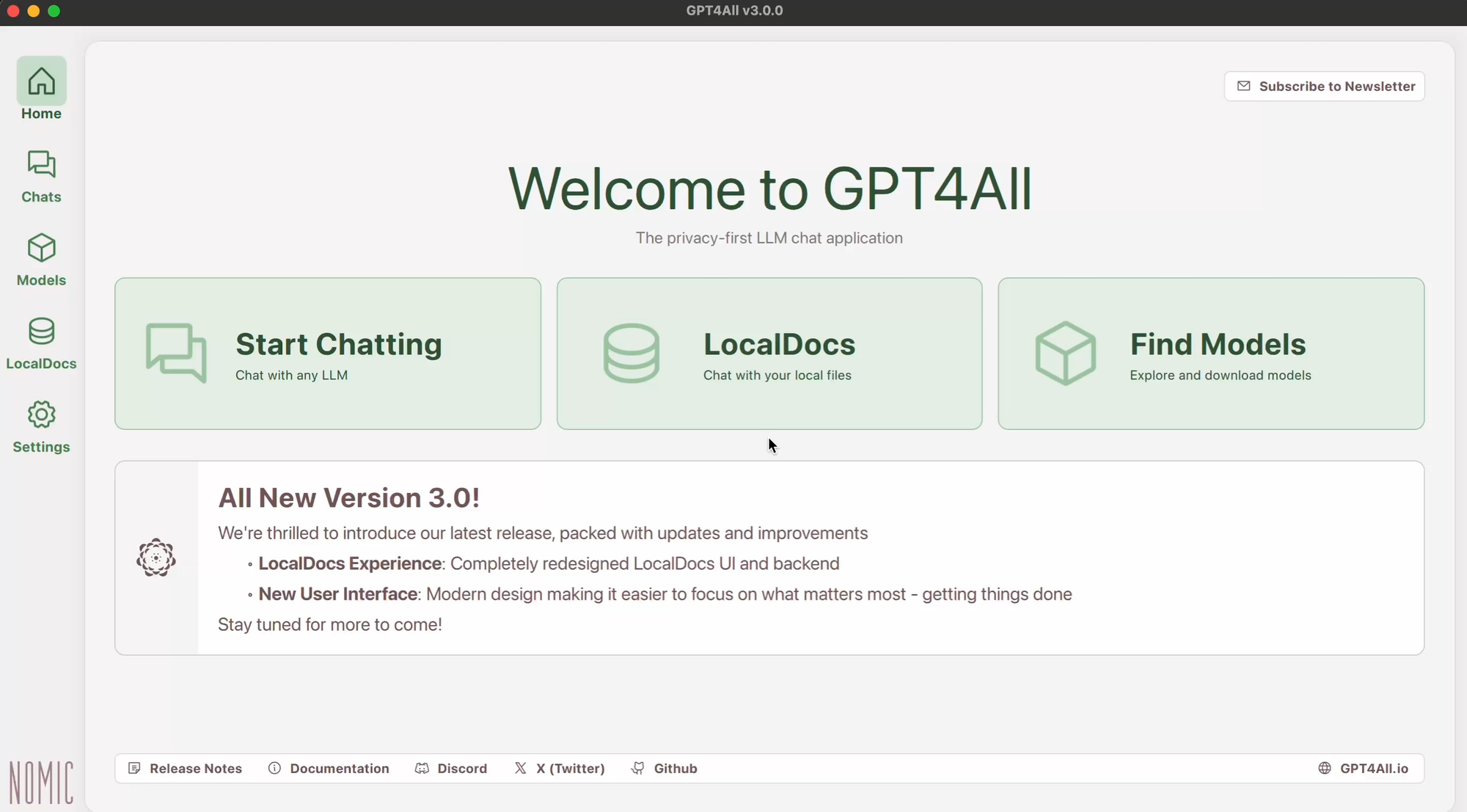

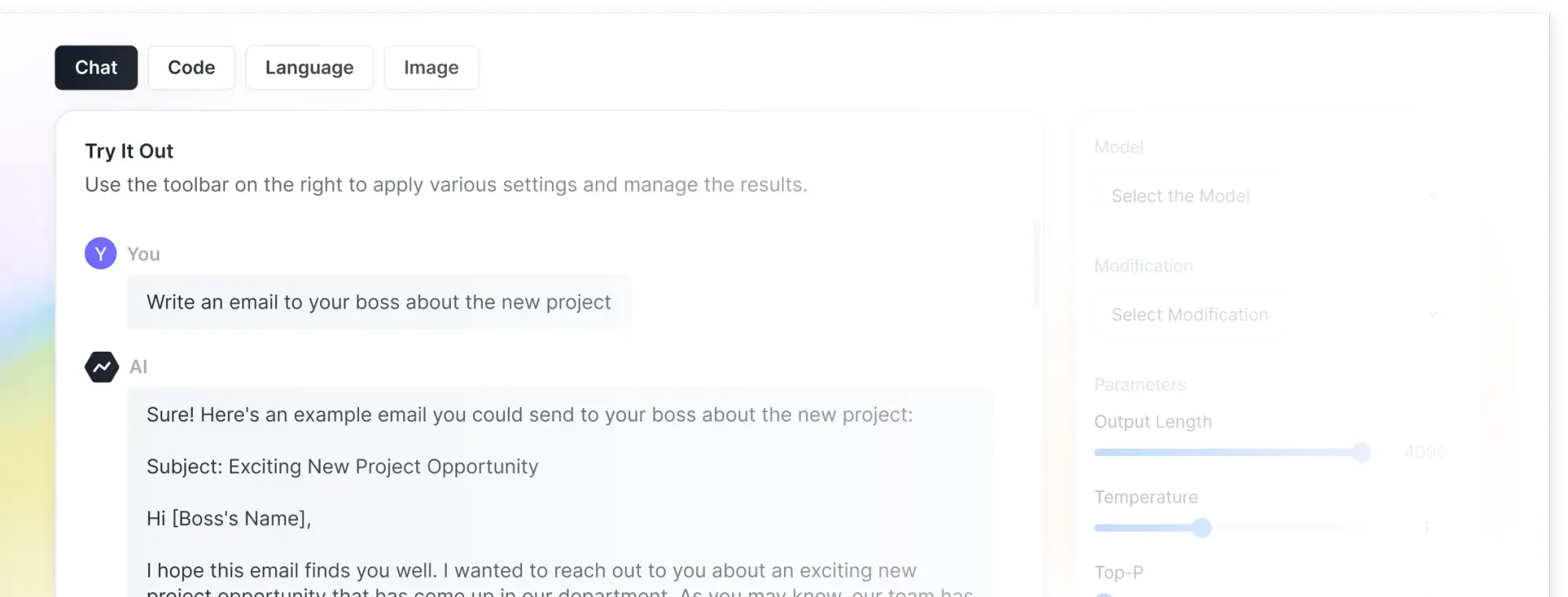

7. GPT-4All: Offline-Focused Uncensored Framework

GPT-4All optimizes for local runs, building on transformer research for uncensored chats. Cross-platform support aids deployment.

Pros: free, customizable; cons: context limits.

Install via official site, run executables. Ideal for privacy-conscious users.

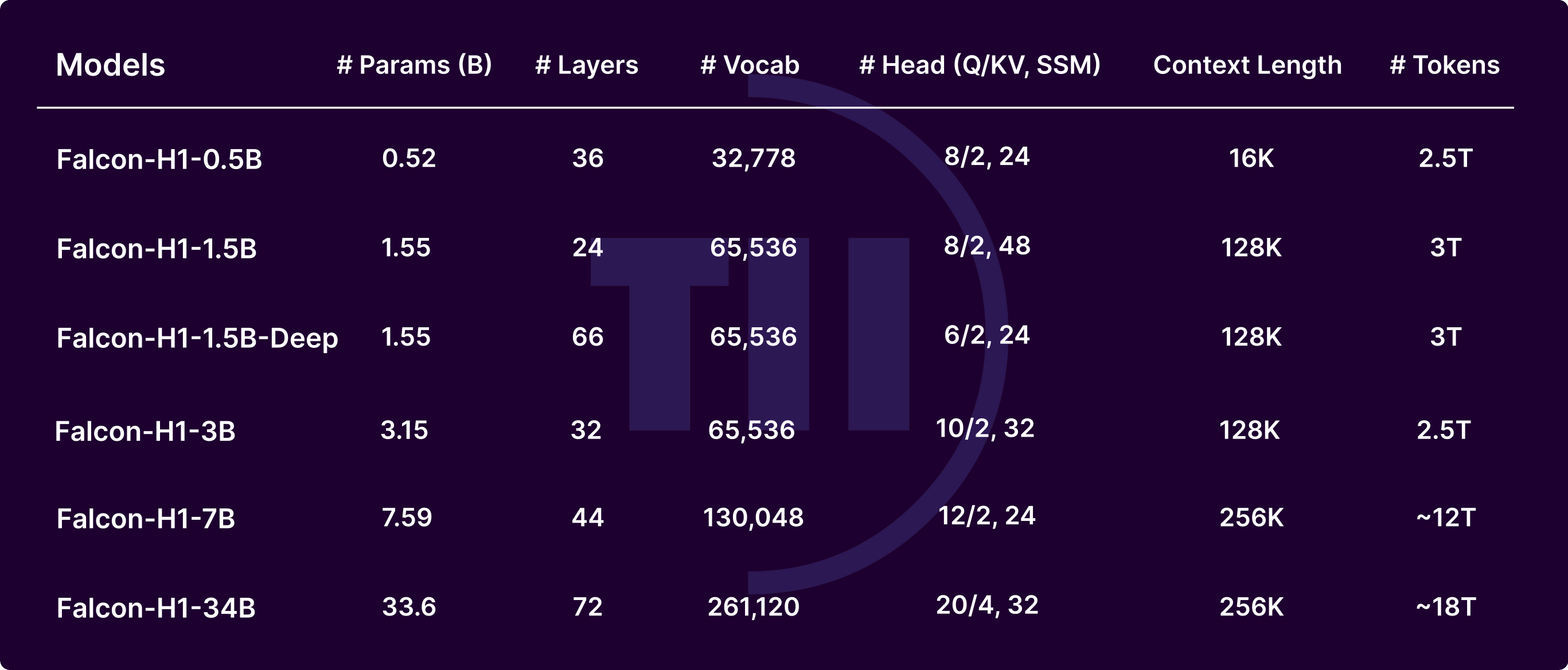

8. Falcon LLM: High-Performance Uncensored Alternative

Technology Innovation Institute develops Falcon with innovative architecture for nuanced text. Optimized for speed.

Features: modular design. Pros: quality; cons: ecosystem maturity.

Load with Transformers library. Suits research.

9. MPT-7B Chat: Conversational Uncensored LLM

MosaicML tunes MPT-7B for chats, emphasizing low latency. 7B parameters fit modest setups.

Pros: real-time; cons: complex tasks.

Deploy locally with scripts. Great for bots.

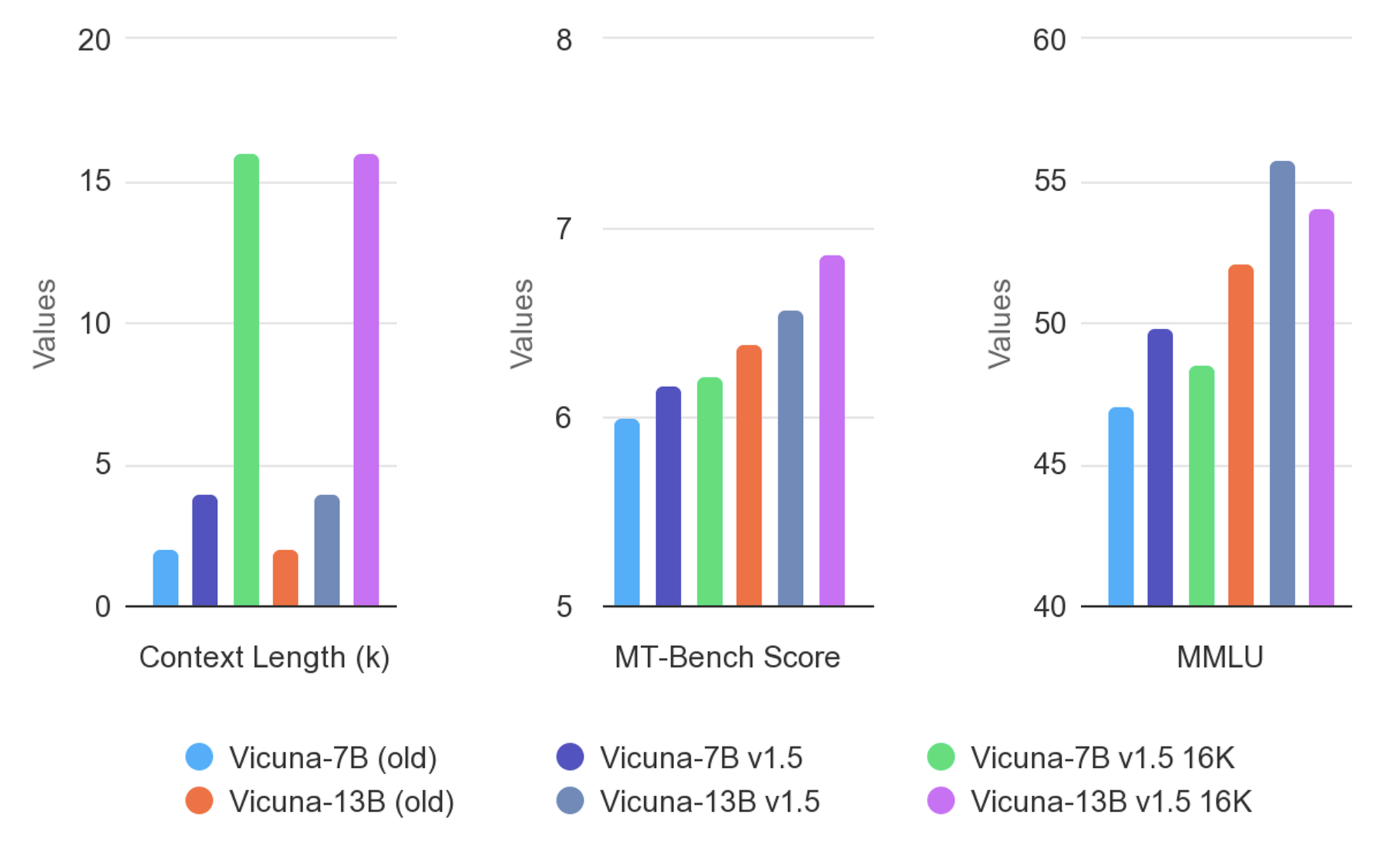

10. Vicuna: Dialogue-Optimized Uncensored Model

Vicuna fine-tunes on conversational data for natural interactions. Lightweight design.

Pros: engaging; cons: non-conversational weaknesses.

Run via community tools. Enhances interactive apps.

Deployment Best Practices for Uncensored LLMs

Engineers optimize deployments by selecting quantization levels, such as Q4 or Q8, to fit hardware. Tools like Ollama or LM Studio simplify runs, while APIs via Apidog enable scaling.

Additionally, monitor VRAM usage and fine-tune for specific domains. Security measures include isolating environments.

Future Trends in Uncensored LLMs

Innovators predict larger MoE models and better abliteration techniques. Integration with multimodal capabilities expands uses.

However, regulatory pressures may influence development, pushing for hybrid approaches.

Conclusion

This exploration reveals how LLMs with no restrictions revolutionize AI applications. From Dolphin 3.0's precision to Vicuna's dialogues, these models offer unparalleled freedom. Developers harness them responsibly, leveraging tools like Apidog for seamless integrations. As technology advances, these uncensored LLMs continue to drive innovation, transforming research and development landscapes.