As AI models push the boundaries of reasoning and agentic capabilities, Kimi K2 Thinking emerges as a standout innovation from Moonshot AI, blending open-source accessibility with enterprise-grade performance. This trillion-parameter thinking agent model redefines how developers interact with large language models, particularly through its robust API. Designed for tasks requiring deep inference and tool chaining, the Kimi K2 Thinking API allows seamless integration into applications, from automated research agents to complex coding assistants. In this guide, we'll explore Kimi K2 Thinking's foundations, architecture, benchmarks, pricing, practical applications, and hands-on usage—equipping you to leverage the Kimi K2 Thinking API effectively. Let's get started!


Introduction to Kimi K2 Thinking

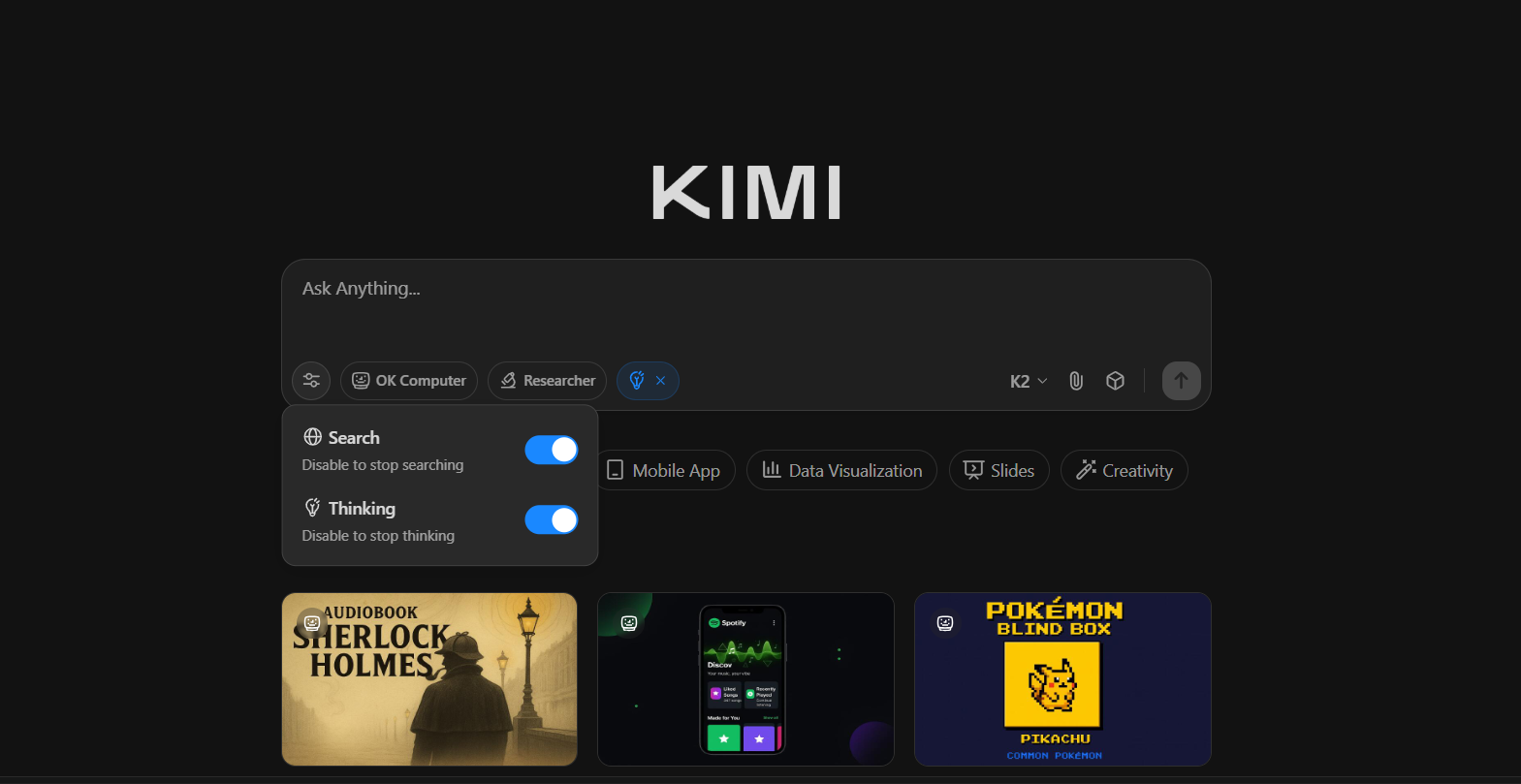

Kimi K2 Thinking represents Moonshot AI's bold step forward in open-source AI, launching as a specialized thinking agent model optimized for sequential reasoning and tool use. At its core, Kimi K2 Thinking is built to simulate human-like deliberation, processing queries through extended "thinking" tokens that enable multi-turn tool interactions without constant human input. This model, available via API for developers, excels in environments demanding prolonged context retention and adaptive decision-making, such as agentic search or code generation.

What sets Kimi K2 Thinking apart is its focus on test-time scaling: expanding not just model size but the depth of inference at runtime. With 1 trillion total parameters (roughly 32 billion of them active for any given token), it handles intricate chains of thought, making the Kimi K2 Thinking API well suited to applications where precision matters more than speed. Developers can access it through Moonshot's platform; chat mode is live on kimi.com, and full agentic capabilities are rolling out soon. For those tired of black-box models, Kimi K2 Thinking's open weights and code invite customization, fostering a community-driven ecosystem. As we delve deeper, you'll see how this API turns abstract reasoning into tangible tools for your projects.

The Architecture of Kimi K2 Thinking

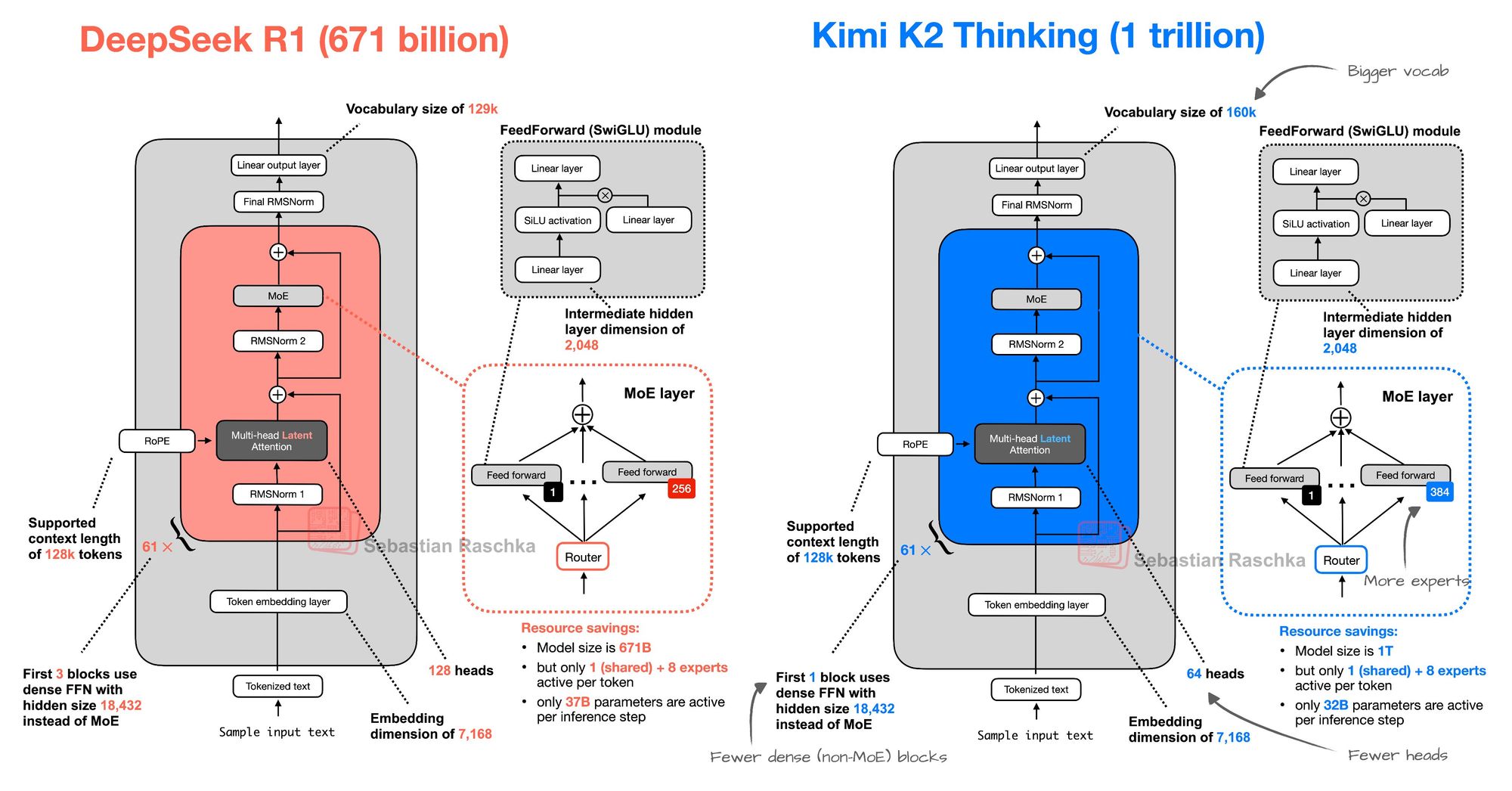

Under the hood, Kimi K2 Thinking uses a Mixture-of-Experts (MoE) architecture that closely follows DeepSeek R1's design while scaling it up. Like DeepSeek R1 (671 billion total parameters), Kimi K2 Thinking relies on sparse activation: each token is routed through a small subset of specialized experts, so only a fraction of the network runs per token and little compute is wasted. It also expands the vocabulary to 160,000 tokens, up from DeepSeek R1's 129,000, which gives it richer handling of multilingual and domain-specific terms, an advantage for the Kimi K2 Thinking API's global applications.

The model has 384 experts compared to DeepSeek R1's 256, allowing finer-grained specialization in tasks like coding or search. At the same time, it uses fewer dense (non-MoE) blocks and fewer attention heads (64 versus 128), trimming per-token compute at inference time. It supports a 256K-token context window, enough for extended dialogues or long-document analysis in a single Kimi K2 Thinking API call. Trained with an emphasis on agentic behaviors, the model prioritizes "thinking" phases: iterative internal reasoning that refines its plan before and between tool invocations.
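To make sparse expert routing concrete, here is a toy top-k gating sketch in plain Python. It uses a generic softmax gate that keeps the k highest-scoring experts and renormalizes their weights. The 384-expert count comes from the text; k = 8, the softmax gate, and the "experts" (simple scaling functions) are illustrative assumptions, not Kimi K2's actual router.

```python
import math
import random

NUM_EXPERTS = 384  # expert count cited for Kimi K2 Thinking
TOP_K = 8          # assumed number of experts activated per token (illustrative)


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(gate_logits, k=TOP_K):
    """Pick the k highest-scoring experts and renormalize their gate weights.

    Returns (expert_index, weight) pairs whose weights sum to 1. Only these
    k experts run for this token; the rest stay idle, which is where the
    compute savings of sparse activation come from.
    """
    probs = softmax(gate_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    mass = sum(probs[i] for i in top)
    return [(i, probs[i] / mass) for i in top]


def moe_output(token_value, gate_logits, experts, k=TOP_K):
    """Combine the selected experts' outputs as a gate-weighted sum."""
    return sum(w * experts[i](token_value) for i, w in route_token(gate_logits, k))


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy "experts": each one just scales its input by a different factor.
    experts = [lambda x, s=i: x * (1 + s / NUM_EXPERTS) for i in range(NUM_EXPERTS)]
    logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
    chosen = route_token(logits)
    print(f"{len(chosen)} of {NUM_EXPERTS} experts active")
    print("output:", round(moe_output(1.0, logits, experts), 4))
```

In a real MoE layer the gate logits come from a learned projection of the token's hidden state, and the experts are feed-forward networks; the routing logic, however, has the same shape.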

For API users, this translates to reliable multi-step reasoning: a single Kimi K2 Thinking task can span 200-300 sequential tool calls, from web searches to code execution, with your application running each requested tool and returning its result to the model until it produces a final answer. Because the weights are open, developers can also fine-tune the model for niche needs such as financial modeling, while the hosted API spares teams from running a trillion-parameter model themselves. Overall, Kimi K2 Thinking's architecture embodies efficient scaling, making the Kimi K2 Thinking API a pragmatic choice for resource-conscious teams.
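Over the API, those tool calls happen in a loop that your client drives: the model returns tool_calls, your code executes them, appends each result as a "tool" message, and calls the endpoint again until the model answers without requesting a tool. The sketch below assumes an OpenAI-style client object (such as the OpenAI client pointed at Moonshot's base URL in the compatibility section) and a hypothetical execute_tool(name, args) callback that you supply. The max_steps cap of 300 simply mirrors the 200-300 figure above. Passing back a reasoning_content field is an assumption about how Moonshot exposes the model's thinking trace; check the platform docs.

```python
import json
from typing import Any, Callable


def run_agent(
    client: Any,
    messages: list,
    tools: list,
    execute_tool: Callable[[str, dict], str],
    model: str = "kimi-k2-thinking",
    max_steps: int = 300,
) -> str:
    """Call the model repeatedly, executing requested tools, until it answers.

    `client` is any object with an OpenAI-SDK-style `chat.completions.create`;
    `execute_tool(name, args)` is a hypothetical callback that runs one tool
    and returns its result as a string.
    """
    for _ in range(max_steps):
        response = client.chat.completions.create(
            model=model, messages=messages, tools=tools
        )
        message = response.choices[0].message
        if not message.tool_calls:
            return message.content  # no tool requested: this is the final answer

        # Record the assistant turn, including the tool calls it asked for.
        assistant_turn = {
            "role": "assistant",
            "content": message.content or "",
            "tool_calls": [
                {
                    "id": call.id,
                    "type": "function",
                    "function": {
                        "name": call.function.name,
                        "arguments": call.function.arguments,
                    },
                }
                for call in message.tool_calls
            ],
        }
        # Assumption: keep the model's reasoning trace if the API returns one.
        reasoning = getattr(message, "reasoning_content", None)
        if reasoning:
            assistant_turn["reasoning_content"] = reasoning
        messages.append(assistant_turn)

        # Execute each tool locally and send its result back as a "tool" message.
        for call in message.tool_calls:
            args = json.loads(call.function.arguments or "{}")
            messages.append({
                "role": "tool",
                "tool_call_id": call.id,
                "content": execute_tool(call.function.name, args),
            })
    raise RuntimeError(f"no final answer after {max_steps} steps")
```

Keeping the step cap explicit matters for long-horizon agents: it bounds cost if the model keeps requesting tools.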

Benchmarks and Capabilities of Kimi K2 Thinking

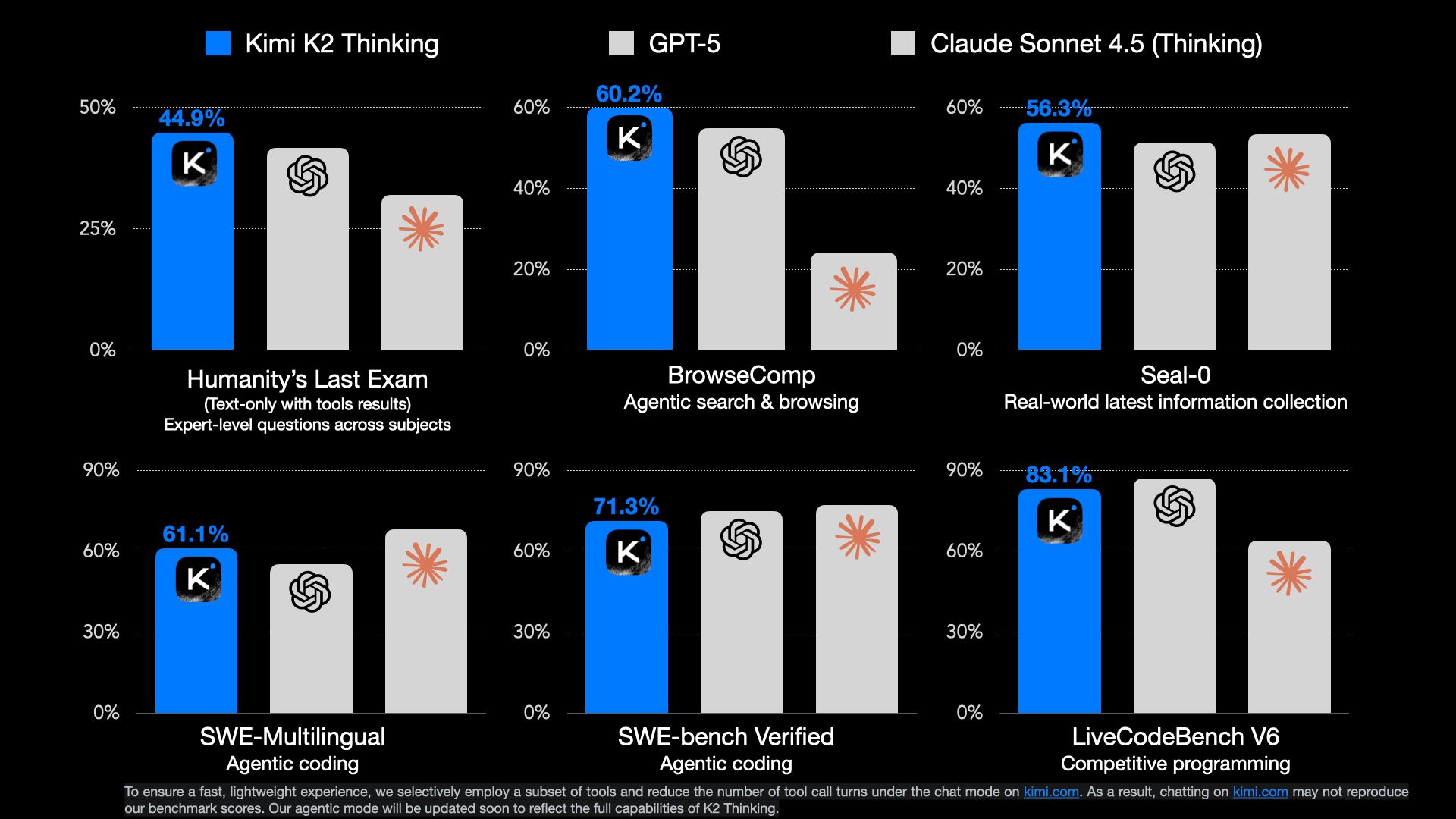

Kimi K2 Thinking has quickly claimed state-of-the-art (SOTA) results on several agentic benchmarks, underscoring its strength as an open-source thinking agent. On Humanity's Last Exam (HLE), a broad expert-level reasoning benchmark, it scores 44.9% with tools enabled, ahead of the competitors in Moonshot's comparison. On BrowseComp it scores 60.2%, highlighting its skill at web navigation and information synthesis, where many models falter on reasoning that spans multiple pages.

A hallmark is its endurance: The model executes up to 200-300 sequential tool calls autonomously, ideal for long-horizon tasks like research pipelines or debugging marathons via the Kimi K2 Thinking API. It shines in reasoning, agentic search, and coding, with strong performance on GAIA and LiveCodeBench, often edging out closed-source rivals. The 256K context window supports processing entire codebases or lengthy documents, enabling nuanced outputs.

Moonshot AI positions Kimi K2 Thinking as a pioneer in test-time scaling, increasing both the number of thinking tokens and the number of tool-calling turns to deepen inference. The model is currently live in chat mode on kimi.com, and a full agentic mode is expected to make API interactions even more fluid. Early adopters praise its balance of accuracy and speed, reporting API latency under 2 seconds for standard queries, though requests that involve long reasoning traces naturally take longer. For developers, these benchmarks suggest the Kimi K2 Thinking API can deliver reliable, high-fidelity results in production environments.

Pricing the Kimi K2 Thinking API

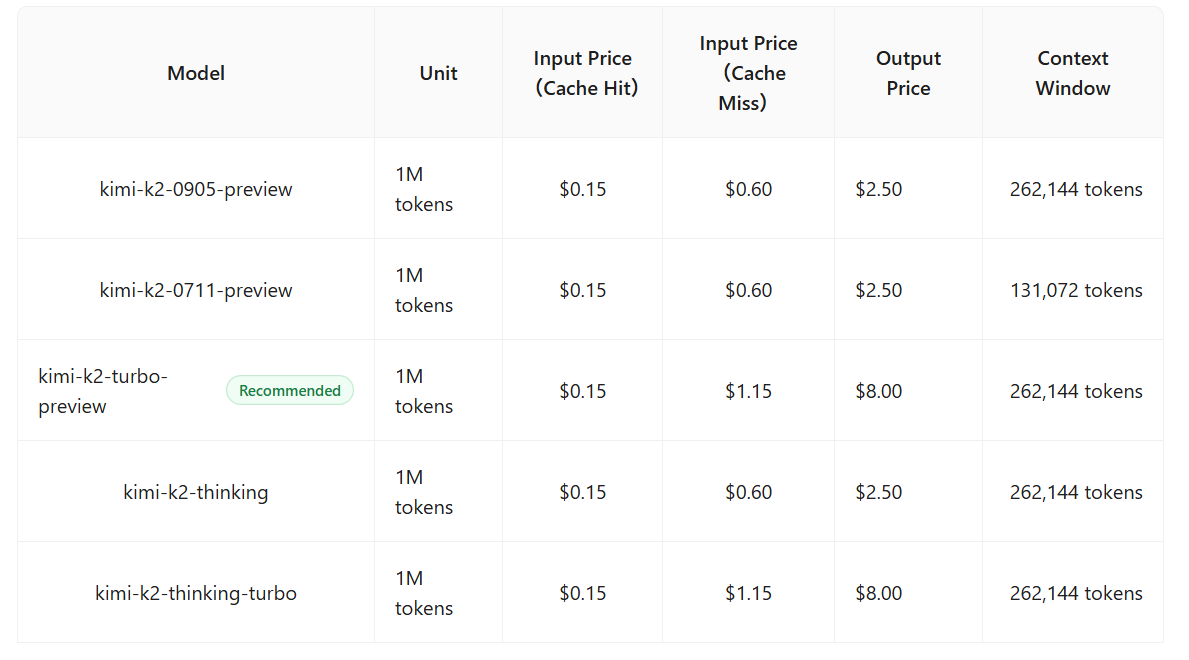

One of Kimi K2 Thinking's most appealing aspects is its competitive pricing, which makes the Kimi K2 Thinking API a budget-friendly alternative to premium models. Input tokens cost $0.15 per million on cache hits ($0.60 per million on cache misses), and output tokens cost $2.50 per million, far below Claude Sonnet 4.5's $3/$15 rates. This makes it a good fit for high-volume applications like chatbots or data analysis.
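Per-million-token rates turn cost comparisons into simple arithmetic. The sketch below plugs in the $0.15/$2.50 and $3/$15 figures quoted in this section; the workload of 10 million input and 2 million output tokens is a made-up example, and the input rate is a parameter, so substitute whichever rate actually applies to your traffic. Keep in mind that a thinking model's reasoning tokens are generally billed as output, so output volume can exceed the visible answer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Prices in USD per one million tokens."""
    input_per_m: float
    output_per_m: float


def request_cost(rates: Rates, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for a given token volume at per-million-token rates."""
    return (input_tokens * rates.input_per_m
            + output_tokens * rates.output_per_m) / 1_000_000


# Headline rates as quoted in this article; verify against current pricing pages.
KIMI_K2_THINKING = Rates(input_per_m=0.15, output_per_m=2.50)
CLAUDE_SONNET_4_5 = Rates(input_per_m=3.00, output_per_m=15.00)

if __name__ == "__main__":
    # Hypothetical monthly workload: 10M input tokens, 2M output tokens.
    for name, rates in [("Kimi K2 Thinking", KIMI_K2_THINKING),
                        ("Claude Sonnet 4.5", CLAUDE_SONNET_4_5)]:
        print(f"{name}: ${request_cost(rates, 10_000_000, 2_000_000):.2f}")
```

For this workload the Kimi figure comes to $6.50 against $60.00 for Claude Sonnet 4.5, and the output rate dominates both totals.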

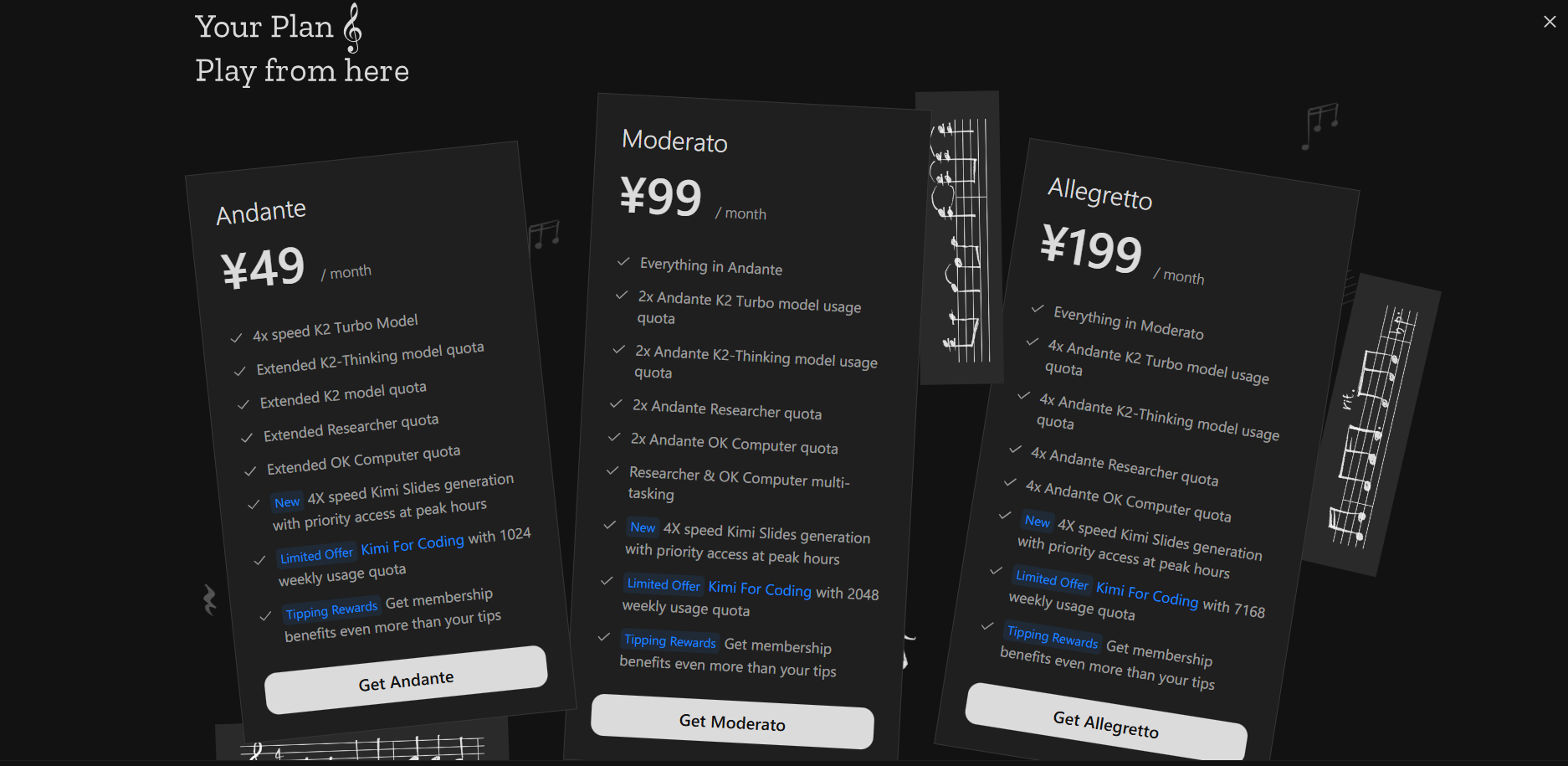

Despite outscoring GPT-5 and Claude Sonnet 4.5 on benchmarks like HLE and BrowseComp in Moonshot's published results, Kimi K2 Thinking stays affordable thanks to its efficient MoE design, which keeps serving costs down without compromising quality. Free tiers offer limited API calls for testing, while paid plans start at $49/month and scale to enterprise volumes with volume discounts. Tool calls carry no hidden fees, which keeps costs predictable. For startups, this pricing puts advanced agentic AI within reach, allowing experimentation without prohibitive expenses.

Practical Use Cases for the Kimi K2 Thinking API

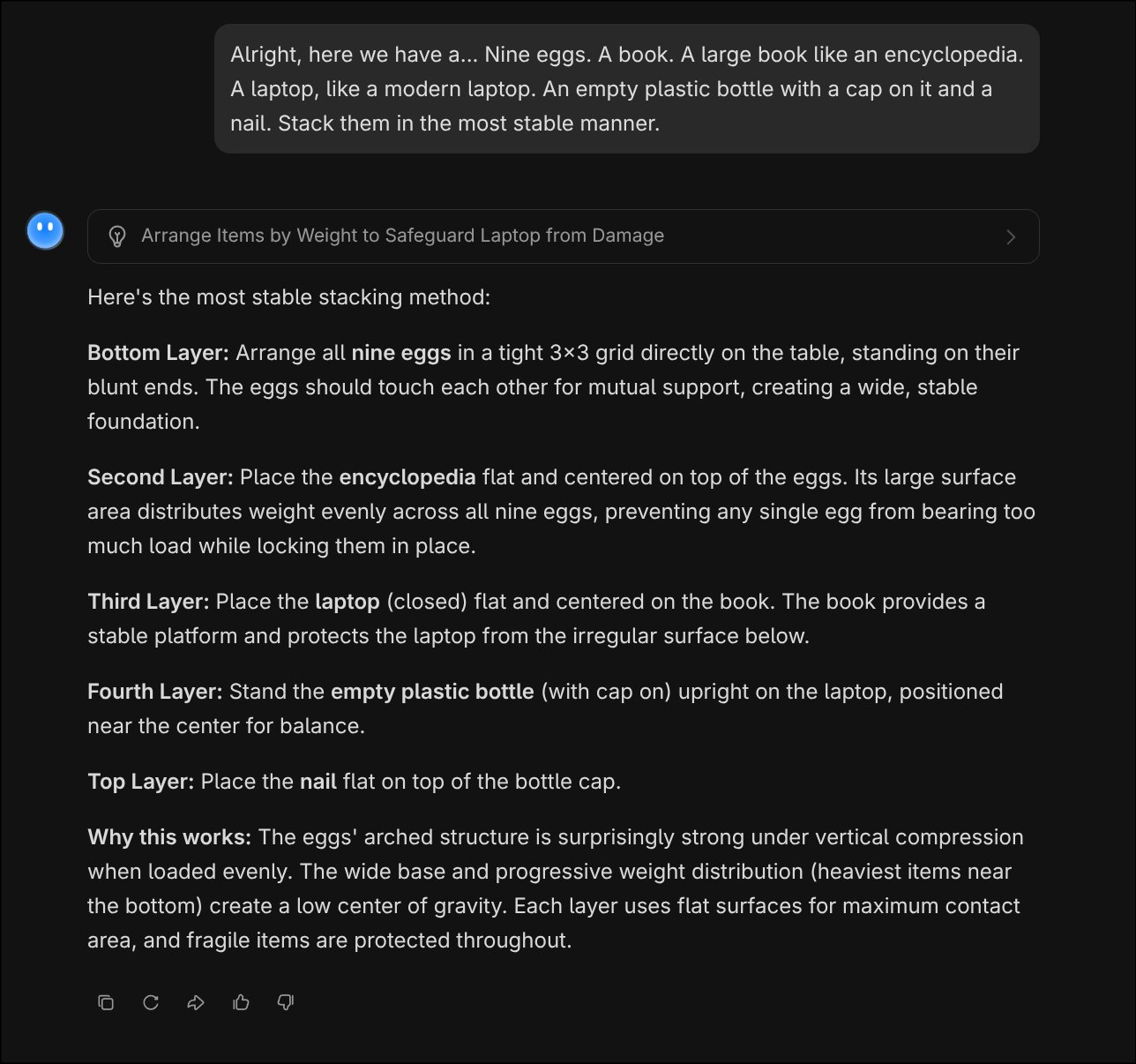

Kimi K2 Thinking's strength lies in its human-like reasoning, making the Kimi K2 Thinking API perfect for tricky, multi-step problems. Consider a classic stacking puzzle: "Alright, here we have nine eggs, a book (large like an encyclopedia), a laptop (modern), an empty plastic bottle with cap, and a nail. Stack them in the most stable manner."

The API responds with logical, step-by-step deduction. The output showcases Kimi K2 Thinking's intuitive physics simulation and sequential planning, far beyond rote responses.

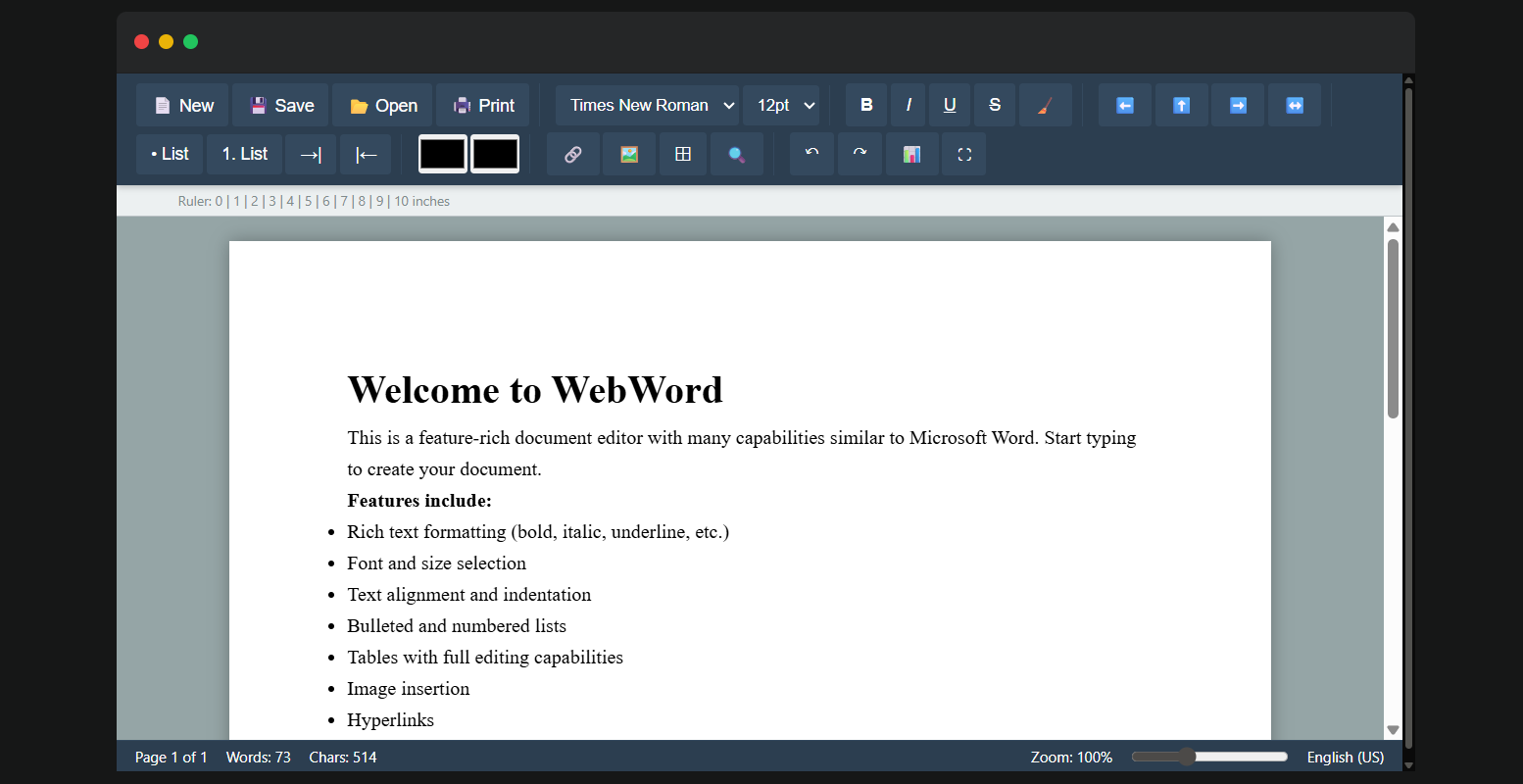

For coding, consider a document-cloning task such as replicating the structure of a Microsoft Word report. Prompt the API with: "Clone the layout of this Word template, including tables, images, custom fonts, and headers."

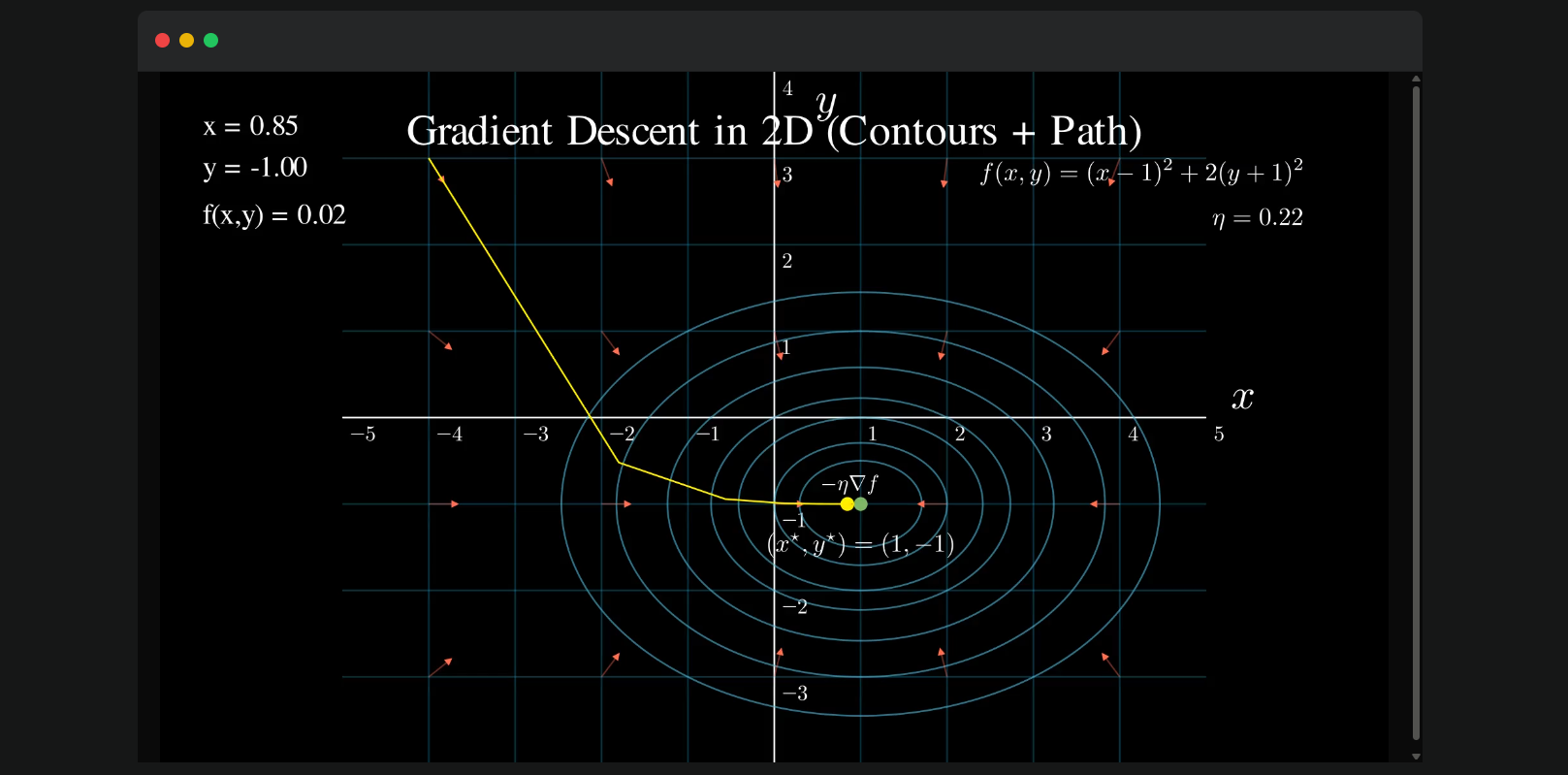

Another compelling application is visualizing gradient descent, a fundamental machine learning concept. Using the Kimi K2 Thinking API, submit: "Visualize gradient descent." The model reasons through the math, invokes Matplotlib via code execution, and produces a step-by-step plot in which each iteration traces the cost function's descent, annotated with the learning rate and the convergence point. The response includes the Python snippet for reproducibility, plus insights such as "At iteration 5, loss drops below 0.1, confirming stability." This not only educates but also enables rapid prototyping for ML tutorials or optimization demos.
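A stripped-down version of that demo is easy to reproduce locally. The sketch below is our own illustration, not the model's output. It runs plain gradient descent on an assumed one-dimensional cost f(x) = (x - 3)^2 with an arbitrary learning rate of 0.1, records every iterate, and reports the first iteration where the loss falls below 0.1. Feeding the recorded history to Matplotlib would produce the kind of descent curve described above.

```python
def cost(x: float) -> float:
    """Toy convex cost with its minimum at x = 3 (assumed for illustration)."""
    return (x - 3.0) ** 2


def grad(x: float) -> float:
    """Derivative of the toy cost: d/dx (x - 3)^2 = 2(x - 3)."""
    return 2.0 * (x - 3.0)


def gradient_descent(x0: float, learning_rate: float,
                     steps: int) -> list[tuple[int, float, float]]:
    """Return (iteration, x, loss) for the starting point and every update."""
    history = [(0, x0, cost(x0))]
    x = x0
    for i in range(1, steps + 1):
        x -= learning_rate * grad(x)  # step against the gradient
        history.append((i, x, cost(x)))
    return history


if __name__ == "__main__":
    hist = gradient_descent(x0=0.0, learning_rate=0.1, steps=25)
    for i, x, loss in hist[:6]:
        print(f"iter {i:2d}: x = {x:.4f}, loss = {loss:.4f}")
    first_below = next(i for i, _, loss in hist if loss < 0.1)
    print(f"loss first drops below 0.1 at iteration {first_below}")
```

With this learning rate each update shrinks the distance to the minimum by a factor of 0.8, so the loss falls by a factor of 0.64 per step; a rate above 1.0 would make the iterates diverge instead.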

Kimi K2 Thinking API Compatibility

A major draw of the Kimi K2 Thinking API is its drop-in compatibility with OpenAI's interface specifications, easing migrations for existing applications. Developers can use OpenAI's Python or Node.js SDKs unchanged, simply updating the base_url to Moonshot's endpoint "https://api.moonshot.ai/v1" and swapping the api_key for a Kimi credential.

This compatibility means if your service relies on GPT endpoints, transitioning to Kimi K2 Thinking requires minimal code tweaks—no refactoring SDK calls or handling new schemas. For instance, a chat completion request:

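```python
import os

from openai import OpenAI

# Point the standard OpenAI client at Moonshot's OpenAI-compatible endpoint.
client = OpenAI(
    api_key=os.environ["KIMI_API_KEY"],  # your Kimi key, read from the environment
    base_url="https://api.moonshot.ai/v1",
)

response = client.chat.completions.create(
    model="kimi-k2-thinking",
    messages=[{"role": "user", "content": "Explain quantum entanglement."}],
)
print(response.choices[0].message.content)
```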

The response mirrors OpenAI's format while carrying Kimi K2 Thinking's enhanced reasoning. This frictionless setup accelerates adoption and makes A/B testing or hybrid deployments straightforward. For agentic flows, tool calling follows the same conventions: tools are declared with JSON Schema parameters in the tools field, and the model's requests come back as tool_calls.
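Because the interface follows OpenAI's function-calling format, a tool is declared as a JSON Schema inside the `tools` parameter, and each entry in the model's `tool_calls` carries its `arguments` as a JSON string. The sketch below declares a hypothetical `get_weather` tool and a small dispatcher that maps each call to a local Python function. The tool name, its parameters, and the stubbed result are all illustrative assumptions.

```python
import json
from typing import Callable

# Tool declaration in the OpenAI-style `tools` format (hypothetical tool).
GET_WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string", "description": "City name"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["city"],
        },
    },
}


def get_weather(city: str, unit: str = "celsius") -> dict:
    """Stubbed implementation; a real tool would call a weather service."""
    return {"city": city, "unit": unit, "temperature": 21}


REGISTRY: dict[str, Callable[..., dict]] = {"get_weather": get_weather}


def dispatch(name: str, arguments: str) -> str:
    """Run one tool call and return its result as a JSON string for a
    "tool" message. Unknown tools yield an error payload instead of
    raising, so the model can recover on its next turn."""
    func = REGISTRY.get(name)
    if func is None:
        return json.dumps({"error": f"unknown tool: {name}"})
    kwargs = json.loads(arguments or "{}")
    return json.dumps(func(**kwargs))
```

In use, you would pass `tools=[GET_WEATHER_TOOL]` to `client.chat.completions.create(...)`, call `dispatch(call.function.name, call.function.arguments)` for each entry in `response.choices[0].message.tool_calls`, and send each result back as a `{"role": "tool", "tool_call_id": call.id, "content": ...}` message.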

Obtaining and Using the Kimi K2 Thinking API Key

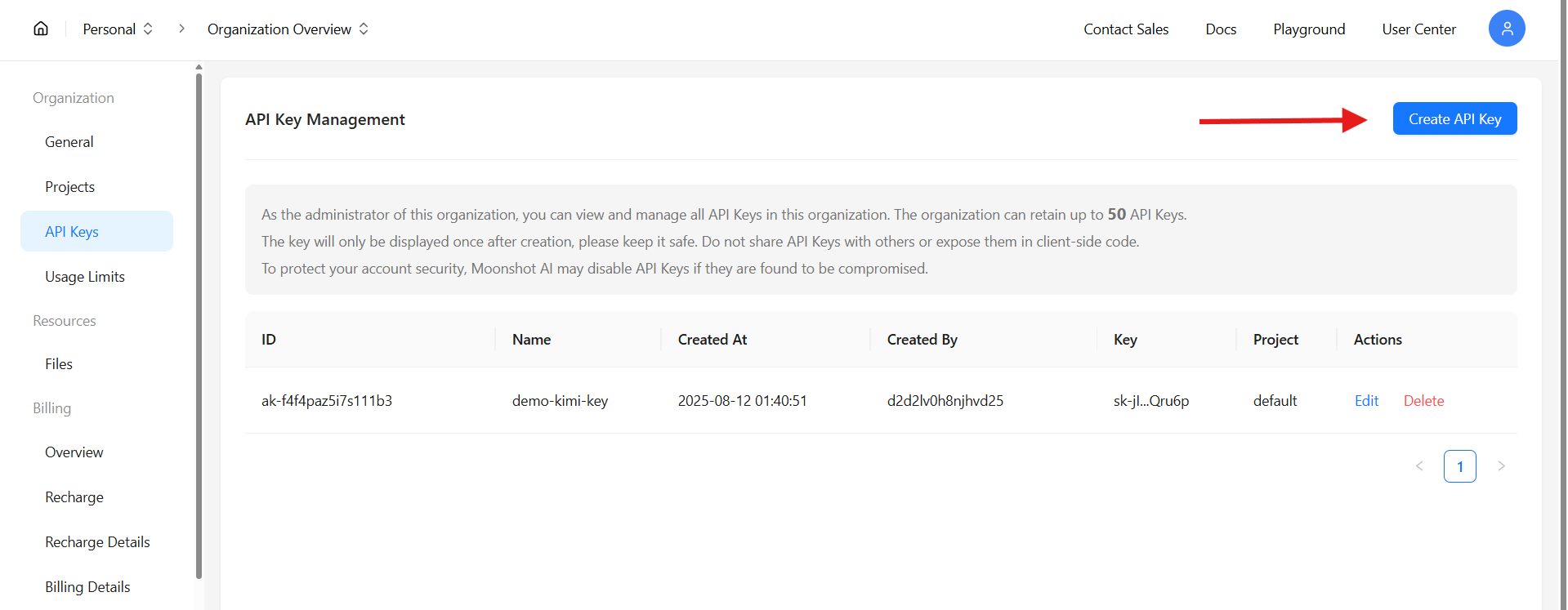

Securing access to the Kimi K2 Thinking API starts at platform.moonshot.ai. Sign up or log in, then navigate to the API console under "API Keys." Click "Create New Key," select permissions (e.g., chat completions, tool calls), and generate—copy the key immediately, as it's shown once.

With the key, configure your SDK as above. Test with a simple curl:

```bash
curl https://api.moonshot.ai/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $KIMI_API_KEY" \
  -d '{
    "model": "kimi-k2-thinking",
    "messages": [{"role": "user", "content": "Hello, world!"}]
  }'
```

This verifies connectivity and returns a completion from Kimi K2 Thinking. Rate limits apply (for example, 100 requests per minute on the free tier) and rise with paid plans. The console documentation also covers endpoints for fine-tuning and batch jobs.
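When you hit a rate limit (HTTP 429), retrying with exponential backoff is the usual remedy. The OpenAI Python SDK already retries some failures on its own (its client accepts a `max_retries` option), but if you want explicit control, a generic helper like the sketch below works. The retry count, base delay, cap, and the `is_retryable` predicate are assumptions to tune for your plan's limits.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    fn: Callable[[], T],
    is_retryable: Callable[[Exception], bool],
    max_retries: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn(), retrying retryable errors with exponential backoff and jitter.

    The delay before retry n (0-based) is min(max_delay, base_delay * 2**n),
    scaled by a random factor in [0.5, 1.0] so concurrent clients do not
    retry in lockstep. Non-retryable errors and the final failure re-raise.
    """
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except Exception as exc:
            if attempt == max_retries or not is_retryable(exc):
                raise
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay * random.uniform(0.5, 1.0))
    raise AssertionError("unreachable")
```

With the OpenAI SDK, a reasonable predicate is `lambda e: isinstance(e, openai.RateLimitError)`, wrapping the request as `retry_with_backoff(lambda: client.chat.completions.create(...), is_retryable=...)`. The injectable `sleep` parameter also makes the helper easy to test without real waits.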

Testing the Kimi K2 Thinking API with Apidog

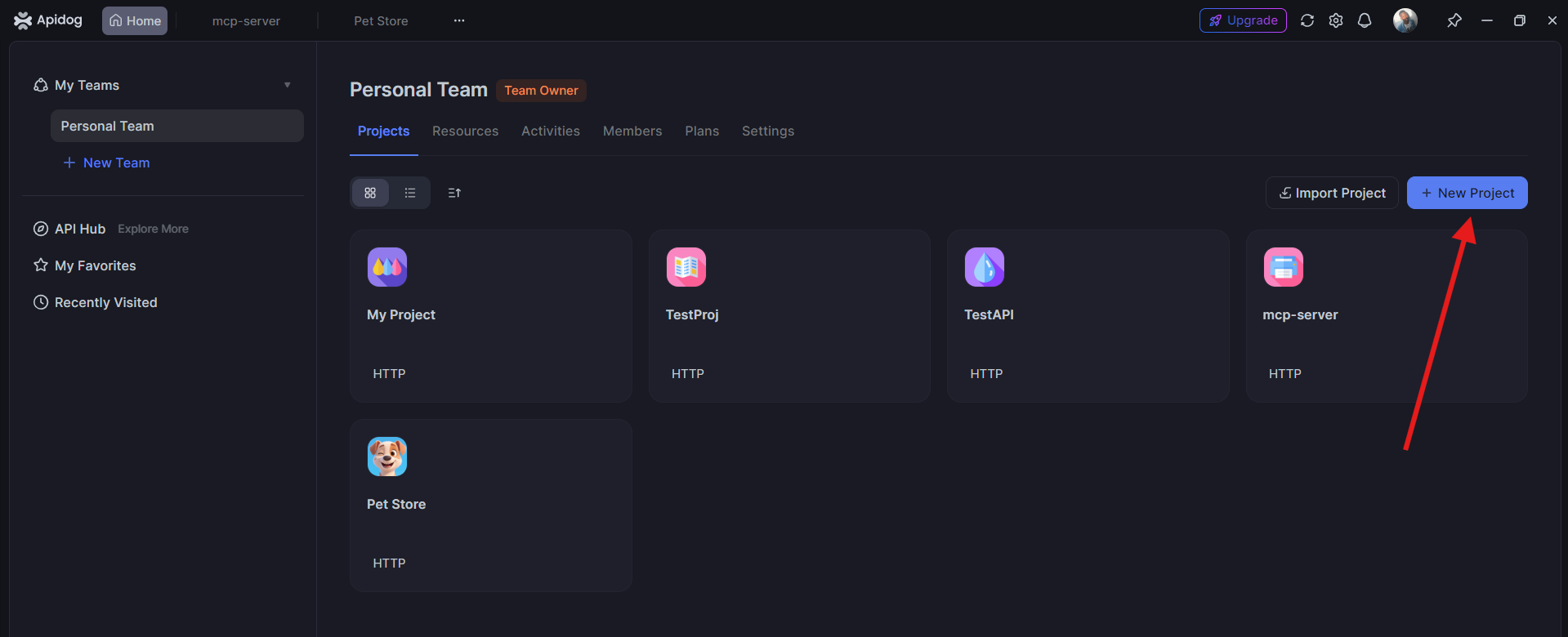

Before deploying, you can test your Kimi K2 Thinking API requests in Apidog, a collaborative API testing platform.

1. Open Apidog and create a new project. Add the Kimi API endpoint: POST https://api.moonshot.ai/v1/chat/completions

2. Include your API Key under Authorization > Bearer Token.

3. Add the request body:

```json
{
  "model": "kimi-k2-thinking",
  "messages": [
    {"role": "user", "content": "Write a poem about AI reasoning."}
  ]
}
```

4. Click Send — you’ll get a live response from the Kimi K2 Thinking model.

Testing in Apidog helps verify your configurations and ensure your API key and endpoint are functioning correctly before integration.

Conclusion: Embrace the Kimi K2 Thinking API

The Kimi K2 Thinking API stands as a beacon of open-source innovation, combining architectural efficiency, leading benchmark results, and practical utility at a fraction of competitors' costs. From logical puzzles to long coding sessions, its agentic depth, delivered through an OpenAI-compatible interface, lets developers build smarter systems with little migration effort. Obtain your key, test with Apidog, and start scaling: Kimi K2 Thinking is ready to think alongside you.