Have you ever wanted to experiment with cutting-edge AI models but felt deterred by complex pricing structures or expensive API costs? You're not alone. Many developers, researchers, and AI enthusiasts face this exact challenge when trying to access powerful language models like MiniMax M2 without incurring substantial expenses.

Here’s the fantastic news: you can absolutely get your hands on MiniMax’s technology without spending a single dime. And honestly, that’s a game-changer. The world of AI is moving at light speed, and the gatekeepers used to be the big tech giants with hefty price tags. Now, platforms like OpenRouter are throwing the doors wide open, giving developers and curious minds like you and me a chance to play in the big leagues.

What Exactly is MiniMax?

In a nutshell, MiniMax is an AI powerhouse. They’re not a one-trick pony; they’ve developed a whole family of models. You might have heard of their star player, abab-5.5, which is their flagship large language model. It’s designed to be a powerhouse for complex reasoning, coding, and creative tasks. But they also have specialized models for speech-to-text, text-to-speech, and even visual recognition.

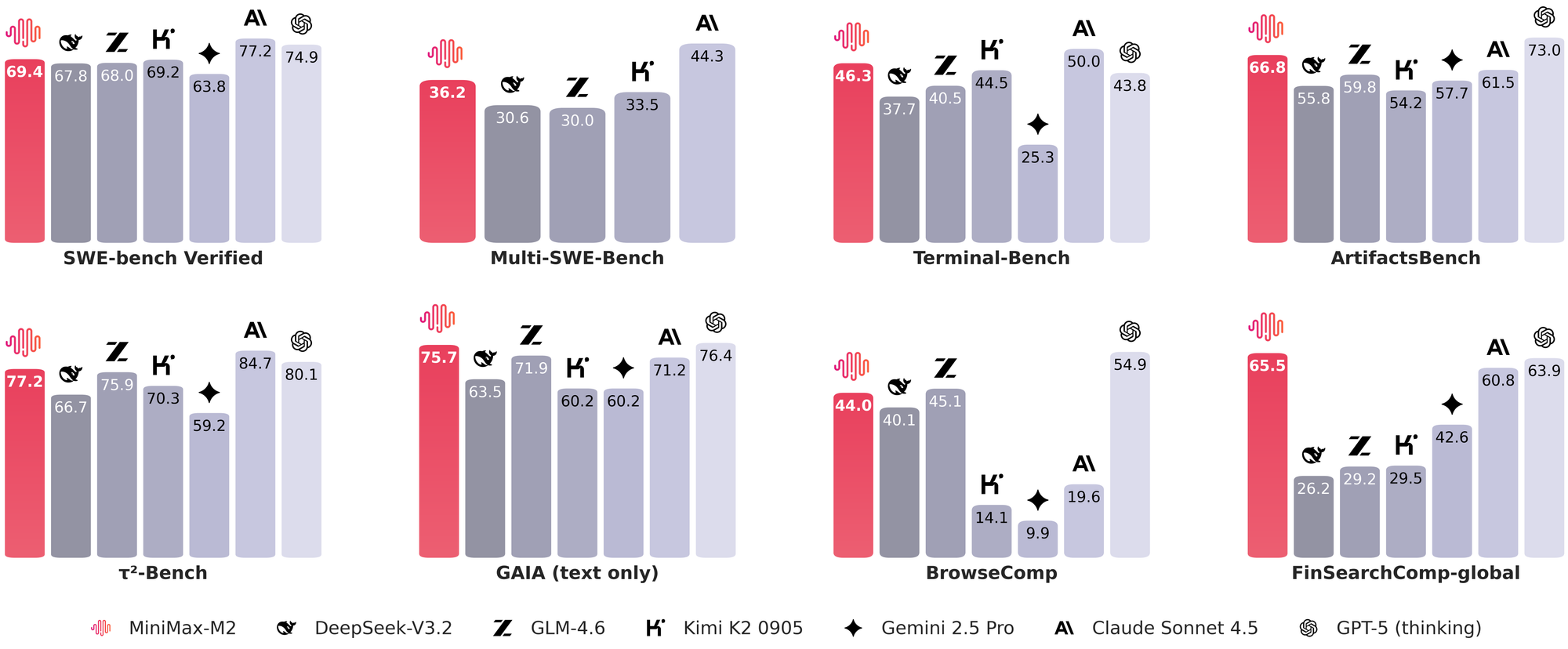

What makes them stand out? For starters, their models consistently rank highly on public benchmarks. When developers and researchers compare model performance on tasks like reasoning, coding proficiency (like the HumanEval benchmark), and general knowledge (like MMLU), MiniMax’s models are often right up there with the best from OpenAI and Anthropic. This isn't just academic; it means you're getting a genuinely capable and robust AI to work with.

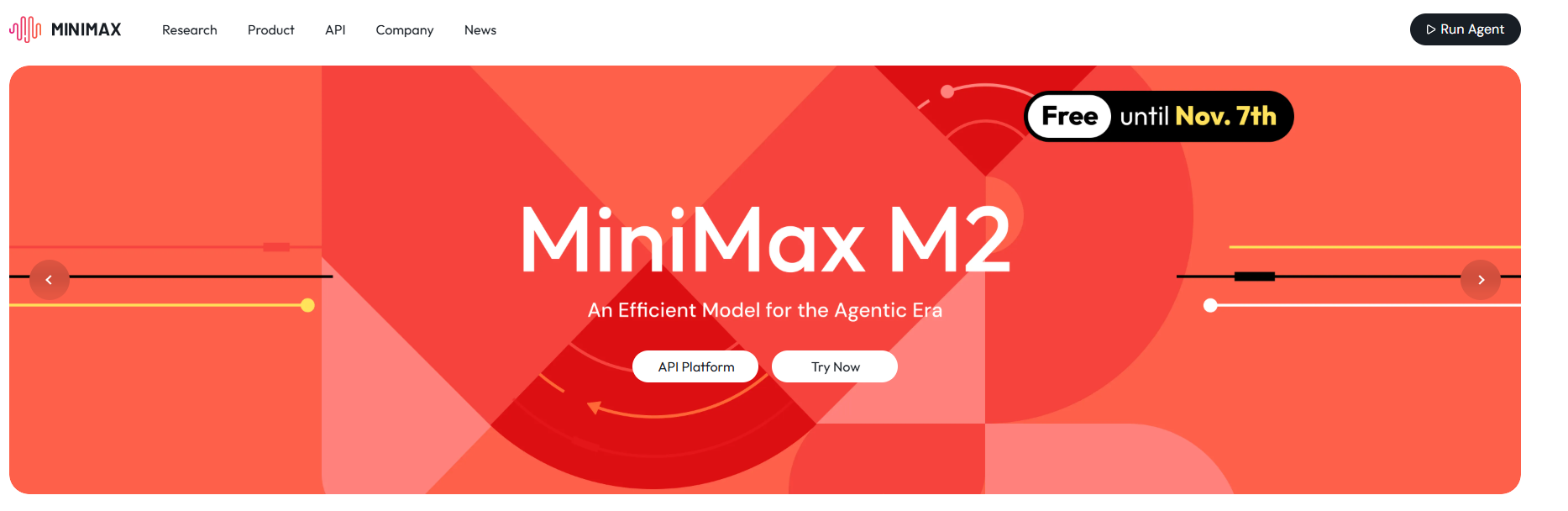

MiniMax M2: What Makes It Special

MiniMax M2 represents a significant advancement in language model capabilities, offering impressive performance across various tasks while maintaining competitive efficiency. Understanding its strengths helps you determine optimal use cases for free access strategies.

Core Capabilities: MiniMax M2 excels in several key areas that make it particularly valuable for developers and researchers. Its strong performance in conversation tasks makes it excellent for chatbot development and customer service applications. Additionally, its reasoning capabilities provide value for analytical tasks, while its text generation quality suits content creation and creative writing applications.

Performance Characteristics: When evaluating MiniMax M2 against other models, several factors become important. Response speed affects user experience in real-time applications. Output quality impacts the usefulness of generated content. Context window size determines the complexity of tasks it can handle. These characteristics directly influence which free access strategies will be most effective for your specific needs.

Competitive Advantages: Understanding what sets MiniMax M2 apart from alternatives helps you make informed decisions about which model to prioritize in your free access efforts. Its balance of capability and efficiency often makes it an attractive option for resource-conscious projects.

The Golden Key: Introducing OpenRouter

Okay, so MiniMax is awesome. But how do we, the general public, access it for free? The answer is OpenRouter.

Think of OpenRouter as a universal remote control for AI models. Instead of signing up for a dozen different AI service websites, each with its own pricing, billing, and API quirks, OpenRouter gives you one single platform to access a huge variety of models including several from MiniMax.

Here’s the beautiful part: OpenRouter has a generous free tier. When you create an account, they give you a small amount of credit to start experimenting. This credit is more than enough to test the waters with MiniMax’s models, build a small project, or just satisfy your curiosity. It’s the perfect sandbox.

Your Step-by-Step Blueprint: Getting Started with MiniMax on OpenRouter

Let’s roll up our sleeves and get you set up. This process is surprisingly straightforward.

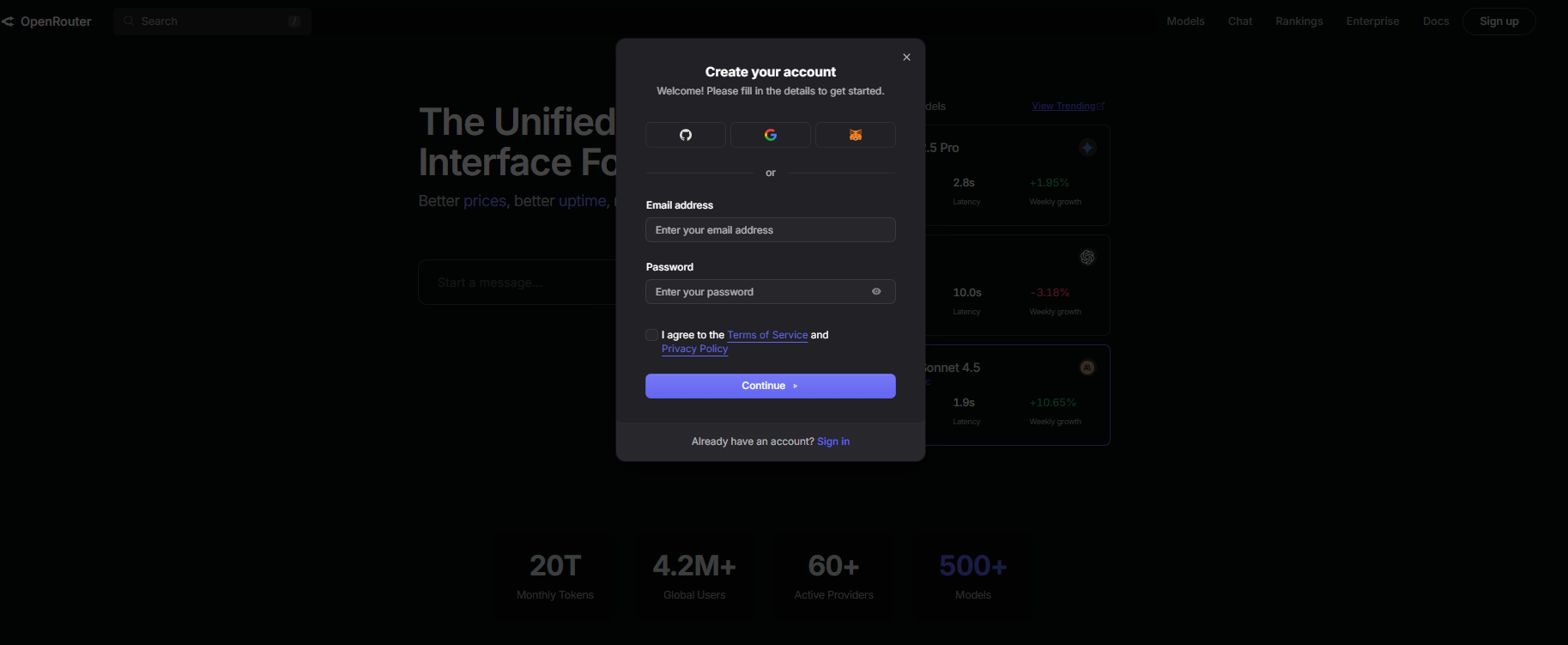

Step 1: Create Your OpenRouter Account

Head over to OpenRouter.ai and sign up. You can use your Google, GitHub, or Discord account for a super quick process. Once you’re in, take a moment to familiarize yourself with the dashboard. You’ll see a list of available models, your credit balance, and your usage statistics.

Step 2: Locate Your API Key

This is your passport. In the OpenRouter dashboard, navigate to the "Keys" section. You’ll see a long, cryptic string of characters. That’s your API key. Treat it like a password: don’t share it publicly or commit it to a public GitHub repository. You’ll need this for every request you make.
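One common way to keep the key out of your source code is to read it from an environment variable. The sketch below assumes a variable named `OPENROUTER_API_KEY`; that name is a convention chosen for this example, not something OpenRouter requires.

```python
import os


def load_api_key(env_var: str = "OPENROUTER_API_KEY") -> str:
    """Return the API key stored in the given environment variable.

    The variable name is an assumption for this example; use whatever
    name fits your own setup.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {env_var} environment variable before making requests."
        )
    return key
```

Failing early with a clear message is usually easier to debug than an authentication error that comes back from the API later.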

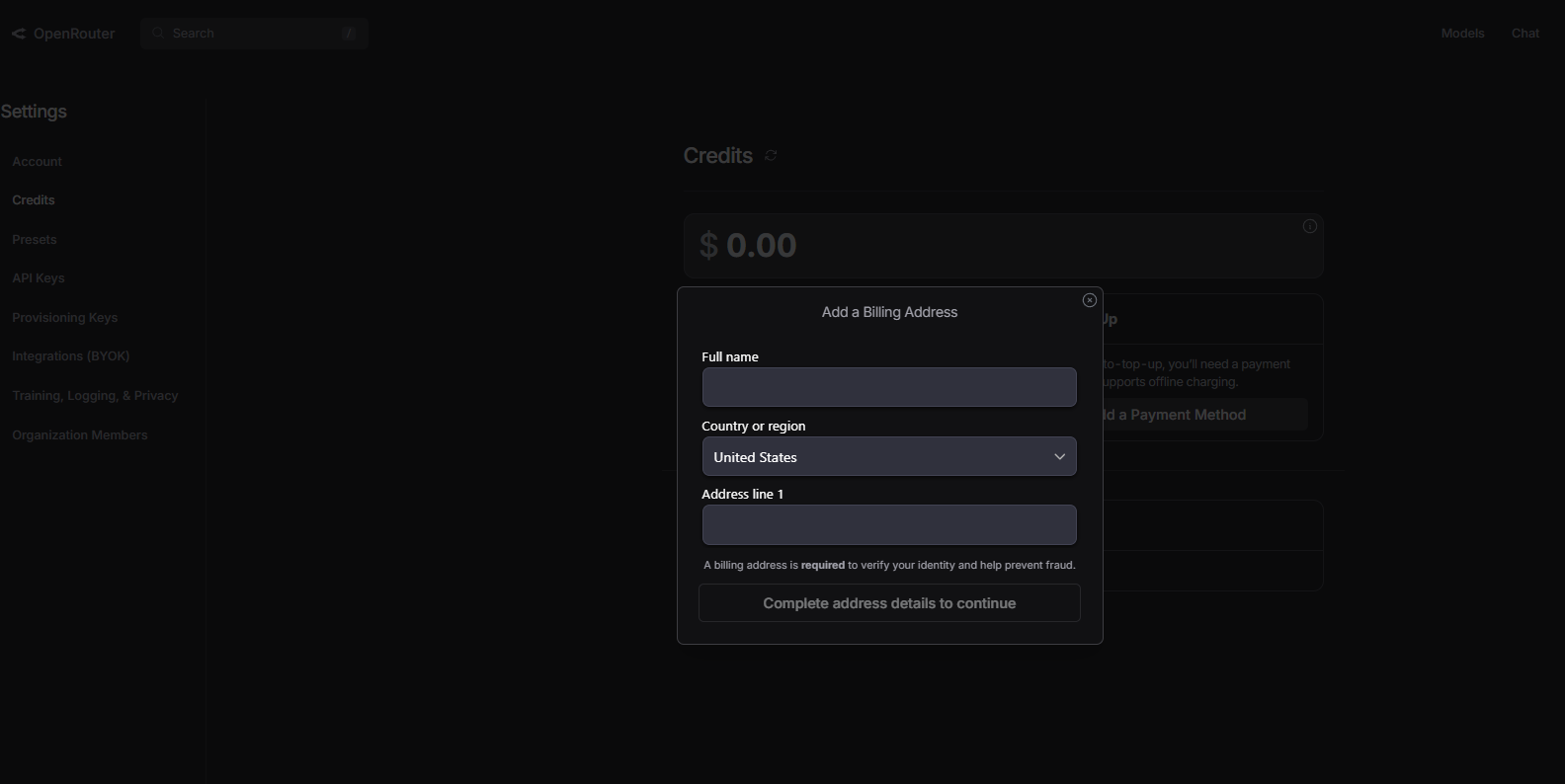

Step 3: Fund Your Account (A Little)

Remember the free credits? You might start with a few cents. For more substantial testing, you can add a small amount of money, say $5 or $10. The cost per request for AI models is incredibly low, so this small deposit will last you a surprisingly long time for experimentation. This is what makes it essentially "free" for most hobbyist uses.

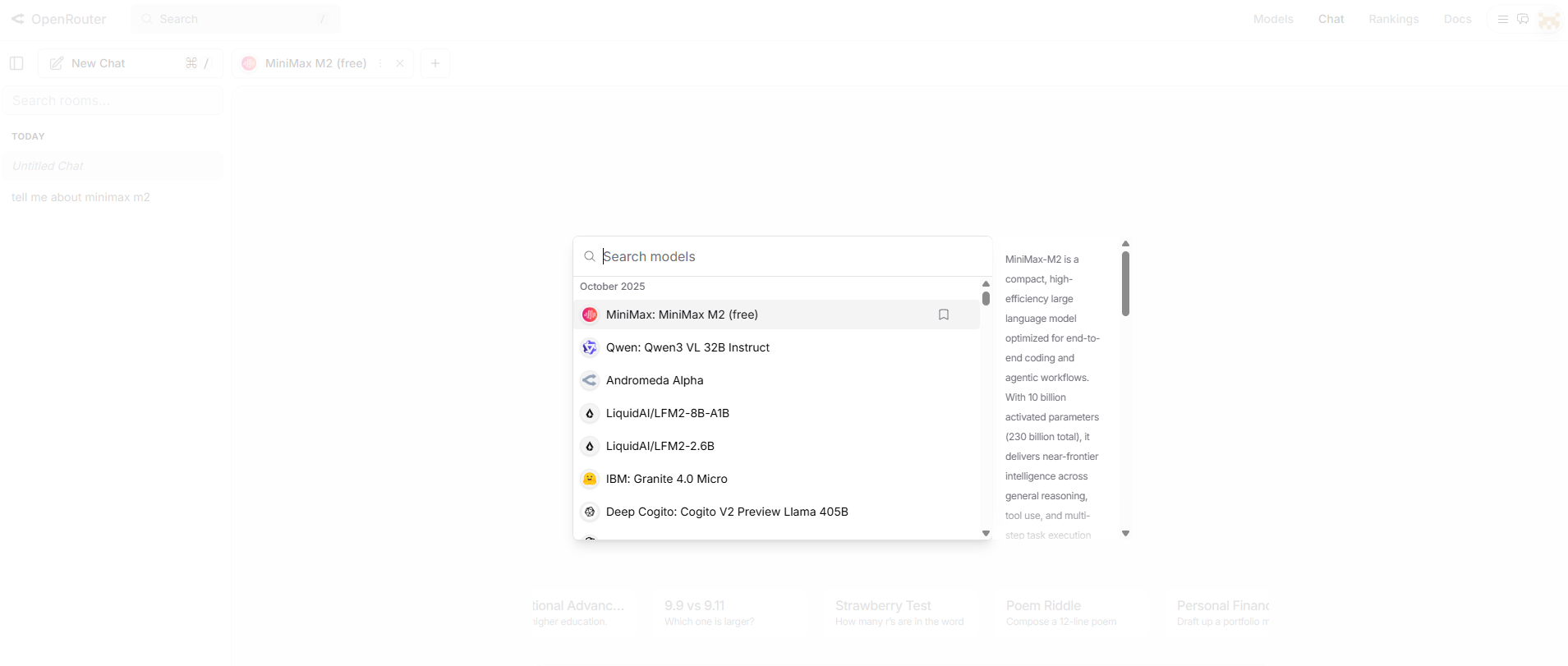

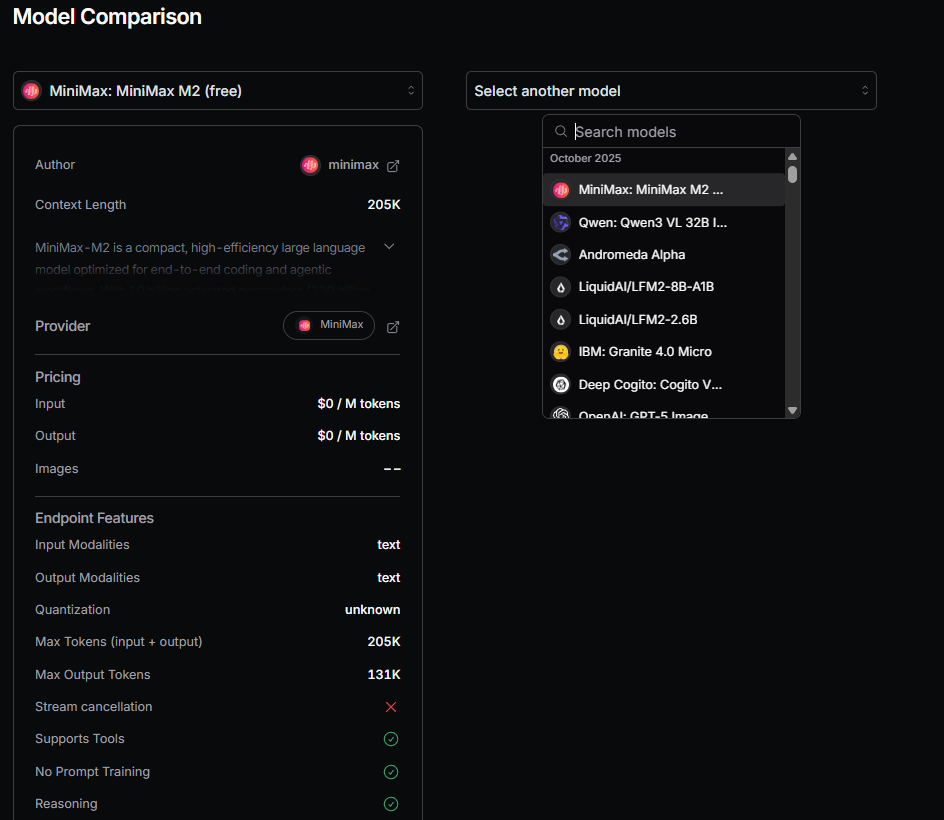

Step 4: Choose Your MiniMax Model

Now, go to the "Models" page on OpenRouter and search for "MiniMax." You’ll see a list. For text generation, you'll primarily be looking at:

- MiniMax Text Models: Like mini-max/text-01, great for general chat and instruction-following.

Take note of the exact model name, as you'll need to specify it in your API calls.
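With the key and model name in hand, a first request might look roughly like the sketch below. It uses only the Python standard library and OpenRouter's OpenAI-compatible chat completions endpoint. The function names and the `max_tokens` default are choices made for this example, and the model ID is passed in as a parameter, so copy the exact ID from the Models page rather than guessing it.

```python
import json
import urllib.request

# OpenRouter's OpenAI-compatible chat completions endpoint.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(api_key: str, model: str, prompt: str,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    payload = {
        "model": model,  # exact ID copied from the Models page
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request,
                      timeout: float = 60.0) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `send_chat_request(build_chat_request(key, model_id, "Hello!"))` would then return the reply as a string. Keeping the build step separate from the send step makes the request easy to inspect without spending any credit.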

Why This Combo is a Powerhouse for Developers

Now that you know the basics, let's talk about why this is such a big deal.

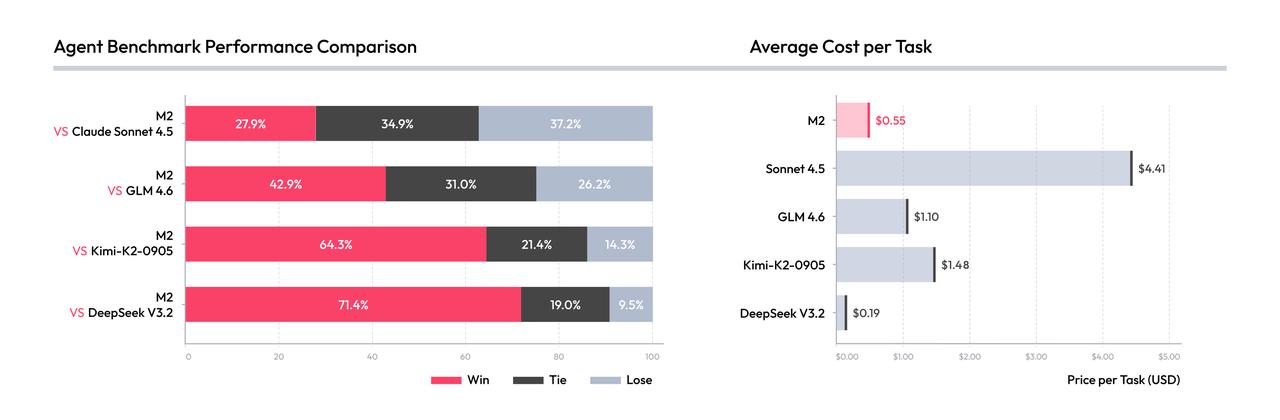

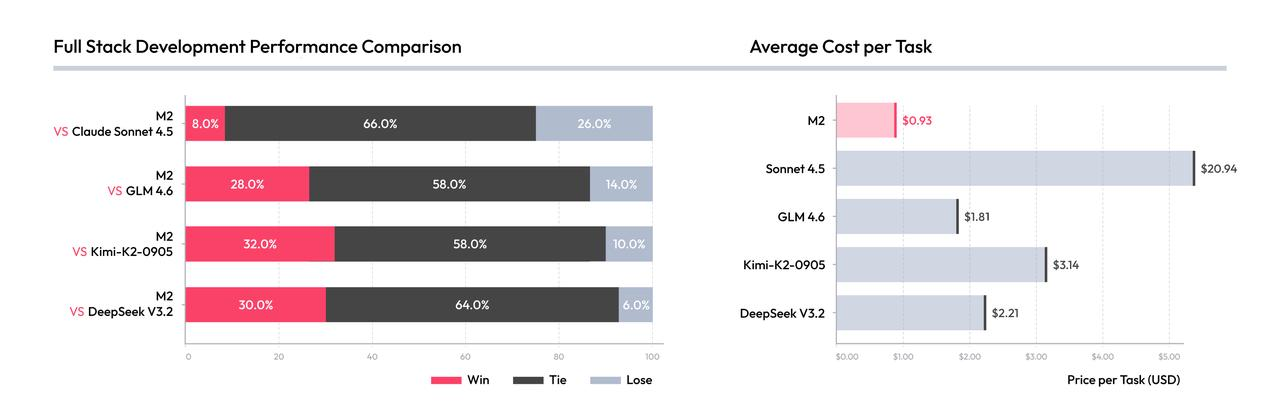

1. Cost-Effective Experimentation: As I mentioned, you're not locked into a single vendor's pricing. OpenRouter allows you to compare the cost and performance of MiniMax against dozens of other models. This is crucial for bootstrapped startups and indie developers.

2. Standardization is a Superpower: The OpenRouter API uses a format that is very similar to OpenAI's API. If you know how to work with one, you know how to work with all of them on OpenRouter, including MiniMax. This drastically reduces the learning curve.

3. Access to Cutting-Edge Models: MiniMax is constantly improving. By using them through OpenRouter, you get front-row seats to their latest and greatest models without having to manage a separate relationship with the company.

4. The Power of Choice: Maybe MiniMax's abab-5.5 is perfect for your coding assistant, but you find another model better for creative writing. With OpenRouter, you can switch between them in your code by changing just one line: the model name. This flexibility is hard to match.

Making Sense of the Landscape: E-Benchmarks and How to Use the OpenRouter API

You’ll often hear tech-savvy folks talk about using e-benchmarks and OpenRouter data to make informed decisions. Let's demystify that.

What are "E-Benchmarks"? The "E" likely stands for "evaluation." These are standardized tests like exams for AI models that measure their capabilities in areas like:

- Reasoning: Can the model solve logic puzzles?

- Knowledge: How well does it answer questions about history, science, etc.?

- Coding: Can it write functional code from a description?

- Safety: How well does it avoid generating harmful or biased content?

OpenRouter doesn't just provide access; it's a treasure trove of real-world performance data. On their models page, you can often see how each model, including MiniMax's, has performed on these public benchmarks. So, when you're wondering, "Is MiniMax good at coding compared to model X?", you can check the benchmark scores right there on OpenRouter.

This transforms your decision from a guessing game into a data-driven choice. You're not just picking a model by its name; you're picking it based on its proven performance on the specific tasks that matter to you.

Comparing MiniMax M2 with Other Models via OpenRouter

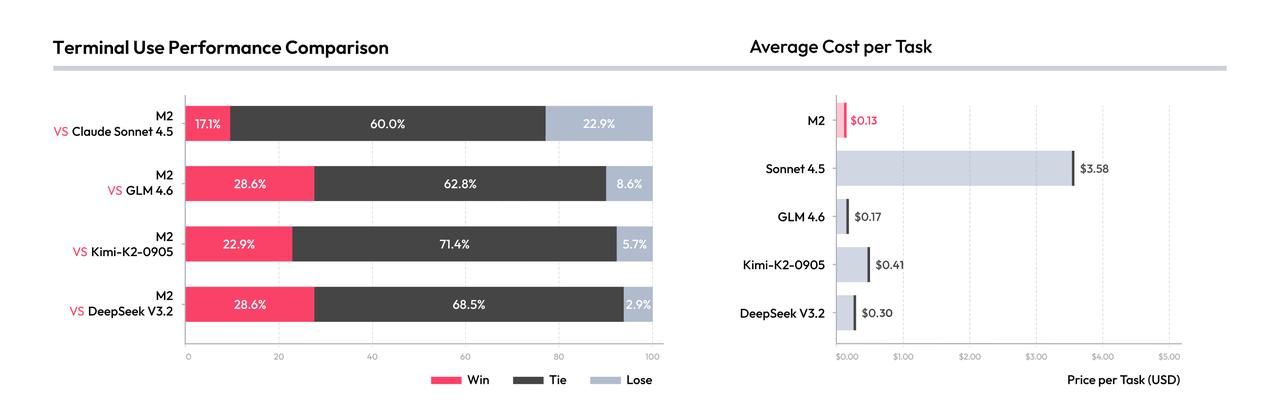

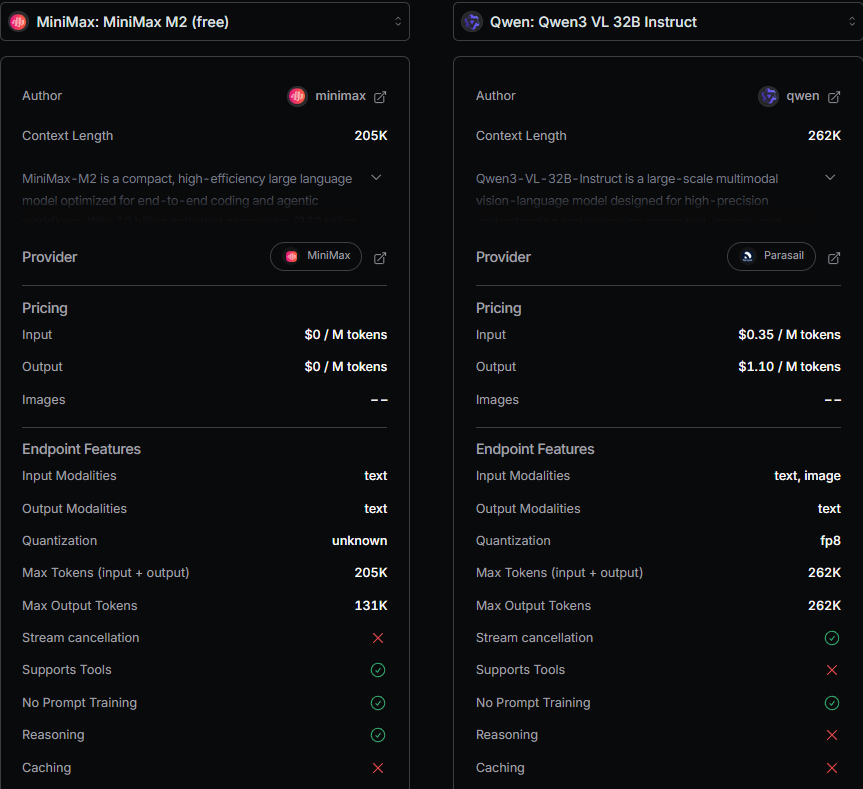

One of OpenRouter’s biggest strengths is model swapping. Want to see how MiniMax M2 stacks up against Claude 3.5 Sonnet or Mistral Large?

Keep everything else identical (same prompt, temperature, and max_tokens) and compare outputs side-by-side in Apidog.

This is invaluable for e-benchmarks. You might discover that:

- MiniMax M2 excels at structured reasoning

- Claude is better at nuanced dialogue

- Mistral is fastest for short responses

Use these insights to pick the right model for your app without overpaying.
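If you would rather script the comparison, the idea of holding everything constant except the model can be sketched as below. The function name and default values are assumptions for this example, and any model IDs you pass in should be copied from OpenRouter's Models page.

```python
import copy


def comparison_payloads(prompt, models, temperature=0.2, max_tokens=300):
    """Return one request payload per model, identical except for "model"."""
    shared = {
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    # deepcopy so that editing one payload later cannot affect the others
    return [{"model": model, **copy.deepcopy(shared)} for model in models]
```

Each payload can then be sent to the same chat completions endpoint, so any difference in the outputs comes from the model rather than from the settings.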

Cost Considerations: Is MiniMax M2 Really “Free”?

Technically, yes, at first. OpenRouter’s $1 free credit covers:

- ~5,000–7,000 MiniMax M2 requests (depending on output length)

- Enough for serious prototyping, testing, and benchmarking

After that, MiniMax M2 costs $0.15 per million input tokens and $0.60 per million output tokens (as of October 2025). That’s significantly cheaper than GPT-4 Turbo ($10/$30 per million tokens).

So even beyond the free tier, MiniMax M2 is a cost-efficient choice for production apps, especially if your users are in Asia.
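To see how far a budget stretches for your own workload, you can plug the per-token prices quoted above into a quick calculation. The token counts in the usage note are assumptions for illustration; measure your own prompts for a realistic estimate.

```python
INPUT_PRICE_PER_MILLION = 0.15   # USD per million input tokens, as quoted above
OUTPUT_PRICE_PER_MILLION = 0.60  # USD per million output tokens, as quoted above


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of a single request with the given token counts."""
    return (input_tokens * INPUT_PRICE_PER_MILLION
            + output_tokens * OUTPUT_PRICE_PER_MILLION) / 1_000_000


def requests_per_budget(budget_usd: float, input_tokens: int,
                        output_tokens: int) -> int:
    """How many such requests fit into the budget (rounded down)."""
    return int(budget_usd / request_cost(input_tokens, output_tokens))
```

For example, a request with 500 input tokens and 200 output tokens costs about $0.000195 at these prices, so `requests_per_budget(1.0, 500, 200)` comes out at a little over 5,100 requests per dollar. Longer outputs shrink that number quickly because output tokens cost four times as much as input tokens.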

Advanced Techniques and Pro Tips

Custom Integration Patterns

Building Robust Applications: When building applications that rely on free AI access, implement patterns that ensure reliability and user satisfaction.

Error Handling Strategies:

    class UsageMonitor:
        """Minimal placeholder so the example runs; replace with your own usage tracking."""

        def __init__(self):
            self.calls = 0


    class RobustAIIntegration:
        def __init__(self, api_client, fallback_models, circuit_breaker):
            self.api_client = api_client
            self.fallback_models = fallback_models
            self.circuit_breaker = circuit_breaker
            self.usage_monitor = UsageMonitor()

        def generate_with_fallback(self, prompt, preferred_model):
            # Try the preferred model first, unless the circuit breaker is open.
            try:
                if self.circuit_breaker.can_request():
                    return self.api_client.generate(prompt, preferred_model)
                raise RuntimeError("Circuit breaker open")
            except Exception:
                # Preferred model unavailable: try each fallback model in order.
                for model in self.fallback_models:
                    try:
                        return self.api_client.generate(prompt, model)
                    except Exception:
                        continue
            # Reached only if the preferred model and every fallback failed.
            raise RuntimeError("All models failed")
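The class above expects a `circuit_breaker` object with a `can_request()` method but does not show one. A minimal sketch of such an object might look like this. The thresholds, the injectable `clock`, and the `record_success()` and `record_failure()` methods are assumptions added for this example, so the calling code would need to invoke them after each request.

```python
import time


class CircuitBreaker:
    """Opens after repeated failures, then allows requests again
    once a cooldown period has passed."""

    def __init__(self, max_failures=3, cooldown_seconds=30.0,
                 clock=time.monotonic):
        self.max_failures = max_failures
        self.cooldown_seconds = cooldown_seconds
        self.clock = clock  # injectable so the class is easy to test
        self.failures = 0
        self.opened_at = None  # None means the breaker is closed

    def can_request(self) -> bool:
        if self.opened_at is None:
            return True
        if self.clock() - self.opened_at >= self.cooldown_seconds:
            # Cooldown elapsed: close the breaker and start counting afresh.
            self.opened_at = None
            self.failures = 0
            return True
        return False

    def record_success(self) -> None:
        self.failures = 0

    def record_failure(self) -> None:
        self.failures += 1
        if self.failures >= self.max_failures:
            self.opened_at = self.clock()
```

The point of the pattern is to stop hammering a model that is already failing, which also avoids burning free credit or rate limit on requests that are unlikely to succeed.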

Caching and Response Optimization: Implement intelligent caching to reduce redundant API calls and improve response times.

Cache Strategy Implementation:

- Cache successful responses for common queries

- Implement cache invalidation strategies

- Use different cache TTL values based on content type

- Monitor cache hit rates and optimize accordingly
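The cache strategy above can be sketched as a small in-memory cache with a per-entry TTL and hit-rate counters. This is a simplified illustration, not a production cache: the class name and its methods are assumptions for this example, and nothing here persists across restarts.

```python
import time


class TTLCache:
    """In-memory cache with per-entry expiry and hit/miss counters."""

    def __init__(self, clock=time.monotonic):
        self._store = {}  # key -> (expires_at, value)
        self._clock = clock
        self.hits = 0
        self.misses = 0

    def get(self, key):
        entry = self._store.get(key)
        if entry is not None and entry[0] > self._clock():
            self.hits += 1
            return entry[1]
        self._store.pop(key, None)  # drop the entry if it has expired
        self.misses += 1
        return None

    def set(self, key, value, ttl_seconds):
        # Different content types can simply pass different TTLs here.
        self._store[key] = (self._clock() + ttl_seconds, value)

    def invalidate(self, key):
        self._store.pop(key, None)

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

A natural cache key is the tuple `(model, prompt)`, so the same prompt sent to two different models is cached separately.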

Performance Optimization

Request Batching: Combine multiple related requests into single API calls when possible to reduce overhead and improve efficiency.

Parallel Processing: Use parallel processing for independent requests while respecting rate limits and maintaining system stability.

Resource Pooling: Implement resource pooling for high-throughput applications to reduce connection overhead and improve performance.
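For the parallel processing point, one simple approach is a thread pool with a fixed number of workers. In the sketch below, `call_model` is a hypothetical function you supply that sends one prompt and returns the reply. Capping `max_workers` limits concurrency, which is only a rough stand-in for a real rate limiter.

```python
from concurrent.futures import ThreadPoolExecutor


def run_in_parallel(prompts, call_model, max_workers=4):
    """Run call_model(prompt) for each prompt, at most max_workers at a time.

    Results come back in the same order as the prompts. A failed call is
    returned as the exception object instead of aborting the whole batch.
    """
    def safe_call(prompt):
        try:
            return call_model(prompt)
        except Exception as exc:
            return exc

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(safe_call, prompts))
```

Threads suit this workload because each request spends most of its time waiting on the network rather than using the CPU.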

Security and Compliance

API Security Best Practices: Ensure your AI integrations follow security best practices to protect both your users and your access privileges.

Security Implementation:

- Validate all inputs before sending to AI APIs

- Implement rate limiting and abuse prevention

- Use secure authentication methods

- Monitor for unusual usage patterns

- Maintain audit logs of API usage
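As one illustration of the first item, input validation can be as simple as stripping control characters and enforcing a length cap before a prompt leaves your application. The limit below is an arbitrary value chosen for this example, not an OpenRouter requirement.

```python
MAX_PROMPT_CHARS = 8000  # arbitrary cap for this example; tune for your app


def validate_prompt(prompt: str) -> str:
    """Return a cleaned prompt, or raise if it is unusable."""
    if not isinstance(prompt, str):
        raise TypeError("prompt must be a string")
    # Keep printable characters plus newlines and tabs; drop other control chars.
    cleaned = "".join(
        ch for ch in prompt if ch.isprintable() or ch in "\n\t"
    ).strip()
    if not cleaned:
        raise ValueError("prompt is empty")
    if len(cleaned) > MAX_PROMPT_CHARS:
        raise ValueError(f"prompt exceeds {MAX_PROMPT_CHARS} characters")
    return cleaned
```

Rejecting oversized prompts up front also protects your credit balance, since input tokens are billed whether or not the response is useful.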

Data Privacy Considerations: When working with AI APIs, consider data privacy and compliance requirements:

- Understand data retention policies

- Implement data anonymization where appropriate

- Comply with relevant privacy regulations

- Secure transmission of sensitive information
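A small example of anonymization is masking email addresses before a prompt is sent. The pattern below is deliberately simple and will not catch every valid address, so treat it as a starting point rather than a complete privacy filter.

```python
import re

# Simplified pattern: good enough for typical addresses, not RFC-complete.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text: str, placeholder: str = "[EMAIL]") -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_PATTERN.sub(placeholder, text)
```

The same approach extends to phone numbers, account IDs, or any other identifier your application handles, as long as you can describe it with a pattern.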

Practical Use-Cases: Where the Free Variant Shines

Now that you’re set up and know how to call MiniMax M2, let’s look at real use-cases where the free variant is powerful.

1. Content creation & blogging

- Use it to generate article outlines, sub-headers, draft paragraphs

- Example: “Write a conversational blog intro about using MiniMax M2 for free”

- Since the free tier costs little or nothing, you can iterate rapidly and pick the best outputs

2. Code generation & debugging

Given MiniMax M2’s strength in coding tasks (as indicated by benchmarks for code generation & tool-use), you can use it to:

- Generate small utility scripts

- Refactor code snippets

- Suggest fixes

- Example prompt: “Explain this Python function and propose a simpler version”

3. Prototyping and experimentation

- Use the free variant for proofs of concept and MVPs

- Chain: free variant → higher tier model (via OpenRouter) for final polish

- Example: draft chat bot responses using MiniMax M2 free, refine using premium model
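The chaining idea can be sketched as a two-step function: draft with the cheaper model, then hand the draft to a stronger one for polish. Here `call_model(model, prompt)` is a hypothetical helper you would implement against the OpenRouter API, and the wording of the refinement prompt is only an example.

```python
def draft_then_refine(prompt, call_model, draft_model, refine_model):
    """Generate a draft with one model, then refine it with another.

    call_model(model, prompt) is assumed to return the model's reply text.
    """
    draft = call_model(draft_model, prompt)
    refine_prompt = (
        "Improve the following draft. Keep its meaning and fix any errors.\n\n"
        f"Original request: {prompt}\n\nDraft:\n{draft}"
    )
    return call_model(refine_model, refine_prompt)
```

Because the model caller is passed in, the same function works with any pair of models and is easy to test with a stub.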

4. Educational & learning tasks

- Use it for summarising concepts, generating quiz questions, explaining code

- Since token costs are minimal in the free variant, you can experiment without concern

5. Testing APIs and integrations

- Use Apidog to send prompts, evaluate response speed & quality

- Use the free variant to test integration logic, then scale up if performance demands it

The Role of Apidog in Your Workflow

Apidog has already come up as the place to compare model outputs side by side, and this is where it truly shines. Instead of manually writing and testing scripts, you can use Apidog to:

- Build a Request Collection: Save all your different MiniMax API calls (e.g., one for creative writing, one for code generation) as separate requests.

- Use Environments: Store your API key in an environment variable so you don’t have to paste it into every single request. This is more secure and efficient.

- Automate Testing: Write test cases to automatically check if your API calls to the MiniMax model are returning the expected format and quality of responses.

- Generate Code: Apidog can automatically generate the Python, JavaScript, or other code snippets for your perfectly crafted API request, saving you tons of time.

Integrating a professional API tool into your process isn't just a nice-to-have; it's a force multiplier that lets you focus on building your product rather than fighting with curl commands.

Advanced: Automating MiniMax M2 Workflows with Apidog

Once you’re comfortable, take it further:

- Chain requests: Use MiniMax M2’s output as input to another model.

- Export results: Save responses to CSV for offline analysis.

- Mock servers: Simulate MiniMax M2 responses during frontend dev.

- CI/CD integration: Run e-benchmarks as part of your deployment pipeline.

Apidog turns one-off experiments into repeatable, scalable AI workflows.
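If you also collect responses in your own scripts, the CSV export step is easy to reproduce with Python's standard library. The column names below are assumptions for this example; adjust them to whatever you record.

```python
import csv

FIELDNAMES = ["model", "prompt", "response", "latency_seconds"]


def export_results(rows, path):
    """Write a list of result dicts to a CSV file with a header row.

    Each row must contain exactly the keys listed in FIELDNAMES.
    """
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=FIELDNAMES)
        writer.writeheader()
        writer.writerows(rows)
```

The `csv` module takes care of quoting, so responses that contain commas or line breaks still load cleanly in a spreadsheet.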

Best Practices: Get the Most Out of the Free Tier

Free usage isn’t unlimited or unrestricted, so you’ll want to adopt good practices to stretch it.

Prompt economy

- Keep prompts focused and concise

- Avoid overly verbose system messages

- Explicitly define output format when needed (so fewer tokens wasted)

Token management

- Monitor input + output tokens (some tasks can balloon output tokens)

- Cache repeated responses (for identical prompts)

- Use a smaller max_tokens if you only need short output
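To monitor tokens in practice, you can read the `usage` object that OpenAI-compatible chat completion responses include. The sketch below assumes the response has already been parsed into a Python dict and falls back to zeros if the field is missing.

```python
def summarize_usage(response: dict) -> dict:
    """Extract token counts from a parsed chat completion response."""
    usage = response.get("usage") or {}
    prompt_tokens = usage.get("prompt_tokens", 0)
    completion_tokens = usage.get("completion_tokens", 0)
    return {
        "prompt_tokens": prompt_tokens,
        "completion_tokens": completion_tokens,
        "total_tokens": usage.get(
            "total_tokens", prompt_tokens + completion_tokens
        ),
    }
```

Logging these numbers per request makes it easy to spot the prompts whose output balloons unexpectedly.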

Quality vs cost trade-off

- If quality is good enough for your use-case (drafts, prototypes), stay on free

- For customer-facing, high-quality tasks, consider premium models or chaining

Use Apidog for monitoring

- Set up tests in Apidog that track latency, token usage, error rate

- Review regularly

- Identify if the free variant starts to degrade (e.g., slower responses, truncated output)

Scaling thoughtfully

- When you hit free tier ceilings, plan your next step:

  - Move up to a paid variant of MiniMax M2, if available

  - Or switch to another model on OpenRouter that offers a different cost/performance balance

- Maintain a modular architecture so you can swap models without a large refactor

Conclusion: Your AI Journey is Just Beginning

So, there you have it. You now know the secret handshake. Using MiniMax for free isn't just a pipe dream; it's a fully accessible reality thanks to platforms like OpenRouter. You've learned how to get an API key, how to structure a basic request, and even how to think about model performance using benchmarks.

The barrier to entry for building with world-class AI has never been lower. You have the power to create chatbots, writing assistants, coding helpers, and anything else you can imagine, all powered by the sophisticated technology from MiniMax.

The next step is to take action. Go to OpenRouter, create that account, grab your key, and fire up your code editor (or Apidog!). Send that first request. Tweak the prompt. See what happens. The most amazing discoveries often happen not by reading guides, but by diving in and experimenting for yourself. Happy building!