Developers constantly look for tools that streamline coding tasks, and OpenAI's GPT-5-Codex-Mini is one of the newer options. The model is a compact version of GPT-5-Codex, designed for cost-efficient performance in agentic coding workflows. Engineers use it for code generation, debugging, and repository management while giving up relatively little capability compared with the full model. Accessing it through the API, however, takes a few specific steps, which this guide walks through in detail.

Moving from traditional coding assistants to AI-driven agents is a significant shift, and small adjustments in setup can make a large difference in productivity. Understanding the model's design is therefore a good place to start.

Understanding GPT-5-Codex-Mini: Capabilities and Architecture

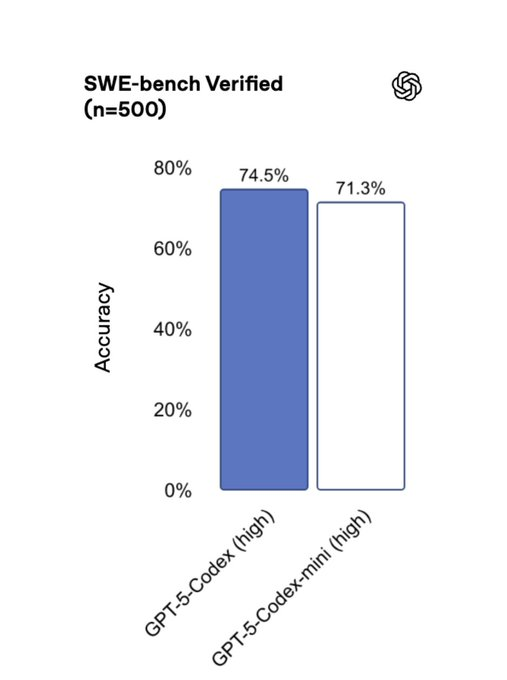

OpenAI engineers designed GPT-5-Codex-Mini as a lighter variant of GPT-5-Codex, prioritizing efficiency for well-defined coding scenarios. The model excels in generating front-end code, debugging large repositories, and performing refactors across languages like Python, JavaScript, and Go. Unlike its larger counterpart, GPT-5-Codex-Mini consumes fewer resources, enabling approximately four times more usage per credit allocation.

Technically, the architecture builds on transformer-based neural networks, optimized for token efficiency. It processes inputs with dynamic reasoning time—quick for simple queries but extended for intricate tasks involving multiple iterations. For instance, when refactoring a 3,000-line codebase, the model iterates through implementations, runs tests, and validates dependencies autonomously.

GPT-5-Codex-Mini integrates seamlessly with tools like the Codex CLI and IDE extensions. Developers activate it in VS Code or Cursor to preview changes locally, leveraging selected code snippets for context-aware suggestions. This approach reduces prompt lengths and accelerates response times, allowing teams to maintain workflow continuity.

However, users must note the slight capability tradeoff: while it handles most agentic tasks effectively, complex multi-language integrations may require switching to the full GPT-5-Codex. Consequently, evaluating your project's needs beforehand ensures optimal model selection.

Prerequisites for Accessing the GPT-5-Codex-Mini API

Accessing the GPT-5-Codex-Mini API demands careful preparation. First, developers verify their OpenAI account status. OpenAI structures access through usage tiers, ranging from 1 to 5, with models like GPT-5-Codex-Mini available across all levels but subject to organization verification for advanced features.

You start by creating an OpenAI account if you lack one. Navigate to the OpenAI platform and sign up using a valid email. Next, complete the organization verification process, which involves submitting business details or proof of identity. This step unlocks access to verified models, including streaming capabilities in the Responses API.

Additionally, secure an API key from the dashboard. Log in, access the API keys section, and generate a new key. Store it securely, as it authenticates all requests. Without this key, endpoints remain inaccessible.

Furthermore, ensure your development environment supports necessary libraries. Install the OpenAI Python SDK via pip: pip install openai. This package facilitates API calls, handling authentication and payload formatting automatically.

Hardware requirements are modest because the model runs on OpenAI's servers, so a stable internet connection matters more than local compute. For high-volume or batch workloads, running your client code on a cloud host keeps long jobs from depending on a single workstation.

Signing Up for OpenAI Plans and Enabling API Access

OpenAI offers tiered plans that influence GPT-5-Codex-Mini availability. ChatGPT Plus subscribers can use the model in Codex clients, which suits occasional coding sessions. For extensive use, upgrade to Pro, Business, or Enterprise, which offer higher rate limits (OpenAI recently raised Codex limits by 50% on several plans) and, on some tiers, priority processing.

ChatGPT plans cover usage in the Codex CLI and IDE extension when you sign in with your ChatGPT account, but they do not include API usage. API calls are billed separately on the developer platform: sign in there, add a payment method or purchase prepaid credits, and each request is charged by the tokens it consumes.

Moreover, follow OpenAI's announcements and developer community forum. API support for GPT-5-Codex-Mini is recent, and availability details may change. When developer previews or early-access programs open, joining them gets you access sooner and lets your feedback shape future iterations.

Once activated, test connectivity. Send a simple request to the /models endpoint to list available models, confirming GPT-5-Codex-Mini appears. This verification step prevents integration issues later.
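The following is a minimal sketch of that check using the official Python SDK (version 1.0 or later). It assumes OPENAI_API_KEY is set in the environment. The helper names are hypothetical. The membership test is kept separate from the network call so it can run without credentials.

```python
def model_available(model_ids, target="gpt-5-codex-mini"):
    """Return True if `target` appears in an iterable of model ID strings."""
    return target in set(model_ids)


def list_model_ids():
    """Fetch the model IDs visible to your API key (requires network access)."""
    from openai import OpenAI  # imported lazily so model_available() works offline

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    # Iterating the returned page auto-paginates through /v1/models.
    return [model.id for model in client.models.list()]


# Usage (performs a real API call):
# if not model_available(list_model_ids()):
#     raise SystemExit("gpt-5-codex-mini is not enabled for this key")
```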

Consequently, aligning your plan with project scale maximizes value, as higher tiers offer expanded credits and reduced latency.

Generating and Managing Your OpenAI API Key

Security forms the cornerstone of API interactions, so generate your key responsibly. From the OpenAI dashboard, select "API keys" and click "Create new secret key." Name it descriptively, such as "GPT-5-Codex-Mini-Project," for easy management.

Store the key in environment variables rather than hardcoding it. In Python, use os.environ['OPENAI_API_KEY'] to load it dynamically. This practice mitigates exposure risks.
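The official SDK already falls back to OPENAI_API_KEY when no key is passed. A small loader like the one below gives you one place to customize the error message or swap in a secrets manager later. It is a sketch, and `load_api_key` is a hypothetical name.

```python
import os


def load_api_key(env=None, var_name="OPENAI_API_KEY"):
    """Read the API key from the environment and fail fast if it is missing."""
    env = os.environ if env is None else env
    key = env.get(var_name, "").strip()  # ignore stray whitespace from shell exports
    if not key:
        raise RuntimeError(
            f"{var_name} is not set; export it in your shell or load it from a secrets manager"
        )
    return key


# Usage:
# from openai import OpenAI
# client = OpenAI(api_key=load_api_key())
```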

Additionally, rotate keys periodically. Create the replacement key first, deploy it to your applications, and only then revoke the old one. A revoked key stops working, so this order avoids downtime while keeping you compliant with security protocols.

Furthermore, implement rate limiting in your application and monitor usage on the dashboard. Requests beyond your tier's limits are rejected with HTTP 429 errors until the limit window resets, so throttling on the client side avoids wasted calls.
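A client-side limiter can be as simple as a sliding window over recent request timestamps. The sketch below uses a hypothetical class name. The numbers in the usage comment are placeholders, so take the real requests-per-minute value from your dashboard and stay somewhat below it.

```python
import time
from collections import deque


class RequestRateLimiter:
    """Sliding-window limiter: allow at most `max_requests` per `period` seconds."""

    def __init__(self, max_requests, period=60.0, clock=time.monotonic):
        self.max_requests = max_requests
        self.period = period
        self.clock = clock  # injectable so the limiter can be tested without waiting
        self._sent = deque()  # timestamps of requests inside the current window

    def _evict(self, now):
        # Forget requests that are at least `period` seconds old.
        while self._sent and now - self._sent[0] >= self.period:
            self._sent.popleft()

    def try_acquire(self):
        """Reserve a slot and return True, or return False if the window is full."""
        now = self.clock()
        self._evict(now)
        if len(self._sent) < self.max_requests:
            self._sent.append(now)
            return True
        return False

    def wait_time(self):
        """Seconds until a slot frees up (0.0 if one is available now)."""
        now = self.clock()
        self._evict(now)
        if len(self._sent) < self.max_requests:
            return 0.0
        return self.period - (now - self._sent[0])


# Usage, before each API call:
# limiter = RequestRateLimiter(max_requests=50, period=60.0)
# while not limiter.try_acquire():
#     time.sleep(limiter.wait_time())
```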

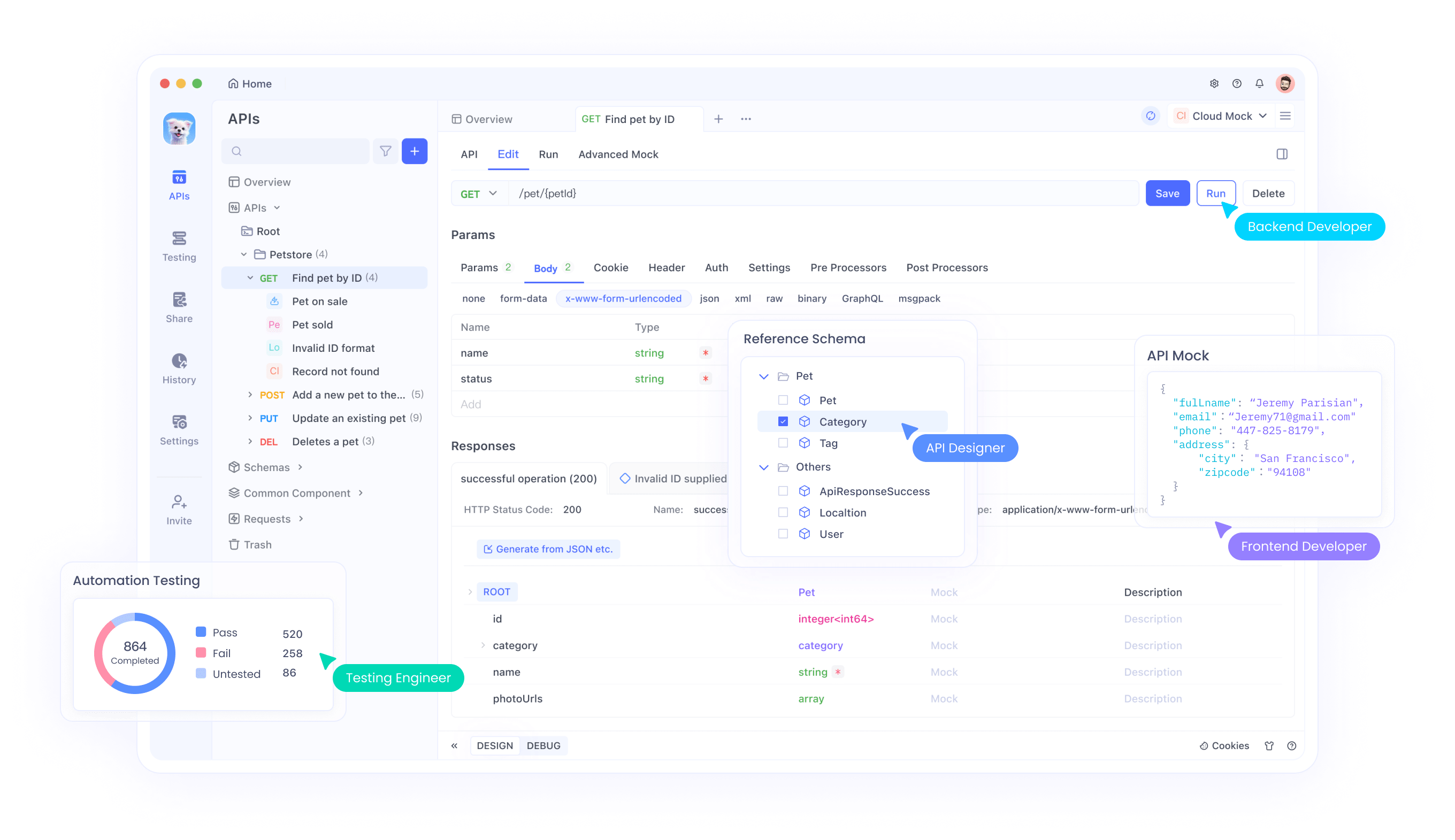

Apidog can also simplify key management. Its secure vault stores credentials and automates authentication in test environments, so developers focus on coding rather than administrative tasks.

Integrating GPT-5-Codex-Mini into Your Development Workflow

Integration begins with selecting the appropriate endpoint. OpenAI documents the GPT-5-Codex models for the Responses API rather than Chat Completions. Send requests to the Responses endpoint and specify "gpt-5-codex-mini" as the model parameter. If you need another endpoint, check the model's documentation page first.

For example, a short Python script using the current (1.x) OpenAI SDK generates code like this:

```python
import os

from openai import OpenAI

# Load the key from the environment instead of hardcoding it.
client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])

response = client.responses.create(
    model="gpt-5-codex-mini",
    # `instructions` plays the role of the system message.
    instructions="You are a helpful coding assistant.",
    input="Write a Python function to sort a list using quicksort.",
)

# output_text joins all text output from the response into one string.
print(response.output_text)
```

The printed result is the generated quicksort function.

Moreover, incorporate context from files. Attach code snippets or diagrams to prompts, enhancing accuracy for debugging scenarios.
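One way to add file context is to wrap each snippet in labeled delimiters so the model can tell files apart. The sketch below handles text snippets only; images and diagrams are sent as separate input parts and are not covered here. The function names and the `<file>` tag convention are hypothetical choices, not an OpenAI requirement.

```python
from pathlib import Path


def read_snippet(path, start=1, end=None):
    """Return lines start..end (1-indexed, inclusive) of a text file."""
    lines = Path(path).read_text(encoding="utf-8").splitlines()
    return "\n".join(lines[start - 1:end])


def build_debug_prompt(task, snippets):
    """Combine a task with labeled code snippets into one user message.

    `snippets` maps a file path (used only as a label) to the relevant code.
    """
    parts = [task.strip(), ""]
    for path, code in snippets.items():
        parts.append(f'<file path="{path}">')
        parts.append(code.rstrip("\n"))
        parts.append("</file>")
    return "\n".join(parts)


# Usage:
# prompt = build_debug_prompt(
#     "Why does parse() fail on empty input?",
#     {"app/parser.py": read_snippet("app/parser.py", 10, 40)},
# )
```

Sending only the relevant line range, rather than whole files, also keeps prompts short.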

However, optimize prompts for brevity. GPT-5-Codex-Mini performs best with concise instructions, reducing token consumption.

Additionally, handle responses asynchronously for real-time applications. Use streaming mode to receive partial outputs, improving user experience in IDE integrations.
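With the Responses API, passing stream=True makes the SDK yield typed events. Incremental text arrives in events of type "response.output_text.delta". The sketch below uses hypothetical helper names. The event-handling part accepts any iterable, so it can be tested without a network connection.

```python
def collect_stream_text(events, on_delta=None):
    """Accumulate text from Responses API stream events.

    Only `response.output_text.delta` events carry incremental text; other
    event types (created, completed, tool calls, ...) are ignored here.
    """
    chunks = []
    for event in events:
        if getattr(event, "type", None) == "response.output_text.delta":
            chunks.append(event.delta)
            if on_delta is not None:
                on_delta(event.delta)  # e.g. push the fragment to an editor pane
    return "".join(chunks)


def stream_completion(prompt, model="gpt-5-codex-mini"):
    """Stream a response and print text as it arrives (requires network access)."""
    from openai import OpenAI

    client = OpenAI()
    events = client.responses.create(model=model, input=prompt, stream=True)
    return collect_stream_text(events, on_delta=lambda d: print(d, end="", flush=True))
```

For asyncio-based applications, the SDK's AsyncOpenAI client exposes the same call. You await it and consume the events with `async for`.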

Consequently, iterative testing refines integration, revealing performance nuances specific to your use case.

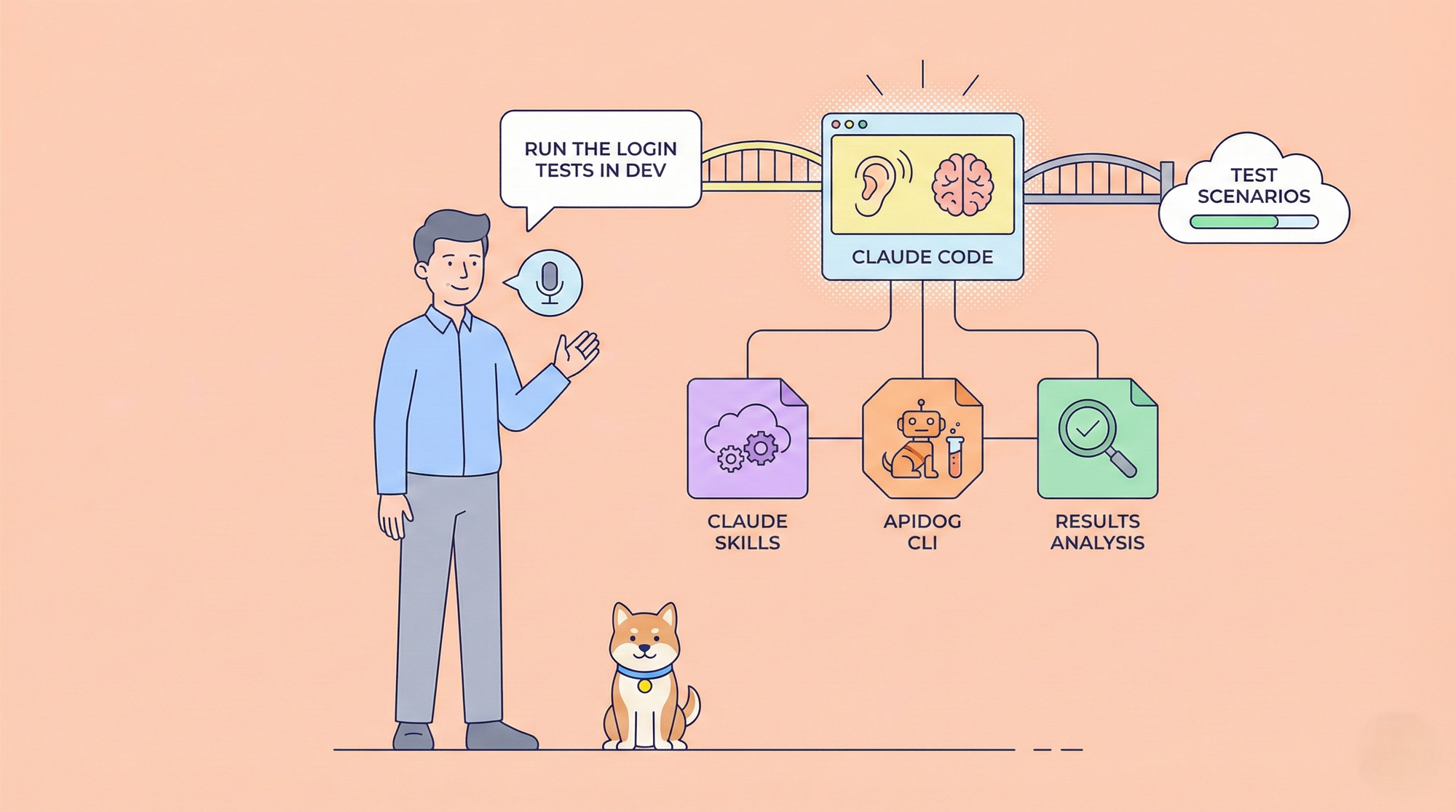

Using Apidog to Test and Optimize GPT-5-Codex-Mini API Calls

Apidog emerges as a powerful ally for API testing. Download it for free and import OpenAI's API specifications. Create collections for GPT-5-Codex-Mini endpoints, defining requests with variables for dynamic parameters.
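Whatever tool you use, a request to this endpoint is a POST to https://api.openai.com/v1/responses with a Bearer token header and a JSON body. The sketch below builds that same request with Python's standard library, without sending it. You can use it to compare against what your Apidog collection sends. The function name is hypothetical.

```python
import json
import os
import urllib.request

RESPONSES_URL = "https://api.openai.com/v1/responses"


def build_responses_request(prompt, model="gpt-5-codex-mini", api_key=None):
    """Build (but do not send) the raw HTTP request for the Responses endpoint."""
    api_key = api_key or os.environ.get("OPENAI_API_KEY", "")
    body = json.dumps({"model": model, "input": prompt}).encode("utf-8")
    return urllib.request.Request(
        RESPONSES_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Sending it (requires network access and a valid key):
# with urllib.request.urlopen(build_responses_request("Explain list comprehensions")) as resp:
#     data = json.load(resp)  # full response JSON
```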

Engineers can simulate scenarios with mock responses before spending credits. They can then send real batched calls to test rate-limit behavior, analyzing response times and error rates.
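Once you have per-request results from a batch run, a few lines of Python reduce them to the numbers that matter. The sketch assumes results are collected as (status_code, latency_seconds) pairs. That input format is an assumption for illustration, not an Apidog export format, and the function name is hypothetical.

```python
import statistics


def summarize_results(results):
    """Summarize (status_code, latency_seconds) pairs from a batch of test calls.

    Any status >= 400 counts as an error; 429 is also counted separately
    because it signals a rate limit rather than a bad request.
    """
    if not results:
        raise ValueError("no results to summarize")
    latencies = [latency for _, latency in results]
    errors = sum(1 for status, _ in results if status >= 400)
    rate_limited = sum(1 for status, _ in results if status == 429)
    return {
        "requests": len(results),
        "error_rate": errors / len(results),
        "rate_limited": rate_limited,
        "median_latency": statistics.median(latencies),
        "max_latency": max(latencies),
    }
```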

Furthermore, Apidog's collaboration features allow teams to share test suites, ensuring consistent integration across projects.

For optimization, use Apidog's analytics to identify bottlenecks. Monitor token usage and adjust prompts accordingly so your credits last longer.
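Each Responses API result carries a usage object with input_tokens and output_tokens fields. Keeping running totals in your own code makes it easy to spot which prompts to trim. The tally class below is a hypothetical helper, shown as a sketch.

```python
from dataclasses import dataclass


@dataclass
class UsageTally:
    """Running token totals across many Responses API calls."""

    calls: int = 0
    input_tokens: int = 0
    output_tokens: int = 0

    def add(self, usage):
        """Add one response's `usage` object (fields: input_tokens, output_tokens)."""
        self.calls += 1
        self.input_tokens += usage.input_tokens
        self.output_tokens += usage.output_tokens

    @property
    def average_input(self):
        """Mean prompt size in tokens; a rising value suggests prompts are bloating."""
        return self.input_tokens / self.calls if self.calls else 0.0


# Usage:
# tally = UsageTally()
# response = client.responses.create(model="gpt-5-codex-mini", input=prompt)
# tally.add(response.usage)
```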

Moreover, integrate Apidog with CI/CD pipelines. Automate tests for API changes, catching deprecations early.

Thus, Apidog transforms raw API access into a streamlined workflow, amplifying the benefits of GPT-5-Codex-Mini.

Best Practices for Prompt Engineering with GPT-5-Codex-Mini

Effective prompts drive better outputs. Structure them with clear roles: system-level instructions for guidelines and user messages for tasks. State style requirements explicitly, such as "Follow PEP 8," and include a short example when the output format matters.

Additionally, break complex tasks into steps. Instruct the model to reason sequentially, improving accuracy for refactors.
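The role split and step-by-step plan can be assembled programmatically. This sketch puts guidelines in the Responses API's `instructions` field and the task plus a numbered plan in the user input. The function name and the example rules are hypothetical.

```python
def build_refactor_request(task, steps, style_rules, model="gpt-5-codex-mini"):
    """Assemble keyword arguments for client.responses.create().

    Guidelines go in `instructions` (the system-level slot); the task and an
    explicit ordered plan go in the user message.
    """
    instructions = "You are a careful coding assistant.\n" + "\n".join(
        f"- {rule}" for rule in style_rules
    )
    plan = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    user_text = f"{task}\n\nWork through these steps in order:\n{plan}"
    return {
        "model": model,
        "instructions": instructions,
        "input": [{"role": "user", "content": user_text}],
    }


# Usage:
# response = client.responses.create(**build_refactor_request(
#     "Refactor utils.py into a package.",
#     ["List public functions", "Move each into its own module", "Update imports and run tests"],
#     ["Follow PEP 8", "Keep public names unchanged"],
# ))
```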

However, avoid overloading prompts. GPT-5-Codex-Mini thrives on focused inputs, so segment large requests.
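One simple way to segment a large file is to split it on line boundaries under a size budget, then send each chunk as its own request. The 8,000-character default below is an arbitrary assumption. It works out to roughly 2,000 tokens under the common four-characters-per-token rule of thumb for English text, and code can tokenize differently. The function name is hypothetical.

```python
def split_into_chunks(source, max_chars=8000):
    """Split source text into chunks of whole lines, each at most `max_chars`.

    Splitting on line boundaries keeps statements intact; a single line longer
    than `max_chars` becomes its own (oversized) chunk rather than being cut.
    """
    chunks, current, size = [], [], 0
    for line in source.splitlines(keepends=True):
        # Close the current chunk if adding this line would exceed the budget.
        if current and size + len(line) > max_chars:
            chunks.append("".join(current))
            current, size = [], 0
        current.append(line)
        size += len(line)
    if current:
        chunks.append("".join(current))
    return chunks
```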

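Furthermore, use tool integration. Tools such as web search or code execution are enabled through the request's tools parameter rather than through prompt text. Support varies by model, so confirm which tools GPT-5-Codex-Mini accepts before relying on them. You can also define your own function tools that your application executes.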
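Custom function tools are declared in the request's tools list. In the Responses API format, name, description, and JSON Schema parameters sit at the top level of each tool object. The `run_tests` tool below is hypothetical. Your application, not the model, would actually run the tests.

```python
def run_tests_tool():
    """Schema for a hypothetical `run_tests` function tool (Responses API format)."""
    return {
        "type": "function",
        "name": "run_tests",
        "description": "Run the project's test suite and return the summary output.",
        "parameters": {
            "type": "object",
            "properties": {
                "path": {
                    "type": "string",
                    "description": "Test file or directory to run, relative to the repo root.",
                }
            },
            "required": ["path"],
            "additionalProperties": False,
        },
    }


# Usage (requires network access):
# response = client.responses.create(
#     model="gpt-5-codex-mini",
#     input="Fix the failing test in tests/test_parser.py",
#     tools=[run_tests_tool()],
# )
```

When the model decides to call the tool, the response output contains a function_call item with a call_id and JSON-encoded arguments. Your code runs the tests and returns the result in a function_call_output item on the next request.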

Consequently, regular experimentation refines techniques, adapting to the model's strengths.

Handling Rate Limits and Scaling Usage

OpenAI enforces rate limits to ensure fair access. Plus users enjoy baseline quotas, while Pro tiers offer priority queues.

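Monitor usage through the rate-limit headers returned with each response, such as x-ratelimit-remaining-requests and x-ratelimit-remaining-tokens. Retry rejected requests with exponential backoff so that repeated 429 errors do not turn into a flood of retries.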
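The following is a minimal sketch of reading the remaining-requests header and retrying with exponential backoff plus full jitter. The helper names are hypothetical. In real use you would pass `openai.RateLimitError` as `retry_on`. Raw headers are available through `client.responses.with_raw_response.create(...)`. The SDK also retries some failures on its own, configurable with `OpenAI(max_retries=...)`.

```python
import random
import time


def remaining_requests(headers):
    """Read x-ratelimit-remaining-requests from a response's headers, if present."""
    value = headers.get("x-ratelimit-remaining-requests")
    return int(value) if value is not None else None


def with_backoff(call, retry_on, max_retries=5, base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Call `call()` and retry on `retry_on` exceptions with exponential backoff.

    The upper bound of the wait doubles after each failure (1s, 2s, 4s, ...,
    capped at max_delay); the actual wait is drawn uniformly below that bound
    (full jitter), so parallel clients do not retry in lockstep.
    """
    for attempt in range(max_retries + 1):
        try:
            return call()
        except retry_on:
            if attempt == max_retries:
                raise  # out of retries: surface the last error
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))
```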

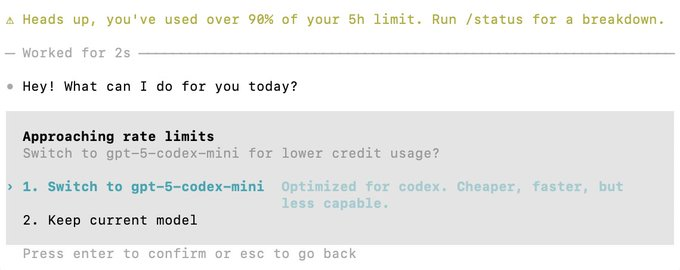

Moreover, switch to GPT-5-Codex-Mini when nearing limits, as it extends sessions without interruptions.
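In code that calls the API directly, the same idea becomes a small model selector driven by how much of your budget is used. The 0.9 threshold and the function name are assumptions for illustration. Track `used` and `limit` in whatever unit matters to you, such as requests, tokens, or dollars.

```python
FULL_MODEL = "gpt-5-codex"
MINI_MODEL = "gpt-5-codex-mini"


def pick_model(used, limit, switch_at=0.9):
    """Return the model to use given how much of a usage budget is consumed.

    Past `switch_at` of the budget, fall back to the cheaper mini model so
    work can continue instead of stopping at the limit.
    """
    if limit <= 0:
        raise ValueError("limit must be positive")
    return MINI_MODEL if used / limit >= switch_at else FULL_MODEL
```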

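For scaling, note that OpenAI applies rate limits per organization and project rather than per key, so adding keys does not raise throughput. Higher volume comes from moving up usage tiers, since limits rise as your account's spend history grows. For work that can wait, OpenAI's Batch API processes requests asynchronously under separate limits, for models that support it.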

Thus, proactive management sustains performance, supporting growth.

Conclusion: Maximizing Value from GPT-5-Codex-Mini

Accessing and integrating GPT-5-Codex-Mini transforms coding workflows. Follow these steps to harness its power efficiently.

Remember, tools like Apidog enhance the experience—download it for free today.

Ultimately, consistent application yields profound productivity gains.