Google DeepMind recently unveiled the Gemini 2.5 Computer Use model, a specialized model built on the visual understanding and reasoning capabilities of Gemini 2.5 Pro. It lets AI agents interact directly with graphical user interfaces (UIs), closing a significant gap in digital task automation. Developers can now build agents that navigate web pages and applications the way a person does: clicking buttons, typing text, and scrolling through content. This matters most where structured APIs fall short, for example when submitting forms that would otherwise require manual intervention.

This article examines the technical intricacies of the Gemini 2.5 Computer Use model, from its core mechanisms to real-world applications. We start by outlining its foundational capabilities and then explore how it operates within iterative loops.

Core Capabilities of Gemini 2.5 Computer Use Model

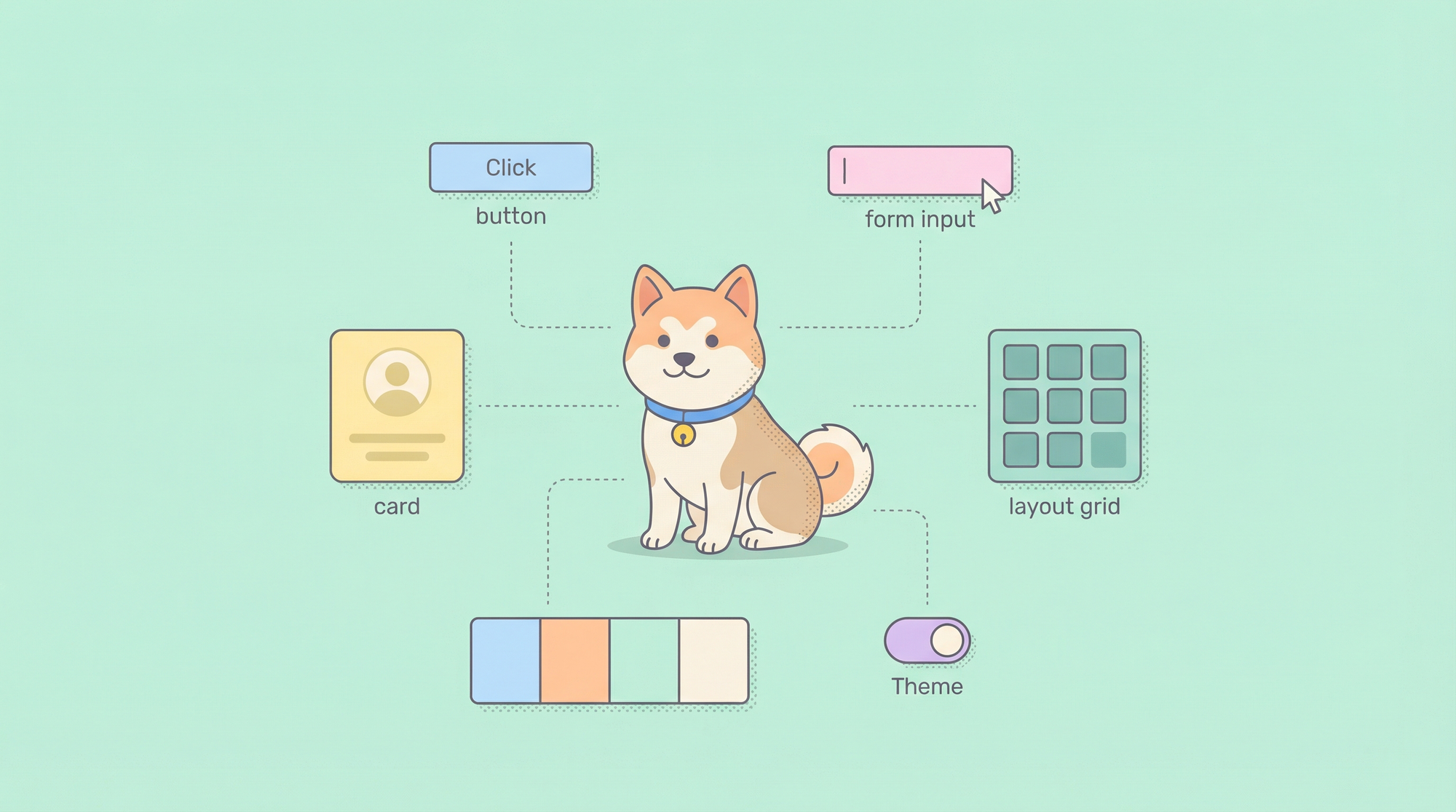

The Gemini 2.5 Computer Use model enables AI agents to perform UI manipulations that mimic human actions: filling out forms, selecting options from dropdown menus, applying filters, and operating within authenticated sessions behind logins. Google has optimized the model primarily for web browsers, where it handles dynamic web elements especially well. It also shows promising results for mobile UI control, but it is not yet optimized for desktop operating system control.

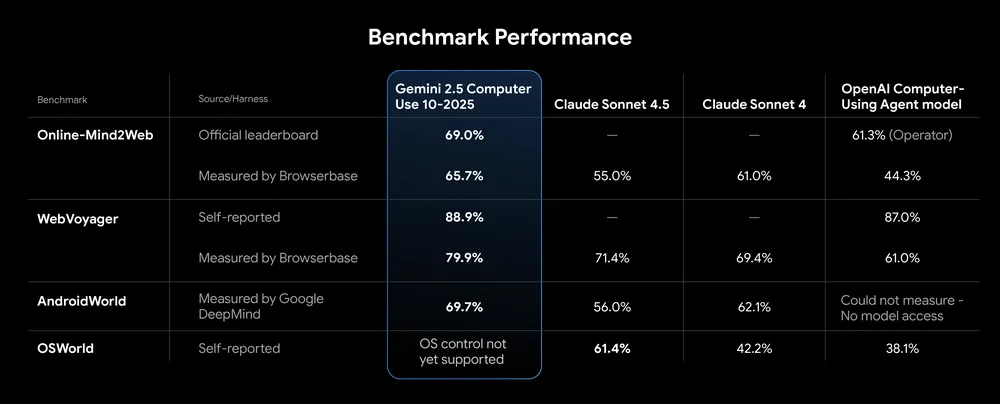

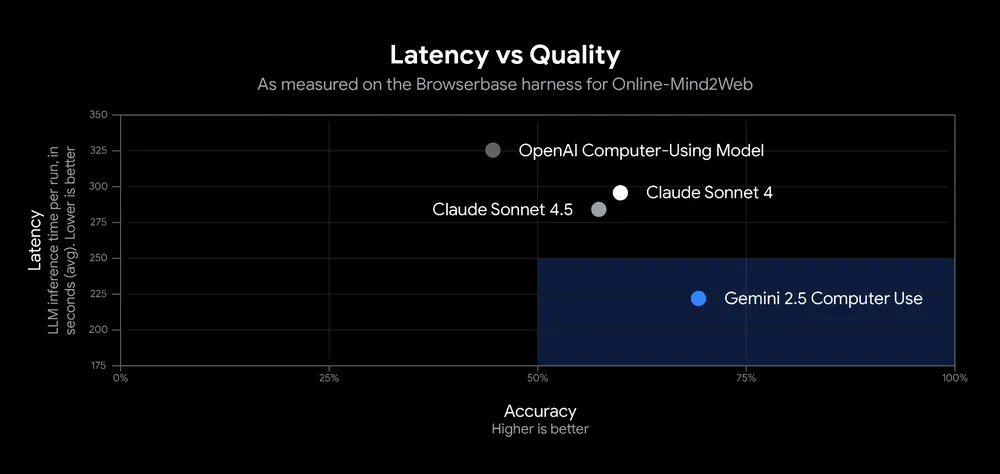

Benchmark performance is one of its key strengths. Google reports leading results on several standardized evaluations, including Online-Mind2Web, WebVoyager, and AndroidWorld. For instance, on Online-Mind2Web run through the Browserbase harness, the model exceeds 70% accuracy at a latency of roughly 225 seconds. Google's comparison places this ahead of competing models on both accuracy and latency, a combination that matters for real-time applications.

How Gemini 2.5 Computer Use Model Operates

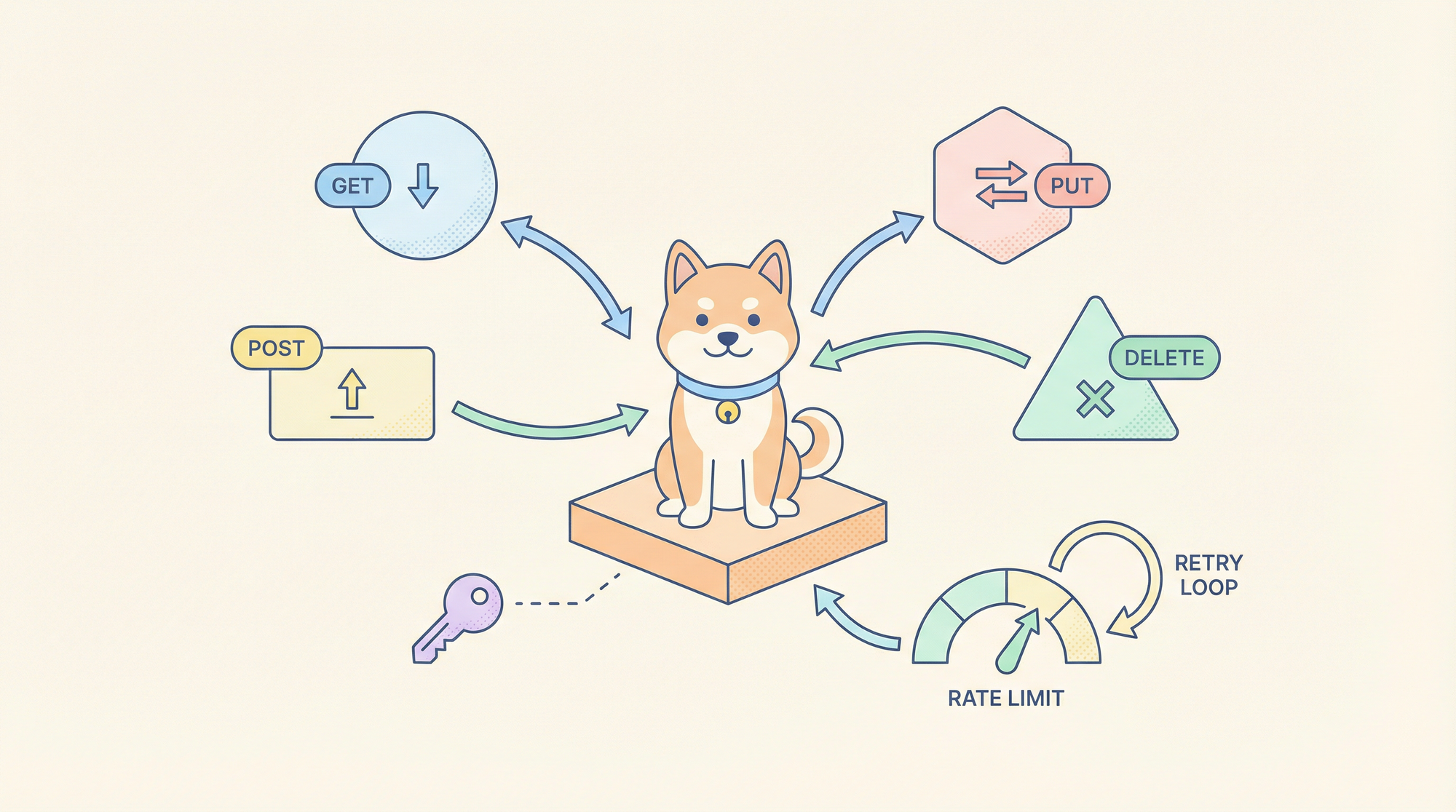

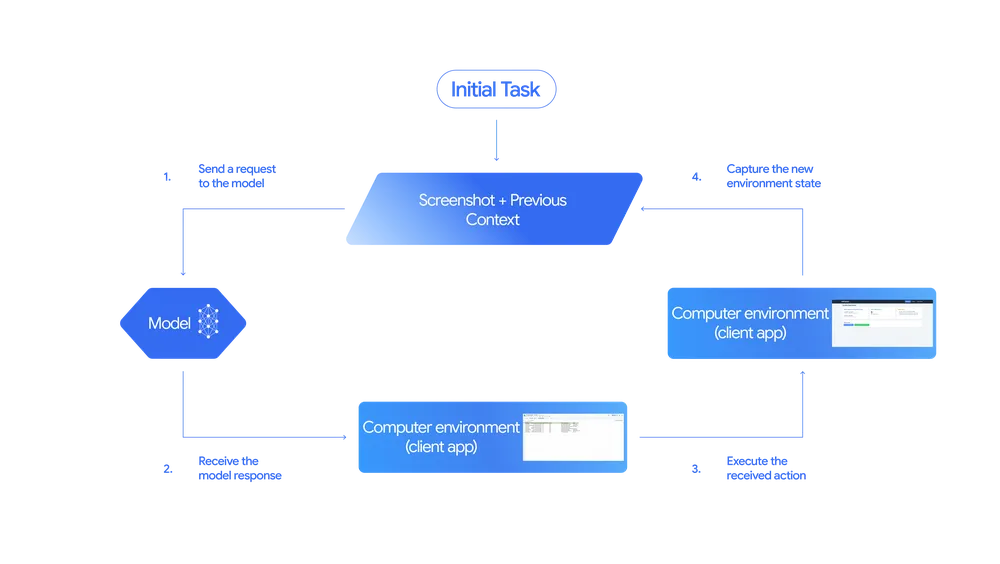

At its core, the Gemini 2.5 Computer Use model runs inside an iterative loop exposed through the new computer_use tool in the Gemini API. Each iteration starts with the client sending the user's request, a screenshot of the current environment, and a history of prior actions. Optionally, developers can exclude specific actions from the list of supported UI actions or add custom functions to tailor the agent's behavior.
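As a rough illustration, the tools section of a request might combine the computer_use tool with an exclusion list and a custom function. The JSON field names below (computer_use, environment, excluded_predefined_functions, function_declarations) follow the Gemini API documentation as we understand it at the time of writing, but treat them as assumptions and verify them against the current reference. The open_crm_record function is purely hypothetical.

```python
import json
from typing import Any


def build_tools(excluded_actions: list[str],
                custom_functions: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Assemble a 'tools' array for a computer-use request.

    Field names are assumptions modeled on the Gemini API docs; verify them
    before sending a real request.
    """
    tools: list[dict[str, Any]] = [{
        "computer_use": {
            "environment": "ENVIRONMENT_BROWSER",
            # Predefined UI actions the agent must not use.
            "excluded_predefined_functions": sorted(excluded_actions),
        }
    }]
    if custom_functions:
        # Custom functions are declared alongside the computer_use tool.
        tools.append({"function_declarations": custom_functions})
    return tools


# Hypothetical custom function: open a record in an internal CRM.
open_crm_record = {
    "name": "open_crm_record",
    "description": "Open a customer record in the internal CRM by ID.",
    "parameters": {
        "type": "object",
        "properties": {"record_id": {"type": "string"}},
        "required": ["record_id"],
    },
}

tools = build_tools(["drag_and_drop"], [open_crm_record])
print(json.dumps(tools, indent=2))
```

Keeping exclusions in a sorted list makes request bodies deterministic, which helps when diffing or snapshot-testing them.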

The model analyzes these inputs and responds, typically with a function call that represents a specific UI action, such as clicking an element or typing into a field. For high-stakes actions, such as completing a purchase, the response can also request confirmation from the end user. Client-side code then executes the action and sends a new screenshot and the current URL back to the model as feedback.

That feedback restarts the cycle, which repeats until the task completes, an error occurs, or a safety response or user decision ends it. Because the agent reassesses the UI state on every turn, it can adapt when the page changes. Developers must still implement the loop carefully to avoid runaway iterations, for example by capping the number of steps or adding timeouts.
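The loop described above can be sketched as follows. This is a minimal sketch, not Google's reference implementation: call_model, execute, and capture_state are hypothetical callables standing in for the Gemini API request, the client-side action executor, and the screenshot/URL capture. The max_steps cap is an arbitrary safeguard against infinite iteration, and confirmation handling is omitted for brevity.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Action:
    name: str                                  # e.g. "click_at" (hypothetical)
    args: dict = field(default_factory=dict)


@dataclass
class State:
    screenshot: bytes
    url: str


def run_agent(
    goal: str,
    call_model: Callable[[str, State, list], Optional[Action]],
    execute: Callable[[Action], None],
    capture_state: Callable[[], State],
    max_steps: int = 25,
) -> str:
    """Run the observe-act loop until the model stops proposing actions."""
    history: list[tuple[Action, str]] = []
    state = capture_state()
    for _ in range(max_steps):
        action = call_model(goal, state, history)
        if action is None:                     # no function call: task finished
            return "done"
        try:
            execute(action)
        except Exception as exc:               # surface executor errors and stop
            return f"error: {exc}"
        state = capture_state()                # new screenshot + URL as feedback
        history.append((action, state.url))
    return "step limit reached"
```

Returning a status string rather than raising keeps the three exit paths (completion, error, step limit) explicit for whatever code drives the agent.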

The model's visual reasoning builds on Gemini 2.5 Pro's multimodal capabilities, which let it interpret screenshots with high fidelity. It locates interactive elements directly in the screenshot and maps them to actionable commands, such as a click at specific coordinates. This contrasts with traditional scripting, which often breaks on dynamic UIs because it depends on brittle element selectors.
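Because the model works from screenshots, the client must translate each proposed position into real screen pixels. The Gemini API documentation describes coordinates on a normalized 1000-unit grid. Assuming that convention (verify it for your model version), a conversion helper might look like this. The function name is illustrative.

```python
def denormalize(x: int, y: int, width: int, height: int,
                grid: int = 1000) -> tuple[int, int]:
    """Map grid coordinates (0..grid-1) to pixel coordinates on the screen.

    Assumes the model reports positions in a grid x grid normalized space.
    Integer arithmetic avoids floating-point truncation surprises.
    """
    if not (0 <= x < grid and 0 <= y < grid):
        raise ValueError(f"coordinates out of range: {(x, y)}")
    return x * width // grid, y * height // grid


print(denormalize(500, 250, 1440, 900))  # → (720, 225)
```

Rejecting out-of-range values early turns a malformed model response into a clear error instead of a click in the wrong place.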

The model also supports a broad set of predefined UI actions, including scrolling, hovering, and dragging. Engineers can extend this set by defining custom functions for domain-specific tasks.
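One way to organize the client side is a dispatch table that maps action names to handlers, with custom functions registered the same way. The names click_at and drag_and_drop echo predefined actions listed in the Gemini API documentation at the time of writing. However, the handler signatures, the open_crm_record custom function, and the ActionRegistry class are hypothetical, and a real handler would drive a browser rather than return a string.

```python
from typing import Any, Callable

Handler = Callable[..., str]


class ActionRegistry:
    """Map model-proposed action names to client-side handlers."""

    def __init__(self) -> None:
        self._handlers: dict[str, Handler] = {}

    def register(self, name: str) -> Callable[[Handler], Handler]:
        def decorator(fn: Handler) -> Handler:
            self._handlers[name] = fn
            return fn
        return decorator

    def dispatch(self, name: str, args: dict[str, Any]) -> str:
        handler = self._handlers.get(name)
        if handler is None:
            # Unknown or excluded action: report back instead of crashing.
            return f"unsupported action: {name}"
        return handler(**args)


registry = ActionRegistry()


@registry.register("click_at")
def click_at(x: int, y: int) -> str:
    return f"clicked ({x}, {y})"


@registry.register("drag_and_drop")
def drag_and_drop(x: int, y: int, destination_x: int, destination_y: int) -> str:
    return f"dragged ({x}, {y}) -> ({destination_x}, {destination_y})"


# Hypothetical domain-specific custom function.
@registry.register("open_crm_record")
def open_crm_record(record_id: str) -> str:
    return f"opened record {record_id}"


print(registry.dispatch("click_at", {"x": 10, "y": 20}))  # clicked (10, 20)
```

Returning an "unsupported action" message, rather than raising, lets the client feed the problem back to the model as a function response.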

Benchmark Performance and Technical Evaluations

Google's published benchmarks put the Gemini 2.5 Computer Use model ahead of competing models on UI control tasks. Online-Mind2Web measures how accurately an agent interprets and carries out instructions on live websites, and the model posts the top accuracy there. WebVoyager tests navigation across diverse websites, where the model completes complex, multi-step paths with few errors. AndroidWorld covers mobile app interfaces, including gestures such as swiping and tapping, and the model performs strongly there as well.

Latency metrics further underscore its edge. Competing models often need longer to reach similar accuracy, while this model balances speed and precision, in some comparative tests cutting latency by up to 50%. Early adopters such as the team at Poke.com report that the model outperforms the alternatives they evaluated, enabling faster workflows in interfaces built for humans.

These benchmarks use harnesses that simulate real-world scenarios and measure success rates, completion times, and error handling. The model's low latency presumably reflects optimizations in the Gemini 2.5 Pro inference stack, such as efficient token processing. Early adopters also report gains in parsing complex context: Autotab, for example, cites improvements of up to 18% on its most challenging evaluations.
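To make these metrics concrete, a harness typically records, for each task, whether the agent succeeded and how long it took, then reports aggregates. The sketch below is a generic aggregation over hypothetical TaskResult records. It is not the Browserbase or Online-Mind2Web harness, and the toy values are invented purely for illustration.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskResult:
    task_id: str
    success: bool
    seconds: float
    errors: int = 0   # recoverable errors encountered during the run


def summarize(results: list[TaskResult]) -> dict[str, float]:
    """Success rate, mean completion time, and mean error count."""
    if not results:
        raise ValueError("no results to summarize")
    return {
        "success_rate": sum(r.success for r in results) / len(results),
        "mean_seconds": mean(r.seconds for r in results),
        "mean_errors": mean(r.errors for r in results),
    }


toy = [TaskResult("a", True, 200.0), TaskResult("b", False, 300.0, errors=2),
       TaskResult("c", True, 100.0), TaskResult("d", True, 200.0)]
print(summarize(toy))  # {'success_rate': 0.75, 'mean_seconds': 200.0, 'mean_errors': 0.5}
```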

The benchmarks also expose limitations, notably weaker performance in desktop environments the model is not yet optimized for. Engineers work around this by combining the model with complementary tools in hybrid setups that cover what it cannot. The following examples show how these capabilities translate into practical use cases.

Real-World Examples and Applications

Demonstrations showcase the Gemini 2.5 Computer Use model's versatility. In one, an agent opens a pet care signup page at https://tinyurl.com/pet-care-signup, extracts the details of every pet with a California residency, and adds them to a spa CRM at https://pet-luxe-spa.web.app. It then schedules a follow-up appointment with specialist Anima Lavar on October 10th after 8 a.m., using the same treatment reason recorded for each pet. The task spans multiple steps (reading forms, extracting data, and working a calendar), all executed autonomously.

Another demo organizes a cluttered sticky-note board at http://sticky-note-jam.web.app: the agent sorts the notes by dragging them into predefined sections, demonstrating drag-and-drop control. The demo videos are sped up for viewing, but they illustrate how smoothly the model handles interactive elements.

Early testers use the model for UI testing, automating regression checks on web applications. Personal assistants built on it manage email, bookings, and reminders by operating apps directly. Workflow automation benefits from its ability to recover from failures: Google's payments platform team reports that it successfully recovers over 60% of stalled executions, cutting fix times from days to minutes.

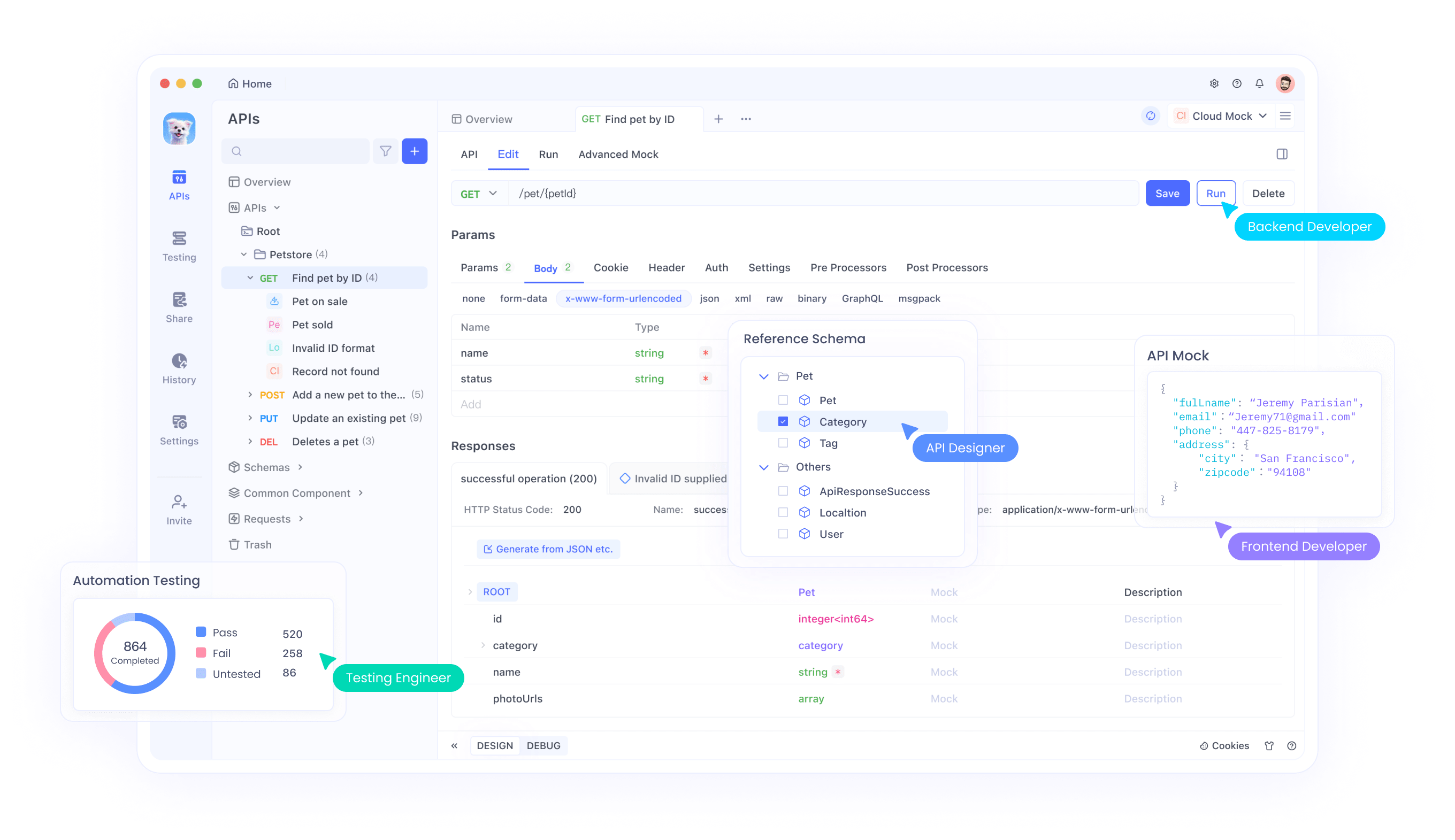

These applications require robust error handling in the loop, so developers add retry logic and state checkpoints to preserve progress. API tools such as Apidog can also help test requests that use the computer_use tool, confirming that inputs like screenshots are formatted correctly. Because an agent that acts on its own can also act wrongly, the model includes built-in guardrails.
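Retry logic and checkpoints can be combined in a few lines. This sketch assumes each task splits into named steps that are safe to re-run. Completed step names are persisted to a JSON file so that a restarted run skips them, and each step is retried with exponential backoff. The checkpoint path, step names, and backoff parameters are all illustrative.

```python
import json
import time
from pathlib import Path
from typing import Callable


def run_with_checkpoints(
    steps: list[tuple[str, Callable[[], None]]],
    checkpoint: Path,
    max_retries: int = 3,
    base_delay: float = 0.5,
) -> list[str]:
    """Run steps in order, skipping ones recorded as done in `checkpoint`."""
    done: list[str] = json.loads(checkpoint.read_text()) if checkpoint.exists() else []
    for name, step in steps:
        if name in done:
            continue                            # completed in an earlier run
        for attempt in range(max_retries):
            try:
                step()
                break
            except Exception:
                if attempt == max_retries - 1:
                    raise                       # give up; checkpoint keeps prior progress
                time.sleep(base_delay * 2 ** attempt)
        done.append(name)
        checkpoint.write_text(json.dumps(done))  # persist after each step
    return done
```

Writing the checkpoint after every step, rather than at the end, means a crash mid-task loses at most the step in flight.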

Safety Features and Risk Mitigation

Google builds safety directly into the Gemini 2.5 Computer Use model to counter risks such as misuse, unexpected model behavior, and external threats like prompt injections. Training teaches the model to refuse harmful actions, such as compromising system integrity or bypassing security measures like CAPTCHAs.

Developers also get granular controls. A per-step safety service assesses each proposed action before it executes. System instructions let developers require the agent to ask the user for confirmation before sensitive operations, such as controlling medical devices or making financial transactions. Together these layers reduce exposure in web environments where scams are common.
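On the client side, a confirmation gate can sit between the model's proposal and execution. The Gemini API documentation describes a safety decision attached to some function calls that asks the client to obtain user confirmation. The field names used here (safety_decision, decision, require_confirmation, explanation) are our assumption about that shape and should be checked against the current reference. The ask callback stands in for whatever UI prompts the user.

```python
from typing import Any, Callable


def gate_action(args: dict[str, Any], ask: Callable[[str], bool]) -> bool:
    """Return True if the proposed action may run.

    Assumes a safety decision, when present, arrives in the function-call
    arguments as {"decision": ..., "explanation": ...}.
    """
    decision = args.get("safety_decision")
    if not decision:
        return True                                   # nothing flagged
    if decision.get("decision") == "require_confirmation":
        return ask(decision.get("explanation", "Confirm this action?"))
    return False                                      # unknown decision: fail closed
```

The gate fails closed: any decision value it does not recognize blocks the action rather than letting it through.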

Safety evaluations include adversarial testing, in which simulated attacks probe for weaknesses. The safety layer classifies proposed actions against predefined risk categories and halts the agent when an action exceeds a risk threshold. Even so, developers remain responsible for thorough testing before launch and should follow the documented best practices.

Clear reporting of safety decisions also helps engineers refine their integrations. In API-driven setups, tools like Apidog can mock safety responses during development, so teams can test confirmation flows without live risk. With these safeguards in place, the model is available for responsible use.

Availability and Developer Access

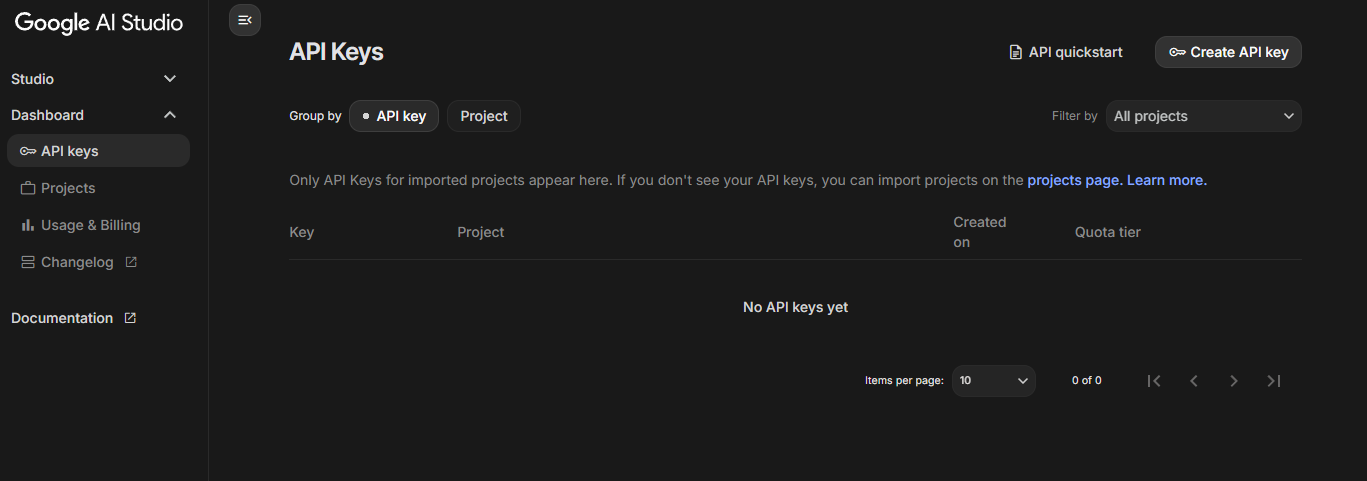

Google offers the Gemini 2.5 Computer Use model in public preview through the Gemini API on Google AI Studio and Vertex AI. Developers can start integrating it immediately using their existing authentication and quota setup.

No setup beyond a standard API key is required, which makes quick prototyping easy. Google AI Studio suits individual experimentation, while Vertex AI adds enterprise-grade scaling. Google is also encouraging feedback on edge cases as it iterates on the preview.

In practice, developers wrap the computer_use tool in their own loop, typically in Python or JavaScript. The Gemini SDKs handle request and response plumbing, while a browser automation library such as Playwright executes the actions and captures screenshots. The documentation also provides code samples for common scenarios to speed up adoption.
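A minimal executor might translate two actions into browser calls. This sketch targets an object with Playwright-style mouse.click, keyboard.type, keyboard.press, url, and screenshot members, which Playwright's Python Page provides. It is typed against a Protocol so the code runs without Playwright installed. The action names, argument keys, and press_enter flag are assumptions, and x/y are taken to be pixels already converted from the model's coordinate grid.

```python
from typing import Any, Protocol


class Mouse(Protocol):
    def click(self, x: float, y: float) -> None: ...


class Keyboard(Protocol):
    def type(self, text: str) -> None: ...
    def press(self, key: str) -> None: ...


class Page(Protocol):
    mouse: Mouse
    keyboard: Keyboard

    @property
    def url(self) -> str: ...

    def screenshot(self) -> bytes: ...


def execute(page: Page, name: str, args: dict[str, Any]) -> dict[str, Any]:
    """Run one action, then return the feedback the model needs next."""
    if name == "click_at":
        page.mouse.click(args["x"], args["y"])
    elif name == "type_text_at":
        page.mouse.click(args["x"], args["y"])       # focus the field first
        page.keyboard.type(args["text"])
        if args.get("press_enter"):
            page.keyboard.press("Enter")
    else:
        raise ValueError(f"unsupported action: {name}")
    return {"url": page.url, "screenshot": page.screenshot()}
```

With Playwright installed, a sync-API Page object can be passed in directly, since it exposes these members.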

As usage grows, monitoring latency and error rates helps keep resource use under control. For those exploring API interactions, Apidog offers a free download for visualizing endpoints, debugging calls, and collaborating on integrations when building agents with the Gemini 2.5 Computer Use model.

Integrating Gemini 2.5 Computer Use Model with Tools like Apidog

Integration extends the Gemini 2.5 Computer Use model's usefulness. Apidog, an API development platform, complements it by letting developers test and document Gemini API calls efficiently. Engineers can use it to simulate requests that use the computer_use tool and verify input formats, such as base64-encoded screenshots and action histories inside the JSON request body.
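When building such a test request, it helps to see the body assembled by hand. The sketch below builds a generateContent-style JSON body containing the user's request as text, the screenshot as base64 inline data, and the computer_use tool. The field names follow Gemini REST conventions as we understand them and should be verified. The model name is deliberately left out, and nothing is sent over the network.

```python
import base64
import json


def build_request(goal: str, screenshot_png: bytes) -> str:
    """Return a JSON request body pairing the goal with a screenshot."""
    body = {
        "contents": [{
            "role": "user",
            "parts": [
                {"text": goal},
                {"inline_data": {
                    "mime_type": "image/png",
                    # Binary image data must be base64-encoded inside JSON.
                    "data": base64.b64encode(screenshot_png).decode("ascii"),
                }},
            ],
        }],
        "tools": [{"computer_use": {"environment": "ENVIRONMENT_BROWSER"}}],
    }
    return json.dumps(body)


payload = build_request("Find the signup form", b"\x89PNG fake bytes")
```

Pasting the resulting payload into an API client is a quick way to check that the encoding round-trips before wiring up the full loop.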

In practice, Apidog's mocking features can replicate model responses, allowing offline development of agent loops and avoiding paid API calls during iteration. Its collaboration tools also let teams share API specifications, keeping implementations consistent across projects.
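The same idea can also be approximated in-process: a stub that replays canned responses lets you exercise the loop without network calls or billing. The response dictionaries below mimic the general shape of a function-call reply, but they are simplified, hypothetical fixtures rather than captured API output.

```python
from collections import deque
from typing import Any, Optional


class ScriptedModel:
    """Replays canned responses in order, then signals completion."""

    def __init__(self, responses: list[dict[str, Any]]) -> None:
        self._queue = deque(responses)
        self.requests: list[dict[str, Any]] = []     # what the agent sent

    def generate(self, request: dict[str, Any]) -> Optional[dict[str, Any]]:
        self.requests.append(request)
        return self._queue.popleft() if self._queue else None


mock = ScriptedModel([
    {"function_call": {"name": "click_at", "args": {"x": 120, "y": 480}}},
    {"function_call": {"name": "type_text_at",
                       "args": {"x": 120, "y": 480, "text": "Buddy"}}},
])
```

Recording the incoming requests lets a test assert not only what the agent did but also what it sent, such as whether each turn included a fresh screenshot.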

Apidog supports the OpenAPI standard, so developers can import API schemas where available and generate client code from them. For complex agents, it can also track latency and error rates, which helps tune the efficiency of the iterative loop.
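Whatever tool collects the numbers, the client can also time each model call itself. This sketch wraps any callable, records wall-clock latency with time.perf_counter, and counts failures. The StepMonitor name and the choice of metrics are illustrative.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class StepMonitor:
    latencies: list[float] = field(default_factory=list)
    errors: int = 0

    def call(self, fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        """Invoke fn, recording its latency and whether it raised."""
        start = time.perf_counter()
        try:
            return fn(*args, **kwargs)
        except Exception:
            self.errors += 1
            raise
        finally:
            # Runs on success and failure, so every call is timed.
            self.latencies.append(time.perf_counter() - start)

    @property
    def error_rate(self) -> float:
        return self.errors / len(self.latencies) if self.latencies else 0.0
```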

When the model uses custom functions, Apidog can visualize how parameters map between the declaration and the call, reducing integration errors. Teams using Apidog alongside Gemini for workflow automation report faster deployments. Looking further ahead, such combinations point to a growing ecosystem around computer-use agents.
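Parameter mismatches are cheap to catch before execution. The sketch checks a model-proposed call against its declaration: required parameters must be present, no unexpected ones may appear, and basic JSON-schema types must match. The schedule_appointment declaration is hypothetical, and this covers only a small subset of JSON Schema rather than serving as a full validator.

```python
from typing import Any

# Hypothetical custom function declaration (a JSON-schema subset).
SCHEDULE_APPOINTMENT = {
    "name": "schedule_appointment",
    "parameters": {
        "type": "object",
        "properties": {
            "specialist": {"type": "string"},
            "date": {"type": "string"},
            "earliest_hour": {"type": "integer"},
        },
        "required": ["specialist", "date"],
    },
}

_TYPES = {"string": str, "integer": int, "number": (int, float), "boolean": bool}


def validate_args(declaration: dict[str, Any], args: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the call looks valid."""
    params = declaration["parameters"]
    props = params.get("properties", {})
    problems = [f"missing: {k}" for k in params.get("required", []) if k not in args]
    for key, value in args.items():
        if key not in props:
            problems.append(f"unexpected: {key}")
            continue
        declared = props[key]["type"]
        expected = _TYPES.get(declared)
        # bool is a subclass of int, so reject it explicitly for non-booleans.
        if expected and (not isinstance(value, expected)
                         or (isinstance(value, bool) and declared != "boolean")):
            problems.append(f"wrong type: {key}")
    return problems
```

Returning a list of problems instead of raising on the first one gives the model (or a developer) every issue in a single round trip.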

Future Implications and Developments

The Gemini 2.5 Computer Use model signals a shift toward more autonomous AI agents. Future iterations may extend to desktop OS control, broadening applications in enterprise software. Google commits to responsible scaling, prioritizing safety as capabilities advance.

Future advances could include richer multimodal inputs, such as audio or haptic feedback, for more natural interactions. Approaches like federated learning might also let agents be personalized without compromising privacy.

In summary, the Gemini 2.5 Computer Use model redefines AI's role in digital interfaces. By enabling precise, low-latency UI control, it empowers developers to build innovative solutions. Tools like Apidog enhance this ecosystem, offering free resources to streamline development. As adoption accelerates, expect transformative impacts across industries.