Windsurf's Cascade has become a popular choice among developers working with AI coding assistants. However, a persistent and frustrating issue has been plaguing its users: the infamous "Error Cascade has encountered an internal error in this step. No credits consumed on this tool call." This message, often appearing unexpectedly, can halt development workflows and lead to significant user dissatisfaction. This article examines this specific Cascade error, its potential causes, and the solutions users have suggested.

The Frustration of the "Cascade Has Encountered an Internal Error in This Step" Message

Imagine you're deep in a coding session, relying on Cascade to generate, refactor, or explain code. Suddenly, your progress is interrupted by the stark notification: "Error Cascade has encountered an internal error in this step. No credits consumed on this tool call." This isn't just a minor inconvenience; it's a roadblock.

Users across various forums and communities have reported this Cascade error repeatedly, expressing concerns about lost productivity and, despite the "no credits consumed" assurance, sometimes noticing discrepancies in their credit usage. The error seems to appear across different models, including premium ones like Claude 3.5 Sonnet and GPT-4o, and can manifest during various operations, from simple prompts to complex code generation tasks. The lack of a clear, official explanation or a consistent fix from the platform itself adds to the user's burden.

This internal error not only disrupts the immediate task but also erodes confidence in the tool's reliability, especially for those on paid subscriptions who expect a seamless experience. The promise of "No credits consumed on this tool call" can also feel misleading when users perceive their overall credit balance depleting faster than expected during sessions plagued by these errors.

Common Scenarios and User Experiences with This Cascade Error

Developers encounter this Cascade error in a multitude of situations:

- During Code Generation: A request to write a new function or class results in the error instead of code.

- Refactoring Existing Code: Attempts to modify or improve code blocks are met with the internal error.

- Analyzing Files: For some users, a session riddled with this error began right after Windsurf analyzed their project files.

- Switching Models: The error isn't confined to a single AI model; users have reported it with various options available in Cascade.

- Repeated Occurrences: For many, this isn't an isolated incident but a recurring problem, sometimes making the tool unusable for extended periods.

The impact is significant. Deadlines can be threatened, and the stop-start nature of working around such an internal error is inefficient. While Windsurf's support suggests refreshing the window or starting a new conversation, these are often temporary fixes, if they work at all. The core issue, the Cascade error itself, remains, leaving users searching for more robust solutions and ways to protect their workflow and, critically, their credits, even if the tool claims "No credits consumed on this tool call" for that specific failed step.

Possible Causes of the Cascade Error

When faced with the persistent "Cascade has encountered an internal error in this step," understanding the potential triggers and exploring community-suggested workarounds becomes crucial.

While official explanations are sparse, user experiences and technical intuition point towards several possible triggers for this Cascade error. These range from problems with the underlying AI models and network connectivity to conflicts within the local development environment or even the state of the files being processed. The claim of "No credits consumed on this tool call" offers little solace when productivity is hampered by such an internal error.

User-Suggested Workarounds for the "Cascade Has Encountered an Internal Error"

Frustrated users have experimented with various approaches to overcome this Cascade error. While not universally effective, these might offer some relief:

1. Refresh and Restart:

- Refresh the Windsurf/Cascade window/panel.

- Start a new Cascade conversation.

- Restart the IDE entirely.

2. Sign Out and Sign In: Some users reported success after signing out of their Windsurf/Codeium account within the IDE and then signing back in.

3. Clear Cache/Reset Context: Deleting the local Windsurf cache folder (e.g., .windsurf in the project or user directory) to force a re-indexing and reset of context has helped some, though it can be a bit of a drastic measure.

4. Check File Status: Ensure files being worked on are not locked or actively being run by a local server. Stop any relevant local servers before asking Cascade to modify those files.

5. Switch AI Models: If the error seems tied to a specific model (e.g., Claude 3.7 Sonnet), try switching to a different one (e.g., Claude 3.5 Sonnet or another available option).

6. Simplify Prompts/Break Down Tasks: If a complex request is failing, try breaking it down into smaller, simpler steps.

7. Check Network Connection: Ensure your internet connection is stable. Trying a different Wi-Fi network was a solution for at least one user experiencing connection-related problems.

8. Patience/Try Later: Sometimes, the issue might be temporary on the provider's side (Anthropic, OpenAI, or Codeium itself). Waiting for a while and trying again later has anecdotally worked.
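Workaround 3 can be scripted so that the folder is moved aside rather than deleted, which keeps it recoverable if it turns out to hold settings you still want. The sketch below is a minimal illustration, assuming the cache lives in a .windsurf folder in the project root and/or your home directory, as in the example above; those locations are assumptions, so confirm them on your own machine and close the IDE before applying the move. The function names are hypothetical.

```typescript
import * as fs from "node:fs";
import * as os from "node:os";
import * as path from "node:path";

// Roots to search: the project (assumed to be the current working directory)
// and the user's home directory. Both locations are assumptions.
// The Set removes the duplicate when you run this from your home directory.
export function defaultRoots(): string[] {
  return [...new Set([process.cwd(), os.homedir()])];
}

// Returns every "<root>/.windsurf" that exists and is a directory.
export function findWindsurfDirs(roots: string[]): string[] {
  return roots
    .map((root) => path.join(root, ".windsurf"))
    .filter((dir) => fs.existsSync(dir) && fs.statSync(dir).isDirectory());
}

// Renames each folder to "<folder>.bak-<timestamp>" instead of deleting it,
// so it can be restored by renaming it back. With dryRun (the default),
// it only prints what it would do and touches nothing.
export function moveAside(dirs: string[], dryRun = true): string[] {
  const stamp = new Date().toISOString().replace(/[:.]/g, "-");
  const backups: string[] = [];
  for (const dir of dirs) {
    const backup = `${dir}.bak-${stamp}`;
    if (dryRun) {
      console.log(`[dry run] would move ${dir} -> ${backup}`);
    } else {
      fs.renameSync(dir, backup);
      console.log(`moved ${dir} -> ${backup}`);
    }
    backups.push(backup);
  }
  return backups;
}
```

Calling moveAside(findWindsurfDirs(defaultRoots())) only prints the planned moves; pass false as the second argument to actually rename the folders. Moving rather than deleting is the conservative choice here, since the "drastic measure" can then be undone.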

While these workarounds might offer temporary respite, they don't address the root cause of the Cascade error. Moreover, repeatedly trying different solutions can be time-consuming and further disrupt workflow, even if individual failed steps claim "No credits consumed on this tool call." This is where looking for more systemic improvements, like integrating the free Apidog MCP Server, becomes highly relevant.

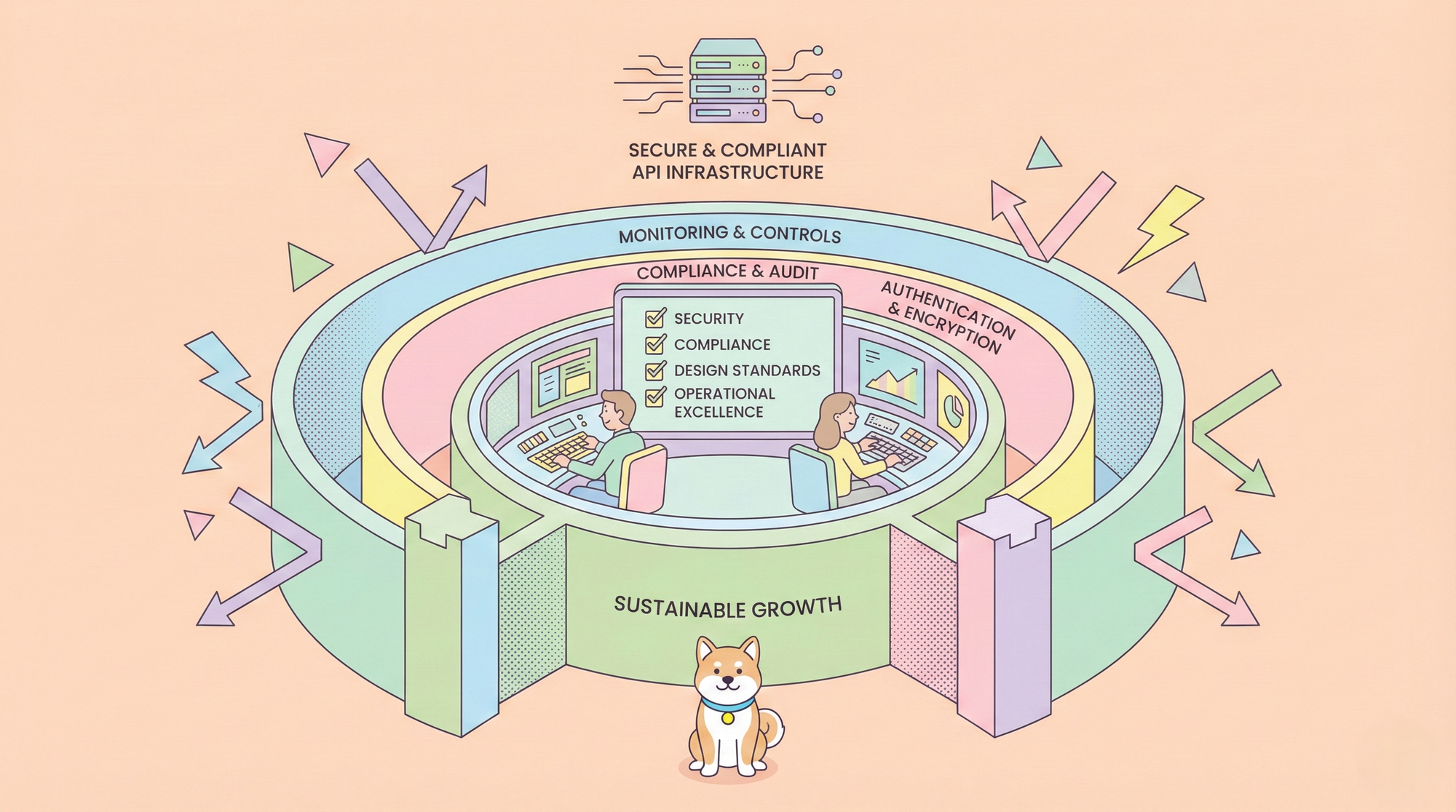

The Apidog MCP Server: A Proactive Solution to Mitigate Cascade Errors and Save Credits

While users grapple with workarounds for the "Cascade has encountered an internal error in this step", a more strategic approach involves optimizing the information flow to AI coding assistants. This is where the free Apidog MCP Server emerges as a powerful ally.

Apidog, renowned as an all-in-one API lifecycle management platform, offers its MCP Server to bridge the gap between your API specifications and AI tools like Cascade. By providing clear, structured, and accurate API context directly to Cascade, you can significantly reduce the ambiguity and potential for internal errors that arise from the AI trying to infer or guess API details.

This proactive step not only enhances reliability but can also lead to more efficient credit usage, even if Cascade states "No credits consumed on this tool call" for specific failures.

How Apidog MCP Server Addresses Potential Causes of Cascade Errors

The Apidog MCP Server can indirectly help alleviate some of the conditions that might lead to a Cascade error:

- Reduced Ambiguity for AI: When Cascade has direct access to precise API definitions (endpoints, request/response schemas, authentication methods) via the Apidog MCP Server, it doesn't need to make as many assumptions or complex inferences. This clarity can lead to simpler, more direct processing by the AI models, potentially reducing the likelihood of hitting an internal error due to misinterpretation or overly complex reasoning paths.

- Optimized Prompts: With Apidog MCP, your prompts to Cascade can be more targeted. Instead of describing an API, you can instruct Cascade to use the API definition from the MCP. For example: "Using the 'MyProjectAPI' from Apidog MCP, generate a TypeScript function to call the /users/{id} endpoint." This precision can lead to more efficient processing and fewer chances for a Cascade error.

- Focus on Core Logic: By offloading the burden of API specification recall to the Apidog MCP Server, Cascade can focus its resources on the core coding task (generating logic, writing tests, etc.). This can be particularly beneficial when dealing with complex APIs, where an internal error might otherwise occur due to the AI struggling with both the API details and the coding logic simultaneously.
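To make the prompt example concrete, here is the kind of function such a request might produce. This is a hand-written sketch, not actual Cascade output: the base URL, the User fields, and the names userUrl and getUser are hypothetical, and in practice they would come from the API definition the MCP server exposes.

```typescript
// Hypothetical User schema; a real one would come from your OpenAPI definition.
export interface User {
  id: string;
  name: string;
  email: string;
}

// Minimal fetch-like signature so a fake can be injected in tests.
export type FetchLike = (
  url: string,
  init?: { headers?: Record<string, string> },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Builds the URL for GET /users/{id}, percent-encoding the path parameter
// and dropping any trailing slashes from the base URL.
export function userUrl(baseUrl: string, id: string): string {
  return `${baseUrl.replace(/\/+$/, "")}/users/${encodeURIComponent(id)}`;
}

// Calls GET /users/{id}; defaults to the global fetch available in Node 18+.
export async function getUser(
  baseUrl: string,
  id: string,
  fetchImpl: FetchLike = globalThis.fetch,
): Promise<User> {
  const res = await fetchImpl(userUrl(baseUrl, id), {
    headers: { Accept: "application/json" },
  });
  if (!res.ok) {
    throw new Error(`GET /users/${id} failed with HTTP ${res.status}`);
  }
  return (await res.json()) as User;
}
```

Because the endpoint path, parameter, and response shape are spelled out in the spec, the assistant does not have to guess them; only the surrounding logic is left to generate.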

Integrating the Free Apidog MCP Server: A Step Towards Stability

Prerequisites:

Before you begin, ensure the following:

✅ Node.js is installed (version 18+; latest LTS recommended)

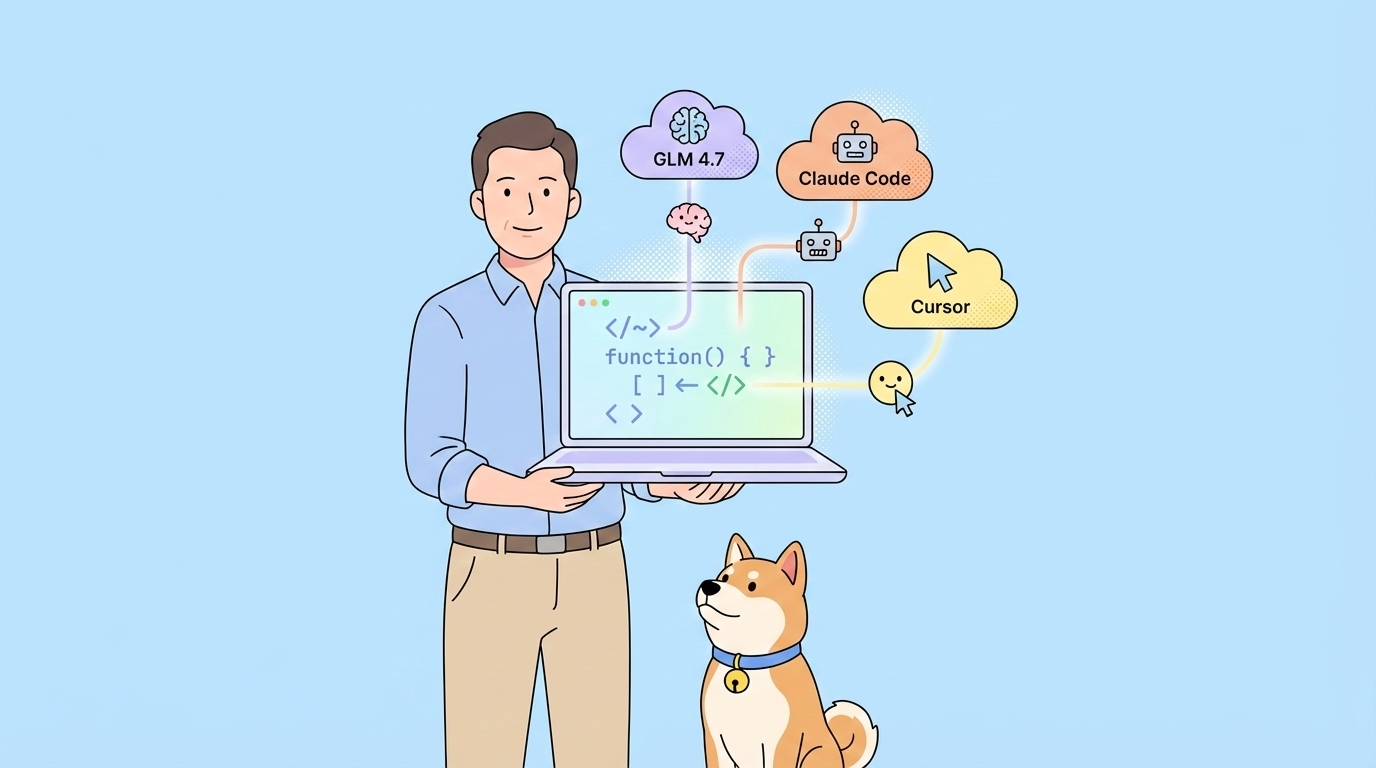

✅ You're using an IDE that supports MCP, such as Cursor or Windsurf.
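Running node --version in a terminal is the quickest way to confirm the first prerequisite. If you want the same check inside a setup script, here is a minimal sketch; the function name meetsNodeRequirement is hypothetical.

```typescript
// Minimum major version named in the prerequisites.
const MIN_NODE_MAJOR = 18;

// Parses a version string such as "v20.11.1" or "18.19.0" and compares its major number.
export function meetsNodeRequirement(version: string, minMajor = MIN_NODE_MAJOR): boolean {
  const match = /^v?(\d+)\./.exec(version.trim());
  return match !== null && Number(match[1]) >= minMajor;
}

// process.version is the version of the Node.js runtime executing this script.
if (!meetsNodeRequirement(process.version)) {
  console.error(`Node.js ${process.version} found; version ${MIN_NODE_MAJOR}+ is required.`);
  process.exitCode = 1;
}
```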

Step 1: Prepare Your OpenAPI File

You'll need access to your API definition:

- A URL (e.g., https://petstore.swagger.io/v2/swagger.json)

- Or a local file path (e.g., ~/projects/api-docs/openapi.yaml)

- Supported formats: .json or .yaml (OpenAPI 3.x recommended)

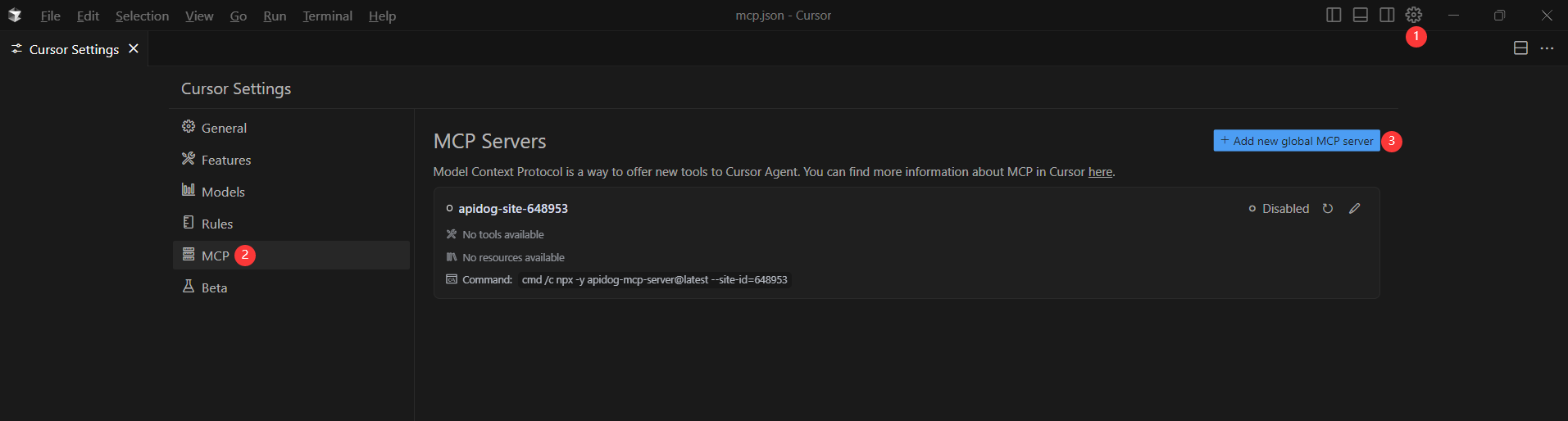
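Before wiring the file into your IDE, it can help to confirm that the spec loads and to see which version it declares. Note that the Petstore URL used as the example in this guide serves a Swagger 2.0 document rather than OpenAPI 3.x. The sketch below handles JSON only (YAML would need a parser library), and the names specVersion and loadJsonSpec are hypothetical.

```typescript
import { readFile } from "node:fs/promises";
import { homedir } from "node:os";

// OpenAPI 3.x documents declare an "openapi" field; Swagger 2.0 documents declare "swagger".
export function specVersion(doc: Record<string, unknown>): string {
  if (typeof doc.openapi === "string") return `OpenAPI ${doc.openapi}`;
  if (typeof doc.swagger === "string") return `Swagger ${doc.swagger}`;
  return "unknown";
}

// Loads a JSON spec from an http(s) URL or a local path, expanding a leading "~/".
// Uses the global fetch available in Node 18+.
export async function loadJsonSpec(source: string): Promise<Record<string, unknown>> {
  if (/^https?:\/\//.test(source)) {
    const res = await fetch(source);
    if (!res.ok) throw new Error(`Could not fetch ${source}: HTTP ${res.status}`);
    return (await res.json()) as Record<string, unknown>;
  }
  const localPath = source.startsWith("~/") ? homedir() + source.slice(1) : source;
  return JSON.parse(await readFile(localPath, "utf8")) as Record<string, unknown>;
}

// Example:
// loadJsonSpec("https://petstore.swagger.io/v2/swagger.json")
//   .then((doc) => console.log(specVersion(doc)));
```

If loading fails here, the MCP server will most likely fail on the same input, so this is a cheap first check.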

Step 2: Add MCP Configuration to Cursor

You'll now add the configuration to Cursor's mcp.json file.

Remember to replace the example --oas value (the Petstore URL) with your actual OpenAPI URL or local file path.
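If you would rather generate this entry than edit JSON by hand, for instance to add it to an mcp.json that already lists other servers, here is a minimal sketch. It reproduces the two platform variants in this step (Windows wraps npx in cmd /c); the function names are hypothetical, and the config path you pass in is up to you. Cursor commonly reads a global ~/.cursor/mcp.json, but verify the location for your setup.

```typescript
import * as fs from "node:fs";

interface McpServer {
  command: string;
  args: string[];
}

interface McpConfig {
  mcpServers?: Record<string, McpServer>;
  [key: string]: unknown;
}

// Builds the "API specification" entry for the given OpenAPI URL or path.
export function apidogServerEntry(oas: string, platform: string = process.platform): McpServer {
  const npxArgs = ["-y", "apidog-mcp-server@latest", `--oas=${oas}`];
  return platform === "win32"
    ? { command: "cmd", args: ["/c", "npx", ...npxArgs] }
    : { command: "npx", args: npxArgs };
}

// Returns a copy of the config with the entry added or replaced,
// leaving any other MCP servers untouched.
export function withApidogServer(config: McpConfig, oas: string, platform?: string): McpConfig {
  return {
    ...config,
    mcpServers: {
      ...(config.mcpServers ?? {}),
      "API specification": apidogServerEntry(oas, platform),
    },
  };
}

// Reads configPath (or starts from {} if missing), merges the entry, and writes it back.
// The containing directory must already exist.
export function updateConfigFile(configPath: string, oas: string): void {
  const current: McpConfig = fs.existsSync(configPath)
    ? JSON.parse(fs.readFileSync(configPath, "utf8"))
    : {};
  fs.writeFileSync(configPath, JSON.stringify(withApidogServer(current, oas), null, 2) + "\n");
}
```

Merging instead of overwriting is the main design choice: hand-pasting the example over an existing file would silently drop any servers already configured there.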

For macOS/Linux:

```json
{
  "mcpServers": {
    "API specification": {
      "command": "npx",
      "args": [
        "-y",
        "apidog-mcp-server@latest",
        "--oas=https://petstore.swagger.io/v2/swagger.json"
      ]
    }
  }
}
```

For Windows:

```json
{
  "mcpServers": {
    "API specification": {
      "command": "cmd",
      "args": [
        "/c",
        "npx",
        "-y",
        "apidog-mcp-server@latest",
        "--oas=https://petstore.swagger.io/v2/swagger.json"
      ]
    }
  }
}
```

Step 3: Verify the Connection

After saving the config, test it in the IDE by typing the following command in Agent mode:

Please fetch API documentation via MCP and tell me how many endpoints exist in the project.

If it works, you'll see a structured response that lists endpoints and their details. If it doesn't, double-check the path to your OpenAPI file and ensure Node.js is installed properly.
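To cross-check the agent's answer, you can count the endpoints in the spec yourself. Because "endpoint" can mean either a path (such as /users/{id}) or a path plus HTTP method, this sketch reports both. It assumes the spec has already been parsed from JSON, and the name summarizeSpec is hypothetical.

```typescript
// HTTP methods that can appear as operations in an OpenAPI / Swagger path item.
const METHODS = new Set(["get", "put", "post", "delete", "options", "head", "patch", "trace"]);

export interface SpecSummary {
  paths: number;
  operations: number;
}

// Counts paths and operations (path + method pairs) in a parsed spec.
// Non-method keys in a path item, such as "parameters" or "summary", are skipped.
export function summarizeSpec(doc: { paths?: Record<string, Record<string, unknown>> }): SpecSummary {
  const pathItems = Object.values(doc.paths ?? {});
  let operations = 0;
  for (const item of pathItems) {
    for (const key of Object.keys(item)) {
      if (METHODS.has(key.toLowerCase())) operations++;
    }
  }
  return { paths: pathItems.length, operations };
}

// Example (Node 18+ for the global fetch):
// fetch("https://petstore.swagger.io/v2/swagger.json")
//   .then((res) => res.json())
//   .then((doc) => console.log(summarizeSpec(doc)));
```

If the agent's count matches one of these numbers, the MCP server is serving the spec you intended.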

By making API information explicit and machine-readable through the free Apidog MCP Server, you're not just hoping to avoid the "Cascade has encountered an internal error in this step" message; you're actively improving the quality of input to the AI. This can lead to more accurate code generation, fewer retries, and a more stable development experience, ultimately helping you conserve those valuable credits, regardless of whether a specific failed step claims "No credits consumed on this tool call".

Conclusion: Enhancing AI Coding Reliability with Apidog

The recurring “Cascade has encountered an internal error” message disrupts productivity and frustrates many Windsurf Cascade users. With no permanent fix yet available, developers rely on unreliable workarounds such as restarting sessions or clearing caches, none of which address the root problem.

A more effective approach lies in improving the context provided to AI coding tools. This is where the free Apidog MCP Server proves invaluable. By integrating precise, well-documented API specifications directly into your AI-assisted workflow, Apidog reduces ambiguity and lowers the risk of errors. Tools like Cascade can then access accurate API context, reducing guesswork and improving code reliability.