AI models capable of advanced mathematical reasoning are quickly becoming essential tools for technical teams. DeepSeekMath-V2 stands out by combining a massive 685B-parameter architecture with robust self-verification mechanisms—enabling developers to tackle theorem proving, automated grading, and open mathematical problems through accessible APIs.

For API builders and backend engineers, integrating such models into existing workflows requires reliable and efficient tools. Apidog provides a powerful platform to design, test, and monitor APIs—including those that interface with cutting-edge models like DeepSeekMath-V2. Download Apidog for free to streamline your experimentation with DeepSeekMath-V2 endpoints.

DeepSeekMath-V2 Architecture: Built for Rigorous Mathematical Accuracy

DeepSeekMath-V2 is engineered by DeepSeek-AI to prioritize step-by-step mathematical correctness, not just final answers. Key design features include:

- Massive Scale: 685 billion parameters, transformer-based, optimized for long-context reasoning

- Flexible Deployment: Supports BF16, F8_E4M3, and F32 tensor types for efficient inference across GPUs and TPUs

- Self-Verification Loops: An integrated verifier module checks each intermediate proof step in real-time for logical consistency, flagging errors and prompting corrections

How Self-Verification Works

Unlike traditional language models that generate proofs in a linear sequence, DeepSeekMath-V2’s verifier module parses each step—such as algebraic manipulations or inductive proofs—and applies formal rules. Any inconsistency is detected immediately, improving overall reliability and reducing mathematical “hallucinations.”

Long-Context and Sparse Attention

Drawing on DeepSeek-V3 series advancements, DeepSeekMath-V2 uses sparse attention to manage extended proof chains, often spanning thousands of tokens. Developers can implement and scale this via Hugging Face’s Transformers library, loading the model with standard Python tools.

Training Methodology: Reinforcement Learning for Reliable Proofs

DeepSeekMath-V2’s training regimen pairs supervised learning with reinforcement learning from human feedback (RLHF), tailored to mathematical tasks.

- Supervised Fine-Tuning: Uses curated datasets like ProofNet and MiniF2F to teach basic theorem application

- Reinforcement Learning: The model generates candidate proofs; the verifier assigns rewards based on step fidelity and overall verifiability, encouraging exploration of complex problems

Compute resources are allocated efficiently by prioritizing proofs with high uncertainty scores for verification. The reward function is defined as:

r = α · s + β · v

Where:

s= step fidelityv= verifiabilityα, β= hyperparameters (tuned via grid search)

This approach accelerates convergence (up to 20% fewer epochs) and ensures the model is robust against errors across mathematical domains.

Ethical considerations are enforced by filtering out biased data sources, supporting fair performance from algebraic geometry to number theory.

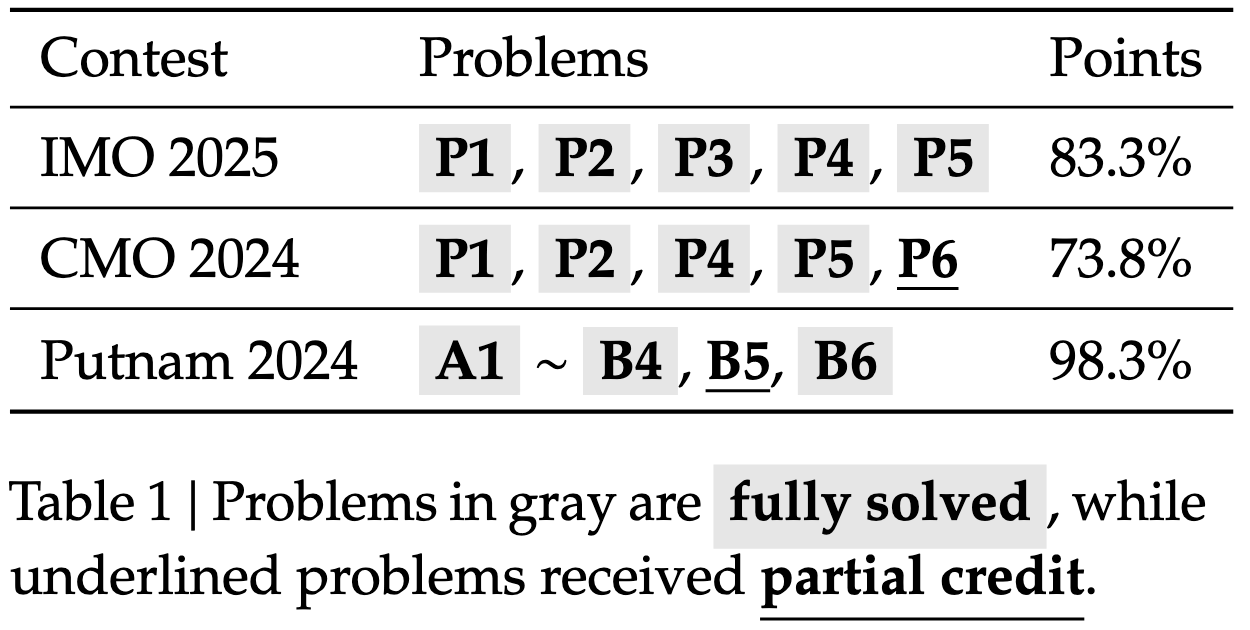

Benchmark Results: DeepSeekMath-V2 Outperforms in Mathematical Reasoning

DeepSeekMath-V2 sets new standards on key mathematical benchmarks:

| Benchmark | DeepSeekMath-V2 Score | GPT-4o (Comparison) | Key Strength |

|---|---|---|---|

| IMO 2025 | Gold (7/6 solved) | Silver (5/6) | Proof Verification |

| CMO 2024 | 100% | 92% | Step-by-Step Rigor |

| Putnam 2024 | 118/120 | 105/120 | Scaled Compute Adaptation |

| IMO-ProofBench | 85% pass@1 | 65% | Self-Correction Loops |

- Gold-level on IMO 2025: Solves all problems, with verifiable proofs

- 100% on CMO 2024: Full correctness with step-by-step rigor

- Superior pass@1 rates: 85% for short proofs, 70% for extended proofs

Unlike models that shortcut derivations, DeepSeekMath-V2 emphasizes proof completeness and faithfulness, cutting error rates by 40% in ablation studies.

Inside Self-Verifiable Reasoning: Assurance Beyond Generation

What truly differentiates DeepSeekMath-V2 is its proactive self-verification:

- Verifier Module: Parses proofs into abstract syntax trees (ASTs) and checks for rule violations (e.g., commutativity, induction bases)

- MCTS for Proof Search: Monte Carlo tree search explores multiple proof branches, pruning invalid paths with verifier feedback

Example pseudocode for verified proof generation:

def generate_verified_proof(problem):

root = initialize_state(problem)

while not terminal(root):

children = expand(root, generator)

for child in children:

score = verifier.evaluate(child.proof_step)

if score < threshold:

prune(child)

best = select_highest_reward(children)

root = best

return root.proof

This mechanism enables the model to produce trustworthy outputs, even for novel or unsolved problems.

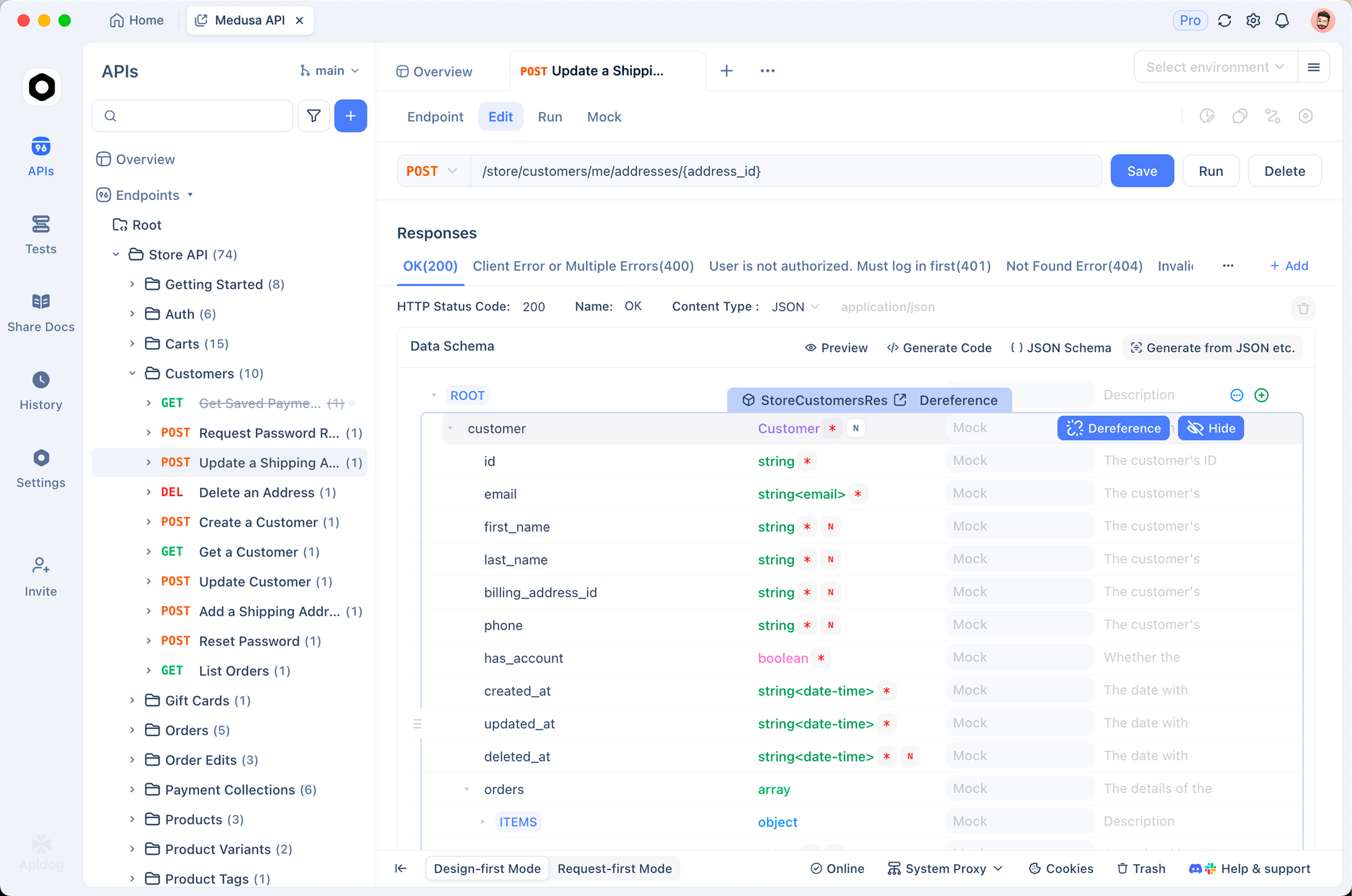

Practical Integration: Using DeepSeekMath-V2 APIs with Apidog

For API-focused teams, integrating DeepSeekMath-V2 unlocks new possibilities in education, automated grading, research, and industry optimization.

How Apidog Streamlines DeepSeekMath-V2 API Workflows

Step-by-step integration:

- Design API Schemas: Define proof generation endpoints and input/output formats

- Mock and Test Responses: Use Apidog to simulate DeepSeekMath-V2 responses containing both solutions and verification traces

- Monitor Performance: Track API latency and success/failure rates in real-time dashboards

- Batch Verification: Scale up to batch-processing with Apidog’s caching and contract testing features

For example, after deploying DeepSeekMath-V2 via FastAPI and Hugging Face, teams can instantly validate API contracts, automate regression tests, and manage schema evolutions with Apidog—saving time and reducing manual overhead.

Model Comparisons and Known Limitations

- Outperforms Llama-3.1-405B and open-source models by 15–20% in proof accuracy

- Approaches closed-model performance (like GPT-4o) on verification-heavy tasks

- Apache 2.0 License: Open and production-friendly

Limitations:

- High VRAM requirements (minimum 8x A100 GPUs for inference)

- Verification increases latency for long proofs

- Struggles with interdisciplinary problems lacking formal structure

Future updates may address these with model distillation and broader multilingual support.

Future Directions: Advancing Mathematical AI with API-First Integration

Looking ahead, DeepSeekMath-V2 is poised to support multimodal reasoning (e.g., diagram-based proofs) and deeper integration with formal theorem provers like Coq or Isabelle. Automated verifier evolution via reinforcement learning is another promising direction.

For API developers, leveraging tools like Apidog ensures that integrating and scaling such advanced models remains efficient, maintainable, and reliable—bridging the gap between research breakthroughs and real-world application.