Local AI model deployment is changing how developers and researchers approach machine learning tasks. The release of DeepSeek R1 0528 marks a significant milestone in open-source reasoning models, offering capabilities that rival proprietary solutions while keeping everything under local control. This guide explains how to run DeepSeek R1 0528 Qwen 8B (published as DeepSeek-R1-0528-Qwen3-8B) locally using Ollama and LM Studio, covering both the technical background and practical implementation.

Understanding DeepSeek R1 0528: The Evolution of Reasoning Models

DeepSeek R1 0528 represents the latest advancement in the DeepSeek reasoning model series. Unlike traditional language models, this iteration focuses specifically on complex reasoning tasks while maintaining efficiency for local deployment. The model builds upon the successful foundation of its predecessors, incorporating enhanced training methodologies and architectural improvements.

The 0528 version introduces several key enhancements over previous iterations. First, the model demonstrates improved benchmark performance across multiple evaluation metrics. Second, developers implemented significant hallucination reduction techniques, resulting in more reliable outputs. Third, the model now includes native support for function calling and JSON output, making it more versatile for practical applications.

Technical Architecture and Performance Characteristics

The DeepSeek R1 0528 Qwen 8B variant uses Qwen3 as its base model. This combination provides several advantages for local deployment. The 8-billion-parameter configuration offers a practical balance between model capability and resource requirements, making it accessible to users with moderate hardware.

Performance benchmarks indicate that DeepSeek R1 0528 achieves competitive results compared to larger proprietary models. The model excels particularly in mathematical reasoning, code generation, and logical problem-solving tasks. Additionally, the distillation process from the larger DeepSeek R1 model ensures that essential reasoning capabilities remain intact despite the reduced parameter count.

Memory requirements for the DeepSeek R1 0528 Qwen 8B model vary with the quantization level. Users typically need between 4 GB and 20 GB of RAM, depending on the quantization format chosen and the context length in use. This flexibility allows deployment across a range of hardware, from high-end workstations to modest laptops.
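The range above can be sanity-checked with simple arithmetic: the memory taken by the weights is roughly the parameter count times the bits per weight, divided by eight. The sketch below assumes a nominal 8 billion parameters and idealized bit widths. Real quantized files carry extra metadata, and the runtime adds context (KV cache) and working buffers on top, which is why practical figures run higher than these estimates.

```python
def estimate_weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


# Assumption: a nominal 8 billion parameters; the exact count differs slightly.
N_PARAMS = 8e9

for label, bits in [("FP16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label}: ~{estimate_weight_memory_gb(N_PARAMS, bits):.0f} GB for weights")
```

Under these assumptions the weights alone need about 16 GB at FP16, 8 GB at 8 bits, and 4 GB at 4 bits.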

Installing and Configuring Ollama for DeepSeek R1 0528

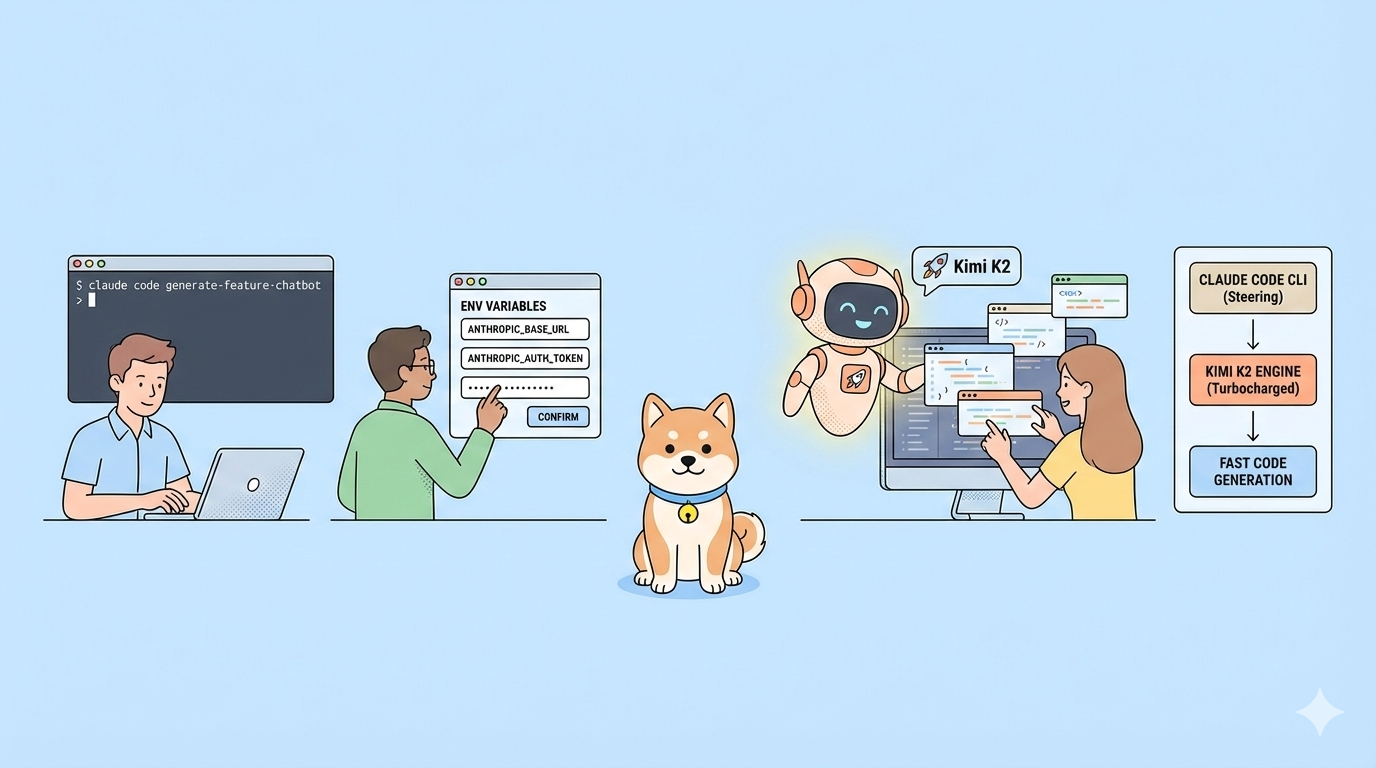

Ollama provides a streamlined approach to running large language models locally. The installation process begins with downloading the appropriate Ollama binary for your operating system. Windows users can download the installer directly, while Linux and macOS users can utilize package managers or direct downloads.

After installing Ollama, users should confirm that the ollama executable is on their PATH and that the machine has enough free memory and disk space for the model. Running a basic command such as `ollama --version` in a terminal or command prompt verifies the installation.
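The same check can be scripted, which is handy in setup scripts. This is a minimal sketch that only assumes the executable is named `ollama`; the helper name `ollama_version` is made up for illustration.

```python
import shutil
import subprocess
from typing import Optional


def ollama_version() -> Optional[str]:
    """Return the output of `ollama --version`, or None if ollama is not on PATH."""
    exe = shutil.which("ollama")
    if exe is None:
        return None
    result = subprocess.run(
        [exe, "--version"], capture_output=True, text=True, timeout=10
    )
    # Some builds print warnings to stderr, so fall back to it if stdout is empty.
    return (result.stdout or result.stderr).strip()


if __name__ == "__main__":
    version = ollama_version()
    print(version if version else "ollama not found on PATH")
```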

The next step involves downloading the DeepSeek R1 0528 model from Ollama's model library. At the time of writing, the library publishes the model under the deepseek-r1 name, with the 8b tag pointing at the 0528 Qwen3 distillation, so users run `ollama pull deepseek-r1:8b` to fetch the model files. Tags change over time, so confirm the current one on the library page. The command downloads quantized model weights optimized for local inference, typically requiring several gigabytes of storage space.

Once the download completes, users can immediately begin interacting with the model. The command `ollama run deepseek-r1:8b` (using the same tag that was pulled) launches an interactive session where users can input queries and receive responses. Additionally, Ollama provides API endpoints for programmatic access, enabling integration with custom applications.
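As a rough illustration of programmatic access, the sketch below sends one prompt to Ollama's native `/api/generate` endpoint using only the standard library. It assumes Ollama is listening on its default address (`localhost:11434`). The model tag is also an assumption; substitute whatever name `ollama list` reports on your machine.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local address
MODEL_TAG = "deepseek-r1:8b"  # assumption: replace with the tag shown by `ollama list`


def build_generate_request(prompt: str, model: str = MODEL_TAG) -> urllib.request.Request:
    """Build a non-streaming generate request without sending it."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = MODEL_TAG, timeout: float = 300.0) -> str:
    """Send the prompt to a running Ollama server and return the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]


if __name__ == "__main__":
    print(generate("Explain why the sum of two odd numbers is even."))
```

Reasoning models can take a while to answer, hence the generous timeout.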

LM Studio Setup and Configuration Process

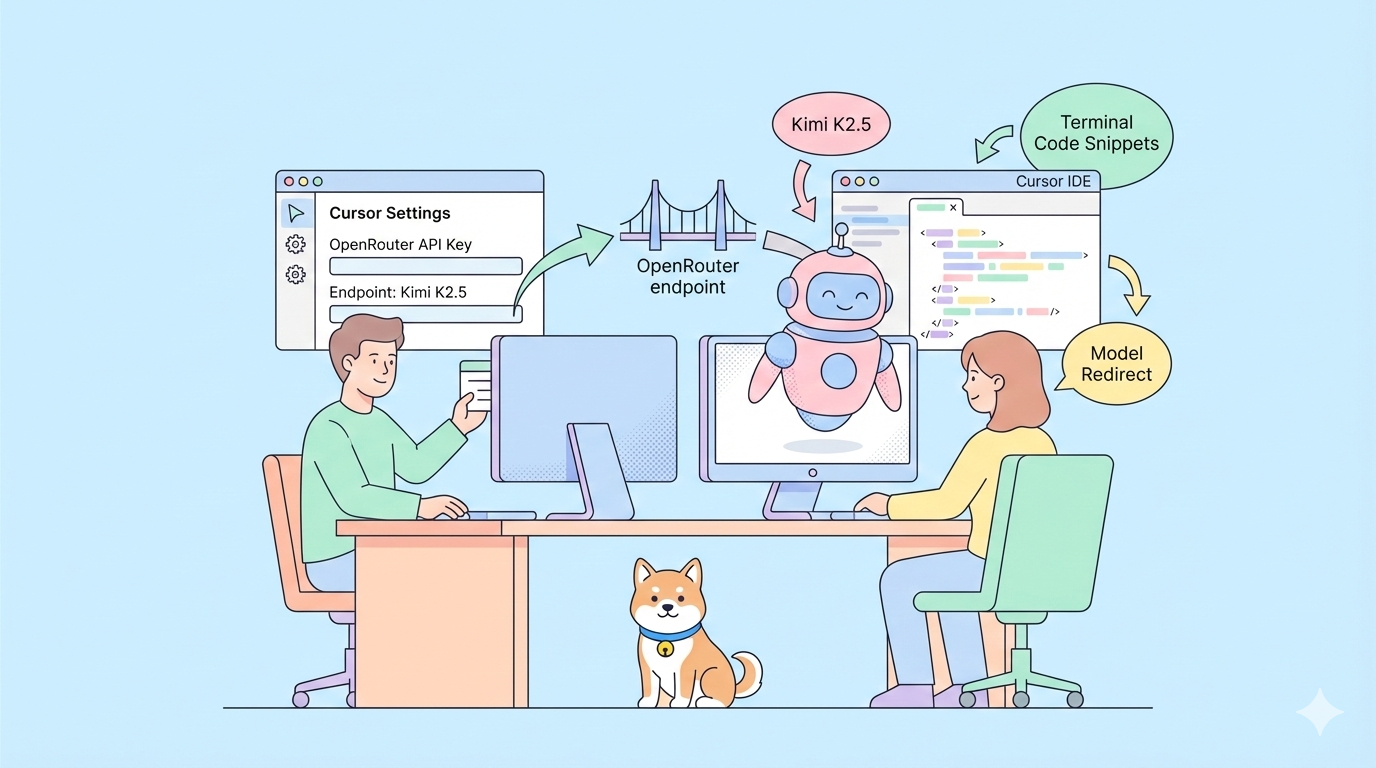

LM Studio offers a graphical user interface for managing local language models, making it particularly accessible for users who prefer visual interfaces. The installation process begins with downloading the appropriate LM Studio application for your operating system. The software supports Windows, macOS, and Linux platforms with native applications.

Setting up DeepSeek R1 0528 in LM Studio involves navigating to the model catalog and searching for "DeepSeek R1 0528" or "Deepseek-r1-0528-qwen3-8b." The catalog displays various quantization options, allowing users to select the version that best matches their hardware capabilities. Quantizations with fewer bits per weight require less memory but may slightly reduce output quality.

The download process in LM Studio provides visual progress indicators and estimated completion times. Users can monitor download progress while continuing to use other features of the application. Once the download completes, the model appears in the local model library, ready for immediate use.

LM Studio's chat interface provides an intuitive way to interact with DeepSeek R1 0528. Users can adjust parameters such as temperature, top-k sampling, and context length to tune model behavior. Moreover, the application supports conversation history management and export functionality for research and development purposes.

Optimizing Performance and Resource Management

Local deployment of DeepSeek R1 0528 requires careful attention to performance optimization and resource management. Users must weigh several factors to achieve good inference speed while keeping memory usage reasonable. Hardware specifications significantly affect model performance: CPU speed and available RAM are primary considerations, along with GPU memory when a supported GPU is present.

Quantization plays a crucial role in performance optimization. The DeepSeek R1 0528 Qwen 8B model is available at various precision levels, from FP16 down to 4-bit formats. More aggressive quantization (fewer bits per weight) reduces memory requirements and usually increases inference speed, though it may introduce minor accuracy trade-offs. Users should experiment with different quantization levels to find the right balance for their specific use cases.

CPU optimization techniques can significantly improve inference performance. Modern processors with wide vector instruction sets such as AVX-512 can accelerate language model inference. Additionally, users can adjust thread counts and CPU affinity settings to make better use of available cores. Memory allocation also matters: swap space can prevent out-of-memory crashes on systems with limited RAM, but inference that spills into swap is far slower, so choosing a smaller quantization is usually the better fix.

Temperature and sampling parameters mainly affect response quality and variability rather than raw generation speed. Lower temperature values produce more deterministic outputs but may reduce creativity, while higher values increase randomness. Similarly, top-k and top-p restrict sampling to the most likely tokens, trading diversity for consistency. Total response time depends mostly on how many tokens the model generates, which for a reasoning model includes its intermediate reasoning.
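To make the effect of temperature concrete, the sketch below applies temperature scaling to a handful of made-up token scores and prints the resulting probabilities. It illustrates the general technique (a softmax over logits divided by the temperature), not the internals of any particular runtime.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert raw scores to probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate tokens (illustrative only).
logits = [2.0, 1.0, 0.1]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature={t}: {[round(p, 3) for p in probs]}")
```

At low temperature almost all of the probability mass lands on the top-scoring token. At high temperature the distribution flattens and less likely tokens are sampled more often.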

API Integration and Development Workflows

DeepSeek R1 0528 running locally provides REST API endpoints that developers can integrate into their applications. Both Ollama and LM Studio expose compatible APIs that follow OpenAI-style formatting, simplifying integration with existing codebases. This compatibility allows developers to switch between local and cloud-based models with minimal code changes.
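The sketch below shows what this compatibility can look like in practice: one request builder that targets either backend by changing only the base URL. The ports are the defaults each tool uses for its local server (11434 for Ollama, 1234 for LM Studio). The model identifiers and the temperature default are assumptions, so use the names your own installation reports.

```python
import json
import urllib.request

# Default local addresses of the OpenAI-compatible endpoints.
BASE_URLS = {
    "ollama": "http://localhost:11434/v1",
    "lmstudio": "http://localhost:1234/v1",
}


def build_chat_request(
    backend: str, model: str, user_message: str, temperature: float = 0.7
) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the chosen backend."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "temperature": temperature,  # arbitrary illustrative default
    }
    return urllib.request.Request(
        f"{BASE_URLS[backend]}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(backend: str, model: str, user_message: str, timeout: float = 300.0) -> str:
    """Send the request to a running local server and return the reply text."""
    request = build_chat_request(backend, model, user_message)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read())
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Assumption: model names differ per tool; check each tool's model list.
    print(chat("ollama", "deepseek-r1:8b", "What is 17 * 23?"))
```

Because only `BASE_URLS` differs between backends, pointing the same code at a hosted OpenAI-compatible service is mostly a matter of adding its base URL and an authorization header.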

API authentication for local deployments typically requires minimal configuration since the endpoints run on localhost. Developers can immediately begin making HTTP requests to the local model endpoints without complex authentication setups. However, production deployments may require additional security measures such as API keys or network access controls.

Request formatting follows standard JSON structures with prompts, parameters, and model specifications. Response handling includes streaming capabilities for real-time output generation, which proves particularly valuable for interactive applications. Error handling mechanisms provide informative feedback when requests fail or exceed resource limits.
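As one illustration of streaming, Ollama's native `/api/chat` endpoint returns newline-delimited JSON: each line carries a fragment of the reply, and a final line sets `done` to true. The sketch below parses such a stream from any iterable of byte lines. The sample data is made up, and treating an `error` key as a failure is an assumption about how errors surface mid-stream.

```python
import json
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text fragments from a newline-delimited JSON chat stream."""
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue  # skip keep-alive blank lines
        chunk = json.loads(raw)
        if "error" in chunk:  # assumption: errors arrive as {"error": "..."}
            raise RuntimeError(chunk["error"])
        text = chunk.get("message", {}).get("content", "")
        if text:
            yield text
        if chunk.get("done"):
            break


# Made-up sample standing in for the lines of a live HTTP response.
sample = [
    b'{"message": {"role": "assistant", "content": "Hel"}, "done": false}\n',
    b'{"message": {"role": "assistant", "content": "lo"}, "done": false}\n',
    b'{"message": {"role": "assistant", "content": ""}, "done": true}\n',
]

print("".join(iter_stream_text(sample)))  # prints "Hello"
```

A response object returned by `urllib.request.urlopen` can be iterated line by line, so it can be passed to `iter_stream_text` directly. The OpenAI-compatible endpoints stream in a different format (server-sent events with `data:` prefixes), which would need its own parser.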

Python is a common choice for incorporating DeepSeek R1 0528 into machine learning workflows. Libraries such as requests or httpx, the standard library's urllib, or integrations offered by AI frameworks all work, because the local endpoints speak plain HTTP and JSON. Furthermore, developers can create wrapper functions to abstract model interactions and implement retry logic for robust applications.
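A retry wrapper of the kind described here might look like the following sketch. The name `with_retries` and its parameters are hypothetical. It retries on `OSError`, which covers connection failures raised by `urllib` (for example when the local server is still loading), and doubles the delay after each failed attempt.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def with_retries(
    call: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 1.0,
    retry_on: Tuple[Type[Exception], ...] = (OSError,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `call`, retrying with exponential backoff on the given exceptions."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return call()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** attempt)  # waits 1s, 2s, 4s, ...
    raise AssertionError("unreachable")
```

Usage is a matter of wrapping whatever function performs the HTTP call, for example `with_retries(lambda: send_prompt("hello"))`, where `send_prompt` stands for your own request function. Passing `sleep` as a parameter keeps the wrapper easy to test without real delays.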

Troubleshooting Common Issues and Solutions

Local deployment of DeepSeek R1 0528 may encounter various technical challenges that require systematic troubleshooting approaches. Memory-related issues represent the most common problems, typically manifesting as out-of-memory errors or system crashes. Users should monitor system resources during model loading and inference to identify bottlenecks.

Model loading failures often result from insufficient disk space or corrupted download files. Verifying download integrity through checksum validation helps identify corrupted files. Additionally, ensuring adequate free disk space prevents incomplete downloads or extraction failures.
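Both checks are easy to script. The sketch below computes a file's SHA-256 digest in chunks, so multi-gigabyte model files do not need to fit in memory, and tests whether a directory has enough free space. The function names are illustrative, and the expected checksum has to come from wherever the model file was published.

```python
import hashlib
import shutil
from pathlib import Path
from typing import Union

PathLike = Union[str, Path]


def sha256_of_file(path: PathLike, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def has_free_space(directory: PathLike, required_bytes: int) -> bool:
    """Check whether the filesystem holding `directory` has enough free space."""
    return shutil.disk_usage(directory).free >= required_bytes


def verify_download(path: PathLike, expected_sha256: str) -> bool:
    """Compare a file's digest with a published checksum (case-insensitive)."""
    return sha256_of_file(path) == expected_sha256.strip().lower()
```

Calling `has_free_space` before starting a download, with the published file size plus some headroom, avoids discovering a full disk partway through.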

Performance issues may stem from suboptimal configuration settings or hardware limitations. Users should experiment with different quantization levels, batch sizes, and threading configurations to optimize performance for their specific hardware. Monitoring CPU and memory usage during inference helps identify resource constraints.

Network connectivity problems can affect model downloads and updates. Users should verify internet connectivity and check firewall settings that might block Ollama or LM Studio communications. Additionally, corporate networks may require proxy configuration for proper model access.

Security Considerations and Best Practices

Local deployment of DeepSeek R1 0528 provides inherent security advantages compared to cloud-based solutions. Prompts and outputs stay on the user's own machine, which removes the third-party data exposure that comes with a hosted API. However, local deployments still require proper security measures to protect against other threats.

Network security becomes crucial when exposing local model APIs to external applications. Users should implement proper firewall rules, access controls, and authentication mechanisms to prevent unauthorized access. Additionally, running models on non-standard ports and implementing rate limiting helps prevent abuse.
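Rate limiting is commonly implemented with a token bucket. The sketch below is a minimal, single-threaded version of that technique that could sit in a proxy in front of the model API. The class name and parameters are illustrative, and neither Ollama nor LM Studio is assumed to provide this feature itself.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` per second."""

    def __init__(
        self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic
    ) -> None:
        self.rate = rate
        self.capacity = capacity
        self._clock = clock
        self._tokens = float(capacity)  # start with a full bucket
        self._last = clock()

    def allow(self) -> bool:
        """Consume one token if available; return False when the caller should wait."""
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Refill in proportion to elapsed time, never beyond capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False
```

A proxy would keep one bucket per client (for example, per API key) and reject a request with HTTP 429 whenever `allow()` returns False. A multi-threaded server would also need a lock around `allow()`.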

Data handling practices require attention even in local deployments. Users should implement proper logging controls to prevent sensitive information from being stored in plain text logs. Furthermore, regular security updates for the underlying operating system and model runtime environments help protect against known vulnerabilities.
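One way to keep prompts out of plain-text logs is a filter that redacts them before a record is written. The sketch below uses the standard `logging` module and assumes that requests are logged as JSON-like text containing a `"prompt"` field. The filter class and the pattern are illustrative and would need adapting to an application's actual log format.

```python
import logging
import re


class RedactPromptFilter(logging.Filter):
    """Replace the value of any "prompt" field in a log message with a marker."""

    # Matches "prompt": "<anything, including escaped quotes>"
    PATTERN = re.compile(r'("prompt"\s*:\s*")((?:[^"\\]|\\.)*)(")')

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # apply %-style args first
        record.msg = self.PATTERN.sub(r"\1[REDACTED]\3", message)
        record.args = ()  # args are already merged into the message
        return True  # keep the record, just with the prompt removed


if __name__ == "__main__":
    handler = logging.StreamHandler()
    handler.addFilter(RedactPromptFilter())
    logger = logging.getLogger("model_client")  # hypothetical logger name
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.info('request: {"model": "local", "prompt": "my private question"}')
```

Attaching the filter to the handler rather than to a single logger means it applies to every record that handler writes.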

Access control mechanisms should restrict model usage to authorized users and applications. This includes implementing user authentication, session management, and audit logging for compliance requirements. Organizations should establish clear policies regarding model usage and data handling procedures.

Conclusion

DeepSeek R1 0528 Qwen 8B represents a significant advancement in locally deployable reasoning models. The combination of sophisticated reasoning capabilities with practical resource requirements makes it accessible to a broad range of users and applications. Both Ollama and LM Studio provide excellent platforms for deployment, each offering unique advantages for different use cases.

Successful local deployment requires careful attention to hardware requirements, performance optimization, and security considerations. Users who invest time in proper configuration and optimization can achieve strong performance while maintaining complete control over their AI infrastructure. The open-source nature of DeepSeek R1 0528 also makes continued community development and support possible.