Developers across the globe rely on CodeX, OpenAI's powerful AI-driven coding assistant, to streamline their workflows and tackle complex programming tasks. However, recent discussions on platforms like X reveal a growing concern: many users perceive that CodeX delivers suboptimal results compared to its initial performance. You encounter frustrating bugs, slower responses, or incomplete code suggestions, and you question if the tool has indeed declined. This perception persists despite OpenAI's claims of continuous improvements and metrics showing growth in usage.

Engineers report instances where CodeX struggles with intricate tasks, such as applying patches or handling extended conversations, leading to assumptions of degradation. Yet, OpenAI's team actively addresses these issues through rigorous investigations, demonstrating a commitment to transparency. For instance, they recently published a detailed report outlining findings from user feedback and internal evaluations.

Understanding CodeX: Its Core Functionality and Evolution

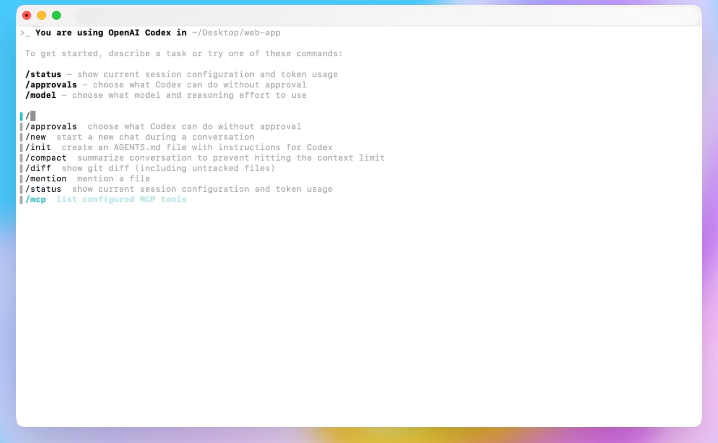

CodeX represents a significant advancement in AI-assisted programming, building on OpenAI's foundation in large language models. Engineers design CodeX to interpret natural language prompts and generate code snippets, debug issues, and even manage entire repositories. Unlike traditional IDE plugins, CodeX integrates deeply with command-line interfaces and editors, allowing seamless interactions.

OpenAI launched CodeX as an evolution of earlier models like Codex, incorporating enhancements from GPT-5 architecture. This iteration focuses on persistence, enabling the AI to retry tasks and adapt to user feedback within sessions. Consequently, developers use CodeX for diverse applications, from simple script writing to complex system integrations.

However, as adoption grows, users push CodeX boundaries. For example, initial tasks might involve basic functions, but advanced users attempt multi-file edits or API orchestrations. This shift reveals limitations, prompting questions about performance consistency.

Furthermore, CodeX employs tools like apply_patch for file modifications and compaction for context management. These features enhance usability but introduce variables that affect outcomes. When you input a prompt, CodeX processes it through a responses API, which streams tokens and parses results. Any discrepancy in this pipeline can manifest as perceived dumbing down.

User Reports: Signs That CodeX Might Be Underperforming

Users actively share experiences on social platforms, highlighting instances where CodeX fails to meet expectations. For instance, one developer on X noted that CodeX excels at initial tasks but struggles with escalating complexity, leading to assumptions of model degradation.

Specifically, reports include CodeX generating incorrect diffs during patch applications, resulting in file deletions and recreations. This behavior disrupts workflows, especially in interrupted sessions. Another common complaint involves latency; tasks that once completed swiftly now extend due to retries with prolonged timeouts.

Moreover, users observe language switches mid-response, such as shifting from English to Korean, attributed to bugs in constrained sampling. These anomalies affect less than 0.25% of sessions but amplify frustration when encountered.

In addition, compaction—a feature that summarizes conversations to manage context—receives criticism. As sessions lengthen, multiple compactions degrade accuracy, prompting OpenAI to add warnings: start new conversations for targeted interactions.

Furthermore, hardware variations contribute; older setups yield slight performance dips, impacting retention. Developers on premium plans report inconsistencies, though metrics show overall growth.

Transitioning from these reports, analyzing quantitative evidence provides clarity on whether these issues indicate true degradation or evolving usage.

Analyzing the Evidence: Metrics, Feedback, and Usage Patterns

OpenAI collects extensive data on CodeX performance, including evals across CLI versions and hardware. Evaluations confirm improvements, such as a 10% reduction in token usage post-CLI 0.45 updates, without regressions in core tasks.

However, user feedback via the /feedback command reveals trends. Engineers triage over 100 issues daily, linking them to specific hardware or features. Predictive models correlate retention with factors like OS and plan type, identifying hardware as a minor culprit.

In addition, session analysis shows increased compaction usage over time, correlating with performance drops. Evals quantify this: accuracy decreases with repeated compactions.

Moreover, web search integration (--search) and prompt changes over two months show no negative impact. Yet, authentication cache inefficiencies add 50ms latency per request, compounding user perceptions.

Furthermore, usage evolves; more developers employ MCP tools, increasing setup complexity. OpenAI recommends minimalist configurations for optimal results.

Consequently, evidence suggests perceptions stem from pushing CodeX on harder tasks rather than inherent dumbing. As one X user summarized, "codex is so good that people kept trying to use it for harder tasks and it didn’t do those as well on those and then people just assumed the model got worse."

This analysis sets the stage for OpenAI's investigative response, which addresses these points directly.

OpenAI's Response: The Transparent Investigation into CodeX Performance

OpenAI commits to transparency, promising to investigate degradation reports seriously. Tibo, a Codex team member, announced the probe on X, outlining a plan to upgrade feedback mechanisms, standardize internal usage, and run additional evals.

Engineers executed swiftly, releasing CLI 0.50 with enhanced /feedback, tying issues to clusters and hardware. They removed over 60 feature flags, simplifying the stack.

Moreover, a dedicated squad hypothesized and tested issues daily. This approach uncovered fixes, from retiring older hardware to refining compaction.

In addition, OpenAI shared a comprehensive report titled "Ghosts in the Codex Machine," detailing findings without major regressions but acknowledging combined factors.

Furthermore, they reset rate limits and refunded credits due to a billing bug, demonstrating user-centric actions.

Transitioning to specifics, the report's key findings illuminate technical nuances behind user concerns.

Key Findings from OpenAI's CodeX Degradation Report

The report concludes no single large issue exists; instead, shifts in behavior and minor problems accumulate. For hardware, evals and models pinpointed older units, leading to their removal and load balancing optimizations.

Regarding compaction, higher frequency over time degrades sessions. OpenAI improved implementations to avoid recursive summaries and added user nudges.

For apply_patch, rare failures prompt risky deletions; mitigations limit such sequences, with model enhancements planned.

Timeouts see no broad regressions—latency improves—but inefficient retries persist. Investments target better long-process handling.

A constrained sampling bug causes out-of-distribution tokens, fixed imminently.

Responses API audits reveal minor encoding changes without performance impact.

Other probes, like evals on CLI versions and prompts, confirm stability.

Moreover, evolving setups with more tools recommend simplicity.

These findings validate user experiences while substantiating no overall dumbing.

Improvements Implemented and Future Directions for CodeX

OpenAI acts on findings, rolling out fixes like compaction warnings and sampling corrections. Hardware purges and latency reductions enhance reliability.

Moreover, they form a permanent team to monitor real-world performance, recruiting talent for ongoing optimizations.

In addition, feedback socialization increases, ensuring continuous input.

Future work includes model persistence improvements and tool adaptability.

Consequently, CodeX evolves, addressing perceptions through data-driven enhancements.

However, while awaiting these, developers seek complements like Apidog.

Complementary Tools: How Apidog Enhances CodeX Workflows

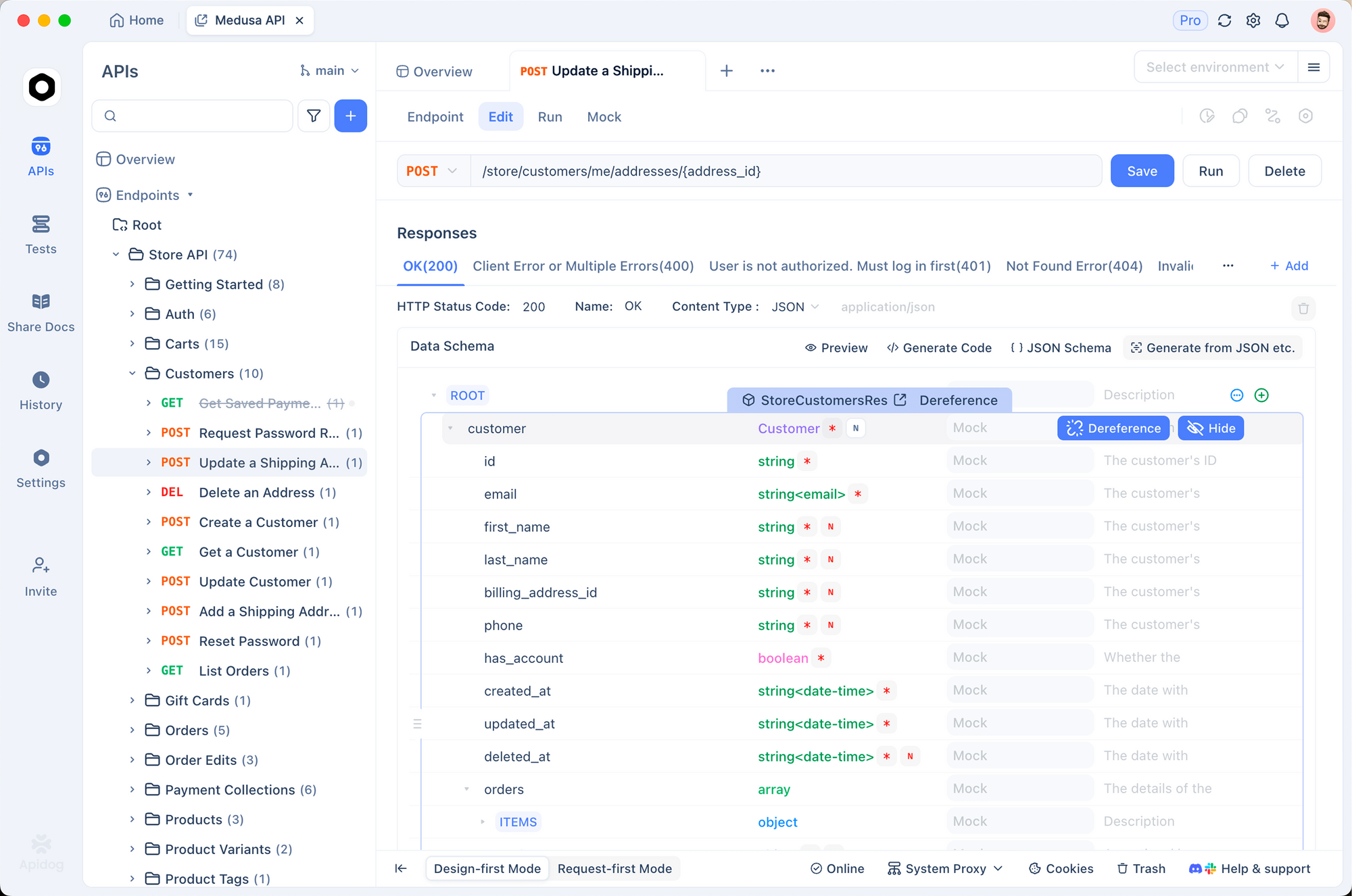

When CodeX handles API tasks, inconsistencies arise, especially in integrations. Apidog, a robust API platform, fills this gap.

Developers use Apidog to design, test, and document APIs, ensuring CodeX-generated code functions correctly.

For instance, simulate endpoints in Apidog before CodeX implementation, reducing errors.

Moreover, Apidog's free download offers collaboration features, versioning, and automation—ideal for teams facing CodeX limitations.

Transitioning smoothly, Apidog integrates with coding environments, validating AI outputs.

Thus, pairing CodeX with Apidog optimizes development, mitigating perceived degradations.

Case Studies: Real-World Examples from X Discussions

X threads provide vivid examples. One user highlighted CodeX's success breeding overambition, echoing the report's usage evolution.

Another discussed CLI speed, switching to alternatives for quick tasks, underscoring latency concerns.

Furthermore, billing resets addressed overcharges, restoring trust.

These anecdotes, combined with report data, illustrate multifaceted issues.

Best Practices for Maximizing CodeX Performance

To counter perceptions, adopt practices: keep sessions short, minimize tools, use /feedback.

Moreover, monitor updates; CLI improvements directly impact results.

In addition, experiment with prompts for precision.

Consequently, these steps enhance experiences.

Conclusion: Embracing Change in CodeX and Beyond

Users perceive CodeX as dumber due to complex tasks and minor issues, but evidence shows evolution, not decline. OpenAI's investigation and fixes affirm commitment.

Moreover, integrating Apidog ensures resilient workflows.

Ultimately, adapt strategies, leverage tools, and contribute feedback—small adjustments yield significant gains in productivity.