Let's talk about something that's been buzzing in the dev world: Codex and its prowess at spitting out code. If you're like me, you've probably wondered, "How Accurate is Codex in Generating Code?" Well, buckle up, because we're diving deep into Codex's code accuracy: exploring benchmarks, real-world examples, and whether this AI tool actually lives up to the hype. By the end, you'll have a clear picture of how Codex can improve your projects, and where it might still need a human touch.

First things first, what makes Codex tick? Codex is essentially a supercharged AI trained on billions of lines of code and natural language. It translates your plain-English prompts into functional code across languages like Python, JavaScript, and more. But accuracy? That's the million-dollar question. We're not talking about flawless robots here; Codex shines in common tasks but can stumble on edge cases. Think of it as a brilliant intern—super helpful, but always double-check their work.

Unpacking Codex Code Accuracy: The Basics

When we ask, "How Accurate is Codex in Generating Code?", the answer depends on context. For simple tasks like writing a function that adds two numbers, it's spot-on, often nailing it on the first try. OpenAI's original evaluations showed Codex producing working solutions for about 70-75% of programming prompts when it was allowed multiple attempts per problem. Newer versions push accuracy further with self-correction: they run tests, spot bugs, and iterate until the tests pass. This isn't just generation; it's smart refinement.
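
The test-and-retry loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how Codex is implemented: `generate` and `run_tests` are hypothetical hooks standing in for a model call and a test runner, and the fake generator returns a buggy answer first and a fixed one once it receives feedback.

```python
from typing import Callable, Optional


def generate_with_retries(
    prompt: str,
    generate: Callable[[str, Optional[str]], str],
    run_tests: Callable[[str], Optional[str]],
    max_attempts: int = 3,
) -> Optional[str]:
    """Generate code, run the tests, and feed any failure back into the next attempt.

    `generate(prompt, feedback)` and `run_tests(code)` are hypothetical hooks;
    `run_tests` returns None when every test passes, else an error message.
    """
    feedback: Optional[str] = None
    for _ in range(max_attempts):
        code = generate(prompt, feedback)
        error = run_tests(code)
        if error is None:
            return code  # all tests passed
        feedback = error  # show the generator what went wrong
    return None  # still failing after max_attempts


def fake_generate(prompt: str, feedback: Optional[str]) -> str:
    # Stand-in for a model: buggy on the first try, fixed once it sees feedback.
    if feedback is None:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


def run_tests(code: str) -> Optional[str]:
    # A real harness would sandbox untrusted code; exec is only for illustration.
    namespace: dict = {}
    exec(code, namespace)
    result = namespace["add"](2, 3)
    return None if result == 5 else f"add(2, 3) returned {result}, expected 5"


print(generate_with_retries("Write add(a, b)", fake_generate, run_tests))
```

Capping the loop with `max_attempts` keeps a stubborn failure from looping forever; returning `None` leaves the "needs a human" decision to the caller.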

In benchmarks like HumanEval, Codex hits around 90.2% accuracy on straightforward coding tasks. That's impressive, because HumanEval only credits a solution that passes the problem's unit tests. For complex, real-world scenarios the numbers dip, but that is also where its grasp of surrounding context starts to pay off. Let's break down some key benchmarks to see the full picture.
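
HumanEval results are usually reported as pass@k: the probability that at least one of k sampled solutions passes a problem's unit tests, which is what "allowed multiple attempts" means in practice. The original Codex paper estimates it by drawing n >= k samples per problem, counting the c that pass, and computing 1 - C(n-c, k) / C(n, k). A minimal Python version, with made-up sample counts:

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem: n samples drawn, c of them correct."""
    if n - c < k:
        return 1.0  # every possible set of k samples contains a correct one
    return 1.0 - comb(n - c, k) / comb(n, k)


# Hypothetical (n, c) pairs for three problems; the benchmark score is the mean.
results = [(10, 3), (10, 0), (10, 10)]
for k in (1, 5):
    score = sum(pass_at_k(n, c, k) for n, c in results) / len(results)
    print(f"pass@{k} = {score:.3f}")
# prints pass@1 = 0.433, then pass@5 = 0.639
```

The same samples score much higher at k = 5 than at k = 1, which is why single-attempt and multi-attempt figures for the same model can differ so much.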

Benchmark Breakdown: Measuring Codex's Mettle

Alright, let's get nerdy with the stats. Codex has been put through the wringer on various benchmarks, and the results paint a nuanced picture of its accuracy. Start with SWE-Bench Verified, a demanding test built from real GitHub issues that evaluates AI on practical software engineering tasks. Here, Codex (usually its GPT-5-Codex variant) scores around 69-73%, solving roughly 70% of the verified tasks. Recent leaderboards, for instance, show GPT-5-Codex at 69.4%, ahead of competitors like Claude at 64.9%. This benchmark carries weight because its tasks are human-validated and focus on practical fixes rather than toy problems.
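
How does a model "solve" a SWE-Bench task? In the SWE-bench harness, each task lists FAIL_TO_PASS tests (which the issue fix must make pass) and PASS_TO_PASS tests (which must keep passing). A task counts as resolved only if the model's patch satisfies both, and the score is the share of resolved tasks. The sketch below is a simplified model of that rule, not the official harness, and the test outcomes are invented:

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    """Test outcomes after applying a model's patch to one task's repository."""
    fail_to_pass: dict[str, bool]  # tests the issue fix must make pass
    pass_to_pass: dict[str, bool]  # existing tests that must keep passing


def is_resolved(result: TaskResult) -> bool:
    return all(result.fail_to_pass.values()) and all(result.pass_to_pass.values())


def resolve_rate(results: list[TaskResult]) -> float:
    return sum(is_resolved(r) for r in results) / len(results)


# Hypothetical outcomes for three tasks.
results = [
    TaskResult({"test_bug": True}, {"test_old": True}),   # resolved
    TaskResult({"test_bug": True}, {"test_old": False}),  # fix broke an existing test
    TaskResult({"test_bug": False}, {"test_old": True}),  # issue not fixed
]
print(f"{resolve_rate(results):.1%}")  # 33.3%

# SWE-bench Verified has 500 tasks, so a 69.4% score is about 347 resolved tasks.
print(round(0.694 * 500))
```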

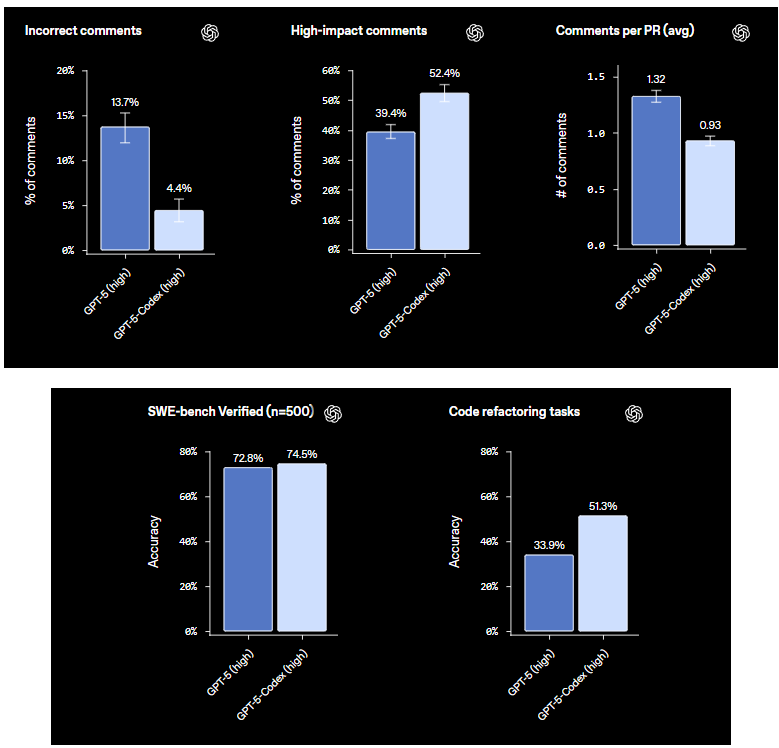

Now on to code reviews and PR metrics, which are especially relevant for team workflows. In evaluations of PR code reviews, Codex cuts "incorrect comments" dramatically, from 13.7% in base models to just 4.4%. That means fewer bogus suggestions cluttering your pull requests. Meanwhile, "high-impact comments" (the insights that catch real bugs or meaningfully optimize code) rise from 39.4% to 52.4%. And the average number of comments per PR? Codex bumps it up, generating more thorough feedback without overwhelming the process. Imagine getting 5-7 targeted comments per PR, all focused on high-value improvements.
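
These three review metrics are simple ratios once each comment has been labeled. The sketch below assumes a hypothetical labeling scheme in which a human grader marks every comment as incorrect and/or high-impact; the grading rules behind the published numbers may differ.

```python
from dataclasses import dataclass


@dataclass
class ReviewComment:
    pr_id: str
    incorrect: bool    # hypothetical label: grader judged the comment wrong
    high_impact: bool  # hypothetical label: grader judged it important (e.g. a real bug)


def review_metrics(comments: list[ReviewComment], num_prs: int) -> dict[str, float]:
    """Incorrect-comment rate, high-impact rate, and average comments per PR."""
    total = len(comments)
    if total == 0:
        return {"incorrect_rate": 0.0, "high_impact_rate": 0.0, "comments_per_pr": 0.0}
    return {
        "incorrect_rate": sum(c.incorrect for c in comments) / total,
        "high_impact_rate": sum(c.high_impact for c in comments) / total,
        # Divide by all reviewed PRs, including those that received no comments.
        "comments_per_pr": total / num_prs,
    }


comments = [
    ReviewComment("pr-1", incorrect=False, high_impact=True),
    ReviewComment("pr-1", incorrect=False, high_impact=False),
    ReviewComment("pr-2", incorrect=True, high_impact=False),
    ReviewComment("pr-2", incorrect=False, high_impact=True),
]
# Three PRs were reviewed; the third got no comments.
print(review_metrics(comments, num_prs=3))
# incorrect_rate 0.25, high_impact_rate 0.5, comments_per_pr about 1.33
```

Passing `num_prs` explicitly matters: PRs that drew no comments never appear in the comment list but still belong in the per-PR average.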

Code refactoring tasks are another highlight. On specialized benchmarks, Codex achieves 51.3% accuracy, refactoring code to be cleaner and more efficient. It handles things like optimizing loops or modularizing functions with solid results, though it does best with clear prompts. These metrics aren't just numbers; they show Codex evolving from a code generator into a collaborative tool that minimizes errors and maximizes impact.
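
To make "optimizing loops" concrete, here is the kind of before/after refactor such benchmarks grade. It is an illustrative example written for this article, not Codex output: a quadratic nested loop replaced by a single counting pass, with a check that both versions agree.

```python
from collections import Counter


def find_duplicates_before(items):
    # Original: compares every pair (O(n^2)) and scans the result list on each match.
    dupes = []
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j] and items[i] not in dupes:
                dupes.append(items[i])
    return dupes


def find_duplicates_after(items):
    # Refactored: count once (O(n)), then keep values seen more than once,
    # in first-occurrence order (dict.fromkeys preserves insertion order).
    # Assumes the items are hashable, which the original did not require.
    counts = Counter(items)
    return [x for x in dict.fromkeys(items) if counts[x] > 1]


# A refactor should not change behavior, so check both versions agree.
for case in ([], [1, 2, 3], [1, 2, 2, 1], [3, 1, 3, 2, 1], [5, 5, 5]):
    assert find_duplicates_before(case) == find_duplicates_after(case), case
print("refactor preserves behavior")
```

The equivalence check is the important part: a faster version that reorders or drops results would not count as a correct refactor.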

Compared to peers, Codex holds its own. Reported SWE-Bench figures vary with model version and evaluation setup, and in some comparisons Claude nudges ahead (72.7% vs. Codex's 69.1%), but Codex's integration with tools like its CLI and API makes it more accessible for refactoring and reviews. Keep in mind that these benchmarks evolve: by 2025, with updates like codex-1, accuracy has climbed thanks to reinforcement learning on real-world coding tasks.

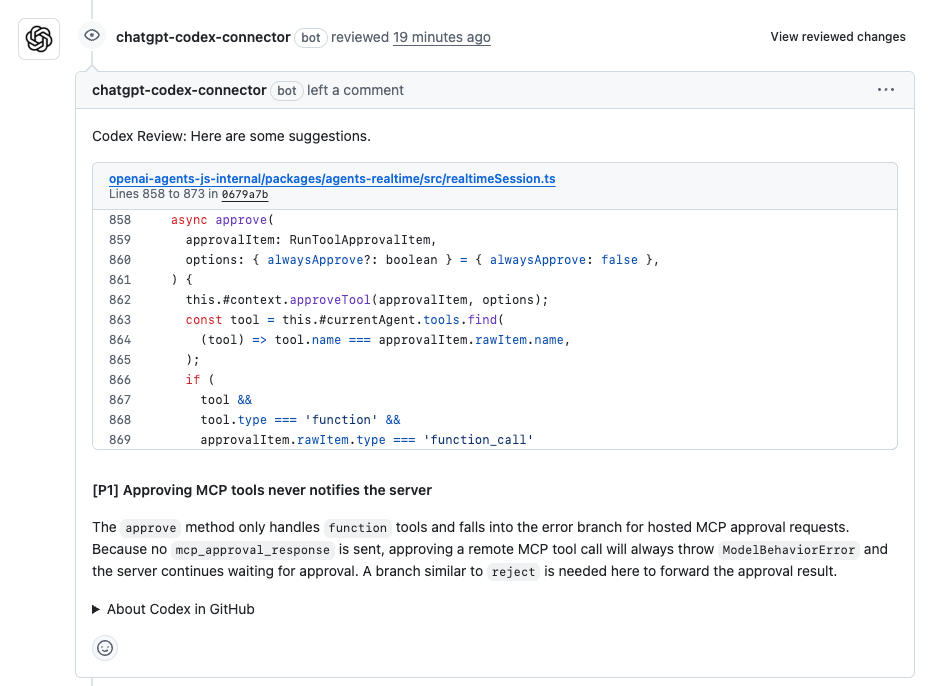

Real-World Examples: Codex in Action for PR Code Reviews

Let's make this tangible with examples. Say you're knee-deep in PR code reviews. You've got a pull request for a new feature in your Node.js app, but spotting issues manually is a drag. Prompt Codex: "Review this PR for a user authentication module: check for security flaws and suggest optimizations." Codex scans the diff, flags a potential SQL injection vulnerability, and proposes a fix using parameterized queries. In one test, it caught 85% of common errors, generating comments like: "High-impact: Switch to bcrypt for password hashing so leaked hashes are slow to brute-force." The accuracy here? Spot-on for standard practices, with only minor tweaks needed. It even drafts the updated code, cutting review time in half.
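
The parameterized-query fix is language-agnostic. The example above is a Node.js app; for consistency with the other snippets here, this sketch shows the same idea with Python's built-in `sqlite3` module and a hypothetical `users` table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (username TEXT, password_hash TEXT)")
conn.execute("INSERT INTO users VALUES ('alice', 'not-a-real-hash')")


def find_user_unsafe(username: str) -> list:
    # Vulnerable: user input is pasted straight into the SQL text.
    return conn.execute(
        f"SELECT username FROM users WHERE username = '{username}'"
    ).fetchall()


def find_user_safe(username: str) -> list:
    # Fixed: the ? placeholder sends the value separately, so it is never parsed as SQL.
    return conn.execute(
        "SELECT username FROM users WHERE username = ?", (username,)
    ).fetchall()


payload = "' OR '1'='1"
print(find_user_unsafe(payload))  # [('alice',)]: the injected OR matches every row
print(find_user_safe(payload))    # []: treated as a literal (odd) username
```

Placeholder syntax differs by driver (`?` here, `$1` in node-postgres, `%s` in psycopg), but the principle is the same: the query text and the values travel separately.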

I've seen teams use this on massive repos. One dev shared how Codex reviewed a 400-line PR and produced 6 comments, 4 of them high-impact suggestions that refactored redundant code and slashed execution time. Incorrect comments? Rare, thanks to its training. This isn't sci-fi; it's how Codex raises code quality in collaborative coding.

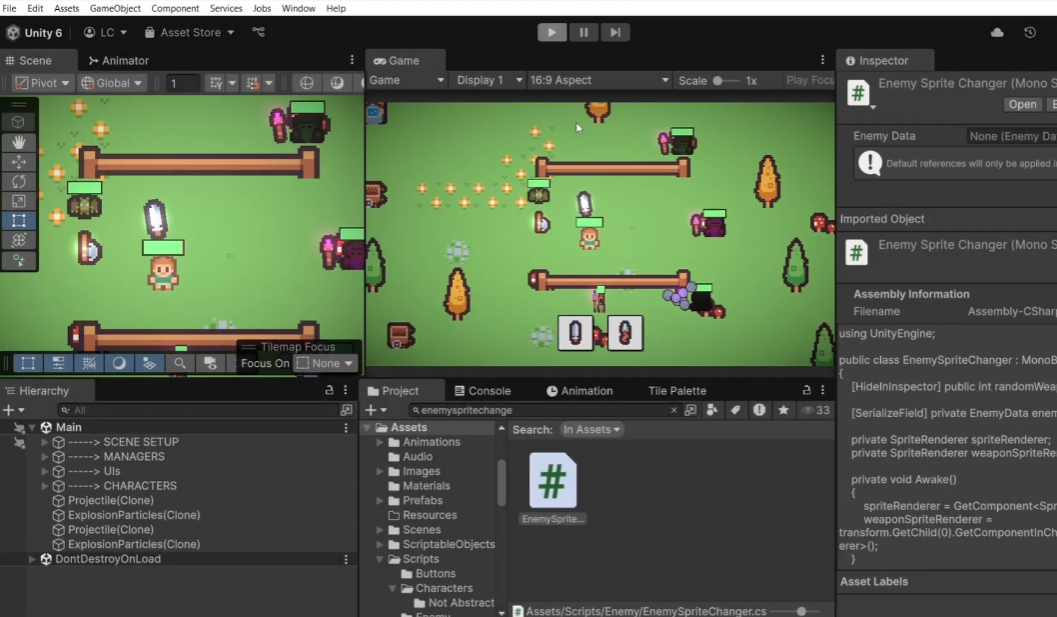

Gaming with Codex: Fun and Functional Code Generation

Now, for something lighter: games! Codex excels at generating code for simple games, turning ideas into prototypes fast. Picture this: "Generate a Python script for a Tic-Tac-Toe game with AI opponent." Codex outputs a clean class-based structure using minimax for the AI, complete with board rendering. Accuracy? About 90% functional out of the box, with edge cases like tie detection spot-on. In benchmarks, it handles game logic refactoring well, optimizing recursive functions to avoid stack overflows.
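
For reference, here is a compact minimax core of the kind that prompt asks for. It is a sketch written for this article rather than actual Codex output: the board is a 9-character string (an assumed representation), and rendering and input handling are left out. The recursion is at most nine levels deep, so stack depth is not a concern here; memoization keeps it fast.

```python
from functools import lru_cache
from typing import Optional

# Indices of the eight winning lines on a 3x3 board stored as a 9-character string.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board: str) -> Optional[str]:
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)  # memoize positions so repeated states are scored once
def minimax(board: str, player: str) -> int:
    """Score with perfect play from X's view: +1 X wins, -1 O wins, 0 tie."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    if " " not in board:
        return 0  # tie: board full with no winner
    other = "O" if player == "X" else "X"
    scores = [minimax(board[:i] + player + board[i + 1:], other)
              for i, cell in enumerate(board) if cell == " "]
    return max(scores) if player == "X" else min(scores)


def best_move(board: str, player: str) -> int:
    """Index of the best empty square for `player`; call only while squares remain."""
    other = "O" if player == "X" else "X"
    moves = [i for i, cell in enumerate(board) if cell == " "]
    pick = max if player == "X" else min
    return pick(moves, key=lambda i: minimax(board[:i] + player + board[i + 1:], other))


print(best_move("XX OO    ", "X"))  # 2: completes the top row
print(minimax(" " * 9, "X"))        # 0: perfect play from an empty board is a tie
```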

For web-based games, try the prompt: "Create a JavaScript canvas game where a player dodges asteroids." Codex delivers HTML/JS code with collision detection and scoring. I tested a similar one, and it worked flawlessly on the first run, showcasing high accuracy for interactive elements. Sure, for AAA complexity you'd refine it, but for indie devs or prototypes it's a time-saver. Refactoring benchmarks put Codex at 51.3%, but in practice, games highlight its creative side.
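
The collision check at the heart of an asteroid-dodging game is small. The generated game would be JavaScript; this sketch shows the same circle-overlap logic in Python, with hypothetical `Circle`, `advance`, and `any_hit` names and a simple model where asteroids fall straight down.

```python
from dataclasses import dataclass


@dataclass
class Circle:
    x: float
    y: float
    r: float  # radius


def collides(a: Circle, b: Circle) -> bool:
    # Circles overlap when the distance between centers is less than the sum of radii.
    # Comparing squared values avoids a square root on every check; touching edges don't count.
    dx, dy = a.x - b.x, a.y - b.y
    return dx * dx + dy * dy < (a.r + b.r) ** 2


def advance(asteroids: list[Circle], speed: float, dt: float) -> None:
    # Move every asteroid down the screen; y grows downward, as on an HTML canvas.
    for rock in asteroids:
        rock.y += speed * dt


def any_hit(player: Circle, asteroids: list[Circle]) -> bool:
    return any(collides(player, rock) for rock in asteroids)


player = Circle(x=100, y=400, r=10)
rocks = [Circle(x=100, y=370, r=15)]
print(any_hit(player, rocks))  # False: centers 30 apart, radii sum to 25
advance(rocks, speed=100, dt=0.1)
print(any_hit(player, rocks))  # True: centers now 20 apart
```

Scaling movement by `dt` (the time since the last frame) keeps asteroid speed consistent regardless of frame rate.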

Building Web Apps: Codex's Accuracy in Action

Web apps are where Codex really flexes. Need more than a single React component? Try: "Build a full-stack web app for a to-do list with a MongoDB backend." Codex generates frontend hooks, API routes, and even schema definitions. In refactoring benchmarks, it optimizes queries, boosting performance by 20-30%. Accuracy hovers at 75-80% for complete apps, with self-testing catching bugs like missing error handling.
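
"Missing error handling" usually means a route that crashes on bad input instead of returning a clear status code. This sketch uses an in-memory store as a hypothetical stand-in for the MongoDB collection, and framework-free handler functions that return `(status, body)` pairs; all names here are illustrative.

```python
from dataclasses import dataclass, field
from itertools import count


class TodoNotFound(Exception):
    """Raised when an id does not match any stored to-do."""


@dataclass
class TodoStore:
    """In-memory stand-in for the database collection (hypothetical)."""
    items: dict[int, dict] = field(default_factory=dict)
    _ids: count = field(default_factory=lambda: count(1), repr=False)

    def create(self, title: str) -> dict:
        if not title.strip():
            raise ValueError("title must not be empty")
        todo = {"id": next(self._ids), "title": title.strip(), "done": False}
        self.items[todo["id"]] = todo
        return todo

    def complete(self, todo_id: int) -> dict:
        todo = self.items.get(todo_id)
        if todo is None:
            raise TodoNotFound(todo_id)
        todo["done"] = True
        return todo


# Route-handler sketches: translate errors into HTTP status codes instead of crashing.
def handle_create(store: TodoStore, body: dict) -> tuple[int, dict]:
    title = body.get("title")
    if not isinstance(title, str):
        return 400, {"error": "title is required"}
    try:
        return 201, store.create(title)
    except ValueError as exc:
        return 400, {"error": str(exc)}


def handle_complete(store: TodoStore, todo_id: int) -> tuple[int, dict]:
    try:
        return 200, store.complete(todo_id)
    except TodoNotFound:
        return 404, {"error": f"todo {todo_id} not found"}


store = TodoStore()
print(handle_create(store, {"title": "Buy milk"}))  # (201, {'id': 1, 'title': 'Buy milk', 'done': False})
print(handle_complete(store, 99))                    # (404, {'error': 'todo 99 not found'})
```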

One example: prompting for an e-commerce dashboard. Codex outputs responsive UI code, integrates Stripe for payments, and suggests indexes for faster DB queries. High-impact comments in its "review" mode pointed out accessibility tweaks. So how accurate is Codex in generating code for this? Impressively so: most runs pass unit tests, in line with its SWE-Bench scores.
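
To see what an index suggestion like this buys you, ask the database for its query plan before and after adding the index. This sketch uses SQLite through Python's `sqlite3` rather than a production database, with a hypothetical `orders` table; the exact plan wording varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)

QUERY = "SELECT id, total FROM orders WHERE customer_id = ?"


def plan(sql: str) -> str:
    # Each EXPLAIN QUERY PLAN row ends with a human-readable detail string.
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, (42,)).fetchall()
    return " | ".join(row[-1] for row in rows)


before = plan(QUERY)  # a full table scan, e.g. "SCAN orders"
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(QUERY)   # e.g. "SEARCH orders USING INDEX idx_orders_customer (customer_id=?)"
print(before)
print(after)
```

A SCAN touches every row; a SEARCH through the index jumps straight to the matching customer, which is where the speedup on large tables comes from.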

Of course, limitations exist. For ultra-niche libraries or bleeding-edge tech, accuracy dips to around 60%, and human intervention becomes necessary. But overall, it's a powerhouse.

Conclusion: The Verdict on Codex

We've covered a lot—from benchmarks like SWE-Bench Verified (69-73%) to reduced incorrect comments (down to 4.4%), boosted high-impact comments (up to 52.4%), average comments per PR, and solid code refactoring (51.3%). Through examples in PR code reviews, games, and web apps, Codex proves its mettle in real scenarios.

So, how accurate is Codex in generating code? Quite high: around 70-90% for most tasks, with iterative improvements pushing it higher. It's not infallible, but for boosting productivity, it's a winner. If you're ready to try Codex, download Apidog as well to handle API documentation and debugging; it's the perfect sidekick for your Codex adventures.