Developers increasingly rely on advanced AI models to enhance coding efficiency, automate complex workflows, and build intelligent applications. Anthropic's Claude Opus 4.5 emerges as a leading solution in this space, offering superior performance in software engineering, agentic tasks, and multi-step reasoning. This model sets new benchmarks in real-world coding and computer use, making it essential for technical teams tackling production-level projects.

This guide equips you with the technical knowledge to harness Claude Opus 4.5 effectively. We cover setup, core API mechanics, advanced configurations, and optimization strategies. By following these steps, you position your applications to leverage the model's 200K token context window, enhanced tool use, and efficient token management. Consequently, you achieve faster development cycles and more reliable AI-driven features.

What is Claude Opus 4.5?

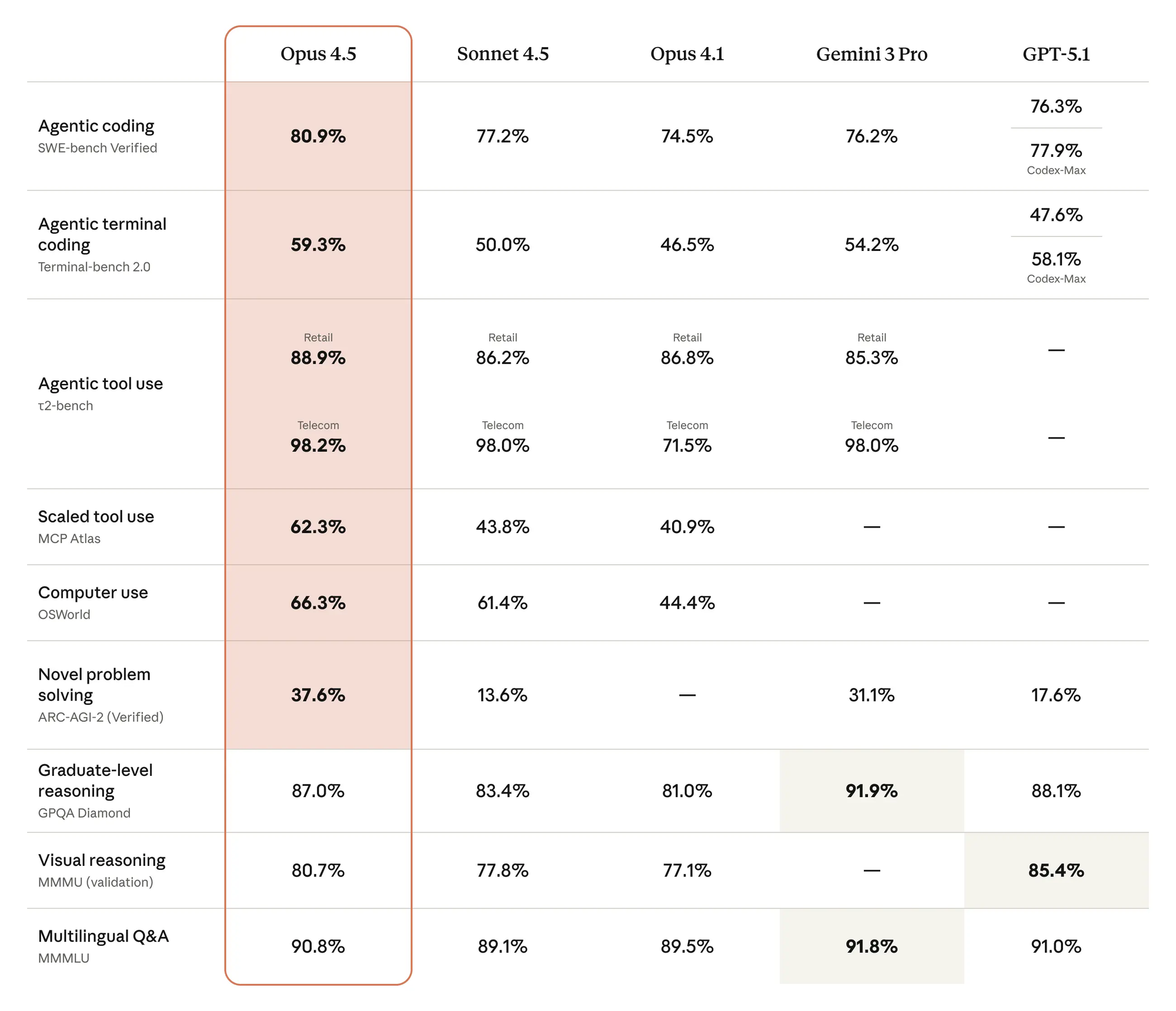

Anthropic engineers designed Claude Opus 4.5 as their flagship model, prioritizing depth in reasoning, coding precision, and agentic autonomy. This iteration builds on previous versions by incorporating breakthroughs in vision processing, mathematical accuracy, and ambiguity resolution. For instance, the model excels at handling tradeoffs in complex scenarios, such as modifying flight itineraries in enterprise simulations or debugging sprawling codebases without explicit guidance.

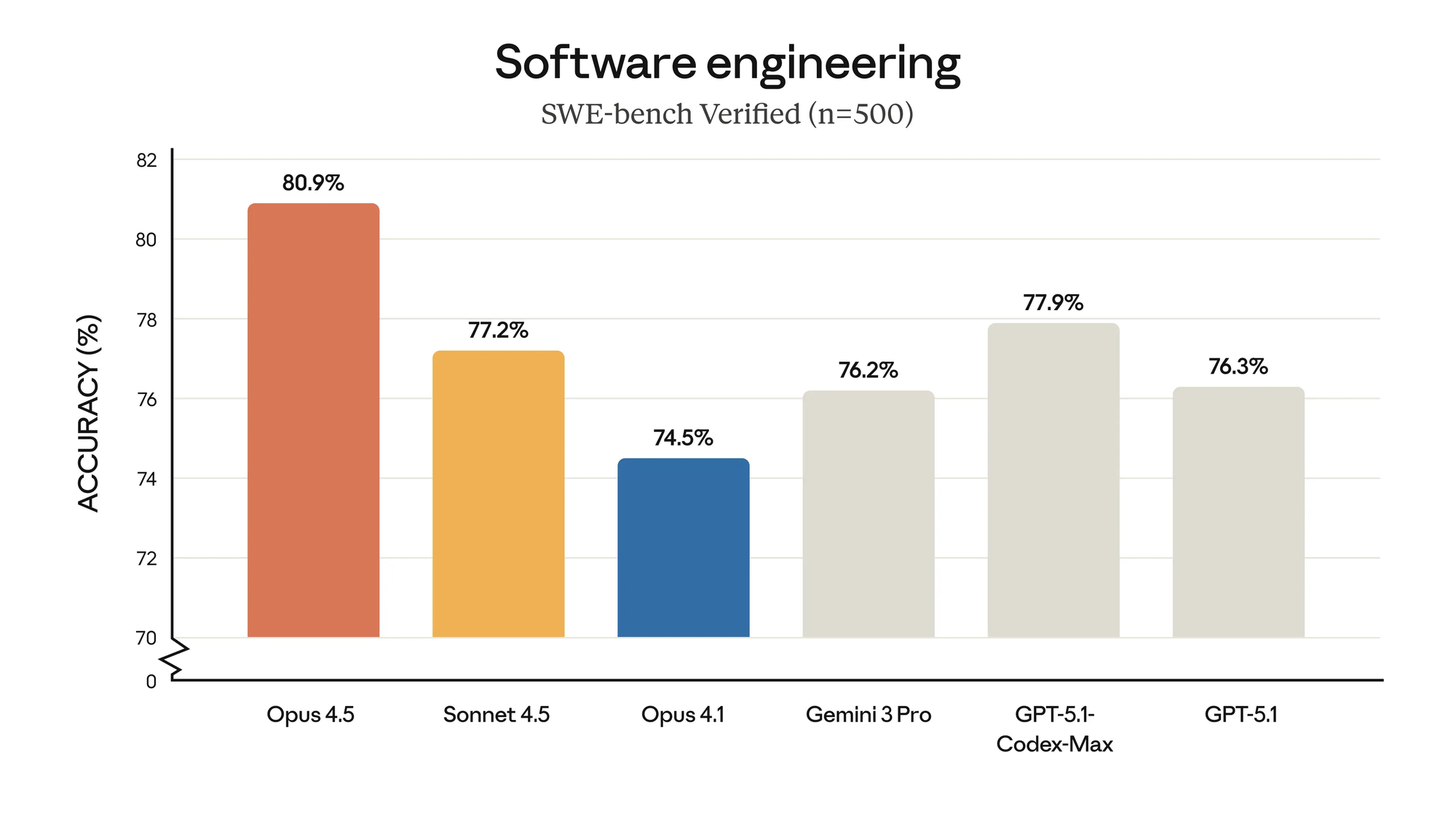

Key capabilities include state-of-the-art results on SWE-bench Verified, where it outperforms predecessors by up to 4.3 percentage points while using 48% fewer output tokens at maximum effort.

Developers access these strengths through the Claude API, which supports a 200K token context window—ideal for long-form analysis or multi-file code reviews. Moreover, the model integrates seamlessly with cloud platforms like Amazon Bedrock, Google Vertex AI, and Microsoft Foundry, enabling scalable deployments.

Pricing reflects its premium positioning: $5 per million input tokens and $25 per million output tokens, with savings via prompt caching (up to 90%) and batch processing (50%). However, these costs underscore the need for precise usage patterns, which we address later. In essence, Claude Opus 4.5 empowers developers to construct agents that manage end-to-end projects, from initial planning to execution, with minimal human oversight.

Setting Up Your Development Environment

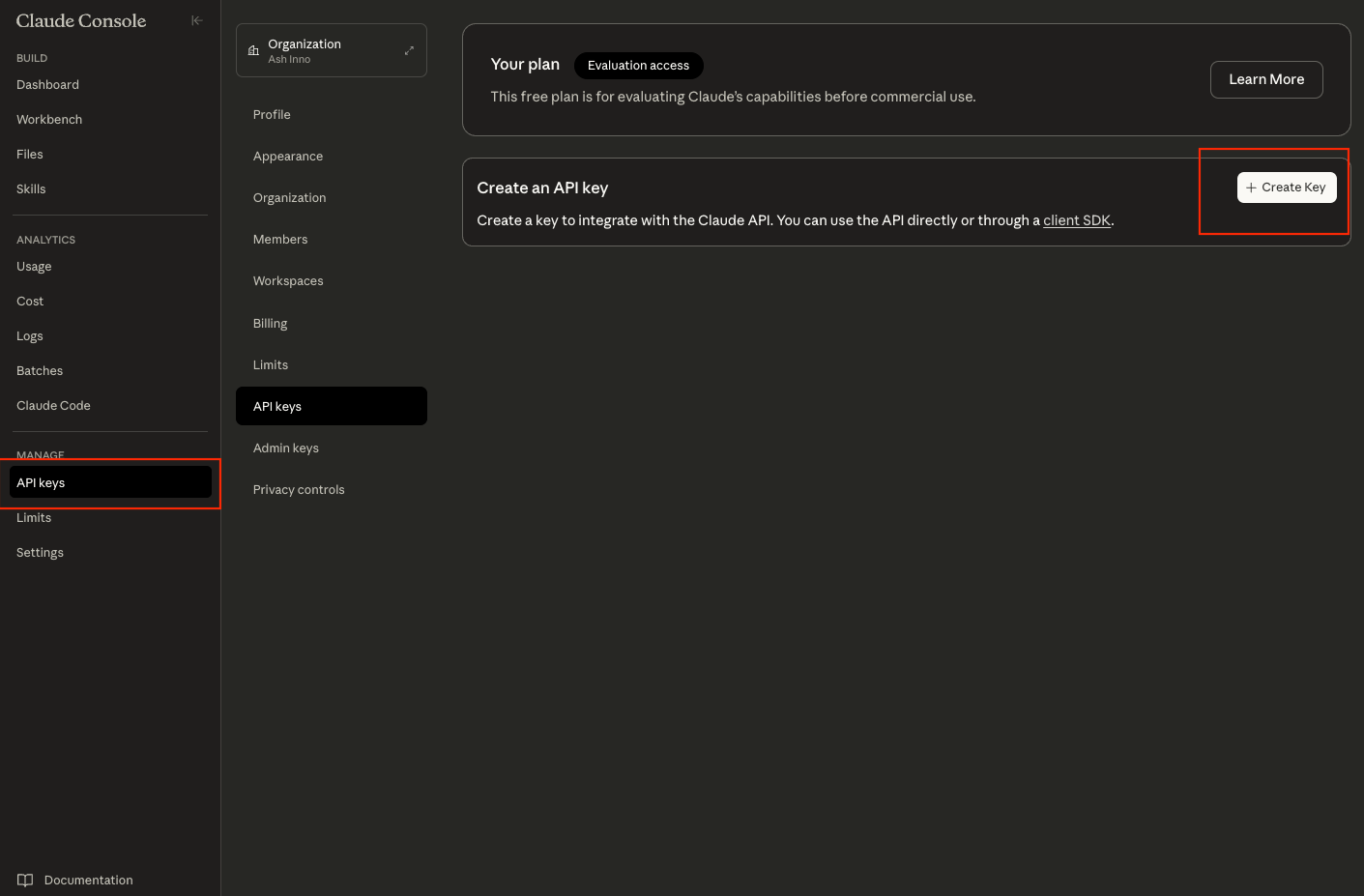

You begin by preparing a robust environment to interact with the Claude API. First, obtain an API key from the Anthropic Console at console.anthropic.com. Sign up or log in, navigate to the "API Keys" section, and generate a new key. Store this securely—use environment variables like export ANTHROPIC_API_KEY='your-key-here' in your terminal or .env files in your project root.

Next, install the official Anthropic SDK, which abstracts HTTP complexities and handles retries. For Python, run pip install anthropic. This library supports synchronous and asynchronous calls, essential for high-throughput applications. Similarly, Node.js developers execute npm install @anthropic-ai/sdk. Verify installation by importing the module: in Python, import anthropic; client = anthropic.Anthropic(api_key=os.getenv('ANTHROPIC_API_KEY')).

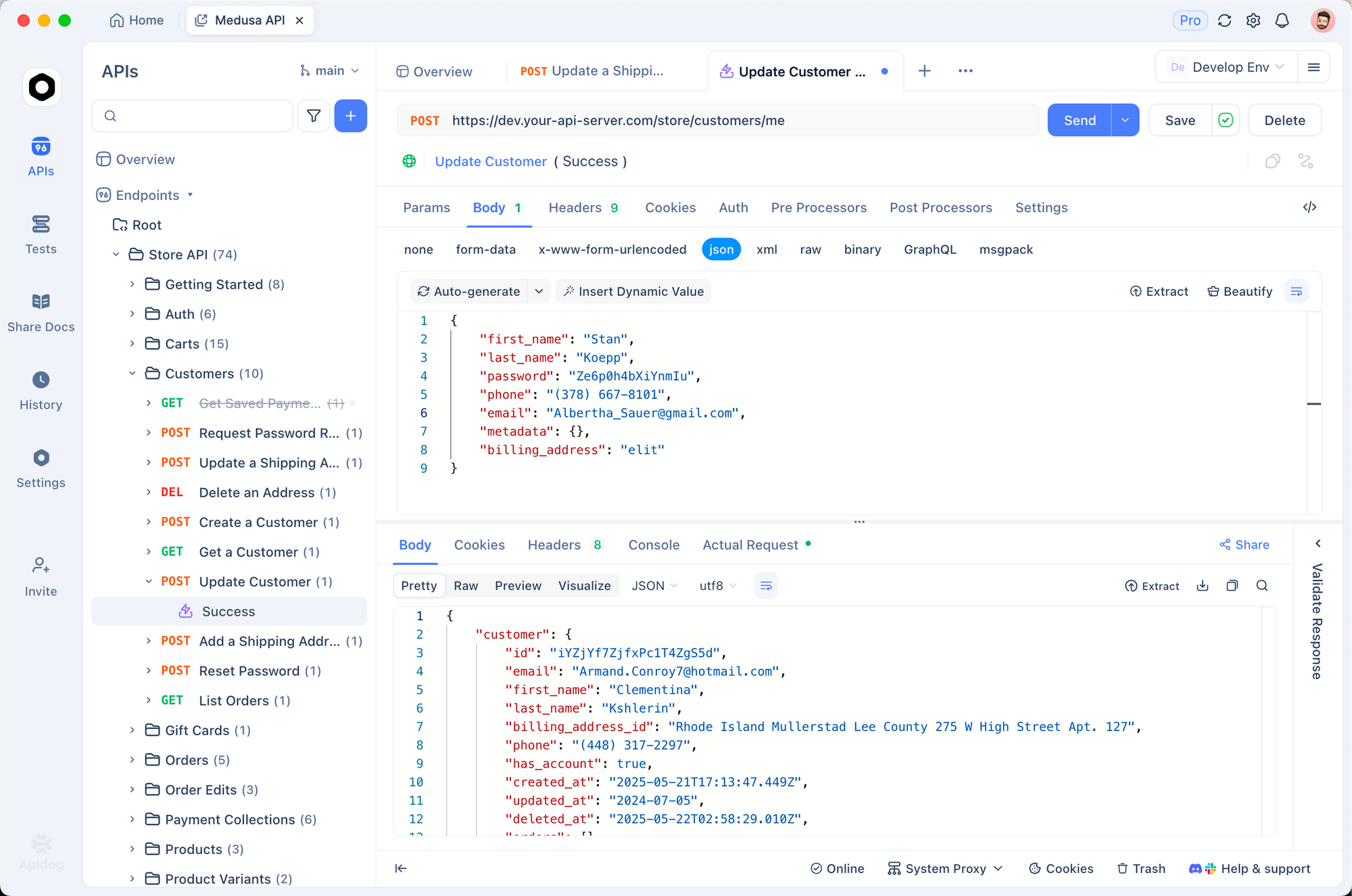

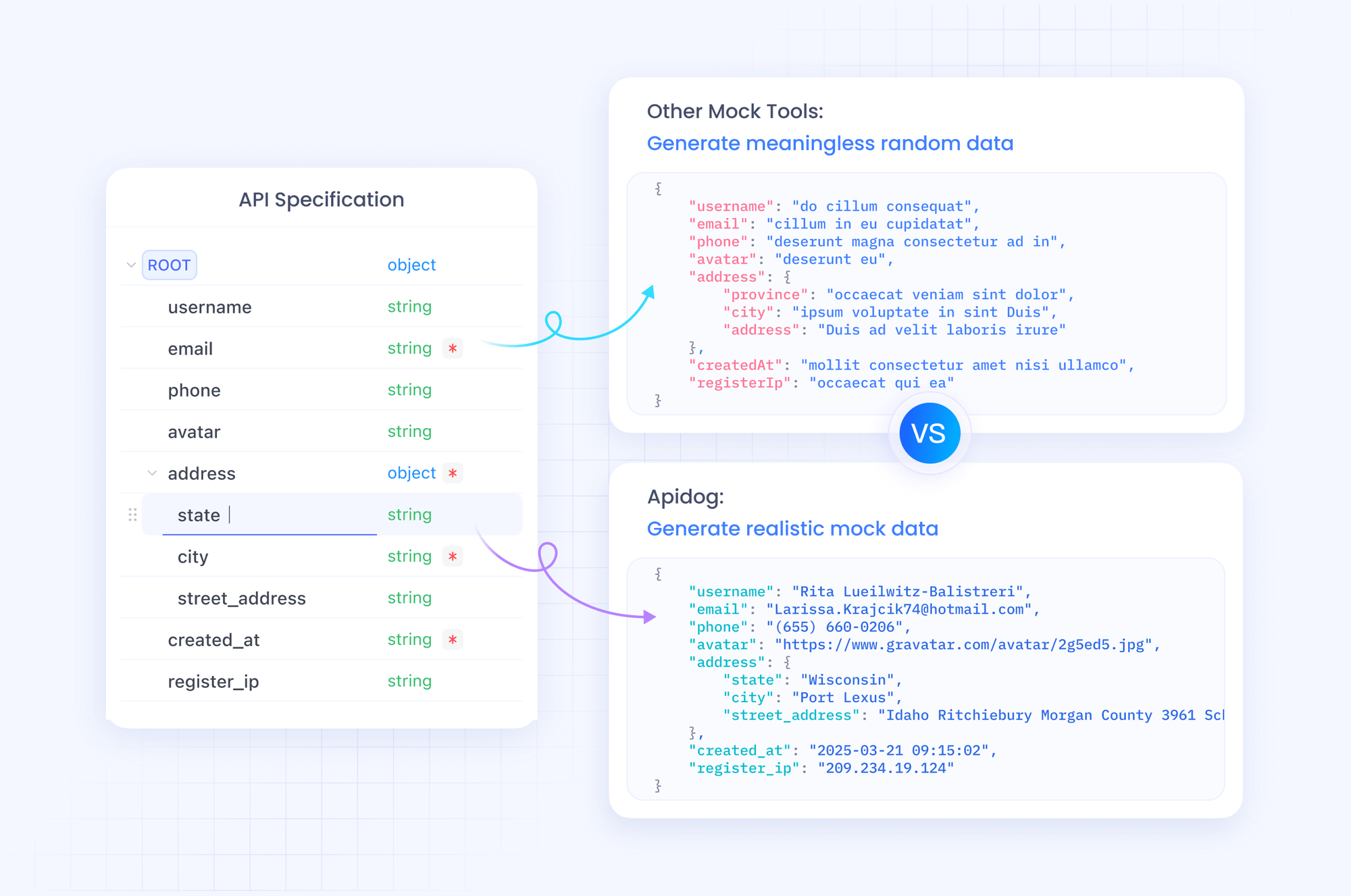

For testing, integrate Apidog early. This tool generates curl commands and Postman collections from your SDK experiments, ensuring consistency across teams. Import your API key into Apidog's environment variables, and create a new request to the /v1/messages endpoint. Such preparation prevents common pitfalls like authentication errors, allowing you to focus on prompt engineering.

Once set up, confirm connectivity with a simple health check. Send a basic request to validate your key and network. This step confirms that your environment handles the API's rate limits—initially 50 requests per minute for Opus models, scalable with usage tiers.

Authentication and API Basics

Anthropic enforces authentication via Bearer tokens, a standard OAuth2-inspired mechanism. Include your API key in the Authorization header as Bearer ${ANTHROPIC_API_KEY} for every request. The base URL is https://api.anthropic.com/v1, with the primary endpoint /messages for chat completions.

Requests follow a JSON payload structure. Define a model field specifying claude-opus-4-5-20251101, the exact identifier for this release. Add a messages array containing role-content pairs: system prompts set behavioral guidelines, while user messages trigger responses. For example:

{

"model": "claude-opus-4-5-20251101",

"max_tokens": 1024,

"messages": [

{"role": "user", "content": "Explain quantum entanglement in simple terms."}

]

}

The SDK simplifies this: in Python, client.messages.create(model="claude-opus-4-5-20251101", max_tokens=1024, messages=[{"role": "user", "content": "Your prompt here"}]). Responses return a content array with text deltas for streaming, or full blocks for batch mode.

Rate limits apply per organization: Opus 4.5 caps at 10,000 tokens per minute initially, with bursts up to 50,000. Monitor via response headers like x-ratelimit-remaining. If exceeded, implement exponential backoff in your code— the SDK handles this natively with retry_on=anthropic.RetryStatus.SERVER_ERROR.

Security best practices include rotating keys quarterly and restricting them to specific IP ranges in the console. Thus, you maintain compliance in enterprise settings while scaling API calls.

Making Your First API Request

Execute your inaugural request to grasp the API's rhythm. Start with a straightforward query that tests the model's reasoning prowess. In Python:

import anthropic

import os

client = anthropic.Anthropic(api_key=os.getenv("ANTHROPIC_API_KEY"))

response = client.messages.create(

model="claude-opus-4-5-20251101",

max_tokens=500,

messages=[

{"role": "user", "content": "Write a Python function to compute Fibonacci numbers up to n=20."}

]

)

print(response.content[0].text)

This code invokes the model, which generates efficient code leveraging memoization—showcasing its coding aptitude. The response arrives in under 2 seconds at default effort, with output tokens around 150 for concise results.

For streaming, append stream=True to the call. This yields incremental deltas, ideal for real-time UIs. Parse them via a generator loop:

stream = client.messages.stream(

model="claude-opus-4-5-20251101",

max_tokens=500,

messages=[{"role": "user", "content": "Your streaming prompt"}]

)

for text in stream:

print(text.content[0].text, end="", flush=True)

Apidog complements this by visualizing streams in its response viewer, highlighting token consumption. Experiment here to refine prompts before production.

Handle errors proactively. A 429 status indicates throttling; catch with try-except blocks. Similarly, 400s signal malformed JSON—validate payloads using Apidog's schema checker. Through these basics, you build a foundation for more intricate integrations.

Advanced Features: Effort Control and Context Management

Claude Opus 4.5 introduces the effort parameter, a game-changer for balancing speed and depth. Set it to "low", "medium", or "high" in requests: low prioritizes quick replies (sub-second latency), while high allocates extended compute for nuanced outputs, boosting benchmarks like SWE-bench by 15 points.

Incorporate it thus:

response = client.messages.create(

model="claude-opus-4-5-20251101",

effort="high",

max_tokens=2000,

messages=[{"role": "user", "content": "Analyze tradeoffs in microservices vs. monoliths for a fintech app."}]

)

At high effort, the model employs interleaved scratchpads and a 64K thinking budget, yielding detailed pros/cons tables. However, this increases costs—medium effort often suffices for 80% of tasks, matching Sonnet 4.5 efficiency with 76% fewer tokens.

Context management follows suit. The 200K window accommodates entire repositories; use the client-side compaction SDK to summarize prior exchanges. Install via pip install anthropic-compaction, then:

from anthropic.compaction import compact_context

compacted = compact_context(previous_messages)

# Append to new messages array

This feature shines in agentic loops, where agents maintain memory across sessions. For multi-agent systems, define subagents via tool calls, enabling Opus 4.5 to orchestrate teams—e.g., one for research, another for validation.

Transitioning to tools, Opus 4.5 supports advanced definitions. Declare JSON schemas for functions like database queries:

{

"name": "get_user_data",

"description": "Fetch user profile",

"input_schema": {"type": "object", "properties": {"user_id": {"type": "string"}}}

}

The model invokes tools autonomously, parsing arguments and injecting results into follow-ups. This enables hybrid workflows, such as API-chained agents for cybersecurity scans.

Integrating Tools and Building Agents

Tool use elevates Claude Opus 4.5 to agentic heights. Define tools in the tools array of requests. The model decides invocation based on context, generating XML-formatted calls for precision.

Example: Integrate a weather API tool.

tools = [

{

"name": "get_weather",

"description": "Retrieve current weather for a city",

"input_schema": {

"type": "object",

"properties": {"city": {"type": "string"}},

"required": ["city"]

}

}

]

response = client.messages.create(

model="claude-opus-4-5-20251101",

max_tokens=1000,

tools=tools,

messages=[{"role": "user", "content": "Plan a trip to Paris; check weather."}]

)

If the model calls the tool, extract from response.stop_reason == "tool_use", execute externally, and append the output as a tool result message. Loop until completion for full agent execution.

For computer use, enable beta features via headers. This allows screen inspection and automation, with the Zoom Tool for pixel-level analysis—crucial for UI debugging.

Apidog streamlines tool testing: mock endpoints in its simulator, then export to SDK code. This iterative approach refines agent reliability, reducing hallucinated calls.

In multi-agent setups, leverage memory tools for state persistence. Store key facts in a memory tool, queried across subagents. Consequently, systems handle sprawling tasks like software audits, where one agent plans, others execute.

Error Handling and Best Practices

Robust applications anticipate failures. Implement comprehensive error handling for API quirks. For 4xx errors, log the error.type (e.g., "invalid_request") and retry with corrected payloads. Use tenacity library for decorators:

from tenacity import retry, stop_after_attempt, wait_exponential

@retry(stop=stop_after_attempt(3), wait=wait_exponential(multiplier=1, min=4, max=10))

def safe_api_call(prompt):

return client.messages.create(model="claude-opus-4-5-20251101", messages=[{"role": "user", "content": prompt}])

Monitor token usage via usage in responses—input, output, and cache hits. Set budgets dynamically: if output exceeds 80% of max_tokens, truncate and summarize.

Best practices include prompt engineering with XML tags for structure: <thinking>Reason step-by-step</thinking><output>Final answer</output>. This guides the model, especially at low effort. Additionally, enable safety via system prompts enforcing ethical guidelines.

For production, batch requests to cut costs: queue non-urgent queries and process in 100s. Cache frequent prompts for 90% savings. Regularly audit outputs for alignment—Opus 4.5 resists injections, but validate sensitive data.

Optimizing Performance and Cost

Optimization ensures sustainable usage. Profile requests with Apidog's analytics: track latency, token spend, and success rates. Identify bottlenecks, like verbose prompts, and condense them using compaction.

Leverage prompt caching: tag reusable prefixes with cache_control: {"type": "ephemeral"}. On hits, pay only 25% for inputs. For agents, persist cache across calls to maintain context affordably.

Scale with async patterns. In Node.js:

const { Anthropic } = require('@anthropic-ai/sdk');

const anthropic = new Anthropic({ apiKey: process.env.ANTHROPIC_API_KEY });

async function parallelRequests(prompts) {

const promises = prompts.map(p =>

anthropic.messages.create({ model: 'claude-opus-4-5-20251101', messages: [{role: 'user', content: p}] })

);

return Promise.all(promises);

}

This handles concurrent agent forks efficiently. At high effort, cap thinking budget to 32K for cost control without sacrificing quality.

Benchmark your setup against baselines: Opus 4.5 achieves 72.5% on SWE-bench, so test custom evals. Adjust effort per task—low for ideation, high for verification.

Conclusion

You now possess the tools to integrate Claude Opus 4.5 API into your stack effectively. From initial setup to agent orchestration, this guide outlines a path to leverage its strengths in coding and reasoning. Remember, small refinements—like caching or effort tuning—yield substantial gains in performance and economy.

Experiment iteratively, using Apidog to validate each layer. As you build, monitor Anthropic's updates for enhancements. Ultimately, Claude Opus 4.5 transforms development from manual toil to orchestrated intelligence. Start implementing today, and watch your projects scale with precision.