MetaStone AI’s XBai o4, released on August 1, 2025, is a fourth-generation open-source language model that, according to its developers, outperforms OpenAI-o3-mini on complex reasoning tasks in Medium mode. This Chinese-developed model combines a unified training approach with optimized inference. Its code and weights are available on GitHub and Hugging Face, which supports transparency and collaboration.

The Rise of XBai o4: A Technical Overview

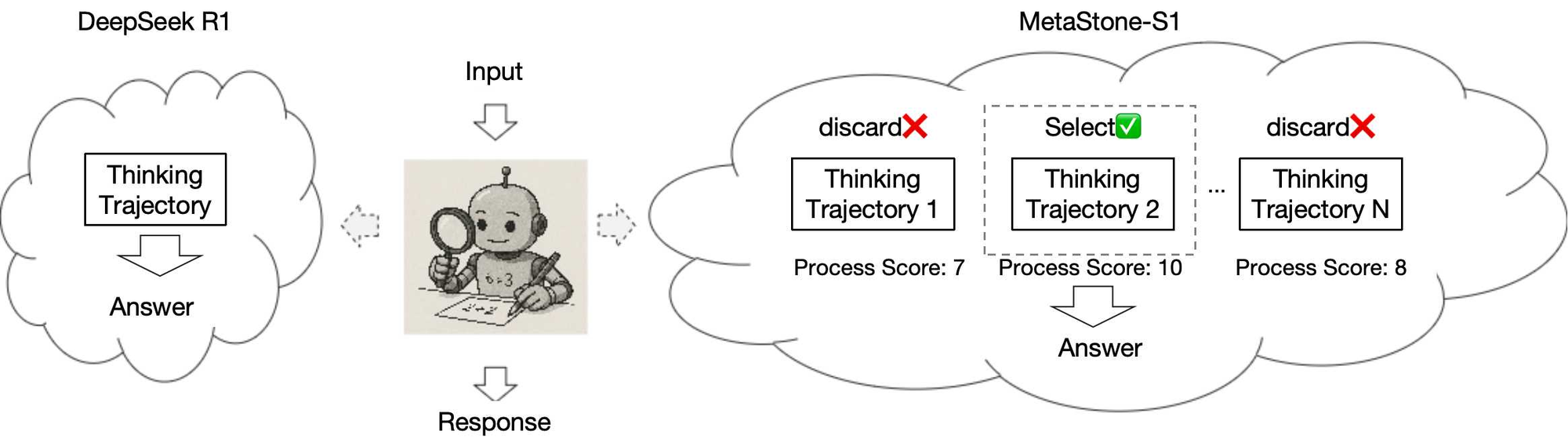

XBai o4, developed by MetaStone AI, represents a leap forward in open-source AI technology. Unlike proprietary models, XBai o4’s codebase and weights are publicly available on GitHub and Hugging Face, fostering transparency and collaboration. Specifically, the model leverages a novel training approach called the “reflective generative form,” which integrates Long-CoT Reinforcement Learning and Process Reward Learning. Consequently, this unified framework enables XBai o4 to excel in deep reasoning and high-quality reasoning trajectory selection, setting it apart from its predecessors and competitors like OpenAI-o3-mini.

Moreover, XBai o4 optimizes inference efficiency by sharing the backbone network between its Process Reward Models (PRMs) and policy models. According to MetaStone AI, this architectural choice reduces the inference cost of PRMs by 99%, resulting in faster response times and higher-quality outputs. The shared design is also visible in how the model is stored: its parameters are saved in two distinct files, model.safetensors for the policy model checkpoint and a separate file for the SPRM head, as detailed in the Hugging Face repository.
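To see why a shared backbone lowers scoring cost, consider the toy sketch below. It is not MetaStone AI’s implementation: the Backbone class, both head functions, and the token values are hypothetical stand-ins, and the only point is to count how many expensive trunk passes each design needs.

```python
from dataclasses import dataclass


@dataclass
class Backbone:
    """Hypothetical stand-in for the expensive transformer trunk."""
    calls: int = 0  # number of forward passes performed so far

    def forward(self, tokens: list[int]) -> list[float]:
        self.calls += 1
        # Toy "hidden state": one float per input token.
        return [t * 0.1 for t in tokens]


def policy_head(hidden: list[float]) -> int:
    """Toy next-token choice derived from the last hidden state."""
    return int(hidden[-1] * 10) + 1


def score_head(hidden: list[float]) -> float:
    """Toy process-reward score in [0, 1) read from the same hidden states."""
    mean = sum(hidden) / len(hidden)
    return mean / (1.0 + mean)


def shared_step(backbone: Backbone, tokens: list[int]) -> tuple[int, float]:
    """Shared design: one trunk pass feeds both heads."""
    hidden = backbone.forward(tokens)
    return policy_head(hidden), score_head(hidden)


def separate_step(policy_trunk: Backbone, prm_trunk: Backbone,
                  tokens: list[int]) -> tuple[int, float]:
    """Separate design: the reward model pays for its own trunk pass."""
    next_token = policy_head(policy_trunk.forward(tokens))
    score = score_head(prm_trunk.forward(tokens))
    return next_token, score


if __name__ == "__main__":
    trunk = Backbone()
    print(shared_step(trunk, [1, 2, 3]), "trunk passes:", trunk.calls)

    policy_trunk, prm_trunk = Backbone(), Backbone()
    print(separate_step(policy_trunk, prm_trunk, [1, 2, 3]),
          "trunk passes:", policy_trunk.calls + prm_trunk.calls)
```

In the shared design the score comes from a small head on hidden states that were already computed, so the extra cost of scoring is only the head itself. The 99% figure is MetaStone AI’s reported number; this sketch does not reproduce it.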

Understanding the Reflective Generative Form

The cornerstone of XBai o4’s success lies in its reflective generative form. This training paradigm combines two advanced techniques:

- Long-CoT Reinforcement Learning: This method extends Chain-of-Thought (CoT) prompting by incorporating reinforcement learning to refine the model’s reasoning process over extended contexts. As a result, XBai o4 can tackle complex, multi-step problems with greater accuracy.

- Process Reward Learning: This approach rewards the model for selecting high-quality reasoning trajectories during training. Consequently, XBai o4 learns to prioritize optimal reasoning paths, enhancing its performance in tasks requiring nuanced decision-making.

By integrating these methods, XBai o4 achieves a balance between deep reasoning and computational efficiency. Furthermore, the shared backbone network minimizes redundancy, allowing the model to process inputs faster without sacrificing quality. This innovation is particularly significant when compared to OpenAI-o3-mini, which, while efficient, lacks the same level of open-source accessibility and optimized reasoning capabilities.
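Trajectory selection can be illustrated with a short best-of-N sketch. It rests on assumptions: the per-step scores are made-up numbers, and combining them with a geometric mean is one plausible aggregation rule rather than a confirmed detail of XBai o4.

```python
import math


def trajectory_score(step_scores: list[float]) -> float:
    """Aggregate per-step scores with a geometric mean (assumed rule)."""
    if not step_scores:
        raise ValueError("trajectory has no scored steps")
    # Work in log space and clamp to avoid log(0).
    log_sum = sum(math.log(max(s, 1e-12)) for s in step_scores)
    return math.exp(log_sum / len(step_scores))


def select_best(candidates: dict[str, list[float]]) -> str:
    """Return the name of the candidate with the highest aggregate score."""
    return max(candidates, key=lambda name: trajectory_score(candidates[name]))


# Hypothetical step scores for three sampled reasoning trajectories.
candidates = {
    "A": [0.9, 0.9, 0.2],   # strong start, one weak step
    "B": [0.7, 0.7, 0.7],   # consistently reasonable
    "C": [0.95, 0.5, 0.6],  # uneven
}

best = select_best(candidates)
print(best, round(trajectory_score(candidates[best]), 3))
```

A geometric mean penalizes a single weak step more than an arithmetic mean does, which fits the idea of rewarding trajectories whose every step holds up.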

Comparing XBai o4 to OpenAI-o3-mini

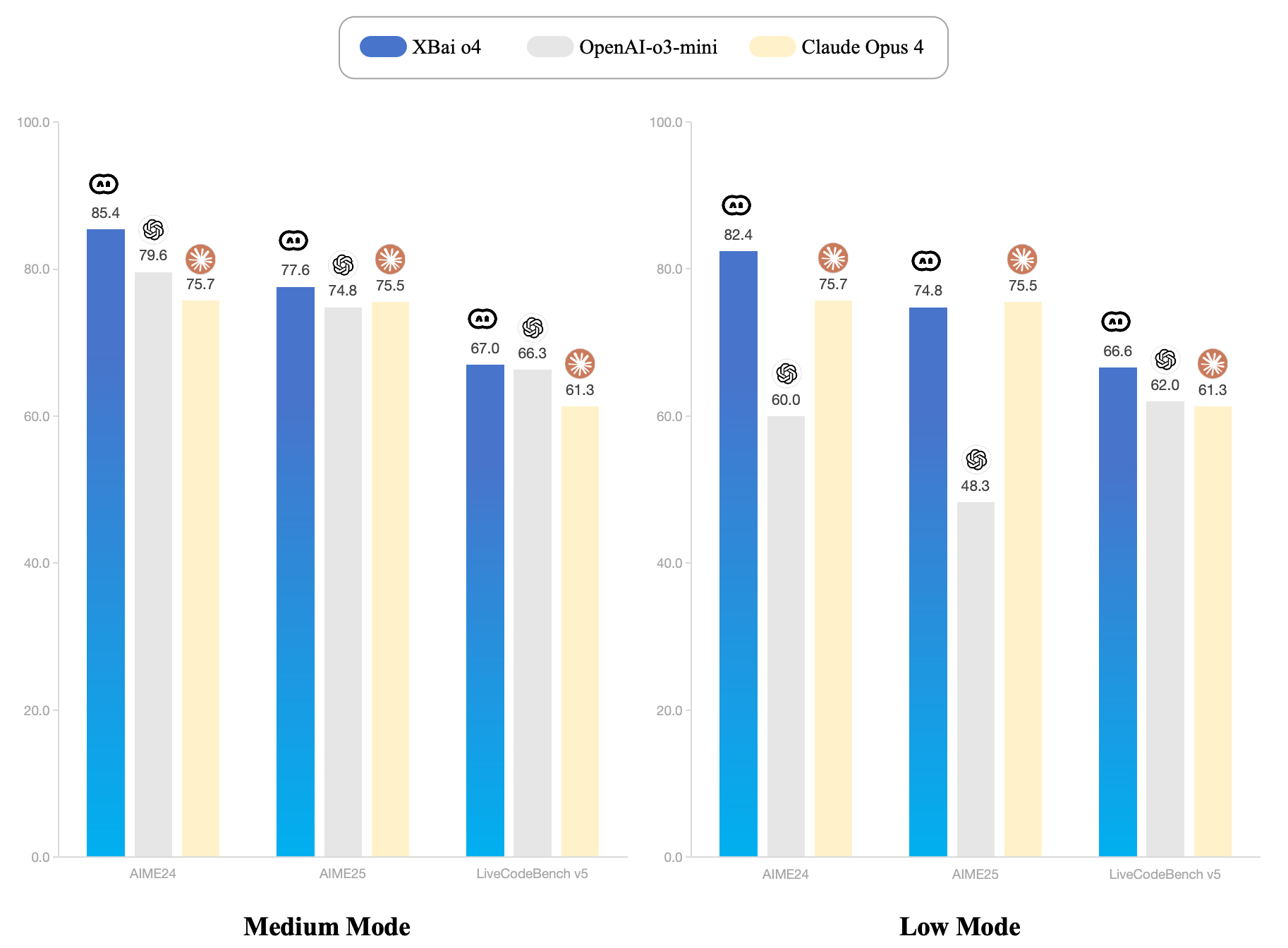

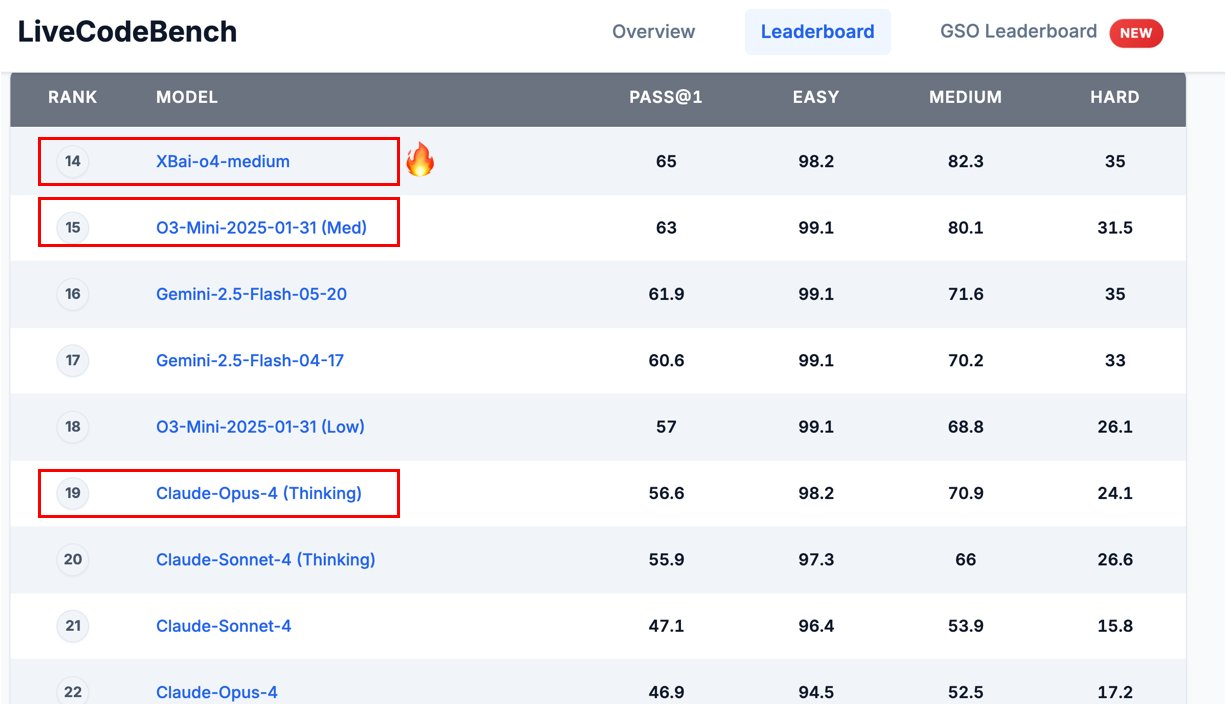

OpenAI-o3-mini, a compact version of OpenAI’s broader o3 series, is designed for efficiency in medium-complexity tasks. However, XBai o4 claims to “completely surpass” OpenAI-o3-mini in Medium mode, as stated in MetaStone AI’s GitHub announcement.

To understand this claim, let’s examine key performance metrics:

- Complex Reasoning: XBai o4’s reflective generative form targets intricate reasoning tasks such as mathematical benchmarks (e.g., AIME24), where MetaStone AI reports higher accuracy than OpenAI-o3-mini in Medium mode.

- Inference Speed: By reducing PRM inference costs by 99%, XBai o4 can score candidate reasoning trajectories with little added overhead, which helps in latency-sensitive applications. OpenAI-o3-mini is also optimized for speed, but as a proprietary model its inference stack cannot be inspected or tuned in the same way.

- Open-Source Accessibility: XBai o4’s availability on platforms like GitHub and Hugging Face allows developers to customize and deploy the model freely. Conversely, OpenAI-o3-mini remains proprietary, limiting its adaptability for research and development.

For example, MetaStone AI’s test pipeline for mathematical benchmarks, as outlined in their GitHub repository, demonstrates XBai o4’s ability to process tasks like AIME24 with high precision. The pipeline uses scripts like score_model_queue.py and policy_model_queue.py to evaluate performance, leveraging tools like XFORMERS for optimized attention mechanisms.

Technical Implementation of XBai o4

To deploy XBai o4, developers need a robust setup, as outlined in the GitHub repository. Below is a simplified setup guide based on the provided instructions:

Environment Setup:

- Create a Conda environment with Python 3.10:

conda create -n xbai_o4 python==3.10

- Activate the environment:

conda activate xbai_o4

- Install dependencies:

pip install -e verl

pip install -r requirements.txt

pip install flash_attn==2.7.4.post1

Training and Evaluation:

- Start Ray for distributed computing:

bash ./verl/examples/ray/run_worker_n.sh

- Initiate multi-node training:

bash ./scripts/run_multi_node.sh

- Run the test pipeline for mathematical benchmarks:

python test/inference.py --task 'aime24' --input_file data/aime24.jsonl --output_file path/to/result

API Integration:

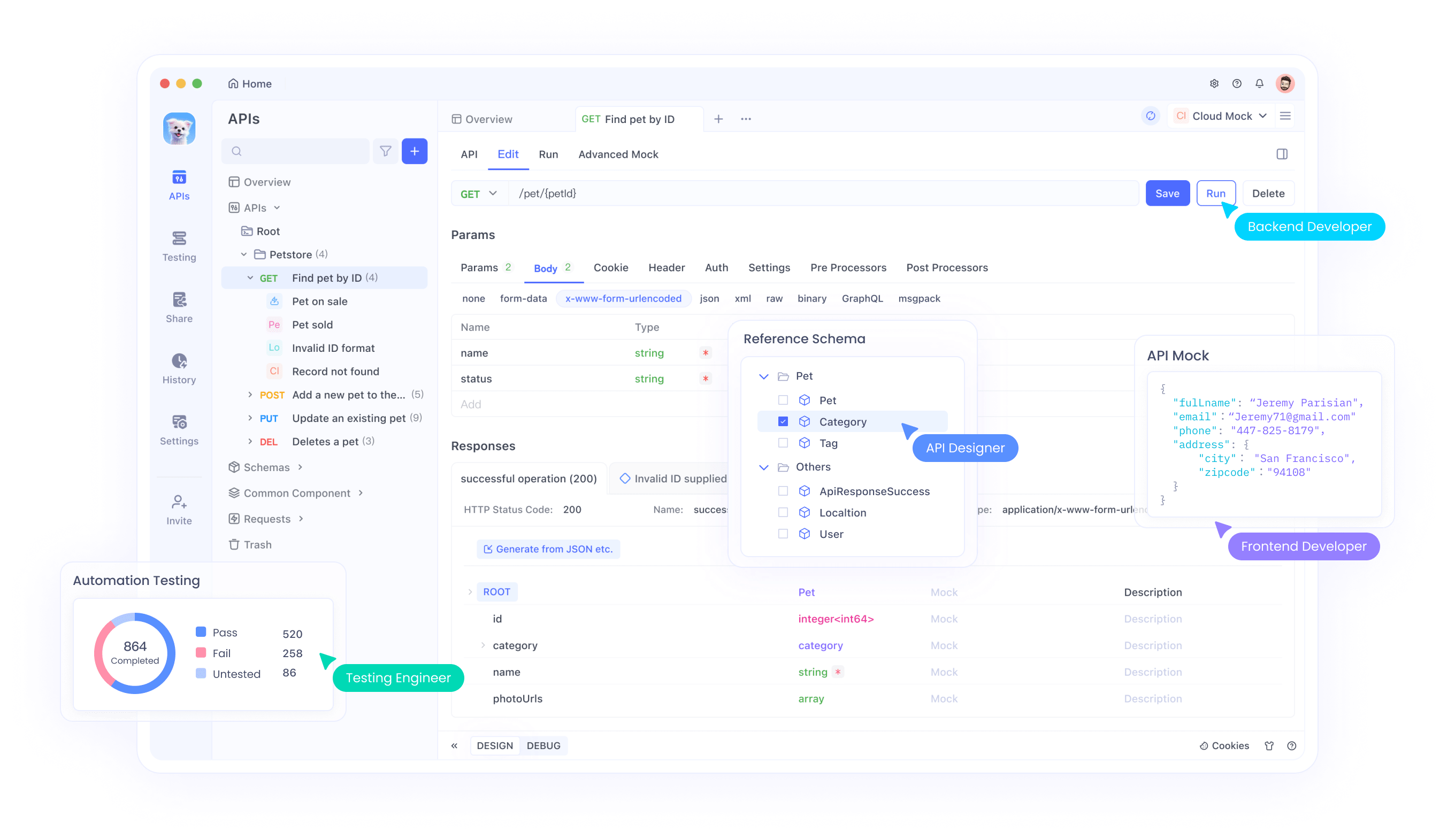

- Launch policy model APIs for fast evaluation:

CUDA_VISIBLE_DEVICES=0 python test/policy_model_queue.py --model_path path/to/huggingface/model --ip '0.0.0.0' --port '8000'

- Use tools like Apidog to test and manage these APIs, ensuring seamless integration into larger systems.
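When several GPUs are available, the launch command above can be generated once per device. The sketch below uses only the flags shown in this guide. Pinning one server to each GPU and numbering the ports consecutively from a base port is an assumption made for illustration, and policy_server_commands is a hypothetical helper, not part of the repository.

```python
import shlex


def policy_server_commands(model_path: str, gpus: list[int],
                           base_port: int = 8000) -> list[str]:
    """Build one launch command per GPU, each on its own port."""
    commands = []
    for offset, gpu in enumerate(gpus):
        argv = [
            "python", "test/policy_model_queue.py",
            "--model_path", model_path,
            "--ip", "0.0.0.0",
            "--port", str(base_port + offset),
        ]
        # shlex.join quotes arguments only where the shell requires it.
        commands.append(f"CUDA_VISIBLE_DEVICES={gpu} " + shlex.join(argv))
    return commands


if __name__ == "__main__":
    for command in policy_server_commands("path/to/huggingface/model", [0, 1]):
        print(command)
```

The function only builds command strings. Running them, for example with one terminal per server, is left to the operator.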

This setup highlights XBai o4’s flexibility for both research and production environments. Additionally, the model’s compatibility with tools like Apidog simplifies API testing, allowing developers to validate endpoints efficiently.

Benchmark Performance and Evaluation

MetaStone AI’s release notes emphasize XBai o4’s superior performance on mathematical benchmarks like AIME24. The test pipeline, detailed in the GitHub repository, uses a combination of policy and score model APIs to evaluate the model’s reasoning capabilities. For instance, the inference.py script processes input files like aime24.jsonl and generates results with 16 samples, leveraging multiple API endpoints for speed.
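One way to picture how 16 samples per problem might be spread over several API endpoints is a round-robin plan. This is a sketch under stated assumptions: the endpoint URLs and problem IDs are placeholders, and the repository’s inference.py may schedule requests differently.

```python
from collections import Counter
from itertools import cycle


def assign_samples(problem_ids: list[str], endpoints: list[str],
                   n_samples: int = 16) -> list[tuple[str, int, str]]:
    """Plan (problem, sample index, endpoint) jobs in round-robin order."""
    if not endpoints:
        raise ValueError("at least one endpoint is required")
    rotation = cycle(endpoints)
    plan = []
    for problem_id in problem_ids:
        for sample_index in range(n_samples):
            plan.append((problem_id, sample_index, next(rotation)))
    return plan


# Placeholder endpoints and problem IDs, for illustration only.
endpoints = [f"http://127.0.0.1:{port}" for port in (8000, 8001, 8002, 8003)]
plan = assign_samples(["aime24-01", "aime24-02"], endpoints)

# Each endpoint should receive an equal share of the 32 planned jobs.
load = Counter(endpoint for _, _, endpoint in plan)
print(len(plan), dict(load))
```

Round-robin keeps the load even when every request costs about the same. A work queue would suit requests with highly variable generation length better.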

Moreover, the evaluation pipeline uses the XFORMERS attention backend, which is intended to reduce memory usage and speed up computation. The backend is selected by setting VLLM_ATTENTION_BACKEND=XFORMERS in the environment before the model APIs are launched on GPU-enabled systems.

In contrast, OpenAI-o3-mini, while effective for general tasks, does not provide the same level of transparency in its evaluation process. XBai o4’s open-source nature allows researchers to scrutinize and replicate its benchmarks, fostering trust in its performance claims.

Community Reception and Skepticism

The AI community has responded with a mix of excitement and skepticism to XBai o4’s release. A Reddit post on r/accelerate, for instance, highlights the model’s potential but raises concerns about benchmark overtuning, referencing past issues with models like Llama-4. Some users question the credibility of MetaStone AI, a relatively new player compared to established organizations like Qwen. Nevertheless, the open-source availability of XBai o4’s weights and code encourages independent verification, which could dispel doubts over time.

For example, a user on Threads reported testing XBai o4 on an M4 Max with the mlx-lm backend, noting that it passed the “1+1 vibe test” for reasoning tasks. However, challenges like rendering complex visualizations (e.g., inverse kinematics) suggest areas for improvement.

Integration with Apidog for API Testing

For developers integrating XBai o4 into their workflows, tools like Apidog are invaluable. Apidog simplifies the process of testing and managing APIs, such as those used in XBai o4’s evaluation pipeline. By providing a user-friendly interface for sending requests to endpoints like http://ip:port/score, Apidog ensures that developers can validate model performance without complex manual configurations. Furthermore, its free download makes it accessible to researchers and hobbyists alike, aligning with XBai o4’s open-source ethos.

To illustrate, consider a scenario where a developer uses Apidog to test XBai o4’s policy model API. By configuring the endpoint URL and parameters (e.g., --model_path and --port), Apidog can send test requests and analyze responses, streamlining the debugging process. This integration is particularly useful for scaling evaluations across multiple nodes, as recommended in the GitHub setup instructions.
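The same kind of request can be prepared in a script before it is moved into a tool like Apidog. The sketch below only constructs the request and does not send it. The /score path comes from the endpoint mentioned above, while the payload field names (question, response) are hypothetical, since the request schema is not documented here.

```python
import json
import urllib.request


def build_score_request(host: str, port: int,
                        payload: dict) -> urllib.request.Request:
    """Prepare a JSON POST request for a score endpoint without sending it."""
    url = f"http://{host}:{port}/score"
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical payload; check the repository for the real field names.
payload = {"question": "What is 1 + 1?", "response": "1 + 1 = 2"}
request = build_score_request("127.0.0.1", 8001, payload)
print(request.get_method(), request.full_url)

# To actually send it against a running server:
# with urllib.request.urlopen(request, timeout=30) as reply:
#     print(reply.read().decode("utf-8"))
```

Keeping request construction separate from sending makes the URL and body easy to inspect, and the same values can be pasted into Apidog for manual testing.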

Future Implications for Open-Source AI

XBai o4’s release underscores the growing importance of open-source AI in democratizing access to advanced technology. Unlike proprietary models like OpenAI-o3-mini, XBai o4 empowers developers to customize and extend the model for specific use cases. For instance, its reflective generative form could be adapted for domains like scientific research, financial modeling, or automated code generation.

Additionally, the model’s efficiency improvements pave the way for deploying large language models on resource-constrained environments. By reducing inference costs, XBai o4 makes it feasible to run sophisticated AI on consumer-grade hardware, broadening its potential applications.

However, challenges remain. The AI community’s skepticism highlights the need for rigorous, transparent benchmarking to validate performance claims. Moreover, while XBai o4 excels in reasoning, its visualization capabilities (e.g., inverse kinematics) require further refinement, as noted in community feedback.

Conclusion: XBai o4’s Place in the AI Ecosystem

In summary, XBai o4 represents a significant advancement in open-source AI, with reasoning performance and efficiency that MetaStone AI reports as exceeding OpenAI-o3-mini in Medium mode. Its reflective generative form, combining Long-CoT Reinforcement Learning and Process Reward Learning, offers a unified approach to complex problem-solving. Furthermore, its open-source availability on GitHub and Hugging Face fosters collaboration and innovation, making it a valuable resource for developers and researchers.

For those looking to explore XBai o4’s capabilities, tools like Apidog provide an efficient way to test and integrate its APIs, ensuring seamless deployment in real-world applications. As the AI landscape continues to evolve, XBai o4 stands as a testament to the power of open-source innovation, challenging proprietary models and pushing the boundaries of what AI can achieve.