Unlock the full potential of Windsurf AI by integrating MCP (Model Context Protocol) servers. Learn how to set up, connect, and use MCP servers, such as a PostgreSQL server, for AI-assisted database management and workflow automation inside your code editor.

What is an MCP Server? Why Use MCP in Windsurf?

Model Context Protocol (MCP) is an open standard designed to let AI tools like Windsurf securely interact with external resources—databases, APIs, files, and more. Developed by Anthropic, MCP allows your AI assistant (Cascade in Windsurf) to go beyond code suggestions and actually perform actions inside your development environment.
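Under the hood, MCP messages are JSON-RPC 2.0 objects. As a minimal illustration, the Python sketch below builds the kind of tools/call request a client such as Cascade sends when it asks a server to run one of its tools. The tool name analyze_database and its connectionString argument are hypothetical; a real server advertises its actual tools in its response to a tools/list request.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP tools/call request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",        # every MCP message uses JSON-RPC 2.0
        "id": request_id,        # echoed back so the client can match the reply
        "method": "tools/call",  # the MCP method for invoking a server tool
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and argument, for illustration only.
print(make_tool_call(1, "analyze_database",
                     {"connectionString": "postgresql://user:password@localhost:5432/mydb"}))
```

The server answers with a JSON-RPC response carrying the same id, whose result object holds the tool's output.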

Why integrate MCP servers with Windsurf?

- Direct database access: Run queries, analyze schemas, and debug performance—all from your editor.

- Workflow automation: Automate browser tasks, manage GitHub, or control other systems without context switching.

- Real-time feedback: Get actionable insights instantly, reducing manual work and boosting productivity.

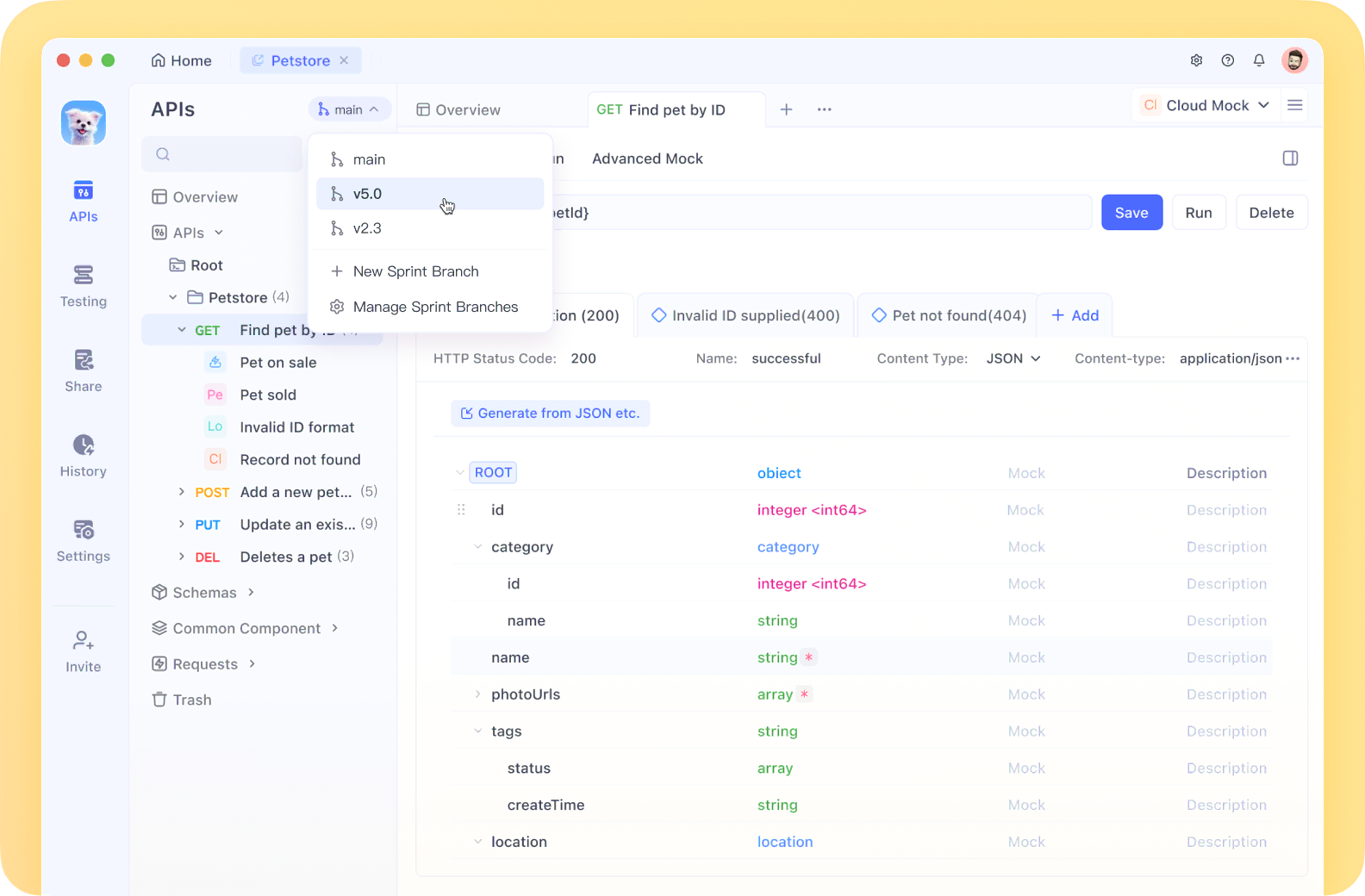

Apidog naturally complements this workflow. As you design, test, or document your APIs, Apidog offers an intuitive platform to streamline API development—making it a smart companion for any developer working with complex integrations. Explore it at apidog.com.

Step-by-Step: Setting Up MCP Servers in Windsurf AI

Follow these instructions to quickly connect an MCP server to Windsurf and start leveraging AI-driven development.

1. Install or Update Windsurf AI

- Download the latest Windsurf version for Mac, Windows, or Linux from the official website.

- Install it on your system. Windsurf builds on VS Code, so the interface feels familiar but comes supercharged with AI features.

- Already have Windsurf? Ensure you’re on the latest version for full MCP support.

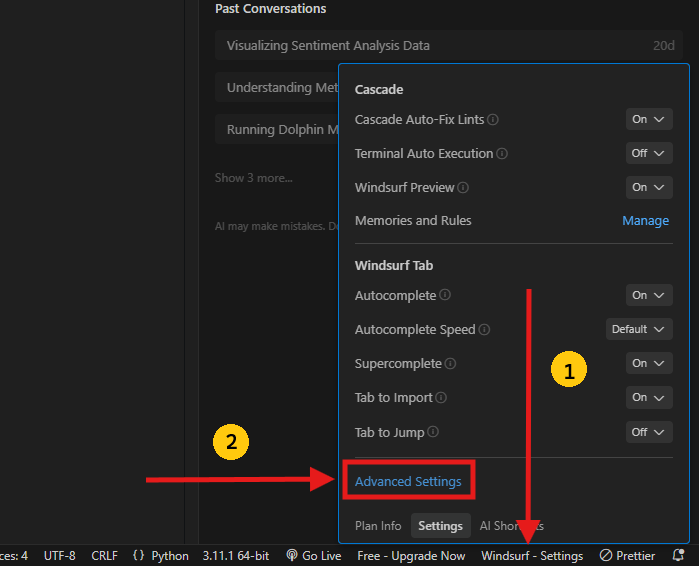

2. Enable MCP Support in Windsurf Settings

- Launch Windsurf.

- Open settings via the “Windsurf - Settings” button (bottom right), or press Cmd+Shift+P (Mac) / Ctrl+Shift+P (Windows/Linux) and search “Open Windsurf Settings.”

- In “Advanced Settings,” navigate to the “Cascade” section.

- Locate and enable the “Model Context Protocol (MCP)” option.

This step allows Windsurf to communicate with MCP servers. Choose an AI model—Claude 3.5 Sonnet is highly recommended for coding tasks.

3. Add and Configure an MCP Server (Example: PostgreSQL)

Windsurf supports both stdio (local CLI) and sse (remote HTTP) MCP servers. Let’s integrate a PostgreSQL MCP Server, a popular choice for database management.
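The two transports differ mainly in how messages travel. With stdio, Windsurf starts the server as a child process and exchanges newline-delimited JSON-RPC messages over the process's standard input and output; with sse, the same JSON-RPC messages are carried over HTTP, with the server streaming its side as Server-Sent Events. A minimal Python sketch of the stdio framing follows; the helper names are our own, not part of any MCP SDK.

```python
import json

# Illustrative helpers for MCP's stdio framing (names are not from an SDK).


def encode_stdio_message(message: dict) -> bytes:
    """Frame one JSON-RPC message for the stdio transport: one line of UTF-8 JSON."""
    # json.dumps escapes newlines inside strings, so the only raw newline
    # in the frame is the delimiter appended at the end.
    return (json.dumps(message) + "\n").encode("utf-8")


def decode_stdio_stream(data: bytes) -> list:
    """Split a buffer of newline-delimited frames into JSON-RPC messages.

    Assumes the buffer ends on a message boundary (no partial trailing line).
    """
    return [json.loads(line) for line in data.split(b"\n") if line.strip()]


# A request followed by a notification (a message without an "id").
stream = encode_stdio_message({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
stream += encode_stdio_message({"jsonrpc": "2.0", "method": "notifications/initialized"})
print(decode_stdio_stream(stream))
```

Because each message is a single line, a stdio server must keep its own logging off stdout (stderr is the usual place), or it will corrupt the stream.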

a. Download the PostgreSQL MCP Server

- Clone the repository:

```bash
git clone https://github.com/HenkDz/postgresql-mcp-server.git
```

- Navigate into the directory:

```bash
cd postgresql-mcp-server
```

b. Install Dependencies

- Ensure Node.js 18+ is installed.

- Then install the dependencies and build the server:

```bash
npm install
npm run build
```

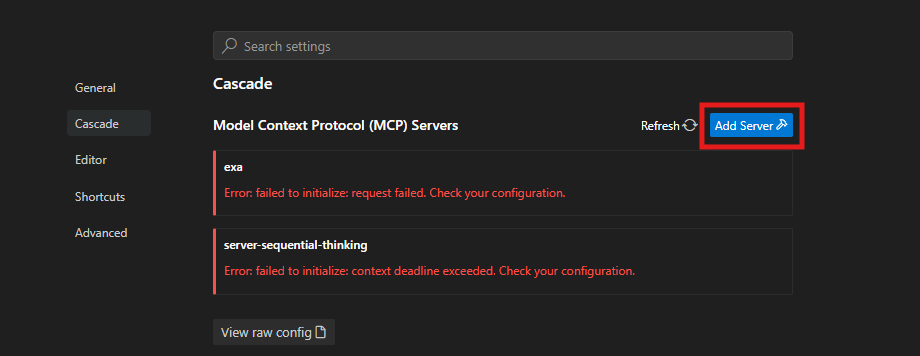
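If you are unsure which Node.js version is on your PATH, node --version prints it (for example, v18.17.0). The small Python sketch below turns that output into a pass/fail check against the 18+ requirement. The function names are illustrative, and check_node assumes the node executable is on your PATH.

```python
import re
import subprocess

# Illustrative helpers; check_node assumes `node` is on PATH.


def node_major_version(version_output: str) -> int:
    """Extract the major version from `node --version` output such as 'v18.17.0'."""
    match = re.match(r"v?(\d+)\.", version_output.strip())
    if match is None:
        raise ValueError(f"unrecognized Node.js version string: {version_output!r}")
    return int(match.group(1))


def check_node(min_major: int = 18) -> bool:
    """Run `node --version` and report whether it meets the minimum major version."""
    result = subprocess.run(["node", "--version"], capture_output=True, text=True, check=True)
    return node_major_version(result.stdout) >= min_major
```

Calling check_node() before npm install returns False on older installs and raises FileNotFoundError if node is missing entirely.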

c. Connect the Server to Windsurf

- Open Windsurf settings again.

- Under Cascade, click “Add Server” and locate the mcp_config.json file (typically at ~/.codeium/windsurf/mcp_config.json).

- Paste the following, replacing /path/to/ with your actual directory:

```json
{
  "mcpServers": {
    "postgresql-mcp": {
      "command": "node",
      "args": ["/path/to/postgresql-mcp-server/build/index.js"],
      "disabled": false,
      "alwaysAllow": []
    }
  }
}
```

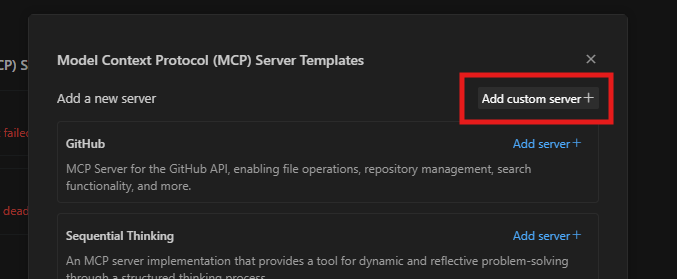

- Alternatively, use the Windsurf UI: select "Add custom server" and follow the prompts for easier configuration.

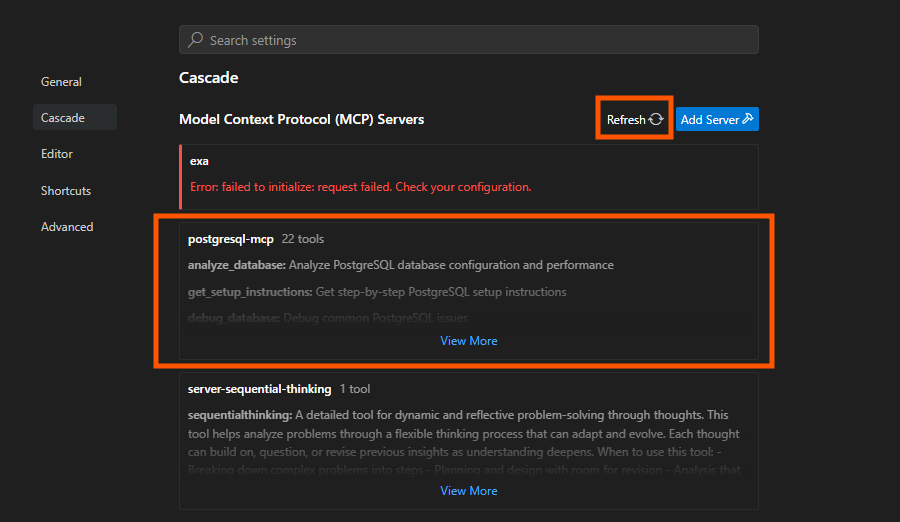
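If you would rather script the JSON edit than paste it by hand, the following Python sketch merges the same postgresql-mcp entry into an existing mcp_config.json without dropping servers already listed there. It assumes the file lives at the typical path mentioned above and simply copies the disabled and alwaysAllow keys from the example configuration; the helper names are hypothetical, and it is worth backing up your config before trying it.

```python
import json
from pathlib import Path

# Typical location per this article; adjust if your install differs.
CONFIG_PATH = Path.home() / ".codeium" / "windsurf" / "mcp_config.json"


def add_stdio_server(config: dict, name: str, script_path: str) -> dict:
    """Return a copy of config with a Node-based stdio server registered under name."""
    updated = dict(config)
    servers = dict(updated.get("mcpServers", {}))  # copy so the input stays unchanged
    servers[name] = {
        "command": "node",
        "args": [script_path],
        "disabled": False,   # keys copied from the example configuration
        "alwaysAllow": [],
    }
    updated["mcpServers"] = servers
    return updated


def register_server(name: str, script_path: str, config_path: Path = CONFIG_PATH) -> None:
    """Merge the server entry into the config file, keeping existing servers."""
    text = config_path.read_text() if config_path.exists() else ""
    config = json.loads(text) if text.strip() else {}
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(add_stdio_server(config, name, script_path), indent=2))
```

For example, register_server("postgresql-mcp", "/path/to/postgresql-mcp-server/build/index.js") writes the entry; refreshing in Windsurf then picks it up.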

d. Activate the MCP Server in Windsurf

- Save the configuration.

- Click the refresh button in Windsurf settings to load your new MCP server.

For more MCP server options, browse HiMCP.ai, a directory listing 1682+ MCP servers, or visit windsurf.run.

4. Test Your MCP Server Connection

- Start the PostgreSQL MCP server locally:

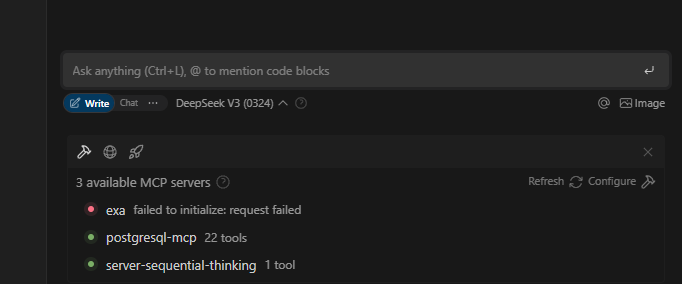

npm run dev - In Windsurf, open the Cascade chat panel.

- Example prompt:

Analyze my PostgreSQL database at postgresql://user:password@localhost:5432/mydb

If set up correctly, Cascade will return insights—such as schema details or performance metrics—directly in your editor.
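If Cascade does not seem to see the server, it can help to check, outside Windsurf, that the build speaks MCP at all. The Python sketch below launches the server as a stdio process, sends the initialize request that every MCP session begins with, and returns the first reply. The protocolVersion value 2024-11-05 and the clientInfo fields are assumptions for illustration, and the helper names are hypothetical; a server may answer with a different protocol version it supports.

```python
import json
import subprocess


def initialize_request(request_id: int = 1) -> dict:
    """Build the JSON-RPC initialize request that opens an MCP session."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": "2024-11-05",  # assumed protocol revision
            "capabilities": {},
            "clientInfo": {"name": "smoke-test", "version": "0.1.0"},  # hypothetical client
        },
    }


def smoke_test(command: list) -> dict:
    """Start a stdio MCP server, send initialize, and return its first reply."""
    with subprocess.Popen(command, stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True) as proc:
        try:
            proc.stdin.write(json.dumps(initialize_request()) + "\n")
            proc.stdin.flush()
            line = proc.stdout.readline()  # blocks until the server writes a line
            if not line:
                raise RuntimeError("server exited without replying")
            return json.loads(line)
        finally:
            proc.kill()  # stdio servers otherwise keep running, waiting for more input
```

Running smoke_test(["node", "/path/to/postgresql-mcp-server/build/index.js"]) against a healthy server should return a response whose result includes serverInfo and capabilities.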

Practical Ways to Use MCP Servers in Windsurf

Now that your MCP server is live, here are some powerful use cases for backend and API engineers:

Query a Database Schema

Prompt:

Get schema info for postgresql://user:password@localhost:5432/mydb

Cascade will return tables, columns, and constraints in a readable format.
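What the server actually runs depends on its implementation, but PostgreSQL exposes this metadata through the standard information_schema views. As an illustrative sketch, not necessarily this server's query, the SQL below lists the columns of every table in the public schema, and the hypothetical group_columns helper arranges the returned rows into a per-table summary. The sample rows are made up.

```python
from collections import defaultdict

# Illustrative catalog query; not necessarily what the PostgreSQL MCP server runs.
SCHEMA_QUERY = """
SELECT table_name, column_name, data_type, is_nullable
FROM information_schema.columns
WHERE table_schema = 'public'
ORDER BY table_name, ordinal_position;
"""


def group_columns(rows):
    """Group (table, column, type, nullable) rows into {table: [column descriptions]}."""
    tables = defaultdict(list)
    for table, column, data_type, is_nullable in rows:
        suffix = "" if is_nullable == "YES" else " NOT NULL"  # is_nullable is 'YES' or 'NO'
        tables[table].append(f"{column} {data_type}{suffix}")
    return dict(tables)


# Made-up rows in the shape the query returns.
sample = [
    ("tasks", "id", "integer", "NO"),
    ("tasks", "name", "character varying", "YES"),
    ("tasks", "done", "boolean", "YES"),
]
print(group_columns(sample))
```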

Create Database Tables

Prompt:

Create a table called 'tasks' with columns id (SERIAL), name (VARCHAR), and done (BOOLEAN) in postgresql://user:password@localhost:5432/mydb

Cascade generates and runs the SQL, and you’ll see the result—no manual scripting needed.
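Before letting Cascade run DDL against a real database, it helps to know what statement to expect. For the prompt above, the generated SQL should look roughly like the output of this sketch. The create_table_sql helper is hypothetical and inserts identifiers verbatim, so it is only suitable for trusted, hand-written names.

```python
def create_table_sql(table: str, columns: dict) -> str:
    """Build a CREATE TABLE statement from an ordered {column: type} mapping.

    Identifiers are inserted verbatim (no quoting or escaping), so this is only
    suitable for trusted, hand-written names.
    """
    body = ",\n  ".join(f"{name} {sql_type}" for name, sql_type in columns.items())
    return f"CREATE TABLE {table} (\n  {body}\n);"


print(create_table_sql("tasks", {"id": "SERIAL", "name": "VARCHAR", "done": "BOOLEAN"}))
```

In PostgreSQL, VARCHAR without a length accepts strings of any length, and SERIAL creates an auto-incrementing integer backed by a sequence; if you want id to be the primary key, say so explicitly in the prompt.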

Debug Performance Issues

Prompt:

Debug performance issues on postgresql://user:password@localhost:5432/mydb

Cascade can identify bottlenecks or missing indexes, offering actionable suggestions.

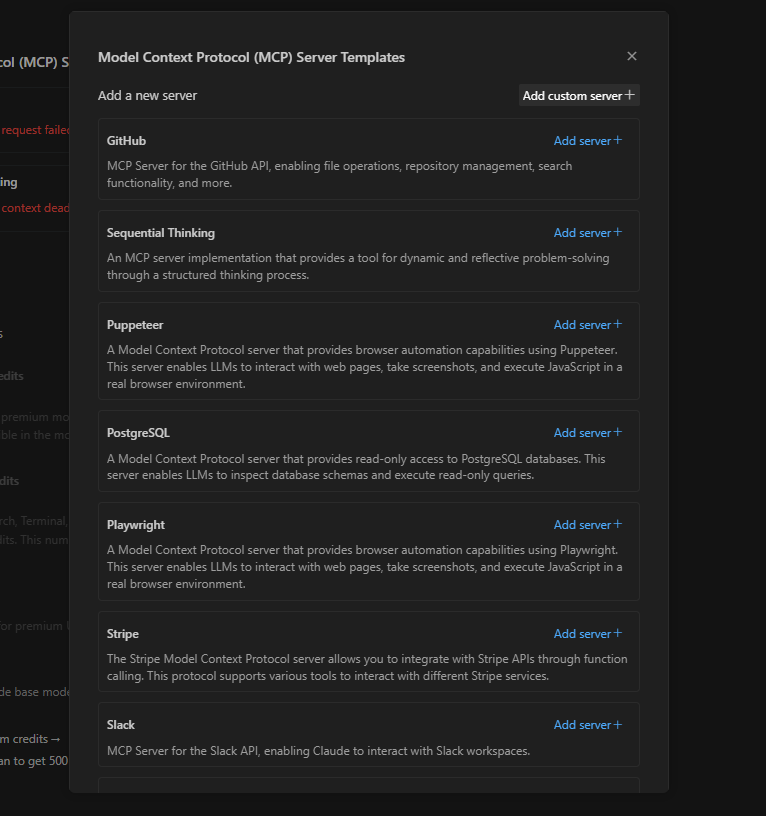
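One common signal of a missing index is a large table that is read mostly through sequential scans, which PostgreSQL tracks in the pg_stat_user_tables view. The sketch below pairs such a query with a simple heuristic filter. The index_candidates helper, its threshold, and the sample rows are made up for illustration; treat it as a rough screen, not the analysis this particular server performs.

```python
# pg_stat_user_tables counts scans per table since statistics were last reset.
# idx_scan is NULL for tables without indexes, hence the COALESCE.
SCAN_QUERY = """
SELECT relname, seq_scan, COALESCE(idx_scan, 0) AS idx_scan, n_live_tup
FROM pg_stat_user_tables
ORDER BY seq_scan DESC;
"""


def index_candidates(rows, min_rows: int = 10_000):
    """Return tables with many live rows that are scanned sequentially more than by index."""
    return [
        name
        for name, seq_scan, idx_scan, live_rows in rows
        if live_rows >= min_rows and seq_scan > idx_scan  # made-up heuristic threshold
    ]


sample = [
    ("orders", 5200, 40, 250_000),  # large and mostly seq-scanned: worth a look
    ("tasks", 90, 12, 300),         # small table: seq scans are cheap here
    ("users", 15, 98_000, 50_000),  # already served mostly by index scans
]
print(index_candidates(sample))  # ['orders']
```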

Expand Your Workflow: More MCP Servers for Windsurf

Beyond PostgreSQL, explore dozens of MCP integrations for different workflows:

- Automate GitHub tasks directly from your editor.

- Control browsers using Browserbase’s MCP server.

- Connect to custom APIs and local tools.

Discover and install new MCP servers at windsurf.run or through HiMCP.ai.


For detailed documentation and server templates, visit the Windsurf Docs page.


Pro Tips for MCP Power Users

- Enable only what you need: Too many MCP servers can clutter Cascade’s suggestions.

- Use @ mentions: Target a specific MCP server in chat with @postgresql-mcp.

- Debug via logs: Check ~/.codeium/windsurf/logs if you encounter issues.

- Experiment with prompts: Try “Monitor my database” or “Export table data” to discover advanced capabilities.
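When something fails, the most recently written log file is usually the one to read. The small Python sketch below finds and tails it, assuming the ~/.codeium/windsurf/logs location given in the tips (the directory layout may vary between Windsurf versions, and the helper names are illustrative).

```python
from pathlib import Path
from typing import List, Optional

# Log location as given in this article; it may differ between Windsurf versions.
LOG_DIR = Path.home() / ".codeium" / "windsurf" / "logs"


def newest_log(log_dir: Path = LOG_DIR) -> Optional[Path]:
    """Return the most recently modified file under log_dir, or None if there is none."""
    if not log_dir.is_dir():
        return None
    files = [p for p in log_dir.rglob("*") if p.is_file()]
    return max(files, key=lambda p: p.stat().st_mtime, default=None)


def tail(path: Path, n: int = 50) -> List[str]:
    """Return the last n lines of a text file, replacing undecodable bytes."""
    return path.read_text(errors="replace").splitlines()[-n:]
```

To use it, call newest_log(), and if it returns a path, print "\n".join(tail(path)) to see the latest entries.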

Why MCP Integration Makes Windsurf Stand Out

Adding MCP servers turns Windsurf from a code editor into a developer command center. Because Cascade can reach your databases and tools directly, you switch contexts less and spend more time building. Compared to alternatives like Cursor, Windsurf’s deep context awareness and extensive tooling can deliver significant efficiency gains for API and backend teams.

Apidog users especially benefit from this workflow—combining powerful API design/testing with real-time, AI-assisted project automation.

Conclusion: Level Up Your Development with MCP Servers

Integrating MCP servers with Windsurf AI unlocks next-level productivity for API developers and backend engineers. From database management to automated workflows, you’ll streamline complex tasks directly in your editor. For more MCP servers, tips, and detailed integrations, visit windsurf.run.