Large Language Models (LLMs) like Claude and GPT have transformed API development, enabling advanced text generation, Q&A, and automation. However, as API developers, backend engineers, and tech leads know, interacting with LLMs—especially with long or repetitive prompts—can quickly rack up costs and slow down response times. Every repeated system prompt, tool definition, or few-shot example means wasted compute and dollars.

Prompt caching is a crucial optimization for anyone building LLM-powered APIs or tools. By intelligently storing and reusing the computational state of static prompt sections, prompt caching can dramatically improve latency and reduce spend—especially for chatbots, document Q&A, agents, and RAG workflows.

This article breaks down how prompt caching works, its benefits, how to implement it (with real Anthropic Claude API examples), pricing implications, limitations, and best practices. You'll also see how platforms like Apidog empower teams building API integrations for LLMs.

💡 Want a powerful API testing platform that generates beautiful API documentation and maximizes team productivity? Try Apidog—it replaces Postman at a much more affordable price!

What is Prompt Caching? Why Should API Developers Care?

Prompt caching allows LLM providers to store the intermediate computational state associated with the static prefix of a prompt (e.g., system instructions, tool definitions, initial context). When future requests reuse that same prefix, the model skips redundant computation—processing only the dynamic suffix (like a new user query).

Core Advantages:

- Faster API Responses: Skip reprocessing thousands of repeated tokens. AWS Bedrock reports up to 85% latency reduction.

- Lower Costs: Only pay full price for new tokens. Cache hits are billed at up to 90% less than standard input rates.

- Efficient Scaling: Ideal for chatbots, RAG, code assistants, and agentic workflows with recurring prompt structures.

- Seamless Integration: Works alongside other LLM features—no need to compromise on complexity or guardrails.

Real-World Use Cases:

- Chatbots with Fixed System Prompts: Keep responses fast, even as conversation history grows.

- Document Q&A (RAG): Cache large context passages; only process new questions.

- Few-Shot Learning: Cache reusable examples for consistent performance.

- LLM Agents: Cache complex tool definitions and instructions for multi-step automation.

How Prompt Caching Works: Under the Hood

The Caching Workflow

- Cache Miss (First Request or Changed Prefix):

  - The LLM processes the entire prompt and stores the internal state of the static prefix.

  - A cryptographic hash of the prefix becomes the cache key.

- Cache Hit (Subsequent Identical Prefix):

  - The LLM loads the cached state and skips recomputing the prefix.

  - Only the new (dynamic) suffix is processed at full compute cost.
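The miss/hit flow above can be imitated with a toy in-memory cache. This is only a sketch of the idea: `ToyPrefixCache`, `process`, and the word-count stand-in for tokenization are hypothetical names invented for illustration, and a real provider caches internal model state rather than a number.

```python
import hashlib


class ToyPrefixCache:
    """Toy model of a provider-side prefix cache (illustrative only)."""

    def __init__(self):
        # Maps a hash of the prefix text to a stand-in for the cached state.
        self._store = {}

    def process(self, prefix: str, suffix: str) -> dict:
        """Report which path was taken and how many 'tokens' needed full processing."""
        key = hashlib.sha256(prefix.encode("utf-8")).hexdigest()
        suffix_tokens = len(suffix.split())  # crude stand-in for tokenization
        if key in self._store:
            # Cache hit: only the dynamic suffix is processed from scratch.
            return {"status": "hit", "processed_tokens": suffix_tokens}
        # Cache miss: process everything, then remember the prefix state.
        prefix_tokens = len(prefix.split())
        self._store[key] = prefix_tokens
        return {"status": "miss", "processed_tokens": prefix_tokens + suffix_tokens}


cache = ToyPrefixCache()
prefix = "You are a support bot. Follow the policy document below."
first = cache.process(prefix, "Where is my order?")
second = cache.process(prefix, "How do I reset my password?")
# A single trailing space produces a different hash, so this is a miss again.
changed = cache.process(prefix + " ", "Where is my order?")
print(first["status"], second["status"], changed["status"])
```

The third call shows why exact matching matters: the prefix looks the same to a human reader but hashes to a different key.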

What Counts as the Prefix?

- Tools: Function/tool definitions.

- System Prompt: High-level instructions or context.

- Messages: Initial user/assistant turns, few-shot examples, or context snippets.

The prefix’s structure and order (e.g., tools → system → messages) matter, and the cache boundary is set by API parameters.
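One way to picture why the order matters is to hash the request cumulatively in tools → system → messages order. The sketch below is an assumption-laden illustration, not the provider's actual keying scheme: `cumulative_fingerprints` is a hypothetical helper, and the JSON-plus-SHA-256 encoding is chosen only for convenience.

```python
import hashlib
import json


def cumulative_fingerprints(tools, system, messages):
    """Hash the request in tools -> system -> messages order.

    Each level's fingerprint covers everything before it, so a change at one
    level also changes every later level.
    """
    digest = hashlib.sha256()
    result = {}
    for name, section in (("tools", tools), ("system", system), ("messages", messages)):
        payload = json.dumps(section, sort_keys=True).encode("utf-8")
        # The section name and a separator byte keep the sections unambiguous.
        digest.update(name.encode("utf-8") + b"\x00" + payload)
        result[name] = digest.hexdigest()
    return result


tools = [{"name": "get_weather", "description": "Look up the weather."}]
messages = [{"role": "user", "content": "Hi"}]
base = cumulative_fingerprints(tools, "Be concise.", messages)
edited = cumulative_fingerprints(tools, "Be very concise.", messages)

tools_unchanged = base["tools"] == edited["tools"]
system_unchanged = base["system"] == edited["system"]
messages_unchanged = base["messages"] == edited["messages"]
print(tools_unchanged, system_unchanged, messages_unchanged)
```

Editing the system prompt leaves the tools-level fingerprint intact but changes the system and messages levels, which is the intuition behind putting the most stable content first.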

Cache Characteristics

- Lifetime (TTL): Caches are temporary. Anthropic's default lifetime is 5 minutes, and the timer is refreshed each time the cached prefix is used.

- Privacy: Caches are organization/account-scoped; no cross-org sharing.

- Exact Matching: Any change, even whitespace, busts the cache.

- Concurrent Requests: If multiple requests hit before the cache is written, only the first creates the cache. Others may miss until the first completes.
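The refresh-on-use lifetime can be simulated with a small toy cache. This is a sketch under stated assumptions: `ToyTTLCache` and `lookup` are hypothetical names, the 300-second window is an assumed 5-minute TTL, and time is passed in explicitly so the example is deterministic.

```python
class ToyTTLCache:
    """Toy cache whose entries expire unless they are used again in time."""

    def __init__(self, ttl_seconds: float = 300.0):
        self.ttl_seconds = ttl_seconds
        self._expires_at = {}  # prefix -> expiry timestamp

    def lookup(self, prefix: str, now: float) -> str:
        """Return 'hit' or 'miss' and (re)start the TTL window either way."""
        expiry = self._expires_at.get(prefix)
        status = "hit" if expiry is not None and now < expiry else "miss"
        # A miss writes a fresh entry; a hit refreshes the existing one.
        self._expires_at[prefix] = now + self.ttl_seconds
        return status


cache = ToyTTLCache(ttl_seconds=300)
timeline = [0, 200, 450, 800]  # seconds since the first request
statuses = [cache.lookup("static prefix", now=t) for t in timeline]
print(statuses)
```

The request at 450 seconds is a hit only because the request at 200 seconds refreshed the window; the request at 800 seconds arrives after the refreshed window has expired and has to write the cache again.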

Implementing Prompt Caching: Anthropic Claude & AWS Bedrock Example

Structuring Your Prompts

To enable caching, structure API requests so that static content (system prompt, tools, few-shot examples) comes before dynamic content (user query, latest conversation turn).

    +-------------------------+--------------------------+
    | STATIC PREFIX           | DYNAMIC SUFFIX           |
    | (System Prompt, Tools,  | (New User Query, etc.)   |
    | Few-Shot Examples)      |                          |
    +-------------------------+--------------------------+
                              ^
                              |
                    Cache Breakpoint Here

How to Enable Caching with Anthropic's Messages API

Anthropic uses a cache_control parameter to enable caching in the request body.

Key Points:

- Add a "cache_control": {"type": "ephemeral"} object to the block marking the end of your cacheable prefix (system prompt, message content block, or tool definition).

- Up to 4 cache breakpoints can be set per request for advanced scenarios.

- The cache is keyed by the full content (tools, system, messages) up to the cache_control marker.
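Because the breakpoint limit is easy to exceed once several code paths add markers, a small pre-flight check on the request payload can help. The sketch below works on plain dictionaries shaped like a Messages API request body; `count_cache_breakpoints` and `check_breakpoints` are hypothetical helpers, not part of any SDK.

```python
MAX_BREAKPOINTS = 4  # limit stated in the key points above


def count_cache_breakpoints(request: dict) -> int:
    """Count blocks carrying a cache_control marker in tools, system, and messages."""
    blocks = list(request.get("tools", []))
    system = request.get("system", [])
    if isinstance(system, list):  # a plain-string system prompt cannot carry a marker
        blocks.extend(system)
    for message in request.get("messages", []):
        content = message.get("content", [])
        if isinstance(content, list):  # same for plain-string message content
            blocks.extend(content)
    return sum(1 for block in blocks if isinstance(block, dict) and "cache_control" in block)


def check_breakpoints(request: dict) -> int:
    """Return the breakpoint count, raising ValueError if it exceeds the limit."""
    count = count_cache_breakpoints(request)
    if count > MAX_BREAKPOINTS:
        raise ValueError(f"{count} cache breakpoints set; the limit is {MAX_BREAKPOINTS}")
    return count


marker = {"type": "ephemeral"}
request = {
    "tools": [
        {"name": "get_weather", "description": "Look up the weather.",
         "input_schema": {"type": "object", "properties": {}},
         "cache_control": marker},
    ],
    "system": [{"type": "text", "text": "You are a travel assistant.", "cache_control": marker}],
    "messages": [
        {"role": "user", "content": [
            {"type": "text", "text": "Plan a weekend in Lisbon.", "cache_control": marker},
        ]},
    ],
}
breakpoint_count = check_breakpoints(request)
print(breakpoint_count)
```

Running a check like this before sending keeps the failure local and readable instead of surfacing as an API error.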

Example: Caching a System Prompt

    import anthropic

    client = anthropic.Anthropic(api_key="YOUR_API_KEY")

    # Static prefix, defined once so both requests send byte-identical text.
    # NOTE: this short prompt is for illustration only. A real prefix must meet the
    # model's minimum cacheable length (see "Limitations and Gotchas"), otherwise
    # the request succeeds but nothing is cached.
    SYSTEM_PROMPT = [
        {
            "type": "text",
            "text": "You are a helpful assistant specializing in astrophysics. Your knowledge base includes extensive details about stellar evolution, cosmology, and planetary science. Respond accurately and concisely.",
            # Marks the end of the cacheable prefix.
            "cache_control": {"type": "ephemeral"},
        }
    ]

    # First request (cache write)
    response1 = client.messages.create(
        model="claude-3-5-sonnet-20240620",
        max_tokens=1024,
        system=SYSTEM_PROMPT,
        messages=[{"role": "user", "content": "What is the Chandrasekhar limit?"}],
    )
    print("First Response:", response1.content)
    print("Usage (Write):", response1.usage)
    # With a long enough prefix: cache_creation_input_tokens > 0, cache_read_input_tokens == 0.

    # Subsequent request (cache hit)
    response2 = client.messages.create(
        model="claude-3-5-sonnet-20240620",
        max_tokens=1024,
        system=SYSTEM_PROMPT,
        messages=[{"role": "user", "content": "Explain the concept of dark energy."}],
    )
    print("Second Response:", response2.content)
    print("Usage (Hit):", response2.usage)
    # With a long enough prefix: cache_creation_input_tokens == 0, cache_read_input_tokens > 0.

The first call processes and caches the system prompt. The second, with an identical prefix, hits the cache and only processes the new user input.

Example: Incremental Caching for Chatbots

You can cache conversation turns incrementally by placing cache_control on the final content block of the latest message. Each request then reads the previously cached prefix and writes a new, longer one:

    # NOTE: cache_control goes on a content block, not on the message object itself.
    # This conversation is far too short to be cached in practice; it only shows the shape.

    # Turn 1: cache write for system + first user message
    response_turn1 = client.messages.create(
        model="claude-3-5-sonnet-20240620",
        max_tokens=500,
        system=[{"type": "text", "text": "Maintain a friendly persona."}],
        messages=[
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": "Hello Claude!", "cache_control": {"type": "ephemeral"}}
                ],
            }
        ],
    )

    # Turn 2: cache hit for system + turn 1, cache write up to the new user message
    response_turn2 = client.messages.create(
        model="claude-3-5-sonnet-20240620",
        max_tokens=500,
        system=[{"type": "text", "text": "Maintain a friendly persona."}],
        messages=[
            {"role": "user", "content": [{"type": "text", "text": "Hello Claude!"}]},
            # In a real app, replay the reply actually returned in response_turn1.
            {"role": "assistant", "content": "Hello there! How can I help you today?"},
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": "Tell me a fun fact.", "cache_control": {"type": "ephemeral"}}
                ],
            },
        ],
    )

Tracking Cache Performance

API responses include:

- input_tokens: Non-cached tokens processed (dynamic content).

- output_tokens: Tokens in the generated reply.

- cache_creation_input_tokens: Tokens used to create a new cache entry.

- cache_read_input_tokens: Tokens loaded from cache.

Monitor these fields to optimize your caching strategy.
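As a rough way to monitor those fields, the share of input tokens served from cache can be computed per response. This is a minimal sketch: `cache_hit_ratio` is a hypothetical helper, and it assumes the four usage fields have been copied into a plain dictionary.

```python
def cache_hit_ratio(usage: dict) -> float:
    """Share of input tokens that were served from cache for one response."""
    read = usage.get("cache_read_input_tokens", 0)
    written = usage.get("cache_creation_input_tokens", 0)
    uncached = usage.get("input_tokens", 0)
    total = read + written + uncached
    return read / total if total else 0.0


# Hypothetical usage values for a cache write followed by a cache hit.
write_usage = {"input_tokens": 20, "output_tokens": 50,
               "cache_creation_input_tokens": 1980, "cache_read_input_tokens": 0}
hit_usage = {"input_tokens": 20, "output_tokens": 75,
             "cache_creation_input_tokens": 0, "cache_read_input_tokens": 1980}

write_ratio = cache_hit_ratio(write_usage)
hit_ratio = cache_hit_ratio(hit_usage)
print(write_ratio, hit_ratio)
```

A ratio that stays near zero across many requests usually means the prefix is changing between calls or is shorter than the minimum cacheable length.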

AWS Bedrock Integration

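For Claude models on AWS Bedrock, the approach is nearly identical: when you send an Anthropic-format JSON request body, include cache_control in it as described in the model's API docs. Other Bedrock request styles may use their own syntax for marking cache points, so see Bedrock's documentation for details.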

Prompt Caching Pricing: What Developers Need to Know

Prompt caching introduces a three-tier input token pricing model:

| Model | Base Input ($/MTok) | Cache Write (+25%) ($/MTok) | Cache Read (-90%) ($/MTok) | Output ($/MTok) |
|---|---|---|---|---|
| Claude 3.5 Sonnet | $3.00 | $3.75 | $0.30 | $15.00 |
| Claude 3 Haiku | $0.25 | $0.30 | $0.03 | $1.25 |
| Claude 3 Opus | $15.00 | $18.75 | $1.50 | $75.00 |

- Base Input: Standard input tokens (dynamic suffix or non-cached).

- Cache Write: Premium (25% extra) for first-time cached prefixes.

- Cache Read: Deep discount (90% off) for cached tokens.

- Output: Always standard rate.

Always check the latest Anthropic and AWS Bedrock pricing.

Key takeaway: If your static prefix is reused often, prompt caching quickly pays off, leading to major cost savings for high-traffic apps.
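To make the takeaway concrete, here is a back-of-the-envelope calculation using the Claude 3.5 Sonnet row from the table. The workload (a 10,000-token prefix, 100-token questions, 100 requests) is hypothetical, `input_cost` is an illustrative helper, and the sketch assumes one cache write followed by hits on every later request.

```python
# Claude 3.5 Sonnet input prices from the table, in dollars per million tokens.
PRICES_PER_MTOK = {"base": 3.00, "cache_write": 3.75, "cache_read": 0.30}


def input_cost(prefix_tokens: int, suffix_tokens: int, requests: int, cached: bool) -> float:
    """Input-token cost in dollars for `requests` calls (>= 1) sharing one prefix."""
    per_token = {name: price / 1_000_000 for name, price in PRICES_PER_MTOK.items()}
    suffix_cost = requests * suffix_tokens * per_token["base"]
    if not cached:
        return requests * prefix_tokens * per_token["base"] + suffix_cost
    # Assumes requests arrive close enough together that the cache never expires:
    # the first request pays the write premium, the rest pay the read price.
    write = prefix_tokens * per_token["cache_write"]
    reads = (requests - 1) * prefix_tokens * per_token["cache_read"]
    return write + reads + suffix_cost


uncached = input_cost(10_000, 100, 100, cached=False)
cached = input_cost(10_000, 100, 100, cached=True)
print(f"without caching: ${uncached:.4f}")
print(f"with caching:    ${cached:.4f}")
print(f"savings:         {1 - cached / uncached:.0%}")
```

Under these assumptions caching already wins on the second request: per million prefix tokens, one write plus one read costs $3.75 + $0.30 = $4.05, versus $6.00 for two uncached passes. Output tokens are excluded because they are billed the same either way.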

Limitations and Gotchas

Prompt caching is powerful, but not a silver bullet. Watch out for these:

- Minimum Cacheable Length: Caching only applies to sufficiently long prefixes. Shorter prefixes are processed normally without being cached.

  - Claude 3.5 Sonnet and Claude 3 Opus: 1024+ tokens

  - Claude 3 Haiku: 2048+ tokens

- Cache Sensitivity: Any change (even whitespace) in a prefix causes a cache miss.

- Cache Lifetime: 5 minutes by default, refreshed on each use. Entries cannot be cleared manually.

- Concurrency: Only the first identical request writes the cache; others may miss if sent in parallel before the write finishes.

- Model Support: Not all LLMs offer prompt caching. Confirm availability for your chosen model.

- Debugging: Subtle prompt differences can break caching—be precise in prefix construction.
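When a prefix that should hit the cache keeps missing, comparing the two rendered prefixes character by character often finds the culprit quickly. The helpers below are a hypothetical debugging aid, not part of any SDK; they assume you can log the exact prefix text sent on each request.

```python
def first_divergence(old: str, new: str):
    """Return the index of the first differing character, or None if identical."""
    for index, (a, b) in enumerate(zip(old, new)):
        if a != b:
            return index
    if len(old) != len(new):
        return min(len(old), len(new))  # one string is a strict prefix of the other
    return None


def describe_divergence(old: str, new: str, context: int = 20) -> str:
    """Summarize where two prefixes diverge, with some surrounding text."""
    index = first_divergence(old, new)
    if index is None:
        return "prefixes are identical"
    start = max(0, index - context)
    return (f"first difference at character {index}: "
            f"{old[start:index + context]!r} vs {new[start:index + context]!r}")


old_prefix = "You are a helpful assistant.\nAnswer briefly."
new_prefix = "You are a helpful assistant. \nAnswer briefly."  # stray trailing space
divergence_index = first_divergence(old_prefix, new_prefix)
print(describe_divergence(old_prefix, new_prefix))
```

Printing the snippets with `repr` makes invisible differences such as trailing spaces, tabs, or `\r\n` line endings show up explicitly.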

Best Practices for Maximizing Caching Impact

- Design Stable Prefixes: Place instructions, tools, and reusable context first in the prompt.

- Use cache_control Deliberately: Mark the true end of static content.

- Monitor Cache Metrics: Watch cache_read_input_tokens vs. cache_creation_input_tokens to gauge effectiveness.

- Standardize Prompt Formatting: Avoid accidental changes (extra spaces, formatting tweaks) that break exact matching.

- Cache for High-Impact Scenarios: Focus on long and frequently reused prefixes—avoid caching for highly variable or short user inputs.

- Iterate and Test: Experiment with structure and breakpoints to fit your workload and team needs.
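One way to standardize formatting is to normalize the static parts of a request before sending them, on the assumption that byte-identical requests are the safest route to exact matches. Both helpers below (`canonical_text` and `normalize`) are hypothetical names for this sketch.

```python
import json


def canonical_text(text: str) -> str:
    """Normalize line endings and strip trailing whitespace on every line."""
    lines = text.replace("\r\n", "\n").split("\n")
    return "\n".join(line.rstrip() for line in lines).strip()


def normalize(value):
    """Recursively rebuild dicts with sorted keys so serialization order is stable."""
    if isinstance(value, dict):
        return {key: normalize(value[key]) for key in sorted(value)}
    if isinstance(value, list):
        return [normalize(item) for item in value]
    return value


# The same tool definition assembled with different key orders.
tool_a = {"name": "get_weather", "description": "Look up the weather.",
          "input_schema": {"type": "object", "properties": {}}}
tool_b = {"input_schema": {"properties": {}, "type": "object"},
          "description": "Look up the weather.", "name": "get_weather"}

raw_equal = json.dumps(tool_a) == json.dumps(tool_b)
normalized_equal = json.dumps(normalize(tool_a)) == json.dumps(normalize(tool_b))
clean_prompt = canonical_text("Be concise.  \r\nUse bullet points.\n")
print(raw_equal, normalized_equal, repr(clean_prompt))
```

Applying the same normalization in every code path that builds a request removes a whole class of accidental cache misses, at the cost of never being able to rely on trailing whitespace being preserved.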

Building LLM-Powered APIs? Choose the Right Tools

Prompt caching is essential for building scalable, cost-effective LLM solutions. But designing, testing, and maintaining robust API workflows around LLMs also requires the right platform.

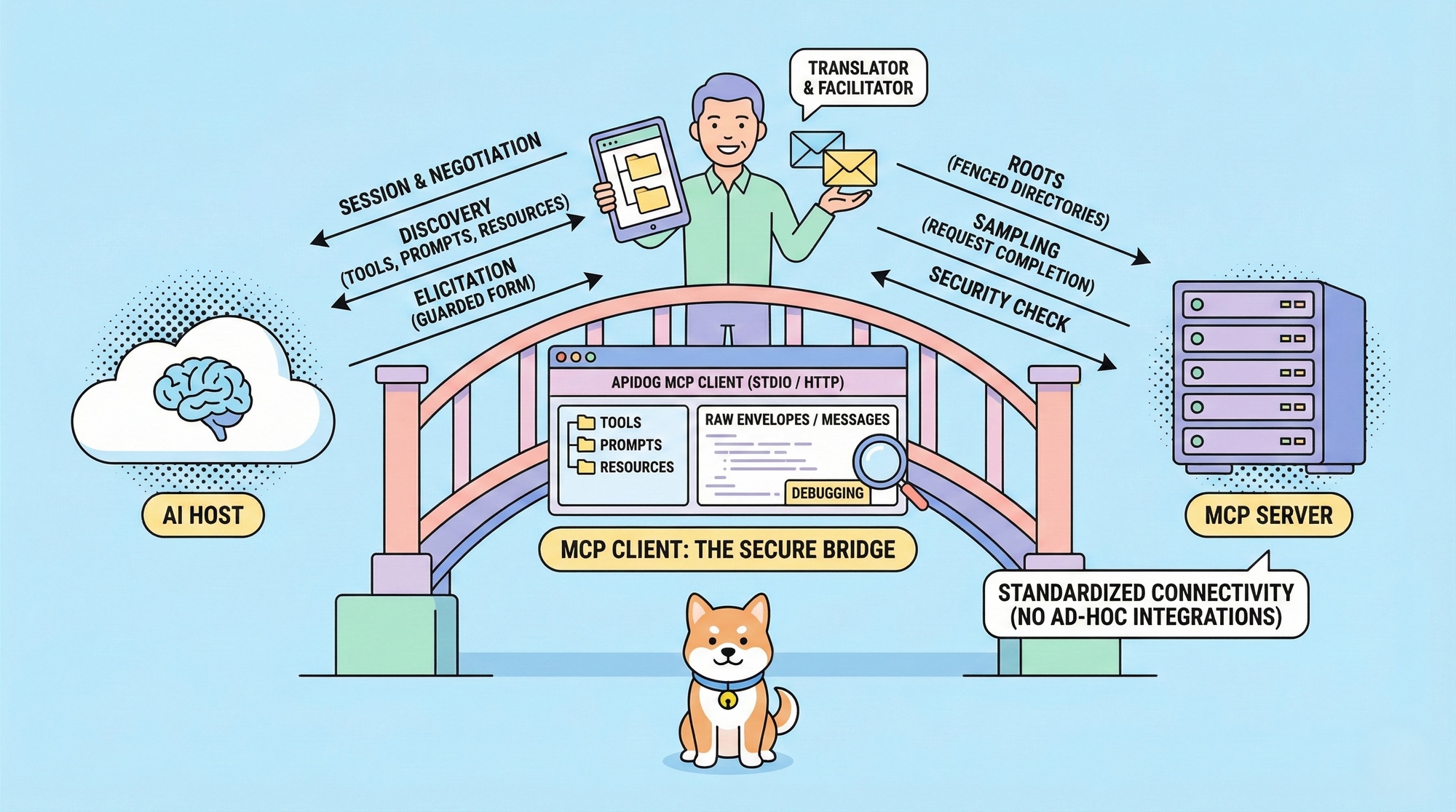

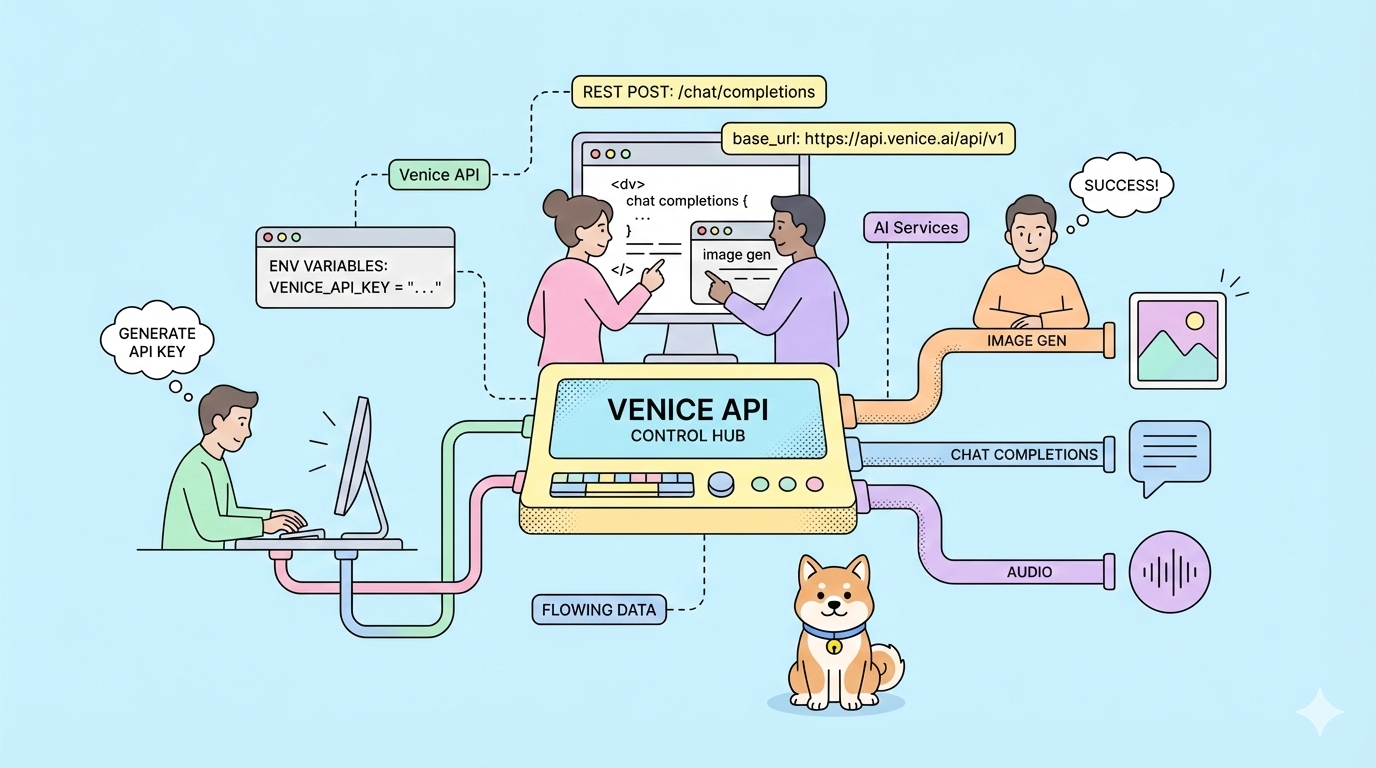

Apidog empowers API teams and backend engineers to:

- Rapidly design and test LLM-powered endpoints.

- Automatically generate beautiful API documentation for collaboration.

- Integrate LLM features—like prompt caching and complex message flows—into your API lifecycle.

- Collaborate with your team for maximum productivity.

- Replace legacy tools like Postman at a much more affordable price.

Try Apidog today to streamline your LLM API development and make the most of prompt caching.