Working out exactly which API endpoints are available for OpenAI's Codex has become increasingly complex as the platform has evolved since its 2021 launch. Developers frequently ask: "What API endpoints can I actually use with Codex?" The answer requires distinguishing between legacy systems, current implementations, and emerging capabilities within OpenAI's rapidly evolving ecosystem.

Why Codex API Endpoints Matter in 2026

Codex has evolved beyond its 2021 roots as a code completion tool into a full-fledged software engineering agent. It now handles complex tasks such as dependency resolution, test execution, and UI debugging with multimodal inputs. Developers use its API endpoints to embed these capabilities in CI/CD pipelines, custom bots, and enterprise applications. Understanding these endpoints unlocks scalable automation, reducing task times by up to 90% in cloud environments.

Evolution of the Codex API: From Completions to Agentic Endpoints

Initially, Codex relied on the /v1/completions endpoint with models like davinci-codex. By 2026, OpenAI had pivoted to the Chat Completions API, integrating GPT-5-Codex for advanced reasoning. Beta endpoints for cloud tasks and code reviews extend this further, supporting parallel execution and GitHub integration.

This transition addresses earlier limitations, such as context loss and concurrency constraints. Consequently, developers now access Codex through a unified API framework, with beta features available via Pro plans. Apidog complements this by enabling rapid endpoint testing, ensuring smooth adoption.

Core API Endpoints for Codex in 2026

OpenAI structures Codex access around a few key endpoints, primarily through the standard API and beta extensions. Below, we outline each, including HTTP methods, parameters, and code samples.

1. Chat Completions Endpoint: Powering Code Generation

The /v1/chat/completions endpoint (POST) serves as the primary interface for GPT-5-Codex, handling code generation, debugging, and explanations.

Key Parameters:

- model: Use "gpt-5-codex" for coding tasks; "codex-mini-latest" for lighter queries.

- messages: Array of role-content pairs, e.g., [{"role": "system", "content": "You are a Python expert."}, {"role": "user", "content": "Write a Django REST API for user authentication."}].

- max_tokens: Set to 4096 for detailed outputs.

- temperature: 0.2 for precise code; 0.7 for creative explorations.

- tools: Supports function calling for external integrations.

Authentication: Pass a bearer token in the Authorization header, i.e. Authorization: Bearer $OPENAI_API_KEY.

Example in Python:

```python
import openai

# The client reads OPENAI_API_KEY from the environment by default.
client = openai.OpenAI()

response = client.chat.completions.create(
    model="gpt-5-codex",
    messages=[
        {"role": "system", "content": "Follow Python PEP 8 standards."},
        {"role": "user", "content": "Generate a REST API endpoint for task management."},
    ],
    max_tokens=2000,
    temperature=0.3,
)

# The generated code is in the first choice's message.
print(response.choices[0].message.content)
```

This endpoint excels in iterative workflows because it maintains context across messages. It resolves 74% of SWE-bench tasks autonomously, outperforming general models. Keep prompts tight to avoid token bloat, and use Apidog to monitor usage.
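Because every message in the history counts against the context window, one way to limit token bloat is to drop the oldest turns once a budget is exceeded. The sketch below is a minimal illustration rather than an official utility: `estimate_tokens` and `trim_history` are hypothetical helper names, and the four-characters-per-token ratio is a rough assumption (a real tokenizer gives exact counts).

```python
def estimate_tokens(text: str) -> int:
    """Rough token estimate: about 4 characters per token (heuristic, not exact)."""
    return max(1, len(text) // 4)


def trim_history(messages: list[dict], budget: int) -> list[dict]:
    """Keep all system messages plus the most recent turns that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]

    used = sum(estimate_tokens(m["content"]) for m in system)
    kept: list[dict] = []
    # Walk backwards so the newest turns are considered first.
    for message in reversed(turns):
        cost = estimate_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost

    # Restore chronological order before returning.
    return system + list(reversed(kept))


history = [
    {"role": "system", "content": "Follow Python PEP 8 standards."},
    {"role": "user", "content": "x" * 400},       # oldest turn, roughly 100 tokens
    {"role": "assistant", "content": "y" * 200},  # roughly 50 tokens
    {"role": "user", "content": "z" * 80},        # newest turn, roughly 20 tokens
]
trimmed = trim_history(history, budget=80)
print([m["role"] for m in trimmed])
```

With a budget of 80 estimated tokens, the oldest user turn is dropped while the system message and the two most recent turns survive. The trimmed list can be passed as `messages` in the request above.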

2. Cloud Task Delegation Endpoint: Autonomous Execution

The beta /v1/codex/cloud/tasks endpoint (POST) delegates tasks to sandboxed cloud containers, ideal for parallel processing.

Key Parameters:

- task_prompt: Instructions like "Refactor this module for TypeScript."

- environment: JSON defining runtime, e.g., {"runtime": "node:18", "packages": ["typescript"]}.

- repository_context: GitHub repo URL or branch.

- webhook: URL for task status updates.

- multimodal_inputs: Base64 images for UI tasks.

Example in Node.js:

```javascript
const OpenAI = require('openai');

const openai = new OpenAI({ apiKey: process.env.OPENAI_API_KEY });

async function runTask() {
  // Submit the task; status updates are delivered to the webhook URL.
  const task = await openai.beta.codex.cloud.create({
    task_prompt: 'Create a React component with Jest tests.',
    environment: { runtime: 'node:18', packages: ['react', 'jest'] },
    repository_context: 'https://github.com/user/repo/main',
    webhook: 'https://your-webhook.com'
  });
  console.log(`Task ID: ${task.id}`);
}

// Run the task and surface any request error.
runTask().catch(console.error);
```

This endpoint reduces completion times by up to 90% through caching. Use Apidog to mock webhook responses for testing.
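On the receiving side, the webhook handler has to parse each status update and decide whether the task has finished. The sketch below shows one way to do that. The payload shape is an assumption, since the fields `id` and `status` and the status values in `TERMINAL_STATUSES` are hypothetical and not taken from OpenAI documentation; adjust them to whatever your mocked or real webhook actually sends.

```python
import json

# Assumed terminal states; the real status vocabulary may differ.
TERMINAL_STATUSES = {"completed", "failed", "cancelled"}


def handle_task_webhook(raw_body: bytes) -> dict:
    """Parse a task-status webhook body and report whether the task is done."""
    try:
        event = json.loads(raw_body)
    except json.JSONDecodeError as exc:
        raise ValueError("webhook body is not valid JSON") from exc

    if not isinstance(event, dict):
        raise ValueError("webhook body must be a JSON object")

    task_id = event.get("id")      # hypothetical field name
    status = event.get("status")   # hypothetical field name
    if not task_id or not status:
        raise ValueError("webhook body is missing 'id' or 'status'")

    return {
        "task_id": task_id,
        "status": status,
        "done": status in TERMINAL_STATUSES,
    }


sample = json.dumps({"id": "task_123", "status": "completed"}).encode()
print(handle_task_webhook(sample))
```

Keeping the parsing in a plain function, separate from any web framework, makes it easy to exercise with mocked payloads before a real task is ever submitted.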

3. Code Review Endpoint: Automating PR Analysis

The beta /v1/codex/reviews endpoint (POST) analyzes GitHub PRs, triggered by tags like "@codex review".

Key Parameters:

- pull_request_url: GitHub PR link.

- focus_areas: Array like ["security", "bugs"].

- sandbox_config: Execution settings, e.g., {"network": "restricted"}.

cURL Example:

```bash
curl https://api.openai.com/v1/codex/reviews \
  -H "Authorization: Bearer $OPENAI_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "pull_request_url": "https://github.com/user/repo/pull/456",
    "focus_areas": ["performance", "dependencies"],
    "sandbox_config": {"tests": true}
  }'
```

This endpoint enhances code quality by catching issues early, integrating with CI/CD pipelines.
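To issue the same call from a CI job written in Python, the request can be assembled with the standard library. This is a sketch under stated assumptions: `build_review_request` is a hypothetical helper, the URL check is a simple illustration, and the endpoint path and field names are the ones given in this article. The function only builds the request; sending it is left to the caller.

```python
import json
import os
import urllib.request

REVIEWS_URL = "https://api.openai.com/v1/codex/reviews"  # path as given in this article


def build_review_request(
    pr_url: str, focus_areas: list[str], api_key: str
) -> urllib.request.Request:
    """Build (but do not send) the POST request for a PR review."""
    if not pr_url.startswith("https://github.com/") or "/pull/" not in pr_url:
        raise ValueError(f"not a GitHub pull request URL: {pr_url}")

    body = {"pull_request_url": pr_url, "focus_areas": focus_areas}
    return urllib.request.Request(
        REVIEWS_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_review_request(
    "https://github.com/user/repo/pull/456",
    ["performance", "dependencies"],
    api_key=os.environ.get("OPENAI_API_KEY", "sk-placeholder"),
)
print(request.get_method(), request.full_url)
# To send it: urllib.request.urlopen(request)
```

Validating the pull request URL before the call keeps malformed input from reaching the API and failing later in the pipeline.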

4. Legacy Completions Endpoint: Limited Use for Backward Compatibility

The deprecated /v1/completions endpoint supports codex-mini-latest for basic code generation but is scheduled for phase-out by 2026. It takes a single raw prompt rather than a list of messages, which makes it less suited to agentic tasks.

Key Parameters:

- model: "codex-mini-latest".

- prompt: Raw text input, e.g., "Write a Python function to parse CSV files."

- max_tokens: Up to 2048.

Example in Python:

```python
import openai

# The client reads OPENAI_API_KEY from the environment by default.
client = openai.OpenAI()

response = client.completions.create(
    model="codex-mini-latest",
    prompt="Write a Python function to parse CSV files.",
    max_tokens=500,
)

# Legacy responses expose the output as plain text.
print(response.choices[0].text)
```

Migrate to chat completions for better context handling and performance, as legacy endpoints lack multimodal support and agentic reasoning.
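The mechanical part of that migration is small: the raw `prompt` string becomes a single user message, and the remaining arguments carry over. The helper below is a hypothetical sketch of that translation, not an official migration tool, and the default target model is simply the one used earlier in this article.

```python
def completion_to_chat(legacy_params: dict, chat_model: str = "gpt-5-codex") -> dict:
    """Translate legacy completions arguments into chat completions arguments."""
    params = dict(legacy_params)  # copy so the caller's dict is left untouched
    prompt = params.pop("prompt")
    params["model"] = chat_model
    # The raw prompt becomes one user message.
    params["messages"] = [{"role": "user", "content": prompt}]
    return params


legacy = {
    "model": "codex-mini-latest",
    "prompt": "Write a Python function to parse CSV files.",
    "max_tokens": 500,
}
chat_params = completion_to_chat(legacy)
print(chat_params)
```

The result can be unpacked into `client.chat.completions.create(**chat_params)`. Remember that the output then lives in `response.choices[0].message.content` rather than `response.choices[0].text`.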

Best Practices for Using Codex API Endpoints

Maximize endpoint efficiency with these strategies:

- Craft Precise Prompts: Specify languages and constraints, e.g., "Use Go with error handling."

- Optimize Tokens: Batch requests and monitor via Apidog analytics.

- Handle Errors: Check finish_reason for incomplete outputs and retry.

- Secure Calls: Use sandboxed environments and sanitize inputs.

- Iterate Context: Leverage conversation history for agentic workflows.

These practices cut iterations by 50%, boosting productivity.
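The error-handling advice can be sketched as a small retry loop. A `finish_reason` of `"length"` means the output was cut off at the token limit, so the loop below retries with a larger limit. The wrapper `generate_with_retry`, the doubling strategy, and the `fake_call` stand-in are assumptions made for illustration; in practice `call` would wrap a real chat completions request and return its text and `finish_reason`.

```python
from typing import Callable


def generate_with_retry(
    call: Callable[[int], tuple[str, str]],
    max_tokens: int = 500,
    max_attempts: int = 3,
) -> str:
    """Retry with a doubled token limit while the output is truncated.

    `call` takes max_tokens and returns (text, finish_reason).
    """
    for _ in range(max_attempts):
        text, finish_reason = call(max_tokens)
        if finish_reason != "length":
            return text
        max_tokens *= 2  # output was cut off; allow more room next time
    raise RuntimeError(f"output still truncated after {max_attempts} attempts")


def fake_call(max_tokens: int) -> tuple[str, str]:
    """Stand-in for a real API call: truncates unless given 2000+ tokens."""
    if max_tokens < 2000:
        return "def parse(", "length"
    return "def parse(path): ...", "stop"


print(generate_with_retry(fake_call))
```

Capping the number of attempts matters here, because each retry costs tokens and a prompt that can never fit should fail loudly rather than loop.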

API Development Workflows with Apidog Integration

Although Codex has evolved beyond traditional API endpoints, developers working on API-focused projects benefit from pairing Codex assistance with a full API development tool such as Apidog. The combination improves both the accuracy of generated code and the reliability of the resulting API.

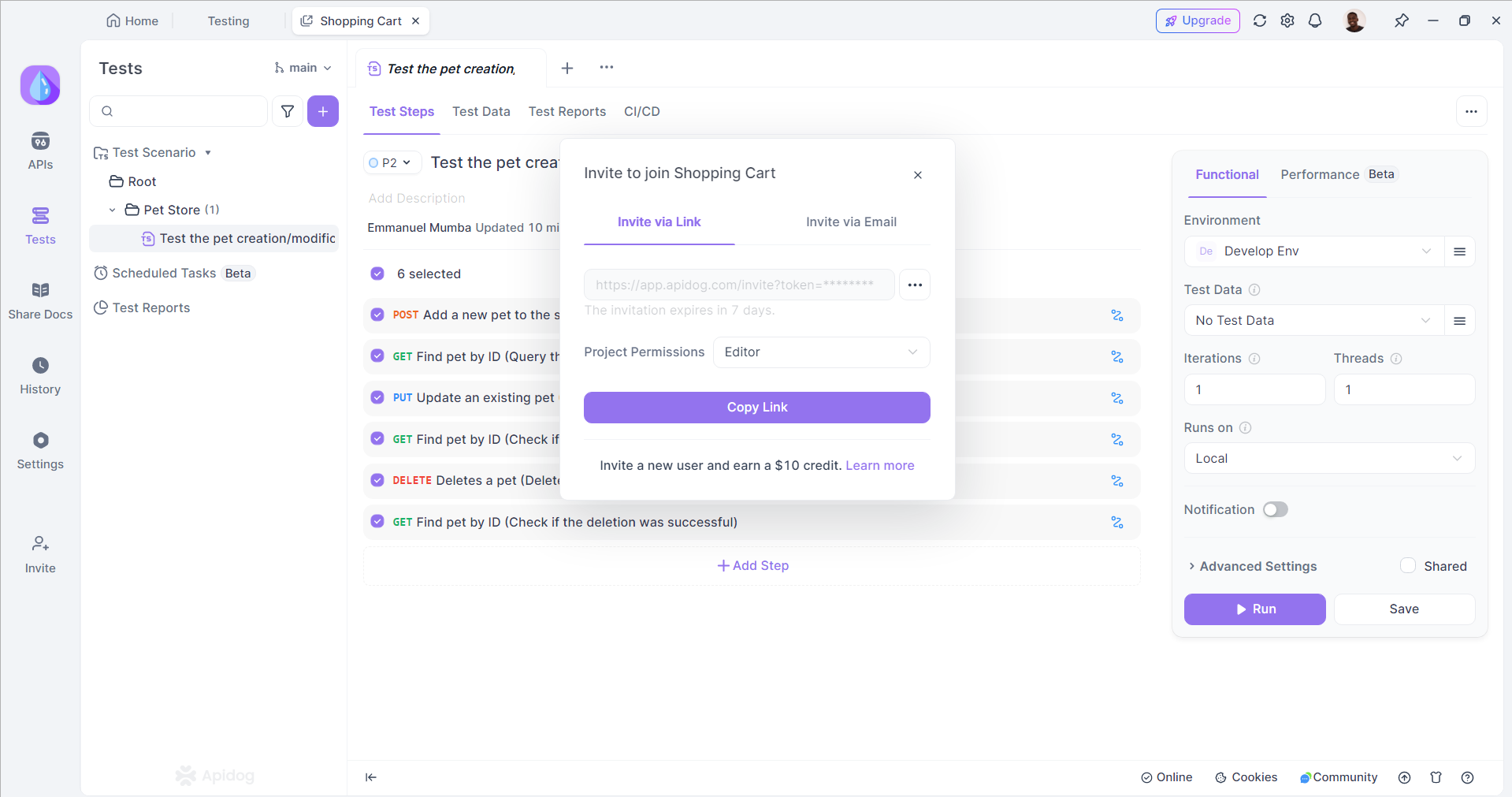

Apidog provides API testing, documentation, and collaboration features that complement Codex's code generation capabilities. When Codex generates API implementation code, Apidog can validate, test, and document the resulting endpoints through automated processes.

Using Apidog in Your Development Workflow

Integrating Apidog into your development workflow can streamline your API management process. Here's how to use Apidog effectively alongside your development work:

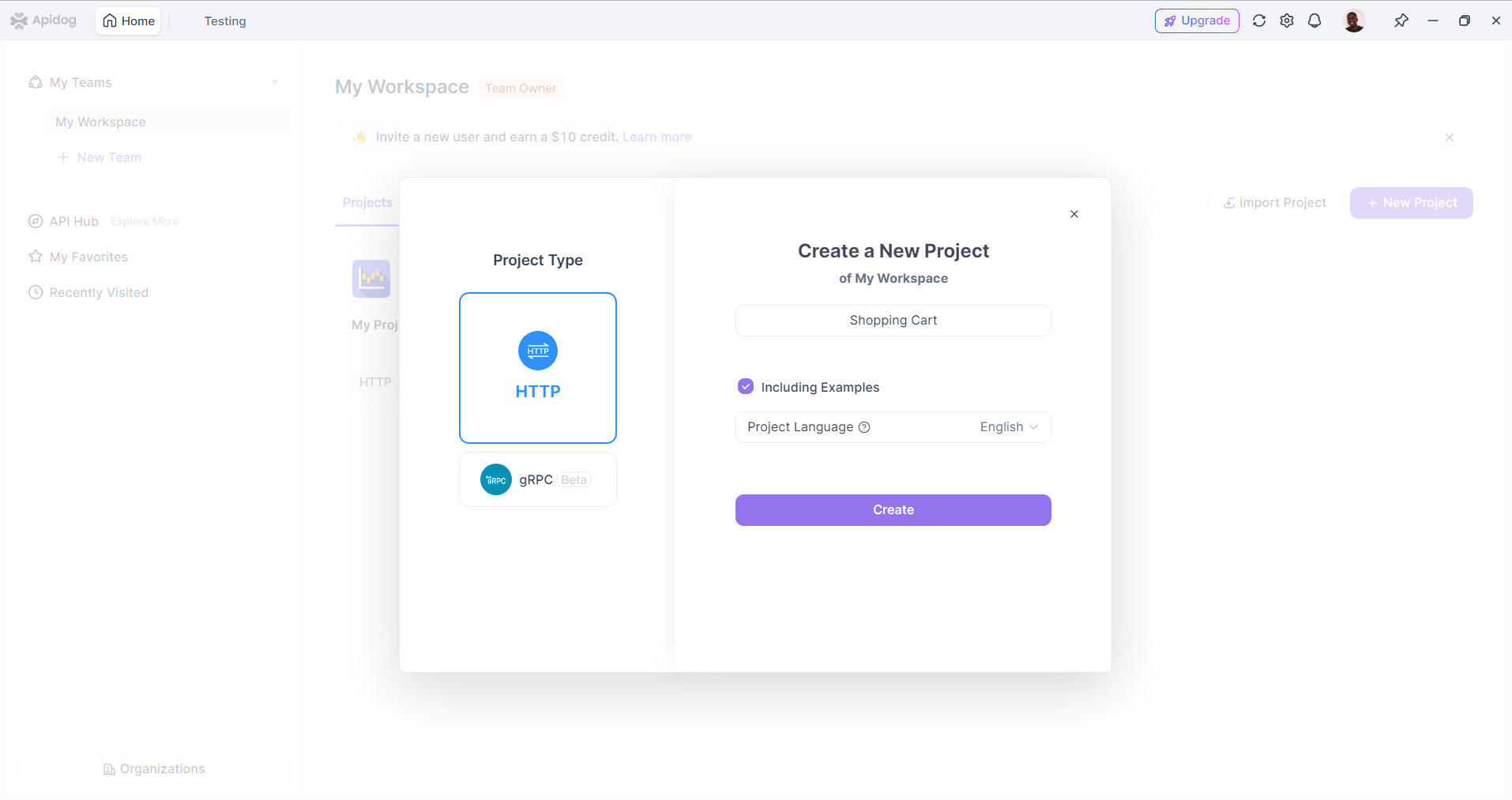

Step 1: Define Your API Specifications

Start by defining your API specifications in Apidog. Create a new API project and outline the endpoints, request parameters, and response formats. This documentation will serve as a reference for your development team.

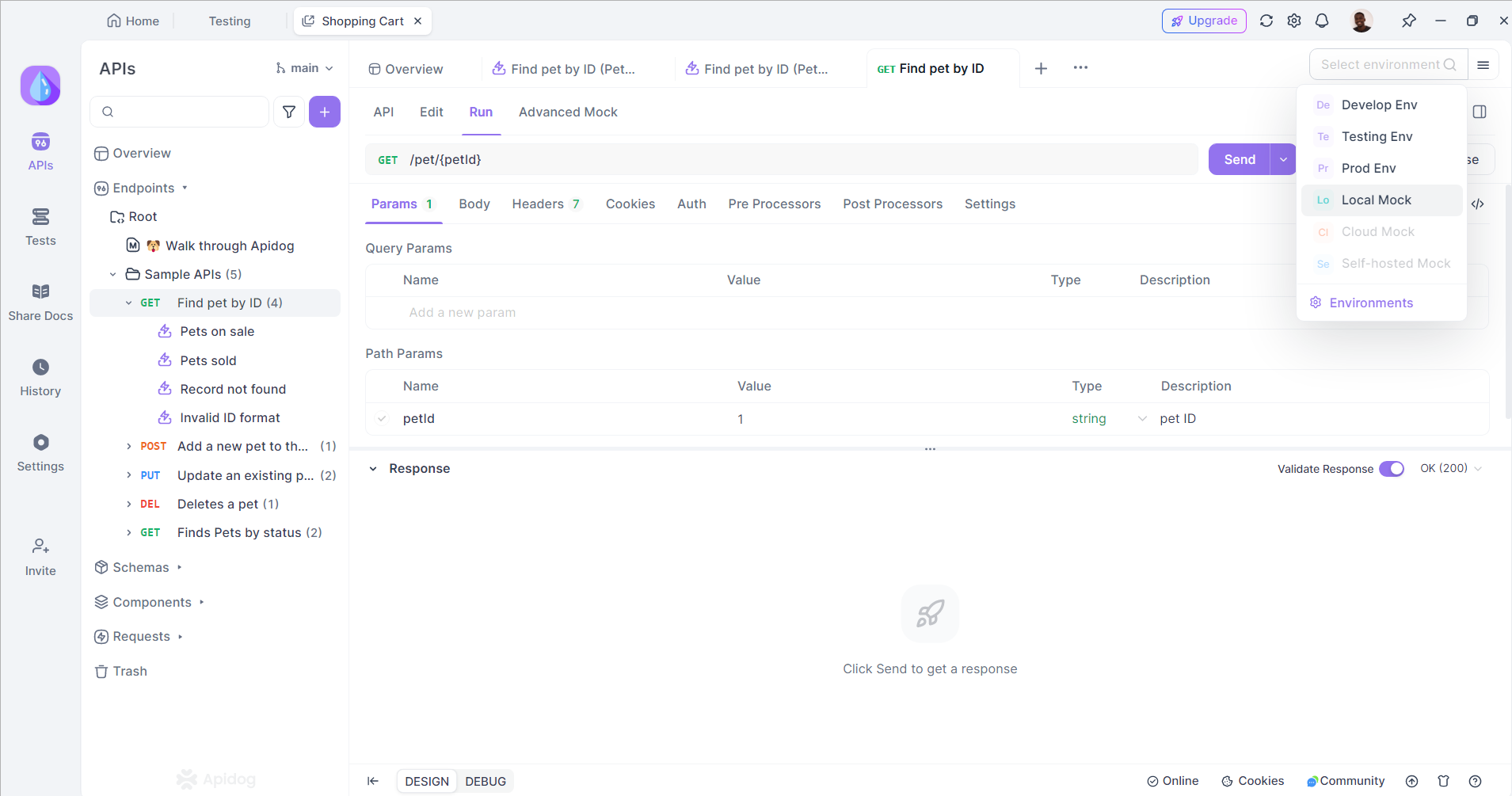

Step 2: Generate Mock Responses

Use Apidog to generate mock responses for your API endpoints. This allows you to test your frontend application without relying on the actual API, which may be under development or unavailable. Mocking responses helps you identify issues early in the development process.
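The same idea applies inside unit tests: code that calls Codex can be exercised against a canned response instead of the live API. The sketch below uses Python's standard `unittest.mock` for an in-process mock, which is separate from Apidog's hosted mock servers. The function `generate_code` is a hypothetical wrapper, and the fake response only mimics the `choices[0].message.content` shape used earlier in this article.

```python
from types import SimpleNamespace
from unittest.mock import Mock


def generate_code(client, prompt: str) -> str:
    """Hypothetical wrapper around a chat completions call."""
    response = client.chat.completions.create(
        model="gpt-5-codex",
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content


# Build a mock client whose create() returns a canned response.
mock_client = Mock()
mock_client.chat.completions.create.return_value = SimpleNamespace(
    choices=[SimpleNamespace(message=SimpleNamespace(content="print('hello')"))]
)

print(generate_code(mock_client, "Say hello in Python."))
mock_client.chat.completions.create.assert_called_once()
```

Because no network call is made, tests like this run quickly and keep working while the real endpoint is unavailable or still changing.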

Step 3: Test API Endpoints

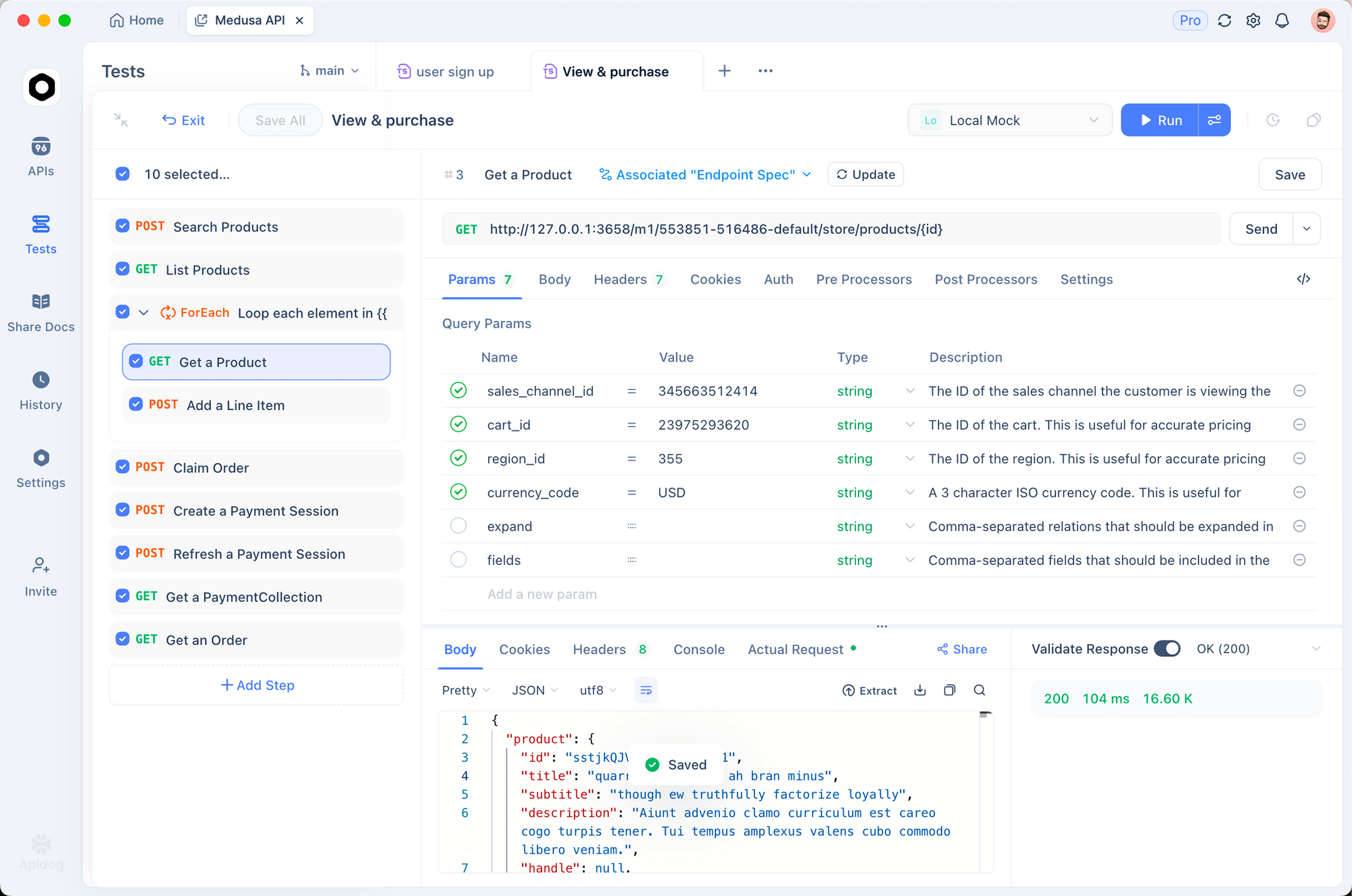

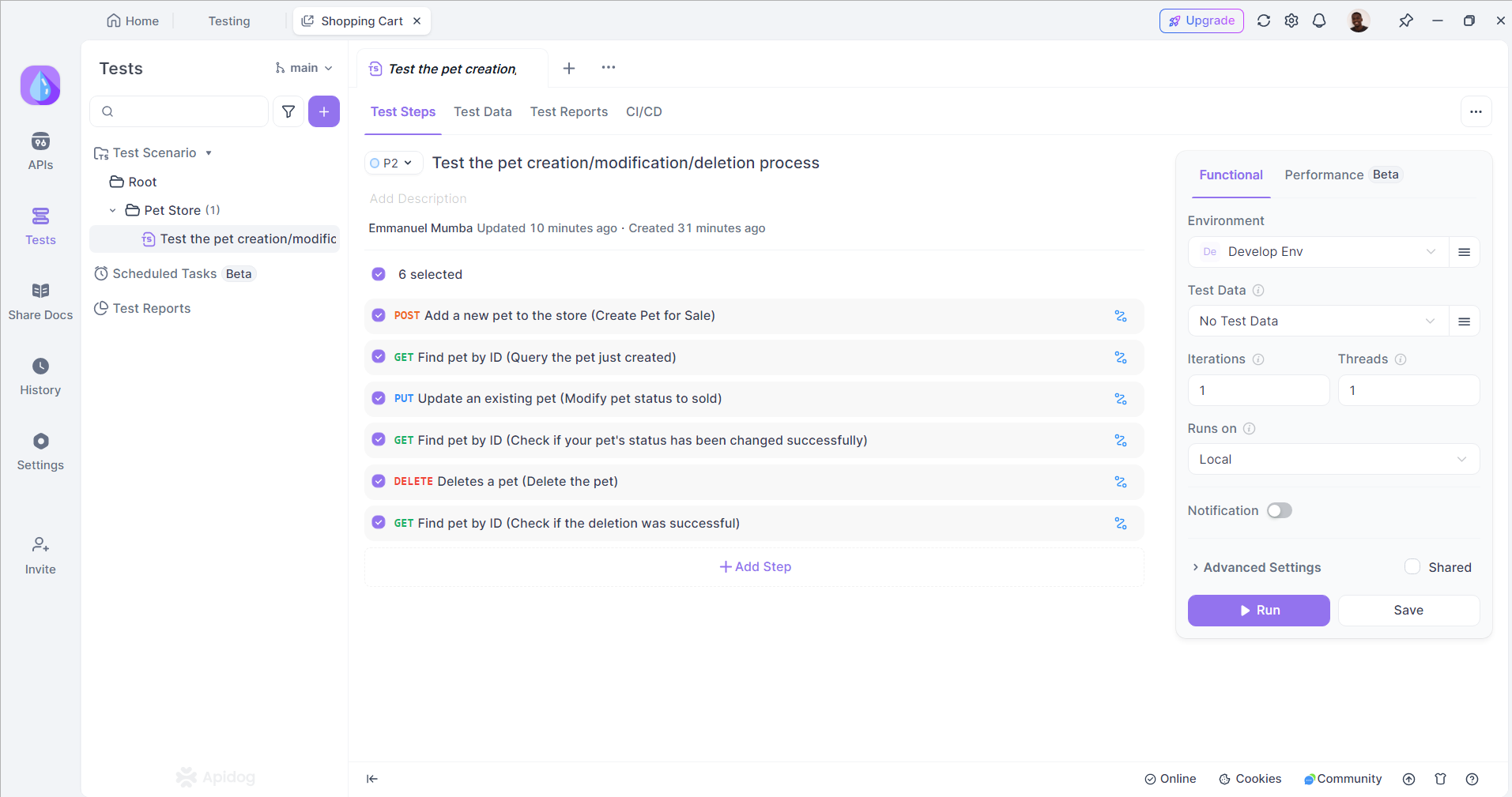

Once your API is ready, use Apidog to test the endpoints. This ensures that they return the expected data and handle errors correctly. You can also use Apidog's testing features to automate this process, saving time and reducing the risk of human error.
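At its core, "returns the expected data" is a contract check: every required field is present and has the right type. The sketch below shows that check as a small function, independent of any tool. `check_contract` and the `TASK_CONTRACT` schema are hypothetical and stand in for whatever specification you defined in Step 1.

```python
def check_contract(payload: dict, required: dict[str, type]) -> list[str]:
    """Return a list of contract violations; an empty list means the payload passes."""
    problems = []
    for field, expected_type in required.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            problems.append(
                f"{field}: expected {expected_type.__name__}, "
                f"got {type(payload[field]).__name__}"
            )
    return problems


TASK_CONTRACT = {"id": int, "title": str, "done": bool}  # hypothetical schema

good = {"id": 1, "title": "Write docs", "done": False}
bad = {"id": "1", "title": "Write docs"}

print(check_contract(good, TASK_CONTRACT))
print(check_contract(bad, TASK_CONTRACT))
```

Returning every violation, rather than stopping at the first one, gives a fuller report when generated code drifts from the specification.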

Step 4: Collaborate with Your Team

Encourage your team to use Apidog for collaboration. Developers can leave comments on API specifications, suggest changes, and track revisions. This collaborative approach fosters communication and ensures that everyone is on the same page.

Step 5: Maintain Documentation

As your API evolves, make sure to update the documentation in Apidog. This will help keep your team informed about changes and ensure that external partners have access to the latest information.

The integrated workflow typically follows this pattern:

- Natural language specification describes desired API functionality

- Codex generates implementation code based on the specification

- Apidog automatically imports and validates the generated API endpoints

- Real-time testing ensures generated code meets functional requirements

- Collaborative documentation enables team-wide understanding and maintenance

- Continuous validation maintains API reliability throughout development cycles

Enterprise Configuration and Team Management

Enterprise Codex implementations require additional configuration layers beyond individual developer setups. These configurations ensure compliance, security, and team collaboration while preserving the simplified integration experience that characterizes modern Codex implementations.

Codex is a single agent that runs everywhere you code: in the terminal, in the IDE, in the cloud, in GitHub, and on your phone. Enterprise environments, however, may require administrative approval and configuration before team members can access full functionality. This setup process ensures organizational compliance while maintaining development productivity.

Administrative configurations typically encompass:

- User access permissions aligned with organizational roles and responsibilities

- Repository access controls that respect existing GitHub permission structures

- Compliance monitoring for code generation and modification activities

- Usage analytics that provide insights into team productivity and AI assistance utilization

Team-focused features enable collaborative development with AI assistance while maintaining individual accountability and code quality standards. These collaborative capabilities integrate seamlessly with existing team workflows without requiring custom API implementations or endpoint management.

Performance Optimization and Resource Management

Understanding Codex performance characteristics enables more effective use across different development scenarios. Unlike traditional API endpoints with predictable response times and resource requirements, Codex performance varies significantly with task complexity, execution environment, and available computational resources.

The system automatically selects optimal execution environments based on task characteristics, available resources, and performance requirements. Simple operations typically execute locally for immediate response, while complex analysis tasks leverage cloud resources for enhanced computational capabilities.

Performance Optimization Pattern:

```python
import logging
import time


# Placeholder hooks: wire these to your own CLI, cloud, and GitHub integrations.
def execute_local_codex(prompt):
    raise NotImplementedError

def delegate_to_cloud(prompt):
    raise NotImplementedError

def trigger_github_review(comment):
    raise NotImplementedError


def monitor_codex_performance(operation_type):
    """Monitor Codex performance across different operations."""
    start_time = time.perf_counter()
    try:
        if operation_type == "simple_completion":
            # Local CLI execution for immediate response
            result = execute_local_codex("Generate simple function")
        elif operation_type == "complex_analysis":
            # Cloud execution for resource-intensive tasks
            result = delegate_to_cloud("Analyze entire codebase architecture")
        elif operation_type == "code_review":
            # GitHub integration for collaborative review
            result = trigger_github_review("@codex review security issues")
        else:
            # Fail fast instead of returning an unbound result
            raise ValueError(f"Unknown operation type: {operation_type}")

        duration = time.perf_counter() - start_time
        logging.info(f"{operation_type} completed in {duration:.2f}s")
        return result
    except Exception as e:
        duration = time.perf_counter() - start_time
        logging.error(f"{operation_type} failed after {duration:.2f}s: {e}")
        raise
```

Optimal Codex utilization involves understanding these execution patterns:

- Simple code suggestions and completions: Local execution through CLI or IDE integration provides immediate response times

- Complex refactoring and analysis: Cloud environments offer superior computational resources for intensive operations

- Repository-wide operations: GitHub integration provides comprehensive context access and coordination capabilities

- Mobile code review activities: iOS app integration enables location-independent development work

Security Considerations and Best Practices

Codex implementations incorporate security measures that address the particular challenges of AI-assisted development. These features operate transparently within the integration experience while protecting sensitive code and organizational intellectual property.

Codex also requires stronger authentication than traditional API usage patterns. The system mandates multi-factor authentication (MFA) for email/password accounts and strongly recommends MFA setup for social login providers to ensure account security.

The cloud-based architecture implements comprehensive data protection measures that ensure code privacy while enabling sophisticated AI assistance. Sandboxed execution environments prevent cross-project data exposure while maintaining the contextual awareness necessary for effective development assistance.

Additionally, all code processing occurs within secure, encrypted environments that meet enterprise security standards, ensuring that sensitive intellectual property remains protected throughout the development process.
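A client-side complement to these protections is to scrub obvious secrets from code before it is sent in a prompt. The sketch below is illustrative only: `redact_secrets` is a hypothetical helper, and the two patterns are assumptions that catch common cases (OpenAI-style `sk-` keys and simple `password = ...` assignments), not an exhaustive secret scanner.

```python
import re

# (pattern, replacement) pairs. Illustrative, not exhaustive.
SECRET_RULES = [
    # OpenAI-style API keys such as sk-abc123...
    (re.compile(r"sk-[A-Za-z0-9_-]{8,}"), "[REDACTED]"),
    # Simple assignments like password = value; keep the name, hide the value.
    (re.compile(r"(?i)\b(password|secret|token)(\s*=\s*)\S+"), r"\1\2[REDACTED]"),
]


def redact_secrets(text: str) -> str:
    """Replace likely secrets in text with a placeholder."""
    for pattern, replacement in SECRET_RULES:
        text = pattern.sub(replacement, text)
    return text


snippet = 'client = OpenAI(api_key="sk-abc123def456ghi789")\npassword = hunter2'
print(redact_secrets(snippet))
```

Running the redaction just before the request is built keeps the original source untouched while ensuring that the prompt carries no raw credentials.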

Embracing the Integrated Development Future

The Codex ecosystem will continue evolving toward more seamless integration patterns, but the fundamental principle remains constant: AI assistance should enhance rather than complicate development workflows. By embracing these integrated approaches and pairing them with complementary tools like Apidog for API development projects, developers can raise productivity while maintaining high standards of code quality and reliability.

The future belongs to development environments where AI assistance operates transparently and intelligently, letting developers focus on creative problem-solving and architectural thinking rather than on managing technical integration complexity. Codex represents a significant step toward this future, providing a foundation for the next generation of AI-assisted development experiences.