Vercel has stepped into the AI arena with its v0 API, featuring the v0-1.0-md model. The API is built to help developers create modern web applications, with features aimed at speed, efficiency, and ease of integration. This article gives an overview of the Vercel v0-1.0-md API: its features, pricing, and how to get started, including how to call it from API development tools like APIdog.

Features of the Vercel v0-1.0-md API

The v0-1.0-md model lies at the heart of the v0 API, bringing a robust set of capabilities tailored for contemporary web development challenges.

Framework-Aware Completions: One of the standout features is its awareness of modern web development stacks. The model has been evaluated on, and optimized for, popular stacks such as Next.js and Vercel's own platform. As a result, developers can expect more relevant, context-appropriate code completions and suggestions when working in these ecosystems.

Auto-fix Capabilities: Writing perfect code on the first try is a rarity. The v0-1.0-md model assists in this by identifying and automatically correcting common coding issues during the generation process. This can significantly reduce debugging time and improve overall code quality.

Quick Edit Functionality: Speed is a recurring theme with the v0 API. The quick edit feature streams inline edits as they become available, so developers see changes as they are produced rather than after the whole edit is finished. This tighter feedback loop makes for a more dynamic and interactive development experience.

OpenAI Compatibility: Recognizing the widespread adoption of OpenAI's API standards, Vercel has made the v0-1.0-md model compatible with the OpenAI Chat Completions API format. This is a significant advantage: developers can use the v0 API with existing tools, SDKs, and libraries that already support OpenAI's request format, typically by pointing them at the v0 base URL with a v0 API key. This interoperability lowers the barrier to entry and makes integration into existing workflows easier.

Multimodal Input: The API is not limited to text-based interactions. It supports multimodal inputs, meaning it can process both text and image data. Images need to be provided as base64-encoded data. This opens up a range of possibilities for applications that require understanding or generating content based on visual information alongside textual prompts.
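Given the API's OpenAI compatibility, a multimodal message most likely reuses the OpenAI content-part format. The TypeScript sketch below assumes that format: a `text` part plus an `image_url` part whose URL is a base64 `data:` URL. The part shapes and the helper name `buildImageMessage` are assumptions for illustration, not taken from Vercel's documentation, so check them against the current API reference.

```typescript
// Content-part shapes borrowed from the OpenAI Chat Completions format.
// ASSUMPTION: v0 accepts these same shapes for multimodal input.
type TextPart = { type: "text"; text: string };
type ImagePart = { type: "image_url"; image_url: { url: string } };

interface UserMessage {
  role: "user";
  content: Array<TextPart | ImagePart>;
}

// Hypothetical helper: pairs a text prompt with one base64-encoded image.
function buildImageMessage(
  prompt: string,
  base64Image: string,
  mimeType: string = "image/png",
): UserMessage {
  // Strip whitespace/newlines that often sneak into copied base64 strings.
  const data = base64Image.replace(/\s+/g, "");
  return {
    role: "user",
    content: [
      { type: "text", text: prompt },
      // The image travels inline as a data: URL rather than as a file upload.
      { type: "image_url", image_url: { url: `data:${mimeType};base64,${data}` } },
    ],
  };
}

// Placeholder base64 (just the 8-byte PNG signature), not a real screenshot.
const message = buildImageMessage(
  "Recreate this layout as a Next.js page.",
  "iVBORw0KGgo=",
);
console.log(JSON.stringify(message, null, 2));
```

The resulting object goes into the `messages` array of a request body alongside any earlier turns.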

Function/Tool Calls: Modern AI applications often require interaction with external systems or the execution of specific functions. The v0-1.0-md model supports function and tool calls, enabling developers to define custom tools that the AI can invoke. This extends the model's capabilities beyond simple text generation, allowing it to perform actions, retrieve data from other APIs, or interact with other services as part of its response generation.
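Because the v0 API follows the OpenAI Chat Completions format, tool definitions and tool calls are assumed here to use OpenAI's shapes: `type: "function"`, a JSON Schema `parameters` object, and `tool_calls` whose `arguments` arrive as a JSON string. The sketch defines one hypothetical tool, `get_package_version`, backed by placeholder data, plus a hypothetical `runToolCalls` helper that executes requested calls locally and wraps each result as a `tool` message. The `tool` role is likewise an OpenAI convention assumed here; the v0 reference in this article lists only `user`, `assistant`, and `system`.

```typescript
// OpenAI-style tool definition. ASSUMPTION: v0 accepts this shape in `tools`.
interface ToolDefinition {
  type: "function";
  function: {
    name: string;
    description: string;
    parameters: Record<string, unknown>; // JSON Schema describing the arguments
  };
}

// A tool call as it appears in an assistant message (OpenAI format, assumed).
interface ToolCall {
  id: string;
  type: "function";
  function: { name: string; arguments: string }; // arguments is a JSON string
}

interface ToolResultMessage {
  role: "tool"; // ASSUMPTION: OpenAI's role for returning tool output
  tool_call_id: string;
  content: string;
}

// Hypothetical tool the model may ask to call.
const tools: ToolDefinition[] = [
  {
    type: "function",
    function: {
      name: "get_package_version",
      description: "Return the installed version of an npm package in the project.",
      parameters: {
        type: "object",
        properties: { name: { type: "string" } },
        required: ["name"],
      },
    },
  },
];

type ToolHandler = (args: Record<string, unknown>) => string;

// Local implementations keyed by tool name.
const handlers: Partial<Record<string, ToolHandler>> = {
  get_package_version: (args) => {
    // Placeholder data standing in for a real lookup (e.g. reading package.json).
    const installed: Record<string, string> = { next: "14.2.3", react: "18.3.1" };
    return installed[String(args.name)] ?? "not installed";
  },
};

// Execute each requested call and wrap the result as a message to send back.
// Note: JSON.parse throws if the model emits malformed arguments.
function runToolCalls(calls: ToolCall[]): ToolResultMessage[] {
  return calls.map((call): ToolResultMessage => {
    const handler = handlers[call.function.name];
    const content = handler
      ? handler(JSON.parse(call.function.arguments) as Record<string, unknown>)
      : `Unknown tool: ${call.function.name}`;
    return { role: "tool", tool_call_id: call.id, content };
  });
}
```

In a full loop you would send `tools` with the request, read `tool_calls` from the assistant message, append that assistant message and the output of `runToolCalls` to `messages`, and call the API again so the model can use the results.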

Low Latency Streaming Responses: For applications that require real-time interaction, such as chatbots or live coding assistants, latency is a critical factor. The v0 API is designed to provide fast, streaming responses. This means that instead of waiting for the entire response to be generated, data is sent in chunks as it becomes available, leading to a much more responsive and engaging user experience.

Optimization for Web Development: The model is specifically optimized for frontend and full-stack web development tasks. This focus ensures that its training and capabilities are aligned with the common challenges and requirements of building modern web applications, from generating UI components to writing server-side logic.

Developers can experiment with the v0-1.0-md model directly in the AI Playground provided by Vercel. This allows for testing different prompts, observing the model's responses, and getting a feel for its capabilities before integrating it into a project.

Vercel v0 API Pricing and Access

Access to the Vercel v0 API, and consequently the v0-1.0-md model, is currently in beta. To use the API, you need to be on a Premium or Team plan with usage-based billing enabled. Detailed pricing information can be found on Vercel's official pricing page. As with many beta programs, it's advisable to check the latest terms and conditions directly with Vercel.

To begin using the API, the first step is to create an API key on v0.dev. This key will be used to authenticate requests to the API.

Usage Limits

Like most API services, the Vercel v0 API has usage limits in place to ensure fair usage and maintain service stability. The currently documented limits for the v0-1.0-md model are:

- Max messages per day: 200

- Max context window size: 128,000 tokens

- Max output context size: 32,000 tokens

These limits are subject to change, especially as the API moves out of beta. For users or applications requiring higher limits, Vercel provides a contact point (support@v0.dev) to discuss potential increases. It's also important to note that by using the API, developers agree to Vercel's API Terms.
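Because the daily message cap is enforced server-side, a client may want to fail fast before hitting it. The sketch below is a hypothetical client-side guard built on the documented limits. It assumes each request counts as one message and that the day rolls over at midnight UTC, and it estimates tokens with a rough four-characters-per-token heuristic rather than the model's real tokenizer. All three are assumptions, so treat the guard as a convenience, not an exact mirror of Vercel's accounting.

```typescript
// Documented beta limits for v0-1.0-md (subject to change).
const V0_LIMITS = {
  messagesPerDay: 200,
  contextTokens: 128_000,
  outputTokens: 32_000,
} as const;

// ASSUMPTION: ~4 characters per token is a crude heuristic, not v0's tokenizer.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Hypothetical guard: counts requests per UTC day and rejects prompts that
// clearly exceed the context window, before any network call is made.
class V0QuotaGuard {
  private day = "";
  private used = 0;

  tryConsume(prompt: string, now: Date = new Date()): { ok: boolean; reason?: string } {
    const today = now.toISOString().slice(0, 10); // YYYY-MM-DD in UTC (assumed reset boundary)
    if (today !== this.day) {
      this.day = today; // a new day: reset the counter
      this.used = 0;
    }
    if (estimateTokens(prompt) > V0_LIMITS.contextTokens) {
      return { ok: false, reason: "prompt likely exceeds the 128,000-token context window" };
    }
    if (this.used >= V0_LIMITS.messagesPerDay) {
      return { ok: false, reason: "daily message limit reached" };
    }
    this.used += 1; // only successful checks count against the quota
    return { ok: true };
  }
}
```

Keeping one guard instance per API key lets every request path share the same counter.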

How to Use the Vercel v0 API

Integrating the Vercel v0 API into a project is designed to be straightforward, particularly for developers familiar with the OpenAI API format or using Vercel's ecosystem.

Integration with AI SDK: Vercel recommends using its AI SDK, a TypeScript library for working with v0 and other OpenAI-compatible models. The SDK simplifies making API calls, handling responses, and integrating AI capabilities into applications.

To get started, you would typically install the necessary packages:

```shell
npm install ai @ai-sdk/openai
```

Example Usage (JavaScript/TypeScript):

The following example demonstrates how to use the generateText function from the AI SDK to interact with the v0-1.0-md model:

```typescript
import { generateText } from 'ai';
import { createOpenAI } from '@ai-sdk/openai';

// Configure an OpenAI-compatible client that points at the Vercel v0 API
const vercel = createOpenAI({
  baseURL: 'https://api.v0.dev/v1', // The v0 API endpoint
  apiKey: process.env.VERCEL_V0_API_KEY, // Your Vercel v0 API key, read from the environment
});

async function getAIChatbotResponse() {
  try {
    const { text } = await generateText({
      // .chat() selects the Chat Completions endpoint, which is what v0 exposes
      model: vercel.chat('v0-1.0-md'),
      prompt: 'Create a Next.js AI chatbot with authentication',
    });
    console.log(text);
    return text;
  } catch (error) {
    console.error('Error generating text:', error);
    // Handle the error appropriately
  }
}

getAIChatbotResponse();
```

In this example:

- We import `generateText` from the `ai` library and `createOpenAI` from `@ai-sdk/openai`.
- An OpenAI-compatible client is created using `createOpenAI`, configured with the v0 API's base URL (`https://api.v0.dev/v1`) and your Vercel v0 API key (which should be stored securely, for example as an environment variable).
- The `generateText` function is called with the `v0-1.0-md` model from the configured `vercel` client and the desired prompt.
- The response from the API, containing the generated text, is then available in the `text` variable.

API Reference:

For direct API interaction, without the SDK, or for understanding the underlying mechanics, the API reference is key.

Endpoint: POST https://api.v0.dev/v1/chat/completions

This single endpoint is used to generate model responses based on a conversation history.

Headers:

- `Authorization`: Required. A Bearer token in the format `Bearer $V0_API_KEY`.
- `Content-Type`: Required. Must be `application/json`.

Request Body: The request body is a JSON object with the following main fields:

- `model` (string, Required): The name of the model. For this API, it should be `"v0-1.0-md"`.
- `messages` (array, Required): A list of message objects that form the conversation history. Each message object must have:
  - `role` (string, Required): Identifies the sender; can be `"user"`, `"assistant"`, or `"system"`.
  - `content` (string or array, Required): The actual message content. This can be a simple string or an array of text and image blocks for multimodal input.
- `stream` (boolean, Optional): If set to `true`, the API returns the response as a stream of Server-Sent Events (SSE). Defaults to `false`.
- `tools` (array, Optional): Definitions of any custom tools (e.g., functions) that the model can call.
- `tool_choice` (string or object, Optional): Specifies which tool the model should call, if tools are provided.
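The field list above maps naturally onto TypeScript types. The sketch below encodes the documented fields and adds a hypothetical `validateRequest` pre-flight check that reports missing or malformed required fields before a request is sent. Leaving `tools` as `unknown[]` and rejecting `tool_choice` without `tools` are simplifications drawn from the descriptions above, not rules confirmed by Vercel.

```typescript
// Request body fields as listed in the v0 API reference.
type Role = "user" | "assistant" | "system";

interface ChatMessage {
  role: Role;
  content: string | unknown[]; // a string, or an array of text/image blocks
}

interface ChatCompletionRequest {
  model: "v0-1.0-md";
  messages: ChatMessage[];
  stream?: boolean; // defaults to false
  tools?: unknown[]; // tool definitions (shape not specified here)
  tool_choice?: string | object;
}

const ROLES = new Set(["user", "assistant", "system"]);

// Hypothetical pre-flight check mirroring the "Required" markers above.
// Returns a list of problems; an empty list means the body looks valid.
function validateRequest(body: unknown): string[] {
  if (typeof body !== "object" || body === null) return ["body must be a JSON object"];
  const b = body as Record<string, unknown>;
  const errors: string[] = [];

  if (b.model !== "v0-1.0-md") errors.push('model must be "v0-1.0-md"');

  const messages = b.messages;
  if (!Array.isArray(messages) || messages.length === 0) {
    errors.push("messages must be a non-empty array");
  } else {
    messages.forEach((m: unknown, i: number) => {
      const msg = (m ?? {}) as Record<string, unknown>;
      if (typeof msg.role !== "string" || !ROLES.has(msg.role)) {
        errors.push(`messages[${i}].role is invalid`);
      }
      if (typeof msg.content !== "string" && !Array.isArray(msg.content)) {
        errors.push(`messages[${i}].content must be a string or an array`);
      }
    });
  }

  if (b.stream !== undefined && typeof b.stream !== "boolean") errors.push("stream must be a boolean");
  if (b.tool_choice !== undefined && b.tools === undefined) errors.push("tool_choice requires tools");
  return errors;
}
```

Running the check on untrusted or user-assembled payloads turns a server-side 4xx into a local, descriptive error.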

Example Request (cURL):

Here's how you might make a direct API call using cURL:

```shell
curl https://api.v0.dev/v1/chat/completions \
  -H "Authorization: Bearer $V0_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "v0-1.0-md",
    "messages": [
      { "role": "user", "content": "Create a Next.js AI chatbot" }
    ]
  }'
```

Example with Streaming (cURL):

To receive a streamed response:

```shell
curl https://api.v0.dev/v1/chat/completions \
  -H "Authorization: Bearer $V0_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "v0-1.0-md",
    "stream": true,
    "messages": [
      { "role": "user", "content": "Add login to my Next.js app" }
    ]
  }'
```
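For callers that don't use the AI SDK, the request the cURL examples send can be built with the standard `fetch` API. The sketch assumes a runtime with a global `fetch` (such as Node.js 18+ or a modern browser); `buildV0Request` and `sendV0Request` are hypothetical helper names. Building the request separately from sending it keeps the header and body logic testable without network access.

```typescript
// Minimal request shape; the full field list is in the API reference above.
interface V0Request {
  model: string;
  messages: { role: "user" | "assistant" | "system"; content: string }[];
  stream?: boolean;
}

const V0_ENDPOINT = "https://api.v0.dev/v1/chat/completions";

// Builds the same request the cURL examples send: POST, Bearer auth, JSON body.
function buildV0Request(apiKey: string, prompt: string, stream = false) {
  const body: V0Request = {
    model: "v0-1.0-md",
    messages: [{ role: "user", content: prompt }],
    ...(stream ? { stream: true } : {}), // omit the field when false (the default)
  };
  return {
    url: V0_ENDPOINT,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiKey}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify(body),
    },
  };
}

// Sends a non-streaming request with the runtime's built-in fetch.
async function sendV0Request(apiKey: string, prompt: string): Promise<unknown> {
  const { url, init } = buildV0Request(apiKey, prompt);
  const res = await fetch(url, init);
  if (!res.ok) {
    // Surface status and body text; the error payload format isn't covered here.
    throw new Error(`v0 API error ${res.status}: ${await res.text()}`);
  }
  return res.json();
}
```

Calling `sendV0Request(apiKey, "Create a Next.js AI chatbot")` should resolve to the non-streaming JSON object described under Response Format. Keep the API key on the server rather than shipping it to browsers.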

Response Format:

Non-streaming (stream: false): The API returns a single JSON object containing the full response. This object includes an id, the model name, an object type (e.g., chat.completion), a created timestamp, and a choices array. Each choice in the array contains the message (with role: "assistant" and the content of the response) and a finish_reason (e.g., "stop").

```json
{
  "id": "v0-123",
  "model": "v0-1.0-md",
  "object": "chat.completion",
  "created": 1715620000,
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "Here's how to add login to your Next.js app..."
      },
      "finish_reason": "stop"
    }
  ]
}
```
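Because the endpoint works on a conversation history, a multi-turn client needs to pull the assistant's text out of each response and append it to the `messages` it sends next. The types below mirror the example response; `firstAnswer` and `withReply` are hypothetical helpers, and only the first choice is read because the example shows a single choice at index 0.

```typescript
// Non-streaming response shape, following the example response.
interface ChatCompletion {
  id: string;
  model: string;
  object: "chat.completion";
  created: number; // Unix timestamp in seconds
  choices: {
    index: number;
    message: { role: "assistant"; content: string };
    finish_reason: string | null;
  }[];
}

type HistoryMessage = { role: "user" | "assistant" | "system"; content: string };

// Returns the first choice's text, warning when generation didn't end normally.
function firstAnswer(res: ChatCompletion): string {
  const choice = res.choices[0];
  if (!choice) throw new Error("response contained no choices");
  if (choice.finish_reason !== "stop") {
    // Anything other than "stop" (e.g. a truncated reply) deserves a closer look.
    console.warn(`generation ended with finish_reason=${choice.finish_reason}`);
  }
  return choice.message.content;
}

// Appends the reply so the next request carries the full conversation history.
function withReply(history: HistoryMessage[], res: ChatCompletion): HistoryMessage[] {
  return [...history, { role: "assistant", content: firstAnswer(res) }];
}
```

Returning a new array rather than mutating `history` makes it easy to keep earlier snapshots of the conversation, for example to retry from a previous turn.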

Streaming (stream: true): The server sends a series of data chunks formatted as Server-Sent Events (SSE). Each event starts with data: followed by a JSON object representing a partial delta of the response. This allows the client to process the response incrementally.

```text
data: {"id":"v0-123","model":"v0-1.0-md","object":"chat.completion.chunk","choices":[{"delta":{"role":"assistant","content":"Here's how"},"index":0,"finish_reason":null}]}
```

A final chunk will typically have a finish_reason other than null.
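A client consuming the stream must buffer incoming text until a complete event arrives, then concatenate each `delta.content`. The sketch below is a hypothetical accumulator. It assumes standard SSE framing (events separated by a blank line, payload carried in `data:` lines) and also tolerates a `data: [DONE]` sentinel, which OpenAI's API sends but which this article does not document for v0.

```typescript
// One streamed chunk, following the example chunk.
interface ChatCompletionChunk {
  id: string;
  model: string;
  object: "chat.completion.chunk";
  choices: {
    index: number;
    delta: { role?: string; content?: string };
    finish_reason: string | null;
  }[];
}

// Hypothetical incremental parser: feed raw text as it arrives.
class V0StreamAccumulator {
  private buffer = "";
  text = ""; // assistant text received so far
  finishReason: string | null = null;
  done = false;

  push(chunk: string): void {
    this.buffer += chunk;
    const events = this.buffer.split(/\r?\n\r?\n/);
    this.buffer = events.pop() ?? ""; // keep any incomplete trailing event
    for (const event of events) this.handleEvent(event);
  }

  // Call once the connection closes to flush a final event with no trailing blank line.
  end(): void {
    if (this.buffer.trim()) this.handleEvent(this.buffer);
    this.buffer = "";
  }

  private handleEvent(event: string): void {
    // Per SSE, multiple data: lines are joined with "\n"; one leading space is dropped.
    const data = event
      .split(/\r?\n/)
      .filter((line) => line.startsWith("data:"))
      .map((line) => line.slice(5).replace(/^ /, ""))
      .join("\n");
    if (!data) return; // comments or keep-alive events carry no data
    if (data === "[DONE]") {
      this.done = true; // ASSUMPTION: OpenAI-style end-of-stream sentinel
      return;
    }
    const parsed = JSON.parse(data) as ChatCompletionChunk;
    for (const choice of parsed.choices) {
      if (choice.index !== 0) continue; // only the first choice is tracked
      this.text += choice.delta.content ?? "";
      if (choice.finish_reason !== null) {
        this.finishReason = choice.finish_reason;
        this.done = true;
      }
    }
  }
}
```

With `fetch`, read `response.body` through a reader, decode each chunk with a `TextDecoder` (passing `{ stream: true }` so multi-byte characters split across chunks survive), hand the strings to `push`, and call `end()` when the stream closes.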

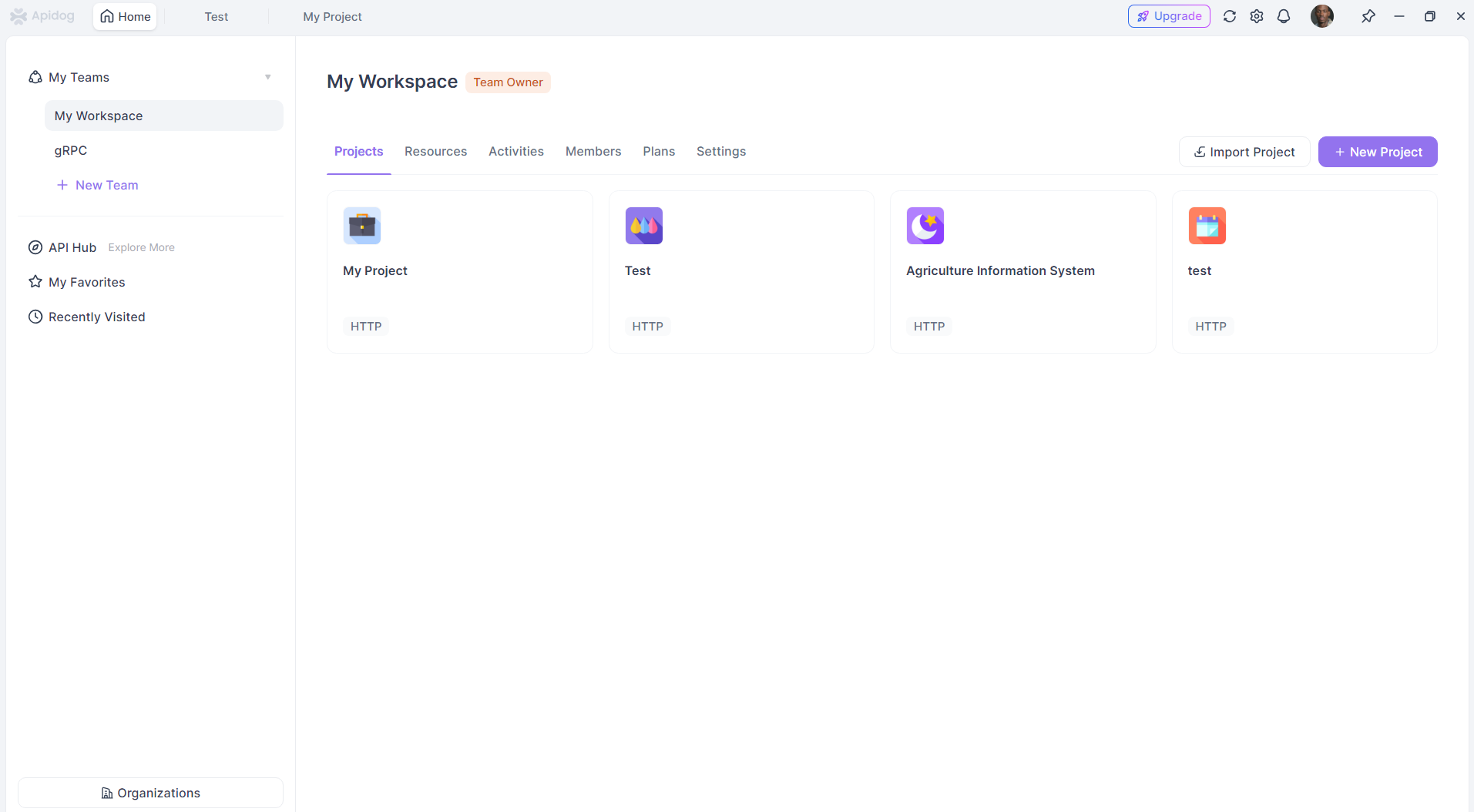

Using the Vercel v0 API with APIdog

APIdog is a comprehensive platform for designing, developing, testing, and documenting APIs. Its strength lies in unifying these stages of the API lifecycle. You can use APIdog to interact with the Vercel v0 API just like any other HTTP-based API.

Here's a general approach to using the Vercel v0 API with APIdog:

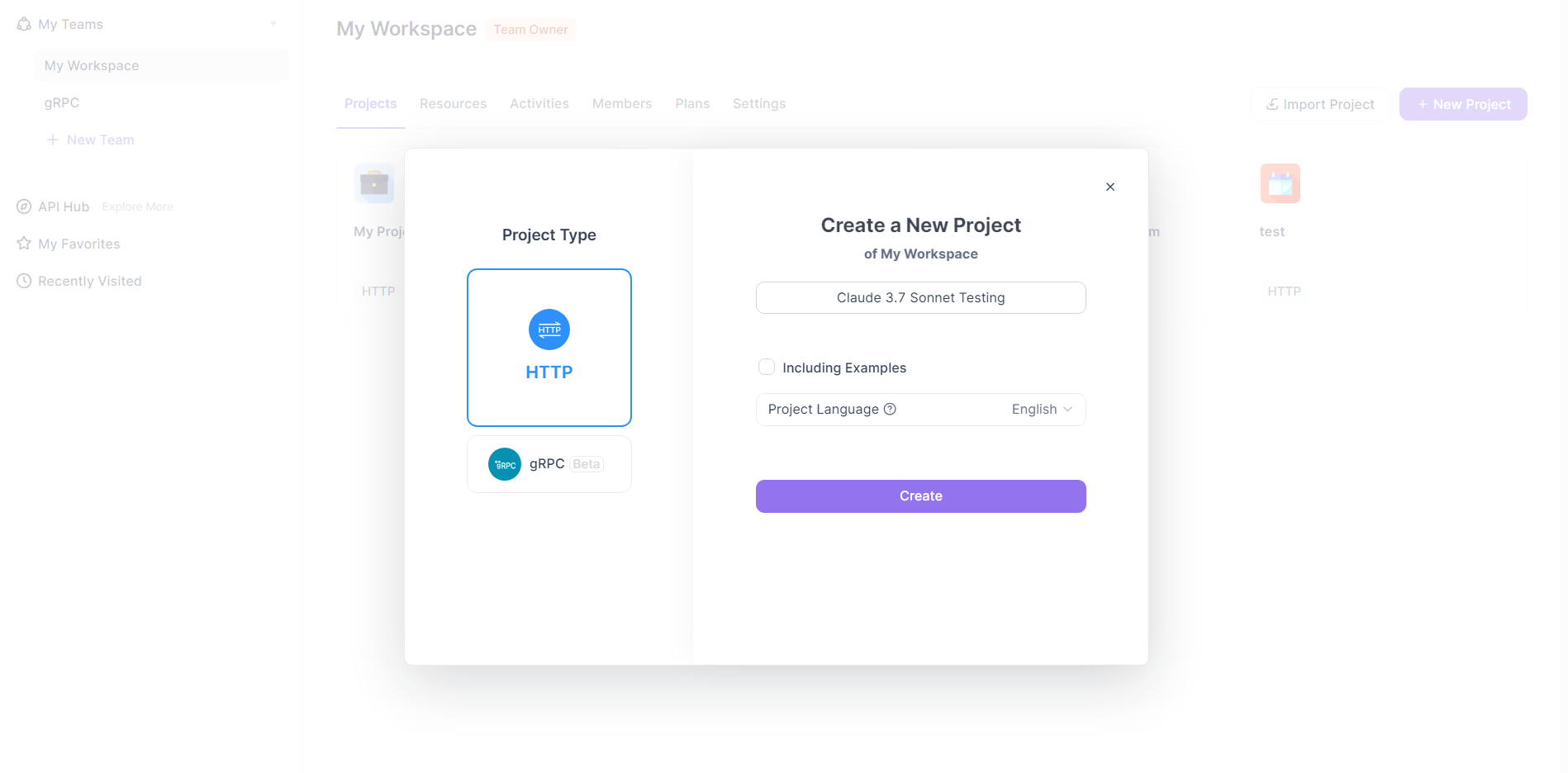

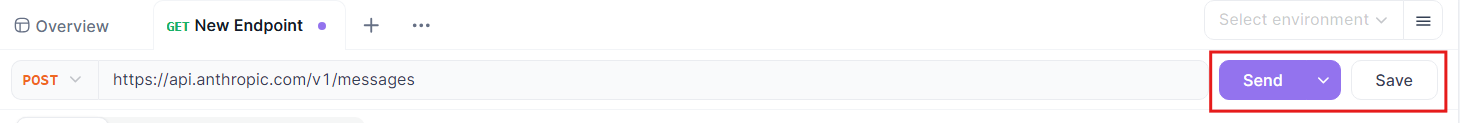

Create a New Request in APIdog:

- Within your APIdog project, initiate the creation of a new request. This is typically done by clicking a "+" icon and selecting "New Request" or a similar option.

- APIdog supports various request types; for the Vercel v0 API, you'll be creating an HTTP request.

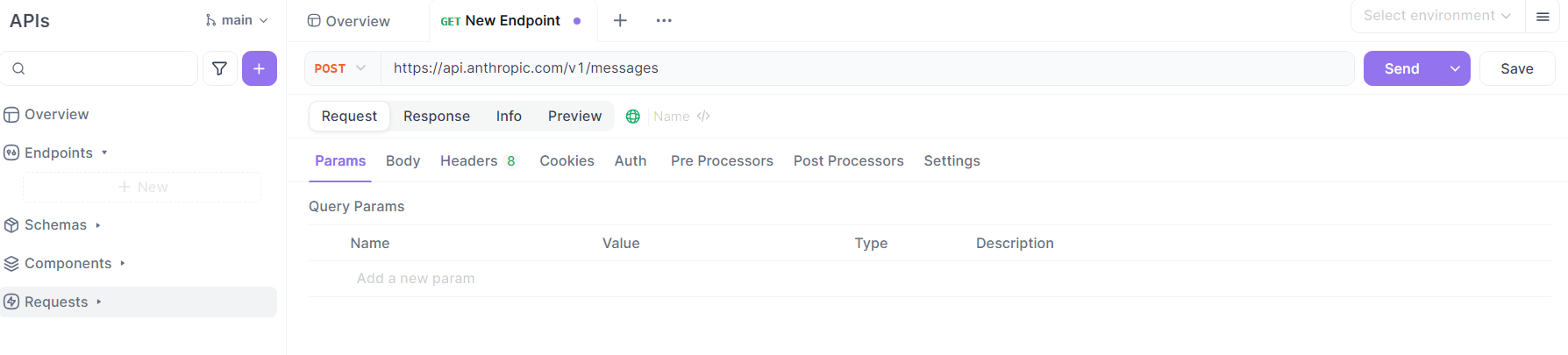

Configure the Request Details:

- Method: Select

POSTas the HTTP method, as specified in the Vercel v0 API documentation. - URL: Enter the Vercel v0 API endpoint:

https://api.v0.dev/v1/chat/completions. - Headers:

- Add an

Authorizationheader. The value should beBearer YOUR_VERCEL_V0_API_KEY, replacingYOUR_VERCEL_V0_API_KEYwith the actual API key you obtained from v0.dev. - Add a

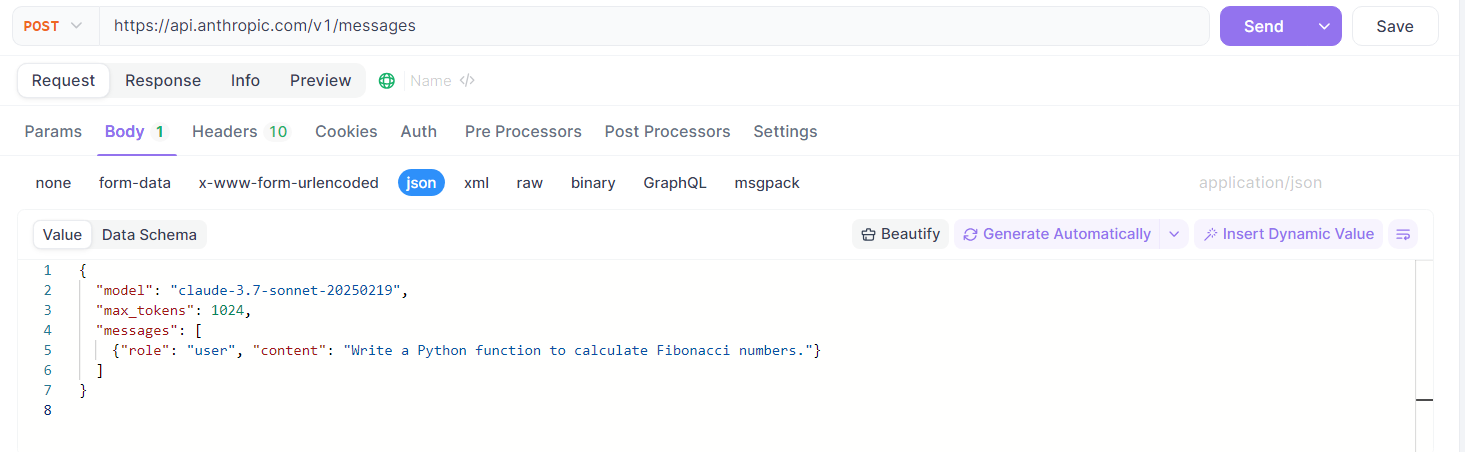

Content-Typeheader with the valueapplication/json. - Body:

Switch to the "Body" tab in APIdog and select the "raw" input type, then choose "JSON" as the format.

Construct the JSON payload according to the Vercel v0 API specifications. For example:

```json
{
  "model": "v0-1.0-md",
  "messages": [
    { "role": "user", "content": "Generate a React component for a loading spinner." }
  ],
  "stream": false
}
```

Set `stream` to `true` instead if you want a streamed response. You can customize the `messages` array with your desired conversation history and prompt, and include other optional fields such as `tools` or `tool_choice` as needed.

Send the Request:

- Once all the details are configured, click the "Send" button in APIdog.

View the Response:

- APIdog will display the server's response in the response panel.

- If `stream` was `false`, you'll see the complete JSON response.
- If `stream` was `true`, APIdog's handling of SSE might vary. It may display the chunks as they arrive or accumulate them. Refer to APIdog's documentation for specifics on handling streamed responses. You might need to use APIdog's scripting capabilities or test scenarios for more advanced stream handling.

Utilize APIdog Features (Optional):

- Environments: Store your `V0_API_KEY` and the base URL (`https://api.v0.dev`) as environment variables in APIdog for easier management across different requests or projects.
- Endpoint Saving: Save this configured request as an "Endpoint Case" or within an API definition in APIdog. This allows you to easily reuse and version your interactions with the Vercel v0 API.

- Test Scenarios: If you need to make a sequence of calls or incorporate the Vercel v0 API into a larger testing workflow, create Test Scenarios in APIdog.

- Automated Validation: If you define an API specification for the Vercel v0 API within APIdog (or import one if available), APIdog can automatically validate responses against this schema.

By following these steps, you can effectively use APIdog as a client to send requests to the Vercel v0 API, inspect responses, and manage your API interactions in a structured manner. This is particularly useful for testing prompts, exploring API features, and integrating AI-generated content or logic into applications during the development and testing phases.

Conclusion

The Vercel v0-1.0-md API represents a significant step by Vercel into the realm of AI-assisted development. Its focus on modern web frameworks, OpenAI compatibility, and features like auto-fix and multimodal input make it a compelling option for developers looking to build next-generation web applications. While currently in beta and subject to specific plan requirements, the API's design and the supporting AI SDK suggest a commitment to providing a powerful yet accessible tool. Whether used directly via its REST API, through the AI SDK, or managed with tools like APIdog, the v0-1.0-md model offers a promising avenue for integrating advanced AI capabilities into the web development workflow, streamlining tasks and unlocking new creative possibilities. As the API matures and potentially expands its offerings, it will undoubtedly be a space to watch for developers keen on leveraging AI in their projects.