Developers increasingly turn to advanced AI models like Veo 3.1 to create dynamic video content. This API, available through the Gemini API, enables precise video generation with enhanced audio and narrative features. Before proceeding, consider an API client such as Apidog, which streamlines building and testing requests.

Google introduced Veo 3.1 as an upgrade to its video generation capabilities, focusing on improved prompt adherence and audiovisual quality. This model builds on previous iterations by incorporating richer audio elements and better control over scenes. Consequently, users achieve more realistic outputs suitable for applications in filmmaking, marketing, and education.


First, understand the core enhancements. Veo 3.1 offers stronger integration of audio, including natural dialogues and synchronized sound effects. Additionally, it supports features like using reference images for consistency and extending videos seamlessly. These advancements make the API a powerful tool for technical users who require granular control.

Accessing Veo 3.1 demands a structured approach. The following sections outline the necessary steps, from setup to advanced usage, ensuring you implement the API effectively.

What Is Veo 3.1 API and Its Key Features

Veo 3.1 represents Google's latest iteration in AI-driven video generation, available through the Gemini API. Engineers at Google DeepMind developed this model to address limitations in earlier versions, such as Veo 3, by improving image-to-video generation and extending native audio, which Veo 3 introduced, to more generation modes. As a result, Veo 3.1 produces videos with superior realism, capturing textures, lighting, and movements accurately.

Key features include:

- Prompt Adherence: The model interprets text prompts more accurately, reducing discrepancies between user intent and output.

- Audiovisual Integration: It generates synchronized audio, including dialogues and effects, directly within videos.

- Narrative Control: Users specify cinematic styles, transitions, and character consistency.

- Image-Based Generation: Converts static images into dynamic videos with enhanced quality.

- Extension and Transition Tools: Extends existing clips or bridges start and end frames smoothly.

These capabilities position Veo 3.1 as a versatile API for developers building creative applications. For example, content creators use it to prototype storyboards, while enterprises apply it in automated video production pipelines.

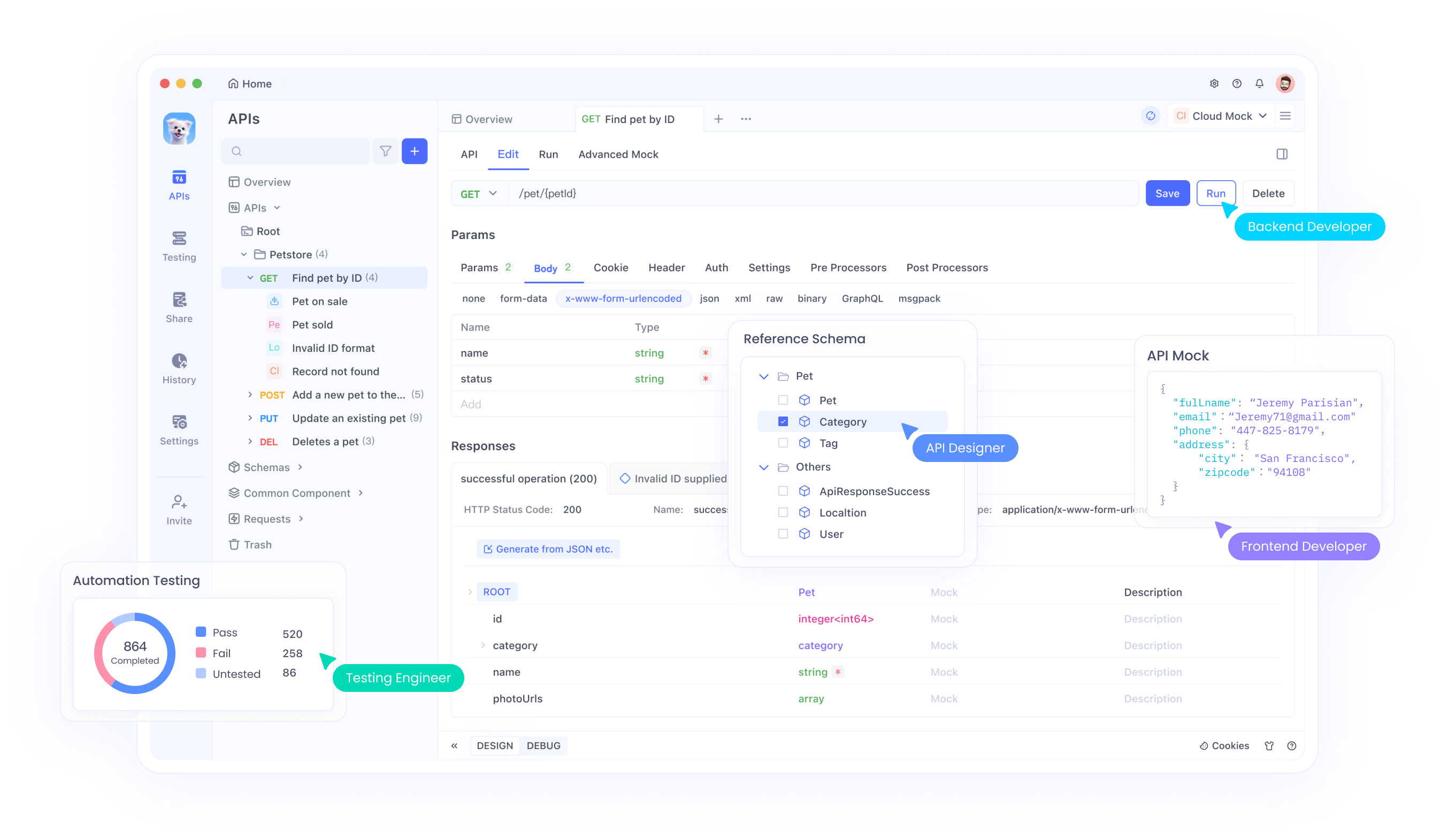

Moreover, Veo 3.1 integrates with tools like Apidog, which allows users to mock endpoints and test requests without direct API calls. This integration proves invaluable during development, as it minimizes errors and accelerates iteration.

To illustrate the output quality, consider examples of Veo 3.1-generated content.

This image highlights the model's ability to handle varied scenarios, from abstract art to photorealistic landscapes.

Transitioning to practical aspects, developers must prepare their environment before invoking the API.

Prerequisites for Using Veo 3.1 API

Before you integrate Veo 3.1, ensure your setup meets the requirements. First, obtain access to the Gemini API, as Veo 3.1 operates within this framework. Google provides this through Google AI Studio or Vertex AI for enterprise users.

Essential prerequisites include:

Google Cloud Account: Create an account if you lack one. This enables billing and API key management.

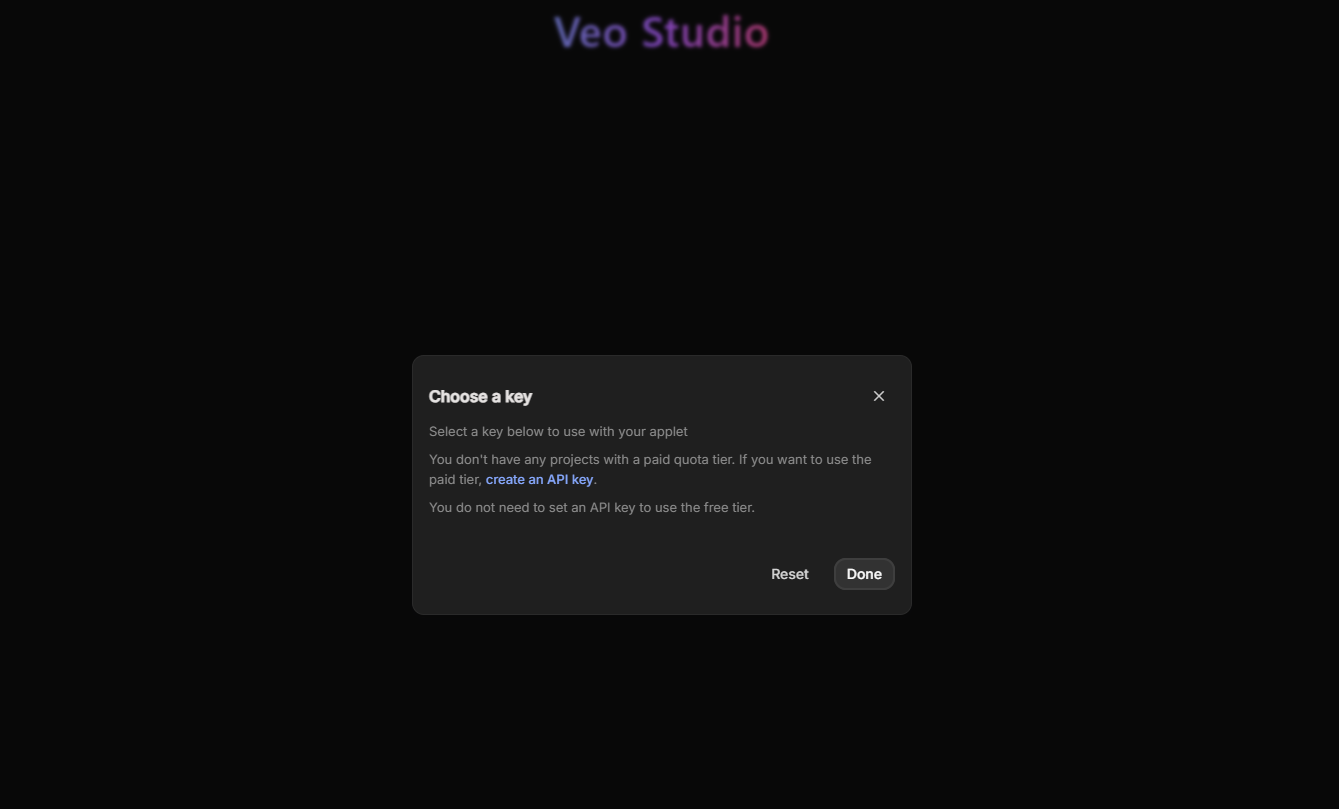

API Key: Generate an API key in a project with billing enabled, since Veo 3.1 is available only on the paid tier.

Development Environment: Install Python 3.9 or higher, along with the Google Gen AI SDK via pip: pip install google-genai. This is the current SDK that replaces the legacy google-generativeai package.

Familiarity with REST APIs: The Gemini API is a REST API, so knowledge of JSON payloads and header-based authentication helps.

Testing Tool: Download Apidog for free to handle request construction and response validation. Apidog lets you import requests (for example, as cURL commands) and save the Veo 3.1 endpoint, making it easier to experiment with parameters.

Once set up, authenticate your requests. This step prevents unauthorized access and tracks usage for billing.

Furthermore, check your network setup. Generation runs on Google's servers, so local computing power matters little, but you need a stable internet connection to upload images or videos and download the results.

With these in place, proceed to authentication.

Authentication and API Key Management for Veo 3.1

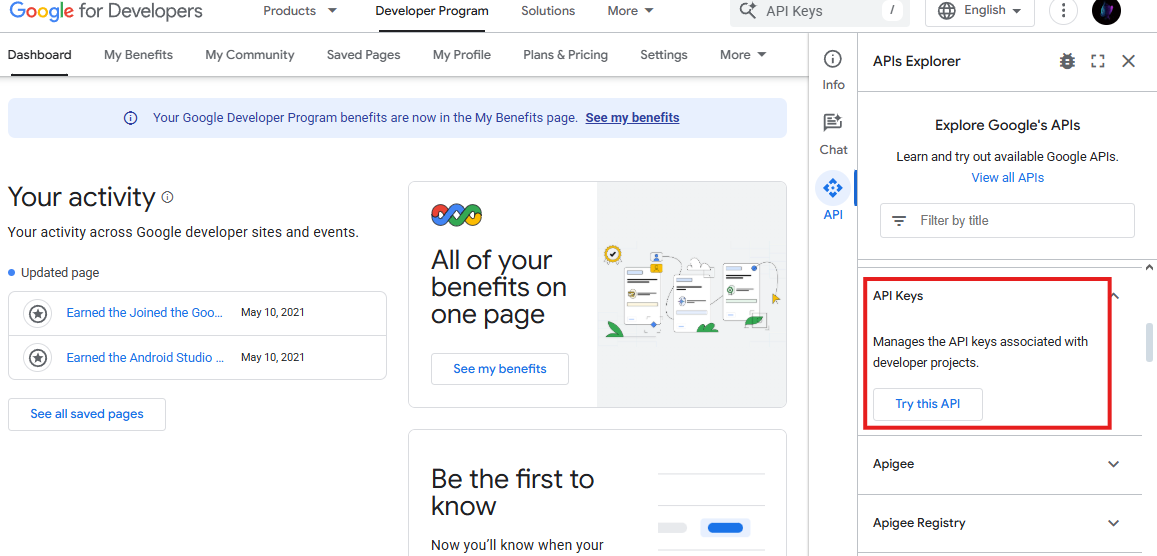

Authentication forms the backbone of secure API usage. Veo 3.1 requires a Gemini API key, which you create in Google AI Studio. The key must belong to a Google Cloud project with billing enabled, because Veo 3.1 is available only on the paid tier.

Follow these steps:

Open Google AI Studio (linked from ai.google.dev) and sign in.

Create a new API key in a Google Cloud project that has billing enabled.

Configure the key with restrictions, such as IP allowlisting, to enhance security. Store the key securely, avoiding hardcoding in scripts.

In code, initialize the client like this:

import os

from google import genai

# If api_key is omitted, the client reads GEMINI_API_KEY from the environment
client = genai.Client(api_key=os.environ["GEMINI_API_KEY"])

This setup authenticates subsequent calls. If you encounter errors, check the key's validity and quota limits.

Additionally, use Apidog to test authentication. Add the Veo 3.1 endpoint, pass your API key in the x-goog-api-key header, and send a sample request. Apidog's debugging features quickly reveal issues such as invalid credentials.
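When you call the REST endpoint directly, from a script or from Apidog, the key travels in the `x-goog-api-key` header. The sketch below loads the key from an environment variable instead of hardcoding it. The variable name `GEMINI_API_KEY` and the helper name `build_auth_headers` are choices made for this example.

```python
import os
from typing import Dict


def build_auth_headers(env_var: str = "GEMINI_API_KEY") -> Dict[str, str]:
    """Build headers for a Gemini API REST call, reading the key from the environment."""
    api_key = os.environ.get(env_var)
    if not api_key:
        # Fail early with a clear message instead of sending an unauthenticated request
        raise RuntimeError(f"Set the {env_var} environment variable to your API key")
    return {
        "x-goog-api-key": api_key,  # header the Gemini API reads the key from
        "Content-Type": "application/json",
    }
```

Keeping the key in the environment also means the same script works locally, in CI, and in Apidog environments without edits.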

Once authenticated, explore the API's capabilities in depth.

Exploring Veo 3.1 API Capabilities in Detail

Veo 3.1 excels in generating videos from prompts, images, or existing clips. Its capabilities extend beyond basic generation to include advanced editing-like functions.

For instance, the "Ingredients to Video" feature uses reference images to guide output. Provide up to three images, and the model maintains consistency in characters or styles.

Similarly, "Scene Extension" generates a new segment that continues from the final second of an existing Veo video, creating longer narratives.

"First and Last Frame" generates transitions between two images, complete with audio.

Individual clips run a few seconds (4, 6, or 8 seconds for Veo 3.1) at 720p or 1080p, and chaining Scene Extension calls builds sequences longer than a minute.


Moreover, Veo 3.1 handles audio natively, synchronizing sounds with visuals. This eliminates the need for post-production in many cases.

Transitioning to implementation, examine the endpoints.

Veo 3.1 API Endpoints and Parameters Explained

In the Python SDK, the primary entry point for Veo 3.1 is client.models.generate_videos. Under the hood it sends a POST request to the Gemini API's predictLongRunning method for the chosen model.

Key parameters:

- model: Set to "veo-3.1-generate-preview" or "veo-3.1-fast-generate-preview".

- prompt: String describing the video.

- config: A types.GenerateVideosConfig object containing optional settings such as reference_images (list of images), last_frame (image for transitions), aspect_ratio, and duration_seconds.

- video: Existing video for extensions.

- image: Starting image.

For example, a basic REST request body looks like this. The model name goes in the URL path (models/veo-3.1-generate-preview:predictLongRunning), not in the body:

{
  "instances": [
    {"prompt": "A cowboy riding through a golden field at sunset"}
  ]
}
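A minimal sketch of assembling that REST call with only the standard library follows. It assumes the `v1beta` base URL and the `:predictLongRunning` method used in the Gemini API's REST examples; the helper name `build_generate_request` is hypothetical, and the function only builds the request without sending it.

```python
import json
import urllib.request
from typing import Any, Dict, Optional

# Assumed base URL, matching the v1beta REST examples in the Gemini API docs
BASE_URL = "https://generativelanguage.googleapis.com/v1beta"


def build_generate_request(
    prompt: str,
    api_key: str,
    model: str = "veo-3.1-generate-preview",
    parameters: Optional[Dict[str, Any]] = None,
) -> urllib.request.Request:
    """Build, but do not send, a long-running Veo generation request."""
    body: Dict[str, Any] = {"instances": [{"prompt": prompt}]}
    if parameters:
        # Optional generation settings are passed through unchanged
        body["parameters"] = parameters
    return urllib.request.Request(
        url=f"{BASE_URL}/models/{model}:predictLongRunning",
        data=json.dumps(body).encode("utf-8"),
        headers={"x-goog-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )
```

Passing the result to urllib.request.urlopen sends it; the JSON response should include a name field identifying the operation to poll. Separating construction from sending makes the payload easy to inspect or compare with what Apidog sends.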

Apidog facilitates parameter testing by allowing you to build and modify payloads visually.

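Furthermore, control clip length with the duration_seconds setting in the config rather than through the prompt. Veo 3.1 generates clips of 4, 6, or 8 seconds, and a default applies if you omit the setting.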

Handle responses asynchronously, as generation can take minutes: the call returns a long-running operation, which you poll by its name until it reports completion.
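The polling loop can be sketched independently of any HTTP library. Here `get_status` is a caller-supplied function, for example one that sends a GET request to https://generativelanguage.googleapis.com/v1beta/<operation name> with the API key header. The status fields (`done`, `error`, and `response.generateVideoResponse.generatedSamples[].video.uri`) follow the shape shown in the Gemini API's REST examples; treat that shape, and the helper names, as assumptions to verify.

```python
import time
from typing import Any, Callable, Dict


def wait_for_operation(
    get_status: Callable[[], Dict[str, Any]],
    interval_s: float = 10.0,
    timeout_s: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict[str, Any]:
    """Poll a long-running operation until it reports done or the timeout expires."""
    waited = 0.0
    while True:
        status = get_status()
        if status.get("done"):
            if "error" in status:
                # A finished operation can carry an error instead of a response
                raise RuntimeError(f"Generation failed: {status['error']}")
            return status
        if waited >= timeout_s:
            raise TimeoutError(f"Operation not done after {timeout_s} seconds")
        sleep(interval_s)
        waited += interval_s


def extract_video_uri(status: Dict[str, Any]) -> str:
    """Pull the first video URI out of a finished operation (assumed REST shape)."""
    samples = status["response"]["generateVideoResponse"]["generatedSamples"]
    return samples[0]["video"]["uri"]
```

Injecting `sleep` keeps the loop testable without real delays; in production the default `time.sleep` applies.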

With endpoints understood, apply them in code.

Code Examples for Basic Veo 3.1 API Usage

Developers implement Veo 3.1 primarily in Python. Start with a simple generation:

import os
import time

from google import genai

client = genai.Client(api_key=os.environ["GEMINI_API_KEY"])

prompt = "A futuristic cityscape with flying cars and neon lights"

# Start a long-running generation job
operation = client.models.generate_videos(
    model="veo-3.1-generate-preview",
    prompt=prompt,
)

# Poll until the job finishes
while not operation.done:
    time.sleep(10)
    operation = client.operations.get(operation)

# Download the first generated video and save it locally
generated_video = operation.response.generated_videos[0]
client.files.download(file=generated_video.video)
generated_video.video.save("cityscape.mp4")

This code starts a generation job, polls until it finishes, and saves the result as cityscape.mp4.

In production code, wrap these calls in try-except blocks to handle API errors.

Use Apidog to replicate this request in a GUI, exporting curl commands for scripting.

Expand to advanced examples next.

Advanced Usage: Reference Images with Veo 3.1 API

Reference images enhance consistency. Supply them in the config:

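from google.genai import types

config = types.GenerateVideosConfig(
    # Up to three types.Image objects, each wrapped as an "asset" reference
    reference_images=[
        types.VideoGenerationReferenceImage(image=image1, reference_type="asset"),
        types.VideoGenerationReferenceImage(image=image2, reference_type="asset"),
    ],
)

operation = client.models.generate_videos(model="veo-3.1-generate-preview", prompt=prompt, config=config)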

This maintains character appearances across scenes.

For instance, in storytelling apps, reference a protagonist's image to ensure uniformity.

Test variations in Apidog by uploading different images and observing outputs.

Additionally, combine with prompts for stylistic control, like "in the style of Pixar."
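Before wrapping reference images in SDK objects, you need their raw bytes and MIME type. The sketch below reads local files and enforces the three-image limit described above. Restricting input to PNG and JPEG is an assumption made for this example, and `load_reference_images` is a hypothetical helper; each returned dict can be unpacked into `types.Image(**payload)`, whose `image_bytes` and `mime_type` fields it mirrors.

```python
import mimetypes
from pathlib import Path
from typing import Dict, List, Union

MAX_REFERENCE_IMAGES = 3  # the limit stated for "Ingredients to Video"
ALLOWED_TYPES = ("image/png", "image/jpeg")  # assumption: accept only common formats


def load_reference_images(paths: List[Union[str, Path]]) -> List[Dict[str, object]]:
    """Read image files into bytes plus MIME type, enforcing the three-image limit."""
    if not 1 <= len(paths) <= MAX_REFERENCE_IMAGES:
        raise ValueError(
            f"Expected 1 to {MAX_REFERENCE_IMAGES} reference images, got {len(paths)}"
        )
    payloads = []
    for path in map(Path, paths):
        # Infer the MIME type from the file extension
        mime_type, _ = mimetypes.guess_type(path.name)
        if mime_type not in ALLOWED_TYPES:
            raise ValueError(f"Unsupported image type for {path.name}: {mime_type}")
        payloads.append({"image_bytes": path.read_bytes(), "mime_type": mime_type})
    return payloads
```

Validating locally catches a fourth image or an unsupported file before a billed request is sent.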

Implementing Scene Extension in Veo 3.1 API

Extend videos to build longer content:

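# existing_video: a video previously generated by Veo, e.g. operation.response.generated_videos[0].video
operation = client.models.generate_videos(
    model="veo-3.1-generate-preview",
    prompt=prompt,
    video=existing_video,
)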

The model continues from the last second, preserving style and audio.

This feature suits applications like video editing tools, where users append segments iteratively.

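Each extension counts as a separate generation, and the number of extensions per video is capped, so check the current limits in the Gemini API documentation and monitor your quota.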
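To plan a long sequence, it helps to compute how many extension calls a target length requires. The defaults below (an 8-second base clip, about 7 seconds added per extension, and a cap of 20 extensions) are assumptions based on Google's Veo documentation at the time of writing; they are parameters precisely so you can update them.

```python
import math


def extensions_needed(
    target_seconds: float,
    base_clip_seconds: float = 8.0,      # assumed length of the first clip
    seconds_per_extension: float = 7.0,  # assumed length added per extension
    max_extensions: int = 20,            # assumed cap; verify against current docs
) -> int:
    """Return how many Scene Extension calls are needed to reach target_seconds."""
    if target_seconds <= base_clip_seconds:
        return 0
    # Round up: a partial extension still requires a full call
    count = math.ceil((target_seconds - base_clip_seconds) / seconds_per_extension)
    if count > max_extensions:
        longest = base_clip_seconds + max_extensions * seconds_per_extension
        raise ValueError(f"{target_seconds}s exceeds the assumed maximum of {longest}s")
    return count
```

Under these assumptions, a one-minute sequence needs 8 extensions, which also tells you how many billed generations to budget for.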

Creating Transitions with First and Last Frame in Veo 3.1

Bridge frames smoothly:

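config = types.GenerateVideosConfig(
    last_frame=last_image,  # types.Image for the closing frame
)

operation = client.models.generate_videos(
    model="veo-3.1-generate-preview",
    prompt=prompt,
    image=first_image,  # types.Image for the opening frame
    config=config,
)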

This generates interpolations with audio.

Use cases include animations or tutorials requiring seamless shifts.


Integrating Audio Features in Veo 3.1 API

Veo 3.1 generates audio by default. Specify in prompts: "Include dialogue between characters."

The model synchronizes sounds, enhancing immersion.
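Because audio is steered through the prompt text, a small builder keeps dialogue and sound directions consistent across requests. The layout below (quoted lines attributed to a speaker, followed by "Sound effects:" and "Ambient noise:" clauses) is a convention chosen for this sketch, not a syntax the API requires; `build_audio_prompt` is a hypothetical helper.

```python
from typing import Iterable, Optional, Tuple


def build_audio_prompt(
    scene: str,
    dialogue: Iterable[Tuple[str, str]] = (),
    sound_effects: Iterable[str] = (),
    ambience: Optional[str] = None,
) -> str:
    """Compose one text prompt that spells out the visuals, spoken lines, and sounds."""
    parts = [scene.strip()]
    for speaker, line in dialogue:
        # Quoting each line makes it clear which words should be spoken
        parts.append(f'{speaker} says, "{line}"')
    effects = list(sound_effects)
    if effects:
        parts.append("Sound effects: " + ", ".join(effects) + ".")
    if ambience:
        parts.append(f"Ambient noise: {ambience}.")
    return " ".join(parts)
```

For example, build_audio_prompt("A rainy alley at night.", [("The detective", "We're too late.")], ["thunder"]) yields a single prompt string that can be passed as the prompt argument.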

For custom audio, post-process outputs, but native support reduces this need.

Test audio quality in Apidog by downloading generated videos.

Best Practices for Optimizing Veo 3.1 API Calls

Optimize to minimize costs and improve efficiency:

- Craft precise prompts to reduce iterations.

- Use the Fast variant for quicker generations.

- Batch requests where possible.

- Monitor usage via Google Cloud Console.

- Leverage Apidog for mocking to test without charges.

Additionally, handle rate limits by implementing exponential backoff.
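A generic retry helper with exponential backoff might look like the sketch below. It adds full jitter (a random delay up to the current ceiling), a common variant that spreads out retries from many clients; the helper name and defaults are choices for this example. Pass only the exception types you consider retryable, such as a custom exception you raise for HTTP 429 responses.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_backoff(
    fn: Callable[[], T],
    retryable: Tuple[Type[BaseException], ...],
    max_attempts: int = 5,
    base_delay_s: float = 1.0,
    max_delay_s: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying retryable errors with exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except retryable:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Delay ceiling doubles each attempt (1s, 2s, 4s, ...) up to max_delay_s
            ceiling = min(max_delay_s, base_delay_s * 2 ** attempt)
            sleep(random.uniform(0, ceiling))
    raise AssertionError("unreachable")
```

Errors outside `retryable`, such as an invalid request, propagate immediately, since retrying them would only waste quota.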

Follow ethical guidelines, avoiding harmful content.

Veo 3.1 API Pricing and Cost Management

Veo 3.1 operates on a pay-per-use model. Pricing details:

| Model Variant | Price per Second (USD) |
|---|---|
| Veo 3.1 Standard (with audio) | $0.40 |
| Veo 3.1 Fast (with audio) | $0.15 |

No free tier exists; all usage requires the paid tier. Costs accrue based on generated video length.

Estimate expenses by multiplying output length by the rate: 10 seconds of video on Fast costs 10 × $0.15 = $1.50.
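The same arithmetic as a small estimator, using the per-second prices from the pricing table above. Mapping the table rows to the model IDs `veo-3.1-generate-preview` and `veo-3.1-fast-generate-preview` is an assumption, and the prices should be updated if Google changes them. `Decimal` avoids floating-point rounding in currency math.

```python
from decimal import Decimal

# Per-second prices (USD) from the pricing table; update if pricing changes
PRICE_PER_SECOND = {
    "veo-3.1-generate-preview": Decimal("0.40"),
    "veo-3.1-fast-generate-preview": Decimal("0.15"),
}


def estimate_cost(model: str, seconds: int, clips: int = 1) -> Decimal:
    """Estimate the charge for generating `clips` videos of `seconds` each."""
    if model not in PRICE_PER_SECOND:
        raise KeyError(f"No price on file for {model}")
    return PRICE_PER_SECOND[model] * seconds * clips
```

For example, estimate_cost("veo-3.1-fast-generate-preview", 10) returns Decimal("1.50"), matching the hand calculation.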

Track billing in the dashboard and set alerts.

Troubleshooting Common Issues with Veo 3.1 API

Common errors include invalid API keys and exceeded quotas. Resolve them by verifying your credentials and checking quota usage in the Google Cloud Console.
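When calling the REST API with urllib, a failed request raises urllib.error.HTTPError, whose code attribute holds the status. The lookup below maps common Google API statuses (400 for invalid arguments, 403 for permission problems, 429 for exhausted quota) to a first thing to check. The hint wording is this sketch's own, and `explain_status` is a hypothetical helper; the response body usually carries a more specific message.

```python
from typing import Optional

# Common HTTP statuses returned by Google APIs, with a first thing to check
ERROR_HINTS = {
    400: "Invalid request: check the JSON body, parameter names, and the API key value.",
    403: "Permission denied: confirm the key is valid, allowed for this API, and billed.",
    404: "Not found: check the model name and the endpoint path.",
    429: "Quota or rate limit exceeded: retry with backoff or review quota in the console.",
    500: "Server error: retry after a short wait.",
    503: "Service unavailable: retry after a short wait.",
}


def explain_status(status_code: int) -> Optional[str]:
    """Return a troubleshooting hint for an HTTP status, or None for success codes."""
    if 200 <= status_code < 300:
        return None
    return ERROR_HINTS.get(
        status_code,
        f"Unexpected status {status_code}: inspect the error message in the response body.",
    )
```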

If outputs mismatch prompts, refine descriptions.

For network issues, ensure stable connections.

Apidog aids troubleshooting by logging requests.

Conclusion: Mastering Veo 3.1 API for Innovative Applications

Veo 3.1 API empowers developers to create sophisticated videos efficiently. By following this guide, you integrate its features seamlessly. Remember, tools like Apidog enhance productivity—download it free today to elevate your Veo 3.1 workflows.