Large language models (LLMs) continue to push the boundaries of what machines can achieve. Among these innovations stands the Qwen3 series, developed by Alibaba Cloud’s Qwen team. Specifically, the Qwen3-30B-A3B and Qwen3-235B-A22B models offer impressive capabilities as Mixture-of-Experts (MoE) architectures. The Qwen3-30B-A3B features 30 billion total parameters with 3 billion activated, while the Qwen3-235B-A22B scales up to 235 billion total parameters with 22 billion activated. These models excel in reasoning, multilingual support, and instruction-following, making them valuable tools for developers and researchers alike.

You can access both Qwen3 models for free through the OpenAI-compatible API that OpenRouter provides.

Introduction to Qwen3 and Its Models

The Qwen3 series represents a leap forward in LLM design, blending dense and MoE approaches. Unlike traditional dense models that activate all parameters for every task, MoE models like Qwen3-30B-A3B and Qwen3-235B-A22B activate only a subset, improving computational efficiency without sacrificing performance. This efficiency stems from their expert-based architecture: a routing layer sends each token to a small number of specialized "expert" sub-networks, so only those experts' parameters do work for that token.
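To make the routing idea concrete, here is a toy sketch of generic top-k expert gating. It is not Qwen3's actual router: the four "experts" are hypothetical one-line functions, and the scores and `k=2` are made-up illustration values.

```python
import math


def softmax(scores):
    """Turn raw router scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, router_scores, k=2):
    """Run only the selected experts and mix their outputs by weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_scores, k))


# Hypothetical experts and router scores, for illustration only.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x ** 2]
scores = [0.1, 2.0, -1.0, 1.5]

chosen = route_top_k(scores, k=2)
print("experts used:", [i for i, _ in chosen])
print("output:", moe_forward(3.0, experts, scores, k=2))
```

Only two of the four experts run for this input, which is the source of the compute savings described above.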

The Qwen3-30B-A3B, with 30 billion total parameters and 3 billion active per token, suits smaller-scale applications or resource-constrained environments.

Conversely, the Qwen3-235B-A22B, with 235 billion parameters and 22 billion active, targets more demanding tasks requiring deeper reasoning or broader language coverage. Both models support over 100 languages and offer features like thinking mode for complex problem-solving, making them versatile for global use cases.
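As a quick piece of arithmetic on the figures above, the following sketch computes what share of each model's parameters is active at a time. The parameter counts are the rounded numbers quoted in this article, so the results are approximate.

```python
# Rounded (total, active) parameter counts as quoted in this article.
MODELS = {
    "Qwen3-30B-A3B": (30e9, 3e9),
    "Qwen3-235B-A22B": (235e9, 22e9),
}


def active_fraction(total, active):
    """Share of parameters that are active, as a value between 0 and 1."""
    return active / total


for name, (total, active) in MODELS.items():
    print(f"{name}: {active_fraction(total, active):.1%} of parameters active")
```

Both models activate roughly a tenth of their parameters, which is why the larger model's per-token compute is far below what its total size suggests.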

Now, let’s dive into accessing these models’ APIs for free and testing them with Apidog.

Accessing Qwen3 APIs via OpenRouter

To use the Qwen3-30B-A3B and Qwen3-235B-A22B APIs without spending a dime, OpenRouter provides a convenient solution. OpenRouter hosts these models and offers an OpenAI-compatible API, simplifying integration with existing tools and libraries. Here’s how you get started.

First, sign up for an OpenRouter account at their website. After logging in, navigate to the API section to generate an API key.

This key authenticates your requests, so keep it secure. OpenRouter’s free tier includes access to Qwen3-30B-A3B and Qwen3-235B-A22B. However, expect limitations like rate caps or lower priority, which may slow responses during high traffic.
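Because the free tier may be rate limited, client code usually benefits from retrying with a growing delay. The sketch below shows one common pattern, exponential backoff. `RateLimited` and `send` are hypothetical names introduced here: `send` stands for whatever function performs your request and raises `RateLimited` when the server answers with HTTP 429.

```python
import time


class RateLimited(Exception):
    """Hypothetical marker for an HTTP 429 (too many requests) reply."""


def call_with_backoff(send, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call send() until it succeeds, doubling the wait after each rate-limit hit."""
    for attempt in range(max_attempts):
        try:
            return send()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller handle it
            sleep(base_delay * 2 ** attempt)  # waits 1s, 2s, 4s, ...
```

The `sleep` parameter is injectable so the retry logic can be exercised in tests without actually waiting.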

The API endpoint you’ll use is https://openrouter.ai/api/v1/chat/completions. This endpoint accepts POST requests in the OpenAI format, requiring a JSON body with the model name and message details. For instance, specify "qwen/qwen3-30b-a3b:free" or "qwen/qwen3-235b-a22b:free" as the model. With OpenRouter set up, you’re ready to test the API using Apidog.

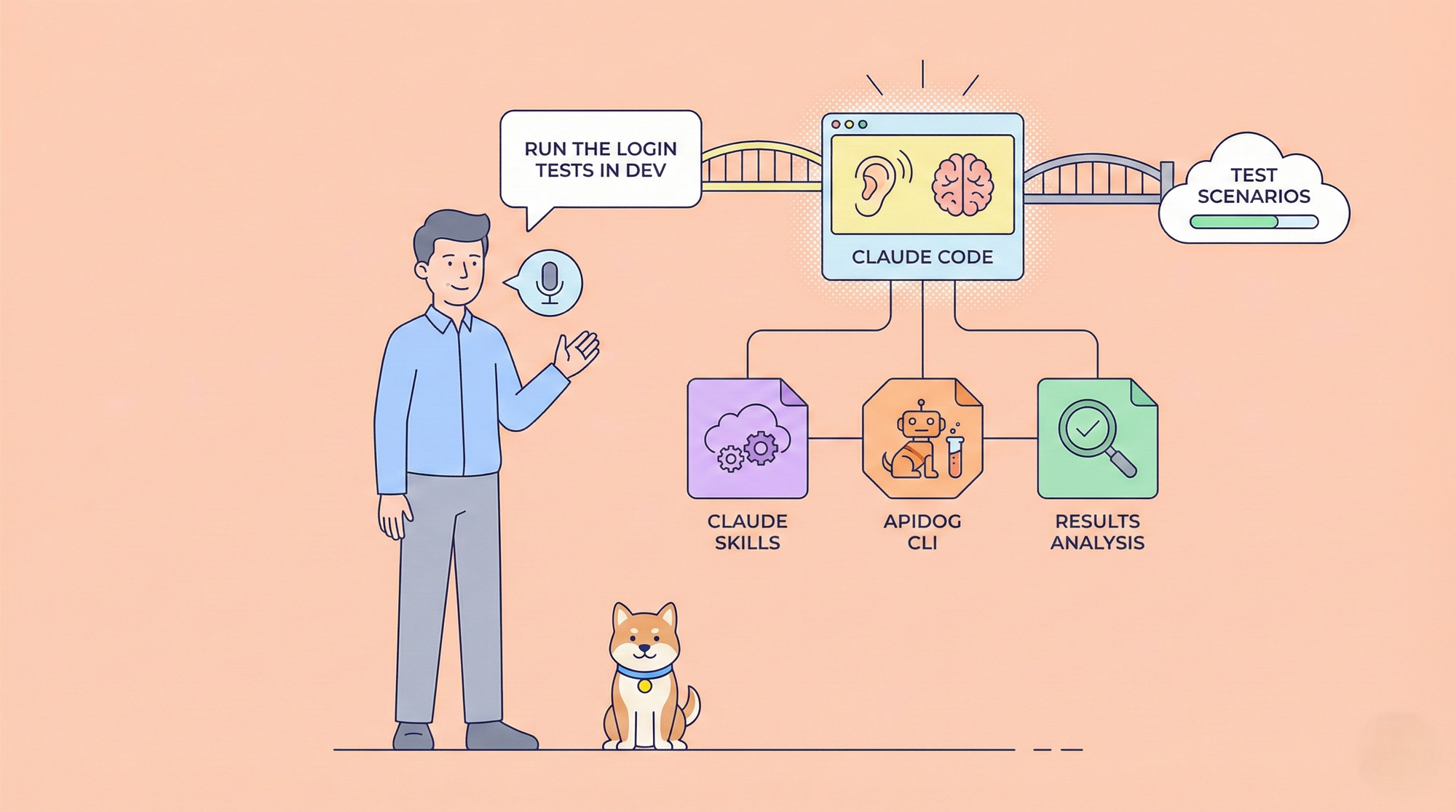
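If you prefer to see the request as code before moving to a GUI tool, here is a minimal sketch using only Python's standard library. The endpoint, header, and model names come from the description above; the helper names `build_request` and `send_request` are my own, and the sketch assumes your key is passed in by the caller.

```python
import json
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(api_key, model, prompt):
    """Assemble an OpenAI-style chat completion POST request without sending it."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        OPENROUTER_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_request(req):
    """Perform the call and decode the JSON reply (needs network and a valid key)."""
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

A call would look like `send_request(build_request(key, "qwen/qwen3-30b-a3b:free", "Hello, how are you?"))`, where `key` holds your OpenRouter API key.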

Setting Up Apidog for API Testing

Apidog, an API testing platform, simplifies interacting with the Qwen3 APIs. Its interface lets you send requests, view responses, and debug issues in one place. Follow these steps to configure it.

Start by downloading Apidog and installing it on your system. Launch the application and create a new project named “Qwen3 API Testing.”

Inside this project, add a new request. Set the method to POST and input the OpenRouter endpoint: https://openrouter.ai/api/v1/chat/completions.

Next, configure the headers. Add an “Authorization” header with the value Bearer YOUR_API_KEY, replacing YOUR_API_KEY with the key from OpenRouter. This authenticates your request. Then, switch to the body tab, select JSON format, and craft your request payload. Here’s an example for Qwen3-30B-A3B:

    {
      "model": "qwen/qwen3-30b-a3b:free",
      "messages": [
        {"role": "user", "content": "Hello, how are you?"}
      ]
    }

Click “Send” in Apidog to execute the request. The response pane will display the model’s output, typically the generated text plus metadata such as token usage. Apidog also lets you save requests and organize them into collections, which helps when you test several prompts. With this setup, you can now explore the Qwen3 models’ capabilities.
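When you later handle the same response in code, the generated text and token usage can be pulled out as sketched below. This assumes the reply follows the OpenAI chat completion layout (`choices[0].message.content` and a `usage` object). The `sample` dictionary is hand-written for illustration, not real model output.

```python
def extract_reply(response):
    """Return (generated_text, total_tokens) from an OpenAI-style chat response."""
    message = response["choices"][0]["message"]
    usage = response.get("usage", {})  # usage metadata may be absent
    return message["content"], usage.get("total_tokens")


# Hand-written example in the OpenAI response shape; values are made up.
sample = {
    "choices": [
        {"message": {"role": "assistant", "content": "I'm doing well, thanks!"}}
    ],
    "usage": {"prompt_tokens": 14, "completion_tokens": 8, "total_tokens": 22},
}

text, tokens = extract_reply(sample)
print(text, tokens)
```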

Sending Requests to Qwen3 Models

Sending requests to the Qwen3-30B-A3B and Qwen3-235B-A22B models via OpenRouter is straightforward with Apidog. Let’s break down the process and highlight key features.

Each request requires two main components: the model field and the messages array. Set model to "qwen/qwen3-30b-a3b:free" or "qwen/qwen3-235b-a22b:free" based on your choice. The messages array holds the conversation, with each entry containing a role (e.g., "user" or "assistant") and content (the text). For a basic query, use:

    {
      "model": "qwen/qwen3-235b-a22b:free",
      "messages": [
        {"role": "user", "content": "What’s the capital of Brazil?"}
      ]
    }
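The messages array can also carry a multi-turn conversation. The sketch below builds one turn by turn. `add_turn` is a hypothetical helper, and the assistant reply is a hand-written placeholder standing in for what the model returned on the previous call.

```python
ALLOWED_ROLES = {"system", "user", "assistant"}


def add_turn(messages, role, content):
    """Return a new message list with one more turn appended."""
    if role not in ALLOWED_ROLES:
        raise ValueError(f"unknown role: {role!r}")
    return messages + [{"role": role, "content": content}]


history = []
history = add_turn(history, "user", "What's the capital of Brazil?")
history = add_turn(history, "assistant", "Brasília.")  # placeholder reply
history = add_turn(history, "user", "And roughly how many people live there?")

payload = {"model": "qwen/qwen3-235b-a22b:free", "messages": history}
```

Sending the full history with each request is what lets the model resolve references such as "there" in the follow-up question.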

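The Qwen3 models also support a thinking mode. Enabled by default, it makes the model write out its reasoning in a <think>...</think> block before the final answer, which helps on complex tasks. Depending on how the provider surfaces it, that reasoning may appear inline in the message content or in a separate field of the response. To skip it for simpler queries, append /no_think to your prompt. For example: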

    {
      "model": "qwen/qwen3-30b-a3b:free",
      "messages": [
        {"role": "user", "content": "Tell me a fun fact. /no_think"}
      ]
    }

This returns a direct response without reasoning. Test both modes in Apidog to see how response times and outputs vary, especially on the free tier where delays might occur.
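When you process replies in code, you may want to separate the reasoning from the answer. The sketch below assumes the reasoning arrives inline in the content as a single <think>...</think> block. If no such block is present, it returns the content unchanged. Both helper names are hypothetical.

```python
import re

THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_thinking(content):
    """Split a reply into (reasoning, answer); reasoning is '' if absent."""
    match = THINK_RE.search(content)
    if match is None:
        return "", content.strip()
    reasoning = match.group(1).strip()
    answer = (content[:match.start()] + content[match.end():]).strip()
    return reasoning, answer


def without_thinking(prompt):
    """Append the /no_think switch unless the prompt already ends with it."""
    trimmed = prompt.rstrip()
    return trimmed if trimmed.endswith("/no_think") else f"{trimmed} /no_think"
```

For example, `without_thinking("Tell me a fun fact.")` produces the same prompt text used in the request above.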

Examples and Use Cases

To showcase the Qwen3-30B-A3B and Qwen3-235B-A22B models’ potential, let’s explore practical examples using Apidog. These scenarios highlight their strengths in reasoning, multilingual tasks, and code generation.

Example 1: Solving a Math Problem

For a reasoning task, ask the Qwen3-235B-A22B to solve a quadratic equation:

    {
      "model": "qwen/qwen3-235b-a22b:free",
      "messages": [
        {"role": "user", "content": "Solve x^2 - 5x + 6 = 0"}
      ]
    }

The response might include:

    <think>
    Using the quadratic formula x = [-b ± sqrt(b^2 - 4ac)] / (2a), where a = 1, b = -5, c = 6: x = [5 ± sqrt(25 - 24)] / 2 = [5 ± 1] / 2. Thus, x = 3 or x = 2.
    </think>

    Solutions: x = 3, x = 2.

This demonstrates the model’s ability to reason step-by-step.
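Model output is worth verifying when the answer matters. As a small independent check of the result above, this sketch applies the quadratic formula directly. The function name is my own.

```python
import math


def solve_quadratic(a, b, c):
    """Return the real roots of ax^2 + bx + c = 0, or () if there are none."""
    disc = b * b - 4 * a * c
    if disc < 0:
        return ()
    root = math.sqrt(disc)
    return ((-b + root) / (2 * a), (-b - root) / (2 * a))


roots = solve_quadratic(1, -5, 6)
print(roots)  # (3.0, 2.0)
```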

Example 2: Multilingual Translation

Test the Qwen3 models’ language skills by translating text. For Qwen3-30B-A3B:

    {
      "model": "qwen/qwen3-30b-a3b:free",
      "messages": [
        {"role": "user", "content": "Translate 'Good morning' to Spanish. /no_think"}
      ]
    }

Expect a quick reply: "Buenos días". The /no_think switch skips the reasoning block, which keeps simple tasks fast.

Example 3: Code Generation

Ask the Qwen3-235B-A22B to write a Python function:

    {
      "model": "qwen/qwen3-235b-a22b:free",
      "messages": [
        {"role": "user", "content": "Write a Python function to find the maximum of three numbers."}
      ]
    }

The response could be:

    <think>
    I’ll use simple comparisons to find the maximum efficiently.
    </think>

    def find_max(a, b, c):
        if a >= b and a >= c:
            return a
        elif b >= a and b >= c:
            return b
        else:
            return c
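Generated code should be tested before you rely on it. A minimal sketch of such a check compares the sample function above with Python's built-in `max` over every combination of a few small integers, ties included.

```python
from itertools import product


def find_max(a, b, c):
    """Sample function from the response above, copied for testing."""
    if a >= b and a >= c:
        return a
    elif b >= a and b >= c:
        return b
    else:
        return c


# Exhaustively compare against the built-in over a small range.
for a, b, c in product(range(-2, 3), repeat=3):
    assert find_max(a, b, c) == max(a, b, c)

print("find_max agrees with max() on all tested inputs")
```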

These examples illustrate how the Qwen3 models adapt to diverse tasks, which makes them a good fit for applications like chatbots, educational tools, or coding assistants.

Conclusion and Next Steps

Using the Qwen3-30B-A3B and Qwen3-235B-A22B APIs for free via OpenRouter, paired with Apidog, opens up a world of possibilities. From solving equations to generating code, these models deliver powerful performance at no cost. Apidog enhances this experience by providing a seamless way to test and refine your API interactions.

As you experiment, tweak prompts, toggle thinking mode, and monitor responses to tune the setup for your use case. Sign up for OpenRouter, grab your API key, and start sending requests today. Download Apidog for free to elevate your testing game. With Qwen3 and Apidog, you’re set to start building.