AI development is evolving fast, and integrating external tools with language models is a critical step forward. OpenRouter provides a unified API to access numerous language models, while MCP Servers (Model Context Protocol Servers) expose external tools and live data that those models can call. Combining them creates a powerful system for building advanced AI applications.

In this post, I’ll guide you through integrating MCP Servers with OpenRouter. You’ll learn their core functionalities, the integration process, and practical examples.

Understanding MCP Servers and OpenRouter

To integrate MCP Servers with OpenRouter, you first need to grasp what each component does.

OpenRouter: Unified Access to Language Models

OpenRouter is a platform that simplifies interaction with large language models (LLMs) from providers like OpenAI, Anthropic, and xAI. It offers a single API endpoint, https://openrouter.ai/api/v1/chat/completions, that is compatible with OpenAI’s Chat Completions API. Key features include:

- Model Aggregation: Access hundreds of LLMs through one interface.

- Cost Optimization: Routes each request to a cost-effective provider for the chosen model, based on availability and pricing.

- Load Balancing: Distributes requests to prevent overload on any single provider.

- Fallbacks: Switches to alternative models if one fails.
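Fallbacks are configured per request. As a minimal sketch based on OpenRouter’s documented `models` parameter (the model slugs below are examples, so check openrouter.ai/models for current IDs, and `build_payload_with_fallbacks` is a hypothetical helper), a payload that lists a primary model followed by fallbacks looks like this:

```python
import json
from typing import Any, Dict, List


def build_payload_with_fallbacks(prompt: str, models: List[str]) -> Dict[str, Any]:
    """Build a chat payload whose `models` list is tried in order."""
    return {
        "models": models,  # primary model first, then fallbacks
        "messages": [{"role": "user", "content": prompt}],
    }


payload = build_payload_with_fallbacks(
    "Summarize the Model Context Protocol in one sentence.",
    ["openai/gpt-4o", "anthropic/claude-3.5-sonnet"],  # example slugs
)
print(json.dumps(payload, indent=2))
```

If the first model’s providers are down or rate-limited, OpenRouter moves on to the next entry, so the rest of your application code stays the same.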

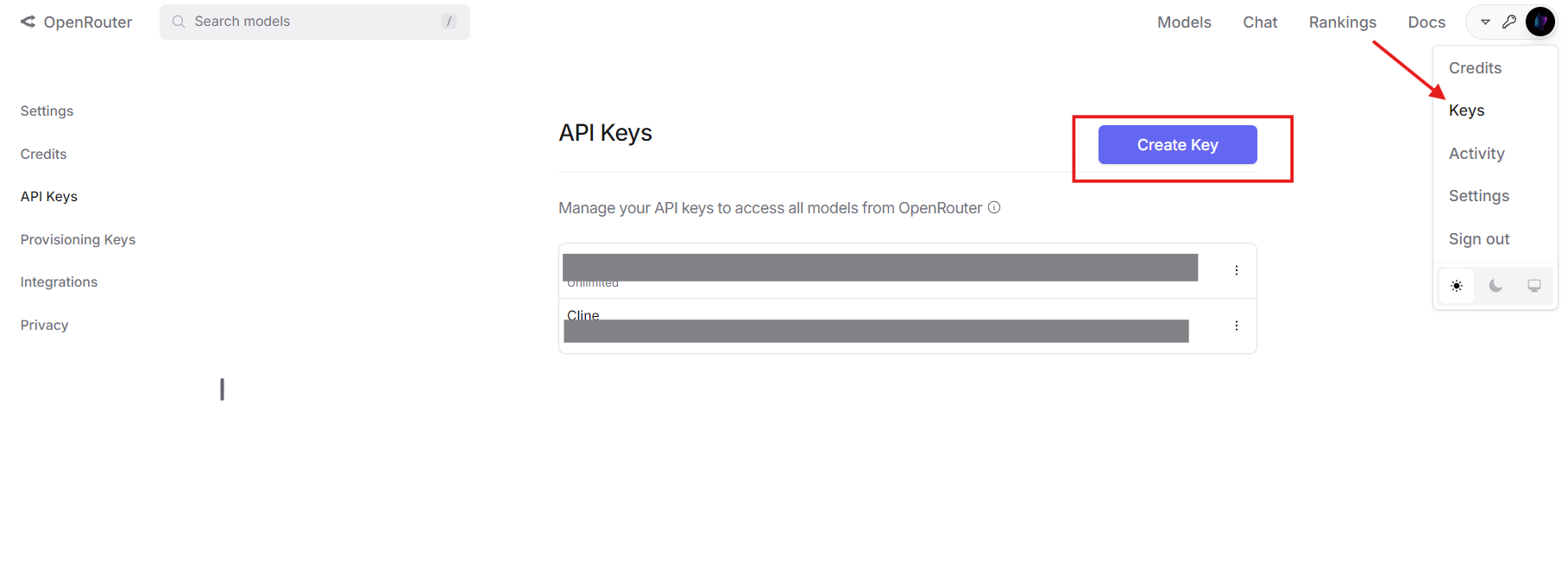

You’ll need an OpenRouter account and an API key to proceed. Get yours at openrouter.ai.

MCP Servers: Extending Model Capabilities

MCP Servers implement the Model Context Protocol, enabling LLMs to call external tools. Unlike standalone models limited to their training data, MCP Servers allow real-time interaction with systems like file directories, databases, or third-party APIs. A typical MCP tool definition includes:

- Name: Identifies the tool (e.g., `list_files`).

- Description: Explains its purpose.

- Parameters: Specifies inputs in JSON Schema format (spec-compliant MCP servers send this field as `inputSchema`).

For example, an MCP tool to list directory files might look like this:

```json
{
  "name": "list_files",
  "description": "Lists files in a specified directory",
  "parameters": {
    "type": "object",
    "properties": {
      "path": {"type": "string", "description": "Directory path"}
    },
    "required": ["path"]
  }
}
```
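A definition like this only describes the tool’s interface; the MCP server still has to run real code when the tool is called. The sketch below shows what a hypothetical Python handler for `list_files` and a small name-to-function dispatch table might look like (`TOOLS` and `call_tool` are illustrative names, not part of MCP):

```python
import os
import tempfile
from typing import Any, Dict, List


def list_files(path: str) -> List[str]:
    """Hypothetical handler for `list_files`: regular files in `path`, sorted."""
    with os.scandir(path) as entries:
        return sorted(entry.name for entry in entries if entry.is_file())


# Maps each advertised tool name to the function that implements it.
TOOLS = {"list_files": list_files}


def call_tool(name: str, arguments: Dict[str, Any]) -> Any:
    """Dispatch a tool call by name, passing the model's arguments as keywords."""
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**arguments)


# Usage: a scratch directory with two files and one subdirectory.
with tempfile.TemporaryDirectory() as tmp:
    for filename in ("file1.txt", "file2.txt"):
        open(os.path.join(tmp, filename), "w").close()
    os.mkdir(os.path.join(tmp, "subdir"))
    print(call_tool("list_files", {"path": tmp}))  # ['file1.txt', 'file2.txt']
```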

Together, OpenRouter supplies the model and MCP Servers supply the tools, while your application sits in between and relays each tool call from one to the other.

Why Integrate MCP Servers with OpenRouter?

Combining these technologies offers several technical advantages:

- Model Flexibility: Use any OpenRouter-supported LLM with tool-calling capabilities.

- Cost Reduction: Pair cheaper models with external tools to offload complex tasks.

- Enhanced Capabilities: Enable models to fetch live data or perform actions (e.g., file operations).

- Scalability: Switch models or tools without rewriting your core logic.

- Future-Proofing: Adapt to new LLMs and tools as they emerge.

This integration is ideal for developers building AI systems that need real-world interactivity.

Step-by-Step Integration Process

Now, let’s get technical. Here’s how to integrate MCP Servers with OpenRouter.

Prerequisites

Ensure you have:

- OpenRouter Account and API Key: From openrouter.ai.

- MCP Server: Running locally (e.g., at `http://localhost:8000`) or remotely. For this guide, I’ll use a file system MCP server and assume it is reachable through a simple HTTP `/call` endpoint.

- Python 3.8+: With the `requests` library (`pip install requests`).

- Apidog: Optional but recommended for API testing. Download it for free.

Step 1: Define and Convert MCP Tools

OpenRouter uses OpenAI’s tool-calling format, so you must convert MCP tool definitions. Start with the MCP definition:

```json
{
  "name": "list_files",
  "description": "Lists files in a specified directory",
  "parameters": {
    "type": "object",
    "properties": {
      "path": {"type": "string", "description": "Directory path"}
    },
    "required": ["path"]
  }
}
```

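Convert it to OpenAI format by adding a `type` field and nesting the function details under a `function` key (if you load definitions from a live MCP server, the schema arrives as `inputSchema`, so copy it into `parameters`):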

```json
{
  "type": "function",
  "function": {
    "name": "list_files",
    "description": "Lists files in a specified directory",
    "parameters": {
      "type": "object",
      "properties": {
        "path": {"type": "string", "description": "Directory path"}
      },
      "required": ["path"]
    }
  }
}
```

This JSON structure is what OpenRouter expects in its API payload.
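Converting definitions by hand gets tedious once a server exposes more than a couple of tools. Here is a minimal conversion helper; it is a sketch, `mcp_tool_to_openai` is a hypothetical name, and it accepts the schema under either `inputSchema` (as spec-compliant MCP servers send it) or `parameters` (as in this guide’s examples):

```python
import json
from typing import Any, Dict


def mcp_tool_to_openai(tool: Dict[str, Any]) -> Dict[str, Any]:
    """Convert an MCP tool definition into OpenAI/OpenRouter tool format."""
    # Prefer the spec's `inputSchema`; fall back to `parameters`, then to an
    # empty object schema for tools that take no input.
    schema = tool.get("inputSchema") or tool.get("parameters") or {
        "type": "object",
        "properties": {},
    }
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            "parameters": schema,
        },
    }


mcp_tool = {
    "name": "list_files",
    "description": "Lists files in a specified directory",
    "inputSchema": {
        "type": "object",
        "properties": {"path": {"type": "string", "description": "Directory path"}},
        "required": ["path"],
    },
}
print(json.dumps(mcp_tool_to_openai(mcp_tool), indent=2))
```

Converting everything a server lists is then a one-liner, `tools = [mcp_tool_to_openai(t) for t in server_tools]`, where `server_tools` stands for whatever list your server returned.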

Step 2: Configure the API Request

Prepare an API request to OpenRouter. Define headers with your API key and a payload with the model, messages, and tools. Here’s a Python example:

```python
import requests
import json

# Headers
headers = {
    "Authorization": "Bearer your_openrouter_api_key",
    "Content-Type": "application/json"
}

# Payload
payload = {
    "model": "openai/gpt-4",  # Replace with your preferred model
    "messages": [
        {"role": "user", "content": "List files in the current directory."}
    ],
    "tools": [
        {
            "type": "function",
            "function": {
                "name": "list_files",
                "description": "Lists files in a specified directory",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "path": {"type": "string", "description": "Directory path"}
                    },
                    "required": ["path"]
                }
            }
        }
    ]
}
```

Replace your_openrouter_api_key with your actual key.
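Hardcoding the key is fine for a quick test, but it is easy to leak into version control. A safer sketch reads it from an environment variable; the variable name `OPENROUTER_API_KEY` and the helper `openrouter_headers` are assumptions, while `HTTP-Referer` and `X-Title` are optional attribution headers that OpenRouter documents:

```python
import os
from typing import Dict, Optional


def openrouter_headers(
    app_url: Optional[str] = None, app_title: Optional[str] = None
) -> Dict[str, str]:
    """Build OpenRouter request headers with the key taken from the environment."""
    api_key = os.environ.get("OPENROUTER_API_KEY")  # variable name is our choice
    if not api_key:
        raise RuntimeError("Set OPENROUTER_API_KEY before calling OpenRouter.")
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    # Optional headers OpenRouter uses to attribute traffic to your app.
    if app_url:
        headers["HTTP-Referer"] = app_url
    if app_title:
        headers["X-Title"] = app_title
    return headers
```

Usage: `headers = openrouter_headers(app_title="MCP file demo")`.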

Step 3: Send the Initial API Request

Make a POST request to OpenRouter’s endpoint:

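```python
response = requests.post(
    "https://openrouter.ai/api/v1/chat/completions",
    headers=headers,
    json=payload,
    timeout=60,  # avoid hanging forever on a stalled connection
)
response.raise_for_status()  # surface HTTP errors such as 401 (bad key) early
response_data = response.json()
```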
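Indexing straight into `choices` fails with a confusing `KeyError` when a request errors out. Assuming OpenRouter reports errors as an OpenAI-style `error` object in the JSON body, a small hypothetical helper, `first_message`, can fail with a readable message instead:

```python
from typing import Any, Dict


def first_message(response_data: Dict[str, Any]) -> Dict[str, Any]:
    """Return the first assistant message, or raise a readable error.

    Assumes an OpenAI-style body: failures carry an "error" object with
    "code" and "message"; successes carry a non-empty "choices" list.
    """
    if "error" in response_data:
        error = response_data["error"]
        raise RuntimeError(f"OpenRouter error {error.get('code')}: {error.get('message')}")
    choices = response_data.get("choices") or []
    if not choices:
        raise RuntimeError("OpenRouter response contained no choices")
    return choices[0]["message"]
```

Usage: `message = first_message(response.json())`.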

Step 4: Process Tool Calls

Check whether the response includes a tool call. A response that requests one looks like this (trimmed to the relevant fields):

```json
{
  "choices": [
    {
      "message": {
        "role": "assistant",
        "content": null,
        "tool_calls": [
          {
            "id": "call_123",
            "type": "function",
            "function": {
              "name": "list_files",
              "arguments": "{\"path\": \".\"}"
            }
          }
        ]
      }
    }
  ]
}
```

Extract the tool call details:

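```python
message = response_data["choices"][0]["message"]

# "tool_calls" may be absent or empty when the model answers directly
if message.get("tool_calls"):
    tool_call = message["tool_calls"][0]  # this step handles the first call only
    function_name = tool_call["function"]["name"]
    # "arguments" is a JSON-encoded string, so decode it into a dict
    arguments = json.loads(tool_call["function"]["arguments"])
```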
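The snippet above handles only the first tool call, but a model can request several in one response, and because the `arguments` string is generated by the model, it is not guaranteed to be valid JSON. A sketch of a more defensive parser (`ParsedCall` and `parse_tool_calls` are illustrative names) that extracts every call and reports malformed arguments clearly:

```python
import json
from typing import Any, Dict, List, NamedTuple


class ParsedCall(NamedTuple):
    id: str
    name: str
    arguments: Dict[str, Any]


def parse_tool_calls(message: Dict[str, Any]) -> List[ParsedCall]:
    """Extract every tool call from an assistant message, decoding its arguments."""
    parsed = []
    for call in message.get("tool_calls") or []:
        raw = call["function"].get("arguments") or "{}"
        try:
            args = json.loads(raw)
        except json.JSONDecodeError as exc:
            raise ValueError(f"Tool call {call['id']} has invalid arguments: {raw!r}") from exc
        parsed.append(ParsedCall(call["id"], call["function"]["name"], args))
    return parsed


sample = {
    "role": "assistant",
    "content": None,
    "tool_calls": [{
        "id": "call_123",
        "type": "function",
        "function": {"name": "list_files", "arguments": "{\"path\": \".\"}"},
    }],
}
print(parse_tool_calls(sample))
```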

Step 5: Call the MCP Server

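Send the tool request to your MCP Server. This guide assumes the server is reachable through a simple HTTP bridge that accepts `POST /call` with the tool’s `name` and `arguments` and answers with `{"result": ...}`. A spec-compliant MCP server instead speaks JSON-RPC 2.0 (method `tools/call`) over stdio or Streamable HTTP, which the official MCP SDKs handle for you. With the bridge in place, the call looks like this: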

```python
mcp_response = requests.post(
    "http://localhost:8000/call",
    json={
        "name": function_name,
        "arguments": arguments
    }
)
tool_result = mcp_response.json()["result"]  # e.g., ["file1.txt", "file2.txt"]
```
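For reference, here is roughly what the same step looks like against a spec-compliant MCP server, which expects a JSON-RPC 2.0 `tools/call` request and replies with a list of typed `content` items plus an `isError` flag. This sketch only builds the request and flattens the reply: it assumes text-only results and leaves the transport (stdio, or Streamable HTTP after the `initialize` handshake) to you or an official MCP SDK. The helper names are hypothetical:

```python
import itertools
from typing import Any, Dict

# JSON-RPC requires a unique id per request so responses can be matched up.
_request_ids = itertools.count(1)


def make_tools_call_request(name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request for MCP's `tools/call` method."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def tool_result_text(rpc_response: Dict[str, Any]) -> str:
    """Flatten a `tools/call` response into plain text for the model.

    Keeps only "text" content items; images and other types are ignored.
    """
    if "error" in rpc_response:  # protocol-level failure (JSON-RPC error object)
        raise RuntimeError(rpc_response["error"].get("message", "MCP request failed"))
    result = rpc_response["result"]
    text = "\n".join(
        item["text"] for item in result.get("content", []) if item.get("type") == "text"
    )
    # Tool execution failures are reported in-band via isError.
    return f"Error: {text}" if result.get("isError") else text


# Usage with a hand-written response in the shape an MCP server returns.
request = make_tools_call_request("list_files", {"path": "."})
sample_response = {
    "jsonrpc": "2.0",
    "id": request["id"],
    "result": {"content": [{"type": "text", "text": "file1.txt\nfile2.txt"}], "isError": False},
}
print(tool_result_text(sample_response))
```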

Step 6: Return Tool Result to OpenRouter

Append the assistant’s tool call and the result to the message history:

```python
messages = payload["messages"] + [
    {
        "role": "assistant",
        "content": None,  # Python's None, serialized as JSON null
        "tool_calls": [tool_call]
    },
    {
        "role": "tool",
        "tool_call_id": tool_call["id"],  # links the result to the request
        "content": json.dumps(tool_result)  # tool output is sent as a string
    }
]

# Update payload (it still includes "tools", so the model can call them again)
payload["messages"] = messages

# Send follow-up request
final_response = requests.post(
    "https://openrouter.ai/api/v1/chat/completions",
    headers=headers,
    json=payload
)

final_output = final_response.json()["choices"][0]["message"]["content"]
print(final_output)  # e.g., "Files: file1.txt, file2.txt"
```
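When the model requests several tools in one turn, the usual convention is a single assistant message carrying all of its `tool_calls`, followed by one `tool` message per call. A minimal sketch of that bookkeeping (`append_tool_round` is a hypothetical helper; `results` maps each tool call id to its output):

```python
import json
from typing import Any, Dict, List


def append_tool_round(
    messages: List[Dict[str, Any]],
    assistant_message: Dict[str, Any],
    results: Dict[str, Any],
) -> List[Dict[str, Any]]:
    """Return a new history with one assistant turn and its tool results."""
    new_messages = list(messages)  # copy so the caller's list is untouched
    new_messages.append({
        "role": "assistant",
        "content": assistant_message.get("content"),
        "tool_calls": assistant_message["tool_calls"],
    })
    # One "tool" message per call, in the order the model requested them.
    for call in assistant_message["tool_calls"]:
        new_messages.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(results[call["id"]]),
        })
    return new_messages
```

Building a new list rather than mutating the original keeps the earlier history intact, which makes retries easier to reason about.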

Step 7: Handle Multiple Tool Calls

The model may request several tools in one response or chain calls across turns. Loop until it stops requesting tools:

```python
messages = payload["messages"]

while True:
    response = requests.post(
        "https://openrouter.ai/api/v1/chat/completions",
        headers=headers,
        # Send the tool definitions on every turn so the model can keep calling them
        json={"model": "openai/gpt-4", "messages": messages, "tools": payload["tools"]}
    )
    message = response.json()["choices"][0]["message"]

    if not message.get("tool_calls"):
        print(message["content"])
        break

    # Record the assistant turn once, with all of its tool calls...
    messages.append(message)

    # ...then answer each call with a "tool" message carrying its id
    for tool_call in message["tool_calls"]:
        function_name = tool_call["function"]["name"]
        arguments = json.loads(tool_call["function"]["arguments"])
        mcp_response = requests.post(
            "http://localhost:8000/call",
            json={"name": function_name, "arguments": arguments}
        )
        tool_result = mcp_response.json()["result"]
        messages.append(
            {"role": "tool", "tool_call_id": tool_call["id"], "content": json.dumps(tool_result)}
        )
```

The loop keeps going until the model replies without requesting a tool, so every tool call, including chained ones, gets processed.
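To test this loop without network access or API costs, you can inject the model call and the tool call as plain functions. The sketch below does that and adds a `max_turns` guard so a model that keeps requesting tools cannot spin forever; `run_tool_loop`, `fake_chat`, and `fake_call_tool` are illustrative names:

```python
import json
from typing import Any, Callable, Dict, List


def run_tool_loop(
    chat: Callable[[List[Dict[str, Any]]], Dict[str, Any]],
    call_tool: Callable[[str, Dict[str, Any]], Any],
    messages: List[Dict[str, Any]],
    max_turns: int = 5,
) -> str:
    """Alternate between the model and the tools until the model answers."""
    history = list(messages)
    for _ in range(max_turns):
        message = chat(history)
        tool_calls = message.get("tool_calls")
        if not tool_calls:
            return message.get("content") or ""
        history.append(message)  # the assistant turn, with all of its tool_calls
        for call in tool_calls:
            args = json.loads(call["function"]["arguments"] or "{}")
            result = call_tool(call["function"]["name"], args)
            history.append({
                "role": "tool",
                "tool_call_id": call["id"],
                "content": json.dumps(result),
            })
    raise RuntimeError(f"No final answer after {max_turns} tool rounds")


def fake_chat(history):
    """Stand-in model: requests list_files once, then summarizes the result."""
    if history[-1]["role"] == "tool":
        files = json.loads(history[-1]["content"])
        return {"role": "assistant", "content": "Files: " + ", ".join(files)}
    return {"role": "assistant", "content": None, "tool_calls": [{
        "id": "call_1",
        "type": "function",
        "function": {"name": "list_files", "arguments": "{\"path\": \".\"}"},
    }]}


def fake_call_tool(name, args):
    """Stand-in MCP server that always returns the same listing."""
    return ["file1.txt", "file2.txt"]


print(run_tool_loop(fake_chat, fake_call_tool, [{"role": "user", "content": "List files."}]))
# Files: file1.txt, file2.txt
```

In production, `chat` would POST `{**payload, "messages": history}` to OpenRouter and return `choices[0]["message"]`, and `call_tool` would call your MCP server.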

Real-World Example: File System Interaction

Let’s apply this to a practical scenario: listing files with an MCP Server.

- Tool Definition: Use the `list_files` tool from earlier.

- MCP Server: Assume it’s running at `http://localhost:8000`.

- API Call: Send “List files in the current directory” to OpenRouter.

- Response Handling: The model calls `list_files` with `{"path": "."}`.

- MCP Execution: The server returns `["file1.txt", "file2.txt"]`.

- Final Output: The model responds, “Files found: file1.txt, file2.txt.”

Here’s the complete code:

```python
import requests
import json

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
MCP_URL = "http://localhost:8000/call"  # simplified HTTP bridge assumed in this guide

headers = {"Authorization": "Bearer your_openrouter_api_key", "Content-Type": "application/json"}

payload = {
    "model": "openai/gpt-4",
    "messages": [{"role": "user", "content": "List files in the current directory."}],
    "tools": [{
        "type": "function",
        "function": {
            "name": "list_files",
            "description": "Lists files in a specified directory",
            "parameters": {
                "type": "object",
                "properties": {"path": {"type": "string", "description": "Directory path"}},
                "required": ["path"]
            }
        }
    }]
}

# 1. Ask the model; it may answer directly or request a tool call
response = requests.post(OPENROUTER_URL, headers=headers, json=payload)
response.raise_for_status()
message = response.json()["choices"][0]["message"]

if message.get("tool_calls"):
    tool_call = message["tool_calls"][0]
    function_name = tool_call["function"]["name"]
    arguments = json.loads(tool_call["function"]["arguments"])

    # 2. Execute the tool on the MCP server
    mcp_response = requests.post(MCP_URL, json={"name": function_name, "arguments": arguments})
    mcp_response.raise_for_status()
    tool_result = mcp_response.json()["result"]

    # 3. Return the result to the model, keeping the tool definitions in the request
    messages = payload["messages"] + [
        {"role": "assistant", "content": None, "tool_calls": [tool_call]},
        {"role": "tool", "tool_call_id": tool_call["id"], "content": json.dumps(tool_result)}
    ]
    final_response = requests.post(OPENROUTER_URL, headers=headers, json={**payload, "messages": messages})
    final_response.raise_for_status()
    print(final_response.json()["choices"][0]["message"]["content"])
else:
    # The model answered without using a tool
    print(message["content"])
```
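If you don’t have an MCP server handy, you can stand up the hypothetical `/call` contract this guide assumes with Python’s standard library and run the script above against it. This is only a local test double: it does not implement the Model Context Protocol (no JSON-RPC, no `tools/list`), and the tool logic is an assumption:

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def list_files(path):
    """The tool itself (an assumption): names of regular files in `path`, sorted."""
    with os.scandir(path) as entries:
        return sorted(entry.name for entry in entries if entry.is_file())


TOOLS = {"list_files": list_files}  # tool name -> Python function


class CallHandler(BaseHTTPRequestHandler):
    """Handles POST /call with {"name": ..., "arguments": {...}}."""

    def do_POST(self):
        if self.path != "/call":
            self.send_error(404, "Unknown endpoint")
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            request = json.loads(self.rfile.read(length) or b"{}")
        except json.JSONDecodeError:
            status, body = 400, {"error": "Request body must be JSON"}
        else:
            tool = TOOLS.get(request.get("name"))
            if tool is None:
                status, body = 404, {"error": f"Unknown tool: {request.get('name')}"}
            else:
                try:
                    status, body = 200, {"result": tool(**request.get("arguments", {}))}
                except Exception as exc:  # bad arguments or a failing tool
                    status, body = 500, {"error": str(exc)}
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def make_server(host="localhost", port=8000):
    """Create (but don't start) the bridge server; port=0 picks a free port."""
    return HTTPServer((host, port), CallHandler)
```

Start it with `make_server().serve_forever()` in one terminal, then run the complete script in another.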

Troubleshooting Common Issues

Here are solutions to frequent problems:

- Tool Not Called: Verify the tool definition syntax, make the prompt clearer, and confirm the selected model supports tool calling (not every model on OpenRouter does).

- Invalid Arguments: Ensure the model’s arguments match the tool’s JSON Schema.

- MCP Server Failure: Check the server logs and the endpoint (`http://localhost:8000/call`).

- API Authentication: Confirm your OpenRouter key is correct.

Use Apidog to debug API requests and responses efficiently.
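For the “Invalid Arguments” case, it helps to check the model’s arguments before forwarding them to the server. This is a deliberately small sketch covering only `required` keys, top-level types, and unexpected keys (stricter than JSON Schema, which allows extra keys unless `additionalProperties` is false); for full validation, a dedicated library such as `jsonschema` is the safer choice. `argument_problems` is a hypothetical name:

```python
from typing import Any, Dict, List

# Subset of JSON Schema type names mapped to Python types (sketch only).
_JSON_TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "object": dict,
    "array": list,
}


def argument_problems(arguments: Dict[str, Any], schema: Dict[str, Any]) -> List[str]:
    """Return human-readable problems with `arguments`; an empty list means OK."""
    problems = []
    properties = schema.get("properties", {})
    for key in schema.get("required", []):
        if key not in arguments:
            problems.append(f"missing required argument '{key}'")
    for key, value in arguments.items():
        if key not in properties:
            problems.append(f"unexpected argument '{key}'")
            continue
        expected = _JSON_TYPES.get(properties[key].get("type"))
        if expected is None:
            continue  # unknown or missing type: not checked in this sketch
        # Python's bool is a subclass of int, so True would pass an "integer" check.
        wrong_bool = isinstance(value, bool) and expected is not bool
        if wrong_bool or not isinstance(value, expected):
            problems.append(f"argument '{key}' should be {properties[key]['type']}")
    return problems
```

If the list is non-empty, you can return the problems to the model as the tool result so it can retry with corrected arguments.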

Expanding the Integration

To add depth, consider these extensions:

Database Query Example

Define an MCP tool to query a database:

```json
{
  "type": "function",
  "function": {
    "name": "query_db",
    "description": "Queries a database with SQL",
    "parameters": {
      "type": "object",
      "properties": {"sql": {"type": "string", "description": "SQL query"}},
      "required": ["sql"]
    }
  }
}
```

Send “Get all users from the database” to OpenRouter, process the query_db call, and return results like [{"id": 1, "name": "Alice"}].
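Letting the model write SQL means running untrusted queries, so the server-side handler should be defensive. Here is a minimal sketch of a hypothetical `query_db` implementation using SQLite that accepts only a single `SELECT` statement and returns rows as dictionaries, the shape shown above:

```python
import sqlite3
from typing import Any, Dict, List


def query_db(conn: sqlite3.Connection, sql: str) -> List[Dict[str, Any]]:
    """Hypothetical `query_db` handler: run one read-only query, rows as dicts.

    The keyword check is only a first line of defense; in production, also
    connect with a read-only database user or mode.
    """
    statement = sql.strip().rstrip(";")
    if ";" in statement or not statement.lower().startswith("select"):
        raise ValueError("Only a single SELECT statement is allowed")
    cursor = conn.execute(statement)
    columns = [col[0] for col in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchall()]


# Usage with an in-memory demo database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO users (name) VALUES ('Alice')")
print(query_db(conn, "SELECT id, name FROM users;"))  # [{'id': 1, 'name': 'Alice'}]
```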

Error Handling

Add robust error handling:

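```python
try:
    mcp_response = requests.post(
        "http://localhost:8000/call",
        json={"name": function_name, "arguments": arguments},
        timeout=30,  # don't let a hung tool block the conversation
    )
    mcp_response.raise_for_status()  # treat 4xx/5xx responses as errors
    tool_result = mcp_response.json()["result"]
except (requests.RequestException, KeyError, ValueError) as e:
    # Pass the failure back to the model as the tool result instead of crashing
    tool_result = f"Error: {e}"
```

Because the error text becomes the tool result, the model can explain the failure or try a different approach, and your application stays up.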
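Transient failures such as timeouts or rate limiting often succeed on a second attempt. A generic retry helper with exponential backoff is one way to handle them; this is a sketch, `with_retries` and its parameters are illustrative, and the injectable `sleep` exists only to make it testable:

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def with_retries(
    fn: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 1.0,
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `fn`, waiting base_delay * 2**n seconds between failed attempts.

    The last exception is re-raised once all `attempts` calls have failed.
    """
    for attempt in range(attempts):
        try:
            return fn()
        except retry_on:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
    raise ValueError("attempts must be at least 1")
```

For example, `with_retries(lambda: requests.post(url, json=body, timeout=30).json(), retry_on=(requests.RequestException,))`, where `url` and `body` stand in for your own values.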

Conclusion

Integrating MCP Servers with OpenRouter enables your AI to leverage external tools through a single API. This guide covered setup, tool conversion, API calls, and practical examples like file system interaction. With benefits like cost savings and enhanced functionality, this approach is a must-try for technical developers.

Start experimenting now, and grab Apidog for free to test your APIs. Let me know how it goes!