Large Language Models (LLMs) have transformed natural language processing, enabling developers to build sophisticated AI-driven applications. However, accessing these models often comes with costs. Fortunately, platforms like OpenRouter and various online services offer free access to LLMs through APIs, making it possible to experiment without financial commitment. This technical guide explores how to leverage free LLMs using OpenRouter and online platforms, detailing available APIs, setup processes, and practical implementation steps.

Why Use Free LLMs?

LLMs, such as Meta’s Llama or Mistral’s Mixtral, power applications like chatbots, code generators, and text analyzers. Free access to these models eliminates cost barriers, enabling developers to prototype and deploy AI features. OpenRouter, a unified inference API, provides standardized access to multiple LLMs, while online platforms like GitHub Models offer user-friendly interfaces. By combining these with Apidog, you can test and debug API calls effortlessly, ensuring optimal performance.

Understanding OpenRouter and Its Role in Free LLM Access

OpenRouter is a powerful platform that aggregates LLMs from various providers, offering a standardized, OpenAI-compatible API. It supports both free and paid tiers, with free access to models like Llama 3 and Mistral 7B. OpenRouter’s key features include:

- API Normalization: Converts provider-specific APIs into a unified format.

- Intelligent Routing: Dynamically selects backends based on availability.

- Fault Tolerance: Ensures service continuity with fallback mechanisms.

- Multi-Modal Support: Handles text and image inputs.

- Context Length Optimization: Helps fit long prompts into a model’s token window (for example, through OpenRouter’s optional "middle-out" prompt compression).

By using OpenRouter, developers access a diverse range of LLMs without managing multiple provider accounts. Apidog complements this by providing tools to test and visualize OpenRouter API calls, ensuring accurate request formatting.

Free OpenRouter APIs for LLMs

OpenRouter offers access to several free LLMs, each with its own architecture and capabilities. Below is a selection of models listed as free as of April 2025. Free variants are added and retired often, so confirm current availability on OpenRouter’s model list before relying on one:

Mixtral 8x22B Instruct (Mistral AI)

- Architecture: Mixture-of-Experts (MoE) with sparse activation.

- Parameters: 141B total, 39B active per forward pass (8 experts, 2 routed per token).

- Context Length: 65,536 tokens.

- Modalities: Text → Text.

- Use Cases: Multilingual text tasks, code and math reasoning, function calling.

Llama 4 Scout 109B (Meta)

- Architecture: MoE with optimized routing.

- Parameters: 109B total, 17B active per forward pass (16 experts).

- Context Length: 512,000 tokens (10M theoretical maximum).

- Modalities: Text + Image → Text.

- Use Cases: Visual instruction following, cross-modal inference, deployment-optimized tasks.

Kimi-VL-A3B-Thinking (Moonshot AI)

- Architecture: Lightweight MoE with specialized visual reasoning.

- Parameters: 16B total, 2.8B active per step.

- Context Length: 131,072 tokens.

- Modalities: Text + Image → Text.

- Use Cases: Resource-constrained visual reasoning, mathematical problem-solving, edge AI applications.

Nemotron-8B-Instruct (NVIDIA)

- Architecture: Modified transformer with NVIDIA optimizations.

- Parameters: 8B.

- Context Length: 8,192 tokens.

- Modalities: Text → Text.

- Use Cases: NVIDIA-optimized inference, efficient tensor parallelism, quantization-friendly deployments.

Llama 3 8B Instruct (Meta AI)

- Architecture: Transformer-based.

- Parameters: 8B.

- Context Length: 8,192 tokens.

- Modalities: Text → Text.

- Use Cases: General chat, instruction-following, efficient baseline tasks.

Mistral 7B Instruct (Mistral AI)

- Architecture: Transformer-based.

- Parameters: 7B.

- Context Length: 8,000 tokens.

- Modalities: Text → Text.

- Use Cases: General-purpose NLP, lightweight inference.

Gemma 2/3 Instruct (Google)

- Architecture: Transformer-based.

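- Parameters: 9B (Gemma 2); Gemma 3 ships in 1B, 4B, 12B, and 27B sizes.

- Context Length: 8,192 tokens (Gemma 2); up to 128K tokens (Gemma 3).

- Modalities: Text → Text (Gemma 3 4B and larger also accept image input).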

- Use Cases: Compact, high-performance tasks, multilingual applications.

Qwen 2.5 Instruct (Alibaba)

- Architecture: Transformer-based.

- Parameters: 7B.

- Context Length: 32,000 tokens.

- Modalities: Text → Text.

- Use Cases: Multilingual tasks, coding and math reasoning, instruction-following.

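These models are accessible via OpenRouter’s free tier, though limits apply: free model variants (IDs ending in :free) are capped per minute (OpenRouter’s documentation has listed 20 requests per minute) and per day, with a higher daily cap for accounts that have purchased credits. Limits change, so check OpenRouter’s documentation for current values. Developers must sign up and obtain an API key, with phone verification sometimes required.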
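OpenRouter enforces these caps on its side, but a client that paces itself avoids a stream of 429 errors. Below is a minimal sliding-window limiter written for this guide; the class name and its defaults are illustrative and not part of any OpenRouter SDK, so set max_calls and window to whatever limits apply to your account.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most max_calls requests within any rolling window of `window` seconds."""

    def __init__(self, max_calls=20, window=60.0, clock=time.monotonic):
        # Defaults are illustrative; set them to the limits your account actually has.
        self.max_calls = max_calls
        self.window = window
        self.clock = clock
        self.calls = deque()  # timestamps of requests still inside the window

    def wait_time(self):
        """Seconds until another request is allowed (0.0 if one is allowed now)."""
        now = self.clock()
        # Forget requests that have slid out of the window.
        while self.calls and now - self.calls[0] >= self.window:
            self.calls.popleft()
        if len(self.calls) < self.max_calls:
            return 0.0
        return self.window - (now - self.calls[0])

    def acquire(self, sleep=time.sleep):
        """Block until a request is allowed, then record it."""
        delay = self.wait_time()
        while delay > 0:
            sleep(delay)
            delay = self.wait_time()
        self.calls.append(self.clock())
```

Create one limiter per API key and call limiter.acquire() right before each request. The clock and sleep parameters exist so the logic can be tested without waiting in real time.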

Other Free Online Platforms for LLMs

Beyond OpenRouter, several platforms provide free access to LLMs, each with distinct advantages:

GitHub Models

- Access: Integrated into GitHub workflows and usable with a GitHub account; rate limits are higher with paid Copilot subscriptions.

- Models: Llama 3 8B, Phi-3 (Mini, Small, Medium) with 128K context.

- Features: Free tier with token limits, ideal for developer workflows.

- Use Cases: Code generation, text analysis.

- Integration: Apidog simplifies API testing within GitHub’s ecosystem.

Cloudflare Workers AI

- Access: Free tier with quantized models (AWQ, INT8).

- Models: Llama 2 (7B/13B), DeepSeek Coder (6.7B).

- Features: Efficient baselines, no payment verification required.

- Use Cases: Lightweight inference, cost-effective deployments.

- Integration: Apidog ensures accurate request formatting for Cloudflare APIs.

Google AI Studio

- Access: Free API key with rate limits (10 requests/minute, 1,500 daily).

- Models: Gemini 2.0 Flash.

- Features: Function calling, high-performance reasoning.

- Use Cases: Multimodal tasks, rapid prototyping.

- Integration: Apidog visualizes Gemini’s API responses for debugging.

These platforms complement OpenRouter by offering alternative access methods, from browser-based interfaces to API-driven integrations. Apidog enhances productivity by providing a unified interface to test and document these APIs.

Setting Up OpenRouter for Free LLM Access

To use OpenRouter’s free APIs, follow these steps:

Create an Account

- Visit openrouter.ai and sign up.

- Provide an email and, if prompted, verify your phone number.

- Generate an API key from the dashboard. Keep it secure, as it’s required for authentication.

Understand Rate Limits

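- Free model variants are limited per minute (20 requests/minute in OpenRouter’s documentation) and per day, with a higher daily cap once you have purchased credits; confirm current values in the docs, as they change.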

- Monitor usage via OpenRouter’s dashboard to avoid exceeding quotas.
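Besides the dashboard, you can keep a running total from your own code. This sketch assumes OpenAI-compatible responses that include a usage object with prompt_tokens and completion_tokens; the UsageTracker class and its daily_token_budget are a hypothetical, self-imposed budget, not an OpenRouter setting.

```python
from dataclasses import dataclass


@dataclass
class UsageTracker:
    """Accumulates token counts from OpenAI-style chat completion responses."""

    daily_token_budget: int  # your own budget, not a provider-enforced value
    prompt_tokens: int = 0
    completion_tokens: int = 0
    requests: int = 0

    def record(self, response_json: dict) -> None:
        # Assumes an OpenAI-compatible "usage" object; missing fields count as 0.
        usage = response_json.get("usage") or {}
        self.prompt_tokens += usage.get("prompt_tokens", 0)
        self.completion_tokens += usage.get("completion_tokens", 0)
        self.requests += 1

    @property
    def total_tokens(self) -> int:
        return self.prompt_tokens + self.completion_tokens

    def remaining(self) -> int:
        """Tokens left in the self-imposed budget (never negative)."""
        return max(self.daily_token_budget - self.total_tokens, 0)
```

Call tracker.record(response.json()) after each successful request and stop or slow down when remaining() approaches zero.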

Install Prerequisites

- Ensure you have Python (3.7+) or Node.js installed for scripting API calls.

- Install Apidog to streamline API testing and documentation.

Configure Your Environment

- Store your API key in an environment variable (e.g., OPENROUTER_API_KEY) to avoid hardcoding it.

- Use Apidog to set up a project, import OpenRouter’s API specification, and configure your key.
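A minimal sketch of reading the key at runtime, assuming the variable name OPENROUTER_API_KEY from the step above; load_api_key is a hypothetical helper, not part of any SDK.

```python
import os


def load_api_key(var_name: str = "OPENROUTER_API_KEY") -> str:
    """Read the API key from the environment instead of hardcoding it."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        # Fail early with a clear message rather than sending an unauthenticated request.
        raise RuntimeError(f"Set the {var_name} environment variable before running.")
    return key
```

On macOS or Linux, set the variable in your shell first (export OPENROUTER_API_KEY=<your key>) so the key never appears in your source files or version control.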

Making an API Call with OpenRouter

OpenRouter’s API follows an OpenAI-compatible format, making it straightforward to integrate. Below is a step-by-step guide to making an API call, including a sample Python script.

Step 1: Prepare the Request

- Endpoint: https://openrouter.ai/api/v1/chat/completions

- Headers: Authorization: Bearer <YOUR_API_KEY> and Content-Type: application/json

- Body: Specify the model, prompt, and parameters (e.g., temperature, max_tokens).

Step 2: Write the Code

Here’s a Python example using the requests library to query Llama 3 8B Instruct:

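```python
import os

import requests

# Configuration: read the key from the environment instead of hardcoding it
# (raises KeyError if OPENROUTER_API_KEY is not set)
api_key = os.environ["OPENROUTER_API_KEY"]
url = "https://openrouter.ai/api/v1/chat/completions"
headers = {
    "Authorization": f"Bearer {api_key}",
    "Content-Type": "application/json",
}

# Request payload. OpenRouter model IDs for Llama use the "meta-llama/" prefix;
# free variants carry a ":free" suffix, and which ones exist changes over time,
# so check https://openrouter.ai/models for a current ID.
payload = {
    "model": "meta-llama/llama-3-8b-instruct:free",
    "messages": [
        {"role": "user", "content": "Explain the benefits of using LLMs for free."}
    ],
    "temperature": 0.7,
    "max_tokens": 500,
}

# Make the API call (json= serializes the payload; timeout avoids hanging forever)
response = requests.post(url, headers=headers, json=payload, timeout=60)

# Process the response
if response.status_code == 200:
    result = response.json()
    print(result["choices"][0]["message"]["content"])
else:
    print(f"Error: {response.status_code}, {response.text}")
```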

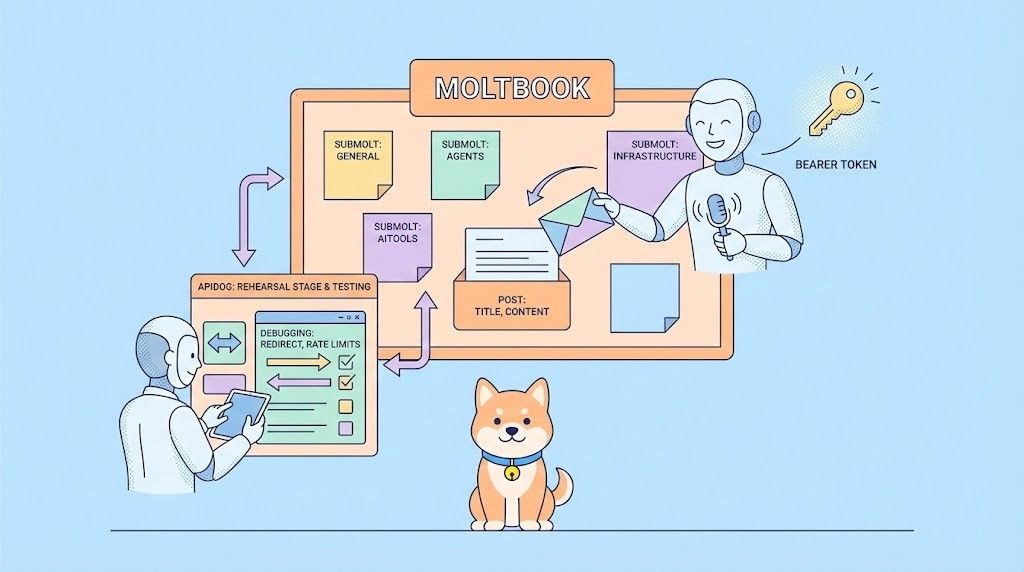

Step 3: Test with Apidog

- Import the OpenRouter API specification into Apidog.

- Create a new request, paste the endpoint, and add headers.

- Input the payload and send the request.

- Use Apidog’s visualization tools to inspect the response and debug errors.

Step 4: Handle Responses

- Check for a 200 OK status to confirm success.

- Parse the JSON response to extract the generated text.

- Handle errors (e.g., 429 Too Many Requests) by implementing retry logic.
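The checks above can be collected into one helper. This is a sketch under stated assumptions: it expects the OpenAI-compatible response shape used in the script above (choices[0].message.content) and assumes error bodies look like {"error": {"message": ...}}. The names extract_reply and RetryableError, and the set of status codes treated as retryable, are illustrative choices rather than OpenRouter definitions.

```python
RETRYABLE_STATUS = {429, 502, 503}  # illustrative choice of "try again later" codes


class RetryableError(RuntimeError):
    """Raised when a request failed in a way that may succeed if retried later."""


def extract_reply(status_code, body):
    """Return the assistant's text from a parsed chat-completion response.

    Assumes error bodies shaped like {"error": {"message": "..."}}.
    """
    if status_code != 200 or "error" in body:
        error = body.get("error") or {}
        message = error.get("message", "unknown error") if isinstance(error, dict) else str(error)
        # Rate limits and gateway errors are worth retrying; auth or bad-request errors are not.
        exc_type = RetryableError if status_code in RETRYABLE_STATUS else RuntimeError
        raise exc_type(f"HTTP {status_code}: {message}")
    choices = body.get("choices") or []
    if not choices:
        raise RuntimeError("HTTP 200 but the response contained no choices")
    return choices[0]["message"]["content"]
```

With the script above you would call extract_reply(response.status_code, response.json()) and wrap only RetryableError in retry logic.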

Step 5: Optimize Usage

- Send only the context the task needs: shorter prompts and a modest max_tokens consume less of your quota and return faster.

- Monitor token usage to stay within free tier limits.

- Leverage Apidog to automate testing and generate API documentation.

This script demonstrates a basic API call. For production, add error handling, rate limiting, and logging. Apidog simplifies these tasks by providing a user-friendly interface for request management.
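Keeping token usage down in a chat application often means not resending the entire conversation on every turn. The sketch below keeps the system message plus the newest messages that fit a token budget. It relies on a rough rule of thumb of about four characters per token for English text, which is only an approximation; exact counts require the model’s own tokenizer. Both function names are hypothetical.

```python
from typing import Dict, List


def estimate_tokens(text: str) -> int:
    """Rough estimate: about 4 characters per token for English text (heuristic only)."""
    return max(1, len(text) // 4)


def trim_history(messages: List[Dict[str, str]], budget: int) -> List[Dict[str, str]]:
    """Keep the system message (if any) plus the most recent messages that fit `budget`.

    Token counts are estimated, so leave headroom below the model's real context window.
    """
    system = [m for m in messages[:1] if m["role"] == "system"]
    rest = messages[len(system):]
    used = sum(estimate_tokens(m["content"]) for m in system)
    kept = []
    # Walk backwards so the newest turns survive.
    for message in reversed(rest):
        cost = estimate_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return system + list(reversed(kept))
```

Pass trim_history(history, budget) as the messages field of the payload instead of the full history.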

Best Practices for Using Free LLMs

To maximize the benefits of free LLMs, follow these technical best practices:

Select the Right Model

- Choose models based on task requirements (e.g., Llama 3 for general chat, DeepSeek Coder for programming).

- Consider context length and parameter size to balance performance and efficiency.
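One way to apply the context-length advice is to choose the smallest-window model that still fits the prompt plus the expected reply, since smaller-window models in the list above also tend to be the smaller, faster ones. The sketch uses context sizes taken from that list (8,192; 32,000; 131,072 tokens), but the model IDs are placeholders; substitute real IDs from OpenRouter’s model list.

```python
from typing import List, Optional, Tuple

# Hypothetical shortlist: (model ID, context window in tokens). The IDs are
# placeholders; copy real IDs and limits from https://openrouter.ai/models.
CANDIDATES: List[Tuple[str, int]] = [
    ("example/small-8k-model:free", 8_192),
    ("example/medium-32k-model:free", 32_000),
    ("example/long-context-model:free", 131_072),
]


def pick_model(prompt_tokens: int, max_output_tokens: int,
               candidates: List[Tuple[str, int]] = CANDIDATES) -> Optional[str]:
    """Return the smallest-window model whose context fits prompt + output, else None."""
    needed = prompt_tokens + max_output_tokens
    for model_id, window in sorted(candidates, key=lambda c: c[1]):
        if needed <= window:
            return model_id
    return None  # nothing fits: shorten the prompt or split the task
```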

Optimize API Calls

- Minimize token usage by crafting concise prompts.

- Where the task allows, combine several small queries into one request instead of sending each one separately.

- Test prompts with Apidog to ensure clarity and accuracy.

Handle Rate Limits

- Implement exponential backoff for retrying failed requests.

- Cache responses for frequently asked queries to reduce API calls.
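Both practices fit in a few lines of standard-library Python. In this sketch, retry_with_backoff doubles its maximum wait after each failure (1 s, 2 s, 4 s, and so on, capped at max_delay) and sleeps a random fraction of it ("full jitter") so many clients don’t retry in lockstep; cached_completion memoizes answers by exact prompt text in an in-process dict. Both helpers are illustrative, and the call and fetch arguments stand in for whatever function actually sends the API request.

```python
import random
import time


def retry_with_backoff(call, is_retryable, max_attempts=5, base_delay=1.0,
                       max_delay=30.0, sleep=time.sleep):
    """Run call(); on a retryable exception wait with exponential backoff and retry."""
    for attempt in range(max_attempts):
        try:
            return call()
        except Exception as exc:
            # Give up on non-retryable errors or after the last attempt.
            if not is_retryable(exc) or attempt == max_attempts - 1:
                raise
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))  # full jitter: random wait in [0, delay]


_cache = {}


def cached_completion(prompt, fetch):
    """Return a stored answer for an identical prompt instead of calling the API again."""
    if prompt not in _cache:
        _cache[prompt] = fetch(prompt)
    return _cache[prompt]
```

For a cache that must survive restarts or be shared between processes, swap the dict for an external store; for simple cases, functools.lru_cache on a function that takes the prompt string works too.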

Ensure Data Privacy

- Review provider policies on data usage (e.g., Google AI Studio’s training data warnings).

- Avoid sending sensitive data unless the provider guarantees privacy.
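If some inputs may contain personal data, one precaution is to scrub obvious identifiers before the text leaves your machine. The sketch below is deliberately simple: two illustrative regular expressions, one for email addresses and one for card-like runs of 13 to 16 digits. It is not a complete PII filter and will miss many cases.

```python
import re

# Illustrative patterns only; real PII detection needs far more than two regexes.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    # A digit followed by 12-15 more digits, each optionally preceded by a space or dash.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str) -> str:
    """Replace matches of each pattern with a [LABEL] placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```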

Monitor Performance

- Use Apidog to log response times and error rates.

- Benchmark models against task-specific metrics (e.g., accuracy, fluency).
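For a quick comparison across models, you can also time repeated calls and count failures in code. This sketch uses only the standard library; call is a hypothetical zero-argument function that sends one request to the model under test, and the clock parameter exists so the function can be tested without real network calls.

```python
import statistics
import time


def benchmark(call, runs=5, clock=time.perf_counter):
    """Time call() several times; report mean and worst latency (seconds) and error rate.

    Exceptions are counted as errors rather than raised; failed runs are not timed.
    """
    latencies, errors = [], 0
    for _ in range(runs):
        start = clock()
        try:
            call()
        except Exception:
            errors += 1
            continue
        latencies.append(clock() - start)
    return {
        "mean_s": statistics.mean(latencies) if latencies else None,
        "max_s": max(latencies) if latencies else None,
        "error_rate": errors / runs,
    }
```

Running it once per candidate model with the same prompt gives a like-for-like latency comparison; task-specific quality metrics still need their own evaluation.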

Leverage Quantization

- Opt for quantized models (e.g., AWQ or INT8 variants on Cloudflare Workers AI) for faster, lighter inference.

- Understand trade-offs between precision and efficiency.

By adhering to these practices, you ensure efficient and reliable use of free LLMs, with Apidog enhancing your workflow through streamlined testing and documentation.

Challenges and Limitations

While free LLMs offer significant advantages, they come with challenges:

Rate Limits

- Free tiers impose strict quotas (e.g., per-minute and daily request caps on OpenRouter’s free model variants).

- Mitigate by optimizing prompts and caching responses.

Context Window Restrictions

- Some models (e.g., Nemotron-8B) have limited context lengths (8K tokens).

- Use models like Phi-3 (128K) for tasks requiring long contexts.

Performance Variability

- Smaller models (e.g., Mistral 7B) may underperform on complex tasks.

- Test multiple models with Apidog to identify the best fit.

Data Privacy Concerns

- Providers may use input data for training unless explicitly stated otherwise.

- Review terms of service and use local models (e.g., via AnythingLLM) when possible.

Dependency on Provider Infrastructure

- Free tiers may experience downtime or throttling.

- Implement fallback mechanisms using OpenRouter’s fault tolerance.
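OpenRouter’s documentation also describes a server-side fallback list (a models array in the request body); check its model-routing docs for current behavior. A client-side equivalent that does not depend on that feature is to try a list of models in order. In this sketch, send(model, prompt) is a hypothetical helper that performs one API call and raises on failure (for example on HTTP 429 or 5xx).

```python
from typing import Callable, List


def complete_with_fallback(prompt: str, models: List[str],
                           send: Callable[[str, str], str]) -> str:
    """Try each model in order and return the first successful reply."""
    errors = []
    for model in models:
        try:
            return send(model, prompt)
        except Exception as exc:  # record the failure and move on to the next model
            errors.append(f"{model}: {exc}")
    raise RuntimeError("All models failed; " + "; ".join(errors))
```

Ordering the list from preferred to most reliable keeps answers consistent when everything is healthy and still returns something during an outage.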

Despite these limitations, free LLMs remain a powerful tool for developers, especially when paired with Apidog for robust API management.

Integrating Free LLMs into Your Applications

To integrate free LLMs into your applications, follow this workflow:

Define Requirements

- Identify tasks (e.g., chatbot, text summarization).

- Determine performance and scalability needs.

Select a Platform

- Use OpenRouter for API-driven access to multiple models.

- Opt for Grok or GitHub Models for simpler interfaces.

Develop the Integration

- Write scripts to handle API calls (see the Python example above).

- Use Apidog to test and refine requests.

Deploy and Monitor

- Deploy your application on a cloud platform (e.g., Vercel, AWS).

- Monitor API usage and performance with Apidog’s analytics.

Iterate and Optimize

- Experiment with different models and prompts.

- Use Apidog to document and share API specifications with your team.

This workflow ensures seamless integration, with Apidog playing a critical role in testing and documentation.

Conclusion

Free LLMs, accessible via OpenRouter and online platforms, empower developers to build AI-driven applications without financial barriers. By using OpenRouter’s unified API, you can tap into models like Llama 3, Mixtral, and Scout, while platforms like Grok and GitHub Models offer alternative access methods. Apidog enhances this process by providing tools to test, debug, and document API calls, ensuring a smooth development experience. Start experimenting today by signing up for OpenRouter and downloading Apidog for free. With the right approach, free LLMs can unlock endless possibilities for your projects.