OpenAI released GPT-5.1-Codex-Max on November 19, 2025, as its new agentic coding model for software development. It is designed for sustained, long-running coding tasks that earlier Codex models handled inconsistently. Available across Codex surfaces, it can work autonomously for hours at a time, using context compaction to stay coherent across millions of tokens.

This guide explains how to access and apply GPT-5.1-Codex-Max in real-world scenarios. It covers configuring environments, writing effective prompts, choosing reasoning effort levels, and managing long-context sessions.

What Is GPT-5.1-Codex-Max? Key Capabilities and Architecture

OpenAI positions GPT-5.1-Codex-Max as the most capable model in its Codex family. It is built on an updated foundational reasoning model trained on agentic tasks across software engineering, mathematics, research, and computer use.

Unlike general-purpose models such as GPT-5.1, this variant is optimized specifically for coding agents. It is natively trained to use context compaction: as a session approaches its context-window limit, the model prunes its history while preserving the most important context, then continues in a fresh window. As a result, it stays coherent across sessions spanning millions of tokens; in OpenAI's internal evaluations it has worked on single tasks for more than 24 hours.
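OpenAI has not published how compaction works internally. Purely as a conceptual illustration, the sketch below shows the general idea: once a conversation history exceeds a token budget, older turns are folded into a single summary while the most recent turns are kept verbatim. The `Message` type, the 4-characters-per-token heuristic, and the placeholder summarizer are all assumptions, not OpenAI's implementation.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str  # "system", "user", or "assistant"
    text: str


def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): about 4 characters per token.
    return max(1, len(text) // 4)


def compact(history: list[Message], budget: int, keep_recent: int = 4) -> list[Message]:
    """Fold older turns into one summary message once the history exceeds `budget` tokens."""
    total = sum(estimate_tokens(m.text) for m in history)
    if total <= budget or len(history) <= keep_recent:
        return history  # still fits, or nothing old enough to fold
    older, recent = history[:-keep_recent], history[-keep_recent:]
    # Placeholder summarizer (hypothetical): a real agent would ask the model to
    # summarize `older`, keeping decisions, open TODOs, and file paths.
    summary = "Summary of earlier turns: " + " | ".join(m.text[:40] for m in older)
    return [Message("system", summary)] + recent
```

In a real agent the summary would itself be generated by the model; the `budget` and `keep_recent` values here are arbitrary.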

GPT-5.1-Codex-Max is also the first Codex model trained to operate in Windows environments, closing a gap left by earlier models, which were tuned mainly for macOS and Linux. Additional training on Codex CLI tasks makes it a better collaborator in interactive terminal sessions.

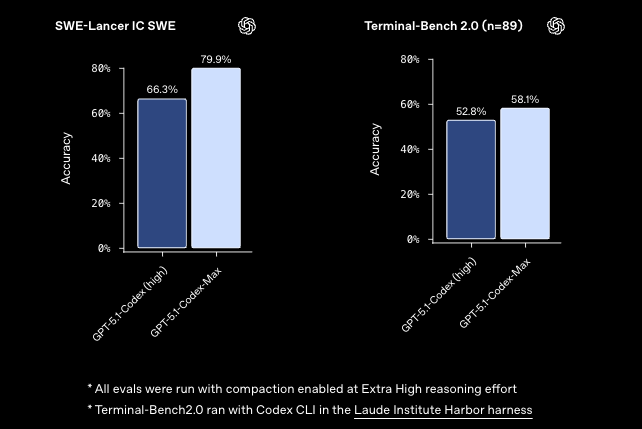

OpenAI's published benchmark results underscore these gains. For instance:

- On SWE-bench Verified (n=500), it achieves 77.9% resolution at 'xhigh' reasoning effort.

- On SWE-Lancer IC SWE, it scores 79.9%.

- On Terminal-Bench 2.0, it reaches 58.1%.

All three results exceed GPT-5.1-Codex. OpenAI also reports that on SWE-bench Verified at medium reasoning effort, GPT-5.1-Codex-Max outperforms GPT-5.1-Codex at the same effort while using about 30% fewer thinking tokens, so developers get faster iterations without sacrificing accuracy.

Accessing GPT-5.1-Codex-Max: Plans, Surfaces, and Initial Setup

You access GPT-5.1-Codex-Max primarily through OpenAI's Codex platform.

First, make sure you have a qualifying ChatGPT plan: Plus, Pro, Business, Edu, or Enterprise. At launch, the model became available immediately on these plans and replaced GPT-5.1-Codex as the default in Codex surfaces, including:

- Codex CLI (open-source local agent)

- IDE extensions (VS Code, JetBrains)

- Cloud-based Codex web interface

- Code review tools

To begin, update the Codex CLI if you already have it installed locally:

```bash
npm install -g @openai/codex@latest
```

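This installs the latest release, which supports GPT-5.1-Codex-Max. Next, start an interactive session and verify the active model with the `/status` slash command (or pick a different model with `/model`):

```bash
codex
```

You should see gpt-5.1-codex-max listed as the default. You can override it for a single session with `codex --model <model-name>`, or persistently by setting `model` in `~/.codex/config.toml`.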

For API users, OpenAI made the model available shortly after launch under the model ID gpt-5.1-codex-max. Pricing aligns with prior Codex models, while rate limits depend on your usage tier; Enterprise customers can contact sales for custom quotas.
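A minimal sketch of an API call with the official `openai` Python SDK, assuming a version with Responses API support and an `OPENAI_API_KEY` environment variable. Codex-tuned models have generally been served through the Responses API rather than Chat Completions, which is why the sketch uses `client.responses.create`. The set of accepted effort values is an assumption; check the model page for what your account supports.

```python
"""Sketch: call gpt-5.1-codex-max through the OpenAI Responses API."""

# Assumption: effort levels exposed for this model.
ALLOWED_EFFORTS = ("low", "medium", "high", "xhigh")


def build_request(prompt: str, effort: str = "medium") -> dict:
    """Build keyword arguments for client.responses.create()."""
    if effort not in ALLOWED_EFFORTS:
        raise ValueError(f"unsupported reasoning effort: {effort!r}")
    return {
        "model": "gpt-5.1-codex-max",
        "input": prompt,
        "reasoning": {"effort": effort},
    }


def ask_codex_max(prompt: str, effort: str = "medium") -> str:
    """Send one request and return the model's concatenated text output."""
    from openai import OpenAI  # imported lazily so build_request() works without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.responses.create(**build_request(prompt, effort))
    return response.output_text
```

Usage: `print(ask_codex_max("Write unit tests for utils/parse.py", effort="high"))`. Keeping request construction separate from the network call makes the payload easy to unit-test or to replay in an API testing tool.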

Apidog is useful when testing API calls to GPT-5.1-Codex-Max: you can import OpenAI's OpenAPI schema directly, generate mock servers for offline development, and automate assertion-based tests without writing extra scripts.

Mastering Codex CLI with GPT-5.1-Codex-Max: Step-by-Step Configuration

Codex CLI offers the most direct way to run GPT-5.1-Codex-Max in local, secure agentic workflows.

Install the CLI first if you haven't already:

```bash
npm install -g @openai/codex
```

Alternatively, install it with Homebrew or download a standalone binary from the openai/codex GitHub releases page.

Authenticate next. You can sign in with your ChatGPT account, which uses your plan's Codex access, or use an API key:

```bash
codex login
```

The CLI stores your credentials locally. Now start a session from your project directory:

```bash
cd my-large-codebase
codex
```

The CLI uses GPT-5.1-Codex-Max by default, so you can immediately issue natural-language requests such as:

Refactor the entire authentication module to use OAuth 2.1 with refresh token rotation, update all dependencies, and add comprehensive tests.

The model explores the repository, proposes changes as diffs, runs the tests, and iterates on fixes until they pass. Because compaction lets it carry work across multiple context windows, it can handle codebases far larger than a single context window without losing track of the task.
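Before pointing the agent at a large repository, a rough size estimate helps you decide whether a task will span several context windows. The sketch below sums characters in common source files and divides by 4, a rough chars-per-token heuristic (assumption, not the model's tokenizer); the suffix and skip lists are illustrative choices.

```python
from pathlib import Path

CHARS_PER_TOKEN = 4  # rough heuristic (assumption), not an exact tokenizer count
SOURCE_SUFFIXES = {".py", ".ts", ".tsx", ".js", ".go", ".rs", ".java", ".md"}
SKIP_DIRS = {".git", "node_modules", "dist", "build", ".venv"}


def estimate_repo_tokens(root: str) -> int:
    """Roughly estimate how many tokens the repository's source files contain."""
    root_path = Path(root)
    total_chars = 0
    for path in root_path.rglob("*"):
        # Only check directories below root, so a root named "build" still works.
        rel_parts = path.relative_to(root_path).parts
        if any(part in SKIP_DIRS for part in rel_parts):
            continue
        if path.is_file() and path.suffix in SOURCE_SUFFIXES:
            total_chars += len(path.read_text(encoding="utf-8", errors="ignore"))
    return total_chars // CHARS_PER_TOKEN
```

Usage: `print(estimate_repo_tokens("my-large-codebase"))`. The figure is only an order-of-magnitude guide; exact counts depend on the tokenizer.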

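For complex refactors, raise the reasoning effort to 'xhigh', either for a single session or persistently with `model_reasoning_effort = "xhigh"` in `~/.codex/config.toml`:

```bash
codex -c 'model_reasoning_effort="xhigh"'
```

This gives the model more thinking time, which tends to improve results on long-horizon tasks such as full-stack feature implementation or vulnerability remediation, at the cost of higher latency.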

Prompt Engineering Strategies for GPT-5.1-Codex-Max

Effective use depends on precise prompts. Structure inputs hierarchically: the model responds well to explicit goals, constraints, and step-by-step directives.

For example, open a session with a structured task brief like this one:

Generate a single self-contained browser app that renders an interactive CartPole RL sandbox with canvas graphics, a tiny policy-gradient controller, metrics, and an SVG network visualizer.

Features

- Must be able to actually train a policy to make model better at cart pole

- Visualizer for the activations/weights when the model is training or at inference

- Steps in the episode, rewards this episode

- Last survival time and best survival time in steps
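The CartPole brief follows a goal-then-features layout. A small helper can keep that shape consistent across tasks; this is a sketch, and the optional "Constraints" section is an assumption added for illustration rather than part of the original brief.

```python
from typing import Optional


def build_prompt(goal: str, features: list[str], constraints: Optional[list[str]] = None) -> str:
    """Assemble a goal / features / constraints task brief as plain text."""
    lines = [goal.strip(), "", "Features"]
    lines += [f"- {feature}" for feature in features]
    if constraints:  # optional section, omitted when empty
        lines += ["", "Constraints"]
        lines += [f"- {constraint}" for constraint in constraints]
    return "\n".join(lines)
```

Usage: `build_prompt("Generate a single self-contained browser app ...", ["Train a policy", "Show metrics"], ["No external dependencies"])`.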

From there, chain follow-up requests conversationally. The agent can also use built-in tools: it reads and edits files, runs git commands and test runners, and calls external APIs when your sandbox and approval settings permit.

Integrating GPT-5.1-Codex-Max into IDEs and CI/CD Pipelines

Beyond the CLI, you can use GPT-5.1-Codex-Max in VS Code through the official Codex extension.

Install it from the marketplace, then select the model in settings. Features include:

- Inline suggestions with full-project awareness

- Autonomous PR generation

- Deep debugging sessions that trace issues across files

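In CI/CD, you can script the Codex CLI's non-interactive exec mode for automated code reviews. The step below is a sketch: it assumes an earlier checkout step and an OPENAI_API_KEY repository secret, and API-key handling in exec mode can vary between CLI versions.

```yaml
# Example GitHub Actions step (sketch; run after actions/checkout)
- name: Codex Review
  env:
    OPENAI_API_KEY: ${{ secrets.OPENAI_API_KEY }}
  run: |
    npx -y @openai/codex exec --model gpt-5.1-codex-max -c 'model_reasoning_effort="xhigh"' \
      "Review the changes in pull request ${{ github.event.pull_request.number }} and list bugs, risky changes, and missing tests."
```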

Running this on every pull request flags issues early and helps enforce standards at scale.

Testing OpenAI API Interactions with Apidog: Best Practices

As you scale GPT-5.1-Codex-Max usage, robust API testing becomes essential. Apidog excels here by providing a unified environment for designing requests, validating responses, and automating regression suites.

Begin by importing OpenAI's API spec into Apidog:

- Create a new project.

- Import from URL: https://raw.githubusercontent.com/openai/openai-openapi/master/openapi.yaml

- Update endpoints to use gpt-5.1-codex-max as the model parameter.

Next, define environments for different API keys (dev, staging, production). Apidog's visual editor lets you craft complex requests with tool calls, then run pre- and post-processor scripts to make assertions.
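Apidog's own pre- and post-processor scripts are typically written in JavaScript; the sketch below expresses the same kind of checks in Python for illustration. It assumes the JSON shape of a Responses API result (`status`, `model`, and an `output` list whose `message` items hold `output_text` parts). The prefix match on `model` allows for dated snapshot names.

```python
def extract_output_text(response: dict) -> str:
    """Concatenate all output_text parts from a Responses API JSON body."""
    parts = []
    for item in response.get("output", []):
        if item.get("type") != "message":
            continue  # skip reasoning items, tool calls, etc.
        for content in item.get("content", []):
            if content.get("type") == "output_text":
                parts.append(content.get("text", ""))
    return "".join(parts)


def check_response(response: dict, expected_model_prefix: str = "gpt-5.1-codex-max") -> list[str]:
    """Return a list of failed checks (an empty list means every check passed)."""
    failures = []
    if response.get("status") != "completed":
        failures.append(f"status is {response.get('status')!r}")
    if not str(response.get("model", "")).startswith(expected_model_prefix):
        failures.append("unexpected model")
    if not extract_output_text(response).strip():
        failures.append("empty output text")
    return failures
```

Returning a list of failures instead of raising on the first one makes a regression report show every broken expectation at once.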

For instance, test long-context handling:

- Send a prompt exceeding 100k tokens.

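- Assert that the response stays coherent (for example, that it recalls details from early in the prompt) and, where your harness exposes it, whether compaction was triggered.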
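One common way to test long-context coherence is a "needle in a haystack" prompt: bury a single fact in a large amount of filler, then ask for it at the end. The sketch below builds such a prompt; the target size relies on a rough 4-characters-per-token heuristic (assumption), and the filler words and question are illustrative.

```python
import random

CHARS_PER_TOKEN = 4  # rough heuristic (assumption)


def build_long_context_prompt(target_tokens: int, needle: str, seed: int = 0) -> str:
    """Pad a prompt to roughly `target_tokens` and hide one fact (the needle) in the middle."""
    rng = random.Random(seed)  # seeded so every test run sends the same prompt
    words = ["alpha", "beta", "gamma", "delta", "epsilon"]
    filler, size = [], 0
    target_chars = target_tokens * CHARS_PER_TOKEN
    while size < target_chars:
        word = rng.choice(words)
        filler.append(word)
        size += len(word) + 1  # word plus the joining space
    mid = len(filler) // 2
    body = " ".join(filler[:mid] + [needle] + filler[mid:])
    return body + "\n\nQuestion: what is the secret value mentioned above?"
```

Usage: send `build_long_context_prompt(100_000, "The secret value is 4417.")` and assert that the reply contains "4417".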

Apidog automatically generates mock servers, allowing frontend teams to prototype against GPT-5.1-Codex-Max endpoints before full deployment. Moreover, its built-in JMeter-like automation runs thousands of scenarios, catching token overflow or reasoning drift issues.

Advanced Use Cases: Long-Horizon Tasks and Agentic Workflows

GPT-5.1-Codex-Max truly shines in scenarios requiring sustained reasoning.

One common application involves project-scale refactors. You point the model at a monorepo and instruct:

Migrate the entire codebase from React 17 to React 19, implement concurrent mode, and optimize bundle size by 30%.

The agent works autonomously, creating branches, running builds, fixing failures, and submitting PRs, potentially finishing in hours work that would take a developer days.

Another strength lies in cybersecurity assistance (defensive only). It scans repositories for vulnerabilities, proposes patches, and verifies fixes while adhering to OpenAI's safeguards.

In research-oriented coding, you can connect it to external tools through function calling, where permitted. For example, integrate databases or cloud services to build end-to-end data pipelines.
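A minimal sketch of the function-calling loop, assuming the model accepts custom function tools through the Responses API (you would pass `tools=[QUERY_DB_TOOL]` to `client.responses.create`). The `query_database` tool, its schema, and its stub body are hypothetical; the `function_call` and `function_call_output` item fields follow the Responses API format.

```python
import json

# Hypothetical tool definition; the name, description, and schema are illustrative.
QUERY_DB_TOOL = {
    "type": "function",
    "name": "query_database",
    "description": "Run a read-only SQL query against the analytics database.",
    "parameters": {
        "type": "object",
        "properties": {"sql": {"type": "string", "description": "A single SELECT statement."}},
        "required": ["sql"],
        "additionalProperties": False,
    },
}


def query_database(sql: str) -> list[dict]:
    """Stub: a real pipeline would call your database driver here."""
    if not sql.lstrip().lower().startswith("select"):
        raise ValueError("only SELECT statements are allowed")
    return [{"rows": 0}]


HANDLERS = {"query_database": query_database}


def dispatch(tool_call: dict) -> dict:
    """Execute one function_call item and build the function_call_output item to send back."""
    handler = HANDLERS[tool_call["name"]]
    args = json.loads(tool_call["arguments"])  # arguments arrive as a JSON string
    result = handler(**args)
    return {
        "type": "function_call_output",
        "call_id": tool_call["call_id"],
        "output": json.dumps(result),
    }
```

Restricting the stub to SELECT statements is one simple way to keep the agent's database access read-only.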

Performance Optimization and Cost Management

Balance capability and efficiency by choosing the appropriate reasoning effort:

- medium for quick reviews

- high for standard tasks

- xhigh for frontier challenges
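The guidance above can be encoded as a small lookup so that scripts pick an effort level consistently. The task labels are hypothetical, and capping latency-sensitive work at "high" is an illustrative policy choice, not an OpenAI recommendation.

```python
# Mapping from the guidance above; the task labels are hypothetical.
EFFORT_BY_TASK = {
    "quick_review": "medium",
    "standard": "high",
    "frontier": "xhigh",
}


def pick_effort(task_kind: str, latency_sensitive: bool = False) -> str:
    """Choose a reasoning effort, falling back to 'medium' for unknown task kinds."""
    effort = EFFORT_BY_TASK.get(task_kind, "medium")
    # Illustrative policy (assumption): cap interactive work at 'high' to keep replies fast.
    if latency_sensitive and effort == "xhigh":
        return "high"
    return effort
```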

Monitor usage in the OpenAI dashboard. Compaction can also reduce overall token usage in long sessions, by an estimated 20-40% depending on the workload, which lowers costs.
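To turn dashboard or API usage numbers into a cost estimate, multiply input and output tokens by their per-million prices. In the Responses API, `usage.output_tokens` already includes reasoning tokens, which are billed as output. The prices passed in are placeholders (check OpenAI's pricing page), and cached-input discounts are ignored in this sketch.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    input_tokens: int
    output_tokens: int  # Responses API output_tokens already include reasoning tokens


def estimate_cost_usd(usage: Usage, input_price_per_m: float, output_price_per_m: float) -> float:
    """Cost = tokens / 1,000,000 * price per million, summed over input and output."""
    input_cost = usage.input_tokens / 1_000_000 * input_price_per_m
    output_cost = usage.output_tokens / 1_000_000 * output_price_per_m
    return input_cost + output_cost
```

Usage with placeholder prices: `estimate_cost_usd(Usage(2_000_000, 500_000), 1.0, 8.0)` evaluates to 6.0.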

When prototyping expensive calls, use Apidog's mocking to simulate GPT-5.1-Codex-Max responses based on previously recorded responses; this saves credits during development.

Limitations and Responsible Usage

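Despite its strengths, GPT-5.1-Codex-Max operates under OpenAI's cybersecurity safeguards: OpenAI reports that it does not reach the High cybersecurity capability level in its Preparedness Framework, and it is intended for defensive work, so avoid offensive security tasks. Hallucinations can also occur in highly novel domains, so always verify outputs.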

Respect rate limits, and keep a human in the loop (for example, reviewing diffs before merge) for production deployments.
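A standard way to respect rate limits is to retry with exponential backoff and jitter. The sketch below is generic: `RateLimited` is a hypothetical stand-in for your client's rate-limit error (with the OpenAI Python SDK you would catch `openai.RateLimitError`, and note the SDK already retries some errors itself via its `max_retries` setting). The injectable `sleep` makes the logic testable without waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical stand-in for your client's rate-limit error (HTTP 429)."""


def with_backoff(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `call` on RateLimited, doubling the delay after each failed attempt."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            delay = base_delay * (2 ** attempt)  # 1s, 2s, 4s, ... with the defaults
            sleep(delay + random.uniform(0, 0.1 * delay))  # add up to 10% jitter
    raise AssertionError("unreachable")
```

Jitter spreads retries from many concurrent workers so they do not all hit the API at the same moment.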

Conclusion: Elevate Your Development Workflow with GPT-5.1-Codex-Max

GPT-5.1-Codex-Max raises the bar for AI-assisted coding in 2025. With it, you can orchestrate multi-hour autonomous agents that refactor, debug, and build features at a scale earlier models could not sustain.

Start small: install Codex CLI, run a simple refactor, then scale to full projects. Pair it with Apidog for bulletproof API testing, and you create a development stack that outpaces traditional methods.

The future of software engineering arrives today – configure GPT-5.1-Codex-Max and experience the difference firsthand.