When we run software tests, we often wonder whether the results are truly accurate. This is where a test oracle comes in handy. Testing isn’t just about executing steps; it’s about knowing what should happen when those steps complete. Without a reliable way to determine pass or fail, even the most thorough test execution is just guessing.

The concept of a Test Oracle sounds academic, but it's one of the most practical ideas in software quality assurance. Knowing how to build and use test oracles will increase the reliability of your tests and the confidence of your releases, whether you're testing sophisticated algorithms, APIs, or user interfaces.

What Exactly is a Test Oracle?

A Test Oracle is a mechanism that determines whether a test has passed or failed by comparing the actual output of a system against expected behavior. Think of it as your referee—it watches the game and definitively calls “goal” or “no goal.” Without an oracle, you’re just kicking the ball around without knowing if you scored.

Every test needs an oracle, even if it’s implicit. When you manually test a login page and see “Welcome back!” after entering credentials, your brain serves as the oracle: you know that success looks like a welcome message, and not an error page. In automated testing, we must make these oracles explicit and reliable.

The Classic Oracle Problem

Consider testing a function that calculates shipping costs. You input destination, weight, and shipping method, and get back a price. How do you know that the price is correct?

// Example of an unclear oracle
function calculateShipping(weight, zone, method) {
  return 15.99; // Is this right? Who knows!
}

Your oracle could be:

- Another implementation of the same algorithm (a reference system)

- A table of pre-calculated expected values

- A business rule like “must be between $5 and $100”

- A mathematical model you trust

Without one, that 15.99 is just a number, not a verified result.
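As a minimal sketch of two of these options, the Python below checks a hypothetical `calculate_shipping` against a table of pre-calculated values and against a range rule. The rates, zones, and function names are illustrative assumptions, not taken from any real pricing system.

```python
from decimal import Decimal

# Hypothetical implementation under test: base fee plus a per-kg rate by zone.
RATES_PER_KG = {"domestic": Decimal("1.50"), "international": Decimal("4.00")}
BASE_FEE = Decimal("5.00")


def calculate_shipping(weight_kg: Decimal, zone: str) -> Decimal:
    return BASE_FEE + RATES_PER_KG[zone] * weight_kg


# Oracle A: expected values worked out by hand from the (assumed) pricing rules.
EXPECTED_PRICES = {
    (Decimal("2"), "domestic"): Decimal("8.00"),
    (Decimal("2"), "international"): Decimal("13.00"),
    (Decimal("10"), "domestic"): Decimal("20.00"),
}


def table_oracle(weight_kg: Decimal, zone: str, actual: Decimal) -> bool:
    return actual == EXPECTED_PRICES[(weight_kg, zone)]


# Oracle B: a business rule that applies to every input, not only tabulated ones.
def range_oracle(actual: Decimal) -> bool:
    return Decimal("5") <= actual <= Decimal("100")


for weight, zone in EXPECTED_PRICES:
    price = calculate_shipping(weight, zone)
    assert table_oracle(weight, zone, price), (weight, zone, price)
    assert range_oracle(price), price
```

The table oracle is precise but only covers the inputs someone calculated in advance; the range oracle covers everything but would accept a wrong price that happens to fall inside the bounds. Using both narrows the gap.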

Types of Test Oracles: Choose the Right Tool

Not all oracles work the same way. Selecting the right type for your situation is half the battle.

| Oracle Type | How It Works | Best Used For | Limitations |
|---|---|---|---|
| Specified Oracle | Compare output against documented requirements | API contracts, acceptance criteria | Requirements must be complete and accurate |
| Heuristic Oracle | Use rules of thumb and business logic | Performance thresholds, format validation | May miss subtle bugs, can be subjective |
| Reference Oracle | Compare against a trusted system or model | Data migration testing, algorithm validation | Requires a reliable reference that may not exist |
| Statistical Oracle | Check if results fall within expected ranges | Load testing, performance baselines | Needs historical data, may miss outliers |
| Human Oracle | Manual verification by domain expert | Exploratory testing, UX validation | Slow, expensive, inconsistent |
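The statistical row is the least intuitive of the five, so here is one way it might look in practice. This is a sketch under assumptions: the baseline latencies are made-up numbers, and the three-sigma rule is just one common choice of threshold.

```python
import statistics


def statistical_oracle(history_ms, observed_ms, max_sigma=3.0):
    """Pass when the observation is within max_sigma standard deviations of the historical mean."""
    mean = statistics.mean(history_ms)
    stdev = statistics.stdev(history_ms)  # needs at least two historical samples
    return abs(observed_ms - mean) <= max_sigma * stdev


# Hypothetical response times (ms) collected from earlier, known-good runs.
baseline = [118.0, 122.0, 120.0, 125.0, 115.0, 121.0, 119.0, 123.0]

assert statistical_oracle(baseline, 124.0)      # close to the historical mean
assert not statistical_oracle(baseline, 180.0)  # far outside the expected range
```

The oracle is only as good as its history: a baseline recorded on a slow day quietly widens the range of values that pass.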

Example: Testing an API with Multiple Oracles

Let’s examine a GET /api/users/{id} endpoint:

# Test case with multiple oracles
# `client`, `db`, and `validate_schema` are assumed to come from your test setup.
def test_get_user_by_id():
    response = client.get("/api/users/123")

    # Oracle 1: Specified - Status code must be 200
    assert response.status_code == 200

    # Oracle 2: Heuristic - Response time under 500ms
    # (assumes a requests-style client, where `elapsed` is a timedelta)
    assert response.elapsed.total_seconds() < 0.5

    # Oracle 3: Specified - Schema validation
    schema = {"id": int, "email": str, "name": str}
    assert validate_schema(response.json(), schema)

    # Oracle 4: Reference - Compare to database
    user_from_db = db.query("SELECT * FROM users WHERE id=123")
    assert response.json()["email"] == user_from_db.email

This layered approach catches different defect types. The status code oracle finds routing errors, the heuristic catches performance issues, schema validation detects format bugs, and the database oracle uncovers data corruption.
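The test above calls `validate_schema` without defining it. One possible minimal version is sketched below, purely as an illustration of what a schema oracle checks; a real project would more likely rely on a dedicated validation library such as `jsonschema`.

```python
def validate_schema(payload: dict, schema: dict) -> bool:
    """Return True when every field in schema is present with a value of the declared type."""
    for field, expected_type in schema.items():
        if field not in payload:
            return False
        value = payload[field]
        # bool is a subclass of int in Python, so reject it explicitly for int fields.
        if expected_type is int and isinstance(value, bool):
            return False
        if not isinstance(value, expected_type):
            return False
    return True


schema = {"id": int, "email": str, "name": str}

assert validate_schema({"id": 123, "email": "a@example.com", "name": "Ada"}, schema)
assert not validate_schema({"id": "123", "email": "a@example.com", "name": "Ada"}, schema)
assert not validate_schema({"id": 123, "email": "a@example.com"}, schema)
```

This version ignores extra fields and does not descend into nested objects, which is a deliberate simplification.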

When Should You Use a Test Oracle? Practical Scenarios

Knowing how to use a Test Oracle means recognizing when you need explicit verification versus when implicit checks suffice.

Use an explicit oracle when:

- The expected result isn’t obvious from the test context

- Business logic is complex and error-prone

- You’re testing calculations or transformations

- Regulatory compliance requires documented verification

- Testing across multiple systems that must stay in sync

Implicit oracles work for:

- Simple UI interactions (button click → page loads)

- Smoke tests where any response is better than no response

- Exploratory testing where human judgment is sufficient

How to Write a Test Oracle: A Step-by-Step Process

Creating a reliable Test Oracle follows a simple pattern:

Step 1: Identify What Needs Verification

Ask: What output proves this feature works? Is it a status code? A database record? A UI message? A calculation result?

Example: For a payment API, the oracle might check:

- HTTP 200 status

- Payment ID in response

- Transaction record created in database

- Email receipt sent

- Balance updated correctly

Step 2: Choose Oracle Type

Select based on what you can trust. If requirements are solid, use specified oracles. If you have a legacy system, use it as a reference oracle. For performance, use heuristic thresholds.
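When a legacy system serves as the reference oracle, the test can be as simple as feeding both implementations the same inputs and reporting any disagreement. The sketch below assumes a made-up discount rule; `legacy_discount` and `new_discount` are hypothetical stand-ins for the old and new code.

```python
import random


def legacy_discount(total_cents: int) -> int:
    """Stand-in for the trusted legacy implementation: 10% off orders of $100 or more."""
    if total_cents >= 10_000:
        return total_cents * 10 // 100
    return 0


def new_discount(total_cents: int) -> int:
    """Rewritten implementation under test."""
    return total_cents // 10 if total_cents >= 10_000 else 0


def find_mismatches(reference, candidate, inputs):
    """Return (input, reference output, candidate output) for every disagreement."""
    return [(x, reference(x), candidate(x)) for x in inputs if reference(x) != candidate(x)]


rng = random.Random(42)  # fixed seed keeps the sample reproducible
samples = [rng.randrange(0, 50_000) for _ in range(1_000)] + [0, 9_999, 10_000]

mismatches = find_mismatches(legacy_discount, new_discount, samples)
assert mismatches == [], mismatches[:5]
```

Boundary values are added by hand because random sampling alone is unlikely to land exactly on the threshold. Keep in mind that a reference oracle also reproduces any bugs the legacy system has.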

Step 3: Make It Deterministic

A good oracle never waffles. Avoid vague assertions like response.should_be_fast(). Instead, assert a concrete threshold in explicit units: assert response_time_ms < 200.
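Determinism also depends on removing hidden inputs such as the system clock and on comparing floating-point values with an explicit tolerance. The following sketch assumes a hypothetical `build_receipt` function whose timestamp is passed in rather than read internally.

```python
import math
from datetime import datetime, timezone


def build_receipt(amount: float, tax_rate: float, now: datetime) -> dict:
    """Hypothetical function under test; the clock is injected instead of read inside."""
    return {"total": amount * (1 + tax_rate), "issued_at": now.isoformat()}


FIXED_NOW = datetime(2024, 1, 15, 12, 0, tzinfo=timezone.utc)
receipt = build_receipt(19.99, 0.0825, FIXED_NOW)

# Exact comparison is safe here because the timestamp was supplied by the test.
assert receipt["issued_at"] == "2024-01-15T12:00:00+00:00"

# Floating-point totals get an explicit tolerance instead of ==.
assert math.isclose(receipt["total"], 21.639175, abs_tol=1e-9)
```

If the function called `datetime.now()` itself, the first assertion could never be written as an exact match, and the oracle would have to fall back on something looser.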

Step 4: Layer Multiple Oracles

Critical paths deserve multiple verification methods. A payment might pass a status code check but fail a database integrity check.
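One way to layer oracles is to write each as a small function and run them all, collecting every failure rather than stopping at the first. The sketch below uses an invented result dictionary for a payment; the field names are assumptions chosen for illustration.

```python
from typing import Callable, List, Optional

# Each oracle inspects the observed result and returns an error message, or None on success.
Oracle = Callable[[dict], Optional[str]]


def status_oracle(result: dict) -> Optional[str]:
    if result["status"] != 200:
        return f"unexpected status {result['status']}"
    return None


def payment_id_oracle(result: dict) -> Optional[str]:
    if not result["body"].get("paymentId"):
        return "missing paymentId"
    return None


def ledger_oracle(result: dict) -> Optional[str]:
    if result["ledger_amount"] != result["requested_amount"]:
        return "ledger amount differs from request"
    return None


def run_oracles(result: dict, oracles: List[Oracle]) -> List[str]:
    """Run every oracle and return all failure messages."""
    failures = []
    for oracle in oracles:
        message = oracle(result)
        if message is not None:
            failures.append(message)
    return failures


# A payment that looks fine at the HTTP level but never reached the ledger.
observed = {
    "status": 200,
    "body": {"paymentId": "pay_1"},
    "requested_amount": 4999,
    "ledger_amount": 0,
}
failures = run_oracles(observed, [status_oracle, payment_id_oracle, ledger_oracle])
print(failures)  # ['ledger amount differs from request']
```

Reporting all failures at once makes it easier to tell whether a defect is isolated to one layer or affects several.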

Step 5: Automate and Maintain

Oracles should live in your test code, not in testers’ heads. Version control them alongside your tests and update them when requirements change.

Code Example: Complete Test with Oracle

Here’s a robust API test with multiple oracles:

// `api` and `db` are assumed to be helpers provided by your test setup.
describe('Order API', () => {
  it('creates order with valid items', async () => {
    // Arrange (Given)
    const orderData = {
      items: [{ productId: 123, quantity: 2 }],
      shippingAddress: { city: 'New York', zip: '10001' }
    };
    // Record stock before the order so the side effect can be verified later
    const initialStock = await db.products.getStock(123);

    // Act (When)
    const response = await api.post('/api/orders', orderData);
    const order = response.data;

    // Assert (Then) - Multiple oracles

    // Oracle 1: Specified - Status and structure
    expect(response.status).toBe(201);
    expect(order).toHaveProperty('orderId');

    // Oracle 2: Heuristic - Reasonable total
    expect(order.totalAmount).toBeGreaterThan(0);
    expect(order.totalAmount).toBeLessThan(10000);

    // Oracle 3: Reference - Database consistency
    const dbOrder = await db.orders.findById(order.orderId);
    expect(dbOrder.status).toBe('confirmed');

    // Oracle 4: Side effect - Inventory reduced
    const inventory = await db.products.getStock(123);
    expect(inventory).toBe(initialStock - 2);
  });
});

This test will catch bugs that a single oracle would miss, such as an implausible total, an order that never reached the database, or inventory that was not decremented.

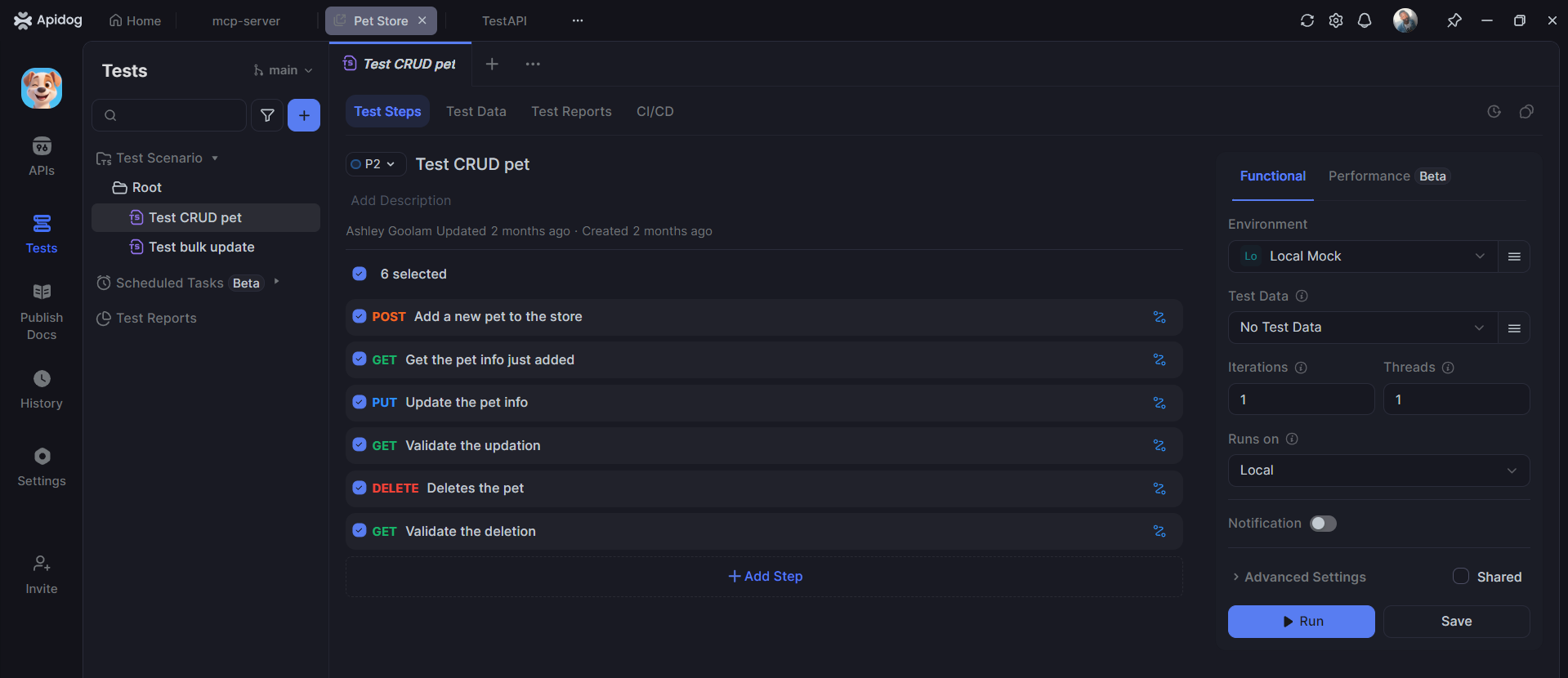

How Apidog Helps with Automating API Test Oracles

When testing APIs manually, creating oracles is tedious. You must craft expected responses, validate schemas, and check status codes for each and every endpoint. Apidog automates this entire process, turning your API specification into a suite of executable test oracles.

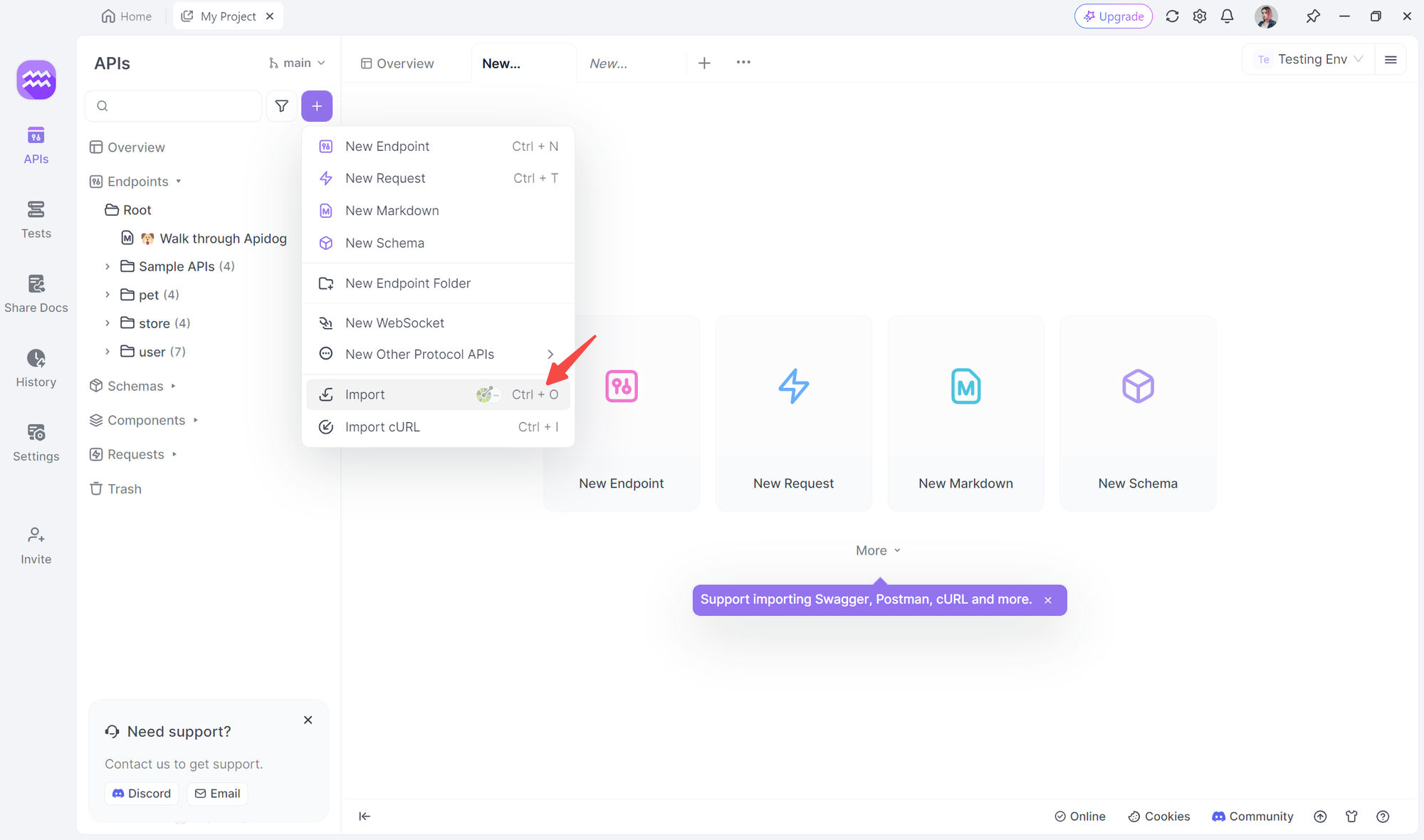

Automatic Test Case Generation from API Spec

Import your OpenAPI specification into Apidog, and it instantly creates intelligent test oracles for each endpoint:

For GET /api/users/{id}, Apidog generates oracles that verify:

- Status code is 200 for valid IDs, 404 for invalid IDs

- Response schema matches the User model

- Response time is under 500ms (configurable)

- Required fields (id, email, name) are present and non-null

- Data types are correct (id is integer, email is string)

For POST /api/users, Apidog creates oracles for:

- Successful creation returns 201 with Location header

- Invalid email format returns 400 with specific error message

- Missing required fields trigger validation errors

- Duplicate email returns 409 conflict status

- Response body contains a generated userId along with fields matching the request

This automation means your API tests are derived directly from your API contract. When the spec changes, Apidog flags affected tests and suggests updates, preventing test drift.

Frequently Asked Questions

Q1: What’s the difference between a test oracle and a test case?

Ans: A test case describes the steps to execute. A Test Oracle is the mechanism that decides if the result of those steps is correct. Think of the test case as the recipe and the oracle as the taste test that judges whether the dish turned out right.

Q2: Can Apidog generate test oracles automatically?

Ans: Yes. Apidog analyzes your API specification and automatically creates oracles for status codes, schemas, data types, required fields, and performance thresholds. These oracles are derived directly from your API contract, and when the spec changes, Apidog flags the affected tests and suggests updates.

Q3: How do I know if my test oracle is good enough?

Ans: A good oracle is deterministic (always gives the same answer), accurate (matches business rules), and efficient (doesn’t slow tests). If your tests sometimes pass and sometimes fail for the same code, your oracle is too vague. If it misses real bugs, it’s too weak.

Q4: Should I use multiple test oracles for one test?

Ans: Absolutely, especially for critical paths. A payment API should verify status code, transaction record, email receipt, and account balance. Each oracle catches a different class of bugs. Just balance thoroughness with test execution speed.

Q5: Is a test oracle necessary for unit tests?

Ans: Yes, but they’re often simpler. A unit test oracle might just compare a function’s return value to an expected constant. The principle is the same: you need a reliable way to determine pass/fail, even if it’s just assertEquals(expected, actual).
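As a small illustration, the unit test below uses Python's built-in `unittest`, where the `assertEqual` call is the entire oracle. The `slugify` function is a hypothetical example, not part of any library.

```python
import unittest


def slugify(title: str) -> str:
    """Hypothetical function under test: lowercase the title and join words with hyphens."""
    return "-".join(title.lower().split())


class SlugifyTest(unittest.TestCase):
    def test_spaces_become_hyphens(self):
        expected = "test-oracles-explained"
        actual = slugify("Test  Oracles Explained")
        self.assertEqual(expected, actual)  # the oracle: a single expected constant
```

Saved in a file, this can be run with `python -m unittest`.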

Conclusion

Understanding test oracles is what separates amateur testing from professional quality assurance. It’s not enough to run tests; you must know, with confidence, whether the results are correct. Whether you’re using specified requirements, trusted references, or heuristic rules, a well-designed oracle is your safety net against false confidence.

For API testing, the challenge of creating and maintaining oracles is daunting. Manual approaches can’t keep pace with API evolution. This is where tools like Apidog become essential. By automatically generating oracles from your API specification, Apidog ensures your tests stay aligned with your contract, catch real defects, and free your team to focus on strategic quality decisions rather than repetitive validation.

Start treating your test oracles as first-class artifacts. Document them, version them, and automate them. Your future self—and your users—will thank you when production releases go smoothly because your tests actually knew what “correct” looked like.