If you’ve ever handed a test case to a colleague only to hear, "I don't understand what this means", then you already know why test case specification matters. We’ve all been there, staring at a test step that made perfect sense when we wrote it, but now reads like a riddle. Clear specifications separate effective testing from wasted effort, yet many teams treat them as an afterthought.

This guide will show you how to write test case specification documents that are precise, actionable, and valuable to everyone who touches them. Whether you're a tester trying to improve your craft, a lead aiming to standardize your team's output, or a developer who wants to understand what’s being tested, you’ll find practical advice that works in the real world.

What Exactly Is Test Case Specification?

Test case specification is the formal documentation of a single test scenario, including its purpose, inputs, execution steps, expected results, and pass/fail criteria. Think of it as a recipe that anyone on your team can follow—whether they wrote the feature or just joined the project yesterday.

A well-crafted specification answers five questions before execution begins:

- What specific behavior are we testing?

- What conditions must be in place before we start?

- What precise actions do we perform?

- How do we know if the result is correct?

- What proves the test passed or failed?

Without clear answers, you’re not testing—you’re exploring. Exploration has its place, but repeatable, reliable quality assurance requires rigorous test case specification.

Why Test Case Specification Deserves Your Attention

The effort you invest in test case specification pays dividends across the entire development lifecycle. Here’s what you gain:

- Clarity Under Pressure: When deadlines loom and tensions rise, ambiguous tests get executed poorly or skipped entirely. Clear specifications survive stressful release cycles because they remove guesswork.

- Onboarding Acceleration: New team members can contribute meaningfully on day one when test cases spell out exactly what to do. You reduce pairing time and empower people to work independently faster.

- Defect Reproduction: When a test fails in CI at 2 AM, detailed specifications help developers reproduce the issue without waking you up. Steps, data, and environment details matter.

- Audit and Compliance: In regulated industries, test case specification isn’t just helpful—it’s mandatory. Auditors want proof that you tested what you claimed to test, and vague test cases don’t hold up.

- Automation Readiness: Manual tests with clear specifications are far easier to automate later. The logic, data, and expected results are already defined; you’re just translating them into code.

Core Components of Every Test Case Specification

Before we talk about best practices, let’s align on the non-negotiable elements. A complete test case specification includes:

| Component | Purpose | What to Include |
|---|---|---|
| Test Case ID | Unique identification | A consistent naming convention (e.g., TC_Login_001) |
| Test Scenario | High-level description | One-line summary of what’s being tested |
| Requirement Traceability | Link to source | Requirement ID, user story, or design doc reference |
| Preconditions | Setup requirements | Data state, user permissions, environment config |
| Test Steps | Action sequence | Numbered, atomic steps: action + input + target |
| Test Data | Input values | Specific usernames, amounts, file names; never “valid data” |
| Expected Result | Pass criteria | Precise output, UI state, database change, or error message |
| Postconditions | Cleanup needs | How to restore the system to its original state |
| Pass/Fail Criteria | Judgment standard | Measurable condition that removes ambiguity |

Skimping on any component invites confusion. For example, writing “Enter valid credentials” as a step forces the tester to hunt for what “valid” means. Specify the exact username and password instead.
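As a rough illustration, the components above can be captured as a structured record so that an empty component is easy to spot. This is a minimal sketch, not a prescribed format: the class name `CaseSpec`, the requirement ID `REQ-AUTH-01`, and all sample values are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class CaseSpec:
    """One test case specification; fields mirror the component table."""
    case_id: str
    scenario: str
    requirement_ids: list[str]
    preconditions: list[str]
    steps: list[str]
    test_data: dict[str, str]
    expected_result: str
    postconditions: list[str]
    pass_fail_criteria: str

    def missing_components(self) -> list[str]:
        """Return the names of components that were left empty."""
        return [name for name, value in vars(self).items() if not value]


# Hypothetical example values, chosen only for illustration.
login_spec = CaseSpec(
    case_id="TC_Login_001",
    scenario="Registered user logs in with correct credentials",
    requirement_ids=["REQ-AUTH-01"],
    preconditions=["Account qa_user_01 exists and is active"],
    steps=[
        "Open the login page",
        "Enter the username from test data",
        "Enter the password from test data",
        "Click the Login button",
    ],
    test_data={"username": "qa_user_01", "password": "Example#Pass1"},
    expected_result="Dashboard loads and shows 'Welcome, qa_user_01'",
    postconditions=[],  # left empty on purpose to show the check
    pass_fail_criteria="Pass if the dashboard loads with the welcome text",
)

print(login_spec.missing_components())  # ['postconditions']
```

A check like this could run during review so that a spec with a blank component is caught before anyone tries to execute it.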

Best Practices for Writing Test Case Specifications That Work

Writing a good test case specification is a skill, not a talent. Follow these practices to improve immediately:

1. Write for a Stranger

Imagine the person executing your test knows nothing about the feature. Use simple language, avoid jargon, and define any terms that aren’t universally understood. If you must use an acronym, spell it out first.

2. Make Steps Atomic

Each test step should contain one action. Instead of “Enter username and password, then click login,” break it into three steps. This makes debugging easier when a failure occurs—you know exactly which action failed.
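One way to catch compound steps during review is a simple text heuristic. The sketch below flags steps that contain "and", "then", a comma, or a semicolon. The marker list is an assumption and will produce false positives, so treat the output as a prompt for a human reviewer rather than a verdict.

```python
import re

# Heuristic markers that often signal more than one action in a step.
COMPOUND_MARKERS = re.compile(r"\b(and|then)\b|,|;", re.IGNORECASE)


def find_compound_steps(steps):
    """Return (step_number, text) pairs for steps that likely bundle actions."""
    return [
        (number, text)
        for number, text in enumerate(steps, start=1)
        if COMPOUND_MARKERS.search(text)
    ]


compound = [
    "Open the login page",
    "Enter username and password, then click login",
]
atomic = [
    "Open the login page",
    "Enter qa_user_01 in the Username field",
    "Enter the password in the Password field",
    "Click Login",
]

print(find_compound_steps(compound))
# [(2, 'Enter username and password, then click login')]
print(find_compound_steps(atomic))  # []
```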

3. Specify, Don’t Imply

Never assume the tester knows what you mean. “Verify the report looks correct” is subjective. “Verify the report displays transaction ID, date, and amount in sequence” is objective and verifiable.
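An objective expected result has the useful property that it can be written as a check with a yes-or-no answer. As a small sketch, assuming the report header is available as a list of column names (the function name and data shape are hypothetical):

```python
# Column order taken from the objective wording of the expected result.
EXPECTED_COLUMNS = ["transaction ID", "date", "amount"]


def report_columns_match(header_row):
    """Return True only if the header lists the expected columns in order."""
    normalized = [cell.strip() for cell in header_row]
    return normalized == EXPECTED_COLUMNS


print(report_columns_match(["transaction ID", "date", "amount"]))  # True
print(report_columns_match(["date", "transaction ID", "amount"]))  # False
```

There is no comparable check for "looks correct", which is a quick test of whether an expected result is specific enough.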

4. Cover Negative and Boundary Cases

Most testers naturally write positive-path tests. A strong test case specification intentionally includes invalid inputs, missing data, and boundary values. Think about what happens when users do everything wrong.
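For numeric fields, boundary values can be derived mechanically from the allowed range. The sketch below assumes an inclusive integer range and uses the common "just outside, on, and just inside each limit" selection; the function names are illustrative.

```python
def boundary_values(minimum: int, maximum: int) -> list[int]:
    """Return values just outside, on, and just inside each limit."""
    if minimum > maximum:
        raise ValueError("minimum must not exceed maximum")
    candidates = [
        minimum - 1, minimum, minimum + 1,
        maximum - 1, maximum, maximum + 1,
    ]
    # A narrow range produces duplicates, so de-duplicate and sort.
    return sorted(set(candidates))


def label_cases(minimum: int, maximum: int) -> list[tuple[int, bool]]:
    """Pair each boundary value with whether it should be accepted."""
    return [
        (value, minimum <= value <= maximum)
        for value in boundary_values(minimum, maximum)
    ]


print(boundary_values(1, 100))  # [0, 1, 2, 99, 100, 101]
print(label_cases(1, 100)[0])   # (0, False)
print(label_cases(1, 100)[-1])  # (101, False)
```

Each pair maps naturally onto one test case: the value goes into Test Data, and the accepted flag decides whether the Expected Result is a success or a specific error message.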

5. Version Control Your Specifications

Test cases evolve with your product. Use the same version control system for specifications that you use for code. Track changes, review updates, and maintain a history. When a test fails, you want to know if the spec changed recently.

6. Standardize Across the Team

Create a test case specification template and enforce its use. Standardization reduces cognitive load and makes reviews faster. Everyone knows where to find preconditions, expected results, and traceability links.
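Enforcement can be as light as a script that checks each spec file for the template's section labels. The sketch below assumes specs are plain text with one `Label: value` line per section; that layout and the function name are assumptions, while the labels come from the component table earlier in this guide.

```python
REQUIRED_SECTIONS = [
    "Test Case ID",
    "Test Scenario",
    "Requirement Traceability",
    "Preconditions",
    "Test Steps",
    "Test Data",
    "Expected Result",
    "Postconditions",
    "Pass/Fail Criteria",
]


def missing_sections(spec_text: str) -> list[str]:
    """Return required section labels that never appear as 'Label:' lines."""
    present = {
        line.split(":", 1)[0].strip()
        for line in spec_text.splitlines()
        if ":" in line
    }
    return [label for label in REQUIRED_SECTIONS if label not in present]


draft = """Test Case ID: TC_Login_001
Test Scenario: Registered user logs in
Preconditions: Account qa_user_01 exists
Test Steps:
1. Open the login page
Expected Result: Dashboard loads
"""

print(missing_sections(draft))
# ['Requirement Traceability', 'Test Data', 'Postconditions', 'Pass/Fail Criteria']
```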

Common Pitfalls That Weaken Test Case Specification

Even experienced testers fall into these traps. Watch for them in your own work:

- Vague Expected Results: “System should handle the request gracefully” is not a result. What does “gracefully” look like? A message? A redirect? A logged event?

- Over-Specification: Including implementation details like “Click the blue button with ID #btn-123” makes tests brittle. When the UI changes, your spec is obsolete. Focus on user actions, not technical selectors.

- Missing Cleanup: If your test creates data, specify how to delete it. Leftover data poisons subsequent tests and creates cascading failures.

- No Traceability Link: When a requirement changes, how do you know which tests to update? Without traceability, you don’t. Link every test to its source requirement.

- Assuming Tester Knowledge: “Test the checkout flow as usual” assumes everyone defines “usual” the same way. They don’t. Spell it out.
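Several of these pitfalls show up as recognizable wording, so a small phrase check can flag them before review. This is only a sketch: the phrase list is drawn from the examples in this guide and is an assumption, not an exhaustive catalogue.

```python
# Phrases that usually hide an unstated assumption (illustrative list).
VAGUE_PHRASES = [
    "gracefully",
    "as usual",
    "looks correct",
    "valid data",
    "valid credentials",
]


def find_vague_phrases(text: str) -> list[str]:
    """Return the vague phrases found in a step or expected result."""
    lowered = text.lower()
    return [phrase for phrase in VAGUE_PHRASES if phrase in lowered]


print(find_vague_phrases("System should handle the request gracefully"))
# ['gracefully']
print(find_vague_phrases(
    "Verify the report displays transaction ID, date, and amount in sequence"
))
# []
```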

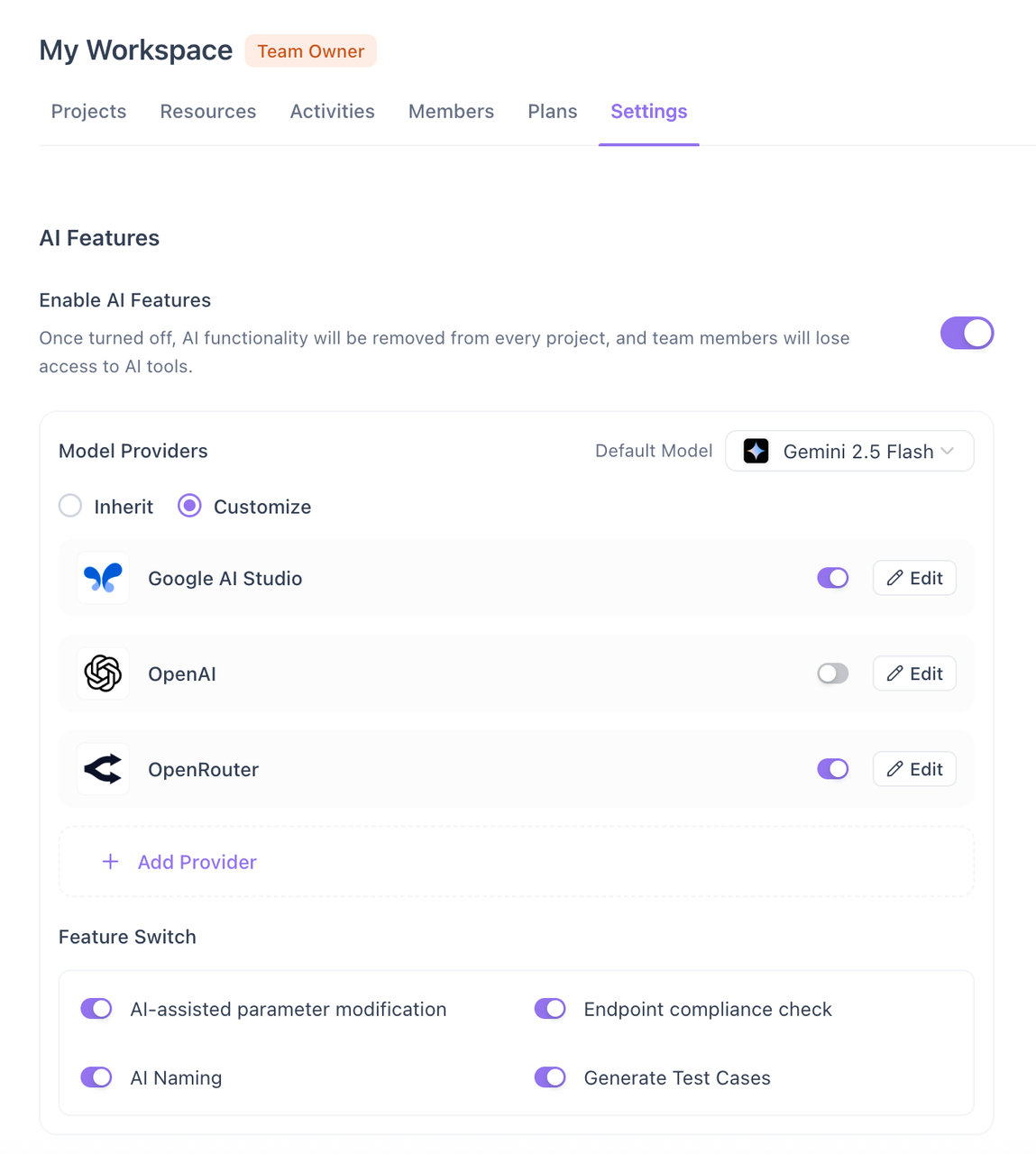

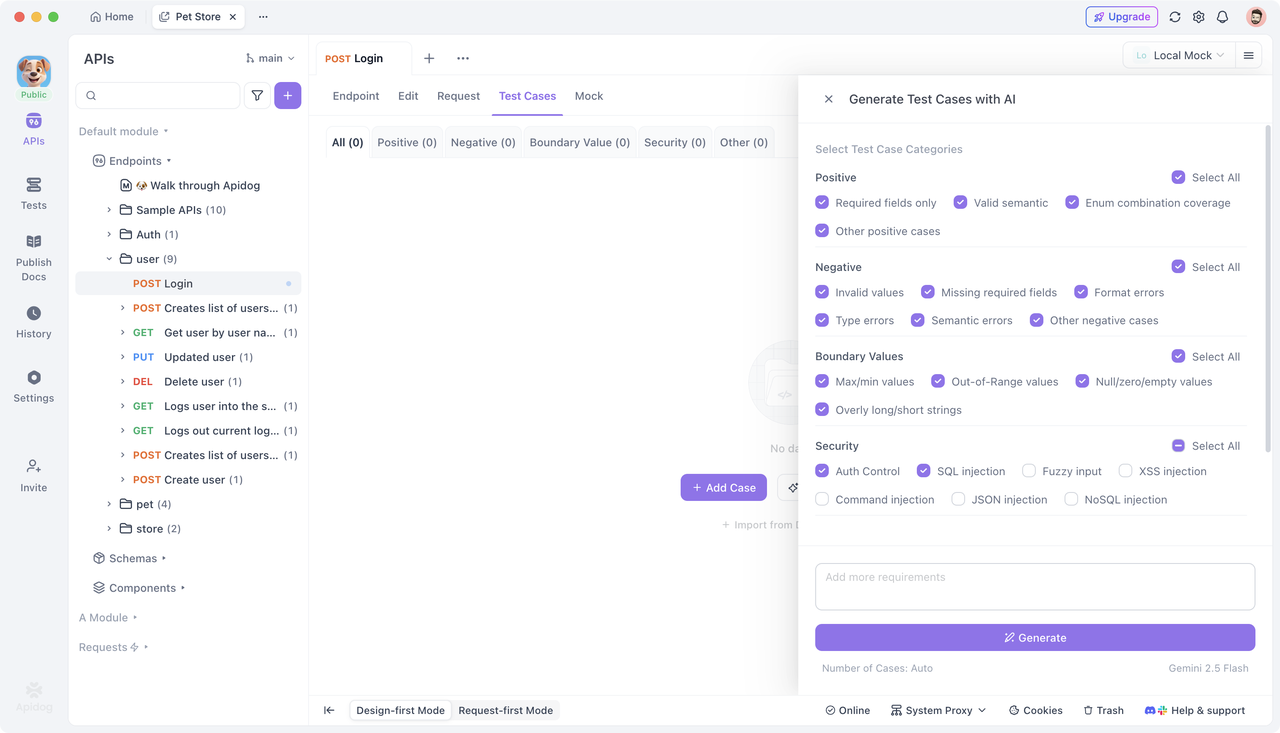

How Apidog Streamlines Test Case Specification with AI

For API testing, manual test case specification can be particularly tedious. You must consider status codes, response schemas, authentication, headers, query parameters, and countless edge cases. Apidog transforms this burden into a competitive advantage.

By analyzing your API documentation or live endpoints, Apidog can automatically generate comprehensive test cases using AI.

It creates positive tests for happy paths, negative tests for invalid inputs, boundary tests for numeric fields, and security tests for authentication edge cases. Each specification includes precise inputs, expected outputs, and assertions, ready for review and execution.
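To make those four categories concrete, here is a hand-written sketch of API test cases expressed as data and run against a stand-in handler. It is not Apidog's output format. The endpoint, the amount limits, the token value, and the status codes are all assumptions made up for illustration.

```python
from typing import NamedTuple, Optional


class ApiCase(NamedTuple):
    case_id: str
    kind: str
    token: Optional[str]
    amount: object
    expected_status: int


def handle_transfer(token, amount):
    """Stand-in for a hypothetical POST /transfers endpoint."""
    if token != "valid-token":
        return 401  # missing or wrong credentials
    if isinstance(amount, bool) or not isinstance(amount, int):
        return 400  # malformed input
    if not 1 <= amount <= 10_000:
        return 422  # well-formed but outside the assumed limits
    return 201


CASES = [
    ApiCase("TC_Transfer_001", "positive", "valid-token", 250, 201),
    ApiCase("TC_Transfer_002", "negative", "valid-token", "abc", 400),
    ApiCase("TC_Transfer_003", "boundary", "valid-token", 10_000, 201),
    ApiCase("TC_Transfer_004", "boundary", "valid-token", 10_001, 422),
    ApiCase("TC_Transfer_005", "security", None, 250, 401),
]


def run_cases(cases):
    """Return IDs of cases whose actual status differs from the expected one."""
    return [
        case.case_id
        for case in cases
        if handle_transfer(case.token, case.amount) != case.expected_status
    ]


print(run_cases(CASES))  # []
```

Whether cases like these are written by hand or generated, each row still carries the same components as any other specification: an ID, precise inputs, and an expected result that can be checked.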

Instead of starting from scratch, your team reviews AI-generated test case specification drafts, refining them for business context rather than basic coverage. This approach improves consistency, catches cases that people tend to overlook, and reduces specification time by up to 70%. The result is a higher-quality test suite that aligns with your API contract from day one.

Frequently Asked Questions

Q1: How detailed should a test case specification be for exploratory testing?

Ans: Exploratory testing values freedom, but even here, test case specification plays a role. Create charter-based specs that define the mission, scope, and time box without scripting every step. For example: “Explore the payment flow using invalid credit cards for 60 minutes, focusing on error handling.” This provides structure while preserving tester autonomy.

Q2: Who owns the test case specification—the author or the team?

Ans: The team owns it. While the author writes the initial version, the test case review process makes it a shared artifact. Once baselined, anyone can propose updates through your version control workflow. Collective ownership prevents knowledge silos and ensures specs stay current.

Q3: Should user interface locators be included in test case specifications?

Ans: Generally, no. Keep specifications focused on user actions and expected outcomes. Locators belong in your automation layer’s page objects, not in human-readable specs. This separation keeps specs stable when UI implementation changes and makes them accessible to manual testers who don’t care about CSS selectors.

Q4: How do we maintain test case specifications when requirements change frequently?

Ans: Use requirement traceability as your compass. When a requirement changes, query your test management tool for all linked test cases and review them in a targeted session. Version control helps you track spec history, and automated tools like Apidog can regenerate API test specifications after endpoint changes, flagging diffs for human review.
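If your tool does not offer that query, the lookup is simple to sketch yourself. The example below assumes you can export each test case's linked requirement IDs; the IDs shown are hypothetical.

```python
from collections import defaultdict


def build_trace_index(specs):
    """Map each requirement ID to the test case IDs that reference it."""
    index = defaultdict(list)
    for case_id, requirement_ids in specs.items():
        for requirement_id in requirement_ids:
            index[requirement_id].append(case_id)
    return dict(index)


# Hypothetical export: test case ID -> linked requirement IDs.
specs = {
    "TC_Login_001": ["REQ-AUTH-01"],
    "TC_Login_002": ["REQ-AUTH-01", "REQ-AUTH-02"],
    "TC_Search_001": ["REQ-SEARCH-01"],
}

index = build_trace_index(specs)
print(index["REQ-AUTH-01"])  # ['TC_Login_001', 'TC_Login_002']
```

When REQ-AUTH-01 changes, the review session covers exactly those two test cases and nothing else.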

Q5: Can we reuse test case specifications across projects?

Ans: Yes, for common functions like login, search, or user profile updates. Maintain a library of standardized test case specification templates for these patterns. When reusing, always review and adapt them to project-specific context and data. Reuse the structure, but do not copy the content blindly.

Conclusion

Mastering test case specification is one of the most valuable skills a software quality professional can develop. It bridges the gap between intent and execution, between requirements and validation, between hope and confidence. When specifications are clear, complete, and collaborative, your entire team moves faster with fewer surprises.

Start by auditing your current specs against the components and best practices outlined here. Pick one improvement—maybe adding traceability links or breaking down compound steps—and apply it consistently for a month. The results will speak for themselves. Quality doesn’t happen by accident; it happens by specification.