Every software team wants to ship high-quality products, but here's the uncomfortable truth: even the most skilled testers write imperfect test cases. A test might miss a critical edge case, use unclear language, or even duplicate effort from another suite. These issues don't just waste time; they let bugs slip into production. And that's where a disciplined test case review process becomes your safety net.

If you've ever wondered whether your test cases are good enough, or you're tired of discovering gaps only after a feature goes live, this guide walks you through a proven review workflow that catches problems early. It also covers how modern testing tools like Apidog can help, so you end up with a test suite you can trust.

What Is a Test Case Review Process?

A test case review process is a structured evaluation of test cases before anyone executes them. Think of it as a quality gate for your quality assurance. The goal is simple: ensure every test case is clear, correct, complete, and tightly aligned with requirements. When done right, this process reveals defects in the test design itself (e.g., missing scenarios, incorrect expected results, or unclear steps) so you can fix them with minimal rework.

Rather than treating test cases as disposable artifacts, a proper review process treats them as living documentation that deserves the same scrutiny as production code. It's the difference between hoping your tests work and knowing they will.

Why Does the Test Case Review Process Actually Matter?

The test case review process isn't paperwork for paperwork's sake. It delivers measurable value:

- Improves accuracy and coverage by systematically checking that all functional paths, edge cases, and both positive and negative scenarios map back to documented requirements. No more guessing if you’ve covered everything.

- Reduces rework and cost dramatically. Finding issues during test design is far cheaper than discovering them during execution, or worse, after release when customers find them first.

- Enhances team collaboration by forcing conversations between testers, developers, and business stakeholders. These discussions surface misunderstandings about requirements before code is written.

- Enforces consistency across your testing practice. Standard templates, terminology, and approaches make your test suite maintainable as teams scale and projects evolve.

Skipping the review might feel faster at first, but the old rule applies: measure twice, cut once. An hour spent reviewing can save days of debugging later.

Who Should Participate in a Test Case Review?

Effective reviews are multi-stakeholder by design. Different perspectives catch different problems. Here's who should be in the room:

| Role | Primary Focus | Value They Bring |
|---|---|---|
| Test Leads/Managers | Alignment with test strategy and project goals | Ensures tests serve business objectives, not just technical checkboxes |
| Peers/Senior Testers | Technical correctness, data validity, coverage depth | Catches logical errors, suggests overlooked scenarios, validates test data |
| Developers | Technical feasibility and alignment with implementation | Flags tests that can’t be automated, identifies architectural constraints |
| Business Analysts/Product Owners | Business rule coverage and user scenario validation | Confirms tests reflect real user needs and regulatory requirements |

The magic happens when these voices challenge each other. A developer might spot that a test assumes an impossible state. A product owner might realize a critical business rule was never translated into a test scenario. The test case review process thrives on this constructive friction.

The Seven-Step Test Case Review Workflow

A repeatable test case review process follows a clear sequence. Here's how to run it effectively:

Step 1: Preparation

Gather the latest test cases and verify they reflect current requirements from your software requirements specification (SRS), functional requirements document (FRD), or user stories. Perform a quick entry check: are the test cases complete enough to review, or do they need basic cleanup first? A premature review wastes everyone’s time.
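If your test cases are stored as structured data, part of this entry check can be scripted. The sketch below is a minimal illustration, assuming a hypothetical `TestCaseRecord` type and a hypothetical `REQUIRED_FIELDS` policy; map both onto whatever template your team actually uses.

```python
from dataclasses import dataclass, field


@dataclass
class TestCaseRecord:
    """Hypothetical test case record; field names are illustrative only."""
    case_id: str
    title: str = ""
    requirement_ids: list[str] = field(default_factory=list)
    preconditions: str = ""
    steps: list[str] = field(default_factory=list)
    expected_result: str = ""


# Assumed policy: fields a case must fill in before it is worth reviewing.
REQUIRED_FIELDS = ("title", "requirement_ids", "steps", "expected_result")


def entry_check(cases: list[TestCaseRecord]) -> dict[str, list[str]]:
    """Return {case_id: [missing fields]} for every case that fails the check."""
    failures: dict[str, list[str]] = {}
    for case in cases:
        # Empty strings and empty lists both count as "missing".
        missing = [name for name in REQUIRED_FIELDS if not getattr(case, name)]
        if missing:
            failures[case.case_id] = missing
    return failures


if __name__ == "__main__":
    cases = [
        TestCaseRecord("TC-1", "Login with valid credentials", ["REQ-7"],
                       "User account exists",
                       ["Open login page", "Submit valid credentials"],
                       "Dashboard is shown"),
        TestCaseRecord("TC-2", "Login with expired password",
                       steps=["Submit expired password"]),
    ]
    print(entry_check(cases))  # {'TC-2': ['requirement_ids', 'expected_result']}
```

Cases that fail go back to the author for cleanup instead of into the review queue.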

Step 2: Assign Reviewers and Optional Kick-off

Select reviewers based on the feature’s complexity and technical domain. For major features, hold a 15-minute kick-off to explain scope, objectives, and reference materials. This aligns everyone before they dive into individual review.

Step 3: Individual Preparation

Each reviewer independently examines the test cases using checklists and standards. They note defects, questions about ambiguous requirements, and suggestions for better coverage. This solo work ensures fresh perspectives aren’t diluted by groupthink.

Step 4: Review Session or Meeting

The group convenes, virtually or in person, to discuss findings. The author walks through the test cases while reviewers ask questions and challenge assumptions. A moderator logs defects in real time and keeps the discussion focused on clarifying intent rather than defending egos.

Step 5: Defect Logging and Classification

Not all issues are equal. Classify them to prioritize rework:

- Critical: Missing coverage for core functionality or safety-critical paths

- Major: Incorrect expected results or missing negative scenarios that could hide bugs

- Minor: Grammar issues, typos, or formatting inconsistencies that reduce clarity

Typical defects include incomplete preconditions, wrong test data, missing boundary tests, or test cases that no longer match the implemented feature.
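If findings are logged as structured records, ordering the rework queue by severity is straightforward. The `Severity` levels below mirror the three classes above; `ReviewDefect`, `rework_queue`, and `summarize` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    """Review severity; a lower value means the defect is reworked first."""
    CRITICAL = 1  # missing coverage for core or safety-critical paths
    MAJOR = 2     # incorrect expected results, missing negative scenarios
    MINOR = 3     # typos, grammar, formatting inconsistencies


@dataclass
class ReviewDefect:
    """Hypothetical record for one finding logged during the review."""
    case_id: str
    severity: Severity
    description: str


def rework_queue(defects: list[ReviewDefect]) -> list[ReviewDefect]:
    """Order defects by severity, then by test case id for deterministic output."""
    return sorted(defects, key=lambda d: (d.severity, d.case_id))


def summarize(defects: list[ReviewDefect]) -> dict[str, int]:
    """Count defects per severity level, including levels with zero findings."""
    counts = {level.name: 0 for level in Severity}
    for defect in defects:
        counts[defect.severity.name] += 1
    return counts


if __name__ == "__main__":
    findings = [
        ReviewDefect("TC-4", Severity.MINOR, "Typo in step 2"),
        ReviewDefect("TC-2", Severity.CRITICAL, "No test for the payment failure path"),
        ReviewDefect("TC-9", Severity.MAJOR, "Expected status should be 404, not 200"),
    ]
    for d in rework_queue(findings):
        print(d.severity.name, d.case_id, d.description)
    print(summarize(findings))  # {'CRITICAL': 1, 'MAJOR': 1, 'MINOR': 1}
```

Using an `IntEnum` keeps the severity order explicit in one place, so the sort never depends on string comparison of labels.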

Step 6: Rework

The author updates the test cases to address logged defects. This goes beyond fixing typos: it means improving clarity, expanding coverage, and sometimes merging redundant tests. The goal is a lean, effective suite, not a bloated one.

Step 7: Follow-up and Verification

A moderator or lead verifies that all agreed changes were applied correctly. Once satisfied, stakeholders give formal approval, and the test cases are baselined in your repository. This closure step prevents endless revision cycles.

Building a Central Test Case Repository

Your test case review process is only as good as what happens after approval. Approved test cases must live in a central repository with version control. This isn’t just filing paperwork; it’s creating a reusable asset.

A well-managed repository enables:

- Traceability: Link tests to requirements and defects, so you know exactly why a test exists

- Reusability: Regression testing becomes plug-and-play instead of rewrite-everything

- Consistency: New team members learn your standards by example

- Collaboration: Multiple teams can contribute without stepping on each other’s work
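Traceability is what lets a repository answer "which requirements have no tests?" and "why does this test exist?". A minimal sketch, assuming each test case records the requirement IDs it verifies; the `traceability_report` function and the ID formats are hypothetical.

```python
def traceability_report(
    requirements: set[str], links: dict[str, set[str]]
) -> dict[str, set[str]]:
    """Cross-check test-to-requirement links against documented requirements.

    requirements: IDs of documented requirements, e.g. {"REQ-1", "REQ-2"}
    links: test case ID -> set of requirement IDs that test verifies
    """
    # Every requirement ID referenced by at least one test case.
    covered = set().union(*links.values())
    return {
        # Documented requirements that no test verifies: coverage gaps.
        "uncovered_requirements": requirements - covered,
        # Tests linked to no known requirement: stale or unexplained tests.
        "orphan_tests": {tc for tc, reqs in links.items() if not reqs & requirements},
    }


if __name__ == "__main__":
    reqs = {"REQ-1", "REQ-2", "REQ-3"}
    links = {"TC-1": {"REQ-1"}, "TC-2": {"REQ-1", "REQ-2"}, "TC-3": {"REQ-99"}}
    report = traceability_report(reqs, links)
    print(sorted(report["uncovered_requirements"]))  # ['REQ-3']
    print(sorted(report["orphan_tests"]))            # ['TC-3']
```

An orphan test is not necessarily wrong; it may point at a requirement that was renamed or retired, which is exactly the kind of question a review should raise.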

When the repository becomes the single source of truth, the test case review process transforms from a one-time activity into a foundation for continuous quality.

Common Review Techniques to Fit Your Team

Not every team needs formal inspections. Choose the technique that matches your maturity and timeline:

- Self-review: The author checks their own work against requirements and guidelines. Good for a quick sanity check but prone to blind spots.

- Peer review: A fellow tester reviews using a maker-checker approach. Balances thoroughness with speed.

- Supervisory review: Test leads conduct formal inspections using detailed checklists. Best for high-risk features.

- Walkthroughs: The author presents test cases to the team, who jointly refine them. Excellent for knowledge sharing.

Many teams use a hybrid: peer reviews for routine features, walkthroughs for complex new functionality, and supervisory reviews before major releases.

Streamlining the Test Case Review Process with Apidog

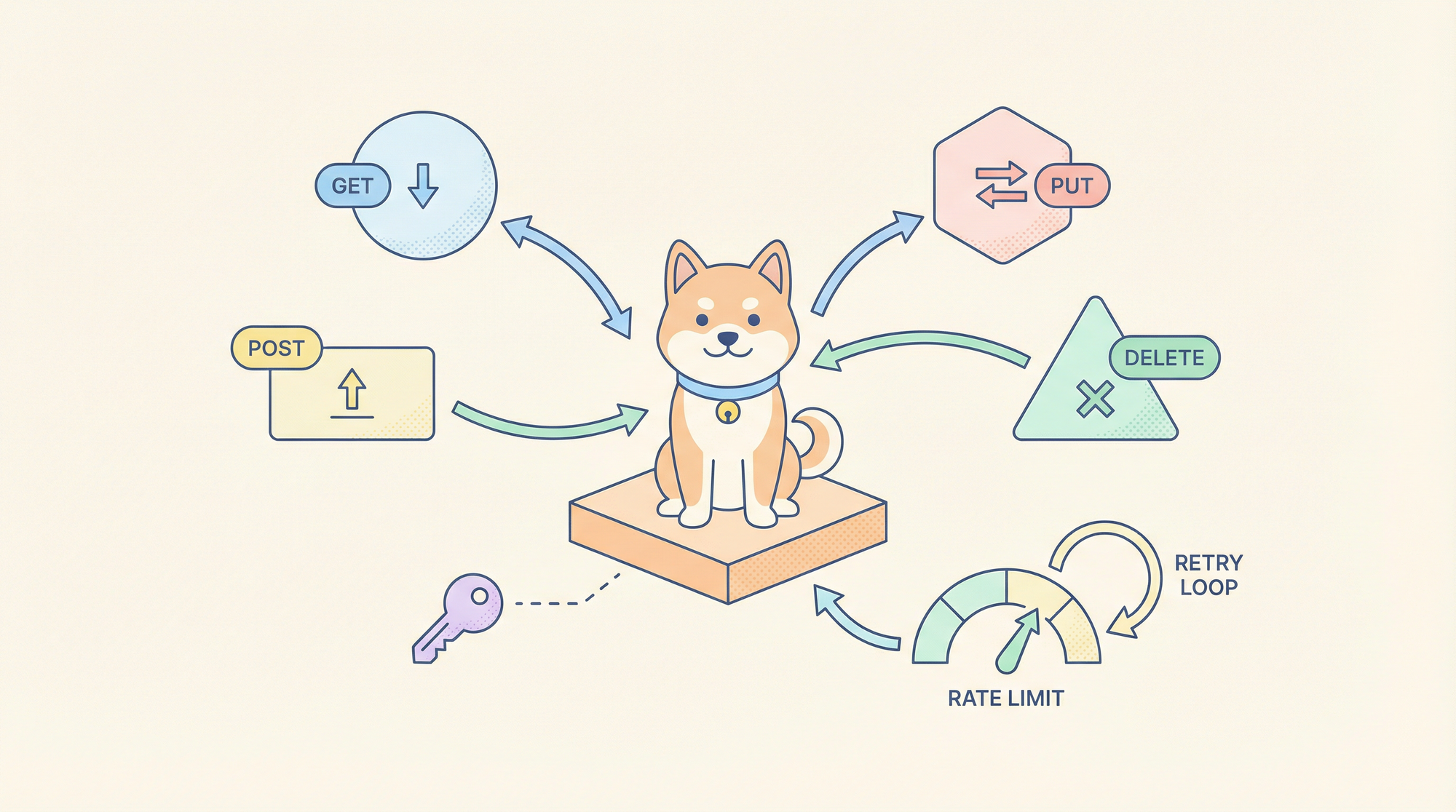

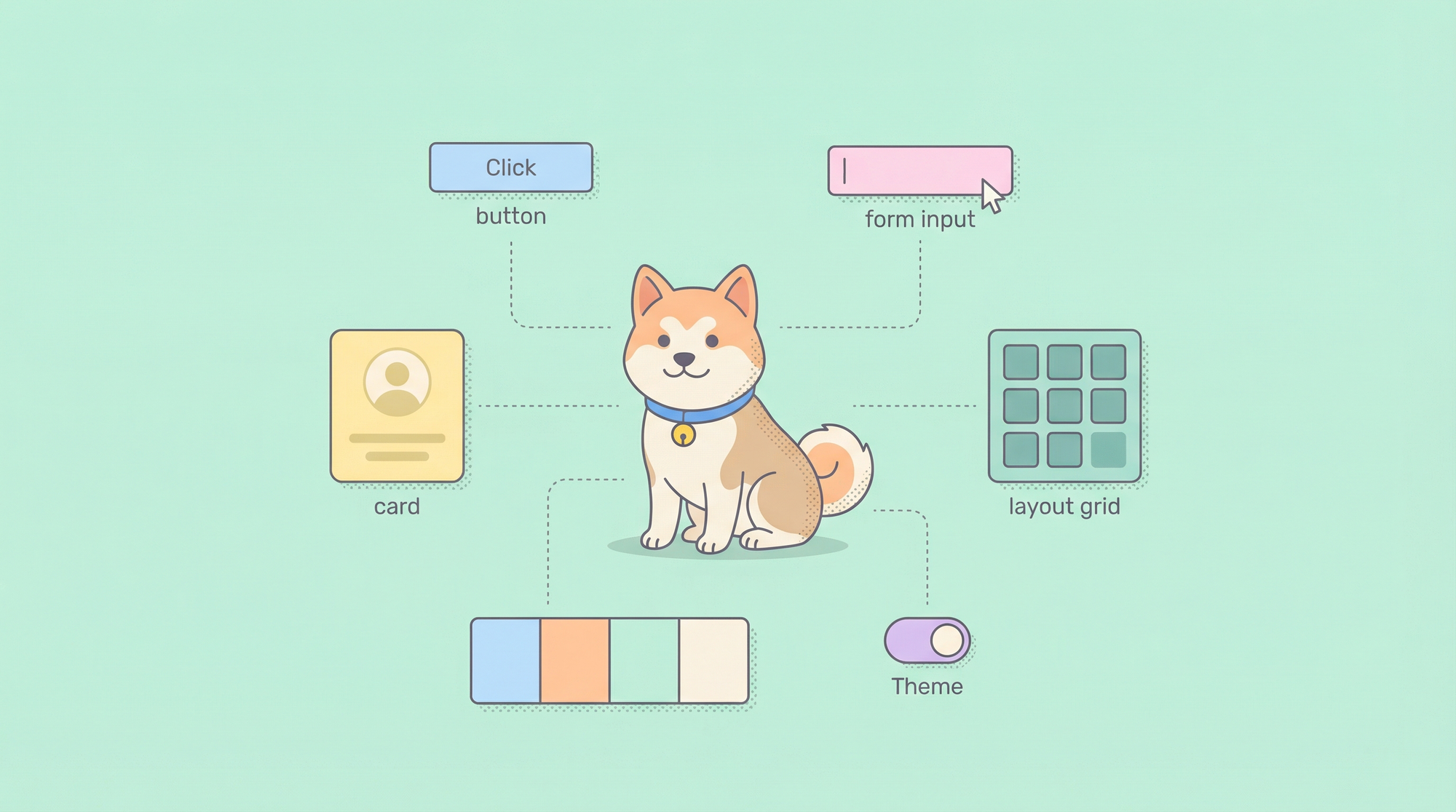

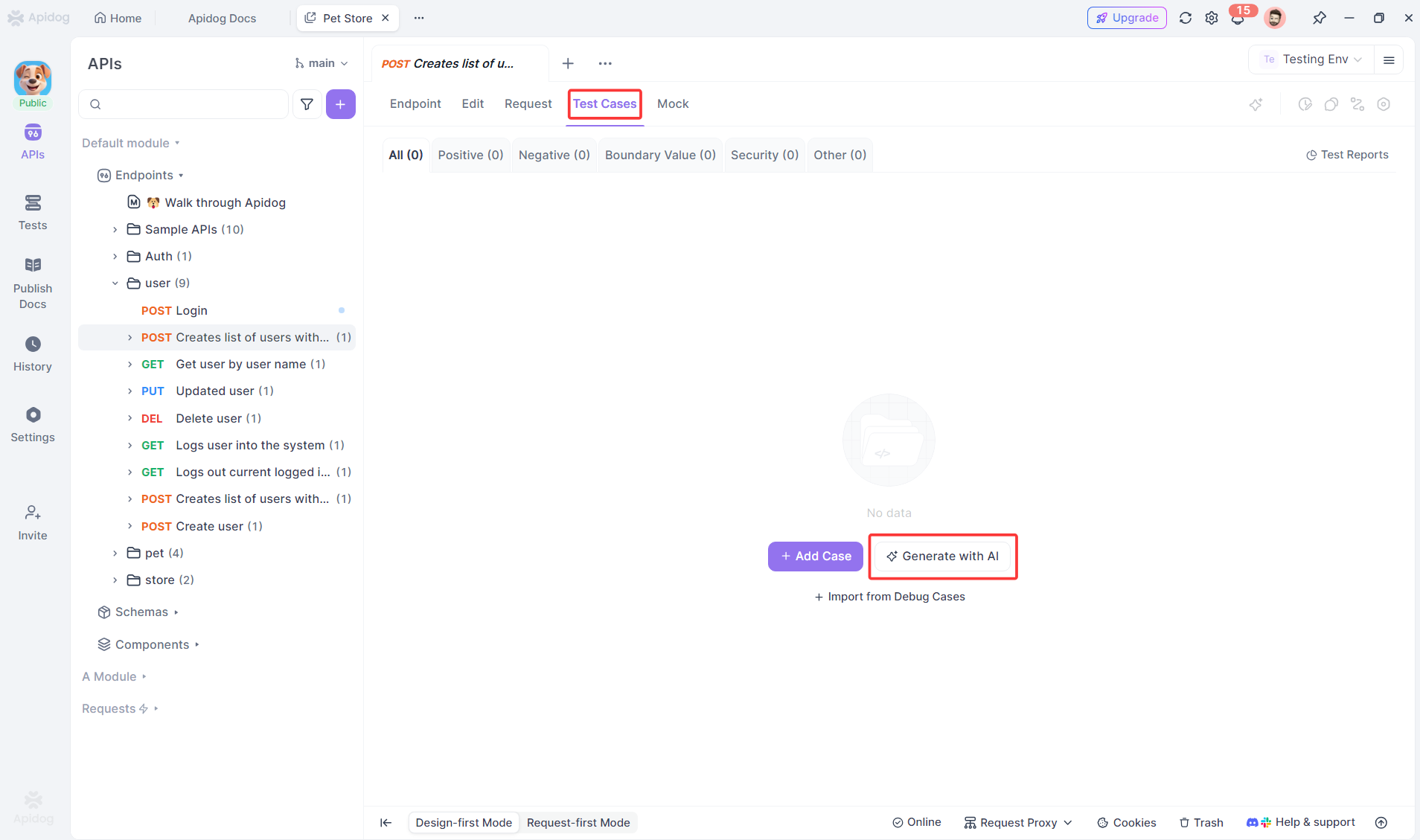

For API testing, the test case review process can be streamlined significantly with modern tools. Apidog automates the heavy lifting of test case creation, giving reviewers a solid starting point instead of a blank page.

AI-Generated Test Cases from API Specifications

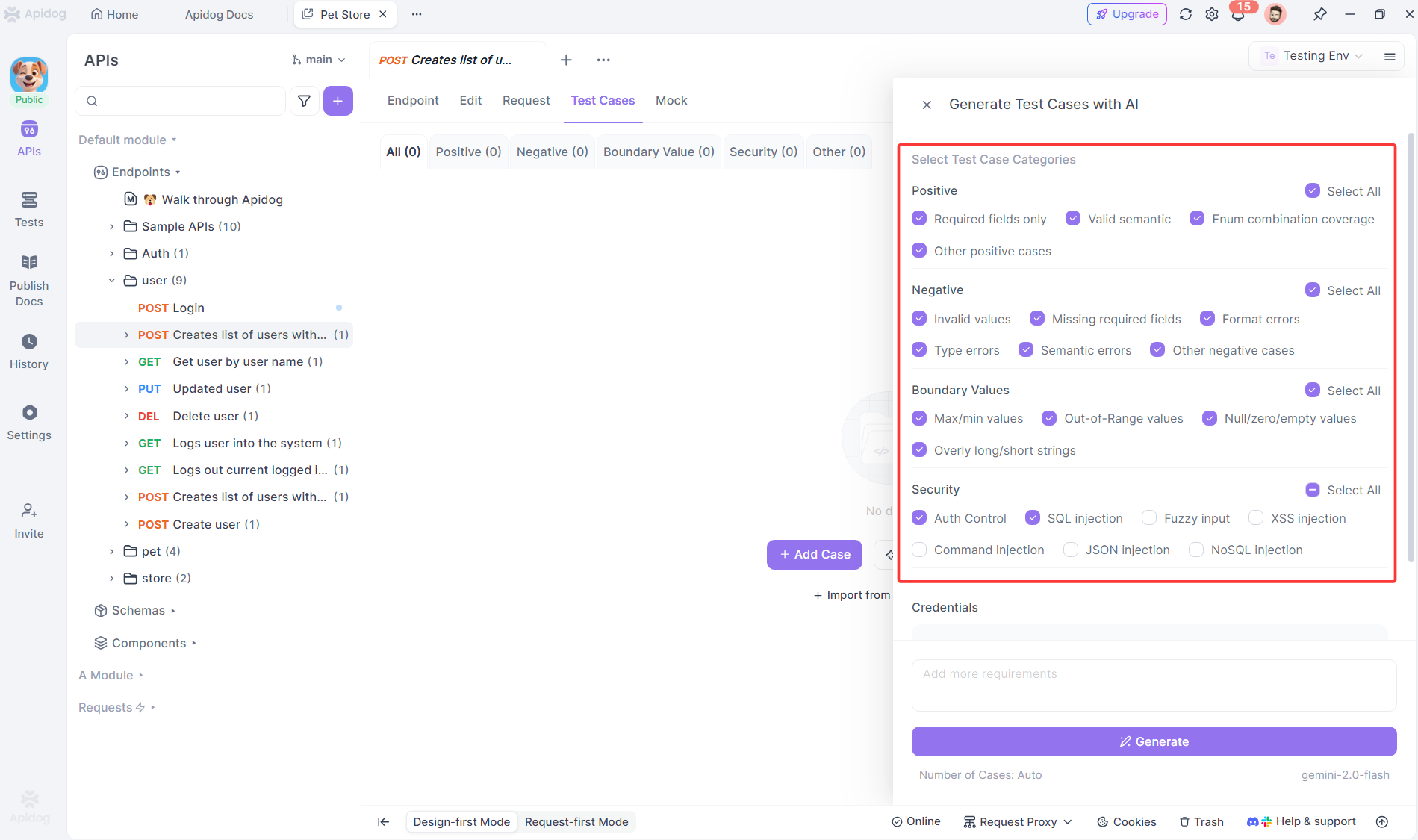

Using AI-powered analysis, Apidog generates comprehensive test cases for your API endpoints by examining request parameters, response schemas, and business rules. When you import an OpenAPI specification into Apidog, you can automatically generate positive tests, negative tests, security tests, and edge case scenarios using AI.

Instead of manually writing dozens of tests for boundary values, null checks, and error scenarios, you can let Apidog generate them, leaving reviewers free to focus on whether the generated tests are correct and complete.
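When reviewing generated boundary tests, it helps to know what classic boundary value analysis produces for a simple range. The sketch below is a generic illustration, not Apidog's implementation; it assumes a hypothetical integer parameter (such as `quantity`) constrained to an inclusive range like 1 to 100 in the OpenAPI schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BoundaryCase:
    value: Optional[int]  # None models a null value
    expect_valid: bool    # should the API accept this input?
    label: str


def boundary_cases(minimum: int, maximum: int, nullable: bool = False) -> list[BoundaryCase]:
    """Boundary value analysis for an inclusive integer range [minimum, maximum]."""
    if minimum > maximum:
        raise ValueError("minimum must not exceed maximum")
    candidates = [
        (minimum - 1, "just below minimum"),
        (minimum, "at minimum"),
        (minimum + 1, "just above minimum"),
        (maximum - 1, "just below maximum"),
        (maximum, "at maximum"),
        (maximum + 1, "just above maximum"),
    ]
    cases: list[BoundaryCase] = []
    seen: set[int] = set()
    for value, label in candidates:
        if value in seen:
            continue  # narrow ranges such as [1, 2] produce repeated values
        seen.add(value)
        # Validity is derived from the range itself, so narrow ranges stay correct.
        cases.append(BoundaryCase(value, minimum <= value <= maximum, label))
    cases.append(BoundaryCase(None, nullable, "null value"))
    return cases


if __name__ == "__main__":
    # Hypothetical `quantity` parameter declared with minimum 1 and maximum 100.
    for case in boundary_cases(1, 100):
        print(f"{case.label:<20} value={case.value!s:<5} expect_valid={case.expect_valid}")
```

A reviewer can apply the same lens to generated output: is there a case on each side of every boundary, and does each expected result match what the specification says?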

Intelligent Test Case Expansion

But Apidog's AI capabilities go beyond initial generation. The platform can now intelligently generate additional test cases based on your existing endpoint test cases, transforming how teams scale their API testing coverage during the review process.

When an endpoint already has test cases, Apidog's AI analyzes your current test patterns, parameter combinations, and validation logic to generate complementary scenarios you might have missed. It identifies coverage gaps and generates boundary value tests, negative scenarios, edge cases, and error conditions that follow the same patterns as your existing tests, which greatly speeds up your test case review process.

Frequently Asked Questions

Q1. How long should a typical test case review session take?

Ans: A focused review session should last 30 to 60 minutes. If you need more time, split the review into multiple sessions covering different feature areas. Longer meetings lead to fatigue and missed issues. The key is preparation: reviewers who complete their solo analysis beforehand spend meeting time on discussion, not silent reading.

Q2. Can we still do test case reviews in an Agile environment with short sprints?

Ans: Absolutely. In fact, Agile makes reviews more critical. Embed them into your definition of done: a user story isn’t complete until its test cases are reviewed and approved. Use lightweight peer reviews for routine stories and save walkthroughs for complex features. The time investment pays back during sprint execution, when fewer bugs disrupt your velocity.

Q3. What if reviewers disagree on whether a test case is necessary?

Ans: Disagreement is healthy. Document the decision rationale in your test management tool. If the dispute is about business priority, escalate to the product owner. If it’s about technical feasibility, let the developer and tester pair on a compromise. The test case review process should surface these debates early, not suppress them.

Q4. How do we prevent the review process from becoming a bottleneck?

Ans: Set clear entry and exit criteria. Don’t send half-baked test cases for review. Use smaller, asynchronous peer reviews for straightforward features. Automate what you can: tools like Apidog can generate test cases with AI, reducing manual drafting time. Track review turnaround time in your project metrics and address delays proactively.
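Turnaround tracking needs only two timestamps per review. A minimal sketch, assuming you can export submission and approval times from your test management tool; the record shape, the `review_metrics` name, and the 24-hour SLA are all assumptions. It counts wall-clock hours, so nights and weekends are included.

```python
from datetime import datetime
from statistics import median


def turnaround_hours(submitted: datetime, approved: datetime) -> float:
    """Wall-clock hours between submission for review and approval."""
    return (approved - submitted).total_seconds() / 3600


def review_metrics(
    reviews: dict[str, tuple[datetime, datetime]], sla_hours: float
) -> dict[str, object]:
    """Median turnaround and the IDs of reviews that exceeded the assumed SLA."""
    hours = {rid: turnaround_hours(sub, app) for rid, (sub, app) in reviews.items()}
    return {
        "median_hours": median(hours.values()) if hours else 0.0,
        "over_sla": sorted(rid for rid, h in hours.items() if h > sla_hours),
    }


if __name__ == "__main__":
    reviews = {
        "RV-1": (datetime(2024, 5, 1, 9), datetime(2024, 5, 1, 15)),   # 6 h
        "RV-2": (datetime(2024, 5, 1, 10), datetime(2024, 5, 3, 10)),  # 48 h
        "RV-3": (datetime(2024, 5, 2, 9), datetime(2024, 5, 2, 13)),   # 4 h
    }
    print(review_metrics(reviews, sla_hours=24))
    # {'median_hours': 6.0, 'over_sla': ['RV-2']}
```

The median is used rather than the mean so that one stalled review doesn't hide an otherwise healthy trend; the `over_sla` list still surfaces it.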

Q5. Should we review automated test scripts the same way as manual test cases?

Ans: Yes, but with a technical lens. Automated scripts need review for code quality, maintainability, and execution speed in addition to coverage. Include developers in these reviews to enforce coding standards and suggest more stable locators. The logic and coverage should still map to requirements, just like manual tests.

Conclusion

A disciplined test case review process is one of the highest-return investments a software team can make. It transforms testing from a reactive bug hunt into a proactive quality strategy. By following the seven-step workflow, engaging the right stakeholders, and leveraging modern tools like Apidog for API test generation, you build a test suite that catches real defects and earns the team’s trust.

Start small. Pick one feature for a pilot review and track the test design defects it catches before execution. As the benefits become clear, expand the practice across your projects. Quality isn’t an accident; it’s the result of deliberate processes, and the test case review process is a cornerstone of that deliberate approach.