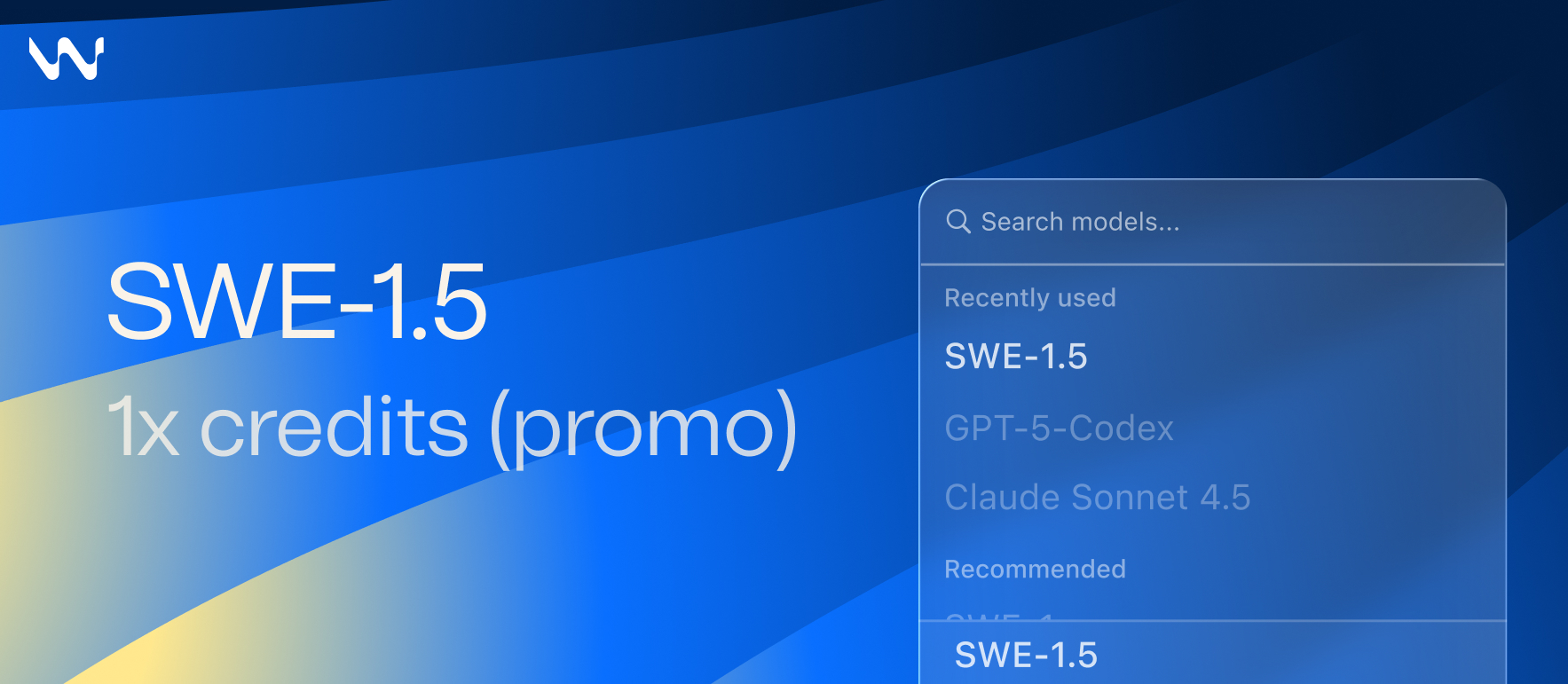

Developers constantly seek tools that accelerate workflows without sacrificing quality. Cognition delivers exactly that with the release of SWE-1.5, now available on Windsurf. This advanced AI model optimizes software engineering tasks, enabling faster code generation and problem-solving. Engineers integrate such models into their environments through APIs, ensuring smooth interactions and reliable performance.

Cognition engineers designed SWE-1.5 to address a core challenge in AI-assisted coding: the balance between rapid response times and intelligent outputs. Moreover, this model marks a significant advancement in agent-based systems, where speed directly impacts user experience. As teams adopt SWE-1.5 on Windsurf, they experience reduced latency in tasks ranging from debugging to full-stack application building. However, achieving this required innovative partnerships and infrastructure upgrades, which we explore next.

Understanding SWE-1.5: A Frontier-Size Model Tailored for Software Engineering

Cognition builds SWE-1.5 as a specialized model with hundreds of billions of parameters, focusing exclusively on software engineering applications. Developers benefit from its ability to handle complex codebases efficiently. For instance, the model generates responses fast enough to keep pace with a developer's train of thought, making it well suited to real-time collaboration.

SWE-1.5 incorporates end-to-end reinforcement learning, trained on real-world task environments. This approach ensures the model adapts to diverse programming languages and scenarios. Engineers at Cognition iterated continuously on the model's architecture, refining its harness and tools to maximize performance. Consequently, users on Windsurf access a system that not only thinks quickly but also delivers accurate solutions.

The model's design emphasizes unification: it combines the core AI with inference engines and agent orchestration. This integration eliminates bottlenecks common in disjointed systems. As a result, SWE-1.5 outperforms general-purpose models in specialized tasks. Developers who deploy it notice immediate improvements in productivity, such as editing configuration files in seconds rather than minutes.

The Partnership with Cerebras: Powering Unmatched Inference Speeds

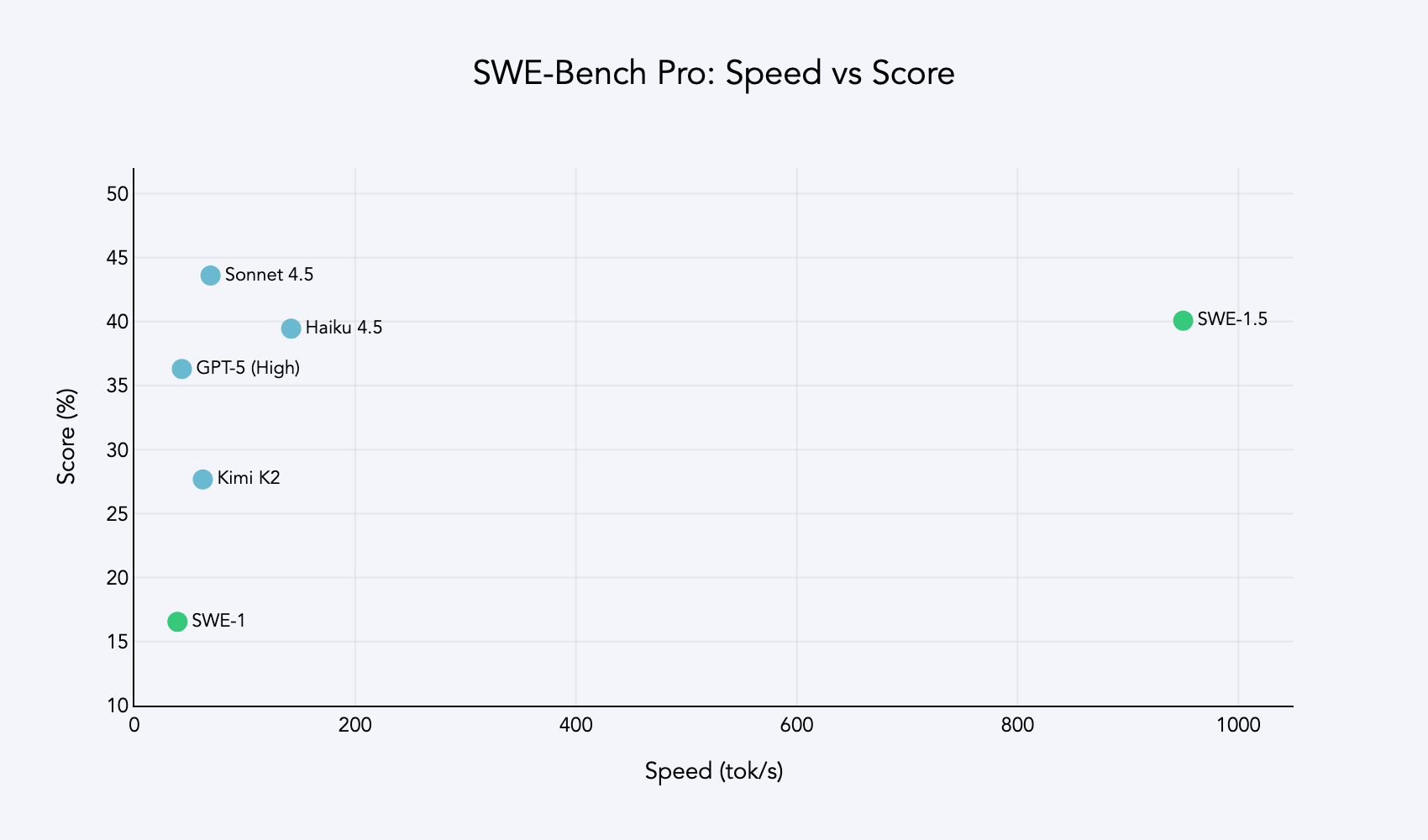

Cognition partners with Cerebras to achieve inference speeds of up to 950 tokens per second in SWE-1.5. This collaboration leverages advanced hardware to push the boundaries of AI performance. Specifically, Cerebras' technology enables speculative decoding and optimized token generation, which accelerates the model's output without compromising quality.
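To make speculative decoding concrete, here is a minimal toy sketch in Python. It is not Cerebras' or Cognition's implementation: `draft_next` and `target_next` are hypothetical stand-ins for a small draft model and the large target model, and the sketch uses the simple greedy variant, in which a drafted token is kept only if the target model would have produced the same token.

```python
from typing import Callable, List

Token = int
NextFn = Callable[[List[Token]], Token]  # maps a token prefix to the next token


def speculative_decode(
    prompt: List[Token],
    draft_next: NextFn,   # hypothetical cheap draft model
    target_next: NextFn,  # hypothetical expensive target model
    num_new_tokens: int,
    draft_len: int = 4,
) -> List[Token]:
    """Greedy speculative decoding; the result equals plain greedy decoding with target_next."""
    out = list(prompt)
    goal = len(prompt) + num_new_tokens
    while len(out) < goal:
        # 1. The draft model cheaply proposes up to draft_len tokens.
        proposal: List[Token] = []
        for _ in range(min(draft_len, goal - len(out))):
            proposal.append(draft_next(out + proposal))

        # 2. The target model checks each proposed position. A real system would
        #    do this in one batched forward pass; here it is a plain loop.
        for i, tok in enumerate(proposal):
            expected = target_next(out + proposal[:i])
            if tok != expected:
                # First mismatch: keep the target's token, discard the rest.
                out.extend(proposal[:i])
                out.append(expected)
                break
        else:
            # Every drafted token matched, so accept the whole proposal.
            out.extend(proposal)
    return out


# Toy models for illustration only: the target counts upward modulo 10,
# and the draft agrees except that it guesses wrong after a 4.
def toy_target(prefix: List[Token]) -> Token:
    return (prefix[-1] + 1) % 10


def toy_draft(prefix: List[Token]) -> Token:
    return 0 if prefix[-1] == 4 else (prefix[-1] + 1) % 10


print(speculative_decode([0], toy_draft, toy_target, num_new_tokens=12))
```

Each round adds at least one token verified by the target model, so the output is unchanged while the number of expensive sequential steps drops whenever the draft guesses well. The actual gain depends on how often drafted tokens are accepted.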

Moreover, this speed advantage positions SWE-1.5 as 6x faster than Haiku 4.5 and 13x faster than Sonnet 4.5. Engineers measure these gains in practical terms: tasks that once took 20 seconds now complete in under five. Such efficiency fits within the "flow window," where developers maintain concentration without interruptions.
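The quoted multipliers can be turned into rough throughput and wait-time figures. The sketch below only does arithmetic on the numbers above; the implied baseline throughputs are derived from the 6x and 13x ratios rather than measured, and the 2,000-token response length is a hypothetical value chosen for illustration.

```python
SWE_15_TOKENS_PER_SEC = 950.0  # "up to" figure quoted in the text
SPEEDUP_VS_HAIKU_45 = 6.0      # ratio quoted in the text
SPEEDUP_VS_SONNET_45 = 13.0    # ratio quoted in the text


def implied_baseline(tokens_per_sec: float, speedup: float) -> float:
    """Throughput a baseline would need for the quoted speedup to hold."""
    return tokens_per_sec / speedup


def generation_seconds(num_tokens: int, tokens_per_sec: float) -> float:
    """Time spent purely generating tokens, ignoring network and tool overhead."""
    return num_tokens / tokens_per_sec


haiku_tps = implied_baseline(SWE_15_TOKENS_PER_SEC, SPEEDUP_VS_HAIKU_45)
sonnet_tps = implied_baseline(SWE_15_TOKENS_PER_SEC, SPEEDUP_VS_SONNET_45)

RESPONSE_TOKENS = 2000  # hypothetical response length
for name, tps in [
    ("SWE-1.5", SWE_15_TOKENS_PER_SEC),
    ("Haiku 4.5 (implied)", haiku_tps),
    ("Sonnet 4.5 (implied)", sonnet_tps),
]:
    seconds = generation_seconds(RESPONSE_TOKENS, tps)
    print(f"{name:22s} {tps:7.1f} tok/s  {seconds:5.1f} s")
```

Under these assumptions the implied baselines are roughly 158 and 73 tokens per second, so a response that streams in about two seconds at 950 tokens per second would take well over ten seconds at either baseline.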

However, realizing these speeds demanded system-wide optimizations. Cognition rewrote core tools from scratch, reducing overhead in lint checking and command execution. These enhancements benefit all models on Windsurf, creating a more responsive ecosystem. Consequently, users experience seamless interactions, whether exploring large repositories or automating routine fixes.
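Why does a faster model force tool rewrites? A short back-of-the-envelope calculation shows the effect. The timings below are hypothetical, not measurements from Windsurf: assume one agent turn spends 10 seconds generating tokens and 2 seconds in tools such as lint checks and command execution.

```python
def end_to_end_speedup(
    generation_s: float,
    tool_overhead_s: float,
    model_speedup: float,
    tool_speedup: float = 1.0,
) -> float:
    """Overall speedup of one agent turn when generation and tools speed up separately."""
    before = generation_s + tool_overhead_s
    after = generation_s / model_speedup + tool_overhead_s / tool_speedup
    return before / after


# Hypothetical turn: 10 s of token generation plus 2 s of tool overhead.
GENERATION_S = 10.0
TOOL_OVERHEAD_S = 2.0

model_only = end_to_end_speedup(GENERATION_S, TOOL_OVERHEAD_S, model_speedup=10.0)
model_and_tools = end_to_end_speedup(
    GENERATION_S, TOOL_OVERHEAD_S, model_speedup=10.0, tool_speedup=4.0
)

print(f"10x faster model, same tools:      {model_only:.1f}x overall")
print(f"10x faster model, 4x faster tools: {model_and_tools:.1f}x overall")
```

With these numbers, a 10x faster model alone yields only a 4x faster turn, because the unchanged 2 seconds of tool time now account for two thirds of the total. Speeding the tools up as well is what recovers most of the gain.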

Benchmarks That Set SWE-1.5 Apart: Dominating SWE-Bench Pro

SWE-1.5 excels on the SWE-Bench Pro benchmark from Scale AI, achieving near-state-of-the-art results across challenging tasks. This dataset tests models on diverse codebases, simulating real-world software engineering problems. Cognition's model completes these evaluations in a fraction of the time required by competitors, highlighting its efficiency.

Additionally, internal benchmarks at Cognition reveal practical advantages. For example, engineers use SWE-1.5 to build full-stack applications rapidly, integrating frontend and backend components with minimal manual intervention.

Furthermore, comparisons with prior models show marked improvements. SWE-1.5 reduces error rates in tool calls and enhances code quality through rubric-based grading. Developers appreciate how it handles edge cases, such as Kubernetes manifest edits, with precision. As a result, teams on Windsurf adopt it for mission-critical projects, confident in its reliability.

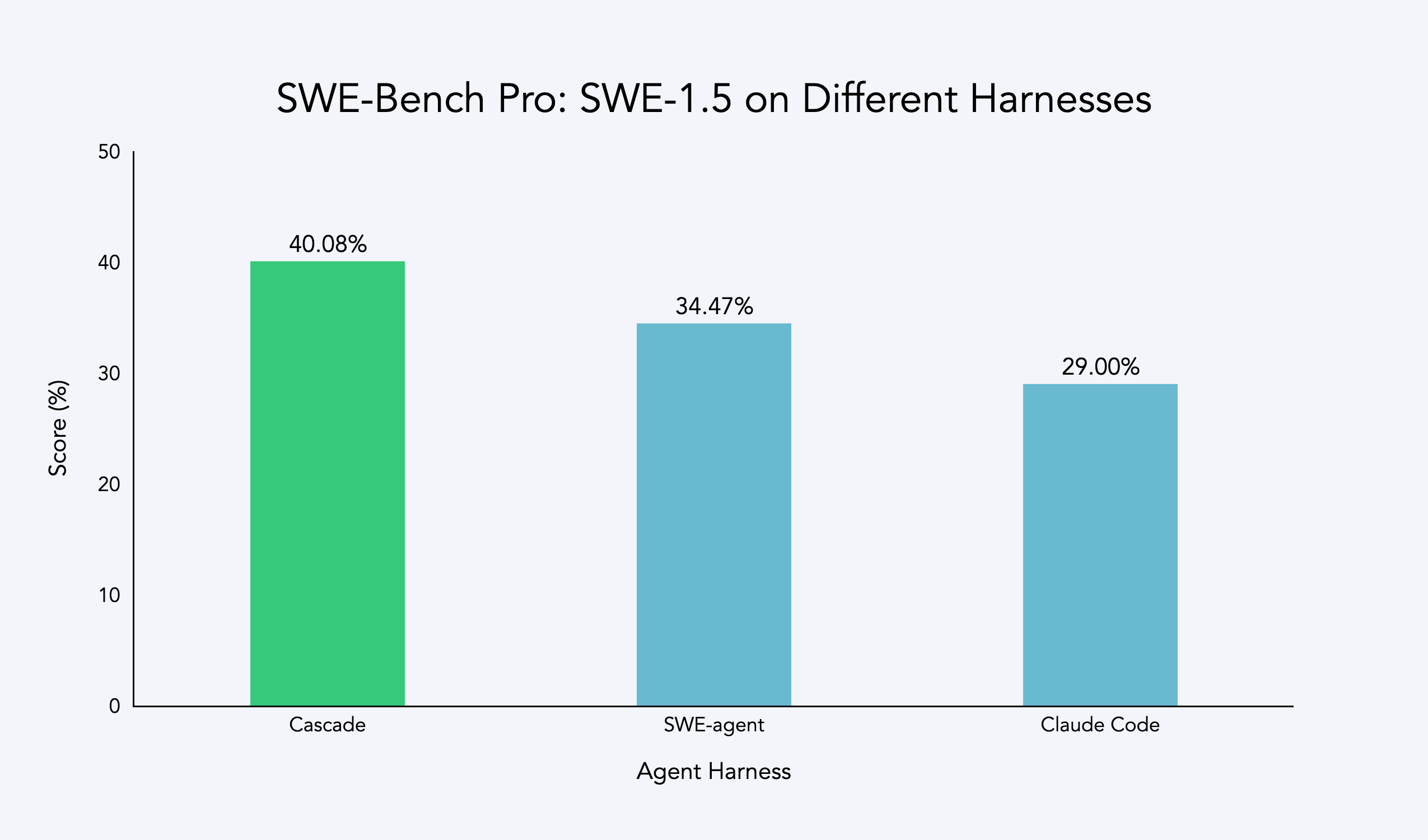

The Cascade Agent Harness: Foundation of SWE-1.5's Intelligence

Cognition develops the Cascade agent harness specifically for SWE-1.5, enabling co-optimization of model and orchestration. This harness facilitates reinforcement learning on multi-turn trajectories, ensuring the AI handles extended interactions effectively.

Moreover, the harness incorporates tools rewritten for speed, so that tool latency does not become the bottleneck once the model itself runs roughly 10x faster. Engineers monitor performance through beta deployments, gathering data on metrics like token throughput and response accuracy. This iterative process refines the system, eliminating weaknesses identified in earlier versions.

However, challenges arose during development, such as tool call failures in alternative setups. Cognition addressed these by enhancing the harness's compatibility, leading to superior evaluations. Consequently, SWE-1.5 performs optimally on Windsurf, where users leverage its full capabilities for tasks like codebase exploration via Codemaps.

Training Infrastructure: Scaling with GB200 Chips and Otterlink

Cognition trains SWE-1.5 on thousands of GB200 NVL72 chips, marking one of the first production uses of this hardware. Early access in June 2025 allowed the team to build fault-tolerant systems and optimize NVLink communications.

Additionally, the Otterlink VM hypervisor scales environments to tens of thousands of concurrent machines, aligning training with production realities. This setup mirrors Devin and Windsurf workloads, ensuring the model generalizes well.

Furthermore, the training process employs an unbiased policy gradient for stability on long sequences. Engineers select base models through rigorous evaluations, applying post-training RL to fine-tune for software engineering. As a result, SWE-1.5 emerges as a robust tool, ready for deployment on Windsurf.
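For readers unfamiliar with the term, the sketch below shows the textbook REINFORCE estimator, the simplest unbiased policy gradient, on a two-action toy problem. It is an illustration of the general idea only: the specific variant Cognition uses is not described here, and the bandit setup, reward values, and hyperparameters are all assumptions.

```python
import math
import random


def softmax(logits):
    """Convert logits to probabilities, subtracting the max for numerical stability."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(rewards, steps=500, lr=0.1, seed=0):
    """Train a softmax policy over len(rewards) actions with REINFORCE.

    Each update is reward * grad(log pi(action)); its expectation equals the
    gradient of the expected reward, which is what makes the estimator unbiased.
    """
    rng = random.Random(seed)
    logits = [0.0] * len(rewards)
    for _ in range(steps):
        probs = softmax(logits)
        action = rng.choices(range(len(rewards)), weights=probs)[0]
        reward = rewards[action]
        # d/d logit_j of log pi(action) = 1[j == action] - pi_j
        for j in range(len(logits)):
            grad_log_pi = (1.0 if j == action else 0.0) - probs[j]
            logits[j] += lr * reward * grad_log_pi
    return softmax(logits)


# Toy environment: action 1 always pays 1.0, action 0 always pays 0.0.
final_probs = reinforce_bandit(rewards=[0.0, 1.0])
print(final_probs)
```

The policy shifts probability toward the rewarded action. On long multi-turn trajectories the same estimator applies to whole sequences of actions, where keeping its variance under control becomes the main practical difficulty.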

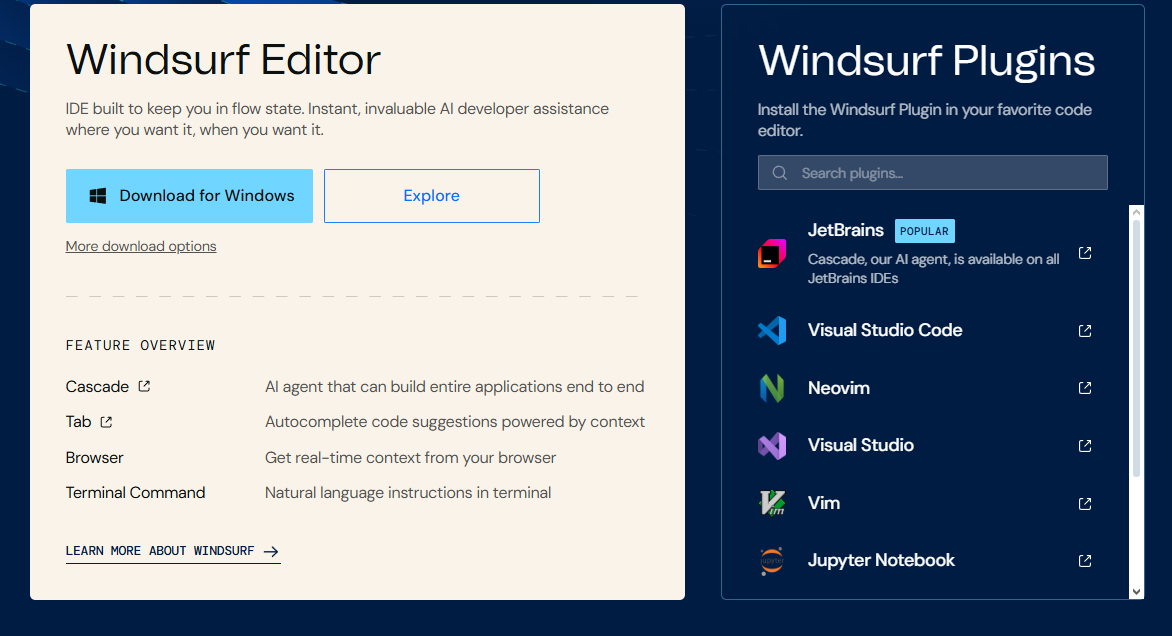

Availability on Windsurf: Seamless Integration for Developers

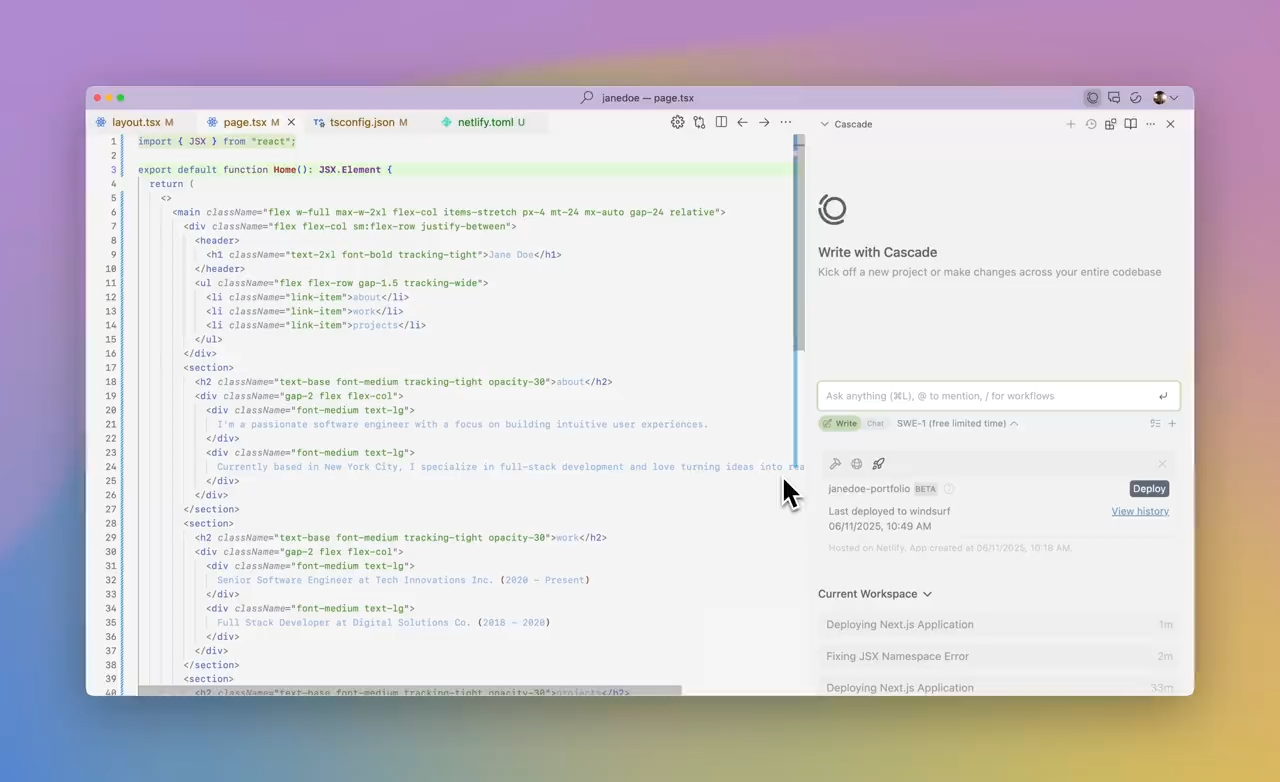

Cognition makes SWE-1.5 available on Windsurf today. This platform integrates the model deeply, offering features like Codemaps for navigating large codebases.

Moreover, Windsurf's acquisition by Cognition enhances compatibility, allowing users to deploy SWE-1.5 in familiar environments. Developers download and configure it easily, benefiting from custom request priority systems for smooth sessions.

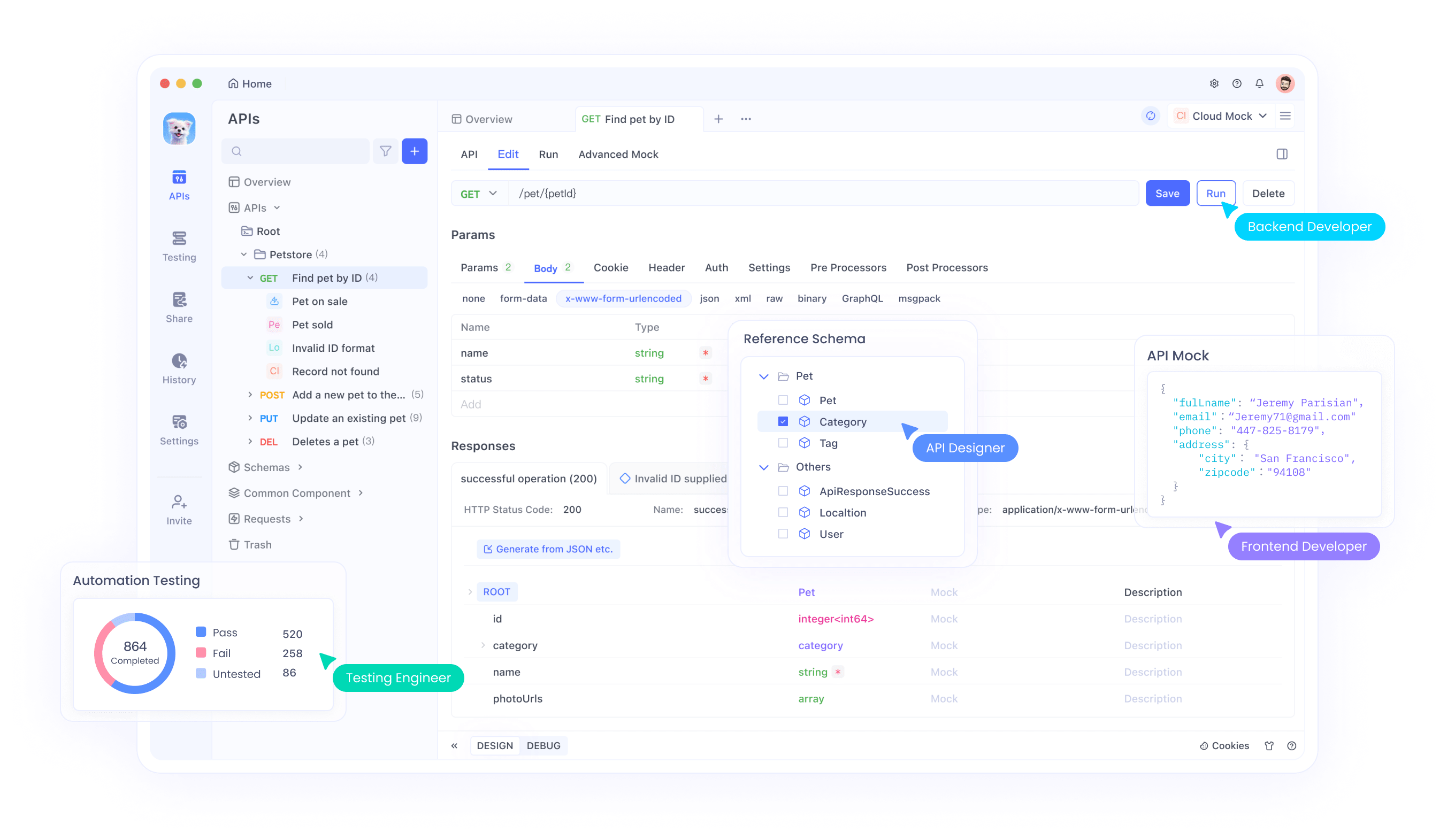

However, to maximize its potential, teams combine it with API tools. Downloading Apidog for free helps manage these integrations and verify that APIs connected to SWE-1.5 behave as expected.

Overcoming Challenges: From Beta Testing to Production

Cognition deploys multiple beta versions of SWE-1.5, named "Falcon Alpha," to gather real-world feedback. Engineers dogfood the model internally, identifying areas for improvement.

Moreover, reward hardening prevents gaming of evaluators, ensuring genuine performance gains. Human experts scrutinize outputs, refining graders for accuracy.

However, initial hurdles like system delays prompted rewrites, ultimately strengthening the platform. Consequently, SWE-1.5 launches on Windsurf as a polished product, ready for widespread adoption.

The Impact of Speed on Developer Experience

Speed defines SWE-1.5's value on Windsurf, enabling "flow state" coding. Engineers complete tasks without waiting, boosting creativity and output.

Moreover, this responsiveness encourages experimentation, as quick feedback loops refine ideas rapidly.

However, maintaining quality alongside speed requires careful balancing, which Cognition achieves through rigorous testing.

Comparing SWE-1.5 to Industry Peers

SWE-1.5 surpasses Haiku 4.5 and Sonnet 4.5 in speed (up to 6x and 13x faster, respectively) while delivering near-state-of-the-art coding performance. Benchmarks such as SWE-Bench Pro confirm its strength in software-specific tasks.

Additionally, unlike general models, it specializes in engineering, offering tailored solutions.

Furthermore, Windsurf's ecosystem amplifies these advantages, providing a cohesive environment.

Security and Ethical Considerations in Deploying SWE-1.5

Cognition prioritizes security in SWE-1.5, implementing safeguards against vulnerabilities. Users on Windsurf benefit from encrypted interactions and access controls.

Moreover, training practices aim to reduce biased outputs, promoting fair use in development.

However, teams must monitor integrations, using tools like Apidog to verify API security.

Integrating SWE-1.5 with Existing Toolchains

Developers connect SWE-1.5 to IDEs and CI/CD pipelines on Windsurf. APIs facilitate this, allowing custom automations.

Additionally, compatibility with languages like Python and JavaScript broadens appeal.

Furthermore, community contributions enhance features, fostering innovation.

Optimizing Performance: Tips for Users on Windsurf

To get the most out of SWE-1.5, write clear, specific prompts and use Codemaps to supply context from the codebase.

Additionally, monitor usage metrics to fine-tune interactions.

Furthermore, integrate with Apidog for API-related tasks, ensuring efficiency.

The Role of Reinforcement Learning in SWE-1.5's Success

Reinforcement learning shapes SWE-1.5, rewarding correct actions in simulations.

Moreover, training on multi-turn trajectories prepares the model for extended interactions.

However, scaling RL demands robust infrastructure, which Cognition provides.

Hardware Innovations Enabling SWE-1.5

GB200 chips power training, offering high throughput.

Additionally, Cerebras inference accelerates deployment.

Furthermore, Otterlink scales environments, aligning with real-world needs.

Environmental Considerations in AI Model Training

Cognition optimizes energy use in training SWE-1.5.

Moreover, efficient inference reduces carbon footprint.

However, scaling requires sustainable practices.

Global Adoption Trends for AI in Software Engineering

Developers worldwide embrace models like SWE-1.5.

Additionally, Windsurf facilitates access in diverse regions.

Furthermore, localization efforts expand reach.

Collaborative Development: How Teams Use SWE-1.5

Groups collaborate via shared sessions on Windsurf.

Additionally, real-time edits enhance teamwork.

Furthermore, version control integration streamlines workflows.

Conclusion: Why SWE-1.5 on Windsurf Matters Now

Cognition launches SWE-1.5 on Windsurf, redefining AI in software engineering. Its speed and smarts empower developers, fostering innovation. As you explore, remember that small optimizations yield big impacts. Start by downloading Apidog for free to handle APIs seamlessly.