OpenAI's Sora 2 stands out as a powerful tool that combines text prompts with advanced audio synchronization to produce realistic clips. Developers frequently seek ways to harness this technology while avoiding visual distractions like watermarks, which can detract from professional applications. Fortunately, using the Sora 2 API offers a pathway to cleaner outputs, especially when integrated with robust API management tools.

This article explores the technical aspects of Sora 2, focusing on API utilization and strategies to eliminate watermarks. You will learn how to set up your environment, execute API calls, and apply post-processing methods. Additionally, the guide incorporates practical examples and optimization tips to enhance your projects.

Understanding Sora 2 and Its Capabilities

OpenAI released Sora 2 on September 30, 2025, marking a significant advancement in video generation technology. This model builds on the original Sora by incorporating more accurate physics simulations, realistic animations, and synchronized audio. For instance, Sora 2 accurately renders complex scenarios such as a basketball rebounding off a backboard or a gymnast performing intricate routines. Developers leverage these features to create dynamic content for applications in entertainment, education, and marketing.

Does Sora 2 Have a Content Violation Policy?

Yes, Sora 2 operates under strict content violation policies implemented by OpenAI to prevent misuse and ensure responsible AI usage. Understanding these guidelines is crucial for maintaining access and avoiding account suspension. Namely, Sora 2 implements multiple safeguards:

Watermarking: Generated videos carry a visible watermark and embedded C2PA provenance metadata, both of which identify them as AI-created content.

Prompt Filtering: The system analyzes text prompts before processing, rejecting requests with flagged keywords or patterns associated with prohibited content.

Post-Generation Review: Suspicious outputs undergo automated review using computer vision algorithms trained to detect policy violations.

To maintain access and use Sora 2 responsibly:

- Review OpenAI's full usage policies before creating content

- Obtain explicit permission when using the "cameo" feature with individuals

- Disclose that content is AI-generated when sharing publicly

- Monitor policy updates as terms evolve with technology

For developers, implementing your own content review layer using tools like Apidog adds an extra safety buffer by validating API responses against custom rule sets.

How to Use Sora 2 Via API?

Transitioning to the API version, Sora 2 provides greater flexibility. The API, available in preview for developers, allows programmatic access to generation endpoints without the constraints of the consumer app. You access it through OpenAI's platform, requiring an API key and potentially a Pro subscription for enhanced features like higher resolutions and no built-in watermarks in certain configurations. According to developer documentation, the API endpoints focus on text-to-video requests, where you specify parameters like prompt, duration, and style.

Platforms like Replicate and ComfyUI offer hosted versions of the Sora 2 API, enabling no-watermark outputs through their interfaces. These alternatives integrate seamlessly with tools like Apidog, which handles authentication and request formatting. By using the API, you bypass the default watermarking applied in the free app tier, although some implementations still embed metadata. Nevertheless, this approach empowers you to generate clean videos tailored to your needs.

Accessing the Sora 2 API: Prerequisites and Setup

Before you generate videos, you must secure access to the Sora 2 API. OpenAI rolls out API availability to developers with active accounts, often prioritizing those with Pro or enterprise subscriptions. Start by logging into the OpenAI developer platform at platform.openai.com. There, you create an API key under the API keys section. Ensure your account meets the requirements for Sora 2 preview access, which may involve joining a waitlist or verifying your use case.

Once approved, you configure your development environment. Install necessary libraries, such as the OpenAI Python SDK, using pip: pip install openai. This library simplifies API interactions. For JavaScript users, the Node.js package offers similar functionality. Additionally, if you opt for third-party hosts like Replicate, sign up on their site and obtain an API token.

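Security plays a crucial role here. Always store your API key in an environment variable rather than hardcoding it, to prevent exposure. For example, set OPENAI_API_KEY in your shell or secrets manager and read it in Python with os.environ["OPENAI_API_KEY"]. Avoid assigning the key in source code (os.environ['OPENAI_API_KEY'] = 'your-key'), since that still commits the secret to the file. This practice safeguards your credentials during collaborative projects.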
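A minimal sketch of reading the key at startup rather than embedding it in source. The helper name `load_api_key` is hypothetical; `OPENAI_API_KEY` is the variable name the OpenAI Python SDK looks for by default.

```python
import os


def load_api_key(env=None, name="OPENAI_API_KEY"):
    """Return the API key stored under `name`, or raise if it is missing."""
    # `env` is injectable so the function can be tested without touching
    # the real process environment.
    env = os.environ if env is None else env
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {name} environment variable before running.")
    return key
```

Failing fast with a clear message is usually easier to debug than an authentication error returned later by the API.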

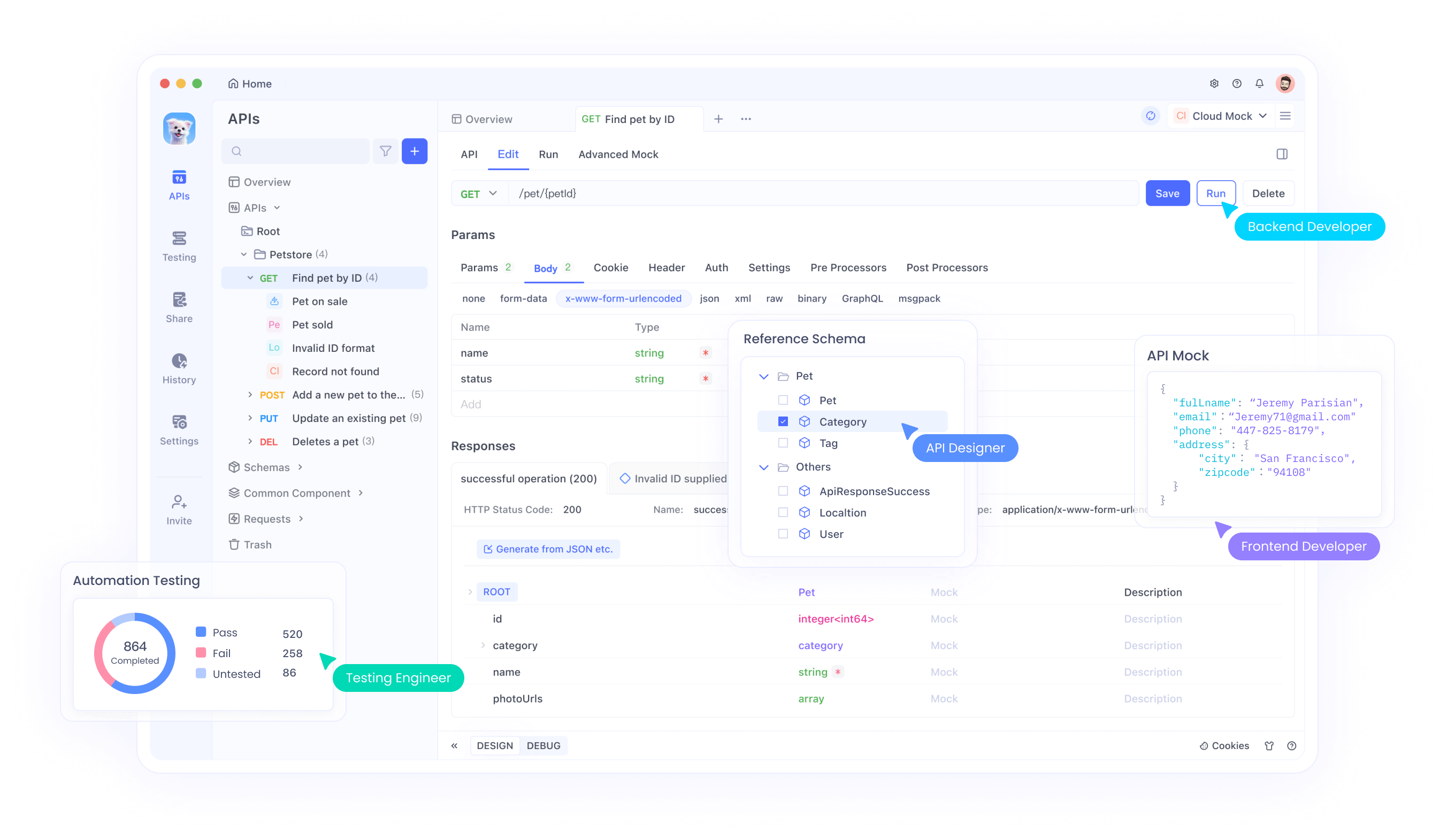

Now, integrate Apidog into your setup. As a comprehensive API client, Apidog excels in testing and documenting OpenAI endpoints, including Sora 2. Download and install Apidog from their official website. Upon launch, create a new project and import the OpenAI API specification by pasting the OpenAPI schema URL or uploading a JSON file. Apidog automatically generates endpoints for models like Sora 2, allowing you to customize requests with parameters such as model name ("sora-2") and input prompts.

Apidog's interface resembles Postman but offers advanced features like automated testing and mocking. You add your API key to the authorization header, typically as a Bearer token. Test a simple endpoint, like the models list, to verify connectivity: Send a GET request to /v1/models and confirm Sora 2 appears in the response. This step ensures your setup functions correctly before proceeding to video generation.
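The connectivity check can also be expressed in code. The sketch below assumes the list response has the usual shape of OpenAI's models endpoint (a top-level `data` array of objects with an `id` field); the sample response is illustrative, not real output, and `model_available` is a hypothetical helper.

```python
def model_available(models_response, model_id="sora-2"):
    """Return True if `model_id` appears in a parsed /v1/models response."""
    data = models_response.get("data", [])
    return any(entry.get("id") == model_id for entry in data)


# Illustrative response body, already parsed from JSON.
sample = {
    "object": "list",
    "data": [{"id": "sora-2", "object": "model"}, {"id": "other-model", "object": "model"}],
}
```

Calling `model_available(sample)` on the parsed response tells you whether your account can see the model before you attempt a generation.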

Configuring Apidog for Sora 2 API Requests

With your environment ready, you dive into Apidog's capabilities for Sora 2. Create a new API collection dedicated to video generation. Within it, add a POST request to the Sora 2 endpoint, usually /v1/video/generations or similar, based on OpenAI's documentation. Set the request body to JSON format.

Key parameters include:

- model: Specify "sora-2" to invoke the latest version.

- prompt: A detailed text description, e.g., "A cat performing a triple axel on ice with synchronized meowing audio."

- duration: In seconds, up to 20.

- resolution: Options like "720p" or "1080p" for Pro users.

- style: "realistic", "cinematic", or "anime".
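As a rough sketch, these parameters can be assembled and validated in Python before they are sent. The field names and limits below are taken from the list in this article and are not confirmed against OpenAI's official API reference; `build_generation_request` is a hypothetical helper.

```python
ALLOWED_RESOLUTIONS = {"720p", "1080p"}
ALLOWED_STYLES = {"realistic", "cinematic", "anime"}
MAX_DURATION_SECONDS = 20  # upper bound quoted in this article


def build_generation_request(prompt, duration=10, resolution="720p", style=None):
    """Return a request body dict, raising ValueError on invalid input."""
    if not prompt or not prompt.strip():
        raise ValueError("prompt must not be empty")
    if not 0 < duration <= MAX_DURATION_SECONDS:
        raise ValueError(f"duration must be between 1 and {MAX_DURATION_SECONDS} seconds")
    if resolution not in ALLOWED_RESOLUTIONS:
        raise ValueError(f"unsupported resolution: {resolution}")
    if style is not None and style not in ALLOWED_STYLES:
        raise ValueError(f"unsupported style: {style}")

    body = {
        "model": "sora-2",
        "prompt": prompt.strip(),
        "duration": duration,
        "resolution": resolution,
    }
    if style is not None:
        body["style"] = style  # only included when explicitly requested
    return body
```

Validating locally catches malformed requests before they count against your quota.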

Apidog allows you to parameterize these for reusability. For instance, use variables like {{prompt}} to test multiple scenarios quickly. Additionally, enable environment variables for switching between test and production API keys.

To handle asynchronous responses—since video generation can take minutes—you configure webhooks or polling in Apidog. Set up a script to check the generation status via a GET request to the job ID endpoint. This automation saves time and ensures you retrieve completed videos efficiently.

Moreover, Apidog supports scripting with JavaScript for pre- and post-request actions. Write a script to validate the prompt length before sending, preventing errors. For example:

    // Pre-request script: reject overly long prompts before sending.
    const prompt = pm.variables.get('prompt') || '';
    if (prompt.length > 1000) {
        throw new Error('Prompt too long');
    }

This technical safeguard enhances reliability. Once configured, send your first request and monitor the response, which typically includes a video URL or base64-encoded file.

Generating Videos with Sora 2 API: Step-by-Step Examples

You now execute actual generations. Begin with a basic prompt to test the waters. In Apidog, populate the body:

    {
      "model": "sora-2",
      "prompt": "A bustling city street at dusk with pedestrians and cars, ambient street sounds included.",
      "duration": 10,
      "resolution": "720p"
    }

Send the request. The API processes it and returns a job ID. Poll the status endpoint every 10 seconds until "completed". Retrieve the video URL from the response.
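The polling loop described above might look like the following sketch. The status fetcher is passed in as a callable so the loop stays independent of any particular HTTP client. The "completed" status comes from this article; the "failed" status and the `video_url` field are assumptions used for illustration.

```python
import time


def wait_for_completion(fetch_status, interval=10.0, timeout=600.0,
                        sleep=time.sleep, clock=time.monotonic):
    """Poll `fetch_status()` until the job completes, fails, or times out.

    `fetch_status` must return a dict with a "status" key.
    """
    deadline = clock() + timeout
    while True:
        job = fetch_status()
        status = job.get("status")
        if status == "completed":
            return job
        if status == "failed":  # assumed terminal status
            raise RuntimeError(f"generation failed: {job}")
        if clock() >= deadline:
            raise TimeoutError(f"job not finished after {timeout} seconds")
        sleep(interval)  # wait before asking again
```

A hard timeout keeps a stuck job from blocking the caller indefinitely; tune `interval` and `timeout` to the generation times you actually observe.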

For advanced usage, incorporate cameos—a feature allowing real-world elements like a person's likeness. Upload a reference video via a multipart form request in Apidog. The body might look like:

    {
      "model": "sora-2",
      "prompt": "The uploaded person giving a TED talk on AI.",
      "cameo_video": "base64-encoded-video",
      "audio_sync": true
    }

Apidog handles file uploads natively, making this seamless.

However, outputs may still contain watermarks if using the standard tier. Pro API access, available to ChatGPT Pro subscribers, often provides options for watermark-free downloads. Check your subscription level; upgrading unlocks this.

To optimize prompts, experiment with details. Add physics descriptors like "gravity-defying leap" to leverage Sora 2's simulation strengths. Track usage metrics in Apidog's analytics to stay within rate limits, typically 500 generations per month for priority access.

Strategies to Remove Watermarks from Sora 2 Videos

Even with API access, some videos include watermarks. You address this through post-processing. First, understand the watermark types: visible overlays (moving logos) and invisible metadata (C2PA standards).

For visible removal, employ AI-based tools. Vmake AI, a free online service, uses deep learning to detect and erase moving watermarks. Upload your Sora 2 video, select the watermark area, and process. The tool preserves quality by inpainting removed sections with contextual pixels.

Alternatively, use open-source libraries like OpenCV in Python. Load the video:

    import cv2

    cap = cv2.VideoCapture('sora_video.mp4')
    width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    fps = cap.get(cv2.CAP_PROP_FPS)
    out = cv2.VideoWriter('clean_video.mp4', cv2.VideoWriter_fourcc(*'mp4v'), fps, (width, height))

    while cap.isOpened():
        ret, frame = cap.read()
        if not ret:
            break
        # Detect and mask watermark (custom logic, e.g., ROI cropping or ML model)
        clean_frame = remove_watermark(frame)  # Implement function
        out.write(clean_frame)

    cap.release()
    out.release()

Implement remove_watermark using a pre-trained model from Hugging Face, such as a segmentation network trained on watermark datasets.

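For metadata, the approach usually cited relies on FFmpeg: ffmpeg -i input.mp4 -codec copy -bsf:v "filter_units=remove_types=6" output.mp4. This command copies the streams without re-encoding and applies the filter_units bitstream filter, which drops NAL units of type 6 (SEI messages in H.264) from the video stream.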

However, consider legal implications. OpenAI's terms prohibit tampering with provenance signals, but for internal use or where permitted, these methods apply. Always attribute AI-generated content ethically.

Integrating this into Apidog workflows, automate post-processing via scripts. After retrieving the video URL, download and run removal code.

Advanced Techniques for Sora 2 API Optimization

To elevate your usage, chain multiple API calls. Generate a base video, then remix it with a follow-up prompt: "Extend the previous scene with a dramatic twist." Use the job ID as a reference in subsequent requests.

Additionally, incorporate audio enhancements. Sora 2 syncs sound effects automatically, but you fine-tune by specifying "include orchestral background music" in prompts.

Performance tuning involves batching requests. Apidog supports collections for running multiple tests concurrently, ideal for A/B prompting experiments.
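Outside Apidog, the same batching idea can be sketched in plain Python with a thread pool. The `submit` callable is a hypothetical stand-in for whatever function sends one generation request; nothing here is specific to the Sora 2 API.

```python
from concurrent.futures import ThreadPoolExecutor


def run_prompt_batch(prompts, submit, max_workers=4):
    """Call `submit(prompt)` concurrently for each prompt.

    Results are returned in the same order as `prompts`. If any call
    raises, the exception propagates to the caller.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(submit, prompts))
```

Threads suit this workload because each call spends most of its time waiting on the network. Keep `max_workers` low enough to stay within your rate limits.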

Furthermore, monitor costs. Sora 2 API pricing starts at $0.05 per second of video for standard, with Pro at higher rates for premium features. Track via OpenAI's dashboard and set alerts in Apidog.
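A small budgeting sketch based on the per-second figure quoted above. The rate is this article's number, not a verified price, so check it against OpenAI's current pricing page; `estimate_cost` is a hypothetical helper.

```python
from decimal import Decimal

# Per-second rate quoted in this article for the standard tier (unverified).
STANDARD_RATE_PER_SECOND = Decimal("0.05")


def estimate_cost(duration_seconds, clips=1, rate=STANDARD_RATE_PER_SECOND):
    """Return the estimated cost in USD for `clips` videos of equal length."""
    if duration_seconds < 0 or clips < 0:
        raise ValueError("duration_seconds and clips must be non-negative")
    # Convert through str so float inputs such as 7.5 stay exact.
    return Decimal(str(duration_seconds)) * Decimal(str(clips)) * rate
```

For example, three 10-second clips come to `estimate_cost(10, clips=3)`, which is 1.50 USD at the quoted rate. `Decimal` avoids the rounding noise that binary floats introduce in currency arithmetic.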

For scalability, deploy in production environments. Use cloud functions like AWS Lambda to handle API calls, triggered by user inputs. Secure with API gateways.
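A minimal sketch of such a function, assuming an AWS Lambda behind an API Gateway proxy integration (the event carries the request body as a JSON string under `body`). The call to the video API is deliberately left as a placeholder, and the response fields are hypothetical.

```python
import json


def _response(status, body):
    """Build an API Gateway proxy-style response."""
    return {"statusCode": status, "body": json.dumps(body)}


def handler(event, context):
    """Validate the incoming prompt and acknowledge the request."""
    try:
        payload = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "body must be valid JSON"})

    prompt = payload.get("prompt", "") if isinstance(payload, dict) else ""
    if not isinstance(prompt, str) or not prompt.strip():
        return _response(400, {"error": "prompt is required"})

    # Placeholder: a real deployment would submit the generation request
    # here and return the resulting job ID to the client.
    return _response(202, {"accepted": True, "prompt": prompt.strip()})
```

Returning 202 rather than 200 reflects that generation is asynchronous: the client is expected to check back for the finished video.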

Best Practices and Troubleshooting for Sora 2 with Apidog

Adopt these practices to maximize efficiency. First, validate inputs rigorously—poor prompts yield subpar videos. Use Apidog's assertion tests to check response status codes (200 for success).

Troubleshoot common issues: If authentication fails, regenerate your key. For rate limits, implement exponential backoff in scripts:

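    import time

    def api_call_with_retry(func, max_retries=5):
        """Call func, retrying with exponential backoff on rate-limit errors."""
        for attempt in range(max_retries):
            try:
                return func()
            except Exception as e:
                # Re-raise non-rate-limit errors, and the final failed attempt.
                if 'rate_limit' not in str(e) or attempt == max_retries - 1:
                    raise
                time.sleep(2 ** attempt)  # wait 1s, 2s, 4s, ...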

If you encounter network errors, check Apidog's proxy settings and route requests through a proxy if your network requires one.

Moreover, collaborate by sharing Apidog projects via links, ensuring team alignment on Sora 2 configurations.

Case Studies: Real-World Applications of Watermark-Free Sora 2

Consider a marketing firm using Sora 2 API to create ad clips. They generate videos without watermarks for client presentations, integrating Apidog for rapid iterations.

In education, teachers produce animated lessons. Post-removal, videos embed cleanly in platforms like YouTube.

These examples illustrate Sora 2's versatility when unencumbered by watermarks.

Future Prospects and Updates for Sora 2 API

OpenAI continues evolving Sora 2, with planned expansions like longer durations and better integrations. Stay updated via their blog.

Apidog regularly adds features, such as AI-assisted request generation, complementing Sora 2 advancements.

In summary, mastering Sora 2 without watermarks involves API access, Apidog facilitation, and targeted removal techniques. Implement these steps to produce professional-grade videos efficiently.