Developers increasingly integrate advanced AI models into applications to create engaging media content. OpenAI's Sora 2 and Sora 2 Pro models represent significant advancements in video generation technology. These models enable users to produce richly detailed videos complete with synchronized audio, starting from simple text prompts or reference images. Furthermore, they support asynchronous processing, which allows applications to handle generation tasks without blocking other operations.

Sora 2 focuses on speed and flexibility, making it suitable for rapid prototyping and experimentation. In contrast, Sora 2 Pro delivers higher quality outputs, ideal for production environments where visual precision matters. Both models operate through the OpenAI API, providing endpoints that streamline video creation, status checking, and retrieval.

As developers explore these models, they discover that small adjustments in prompts or parameters yield substantial improvements in output quality. Therefore, understanding the core capabilities sets the foundation for successful integration.

Understanding Sora 2 and Sora 2 Pro: Core Capabilities and Differences

OpenAI designed Sora 2 as a flagship video generation model that transforms natural language descriptions or images into dynamic clips with audio. The model excels in maintaining physical consistency, temporal coherence, and spatial awareness across frames. For instance, it simulates realistic motion, such as objects interacting in a 3D space, and ensures audio syncs seamlessly with visual elements.

Sora 2 Pro builds on this foundation but enhances fidelity and stability. Developers choose Sora 2 Pro when they need polished results, such as cinematic footage or marketing videos. The Pro variant handles complex scenes with greater accuracy, reducing artifacts in lighting, textures, and movements. However, this comes at the cost of longer render times and higher expenses.

Key differences emerge in performance metrics. Sora 2 prioritizes quick turnaround, often completing generations in minutes for basic resolutions. Sora 2 Pro, on the other hand, invests more computational resources to refine details, making it preferable for high-stakes applications. Supported resolutions also differ: Sora 2 outputs only 1280x720 or 720x1280, while Sora 2 Pro supports those sizes and adds 1792x1024 and 1024x1792 for sharper visuals.

Limitations apply to both models. They reject prompts involving real people, copyrighted content, or inappropriate material. Input images cannot include human faces, and only content suitable for audiences under 18 is allowed. Consequently, developers must craft prompts carefully to avoid rejections and ensure compliance.

By comparing these models, developers select the appropriate one based on project needs. Next, setting up access becomes the priority.

Getting Started with Sora 2 Pro API: Setup and Authentication

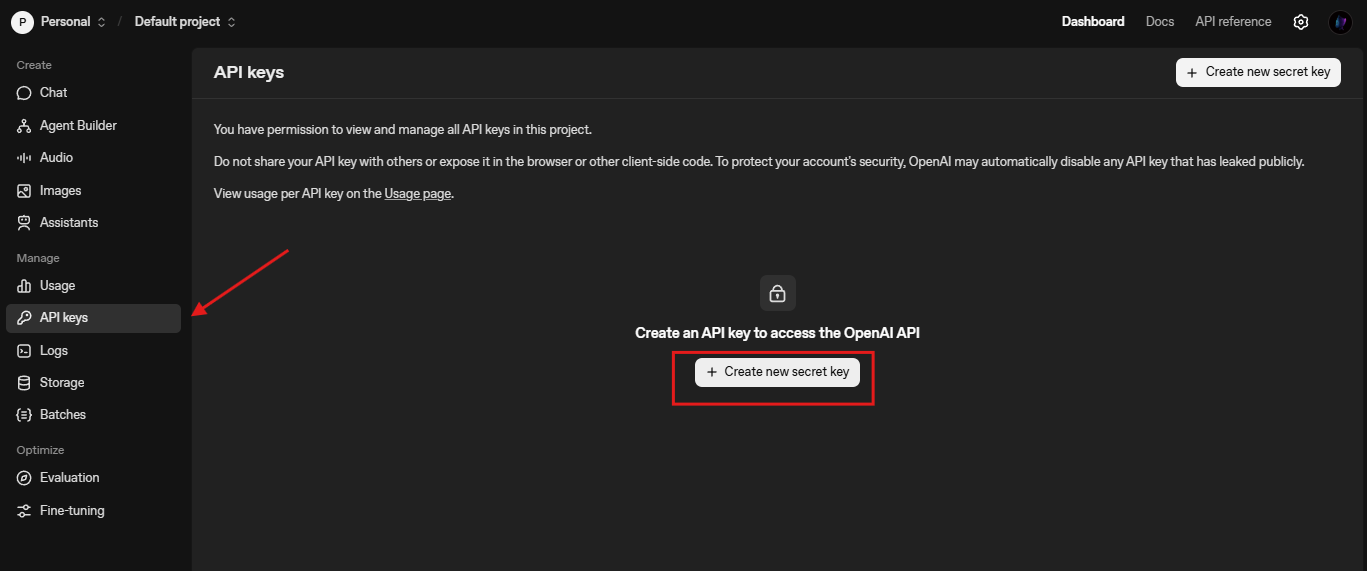

Developers begin by creating an OpenAI account. After registration, they apply for Sora access, as the API remains in preview and requires approval. The application process involves describing use cases and agreeing to responsible AI guidelines. Once approved, OpenAI grants API keys through the dashboard.

Authentication relies on bearer tokens. Developers include the API key in the Authorization header of every request. For security, they store keys in environment variables rather than hardcoding them; Python's python-dotenv package, for example, loads them from a local .env file.

In Python, developers install the OpenAI SDK with pip install openai. They then initialize the client:

```python
import os

from openai import OpenAI

client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))
```

JavaScript developers use npm install openai and import the library similarly. This setup enables calls to the videos endpoint, where Sora 2 and Sora 2 Pro reside.

Rate limits and tiers influence access. Free tiers do not support Sora models; paid tiers start at Tier 1 with minimal requests per minute (RPM). As usage increases, tiers upgrade automatically, expanding limits. Developers monitor usage in the dashboard to avoid throttling.

With authentication configured, developers proceed to explore endpoints. This step ensures seamless integration into applications.

Exploring API Endpoints for Sora 2 and Sora 2 Pro

The Sora 2 Pro API centers on the /v1/videos endpoint family, supporting creation, retrieval, listing, and deletion of videos. Developers initiate generations with POST /v1/videos, specifying the model as 'sora-2' or 'sora-2-pro'.

The creation endpoint accepts parameters like prompt (text description), size (resolution string), and seconds (duration as "4", "8", or "12"). The optional input_reference field supplies an image that guides the first frame; modifying an existing video goes through the separate remix endpoint instead.
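To make the request shape concrete, here is a minimal sketch that builds, but does not send, a text-only creation request with Python's standard library. It follows this article's description of JSON bodies for text-only requests; the helper name build_create_request and its default values are illustrative assumptions, and the SDK calls shown later are the more common route.

```python
import json
import os
import urllib.request
from typing import Optional

API_URL = "https://api.openai.com/v1/videos"


def build_create_request(prompt: str, model: str = "sora-2", size: str = "1280x720",
                         seconds: str = "4",
                         api_key: Optional[str] = None) -> urllib.request.Request:
    """Build, but do not send, a text-only POST /v1/videos request."""
    key = api_key or os.environ.get("OPENAI_API_KEY", "")
    payload = {
        "model": model,
        "prompt": prompt,
        "size": size,
        "seconds": seconds,  # a string such as "4", "8", or "12"
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {key}",
            "Content-Type": "application/json",
        },
    )
```

Passing the returned object to urllib.request.urlopen would submit it; parsing the JSON response then yields the job ID and status.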

Responses return JSON with an ID, status (queued or in_progress), and progress percentage. Developers poll GET /v1/videos/{video_id} to track status until completion or failure.
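The polling step can be factored into a helper with an explicit timeout. This is a sketch under assumptions: fetch_status is any callable that returns the job as a dict with status and progress keys, mirroring the JSON described above, and the interval and timeout defaults are arbitrary choices rather than documented limits. Injecting sleep keeps the helper testable.

```python
import time
from typing import Callable, Dict

TERMINAL_STATES = {"completed", "failed"}


def wait_for_video(fetch_status: Callable[[], Dict], interval: float = 10.0,
                   timeout: float = 900.0,
                   sleep: Callable[[float], None] = time.sleep) -> Dict:
    """Poll until the job is completed or failed, or about `timeout` seconds of waiting pass."""
    waited = 0.0
    while True:
        job = fetch_status()
        if job["status"] in TERMINAL_STATES:
            return job  # the caller decides how to handle "failed"
        if waited >= timeout:
            raise TimeoutError(f"job still {job['status']} after {waited:.0f}s")
        print(f"{job['status']}: {job.get('progress', 0)}%")
        sleep(interval)
        waited += interval
```

With the Python SDK, fetch_status could be lambda: client.videos.retrieve(video_id).model_dump().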

Upon success, GET /v1/videos/{video_id}/content downloads the MP4 file. A variant query parameter retrieves a thumbnail (WEBP) or spritesheet (JPG) instead. Listing via GET /v1/videos supports pagination through the limit and after parameters.
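Cursor pagination with limit and after can be walked in a simple loop. The sketch below assumes each page is a dict with a data list and a has_more flag, the shape OpenAI's other list endpoints use; fetch_page is a hypothetical callable you would back with GET /v1/videos and its limit and after query parameters.

```python
from typing import Callable, Dict, Iterator, Optional


def iter_videos(fetch_page: Callable[[int, Optional[str]], Dict],
                limit: int = 20) -> Iterator[Dict]:
    """Yield every video across pages, using the last ID seen as the `after` cursor."""
    after: Optional[str] = None
    while True:
        page = fetch_page(limit, after)
        items = page.get("data", [])
        yield from items
        # Stop when the server reports no more pages (or returns an empty page)
        if not page.get("has_more") or not items:
            return
        after = items[-1]["id"]
```

Because it is a generator, callers can stop early (for example after finding a specific video) without fetching the remaining pages.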

Deletion uses DELETE /v1/videos/{video_id} to manage storage. For remixing, POST /v1/videos/{previous_video_id}/remix applies targeted changes via a new prompt.

Webhooks notify on completion or failure, reducing polling needs. Developers configure them in settings, receiving events with video IDs.
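Webhook receivers should verify that a delivery really comes from OpenAI before acting on it. The sketch below assumes OpenAI signs webhooks following the Standard Webhooks scheme (webhook-id, webhook-timestamp, and webhook-signature headers, with an HMAC-SHA256 over id.timestamp.body keyed by a whsec_-prefixed base64 secret); confirm the header names and secret format against the current webhook documentation before relying on it.

```python
import base64
import hashlib
import hmac
import time
from typing import Mapping, Optional


def verify_webhook(secret: str, headers: Mapping[str, str], body: bytes,
                   tolerance: int = 300, now: Optional[float] = None) -> bool:
    """Return True if the delivery carries a valid, fresh v1 signature (assumed scheme)."""
    msg_id = headers["webhook-id"]
    timestamp = headers["webhook-timestamp"]
    current = time.time() if now is None else now
    # Reject deliveries outside the replay window
    if abs(current - int(timestamp)) > tolerance:
        return False
    key = base64.b64decode(secret.removeprefix("whsec_"))
    signed = f"{msg_id}.{timestamp}.".encode() + body
    expected = base64.b64encode(hmac.new(key, signed, hashlib.sha256).digest())
    # The header may list several space-separated "v1,<signature>" entries
    for entry in headers["webhook-signature"].split():
        version, _, signature = entry.partition(",")
        if version == "v1" and hmac.compare_digest(signature.encode(), expected):
            return True
    return False
```

In a web framework, pass the raw request body bytes (not re-serialized JSON) and a header mapping with lowercase keys.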

These endpoints form the backbone of Sora integrations, and mastering their parameters gives finer control over outputs.

Key Parameters and Request Formats in Sora 2 Pro API

Parameters dictate video characteristics. The model parameter selects 'sora-2' for efficiency or 'sora-2-pro' for quality. Prompt strings describe scenes in detail, incorporating camera angles, actions, lighting, and dialogue.

Size specifies resolution, such as "1280x720" for landscape or "720x1280" for portrait. Sora 2 Pro also supports "1792x1024" and "1024x1792". Seconds must be one of the supported durations ("4", "8", or "12"); shorter clips tend to yield more reliable results.
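Because unsupported combinations surface only as 400 errors, a small client-side check can catch them before a request is sent. The tables below simply encode the sizes and durations listed in this article; validate_params is an illustrative helper, and the sets would need updating if OpenAI adds options.

```python
SUPPORTED_SIZES = {
    "sora-2": {"1280x720", "720x1280"},
    "sora-2-pro": {"1280x720", "720x1280", "1792x1024", "1024x1792"},
}
SUPPORTED_SECONDS = ("4", "8", "12")


def validate_params(model: str, size: str, seconds: str) -> None:
    """Raise ValueError if the model/size/seconds combination is not supported."""
    if model not in SUPPORTED_SIZES:
        raise ValueError(f"unknown model: {model}")
    if size not in SUPPORTED_SIZES[model]:
        raise ValueError(f"{model} does not support size {size}")
    if seconds not in SUPPORTED_SECONDS:
        raise ValueError(f"seconds must be one of {', '.join(SUPPORTED_SECONDS)}")
```

Calling validate_params(model, size, seconds) right before client.videos.create turns a round trip to the API into an immediate local error.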

The input_reference parameter uploads an image via multipart/form-data, and the image must match the resolution given in size. It anchors the first frame, which is useful for consistent branding.
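A mismatched reference image makes the request fail, so it can be worth checking dimensions locally first. This sketch reads the width and height from a PNG's IHDR header using only the standard library; it handles PNG only for brevity (a library such as Pillow would also cover JPEG and WEBP), and png_size and check_reference are hypothetical helper names.

```python
import struct
from typing import Tuple

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_size(data: bytes) -> Tuple[int, int]:
    """Read (width, height) from the IHDR chunk that follows the PNG signature."""
    if data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    width, height = struct.unpack(">II", data[16:24])
    return width, height


def check_reference(data: bytes, size: str) -> None:
    """Raise ValueError if the image does not match a size string like "1280x720"."""
    expected = tuple(int(n) for n in size.split("x"))
    actual = png_size(data)
    if actual != expected:
        raise ValueError(f"input_reference is {actual[0]}x{actual[1]}, but size is {size}")
```

For example, read start_frame.png in binary mode and call check_reference(data, "1280x720") before uploading it.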

Request formats vary: JSON for text-only, multipart for images. Headers include Authorization: Bearer {API_KEY} and Content-Type as needed.

Response formats consistently use JSON for metadata, with binary streams for content downloads. Errors return standard HTTP codes and messages, such as 400 for invalid parameters.

By adjusting these parameters, developers fine-tune generations. For example, combining high resolution with Sora 2 Pro maximizes fidelity, though it extends processing time.

The following examples put these parameters into practice.

Code Examples: Implementing Sora 2 Pro API in Python and JavaScript

Developers implement Sora 2 Pro API through SDKs. In Python, a basic creation looks like this:

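```python
from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment

# Start an asynchronous generation job; the call returns before the video is ready
response = client.videos.create(
    model="sora-2-pro",
    prompt="A futuristic cityscape at dusk with flying vehicles and neon lights reflecting on wet streets.",
    size="1792x1024",
    seconds="8",
)

print(response)
```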

Polling follows:

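```python
import time

video_id = response.id

# Poll until the job reaches a terminal state
while True:
    status = client.videos.retrieve(video_id)
    if status.status == "completed":
        break
    elif status.status == "failed":
        raise RuntimeError(f"Generation failed for {video_id}")
    time.sleep(10)  # wait between checks to stay within rate limits
```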

Downloading saves the file:

```python
# download_content returns a binary response object rather than raw bytes
content = client.videos.download_content(video_id)
content.write_to_file("output.mp4")
```

In JavaScript, using async/await:

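```javascript
import fs from 'node:fs';
import OpenAI from 'openai';

const openai = new OpenAI(); // reads OPENAI_API_KEY from the environment

async function generateVideo() {
  const video = await openai.videos.create({
    model: 'sora-2-pro',
    prompt: 'An ancient forest awakening at dawn, with mist rising and animals stirring.',
    size: '1024x1792',
    seconds: '12',
  });

  // Poll every 10 seconds while the job is still running
  let status = video.status;
  while (status === 'queued' || status === 'in_progress') {
    await new Promise((resolve) => setTimeout(resolve, 10000));
    const updated = await openai.videos.retrieve(video.id);
    status = updated.status;
  }

  if (status === 'completed') {
    // downloadContent resolves to a fetch-style Response; convert it to a Buffer
    const content = await openai.videos.downloadContent(video.id);
    const buffer = Buffer.from(await content.arrayBuffer());
    fs.writeFileSync('output.mp4', buffer);
  } else {
    throw new Error(`Generation ended with status: ${status}`);
  }
}

generateVideo().catch(console.error);
```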

For image references in cURL:

```bash
curl -X POST "https://api.openai.com/v1/videos" \
  -H "Authorization: Bearer $OPENAI_API_KEY" \
  -H "Content-Type: multipart/form-data" \
  -F model="sora-2-pro" \
  -F prompt="The character jumps over the obstacle and lands gracefully." \
  -F size="1280x720" \
  -F seconds="4" \
  -F input_reference="@start_frame.jpg;type=image/jpeg"
```

Remixing example:

```bash
curl -X POST "https://api.openai.com/v1/videos/$VIDEO_ID/remix" \
  -H "Authorization: Bearer $OPENAI_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{"prompt": "Change the background to a starry night sky."}'
```

These examples demonstrate core workflows. Developers extend them for batch processing or error handling.

As applications scale, pricing considerations become crucial.

API Pricing for Sora 2 and Sora 2 Pro: Cost Breakdown and Optimization

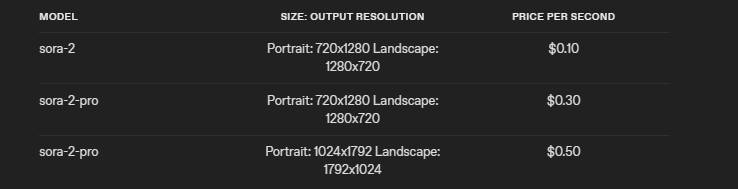

OpenAI prices Sora models per second of generated video, varying by model and resolution. Sora 2 costs $0.10 per second for 720p resolutions (1280x720 or 720x1280). Sora 2 Pro raises this to $0.30 per second for the same, and $0.50 per second for higher resolutions (1792x1024 or 1024x1792).

For a 12-second video at 720p using Sora 2, the cost totals $1.20. The same with Sora 2 Pro at high resolution reaches $6.00. Developers calculate expenses based on duration and volume.
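The arithmetic above generalizes to a small estimator. The rates are the per-second prices quoted in this section and will drift as OpenAI updates its pricing, so treat the table as a placeholder to refresh from the pricing page.

```python
# USD per second of output, as quoted above; refresh from OpenAI's pricing page
PRICE_PER_SECOND = {
    ("sora-2", "1280x720"): 0.10,
    ("sora-2", "720x1280"): 0.10,
    ("sora-2-pro", "1280x720"): 0.30,
    ("sora-2-pro", "720x1280"): 0.30,
    ("sora-2-pro", "1792x1024"): 0.50,
    ("sora-2-pro", "1024x1792"): 0.50,
}


def estimate_cost(model: str, size: str, seconds: str, count: int = 1) -> float:
    """Estimated USD cost for `count` clips of the given model, size, and duration."""
    rate = PRICE_PER_SECOND[(model, size)]
    return round(rate * int(seconds) * count, 2)
```

For instance, ten 4-second Sora 2 drafts (estimate_cost("sora-2", "1280x720", "4", count=10), $4.00) plus one 12-second high-resolution Sora 2 Pro final ($6.00) come to $10.00.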

Optimization strategies reduce costs. Use Sora 2 for drafts and switch to Sora 2 Pro for finals. Limit durations to essentials, and test prompts at lower resolutions. Batch short clips and stitch them post-generation.
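Stitching short clips after generation can be done with ffmpeg's concat demuxer. This sketch builds the list file contents and the command, then runs it; it assumes ffmpeg is installed and that all clips share the same model and size, which is what lets -c copy join them without re-encoding. The helper names are illustrative.

```python
import subprocess
from typing import List


def concat_list_text(clips: List[str]) -> str:
    """Return an ffmpeg concat-demuxer list with one quoted file entry per clip."""
    # Escape single quotes the way ffmpeg's quoting rules expect: ' becomes '\''
    return "".join("file '" + c.replace("'", "'\\''") + "'\n" for c in clips)


def ffmpeg_concat_cmd(list_path: str, output: str = "stitched.mp4") -> List[str]:
    """Build the ffmpeg command that joins the listed clips without re-encoding."""
    return ["ffmpeg", "-f", "concat", "-safe", "0", "-i", list_path, "-c", "copy", output]


def stitch(clips: List[str], list_path: str = "clips.txt", output: str = "stitched.mp4") -> None:
    """Write the list file and run ffmpeg; raises CalledProcessError on failure."""
    with open(list_path, "w") as f:
        f.write(concat_list_text(clips))
    subprocess.run(ffmpeg_concat_cmd(list_path, output), check=True)
```

Relative paths in the list are resolved against the list file's directory, so keeping clips.txt next to the clips avoids surprises.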

Rate limits tie into tiers: Tier 1 allows 1-2 RPM for Pro, scaling to 20 RPM in Tier 5. Higher tiers unlock after consistent usage and spending.
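To stay inside a tier's requests-per-minute budget, a client-side limiter can space out creation calls. This is a simple sliding-window sketch; the rpm value should come from your own dashboard rather than the figures above, and injecting clock and sleep keeps it testable.

```python
import time
from collections import deque
from typing import Callable, Deque


class MinuteRateLimiter:
    """Allow at most `rpm` acquisitions in any rolling 60-second window."""

    def __init__(self, rpm: int, clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.rpm = rpm
        self.clock = clock
        self.sleep = sleep
        self.sent: Deque[float] = deque()  # timestamps of recent requests

    def acquire(self) -> None:
        """Block until a request may be sent, then record it."""
        while True:
            now = self.clock()
            # Drop timestamps that have left the 60-second window
            while self.sent and now - self.sent[0] >= 60:
                self.sent.popleft()
            if len(self.sent) < self.rpm:
                self.sent.append(now)
                return
            # Sleep until the oldest request ages out of the window
            self.sleep(60 - (now - self.sent[0]))
```

Usage: create limiter = MinuteRateLimiter(rpm=2) once and call limiter.acquire() before each client.videos.create(...).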

By monitoring costs in the dashboard, developers maintain budgets. This awareness supports sustainable scaling.

Furthermore, effective prompting minimizes retries and waste.

Best Practices for Prompting in Sora 2 Pro API

Prompts drive output quality. Developers structure them with cinematography details: camera shots (e.g., wide angle), actions in beats, lighting (e.g., volumetric god rays), and palettes (3-5 colors).

Size and duration must be set through the size and seconds parameters; describing them in the prompt does not change the output. Use image inputs to control starting frames, making sure their resolution matches size.

For motion, describe simple, timed actions: "The bird flaps wings twice, then glides for three seconds." Dialogue blocks follow visuals: "Character: 'Hello world.'"
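These guidelines can be encoded in a small template so prompts stay consistent across a team. The section labels (Shot, Action, Lighting, Palette, Dialogue) are an illustrative layout, not a format the API requires, and build_prompt is a hypothetical helper.

```python
from typing import List, Sequence, Tuple


def build_prompt(shot: str, beats: Sequence[str], lighting: str,
                 palette: Sequence[str],
                 dialogue: Sequence[Tuple[str, str]] = ()) -> str:
    """Assemble a structured prompt: shot, timed beats, lighting, palette, dialogue."""
    if not 3 <= len(palette) <= 5:
        raise ValueError("palette should name 3-5 colors")
    lines: List[str] = [f"Shot: {shot}.", "Action:"]
    lines += [f"- {beat}" for beat in beats]
    lines.append(f"Lighting: {lighting}.")
    lines.append("Palette: " + ", ".join(palette) + ".")
    if dialogue:
        # Dialogue goes after the visual description
        lines.append("Dialogue:")
        lines += [f'- {speaker}: "{text}"' for speaker, text in dialogue]
    return "\n".join(lines)
```

Keeping each beat short and timed, as in the bird example, maps directly onto one list entry per beat.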

Iterate with remixes for small tweaks, since a remix preserves the original video's structure. Test variations as well: short prompts leave room for creativity, while detailed prompts give more precision.

Overly complex prompts are a common pitfall and lead to inconsistencies. Start simple, then add layers.

These practices yield reliable results. Integrating tools like Apidog streamlines testing.

Integrating Apidog with Sora 2 Pro API for Efficient Development

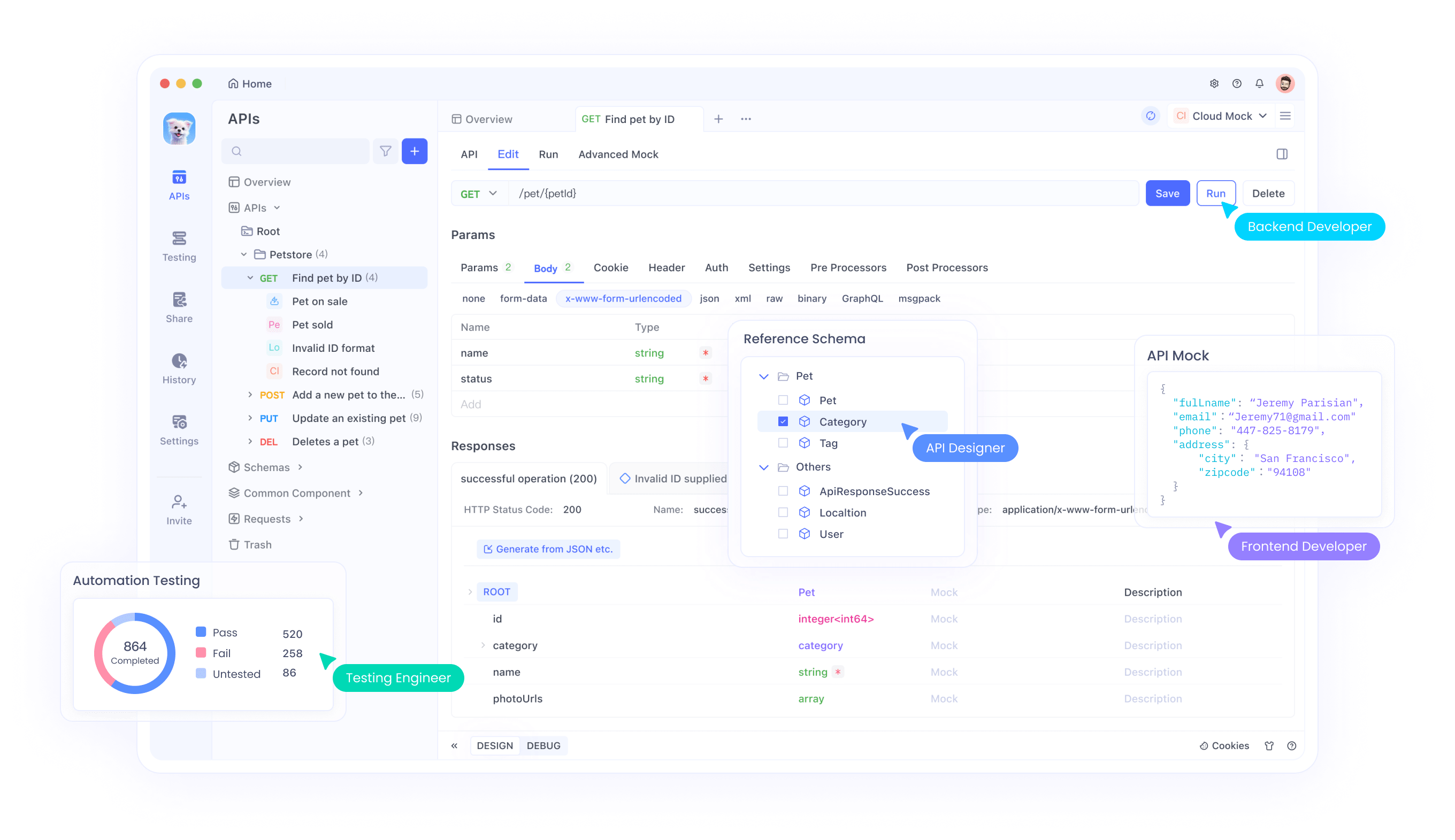

Apidog serves as an advanced API client, surpassing basic tools like Postman. Developers use it to mock endpoints, generate code, and debug Sora 2 Pro calls.

First, import OpenAI's API spec into Apidog. Create collections for video endpoints, setting variables for keys.

Apidog features AI enhancements for prompt generation and response validation. For Sora, chain requests: create, poll status, download.

Code generation exports Python or JS snippets directly from requests. This accelerates prototyping.

Moreover, Apidog's documentation tools create shareable guides for teams.

By incorporating Apidog, developers reduce setup time and focus on innovation.

Troubleshooting follows naturally.

Troubleshooting Common Issues in Sora 2 Pro API Usage

Issues arise from invalid parameters or policy violations. Status "failed" often stems from rejected prompts—check for prohibited content.

Rate limit errors (429) require backoff retries. Implement exponential delays in code.
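A minimal sketch of exponential backoff with full jitter follows. It is generic: is_retryable decides which exceptions warrant another attempt (with the Python SDK that could be lambda e: isinstance(e, openai.RateLimitError)), and the attempt count, base delay, and cap are arbitrary defaults. The SDK also retries some errors on its own, configurable through the client's max_retries option.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_backoff(call: Callable[[], T], is_retryable: Callable[[Exception], bool],
                 max_attempts: int = 5, base: float = 1.0, cap: float = 60.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Run call(), retrying retryable errors with exponentially growing, jittered delays."""
    for attempt in range(max_attempts):
        try:
            return call()
        except Exception as exc:
            # Give up on the last attempt or on errors that retrying will not fix
            if attempt == max_attempts - 1 or not is_retryable(exc):
                raise
            # Full jitter: wait a random time up to min(cap, base * 2^attempt)
            sleep(random.uniform(0, min(cap, base * 2 ** attempt)))
    raise ValueError("max_attempts must be at least 1")
```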

Incomplete generations signal network problems; verify connections.

For low-quality outputs, refine prompts with specifics. Requests also fail when an input_reference image's resolution does not match the requested size.

Logs in the OpenAI dashboard provide insights. Developers resolve most issues by aligning with docs.

This proactive approach maintains smooth operations.

Advanced Use Cases: Building Applications with Sora 2 Pro API

Developers build diverse apps. In marketing, generate personalized ads from user data. E-learning platforms create explanatory videos dynamically.

Games use Sora for procedural cutscenes. Social media tools remix user content.

Integrate with other OpenAI APIs: Use GPT to enhance prompts before Sora calls.
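One way to chain the two APIs is to ask a text model to expand a short idea into a shot-level prompt before calling Sora. This sketch assumes the Responses API (client.responses.create with instructions and input, reading output_text) and uses gpt-4.1-mini as a placeholder model name; the instruction text is likewise only an example.

```python
INSTRUCTIONS = (
    "Rewrite the user's idea as a video prompt for Sora. Describe the camera shot, "
    "two or three timed action beats, lighting, and a 3-5 color palette. "
    "Avoid real people and copyrighted characters. Return only the prompt."
)


def enhance_prompt(client, idea: str, model: str = "gpt-4.1-mini") -> str:
    """Expand a short idea into a detailed Sora prompt using a text model."""
    response = client.responses.create(
        model=model,
        instructions=INSTRUCTIONS,
        input=idea,
    )
    # output_text collects the text portions of the model's reply
    return response.output_text.strip()
```

Usage: with client = OpenAI(), pass enhance_prompt(client, "a lighthouse at dawn") as the prompt argument to client.videos.create. Taking the client as a parameter also makes the helper easy to test with a stub.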

Scale with queues and async processing. For high volume, employ webhooks for notifications.
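For high volume, a fixed pool of workers draining a queue keeps concurrency bounded. In this asyncio sketch, generate is a hypothetical coroutine that would wrap the create, poll, and download steps (for example with the SDK's AsyncOpenAI client); failures are recorded per prompt rather than stopping the batch.

```python
import asyncio
from typing import Awaitable, Callable, Dict, List


async def run_jobs(prompts: List[str], generate: Callable[[str], Awaitable[str]],
                   workers: int = 2) -> Dict[str, str]:
    """Process prompts with a fixed pool of workers pulling from an asyncio.Queue."""
    queue: asyncio.Queue = asyncio.Queue()
    for prompt in prompts:
        queue.put_nowait(prompt)
    results: Dict[str, str] = {}

    async def worker() -> None:
        while True:
            try:
                prompt = queue.get_nowait()
            except asyncio.QueueEmpty:
                return  # queue drained, worker exits
            try:
                results[prompt] = await generate(prompt)
            except Exception as exc:  # record the failure and keep going
                results[prompt] = f"error: {exc}"
            finally:
                queue.task_done()

    await asyncio.gather(*(worker() for _ in range(workers)))
    return results
```

Setting workers to your tier's concurrency budget keeps the batch from tripping rate limits.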

These cases showcase versatility. Security remains paramount.

Security and Compliance in Sora 2 Pro API Integrations

Developers secure keys with vaults and rotate them regularly. Comply with data policies, avoiding sensitive inputs.

Monitor for abuse via usage analytics. Ensure outputs suit audiences.

By adhering to guidelines, developers foster ethical use.

Wrapping up, Sora empowers creative tech.

Conclusion: Maximizing Value from Sora 2 Pro API

Sora 2 and Sora 2 Pro transform media creation. Developers harness them through structured APIs, optimized prompts, and tools like Apidog.

As technology evolves, staying updated ensures competitiveness. Experiment boldly, iterate wisely.