Running large language models (LLMs) locally empowers developers with privacy, control, and cost savings. OpenAI’s open-weight models, collectively known as GPT-OSS (gpt-oss-120b and gpt-oss-20b), offer powerful reasoning capabilities for tasks like coding, agentic workflows, and data analysis. With Ollama, an open-source platform, you can deploy these models on your own hardware without cloud dependencies. This technical guide walks you through installing Ollama, configuring GPT-OSS models, and debugging with Apidog, a tool that simplifies API testing for local LLMs.

Why Run GPT-OSS Locally with Ollama?

Running GPT-OSS locally using Ollama provides distinct advantages for developers and researchers. First, it ensures data privacy, as your inputs and outputs remain on your machine. Second, it eliminates recurring cloud API costs, making it ideal for high-volume or experimental use cases. Third, Ollama’s compatibility with OpenAI’s API structure allows seamless integration with existing tools, while its support for quantized models like gpt-oss-20b (requiring only 16GB memory) ensures accessibility on modest hardware.

Moreover, Ollama simplifies the complexities of LLM deployment. It packages model weights, configuration, and prompt templates behind a single Modelfile, much as a Dockerfile describes a container image. Pairing it with Apidog, which offers real-time visualization of streaming AI responses, gives you a robust toolchain for local AI development. Next, let’s explore the prerequisites for setting up this environment.

Prerequisites for Running GPT-OSS Locally

Before proceeding, ensure your system meets the following requirements:

- Hardware:

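- For gpt-oss-20b: Minimum 16GB of memory (system RAM or GPU VRAM). A GPU is recommended for speed, but a card with only a few GB of VRAM cannot hold the whole model, so part of it will run on the CPU.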

- For gpt-oss-120b: 80GB GPU memory (e.g., a single 80GB GPU or high-end data center setup).

- Free storage for model weights: roughly 14GB for gpt-oss-20b and roughly 65GB for gpt-oss-120b, plus some headroom for dependencies.

- Software:

- Operating System: Linux or macOS recommended; Windows supported with additional setup.

- Ollama: Download from ollama.com.

- Optional: Docker for running Open WebUI or Apidog for API testing.

- Internet: Stable connection for initial model downloads.

- Dependencies: NVIDIA/AMD GPU drivers if using GPU acceleration; CPU-only mode works but is slower.
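Before downloading anything, it can help to confirm that the target disk has room. The following is a minimal sketch using only the Python standard library; the 20 GiB budget and the helper names `free_gib` and `has_room_for` are illustrative assumptions, not part of Ollama.

```python
import shutil


def free_gib(path: str = ".") -> float:
    """Return free space on the filesystem that contains `path`, in GiB."""
    return shutil.disk_usage(path).free / 2**30


def has_room_for(required_gib: float, path: str = ".") -> bool:
    """True if at least `required_gib` GiB are free at `path`."""
    return free_gib(path) >= required_gib


if __name__ == "__main__":
    needed = 20.0  # assumed budget; adjust to the model you plan to pull
    print(f"{free_gib():.1f} GiB free; enough for {needed} GiB: {has_room_for(needed)}")
```

Point `path` at the drive where Ollama stores its models if that differs from your current directory.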

With these in place, you’re ready to install Ollama and deploy GPT-OSS. Let’s move to the installation process.

Step 1: Installing Ollama on Your System

Ollama’s installation is straightforward, supporting macOS, Linux, and Windows. Follow these steps to set it up:

Download Ollama:

- Visit ollama.com and download the installer for your OS.

- On Linux, you can instead use the terminal command (on macOS, use the downloaded app):

curl -fsSL https://ollama.com/install.sh | sh

This script automates the download and setup process.

Verify Installation:

- Run ollama --version in your terminal. You should see a version number. GPT-OSS needs a recent Ollama release, so update Ollama if the model later fails to load. If the command itself fails, check the Ollama GitHub for troubleshooting.

Start the Ollama Server:

- Execute ollama serve to launch the server, which listens on http://localhost:11434. Keep this terminal running or configure Ollama as a background service for continuous use.
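To confirm from a script that the server is up, you can query its version endpoint. This is a small sketch that assumes the default address http://localhost:11434 and uses Ollama's GET /api/version route; the function name `ollama_version` is made up for this example.

```python
import json
import urllib.error
import urllib.request


def ollama_version(base_url: str = "http://localhost:11434", timeout: float = 2.0):
    """Return the server's version string, or None if it cannot be reached."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/version", timeout=timeout) as resp:
            return json.load(resp).get("version")
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, or a non-JSON reply all count as "not up".
        return None


if __name__ == "__main__":
    version = ollama_version()
    print(f"Ollama {version} is running" if version else "Ollama server not reachable")
```

Returning None instead of raising keeps the check easy to use in startup scripts that wait for the server.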

Once installed, Ollama is ready to download and run GPT-OSS models. Let’s proceed to downloading the models.

Step 2: Downloading GPT-OSS Models

OpenAI’s GPT-OSS models (gpt-oss-120b and gpt-oss-20b) are available on Hugging Face and in the Ollama model library. Both ship with MXFP4 quantization, which reduces memory requirements. Follow these steps to download them:

Choose the Model:

- gpt-oss-20b: Ideal for desktops/laptops with 16GB RAM. It activates 3.6B parameters per token, suitable for edge devices.

- gpt-oss-120b: Designed for data centers or high-end GPUs with 80GB memory, activating 5.1B parameters per token.
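The choice between the two models can be expressed as a simple rule. The sketch below encodes only the thresholds stated above (16GB and 80GB); the function name `pick_model` is hypothetical, and the returned strings assume the tags used in the Ollama library (`gpt-oss:20b`, `gpt-oss:120b`).

```python
from typing import Optional


def pick_model(memory_gb: float) -> Optional[str]:
    """Suggest a GPT-OSS tag for the given amount of available memory in GB."""
    if memory_gb >= 80:
        return "gpt-oss:120b"  # data-center class GPU memory
    if memory_gb >= 16:
        return "gpt-oss:20b"   # typical desktop or laptop
    return None                # below the stated minimum for either model


if __name__ == "__main__":
    for gb in (8, 16, 80):
        print(gb, "GB ->", pick_model(gb))
```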

Download via Ollama:

- In your terminal, run:

ollama pull gpt-oss:20b

or

ollama pull gpt-oss:120b

Depending on your connection, the download (roughly 14GB for the 20b model and 65GB for the 120b model) may take a while. Ensure a stable internet connection.

Verify Download:

- List installed models with:

ollama list

Look for gpt-oss:20b or gpt-oss:120b in the NAME column.
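The same check can be done programmatically through the GET /api/tags endpoint, which returns the locally installed models as JSON. The sketch below assumes the default server address; the helper names `model_names` and `installed_models` are illustrative.

```python
import json
import urllib.error
import urllib.request


def model_names(tags_payload: dict) -> list[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    return [m["name"] for m in tags_payload.get("models", [])]


def installed_models(base_url: str = "http://localhost:11434", timeout: float = 2.0) -> list[str]:
    """Return installed model names, or an empty list if the server is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            return model_names(json.load(resp))
    except (urllib.error.URLError, OSError, ValueError):
        return []


if __name__ == "__main__":
    names = installed_models()
    print("Installed:", names or "none found (is the server running?)")
```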

With the model downloaded, you can now run it locally. Let’s explore how to interact with GPT-OSS.

Step 3: Running GPT-OSS Models with Ollama

Ollama provides multiple ways to interact with GPT-OSS models: command-line interface (CLI), API, or graphical interfaces like Open WebUI. Let’s start with the CLI for simplicity.

Launch an Interactive Session:

- Run:

ollama run gpt-oss:20b

This opens a real-time chat session. Type your query (e.g., “Write a Python function for binary search”) and press Enter. Use /help for special commands.

One-Off Queries:

- For quick responses without interactive mode, use:

ollama run gpt-oss:20b "Explain quantum computing in simple terms"

Adjust Parameters:

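- Modify model behavior with parameters like temperature (randomness) and top_p (nucleus sampling cutoff). ollama run does not accept these as command-line flags, so set them inside an interactive session before entering your prompt:

/set parameter temperature 0.1

/set parameter top_p 1.0

Then type a prompt such as “Write a factual summary of blockchain technology”. A lower temperature (e.g., 0.1) makes outputs more deterministic and focused, which suits technical tasks. The same parameters can also be set in a Modelfile or in the options field of an API request.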
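Sampling parameters can also be passed per request through the API's `options` field. The following sketch builds and sends a non-streaming POST /api/generate request with the standard library; the function names are hypothetical, and the default model tag `gpt-oss:20b` is an assumption you should replace with whatever `ollama list` shows.

```python
import json
import urllib.request


def build_generate_request(prompt: str, model: str = "gpt-oss:20b",
                           temperature: float = 0.1, top_p: float = 1.0,
                           base_url: str = "http://localhost:11434") -> urllib.request.Request:
    """Build a POST /api/generate request with explicit sampling options."""
    body = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for one JSON object instead of a token stream
        "options": {"temperature": temperature, "top_p": top_p},
    }
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, **kwargs) -> str:
    """Send the request and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_generate_request(prompt, **kwargs)) as resp:
        return json.load(resp)["response"]
```

With the server running, `print(generate("Write a factual summary of blockchain technology"))` sends the prompt with a temperature of 0.1.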

Next, let’s customize the model’s behavior using Modelfiles for specific use cases.

Step 4: Customizing GPT-OSS with Ollama Modelfiles

Ollama’s Modelfiles allow you to tailor GPT-OSS behavior without retraining. You can set a system prompt, adjust the context size, or change sampling parameters. Here’s how to create a custom model:

Create a Modelfile:

- Create a file named Modelfile with:

FROM gpt-oss:20b

SYSTEM "You are a technical assistant specializing in Python programming. Provide concise, accurate code with comments."

PARAMETER temperature 0.5

PARAMETER num_ctx 4096

This configures the model as a Python-focused assistant with moderate creativity and a 4k token context window.

Build the Custom Model:

- Navigate to the directory containing the Modelfile and run:

ollama create python-gpt-oss -f Modelfile

Run the Custom Model:

- Launch it with:

ollama run python-gpt-oss

Now, the model prioritizes Python-related responses with the specified behavior.
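If you maintain several custom variants, generating the Modelfile text from code can reduce copy-paste mistakes. This is a sketch only: `render_modelfile` is a hypothetical helper, and it assumes the system prompt contains no triple quotes, since it wraps the prompt in the Modelfile's triple-quoted string form.

```python
def render_modelfile(base: str, system: str, **parameters) -> str:
    """Render Modelfile text with FROM, SYSTEM, and PARAMETER instructions."""
    lines = [f"FROM {base}", f'SYSTEM """{system}"""']
    # Each keyword argument becomes one PARAMETER line, in the order given.
    lines += [f"PARAMETER {name} {value}" for name, value in parameters.items()]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    text = render_modelfile(
        "gpt-oss:20b",
        "You are a technical assistant specializing in Python programming.",
        temperature=0.5,
        num_ctx=4096,
    )
    print(text)
```

Write the returned text to a file named Modelfile, then build it with the ollama create command shown in this step.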

This customization enhances GPT-OSS for specific domains, such as coding or technical documentation. Now, let’s integrate the model into applications using Ollama’s API.

Step 5: Integrating GPT-OSS with Ollama’s API

Ollama’s API, running on http://localhost:11434, enables programmatic access to GPT-OSS. This is ideal for developers building AI-powered applications. Here’s how to use it:

API Endpoints:

- POST /api/generate: Generates text for a single prompt. Example:

curl http://localhost:11434/api/generate -H "Content-Type: application/json" -d '{"model": "gpt-oss:20b", "prompt": "Write a Python script for a REST API"}'

- POST /api/chat: Supports conversational interactions with message history:

curl http://localhost:11434/api/chat -H "Content-Type: application/json" -d '{"model": "gpt-oss:20b", "messages": [{"role": "user", "content": "Explain neural networks"}]}'

- POST /api/embeddings: Generates vector embeddings for semantic tasks like search or classification. This endpoint is normally used with a dedicated embedding model rather than with GPT-OSS.
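The /api/chat endpoint is stateless, so the client has to resend the conversation history on every turn. The sketch below shows one way to keep that history; `ChatSession` is a hypothetical helper class, and the model tag `gpt-oss:20b` and the default address are assumptions.

```python
import json
import urllib.request


class ChatSession:
    """Keeps message history and sends it to POST /api/chat on each turn."""

    def __init__(self, model: str = "gpt-oss:20b", base_url: str = "http://localhost:11434"):
        self.model = model
        self.url = f"{base_url}/api/chat"
        self.messages: list[dict] = []

    def payload(self, user_text: str) -> dict:
        """Request body: all previous messages plus the new user message."""
        return {
            "model": self.model,
            "stream": False,
            "messages": self.messages + [{"role": "user", "content": user_text}],
        }

    def record(self, user_text: str, reply: str) -> None:
        """Store one completed exchange in the history."""
        self.messages.append({"role": "user", "content": user_text})
        self.messages.append({"role": "assistant", "content": reply})

    def ask(self, user_text: str) -> str:
        """Send one turn to the server and return the assistant's reply."""
        req = urllib.request.Request(
            self.url,
            data=json.dumps(self.payload(user_text)).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(req) as resp:
            reply = json.load(resp)["message"]["content"]
        self.record(user_text, reply)
        return reply
```

With the server running, calling `ask` twice on the same `ChatSession` lets the second question refer back to the first answer.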

OpenAI Compatibility:

- Ollama supports OpenAI’s Chat Completions API format. Use Python with the OpenAI library:

# Requires the OpenAI Python package: pip install openai
from openai import OpenAI

# The client requires an api_key value, but Ollama ignores it.
client = OpenAI(base_url="http://localhost:11434/v1", api_key="ollama")

response = client.chat.completions.create(
    model="gpt-oss:20b",
    messages=[{"role": "user", "content": "What is machine learning?"}],
)

print(response.choices[0].message.content)

This API integration allows GPT-OSS to power chatbots, code generators, or data analysis tools. However, debugging streaming responses can be challenging. Let’s see how Apidog simplifies this.

Step 6: Debugging GPT-OSS with Apidog

Apidog is a powerful API testing tool that visualizes streaming responses from Ollama’s endpoints, making it easier to debug GPT-OSS outputs. Here’s how to use it:

Install Apidog:

- Download Apidog from apidog.com and install it on your system.

Configure Ollama API in Apidog:

- Create a new API request in Apidog.

- Set the method to POST and the URL to http://localhost:11434/api/generate.

- Use a JSON body like:

{
  "model": "gpt-oss:20b",
  "prompt": "Generate a Python function for sorting",
  "stream": true
}

Visualize Responses:

- Apidog merges streamed tokens into a readable format, unlike raw JSON outputs. This helps identify formatting issues or logical errors in the model’s reasoning.

- Use Apidog’s reasoning analysis to inspect GPT-OSS’s step-by-step thought process, especially for complex tasks like coding or problem-solving.
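To see what merging a stream involves, note that a streaming /api/generate response is a sequence of JSON objects, one per line, each carrying a fragment in its `response` field until a final object with `"done": true`. The sketch below joins those fragments; it illustrates the idea and is not a description of how Apidog is implemented. The name `merge_stream` is made up for this example.

```python
import json
from typing import Iterable


def merge_stream(lines: Iterable[bytes]) -> str:
    """Join the `response` fragments of a streamed /api/generate reply."""
    parts = []
    for raw in lines:
        if not raw.strip():
            continue  # skip blank keep-alive lines
        chunk = json.loads(raw)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the final object marks the end of the stream
    return "".join(parts)


if __name__ == "__main__":
    demo = [
        b'{"response": "def ", "done": false}\n',
        b'{"response": "sort_items", "done": false}\n',
        b'{"response": "", "done": true}\n',
    ]
    print(merge_stream(demo))
```

Because an HTTP response object from `urllib.request.urlopen` yields lines when iterated, it can be passed to `merge_stream` directly.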

Comparative Testing:

- Create prompt collections in Apidog to test how different parameters (e.g., temperature, top-p) affect GPT-OSS outputs. This ensures optimal model performance for your use case.
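A parameter sweep like this can also be prepared in code and then imported into your testing tool or sent directly to the API. The sketch below builds one /api/generate request body per combination of temperature and top_p; `parameter_grid` is a hypothetical helper, and the model tag is an assumption.

```python
import itertools


def parameter_grid(prompt: str, temperatures, top_ps, model: str = "gpt-oss:20b") -> list[dict]:
    """Return one /api/generate request body per (temperature, top_p) pair."""
    return [
        {
            "model": model,
            "prompt": prompt,
            "stream": False,
            "options": {"temperature": t, "top_p": p},
        }
        for t, p in itertools.product(temperatures, top_ps)
    ]


if __name__ == "__main__":
    for body in parameter_grid("Summarize TCP in two sentences", [0.1, 0.8], [0.9, 1.0]):
        print(body["options"])
```

Keeping the prompt fixed while only the options change makes differences in the outputs easier to attribute to the parameters.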

Apidog’s visualization transforms debugging from a tedious task into a clear, actionable process, enhancing your development workflow. Now, let’s address common issues you might encounter.

Step 7: Troubleshooting Common Issues

Running GPT-OSS locally may present challenges. Here are solutions to frequent problems:

GPU Memory Error:

- Issue: gpt-oss-120b fails due to insufficient GPU memory.

- Solution: Switch to gpt-oss-20b or ensure your system has an 80GB GPU. Check memory usage with nvidia-smi.
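If you want the memory figures in a script rather than on screen, nvidia-smi can emit them as CSV. The sketch below calls it with the `--query-gpu` and `--format` options and parses the result; the helper names are illustrative, values are in MiB, and the function returns an empty list on machines without nvidia-smi.

```python
import subprocess

# Ask nvidia-smi for used and total memory per GPU, as bare numbers in MiB.
QUERY = ["nvidia-smi", "--query-gpu=memory.used,memory.total", "--format=csv,noheader,nounits"]


def parse_gpu_memory(csv_text: str) -> list[tuple[int, int]]:
    """Parse lines like '1024, 81920' into (used_mib, total_mib) tuples."""
    rows = []
    for line in csv_text.strip().splitlines():
        try:
            used, total = (int(field) for field in line.split(","))
        except ValueError:
            continue  # skip rows the driver reports as unavailable
        rows.append((used, total))
    return rows


def gpu_memory() -> list[tuple[int, int]]:
    """Return per-GPU memory usage, or [] if nvidia-smi is missing or fails."""
    try:
        out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    except (FileNotFoundError, subprocess.CalledProcessError):
        return []
    return parse_gpu_memory(out)


if __name__ == "__main__":
    for index, (used, total) in enumerate(gpu_memory()):
        print(f"GPU {index}: {used} / {total} MiB used")
```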

Model Won’t Start:

- Issue: ollama run fails with an error.

- Solution: Verify the model is downloaded (ollama list) and the Ollama server is running (ollama serve). Check logs in ~/.ollama/logs; on Linux installs managed by systemd, use journalctl -u ollama instead.

API Doesn’t Respond:

- Issue: API requests to localhost:11434 fail.

- Solution: Ensure ollama serve is active and port 11434 is open. Use netstat -tuln | grep 11434 to confirm.
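On systems without netstat, a plain TCP connection attempt gives the same answer. This is a minimal standard-library sketch; `port_is_open` is a hypothetical helper name, and the default host and port match the address used throughout this guide.

```python
import socket


def port_is_open(host: str = "localhost", port: int = 11434, timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and name resolution failures.
        return False


if __name__ == "__main__":
    state = "open" if port_is_open() else "closed or unreachable"
    print(f"Port 11434 is {state}")
```

An open port only shows that some process is listening; it does not prove that the process is Ollama.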

Slow Performance:

- Issue: CPU-based inference is sluggish.

- Solution: Enable GPU acceleration with proper drivers or use a smaller model like gpt-oss-20b.

For persistent issues, consult the Ollama GitHub or Hugging Face community for GPT-OSS support.

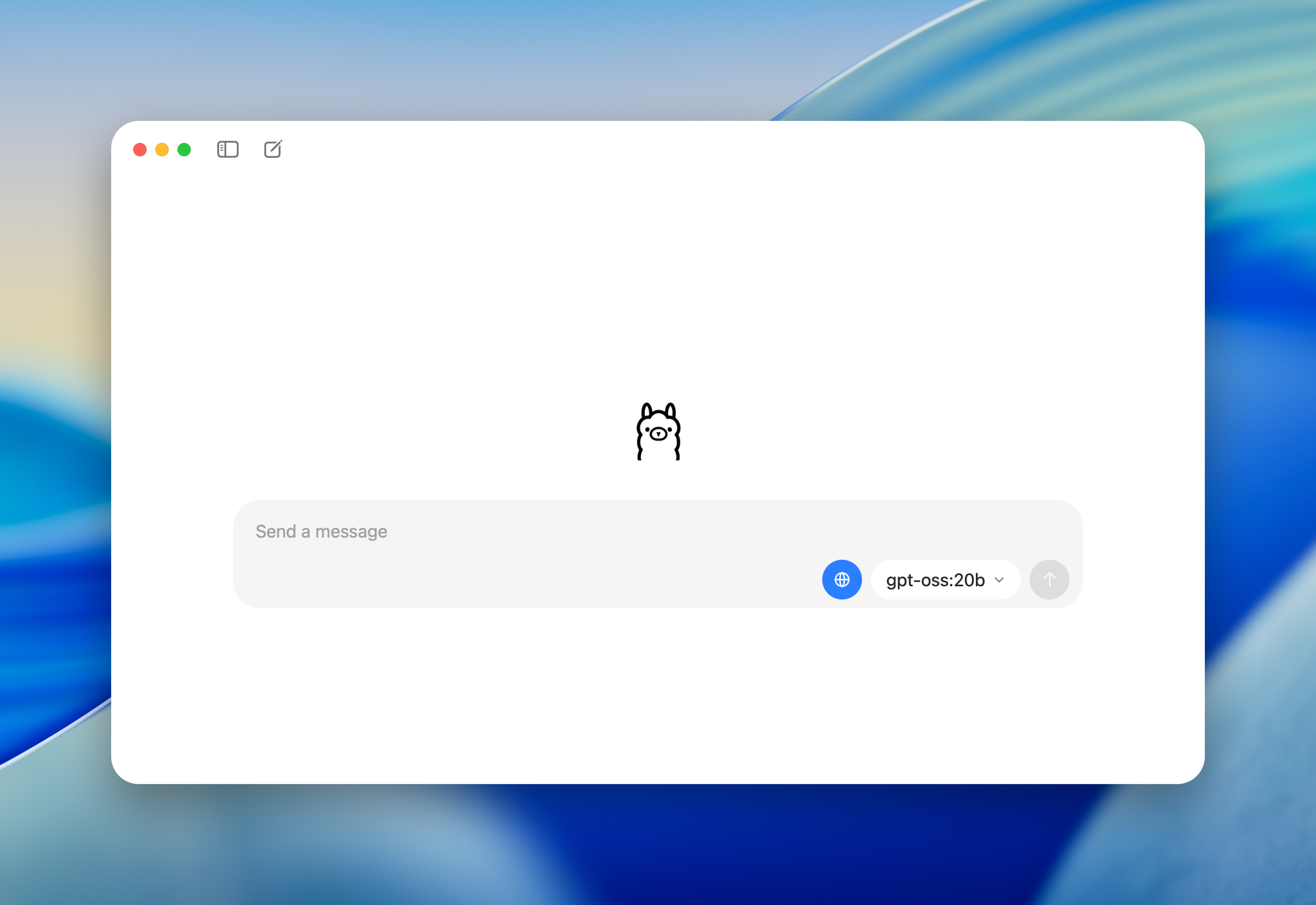

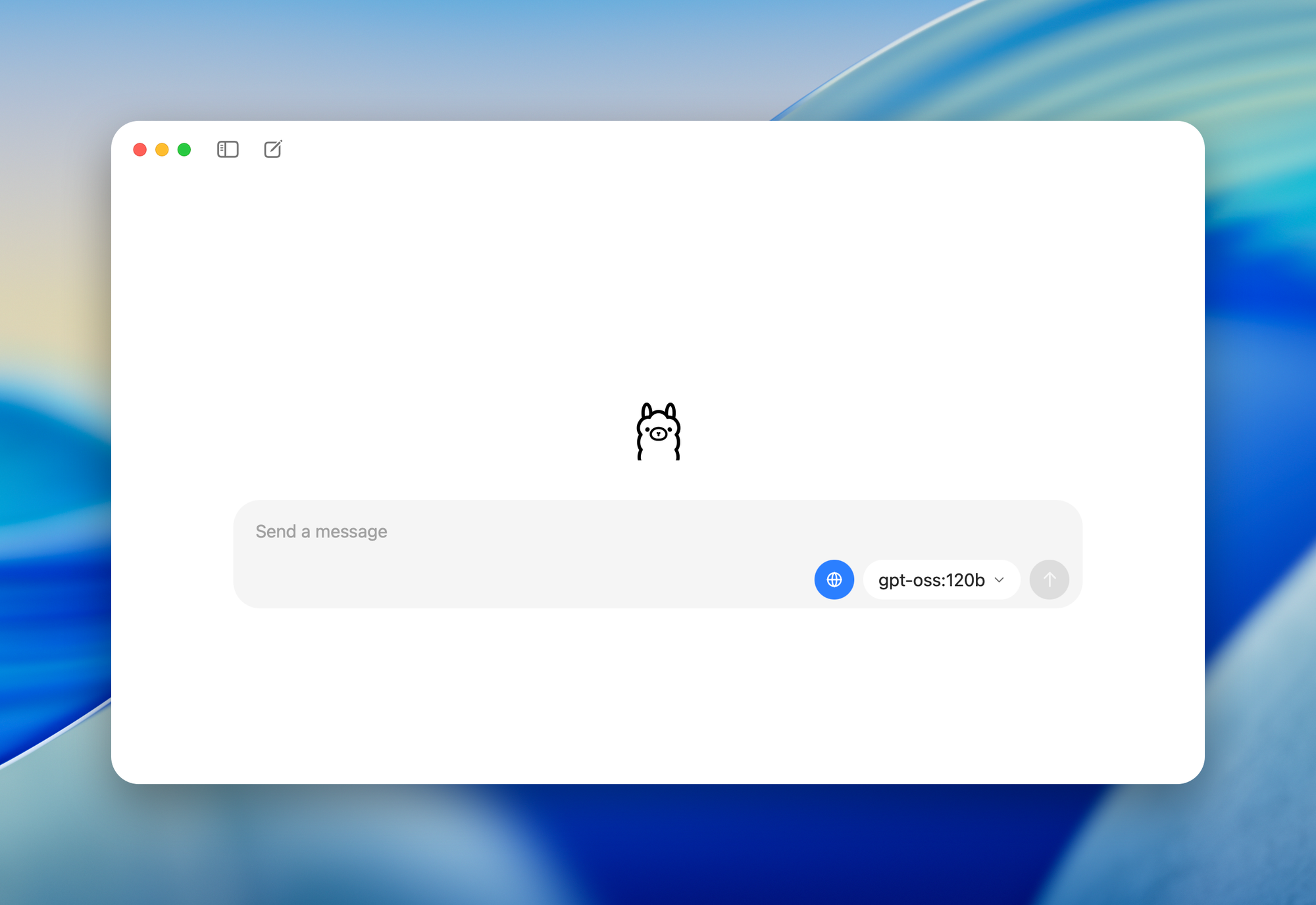

Step 8: Enhancing GPT-OSS with Open WebUI

For a user-friendly interface, pair Ollama with Open WebUI, a browser-based dashboard for GPT-OSS:

Install Open WebUI:

- Use Docker:

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui ghcr.io/open-webui/open-webui:main

Access the Interface:

- Open http://localhost:3000 in your browser.

- Select gpt-oss:20b or gpt-oss:120b and start chatting. Features include chat history, prompt storage, and model switching.

Document Uploads:

- Upload files for context-aware responses (e.g., code reviews or data analysis) using Retrieval-Augmented Generation (RAG).

Open WebUI simplifies interaction for non-technical users, complementing Apidog’s technical debugging capabilities.

Conclusion: Unleashing GPT-OSS with Ollama and Apidog

Running GPT-OSS locally with Ollama empowers you to harness OpenAI’s open-weight models for free, with full control over privacy and customization. By following this guide, you’ve learned to install Ollama, download GPT-OSS models, customize behavior, integrate via API, and debug with Apidog. Whether you’re building AI-powered applications or experimenting with reasoning tasks, this setup offers unmatched flexibility. Small tweaks, like adjusting parameters or using Apidog’s visualization, can significantly enhance your workflow. Start exploring local AI today and unlock the potential of GPT-OSS!