Running large language models (LLMs) locally offers unmatched privacy, control, and cost-efficiency. Google’s Gemma 3 QAT (Quantization-Aware Training) models, optimized for consumer GPUs, pair seamlessly with Ollama, a lightweight platform for deploying LLMs. This technical guide walks you through setting up and running Gemma 3 QAT with Ollama, leveraging its API for integration, and testing with Apidog, a superior alternative to traditional API testing tools. Whether you’re a developer or AI enthusiast, this step-by-step tutorial ensures you harness Gemma 3 QAT’s multimodal capabilities efficiently.

Why Run Gemma 3 QAT with Ollama?

Gemma 3 QAT models, available in 1B, 4B, 12B, and 27B parameter sizes, are designed for efficiency. Rather than quantizing a finished model after the fact, QAT simulates low-precision arithmetic during training, so the resulting 4-bit weights use far less memory (e.g., ~15GB for 27B on MLX) while staying close to the quality of the full-precision model. This makes them ideal for local deployment on modest hardware. Ollama simplifies the process by packaging model weights, configurations, and dependencies into a user-friendly format. Together, they offer:

- Privacy: Keep sensitive data on your device.

- Cost Savings: Avoid recurring cloud API fees.

- Flexibility: Customize and integrate with local applications.
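To see why 4-bit weights matter for local deployment, a back-of-the-envelope estimate helps. The sketch below assumes weight memory is simply parameter count times bits per weight. It ignores the KV cache, activations, and runtime overhead, so real usage is higher. The function name is illustrative, not part of any library.

```python
def estimate_weight_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate memory for the model weights alone, in gigabytes (10^9 bytes)."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Compare 16-bit and 4-bit storage for the four Gemma 3 sizes.
    for size in (1, 4, 12, 27):
        fp16 = estimate_weight_gb(size, 16)
        int4 = estimate_weight_gb(size, 4)
        print(f"{size}B: ~{fp16:.1f} GB at 16-bit, ~{int4:.1f} GB at 4-bit")
```

For the 27B model this gives about 13.5 GB of weights at 4 bits, which is in line with the ~15GB figure once overhead is added.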

Moreover, Apidog enhances API testing, providing a visual interface to monitor Ollama’s API responses, surpassing tools like Postman in ease of use and real-time debugging.

Prerequisites for Running Gemma 3 QAT with Ollama

Before starting, ensure your setup meets these requirements:

- Hardware: A GPU-enabled computer (NVIDIA preferred) or a strong CPU. Smaller models (1B, 4B) run on less powerful devices, while 27B demands significant resources.

- Operating System: macOS, Windows, or Linux.

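- Storage: Sufficient space for model downloads. Sizes grow with parameter count: the 4B QAT model used in this guide is a ~3.3GB download, while the 27B variant needs well over 10GB. The tags page on ollama.com lists the exact size of each variant.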

- Basic Command-Line Skills: Familiarity with terminal commands.

- Internet Connection: Needed initially to download Ollama and Gemma 3 QAT models.

Additionally, install Apidog to test API interactions. Its streamlined interface makes it a better choice than manual curl commands or complex tools.

Step-by-Step Guide to Install Ollama and Gemma 3 QAT

Step 1: Install Ollama

Ollama is the backbone of this setup. Follow these steps to install it:

Download Ollama:

- Visit ollama.com/download.

- Choose the installer for your OS (macOS, Windows, or Linux).

- For Linux, run:

curl -fsSL https://ollama.com/install.sh | sh

Verify Installation:

- Open a terminal and run:

ollama --version

- Ensure you’re using version 0.6.0 or higher, as older versions may not support Gemma 3 QAT. Upgrade if needed via your package manager (e.g., Homebrew on macOS).
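The version check can also be scripted. The following is a minimal sketch that assumes the command prints a line containing a MAJOR.MINOR.PATCH number (for example "ollama version is 0.6.5"); the helper names are hypothetical and the 0.6.0 threshold comes from this guide.

```python
import re


def parse_version(text: str) -> tuple[int, int, int]:
    """Extract the first MAJOR.MINOR.PATCH triple found in the text."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"no version number found in: {text!r}")
    return tuple(int(part) for part in match.groups())


def supports_gemma3_qat(version_output: str, minimum=(0, 6, 0)) -> bool:
    """Compare the parsed version against the minimum named in this guide."""
    return parse_version(version_output) >= minimum


if __name__ == "__main__":
    import subprocess

    # Requires the ollama binary on PATH.
    output = subprocess.run(
        ["ollama", "--version"], capture_output=True, text=True, check=True
    ).stdout
    verdict = "OK" if supports_gemma3_qat(output) else "upgrade needed"
    print(output.strip(), "->", verdict)
```

Comparing integer tuples rather than strings avoids the trap where "0.10.1" sorts before "0.6.0" alphabetically.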

Start the Ollama Server:

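- Launch the server if it is not already running (the desktop apps and the Linux install script typically start it for you in the background):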

ollama serve

- The server runs on localhost:11434 by default, enabling API interactions.
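Before sending API requests, it can help to confirm that something is actually listening on that port. This is a rough sketch using only the standard library; it assumes the server answers a plain GET on its root URL with status 200, and the function name is illustrative.

```python
import urllib.request


def is_server_up(base_url: str = "http://localhost:11434", timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers at base_url with status 200."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # Covers connection refused, timeouts, and urllib's URLError/HTTPError.
        return False


if __name__ == "__main__":
    print("Ollama reachable:", is_server_up())
```

A False result usually means the server has not been started or is bound to a different address.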

Step 2: Pull Gemma 3 QAT Models

Gemma 3 QAT models are available in multiple sizes. Check the full list at ollama.com/library/gemma3/tags. For this guide, we’ll use the 4B QAT model for its balance of performance and resource efficiency.

Download the Model:

- In a new terminal, run:

ollama pull gemma3:4b-it-qat

- This downloads the 4-bit quantized 4B model (~3.3GB). Expect the process to take a few minutes, depending on your internet speed.

Verify the Download:

- List available models:

ollama list

- You should see gemma3:4b-it-qat in the output, confirming the model is ready.
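The same check is available over HTTP, which is handy inside scripts. The sketch below assumes a running server and relies on the /api/tags endpoint, which returns a JSON object with a "models" array; the helper names are hypothetical.

```python
import json
import urllib.request


def installed_model_names(tags_payload: dict) -> list[str]:
    """Pull the model names out of a decoded /api/tags response."""
    return [entry["name"] for entry in tags_payload.get("models", [])]


def fetch_tags(base_url: str = "http://localhost:11434") -> dict:
    """Ask a running Ollama server which models it has stored locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return json.load(resp)


if __name__ == "__main__":
    names = installed_model_names(fetch_tags())
    print("gemma3:4b-it-qat installed:", "gemma3:4b-it-qat" in names)
```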

Step 3: Optimize for Performance (Optional)

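The QAT models are already quantized to 4 bits, so no extra quantization step is needed, and Ollama has no optimize subcommand. On resource-constrained devices, the practical options are:

- Pull a smaller variant:

ollama pull gemma3:1b-it-qat

- Keep the context length modest, since a longer context increases memory use on top of the model weights.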

Running Gemma 3 QAT: Interactive Mode and API Integration

Now that Ollama and Gemma 3 QAT are set up, explore two ways to interact with the model: interactive mode and API integration.

Interactive Mode: Chatting with Gemma 3 QAT

Ollama’s interactive mode lets you query Gemma 3 QAT directly from the terminal, ideal for quick tests.

Start Interactive Mode:

- Run:

ollama run gemma3:4b-it-qat

- This loads the model and opens a prompt.

Test the Model:

- Type a query, e.g., “Explain recursion in programming.”

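- Gemma 3 QAT responds with a detailed, context-aware answer. The 4B model supports a context window of up to 128K tokens, although Ollama applies a much smaller default context length unless you raise the num_ctx parameter.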

Multimodal Capabilities:

- For vision tasks, provide an image path:

ollama run gemma3:4b-it-qat "Describe this image: /path/to/image.png"

- The model processes the image and returns a description, showcasing its multimodal prowess.
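Over the API, images are not passed as file paths. The sketch below assumes the /api/generate endpoint's "images" field, which takes a list of base64-encoded strings; the function name is illustrative and the image path is the same placeholder used in the command above.

```python
import base64
import json


def build_vision_payload(model: str, prompt: str, image_bytes: bytes) -> dict:
    """Build a /api/generate body with one image attached as base64 text."""
    return {
        "model": model,
        "prompt": prompt,
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,
    }


if __name__ == "__main__":
    # "/path/to/image.png" is a placeholder; substitute a real file.
    with open("/path/to/image.png", "rb") as f:
        payload = build_vision_payload(
            "gemma3:4b-it-qat", "Describe this image.", f.read()
        )
    print(json.dumps(payload)[:120], "...")
```

The resulting dictionary can be sent as the JSON body of a POST to http://localhost:11434/api/generate.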

API Integration: Building Applications with Gemma 3 QAT

For developers, Ollama’s API enables seamless integration into applications. Use Apidog to test and optimize these interactions.

Start the Ollama API Server:

- If not already running, execute:

ollama serve

Send API Requests:

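- Use a curl command to test. Setting "stream" to false returns a single JSON object instead of the default stream of chunks:

curl http://localhost:11434/api/generate -d '{"model": "gemma3:4b-it-qat", "prompt": "What is the capital of France?", "stream": false}'

- The response is a JSON object whose "response" field holds Gemma 3 QAT’s output, alongside metadata such as timing and token counts, e.g., {"response": "The capital of France is Paris.", ...}.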

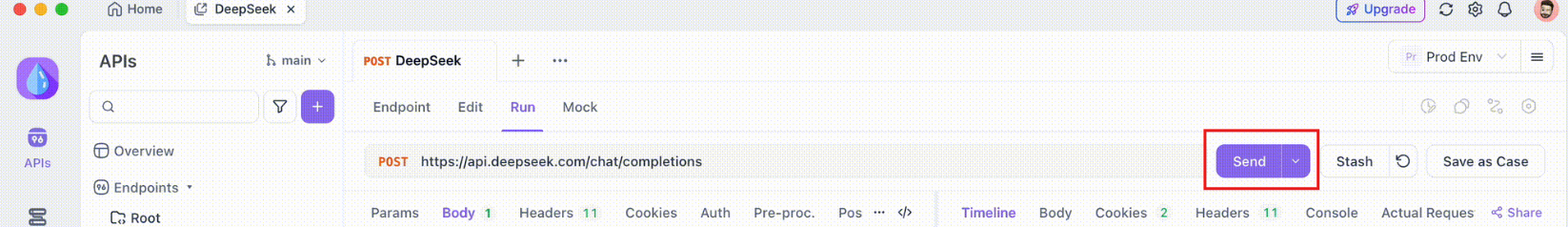

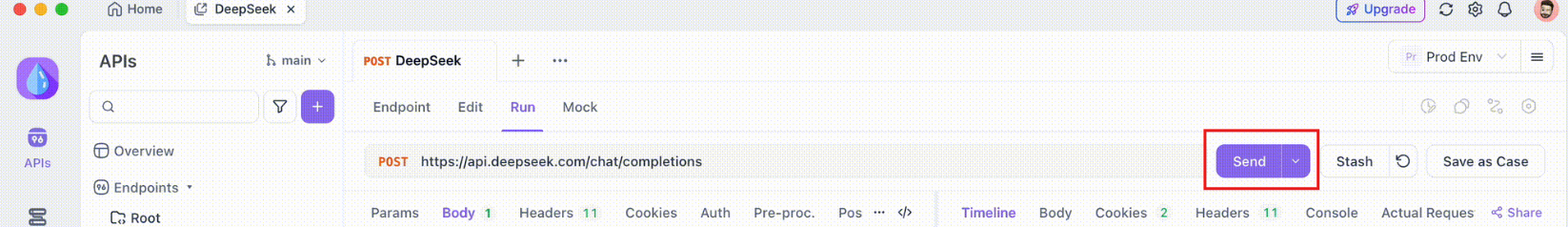
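The same request can be issued from Python with only the standard library. This is a minimal sketch, assuming the server is running on the default port; it sets "stream" to false so the reply arrives as one JSON object, and the helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Prepare a non-streaming POST to /api/generate."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        OLLAMA_URL,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the request and return only the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)["response"]


if __name__ == "__main__":
    print(generate("gemma3:4b-it-qat", "What is the capital of France?"))
```

Separating request construction from sending keeps the payload easy to inspect and compare against what a tool like Apidog sends.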

Test with Apidog:

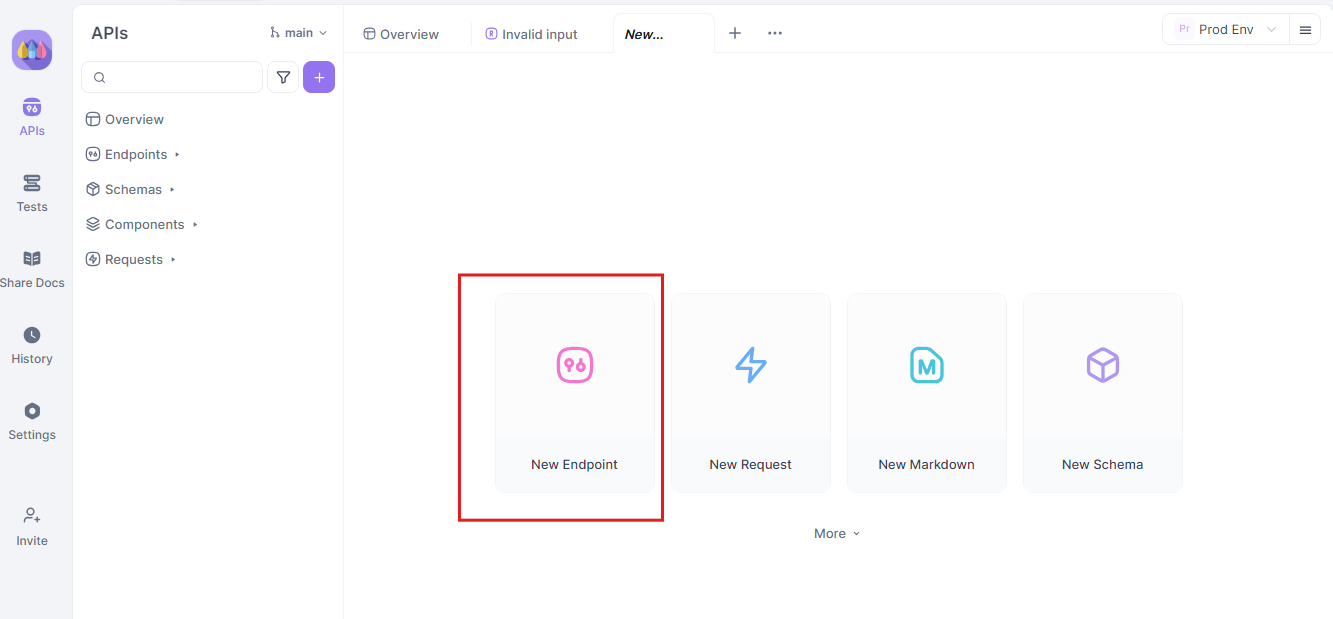

- Open Apidog (available from apidog.com).

- Create a new API request:

  - Method: POST

  - Endpoint: http://localhost:11434/api/generate

  - Payload:

{
  "model": "gemma3:4b-it-qat",
  "prompt": "Explain the theory of relativity."
}

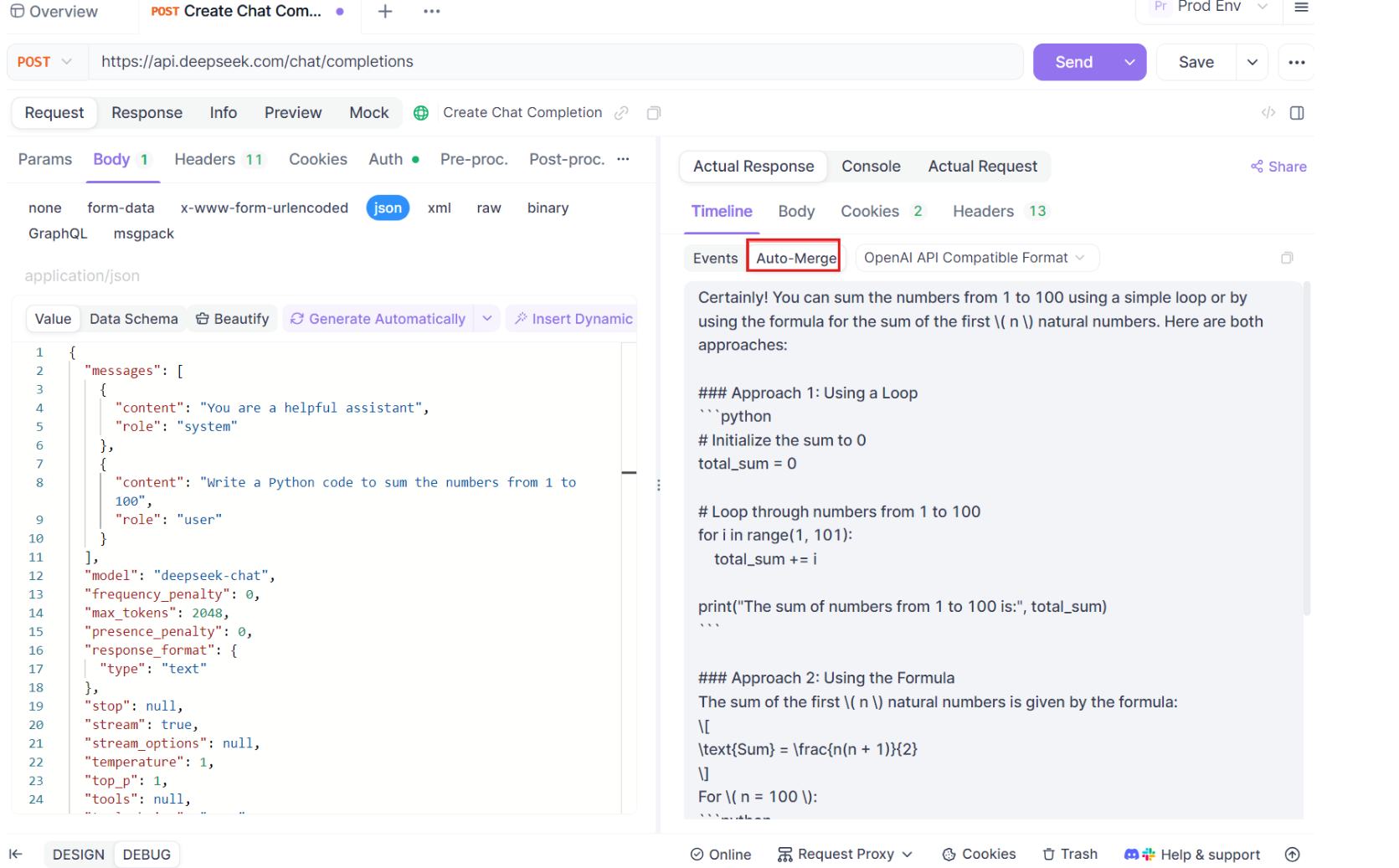

- Send the request and monitor the response in Apidog’s real-time timeline.

- Use Apidog’s JSONPath extraction to parse responses automatically, a feature that outshines tools like Postman.

Streaming Responses:

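- For real-time applications, use streaming. The /api/generate endpoint streams by default, and setting "stream" to true makes the choice explicit: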

curl http://localhost:11434/api/generate -d '{"model": "gemma3:4b-it-qat", "prompt": "Write a poem about AI.", "stream": true}'

- Apidog’s Auto-Merge feature consolidates streamed messages, simplifying debugging.
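When consuming the stream in your own code, the merging has to be done by hand. The sketch below assumes the streaming format is one JSON object per line, each carrying a "response" fragment and a "done" flag that is true on the final line; the function name is illustrative.

```python
import json
from typing import Iterable


def merge_stream(lines: Iterable[bytes]) -> str:
    """Concatenate the "response" fragments from a stream of JSON lines."""
    parts = []
    for raw in lines:
        if not raw.strip():
            continue  # skip blank lines
        chunk = json.loads(raw)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the final chunk carries done=true
    return "".join(parts)


if __name__ == "__main__":
    import urllib.request

    body = json.dumps(
        {"model": "gemma3:4b-it-qat", "prompt": "Write a poem about AI.", "stream": True}
    ).encode("utf-8")
    request = urllib.request.Request(
        "http://localhost:11434/api/generate", data=body, method="POST"
    )
    # An HTTP response object can be iterated line by line.
    with urllib.request.urlopen(request, timeout=120) as resp:
        print(merge_stream(resp))
```

A real-time interface would print each fragment as it arrives instead of joining them at the end.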

Building a Python Application with Ollama and Gemma 3 QAT

To demonstrate practical use, here’s a Python script that integrates Gemma 3 QAT via Ollama’s API. This script uses the ollama-python library for simplicity.

Install the Library:

pip install ollama

Create the Script:

import ollama

def query_gemma(prompt):
    # Send a single user message and return the text of the model's reply.
    response = ollama.chat(
        model="gemma3:4b-it-qat",
        messages=[{"role": "user", "content": prompt}]
    )
    return response["message"]["content"]

# Example usage
prompt = "What are the benefits of running LLMs locally?"
print(query_gemma(prompt))

Run the Script:

- Save as gemma_app.py and execute:

python gemma_app.py

- The script queries Gemma 3 QAT and prints the response.
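The script above sends a single message, so the model has no memory between calls. One way to support follow-up questions is to resend the accumulated history on every turn. The sketch below is an assumption-laden illustration: the Conversation class is hypothetical, and the chat function is passed in (ollama.chat in real use) so the logic can be exercised without a server.

```python
from typing import Callable


class Conversation:
    """Accumulates chat messages so each request carries the earlier turns."""

    def __init__(self, model: str, chat_fn: Callable[..., dict]):
        self.model = model
        self.chat_fn = chat_fn  # e.g. ollama.chat
        self.messages: list[dict] = []

    def ask(self, prompt: str) -> str:
        self.messages.append({"role": "user", "content": prompt})
        response = self.chat_fn(model=self.model, messages=self.messages)
        answer = response["message"]["content"]
        # Store the reply so the next turn sees the whole exchange.
        self.messages.append({"role": "assistant", "content": answer})
        return answer


if __name__ == "__main__":
    import ollama  # requires `pip install ollama` and a running server

    chat = Conversation("gemma3:4b-it-qat", ollama.chat)
    print(chat.ask("What is recursion?"))
    print(chat.ask("Show a one-line example in Python."))
```

Because the history grows with every turn, long conversations eventually need trimming or summarizing to stay within the configured context length.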

Test with Apidog:

- Replicate the API call in Apidog to verify the script’s output.

- Use Apidog’s visual interface to tweak payloads and monitor performance, ensuring robust integration.
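Performance can also be checked in code. A completed (non-streaming or final streamed) response includes the fields eval_count, the number of generated tokens, and eval_duration, the generation time in nanoseconds. The sketch below derives tokens per second from those two fields; the function name is illustrative.

```python
def tokens_per_second(response: dict) -> float:
    """Generation speed computed from eval_count and eval_duration."""
    eval_count = response["eval_count"]  # tokens generated
    eval_duration_ns = response["eval_duration"]  # nanoseconds
    if eval_duration_ns <= 0:
        raise ValueError("eval_duration must be positive")
    return eval_count / (eval_duration_ns / 1e9)


if __name__ == "__main__":
    # Hand-written sample values, not a measured result.
    sample = {"eval_count": 100, "eval_duration": 2_000_000_000}
    print(f"{tokens_per_second(sample):.1f} tokens/s")
```

Tracking this number before and after a change, such as switching model size, gives a simple way to compare configurations on the same machine.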

Troubleshooting Common Issues

Despite Ollama’s simplicity, issues may arise. Here are solutions:

- Model Not Found:

- Ensure you pulled the model:

ollama pull gemma3:4b-it-qat

- Memory Issues:

- Close other applications or use a smaller model (e.g., 1B).

- Slow Responses:

- Check whether the loaded model is running on the GPU or has fallen back to the CPU, and switch to a smaller model if it does not fit in GPU memory:

ollama ps

- API Errors:

- Verify the Ollama server is running on localhost:11434.

- Use Apidog to debug API requests, leveraging its real-time monitoring to pinpoint issues.

For persistent problems, consult the Ollama community or Apidog’s support resources.

Advanced Tips for Optimizing Gemma 3 QAT

To maximize performance:

Use GPU Acceleration:

- Confirm that the NVIDIA driver sees your GPU:

nvidia-smi

- If the GPU is not listed, update the NVIDIA driver. Once a model is loaded, ollama ps shows whether it is running on the GPU or the CPU.

Customize Models:

- Create a Modelfile to adjust parameters:

FROM gemma3:4b-it-qat

PARAMETER temperature 1

SYSTEM "You are a technical assistant."

- Apply it:

ollama create custom-gemma -f Modelfile
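If you prefer not to create a separate model, similar settings can be supplied per request. The sketch below assumes the "system" and "options" fields of the /api/generate request body and mirrors the Modelfile values shown above; the function name is hypothetical.

```python
import json


def build_custom_payload(prompt: str) -> dict:
    """Mirror the Modelfile settings as per-request fields."""
    return {
        "model": "gemma3:4b-it-qat",
        "prompt": prompt,
        "system": "You are a technical assistant.",
        "options": {"temperature": 1},
        "stream": False,
    }


if __name__ == "__main__":
    print(json.dumps(build_custom_payload("Explain mutexes."), indent=2))
```

A Modelfile suits settings you want baked in for every caller, while per-request fields are easier to vary during experimentation.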

Scale with Cloud:

- For enterprise use, deploy Gemma 3 QAT on Google Cloud’s GKE with Ollama, scaling resources as needed.

Why Apidog Stands Out

While tools like Postman are popular, Apidog offers distinct advantages:

- Visual Interface: Simplifies endpoint and payload configuration.

- Real-Time Monitoring: Tracks API performance instantly.

- Auto-Merge for Streaming: Consolidates streamed responses, ideal for Ollama’s API.

- JSONPath Extraction: Automates response parsing, saving time.

Download Apidog for free at apidog.com to elevate your Gemma 3 QAT projects.

Conclusion

Running Gemma 3 QAT with Ollama empowers developers to deploy powerful, multimodal LLMs locally. By following this guide, you’ve installed Ollama, downloaded Gemma 3 QAT, and integrated it via interactive mode and API. Apidog enhances the process, offering a superior platform for testing and optimizing API interactions. Whether building applications or experimenting with AI, this setup delivers privacy, efficiency, and flexibility. Start exploring Gemma 3 QAT today, and leverage Apidog to streamline your workflow.