Developers seek tools that enhance productivity while maintaining control over their workflows. Devstral, an open-source AI model from Mistral AI, emerges as a powerful solution for coding tasks. Designed to generate, debug, and explain code, Devstral stands out for its ability to run locally via Ollama, a platform that deploys AI models on your hardware. This approach delivers privacy, reduces latency, and eliminates cloud costs, all key benefits for technical users. Moreover, it supports offline use, ensuring uninterrupted coding sessions.

Why choose local deployment? First, it safeguards sensitive codebases, critical in regulated sectors like finance or healthcare. Second, it cuts response times by bypassing internet delays, ideal for real-time assistance. Third, it saves money by avoiding subscription fees, broadening access for solo developers. Ready to harness Devstral?

Setting Up Ollama: Step-by-Step Installation

To run Devstral locally, you first install Ollama. This platform simplifies AI model deployment, making it accessible even on modest hardware. Follow these steps to get started:

System Requirements

Ensure your machine meets these specs:

- OS: Windows, macOS, or Linux

- RAM: Minimum 16GB (32GB preferred for larger models)

- CPU: Modern multi-core processor

- GPU: Optional but recommended (NVIDIA with CUDA support)

- Storage: At least 20GB free for model files
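Part of the checklist above can be automated. The following is a minimal sketch using only the Python standard library; the function names are hypothetical, the 20GB threshold is taken from the list, and RAM is left out because the standard library has no portable way to read it.

```python
import os
import shutil

REQUIRED_FREE_GB = 20  # storage figure from the list above


def free_disk_gb(path: str = ".") -> float:
    """Return free space on the filesystem holding `path`, in GB (10^9 bytes)."""
    return shutil.disk_usage(path).free / 1e9


def has_enough_disk(free_gb: float, required_gb: float = REQUIRED_FREE_GB) -> bool:
    """True when the free space meets the storage requirement."""
    return free_gb >= required_gb


def report(path: str = ".") -> str:
    """Build a one-line summary of CPU cores and free disk space."""
    cores = os.cpu_count() or 1  # cpu_count() may return None
    free_gb = free_disk_gb(path)
    verdict = "OK" if has_enough_disk(free_gb) else "too little free space"
    return f"{cores} CPU cores, {free_gb:.1f} GB free: {verdict}"


print(report())
```

Pass the drive or directory where the model files will be stored as `path` if it differs from the current directory.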

Installation Process

- Download Ollama: Visit ollama.com and grab the installer for your OS.

- Run the Installer:

  - On Windows, execute the `.exe` and follow prompts.

  - On macOS, open the `.dmg` and drag Ollama to Applications.

  - On Linux, use `curl -fsSL https://ollama.com/install.sh | sh` for a quick setup.

- Verify Installation: Open a terminal and type `ollama --version`. You should see the version number (e.g., 0.1.x). If not, check your PATH variable.
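The verification step can also be scripted, for example in a setup check for a team. This is a sketch under assumptions: the helper names are hypothetical, and because the exact wording of the CLI output is not guaranteed, the code only looks for the first dotted version number it finds.

```python
import re
import shutil
import subprocess
from typing import Optional


def parse_version(output: str) -> Optional[str]:
    """Extract the first dotted version number (three numeric parts) from text."""
    match = re.search(r"\d+\.\d+\.\d+", output)
    return match.group(0) if match else None


def installed_ollama_version() -> Optional[str]:
    """Return the Ollama version, or None if the binary is missing or fails."""
    if shutil.which("ollama") is None:
        return None  # not installed, or its directory is not on PATH
    try:
        result = subprocess.run(
            ["ollama", "--version"], capture_output=True, text=True, timeout=10
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    # Search both streams in case the version is printed alongside warnings.
    return parse_version(result.stdout + result.stderr)


version = installed_ollama_version()
print(version or "ollama not found on PATH")
```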

Fetching Devstral

With Ollama installed, pull Devstral from its library:

- Run `ollama pull devstral`. This downloads the model, which may take time based on your bandwidth (expect 10-15GB).

- Confirm availability with `ollama list`. Devstral should appear in the output.
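If you want to confirm the model from a script rather than by eye, the listing can be parsed. The sketch below assumes the output is a header row followed by one row per model with the name in the first column; the sample text is illustrative, not captured from a real run, and the function names are hypothetical.

```python
from typing import List


def model_names(list_output: str) -> List[str]:
    """Return the first column of each row, skipping the header line."""
    rows = [line.split() for line in list_output.splitlines() if line.strip()]
    return [row[0] for row in rows[1:]]  # rows[0] is assumed to be the header


def has_model(list_output: str, name: str) -> bool:
    """True if `name` matches a listed model, with or without a ':tag' suffix."""
    return any(
        entry == name or entry.split(":")[0] == name
        for entry in model_names(list_output)
    )


# Illustrative text only; IDs, sizes, and dates are made up.
sample = """NAME              ID      SIZE    MODIFIED
devstral:latest   abc123  14 GB   2 days ago
"""
print(has_model(sample, "devstral"))  # True
```

In practice the text would come from running `ollama list` and capturing its standard output.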

Launching Devstral

Execute `ollama run devstral`. The terminal displays a loading message, followed by a prompt indicating readiness. If errors occur (e.g., insufficient memory), verify your hardware or consult Ollama’s troubleshooting docs.

By completing these steps, you establish a local Devstral instance, primed for coding tasks.

Using Devstral for Coding: Practical Applications

Devstral excels at coding, leveraging its training on vast code datasets. Here’s how to use it:

Code Generation

Need a function fast? Type a clear prompt:

- Input: “Generate a Python function to reverse a string.”

- Output:

    def reverse_string(text):
        return text[::-1]

This uses Python’s slicing, showcasing Devstral’s efficiency.
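To make the slicing explicit: `text[::-1]` omits the start and stop and uses a step of -1, so the string is read from its last character to its first. The sketch below checks the generated function against a more verbose equivalent; `reverse_string_explicit` is a hypothetical name added for comparison.

```python
def reverse_string(text: str) -> str:
    """Reverse a string with an extended slice: start and stop omitted, step -1."""
    return text[::-1]


def reverse_string_explicit(text: str) -> str:
    """Same result, spelled out with reversed() and join() for comparison."""
    return "".join(reversed(text))


# Both versions should agree, including on empty and one-character strings.
for sample in ["hello", "", "a", "racecar"]:
    assert reverse_string(sample) == reverse_string_explicit(sample)

print(reverse_string("hello"))  # olleh
```

Both versions reverse code points, so text that contains combining characters may not display as expected after reversal.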

Debugging Support

Stuck on a bug? Provide your code and issue:

- Input: “Why does this JavaScript fetch return undefined? `fetch('https://api.example.com').then(data => console.log(data));`”

- Output: “You’re missing `.json()` to parse the response. Try: `fetch('https://api.example.com').then(res => res.json()).then(data => console.log(data));`”

Devstral pinpoints the fix, saving debugging time.

Code Completion

Start a function, and let Devstral finish:

- Input: “Complete this Python function: `def factorial(n):`”

- Output:

    def factorial(n):
        if n == 0 or n == 1:
            return 1
        return n * factorial(n - 1)

This recursive solution demonstrates Devstral’s grasp of algorithms.
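Generated code is worth reviewing before use. The recursive version above never reaches its base case for a negative `n`, and it can exceed Python's recursion limit for large inputs. One possible hardening, offered as a sketch rather than as what Devstral itself would produce, is an iterative variant with input checks:

```python
def factorial(n: int) -> int:
    """Iterative factorial that rejects negative and non-integer input."""
    # bool is a subclass of int, so it is excluded explicitly.
    if not isinstance(n, int) or isinstance(n, bool):
        raise TypeError("n must be an integer")
    if n < 0:
        raise ValueError("n must be non-negative")
    result = 1
    for factor in range(2, n + 1):
        result *= factor
    return result


print(factorial(5))  # 120
```

A follow-up prompt such as "handle negative input and avoid recursion" is a reasonable way to ask the model for this kind of revision.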

Learning New Concepts

Exploring a language? Ask for explanations:

- Input: “Show me how to create a class in C++.”

- Output:

    #include <iostream>

    class MyClass {
    public:
        int value;
        MyClass(int v) : value(v) {}
        void print() { std::cout << value << std::endl; }
    };

Devstral pairs code with implicit context, aiding comprehension.

Interact via the terminal after launching `ollama run devstral`. For advanced use, Ollama also serves a local REST API (by default at http://localhost:11434); check Ollama’s docs for the endpoints.

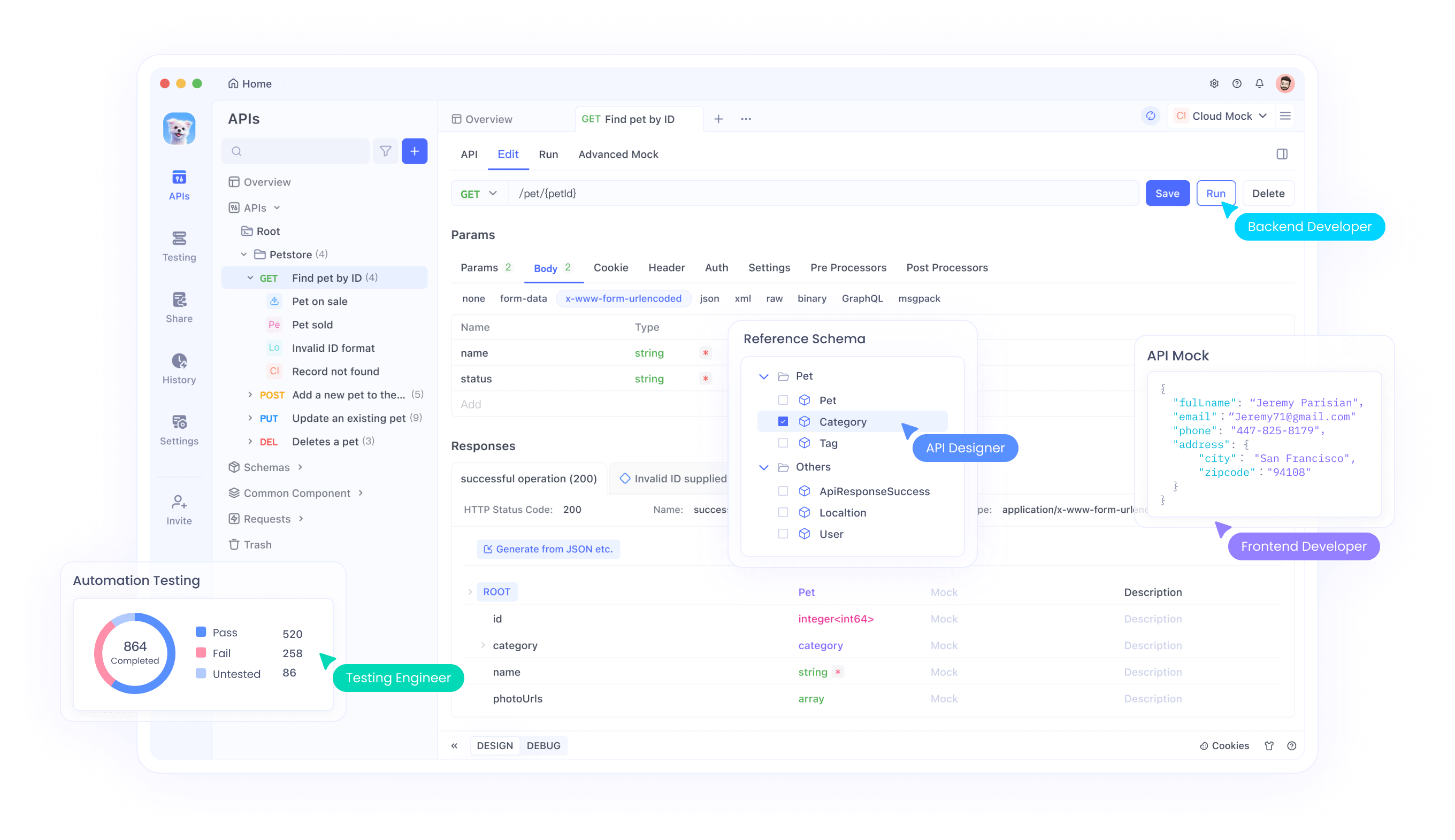

Enhancing Workflow with Apidog: API Testing Integration

While Devstral handles coding, Apidog ensures your APIs perform reliably. This tool streamlines API development, complementing Devstral’s capabilities.

Testing APIs

Validate endpoints with Apidog:

- Launch Apidog and create a project.

- Define an endpoint (e.g., `GET /users`).

- Set parameters and run tests. Check for 200 status and valid JSON.
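The two checks in the last step, a 200 status and a parseable JSON body, can be written down precisely. The sketch below is plain Python, not Apidog's own scripting interface, and `check_response` is a hypothetical helper name.

```python
import json
from typing import Tuple


def check_response(status: int, body: str) -> Tuple[bool, str]:
    """Apply the two checks from the steps above: HTTP 200 and parseable JSON."""
    if status != 200:
        return False, f"expected status 200, got {status}"
    try:
        json.loads(body)
    except json.JSONDecodeError as exc:
        return False, f"invalid JSON: {exc.msg}"
    return True, "ok"


print(check_response(200, '[{"id": 1, "name": "Test"}]'))
print(check_response(404, "Not Found"))
```

The same logic applies whichever tool runs the request: fail fast on the status code, then validate the body.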

Mock Servers

Simulate APIs during development:

- In Apidog, access the mock server tab.

- Specify responses (e.g., `{ "id": 1, "name": "Test" }`).

- Use the generated URL in your code, testing without live servers.
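For the last step to work smoothly, client code should take the server address as a parameter so that the mock URL and the live URL are interchangeable. The sketch below illustrates that design; the `/users/1` path, the `.invalid` host, and all function names are assumptions, and a stand-in function replaces the network call so the example runs offline.

```python
import json
from typing import Callable
from urllib.request import urlopen


def http_get(url: str) -> str:
    """Fetch a URL and return the body as text."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8")


def get_user_name(base_url: str, fetch: Callable[[str], str] = http_get) -> str:
    """Read the "name" field from <base_url>/users/1 (hypothetical path)."""
    body = fetch(base_url.rstrip("/") + "/users/1")
    return json.loads(body)["name"]


def fake_fetch(url: str) -> str:
    """Stand-in for the mock server, returning the sample response from above."""
    return json.dumps({"id": 1, "name": "Test"})


print(get_user_name("https://mock.example.invalid", fetch=fake_fetch))  # Test
```

With a real mock server, drop the `fetch` argument and pass the URL that Apidog generates as `base_url`.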

API Documentation

Generate docs automatically:

- Build test cases in Apidog.

- Export documentation as HTML or Markdown for team sharing.

Integrating Apidog ensures your APIs align with Devstral-generated code, creating a robust pipeline.

Advanced Usage: Customizing Devstral

Maximize Devstral’s potential with these techniques:

Parameter Tuning

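Adjust settings like temperature (randomness) or top-p (output diversity) through Ollama: with `/set parameter` in an interactive session, a `PARAMETER` line in a Modelfile, or the `options` field of an API request. Test values to balance creativity and precision.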
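As an illustration of the API route, sampling settings travel in an `options` object inside the request body. The sketch below only builds that body; the default values 0.2 and 0.9 are arbitrary starting points, and `build_payload` is a hypothetical helper.

```python
import json
from typing import Any, Dict


def build_payload(
    prompt: str, temperature: float = 0.2, top_p: float = 0.9
) -> Dict[str, Any]:
    """Build a request body with sampling options for Ollama's generate endpoint."""
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if not 0 < top_p <= 1:
        raise ValueError("top_p must be in (0, 1]")
    return {
        "model": "devstral",
        "prompt": prompt,
        "stream": False,  # ask for one JSON object instead of a stream
        "options": {"temperature": temperature, "top_p": top_p},
    }


# Lower temperature for repeatable code, higher for more varied suggestions.
precise = build_payload("Write a binary search in Python.", temperature=0.1)
creative = build_payload("Suggest names for a logging library.", temperature=0.9)
print(json.dumps(precise, indent=2))
```

Comparing the output of `precise` and `creative` requests on the same prompt is a quick way to see what each setting does for your tasks.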

IDE Integration

Seek Ollama-compatible plugins for VS Code or JetBrains IDEs. This embeds Devstral directly into your editor, enhancing workflow.

API Utilization

Ollama serves a local HTTP API (port 11434 by default), so you can craft scripts to automate tasks. Example: a Python script sending prompts to Devstral via HTTP requests.
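A minimal sketch of such a script follows, using only the standard library. It assumes Ollama is running on its default local address and that the model was pulled under the name `devstral`; the helper names are hypothetical.

```python
import json
from typing import Any, Dict
from urllib.request import Request, urlopen

OLLAMA_URL = "http://localhost:11434/api/generate"  # default local address


def build_request(prompt: str, model: str = "devstral") -> Request:
    """Create a POST request carrying a non-streaming generate call."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return Request(
        OLLAMA_URL,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_text(raw: bytes) -> str:
    """Pull the generated text out of a non-streaming response body."""
    reply: Dict[str, Any] = json.loads(raw)
    return reply["response"]


def ask(prompt: str, model: str = "devstral") -> str:
    """Send one prompt and return the model's answer (needs a running server)."""
    with urlopen(build_request(prompt, model), timeout=300) as response:
        return extract_text(response.read())
```

With the server running, `print(ask("Generate a Python function to reverse a string."))` sends a single prompt. Looping `ask` over a list of prompts or source files is a simple way to batch work.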

Community Engagement

Track updates on mistral.ai or Ollama’s forums. Contribute fixes or share use cases to shape development.

These steps tailor Devstral to your needs, boosting efficiency.

Technical Background: Under the Hood

Devstral and Ollama combine cutting-edge tech:

Devstral Architecture

Mistral AI built Devstral as a transformer-based LLM, trained on code and text. Its multi-language support stems from extensive datasets, enabling precise code generation.

Ollama Framework

Ollama optimizes models for local execution, supporting CPU and GPU acceleration. It handles model loading, memory management, and inference, abstracting complexity for users.
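To get a feel for why memory matters here, the size of a model's weights can be estimated as parameters times bits per weight, divided by eight. The sketch below applies that arithmetic; the 24 billion parameter count is an assumption made for illustration, and real usage is higher because of the context cache and runtime overhead.

```python
def weight_memory_gb(parameters: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GB (10^9 bytes)."""
    return parameters * bits_per_weight / 8 / 1e9


# Assumed figure for illustration: 24 billion parameters at three precisions.
for bits in (16, 8, 4):
    print(f"{bits}-bit: ~{weight_memory_gb(24e9, bits):.0f} GB")
```

Under these assumptions, a 4-bit quantized build lands close to the download size quoted earlier, which is why lower-precision weights are what make local execution practical on a 16GB to 32GB machine.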

This synergy delivers high-performance AI without cloud dependency.

Conclusion

Running Devstral locally with Ollama gives developers a private, cost-effective, and offline-capable coding tool. Setup takes a handful of commands, the model handles diverse coding tasks, and Apidog’s API testing rounds out the workflow. Join the Devstral community, experiment with customizations, and elevate your skills. Download Apidog free today to complete your toolkit.