Unlocking advanced natural language processing on your own hardware is now easier than ever. If you want to build powerful Retrieval-Augmented Generation (RAG) systems, search engines, or document analysis pipelines—while maintaining privacy and control—this guide walks you through running Alibaba Cloud’s Qwen3 embedding and reranker models locally using Ollama.

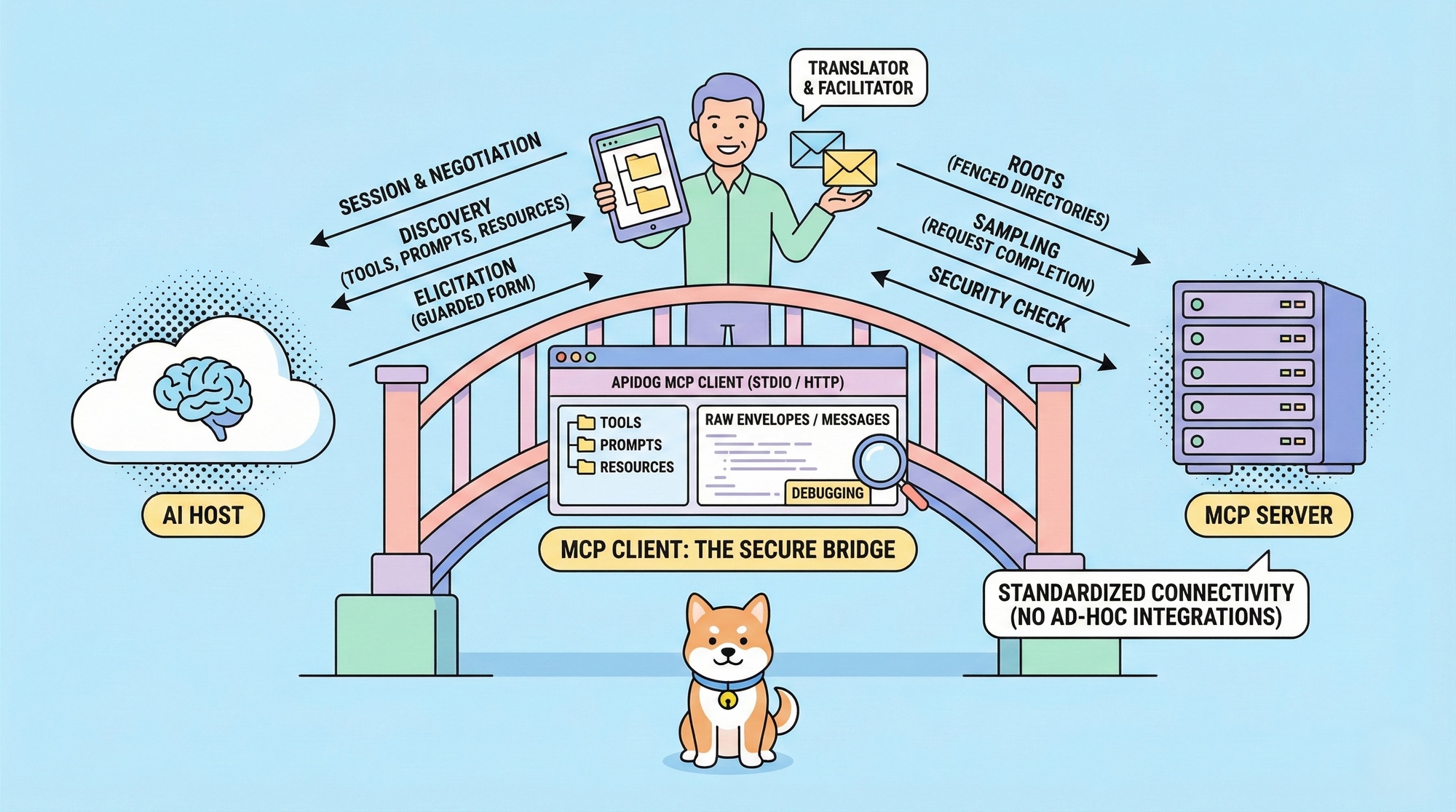

💡 Looking for an all-in-one API testing solution that generates beautiful API documentation and helps your developer team collaborate more productively? Apidog is built for backend and API professionals, offering seamless integration and a more affordable alternative to Postman.

Why Run LLMs Locally?

For API developers and backend teams, cloud-based large language models (LLMs) often mean trade-offs: ongoing costs, privacy concerns, and limited customization. Running models locally addresses these challenges:

- Data never leaves your infrastructure

- One-time hardware investment with no recurring cloud fees

- Full control for custom workflows and integration

Open-source models like Alibaba Cloud’s Qwen3 family, paired with the lightweight Ollama runtime, now make this approach accessible—even for laptops and modest servers.

Core Concepts: Embedding and Reranker Models in RAG

Before getting hands-on, it helps to understand how embedding and reranker models work together in a RAG system.

Embedding Models: Fast Semantic Search

Think of an embedding model as your intelligent indexer. It transforms text—be it a sentence, paragraph, or document—into a high-dimensional vector that captures its meaning. Similar documents cluster together in this “semantic space,” making it possible to rapidly retrieve relevant content using vector search.

Example:

When a user asks "How do I run models locally?", the embedding model encodes the query as a vector. A similarity search (typically cosine similarity) then returns the documents whose vectors lie closest to it, surfacing likely answers.
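To make "closest" concrete, here is a minimal sketch that ranks documents by cosine similarity. The three-dimensional vectors are made up for illustration. Real vectors returned by the embedding model are far longer, but the ranking logic is the same.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction, 0.0 unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy vectors standing in for real embeddings.
query_vec = [0.9, 0.1, 0.0]  # "How do I run models locally?"
doc_vecs = {
    "Ollama runs LLMs on your own machine.": [0.8, 0.2, 0.1],
    "The capital of France is Paris.": [0.0, 0.1, 0.9],
}

# Rank documents from most to least similar to the query.
ranked = sorted(doc_vecs, key=lambda d: cosine_similarity(query_vec, doc_vecs[d]), reverse=True)
for doc in ranked:
    print(f"{cosine_similarity(query_vec, doc_vecs[doc]):.3f}  {doc}")
```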

Reranker Models: Precise Relevance Scoring

After initial retrieval, the reranker model steps in as the subject-matter expert. It reviews the top candidate documents and compares each one directly with the query. This cross-encoder approach reads the query and document together, so it captures nuanced, context-aware relevance. It is also too slow to run over an entire corpus, which is why it only sees the short list produced by the embedding search.

In brief:

- Embedding: Fast, broad recall

- Reranker: Deep, accurate ranking
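The division of labor above can be sketched as a generic two-stage function.

- In this guide, the fast scorer would be cosine similarity over Qwen3 embeddings.
- The precise scorer would be the reranker.

Here both are replaced by hypothetical toy functions so the sketch runs without a model.

```python
import re
from typing import Callable

Scorer = Callable[[str, str], float]

def retrieve_then_rerank(query: str, docs: list[str], fast_score: Scorer,
                         precise_score: Scorer, k: int = 3) -> list[tuple[str, float]]:
    # Stage 1 (recall): cheap score for every document, keep the top k.
    candidates = sorted(docs, key=lambda d: fast_score(query, d), reverse=True)[:k]
    # Stage 2 (precision): expensive score for the k candidates only.
    rescored = [(d, precise_score(query, d)) for d in candidates]
    return sorted(rescored, key=lambda pair: pair[1], reverse=True)

def _words(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))

def word_overlap(query: str, doc: str) -> float:
    # Hypothetical stand-in for embedding similarity: share of query words found in the doc.
    q = _words(query)
    return len(q & _words(doc)) / len(q)

def mentions_install(query: str, doc: str) -> float:
    # Hypothetical stand-in for a reranker verdict; a real system would call the model.
    return 1.0 if "install" in _words(doc) else 0.0

docs = [
    "Ollama runs models locally.",
    "To install Ollama on Linux, use curl.",
    "Paris is in France.",
    "Ollama has a CLI.",
]
results = retrieve_then_rerank("how to install ollama", docs, word_overlap, mentions_install, k=2)
for doc, score in results:
    print(f"{score:.1f} | {doc}")
```

Only `k` documents ever reach the expensive scorer. That is what keeps the reranking stage affordable as the corpus grows.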

Introducing Qwen3: Next-Generation Open Source NLP

Alibaba Cloud’s Qwen3 series includes dedicated open-source embedding and reranker models that perform especially well on multilingual retrieval tasks. Key advantages:

- Top-tier performance: The Qwen3-Embedding-8B model ranked No. 1 on the MTEB (Massive Text Embedding Benchmark) multilingual leaderboard at its release in June 2025; leaderboard positions change as new models are submitted.

- 100+ language support: Ideal for international and cross-border applications.

- Scalable: Choose from 0.6B, 4B, and 8B parameter sizes to fit your hardware and speed needs.

- Instruction-aware: You can prepend a task-specific instruction to queries to adapt retrieval to your domain, without fine-tuning.

- Efficient: Quantization options (like Q5_K_M) reduce memory usage with minimal accuracy loss.
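Instruction-aware embedding means the query text itself carries a short task description. Below is a minimal sketch of a query formatter.

- The template is modeled on the one shown in the Qwen3-Embedding model card. Verify it against the card for the model build you pull.
- The task wording is only an example.
- `format_query` is a hypothetical helper name.

```python
def format_query(task: str, query: str) -> str:
    # Query-side template modeled on the Qwen3-Embedding model card (verify against the card).
    # Documents are embedded as plain text, without an instruction.
    return f"Instruct: {task}\nQuery:{query}"

# Example task wording; adapt it to your own domain.
TASK = "Given a web search query, retrieve relevant passages that answer the query"
print(format_query(TASK, "How do I run models locally?"))
```

Apply it only to queries, for example `get_embedding(format_query(TASK, user_query))` with the Step 2 function. Keep the stored document embeddings instruction-free.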

Step 1: Setting Up Ollama and Downloading Qwen3 Models

Install Ollama

Ollama provides a streamlined CLI for managing, running, and interacting with LLMs locally.

For Linux:

```bash
curl -fsSL https://ollama.com/install.sh | sh
```

For macOS and Windows:

Download the installer from the official Ollama site (https://ollama.com/download).

Verify your installation:

```bash
ollama --version
```

Download Qwen3 Embedding and Reranker Models

With Ollama running, pull the recommended Qwen3 models:

```bash
# 8B parameter embedding model
ollama pull dengcao/Qwen3-Embedding-8B:Q5_K_M

# 4B parameter reranker model
ollama pull dengcao/Qwen3-Reranker-4B:Q5_K_M
```

The :Q5_K_M quantization tag delivers a strong balance of speed and accuracy. To view all installed models:

```bash
ollama list
```

Step 2: Generate Embeddings with Qwen3 in Python

Install the Ollama Python library:

```bash
pip install ollama
```

Here’s a practical code snippet to encode any text into a vector:

```python
import ollama

EMBEDDING_MODEL = 'dengcao/Qwen3-Embedding-8B:Q5_K_M'

def get_embedding(text: str):
    """Return the embedding vector for `text`, or None if the request fails."""
    try:
        response = ollama.embeddings(
            model=EMBEDDING_MODEL,
            prompt=text,
        )
        return response['embedding']
    except Exception as e:
        print(f"Error: {e}")
        return None

# Example usage
sentence = "Ollama makes it easy to run LLMs locally."
embedding = get_embedding(sentence)

if embedding:
    print(f"First 5 dimensions: {embedding[:5]}")
    print(f"Total dimensions: {len(embedding)}")
```

Tip: Store these vectors in a vector database or in-memory array for fast semantic search.
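For a small corpus, an "in-memory array" can be as simple as the following sketch.

- The class name `InMemoryIndex` is hypothetical.
- It normalizes each vector once when it is added, so a single matrix-vector product yields cosine similarity for every document.
- The toy 2-D vectors stand in for the output of `get_embedding()` from Step 2.

```python
import numpy as np

class InMemoryIndex:
    """Hypothetical minimal vector store: unit-length rows searched by dot product."""

    def __init__(self) -> None:
        self.texts: list[str] = []
        self.vectors: list[np.ndarray] = []

    def add(self, text: str, embedding: list[float]) -> None:
        v = np.asarray(embedding, dtype=np.float32)
        self.texts.append(text)
        self.vectors.append(v / np.linalg.norm(v))  # normalize once, at insert time

    def search(self, query_embedding: list[float], k: int = 3) -> list[tuple[str, float]]:
        q = np.asarray(query_embedding, dtype=np.float32)
        q = q / np.linalg.norm(q)
        matrix = np.vstack(self.vectors)   # shape: (num_docs, dim)
        scores = matrix @ q                # cosine similarity for every document at once
        top = np.argsort(scores)[::-1][:k]
        return [(self.texts[i], float(scores[i])) for i in top]

# Toy 2-D vectors for illustration; in practice pass get_embedding(text) from Step 2.
index = InMemoryIndex()
index.add("Ollama runs LLMs locally.", [1.0, 0.0])
index.add("The capital of France is Paris.", [0.0, 1.0])
results = index.search([0.9, 0.1], k=1)
print(results)
```

This rebuilds the matrix on every search, which is fine for a few thousand documents. Beyond that, a dedicated vector database is the better fit.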

Step 3: Rerank Search Results for Maximum Relevance

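Ollama does not expose a dedicated rerank endpoint, so this guide drives the Qwen3 reranker through the chat endpoint. It asks a yes/no relevance question and maps the answer to a score. This yields a binary score (1.0 or 0.0) rather than a graded relevance score. Here’s how to score a document’s relevance to a query: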

```python
import ollama

RERANKER_MODEL = 'dengcao/Qwen3-Reranker-4B:Q5_K_M'

def rerank_document(query: str, document: str) -> float:
    """Return 1.0 if the reranker judges `document` relevant to `query`, else 0.0."""
    prompt = f"""You are an expert relevance grader. Is the following document relevant to the user's query? Reply 'Yes' or 'No'.

Query: {query}

Document: {document}
"""
    try:
        response = ollama.chat(
            model=RERANKER_MODEL,
            messages=[{'role': 'user', 'content': prompt}],
            options={'temperature': 0.0},  # deterministic answers
        )
        answer = response['message']['content'].strip().lower()
        return 1.0 if 'yes' in answer else 0.0
    except Exception as e:
        print(f"Error: {e}")
        return 0.0

# Example usage
query = "How do I run models locally?"
doc1 = "Ollama is a tool for running large language models on your own computer."
doc2 = "The capital of France is Paris."

print(rerank_document(query, doc1))  # Expected: 1.0
print(rerank_document(query, doc2))  # Expected: 0.0
```
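The substring test `'yes' in answer` in `rerank_document()` also fires on replies that merely contain those letters, such as "No, this document is about eyes." Below is a stricter parser, offered as a sketch rather than a required fix.

- It only accepts a reply that starts with "yes".
- It first strips a `<think>...</think>` block. We assume Qwen3-family chat templates may emit one; whether this particular build does is not confirmed here.
- `parse_relevance` is a hypothetical helper name.

```python
import re

def parse_relevance(answer: str) -> float:
    """Map a yes/no reply to 1.0/0.0, ignoring an optional <think>...</think> block."""
    # Drop any reasoning block the model may emit before its final answer (assumption).
    cleaned = re.sub(r"<think>.*?</think>", "", answer, flags=re.DOTALL)
    cleaned = cleaned.strip().lower()
    # Accept only a leading "yes", so words like "eyes" are not counted as relevant.
    return 1.0 if cleaned.startswith("yes") else 0.0

print(parse_relevance("Yes."))
print(parse_relevance("No, this document is about eyes."))
```

To use it, replace the `answer = ...` and `return ...` lines in `rerank_document()` with `return parse_relevance(response['message']['content'])`.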

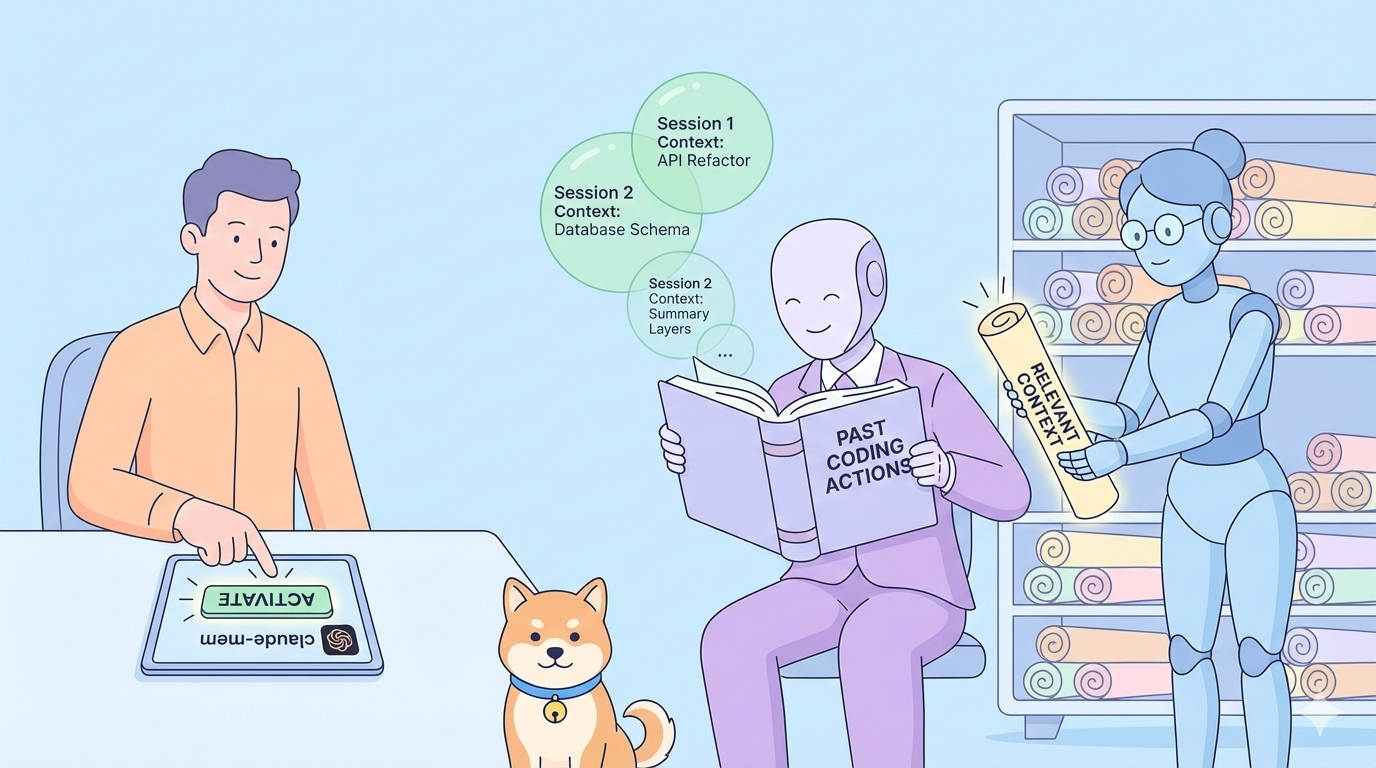

Step 4: End-to-End RAG Workflow Example

Combine embedding, retrieval, and reranking for an efficient RAG mini-pipeline.

Install NumPy if you haven’t already:

```bash
pip install numpy
```

Full Example:

```python
import ollama
import numpy as np

EMBEDDING_MODEL = 'dengcao/Qwen3-Embedding-8B:Q5_K_M'
RERANKER_MODEL = 'dengcao/Qwen3-Reranker-4B:Q5_K_M'  # used by rerank_document()

# NOTE: rerank_document() is the function from Step 3; define it in this
# script (or import it) before running this example.

documents = [
    "The Qwen3 series of models was developed by Alibaba Cloud.",
    "Ollama provides a simple command-line interface for running LLMs.",
    "A reranker model refines search results by calculating a precise relevance score.",
    "To install Ollama on Linux, you can use a curl command.",
    "Embedding models convert text into numerical vectors for semantic search.",
]

# Generate embeddings for your corpus
corpus_embeddings = [
    ollama.embeddings(model=EMBEDDING_MODEL, prompt=doc)['embedding']
    for doc in documents
]

def cosine_similarity(v1, v2):
    # Cosine of the angle between two vectors (1.0 = same direction)
    return np.dot(v1, v2) / (np.linalg.norm(v1) * np.linalg.norm(v2))

# User query
user_query = "How do I install Ollama?"
query_embedding = ollama.embeddings(model=EMBEDDING_MODEL, prompt=user_query)['embedding']

# Retrieve the top 3 most similar documents
scores = [cosine_similarity(query_embedding, emb) for emb in corpus_embeddings]
top_k_indices = np.argsort(scores)[::-1][:3]

print("--- Initial Retrieval ---")
for idx in top_k_indices:
    print(f"Score: {scores[idx]:.4f} | {documents[idx]}")

# Rerank the retrieved candidates with the Qwen3 reranker
retrieved_docs = [documents[i] for i in top_k_indices]
reranked = [(doc, rerank_document(user_query, doc)) for doc in retrieved_docs]
reranked.sort(key=lambda x: x[1], reverse=True)

print("--- After Reranking ---")
for doc, score in reranked:
    print(f"Relevance: {score:.2f} | {doc}")
```

Result:

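The system first retrieves documents broadly related to "install Ollama," and the reranker then filters them. Because `rerank_document()` returns only 1.0 or 0.0, relevant documents such as the Linux curl installation instruction move ahead of irrelevant ones. Documents with equal scores keep their retrieval order, since Python’s sort is stable.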
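Because the reranker score is binary, several candidates can tie. One option is to break ties with the cosine score already computed during retrieval. Here is a minimal sketch.

- `combine_scores` is a hypothetical helper.
- In the pipeline above you would pass `retrieved_docs`, the reranker results, and `[scores[i] for i in top_k_indices]`.
- The toy values here are made up.

```python
def combine_scores(
    docs: list[str],
    rerank_scores: list[float],
    cosine_scores: list[float],
) -> list[tuple[str, float, float]]:
    # Sort by reranker verdict first, then by embedding similarity to break ties.
    rows = list(zip(docs, rerank_scores, cosine_scores))
    return sorted(rows, key=lambda r: (r[1], r[2]), reverse=True)

# Toy values for illustration only.
ranked = combine_scores(
    ["doc A", "doc B", "doc C"],
    [1.0, 0.0, 1.0],
    [0.62, 0.80, 0.71],
)
for doc, verdict, sim in ranked:
    print(f"{verdict:.0f} {sim:.2f} {doc}")
```

The reranker verdict still dominates: "doc B" has the highest cosine score, yet it lands last because the reranker rejected it.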

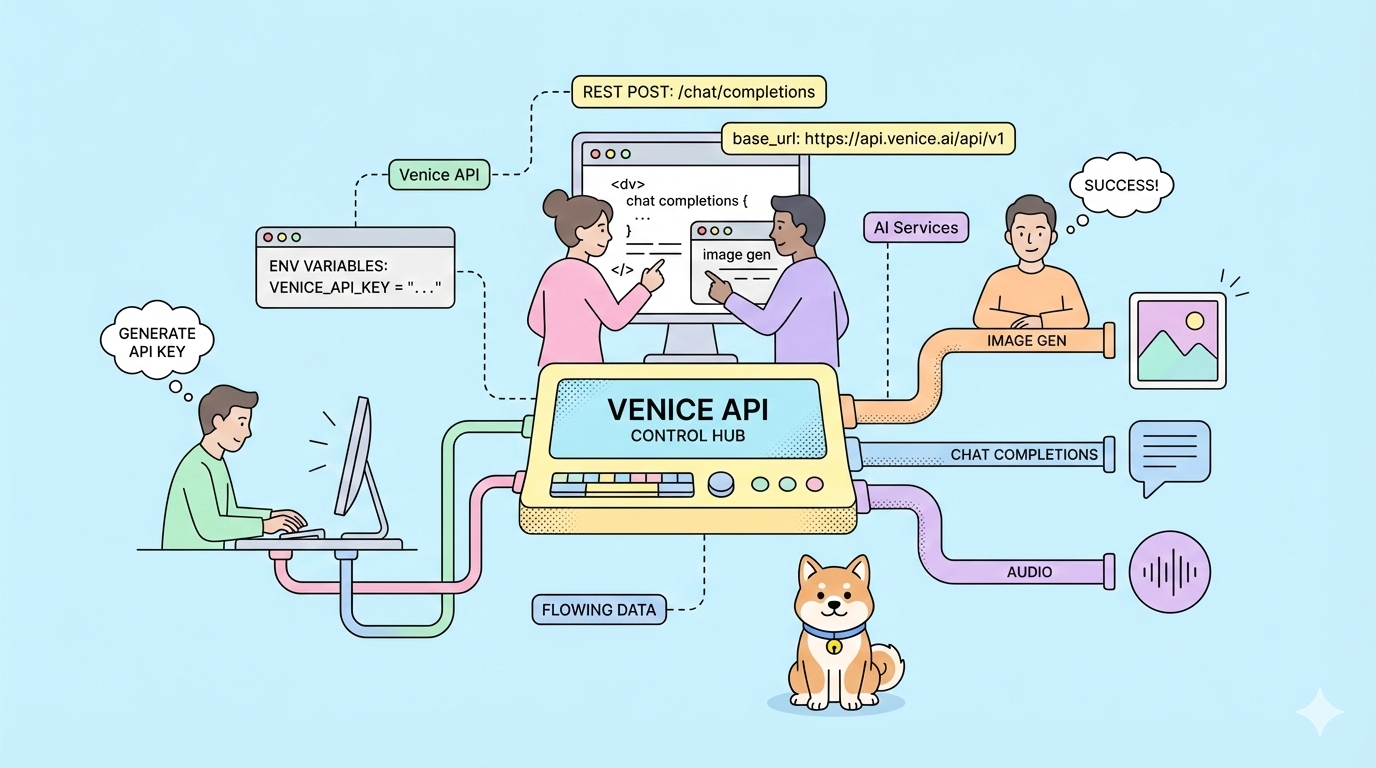

Building Advanced API Solutions

Integrating these models into your API backend lets you:

- Deliver smarter semantic search and document Q&A

- Power privacy-sensitive applications

- Customize vector storage and ranking logic for your domain
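As an illustration of serving this from a backend, below is a minimal sketch of a JSON search endpoint written as a plain WSGI app using only the standard library.

- The route `/search`, the request shape `{"query": ..., "k": ...}` and the name `make_search_app` are all hypothetical choices.
- The stub search function would be replaced by your own pipeline, for example one built on `get_embedding()` and `rerank_document()`.

```python
import json
from typing import Callable

SearchFn = Callable[[str, int], list[tuple[str, float]]]

def make_search_app(search: SearchFn):
    """Hypothetical WSGI app: POST /search with JSON {"query": str, "k": int}."""

    def app(environ, start_response):
        headers = [("Content-Type", "application/json")]
        if environ.get("PATH_INFO") != "/search" or environ.get("REQUEST_METHOD") != "POST":
            start_response("404 Not Found", headers)
            return [b'{"error": "not found"}']
        # Read and decode the JSON request body.
        length = int(environ.get("CONTENT_LENGTH") or 0)
        payload = json.loads(environ["wsgi.input"].read(length) or b"{}")
        # Delegate to the retrieval + reranking logic supplied by the caller.
        results = search(payload.get("query", ""), int(payload.get("k", 3)))
        body = json.dumps({"results": [{"text": t, "score": s} for t, s in results]})
        start_response("200 OK", headers)
        return [body.encode("utf-8")]

    return app

# Stub search function for illustration; replace it with a real pipeline.
app = make_search_app(lambda query, k: [("Ollama runs LLMs locally.", 1.0)][:k])
```

For a quick local test, serve it with `wsgiref.simple_server.make_server("127.0.0.1", 8000, app).serve_forever()` and POST JSON to `http://127.0.0.1:8000/search`. A production deployment would use a dedicated WSGI server instead.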

Apidog’s unified API platform makes it easy to design, test, and document your endpoints—whether you’re serving embeddings, reranker outputs, or any LLM-powered workflow. Explore beautiful API docs and team productivity features, and see why Apidog outperforms Postman for less.

Conclusion

Running Qwen3 embedding and reranker models locally with Ollama puts state-of-the-art language understanding at your fingertips—no cloud dependency required. This approach empowers API developers and engineering teams to innovate rapidly, scale securely, and retain full control over their NLP workflows.

Ready to push your API workflows further? Give Apidog a try for streamlined API management, team collaboration, and best-in-class documentation.