AI is rapidly transforming how development teams build, test, and maintain software. While proprietary tools like Devin AI have generated buzz, a powerful open-source alternative is gaining traction among engineers: OpenHands. Developed by All-Hands-AI (and formerly known as OpenDevin), OpenHands empowers teams to automate real-world development tasks—far beyond basic code generation—while retaining full transparency and control.

As developer workflows grow more complex and collaborative, integrating AI with robust API tools is increasingly essential. Platforms like Apidog streamline API testing and documentation, working hand-in-hand with advanced AI agents like OpenHands to maximize productivity and code quality.

What Is OpenHands? Why Are Developers Excited?

OpenHands is not just another AI code assistant. It's an open-source framework where AI agents can:

- Modify codebases intelligently: Refactor code, add new endpoints, update dependencies.

- Run secure shell commands: Install packages, execute scripts, manage version control, all within a sandboxed Docker environment.

- Browse the web: Research solutions, fetch documentation, or pull answers from Stack Overflow.

- Interact with APIs: Integrate third-party services and automate API workflows.

- Manage files and projects: Create, update, and organize files as part of development tasks.

OpenHands is licensed under MIT, fostering community-driven development, deep customization, and eliminating vendor lock-in—a stark contrast to closed alternatives like Devin AI.

Who Should Use OpenHands?

- API developers automating repetitive coding or testing tasks.

- Backend engineers managing complex projects across multiple services.

- QA teams seeking to automate test creation, bug reproduction, or environment setup.

- Engineering leads who want to scale productivity without sacrificing control.

OpenHands is especially valuable when combined with comprehensive API tools like Apidog, which further simplify collaboration, testing, and documentation.

How to Install OpenHands (Mac, Linux, Windows)

OpenHands is designed for local, secure operation using Docker. Here’s how to get started on any major OS:

Prerequisites

- OS: macOS (with Docker Desktop), Linux (Ubuntu 22.04+), or Windows (WSL 2 + Docker Desktop).

- Hardware: Modern CPU, 4GB+ RAM (more recommended for large models/tasks).

Step-by-Step Installation

1. Install Docker Desktop

- Download Docker Desktop for your OS.

- Complete installation and start Docker.

2. OS-Specific Docker Configuration

macOS:

- Open Docker Desktop → Settings (gear icon) → Advanced.

- Ensure “Allow the default Docker socket to be used” is enabled.

Linux:

- Follow the official Docker Desktop for Linux installation instructions.

- Confirm Docker is running.

Windows:

- Open PowerShell as Administrator and run `wsl --install` (if WSL is not installed).

- Check the version with `wsl --version` (it must be 2 or higher).

- Install Docker Desktop. In Docker Desktop → Settings:

  - Enable “Use the WSL 2 based engine.”

  - Under Resources > WSL Integration, enable default distro integration.

- Run all subsequent Docker commands from your WSL (e.g., Ubuntu) terminal.

3. Pull the Runtime Docker Image (Optional, but recommended)

docker pull docker.all-hands.dev/all-hands-ai/runtime:0.34-nikolaik

(Confirm latest tag on the official repo.)

4. Start OpenHands

docker run -it --rm --pull=always \
    -e SANDBOX_RUNTIME_CONTAINER_IMAGE=docker.all-hands.dev/all-hands-ai/runtime:0.34-nikolaik \
    -e LOG_ALL_EVENTS=true \
    -v /var/run/docker.sock:/var/run/docker.sock \
    -v ~/.openhands-state:/.openhands-state \
    -p 3000:3000 \
    --add-host host.docker.internal:host-gateway \
    --name openhands-app \
    docker.all-hands.dev/all-hands-ai/openhands:0.34

5. Access the OpenHands Web UI

- After startup, visit http://localhost:3000 in your browser.
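The container can take a little while to start serving the UI. As a rough sketch (not part of OpenHands itself), the Python snippet below polls the address until it answers. The function names `ui_is_up` and `wait_for_ui` are hypothetical helpers introduced here for illustration, and the retry counts are arbitrary defaults.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def ui_is_up(url: str = "http://localhost:3000", timeout: float = 2.0) -> bool:
    """Return True if anything answers HTTP at the given URL."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server replied, just with an error status, so it is running.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, and similar.
        return False


def wait_for_ui(
    probe: Callable[[], bool] = ui_is_up,
    attempts: int = 30,
    delay: float = 1.0,
) -> bool:
    """Call probe() up to `attempts` times, sleeping `delay` seconds between tries."""
    for _ in range(attempts):
        if probe():
            return True
        time.sleep(delay)
    return False


if __name__ == "__main__":
    print("OpenHands UI reachable:", wait_for_ui())
```

Passing the probe in as a parameter keeps the retry loop easy to test without a running container.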

Initial Setup: Connecting OpenHands to an LLM

OpenHands requires a Large Language Model (LLM) backend to function. You can use commercial APIs (OpenAI, Anthropic, Google Gemini, OpenRouter) or local models (Ollama).

Configuring Your LLM

- On first launch, a settings modal will appear.

- Select LLM Provider: OpenAI, Anthropic, Google, OpenRouter, or local (e.g., Ollama).

- Choose Model: e.g., `gpt-4o`, `claude-3-5-sonnet-20240620`, `gemini-1.5-pro-latest`.

- Enter API Key: Paste your provider’s API key.

- Advanced Options: Set custom model IDs or base URLs (useful for local/proxy setups).

Provider-Specific Instructions

OpenAI:

- Get your key at OpenAI Platform.

- Configure billing.

- Enter your key and select a GPT-4+ model.

Anthropic (Claude):

- Sign up at Anthropic.

- Create API key, complete billing.

- Use `claude-3-5-sonnet-20240620` or similar.

Google Gemini:

- Get a key from AI Studio or Cloud Vertex AI.

- Enable needed APIs.

- Use `gemini-1.5-pro-latest`.

OpenRouter:

- Sign up at OpenRouter, add credits.

- Use model identifiers like `anthropic/claude-3.5-sonnet`.

Local LLMs (Ollama example):

- Install Ollama and pull a model (`ollama pull llama3`).

- In OpenHands, set the provider to Ollama and set the base URL to `http://host.docker.internal:11434`.

- Specify your model name (e.g., `llama3`).
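Before pointing OpenHands at Ollama, it can help to confirm that the Ollama server is running and that the model has actually been pulled. The sketch below queries Ollama's `/api/tags` endpoint, which lists locally available models. It assumes you run it on the host, where Ollama listens on `http://localhost:11434` by default (`host.docker.internal` is the name used from inside the container). The helper names are hypothetical.

```python
import json
import urllib.request


def model_names(tags_payload: dict) -> list[str]:
    """Extract model names from an /api/tags response body."""
    return [model["name"] for model in tags_payload.get("models", [])]


def has_model(names: list[str], wanted: str) -> bool:
    """Match 'llama3' against names that carry a tag, such as 'llama3:latest'."""
    return any(n == wanted or n.split(":", 1)[0] == wanted for n in names)


def list_ollama_models(base_url: str = "http://localhost:11434") -> list[str]:
    """Ask a running Ollama server which models are available locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return model_names(json.load(resp))


if __name__ == "__main__":
    names = list_ollama_models()
    print("Models:", names)
    print("llama3 available:", has_model(names, "llama3"))
```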

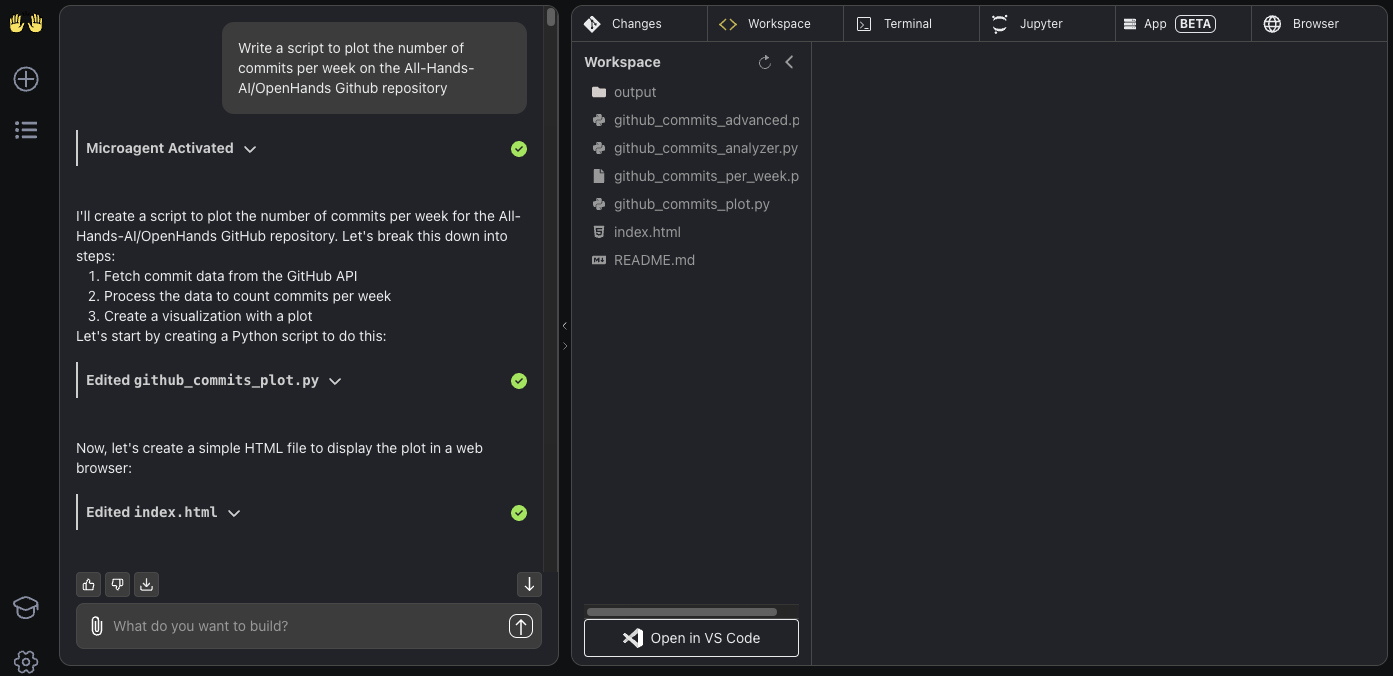

Core Features: How OpenHands Works for Developers

OpenHands agents are designed to automate and accelerate real-world development cycles. Here’s how they function in typical engineering scenarios:

1. Code Modification

Agents read, understand, and update code based on your prompts.

Example:

- Refactor: “Refactor the `processData` function in `utils/data.js` for clarity.”

- Feature: “Add a new POST `/api/tasks` endpoint in `routes/tasks.js`.”

2. Secure Shell Command Execution

All commands (e.g., npm install, python manage.py runserver, git commit) execute in a Docker sandbox—protecting your system.

3. Web Browsing for Solutions

Need to fetch documentation or Stack Overflow answers? Agents can autonomously browse and integrate relevant findings.

4. API Integration

Agents interact with RESTful APIs or other services—automating data fetching, updating, or chaining workflows.

5. File System Management

Agents can create, modify, and delete files or folders within a sandboxed workspace—ideal for scaffolding new projects or updating existing ones.

Practical Usage Examples

Example: Automate a Simple Script

Prompt:

Write a bash script hello.sh that prints "hello world!"

- Agent plans: Create `hello.sh`, add code, set permissions, execute, and report output.

- You can iteratively refine:

Modify hello.sh so that it accepts a name as the first argument, defaulting to "world"

Example: Build a React TODO App

Prompt:

Build a frontend-only TODO app in React. Store state in localStorage. Add tasks and display them.

- Agent scaffolds the project, writes components, and integrates localStorage.

- Request enhancements:

Add an optional due date for each task and show it in the list.

Example: Add a GitHub Action

Prompt:

Add a GitHub Action to lint JavaScript code using ESLint on every push to main.

- Agent creates `.github/workflows/lint.yml` and sets up the workflow.

Example: Refactor and Debug

- Refactor:

In ./utils/calculation.py, rename variables in process_data() for clarity.

- Bug Fix:

Fix the regex in server/handlers.js so it accepts .co.uk emails.
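To make the bug-fix prompt concrete, here is one plausible shape of the problem, sketched in Python rather than the JavaScript of `server/handlers.js`. The article does not show the real regex, so both patterns below are assumptions: a common mistake is to allow only a single label after the domain, which rejects multi-part suffixes such as `.co.uk`.

```python
import re

# Hypothetical "before" pattern: exactly one dot-separated label after the
# domain name, so "example.co.uk" cannot match.
TOO_STRICT = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9-]+\.[A-Za-z]{2,}")

# Hypothetical "after" pattern: one or more dot-separated labels.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9-]+(?:\.[A-Za-z0-9-]+)+")


def is_valid_email(address: str) -> bool:
    """Return True if the whole string matches the relaxed pattern."""
    return EMAIL_RE.fullmatch(address) is not None


if __name__ == "__main__":
    for candidate in ("user@example.com", "user@example.co.uk", "user@example"):
        print(candidate, is_valid_email(candidate))
```

This is a deliberately simple check, not a full RFC 5322 validator. Naming the failing input in the prompt, as the `.co.uk` example does, gives the agent a concrete case to verify against.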

Example: Test-Driven Bug Fix

- Prompt:

Write a Jest test in pricing.test.js to reproduce the crash in 'calculate_discount' when quantity is zero.

- Prompt:

Fix calculate_discount in pricing.js so the test passes.
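The two prompts follow a reproduce-then-fix loop. The sketch below shows that loop in Python instead of Jest. The article does not show the body of `calculate_discount`, so the formula, the 25% rate, and the choice to return `0.0` for an empty order are all assumptions made for illustration.

```python
DISCOUNT_RATE = 0.25  # hypothetical rate, chosen only for the example


def calculate_discount_buggy(total_price: float, quantity: int) -> float:
    """Hypothetical original: divides by quantity without checking it."""
    return DISCOUNT_RATE * (total_price / quantity)


def calculate_discount(total_price: float, quantity: int) -> float:
    """Hypothetical fix: an order with no items gets no discount."""
    if quantity <= 0:
        return 0.0
    return DISCOUNT_RATE * (total_price / quantity)


def reproduces_crash() -> bool:
    """Step 1: a test that fails against the buggy version."""
    try:
        calculate_discount_buggy(100.0, 0)
    except ZeroDivisionError:
        return True
    return False


if __name__ == "__main__":
    print("Bug reproduced:", reproduces_crash())
    # Step 2: the same input no longer crashes after the fix.
    print("Fixed result:", calculate_discount(100.0, 0))
```

Writing the failing test first gives the agent an objective stopping condition for the second prompt.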

Prompt Engineering: Get the Most from OpenHands

Effective prompting is critical. Here’s how to craft prompts that get real results:

Best Practices

- Be specific: Name files, functions, and describe the exact desired change.

- Keep scope manageable: Limit to 1 small feature or bug per prompt.

- Provide context: Include code snippets, error messages, or file paths.

- Iterate: Break complex tasks into steps.

Good Prompt Example:

Add a function 'calculate_average' to 'utils/math_operations.py' that returns the average of a list of numbers.
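A prompt this specific leaves little room for interpretation. A result along the following lines would satisfy it. This is a sketch of one reasonable outcome, not actual OpenHands output, and raising `ValueError` on an empty list is an assumption the prompt leaves open.

```python
from collections.abc import Sequence


def calculate_average(numbers: Sequence[float]) -> float:
    """Return the arithmetic mean of a non-empty sequence of numbers."""
    if not numbers:
        # The prompt does not say what to do here; raising is one option.
        raise ValueError("calculate_average() requires at least one number")
    return sum(numbers) / len(numbers)


if __name__ == "__main__":
    print(calculate_average([1, 2, 3, 4]))
```

If the empty-list behavior matters to you, state it in the prompt so the agent does not have to guess.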

Weak Prompt Example:

Improve the codebase. (Too vague.)

Advanced: Configuring OpenHands Docker Runtime

The runtime container is where all code execution happens, isolated from your system.

- Default runtime: `docker.all-hands.dev/all-hands-ai/runtime:0.34-nikolaik`

- Customize by version: Update the tag in the `SANDBOX_RUNTIME_CONTAINER_IMAGE` env var when launching the container.
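If you switch versions often, one option is to build the launch command from a single version string so the app image and the runtime image stay in sync. The sketch below assembles the same `docker run` arguments used in the installation steps. `build_openhands_command` is a hypothetical helper, and the `<version>-nikolaik` runtime tag pattern is only confirmed here for 0.34, so check the official repo before relying on it for other releases.

```python
import shlex
from pathlib import Path

REGISTRY = "docker.all-hands.dev/all-hands-ai"


def build_openhands_command(version: str = "0.34", port: int = 3000) -> list[str]:
    """Return the docker run argument list for a given OpenHands version."""
    # Assumption: the runtime tag is "<version>-nikolaik", as it is for 0.34.
    runtime_image = f"{REGISTRY}/runtime:{version}-nikolaik"
    state_dir = Path.home() / ".openhands-state"
    return [
        "docker", "run", "-it", "--rm", "--pull=always",
        "-e", f"SANDBOX_RUNTIME_CONTAINER_IMAGE={runtime_image}",
        "-e", "LOG_ALL_EVENTS=true",
        "-v", "/var/run/docker.sock:/var/run/docker.sock",
        "-v", f"{state_dir}:/.openhands-state",
        "-p", f"{port}:3000",
        "--add-host", "host.docker.internal:host-gateway",
        "--name", "openhands-app",
        f"{REGISTRY}/openhands:{version}",
    ]


if __name__ == "__main__":
    # Print the command for review instead of executing it.
    print(shlex.join(build_openhands_command()))
```

Printing the command first lets you inspect it before handing it to `subprocess.run` or pasting it into a terminal.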

For bleeding-edge or feature-specific needs, consult the OpenHands documentation.

OpenHands vs Devin AI: Why Open Source Matters

- Transparency: Review and modify every aspect of your AI agent.

- Community-driven: Rapid updates, bug fixes, and features from global contributors.

- No vendor lock-in: Maintain full control over your workflows and data.

- Seamless integration: Use alongside trusted API tools like Apidog for end-to-end automation, testing, and documentation.

Conclusion

OpenHands gives teams a transparent, customizable AI agent that can automate multi-step development tasks without the constraints of closed systems like Devin AI. Paired with API platforms such as Apidog for testing and documentation, it can help teams move faster while keeping control of their code and data. Start exploring OpenHands today and see how open-source AI can help you "Code Less, Make More."