OpenAI has expanded its lineup of powerful AI models with o1-pro, a new offering designed for advanced reasoning and problem-solving tasks. As part of OpenAI's evolution beyond the GPT series, the "o" models represent specialized AI systems optimized for particular use cases. In this guide, we'll cover what you need to know about o1-pro: its capabilities, its pricing, and how to call it from your applications, including how to test it with API tools like Apidog.

What is o1-pro? And How Good Is It?

o1-pro is OpenAI's specialized model for complex reasoning tasks. It belongs to the o1 family, which is trained to reason through a problem before answering. According to OpenAI, the "pro" variant uses more compute than the base o1 model to think harder and provide consistently better answers, making it suitable for demanding applications that require sophisticated reasoning.

Unlike more general-purpose models like GPT-4o, o1-pro has been specifically tuned for tasks that require logical progression, mathematical reasoning, and structured analysis. This specialized focus allows it to perform exceptionally well in areas where methodical thinking and problem decomposition are crucial.

o1-pro is designed with the following strengths:

- Advanced Reasoning: Excels at breaking down complex problems into logical steps, making it ideal for tasks requiring structured thinking and analysis. It can navigate complex decision trees and provide explicit reasoning paths.

- Mathematical Problem-Solving: Handles mathematical reasoning, proofs, and calculations with improved accuracy. The model demonstrates particular strength in algebra, calculus, statistics, and formal logic.

- Code Analysis: Capable of analyzing, debugging, and explaining code with detailed understanding. It can work across multiple programming languages and identify logical errors or optimization opportunities.

- Structured Thinking: Produces clearer, more structured responses for complex queries, with natural breakdowns of multi-step problems and clearly delineated reasoning.

- Research and Analysis: Performs in-depth analysis of topics with logical organization, comparing different perspectives and methodically evaluating evidence.

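- Chain-of-Thought Processing: Works through an internal chain of thought before producing its answer. The raw reasoning is not returned through the API (those reasoning tokens are billed as output tokens), but you can ask the model to include a summary of its steps in the final answer so its conclusions are easier to verify.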

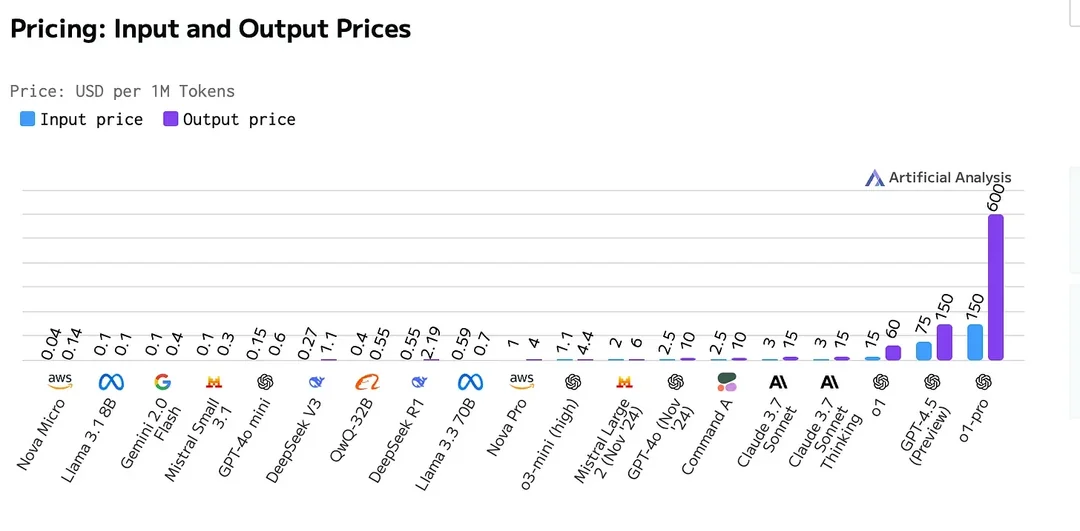

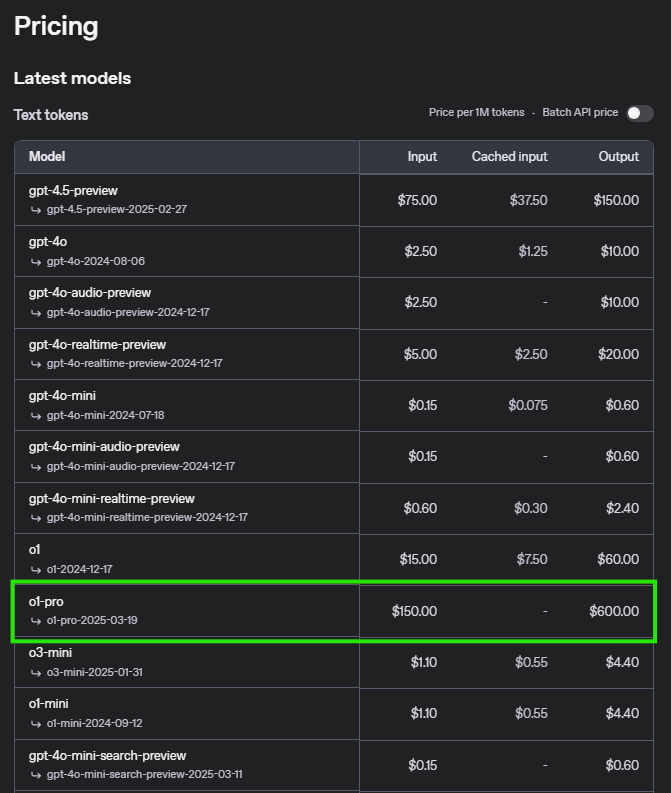

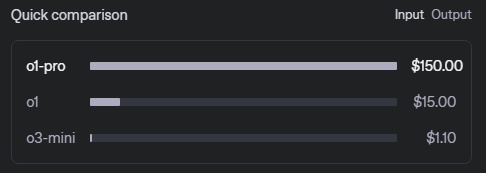

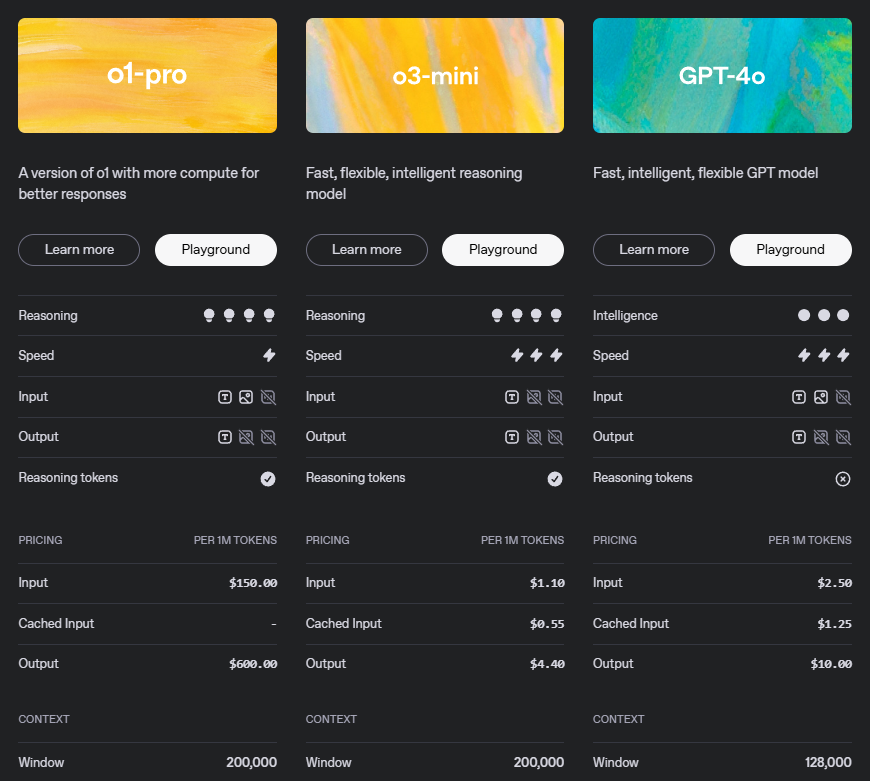

o1-pro API Pricing

Understanding the pricing structure is essential for planning your API usage. Here's the breakdown for o1-pro:

| Operation | Price |
|---|---|
| Input (Prompt) | $150 per million tokens |
| Output (Completion) | $600 per million tokens |

This pricing reflects the extra compute o1-pro spends on each request. Output tokens cost four times as much as input tokens, and the hidden reasoning tokens the model generates before answering are billed as output tokens, so actual output costs can exceed what the length of the visible answer suggests.

Pricing Example Scenarios

Scenario 1: Small-scale usage

For a scenario with 500,000 input tokens and 200,000 output tokens:

- Input cost: 500,000 tokens × $150/million tokens = $75.00

- Output cost: 200,000 tokens × $600/million tokens = $120.00

- Total cost: $195.00

Scenario 2: Medium-scale usage

For a scenario with 5 million input tokens and 2 million output tokens:

- Input cost: 5,000,000 tokens × $150/million tokens = $750.00

- Output cost: 2,000,000 tokens × $600/million tokens = $1,200.00

- Total cost: $1,950.00

Scenario 3: Large-scale usage

For a scenario with 50 million input tokens and 20 million output tokens:

- Input cost: 50,000,000 tokens × $150/million tokens = $7,500.00

- Output cost: 20,000,000 tokens × $600/million tokens = $12,000.00

- Total cost: $19,500.00
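Each scenario above applies the same formula: cost = input tokens × input rate / 1,000,000 + output tokens × output rate / 1,000,000. A minimal Python sketch of that arithmetic follows; estimate_cost is a hypothetical helper name, and it takes the per-million rates as arguments so you can pass in the figures from the table (or from OpenAI's current pricing page) instead of hard-coding a price that may change.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_rate_per_m: float, output_rate_per_m: float) -> float:
    """Estimate the USD cost of a workload.

    Rates are dollars per 1 million tokens. Reasoning tokens are billed as
    output tokens, so include them in output_tokens.
    """
    # Multiply before dividing to keep the float arithmetic exact for round numbers
    total = (input_tokens * input_rate_per_m
             + output_tokens * output_rate_per_m) / 1_000_000
    return round(total, 2)
```

For example, calling estimate_cost(500_000, 200_000, input_rate, output_rate) with the table's two rates reproduces Scenario 1. When estimating from real traffic, use the output token count reported in each response's usage data rather than the length of the visible answer, since that count already includes reasoning tokens.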

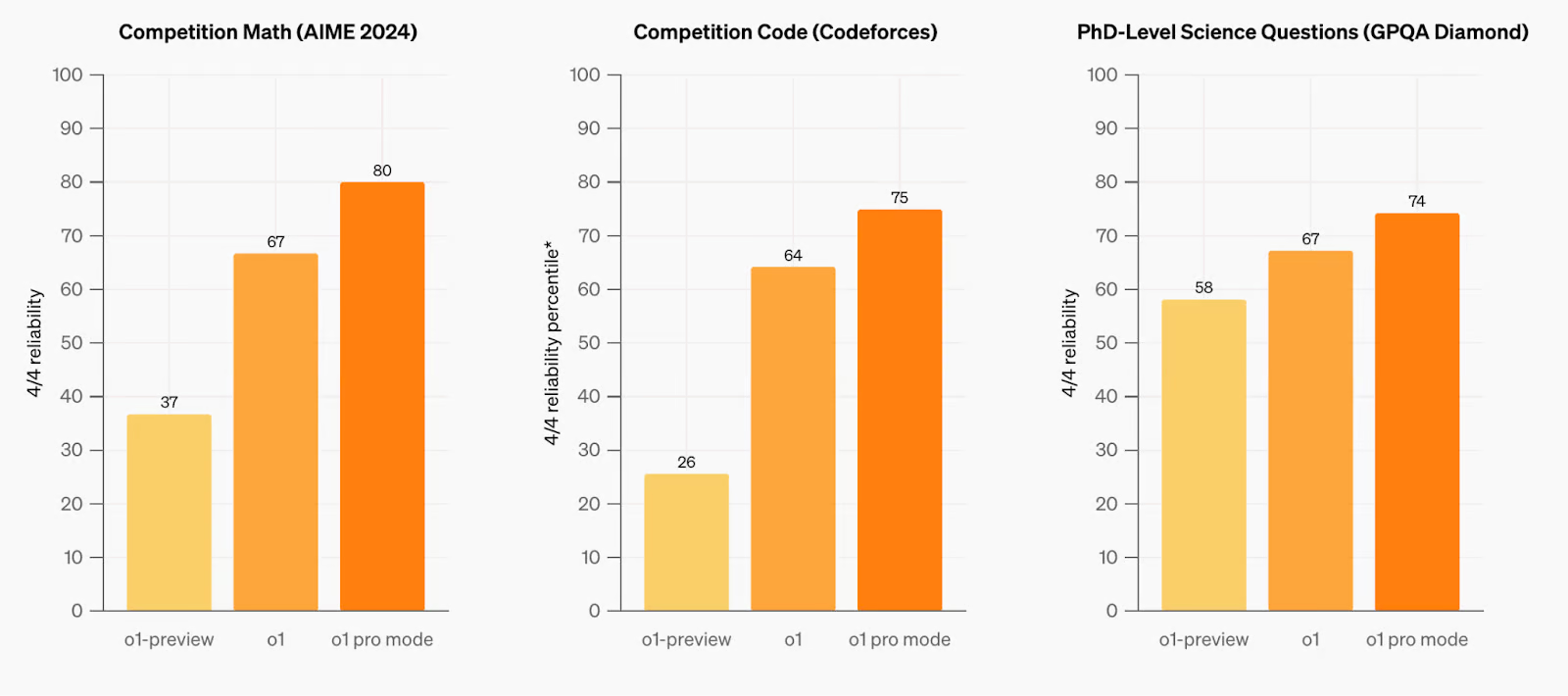

Comparison With Previous Models

o1-pro builds on o1 by using more compute to reason before it answers. OpenAI reports that this produces more accurate and more consistent answers than earlier models on complex, multi-step problems in domains such as mathematics, coding, and structured planning. The tradeoff is speed and cost: responses take longer to generate, and the extra reasoning increases the cost of each request. That makes o1-pro a strong fit for applications where answer quality on hard problems matters more than latency.

You can find the full comparison of OpenAI models, including detailed specifications and benchmarks, on the official OpenAI documentation page.

OpenAI o1-pro API Requirements and Rate Limits

| Tier | Qualification | Usage Limits |
|---|---|---|
| Free | User must be in an allowed geography | $100/month |
| Tier 1 | $5 paid | $100/month |
| Tier 2 | $50 paid and 7+ days since first successful payment | $500/month |
| Tier 3 | $100 paid and 7+ days since first successful payment | $1,000/month |
| Tier 4 | $250 paid and 14+ days since first successful payment | $5,000/month |
| Tier 5 | $1,000 paid and 30+ days since first successful payment | $50,000/month |

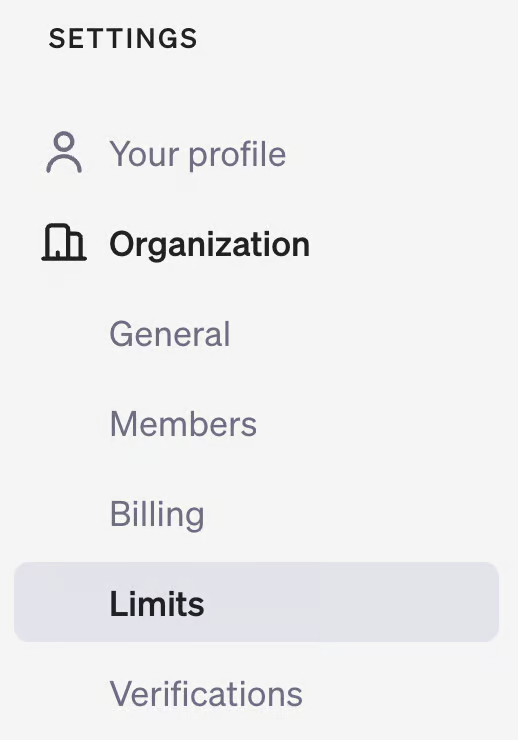

To check your usage tier, visit your account page on the OpenAI developer platform and check the "Limits" section under "Organization."
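If you want to check in code whether a planned workload fits your account's monthly limit, the tier table can be encoded as data. This is a minimal sketch with hypothetical names (Tier, likely_tier, fits_monthly_limit) and thresholds copied from the table above. OpenAI assigns tiers itself and may change these numbers, so the Limits page in your account remains the authoritative source.

```python
from typing import NamedTuple, Optional


class Tier(NamedTuple):
    name: str
    min_paid_usd: float                # cumulative amount paid to OpenAI
    min_days_since_first_payment: int  # days since first successful payment
    monthly_limit_usd: float


# Thresholds copied from the usage-tier table; ordered from highest to lowest
TIERS = [
    Tier("Tier 5", 1_000, 30, 50_000),
    Tier("Tier 4", 250, 14, 5_000),
    Tier("Tier 3", 100, 7, 1_000),
    Tier("Tier 2", 50, 7, 500),
    Tier("Tier 1", 5, 0, 100),
]
FREE_TIER_LIMIT_USD = 100


def likely_tier(total_paid_usd: float, days_since_first_payment: int) -> Optional[Tier]:
    """Return the highest paid tier whose thresholds are met, or None (free tier)."""
    for tier in TIERS:
        if (total_paid_usd >= tier.min_paid_usd
                and days_since_first_payment >= tier.min_days_since_first_payment):
            return tier
    return None


def fits_monthly_limit(estimated_cost_usd: float, tier: Optional[Tier]) -> bool:
    """Compare a projected monthly spend with the tier's usage limit."""
    limit = tier.monthly_limit_usd if tier is not None else FREE_TIER_LIMIT_USD
    return estimated_cost_usd <= limit
```

Pairing this with a cost estimate for a batch job lets you warn before a run would push the account past its monthly cap.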

How to Use the o1-pro API

Implementing o1-pro in your applications is straightforward. Follow these steps to get started:

1. Prerequisites

- An OpenAI API key (obtain from your OpenAI dashboard)

- A compatible development environment with appropriate libraries

- Sufficient API credits or payment method linked to your OpenAI account

2. Installation

Install the OpenAI library for your preferred programming language:

For Python:

```bash
pip install openai
```

For JavaScript:

```bash
npm install openai
```

For other languages, refer to OpenAI's official documentation for client libraries.

3. Python Implementation Example

o1-pro is served through OpenAI's Responses API rather than the Chat Completions endpoint, and like other o-series reasoning models it does not accept sampling parameters such as temperature, top_p, frequency_penalty, or presence_penalty. Here's how to call it from Python:

```python
from openai import OpenAI

# The client reads your key from the OPENAI_API_KEY environment variable
client = OpenAI()

# o1-pro is only available through the Responses API
response = client.responses.create(
    model="o1-pro",
    input=[
        {"role": "system", "content": "You are an AI assistant specializing in mathematical reasoning. Provide clear, step-by-step solutions with explanations for each step."},
        {"role": "user", "content": "Solve this calculus problem step by step: find the derivative of f(x) = x^3*ln(x)."},
    ],
    # Upper bound on generated tokens, including hidden reasoning tokens;
    # set it generously so the answer is not cut off mid-reasoning
    max_output_tokens=25000,
)

print(response.output_text)
```
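When calling o1-pro through the Responses API, two details are easy to miss: max_output_tokens also counts the hidden reasoning tokens, so a tight budget can leave a response incomplete, and those reasoning tokens are billed even though you never see them. The sketch below uses hypothetical helper names (extract_answer, reasoning_share) and assumes the response shape exposed by the Python SDK (status, incomplete_details.reason, output_text, usage.output_tokens_details.reasoning_tokens). The stand-in object at the bottom is made up for an offline check and is not real model output.

```python
from types import SimpleNamespace


def extract_answer(response) -> str:
    """Return the visible answer, raising if the response was cut off.

    max_output_tokens counts hidden reasoning tokens too, so a tight budget
    can end a response before any visible text is produced.
    """
    if response.status == "incomplete":
        # incomplete_details may be None; fall back to a generic reason
        reason = getattr(response.incomplete_details, "reason", "unknown")
        raise RuntimeError(f"o1-pro response incomplete: {reason}")
    return response.output_text


def reasoning_share(response) -> float:
    """Fraction of billed output tokens that were hidden reasoning tokens."""
    usage = response.usage
    if usage.output_tokens == 0:
        return 0.0
    return usage.output_tokens_details.reasoning_tokens / usage.output_tokens


if __name__ == "__main__":
    # Made-up stand-in shaped like a completed response, for an offline check
    fake = SimpleNamespace(
        status="completed",
        incomplete_details=None,
        output_text="(answer text)",
        usage=SimpleNamespace(
            output_tokens=1200,
            output_tokens_details=SimpleNamespace(reasoning_tokens=900),
        ),
    )
    print(extract_answer(fake))
    print(f"{reasoning_share(fake):.0%} of output tokens were reasoning tokens")
```

In application code, pass the object returned by client.responses.create to extract_answer instead of reading output_text directly, so a truncated response fails loudly rather than returning partial or empty text.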

4. JavaScript Implementation Example

For Node.js applications, here is the same Responses API call with token-usage logging:

```javascript
import OpenAI from 'openai';

// The client reads your key from the OPENAI_API_KEY environment variable
const openai = new OpenAI();

async function askO1Pro() {
  try {
    // o1-pro is only available through the Responses API
    const response = await openai.responses.create({
      model: 'o1-pro',
      input: [
        { role: 'system', content: 'You are an AI assistant specializing in mathematical reasoning. Provide clear, step-by-step solutions with explanations for each step.' },
        { role: 'user', content: 'Solve this calculus problem step by step: find the derivative of f(x) = x^3*ln(x).' },
      ],
      // Covers hidden reasoning tokens as well as the visible answer
      max_output_tokens: 25000,
    });

    console.log(response.output_text);

    // Optional: track token usage (reasoning tokens are billed as output tokens)
    console.log('Input tokens:', response.usage.input_tokens);
    console.log('Output tokens:', response.usage.output_tokens);
    console.log('Reasoning tokens:', response.usage.output_tokens_details.reasoning_tokens);
    console.log('Total tokens:', response.usage.total_tokens);
  } catch (error) {
    console.error('Error:', error);
  }
}

askO1Pro();
```

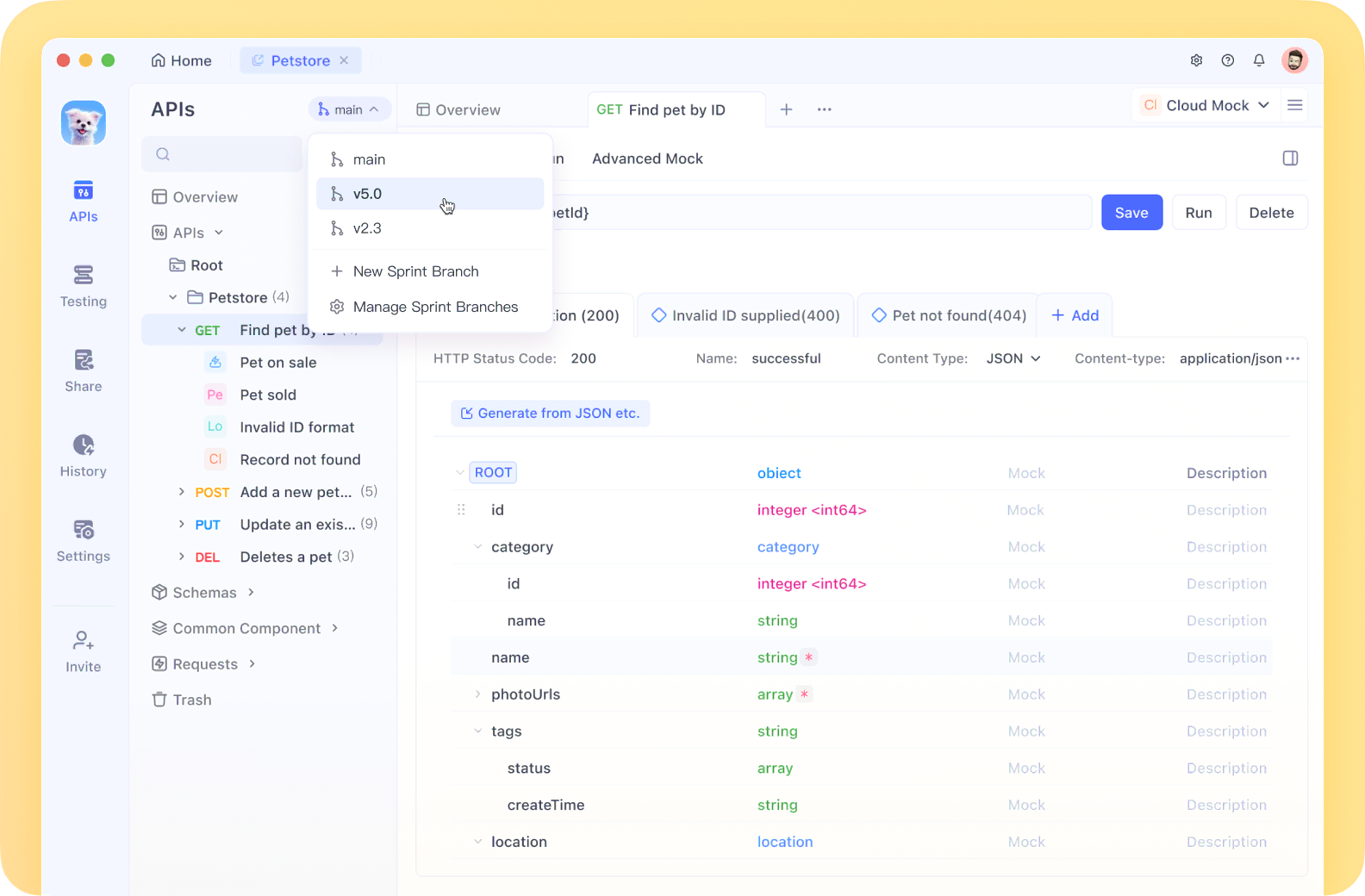

Testing o1-pro API with Apidog

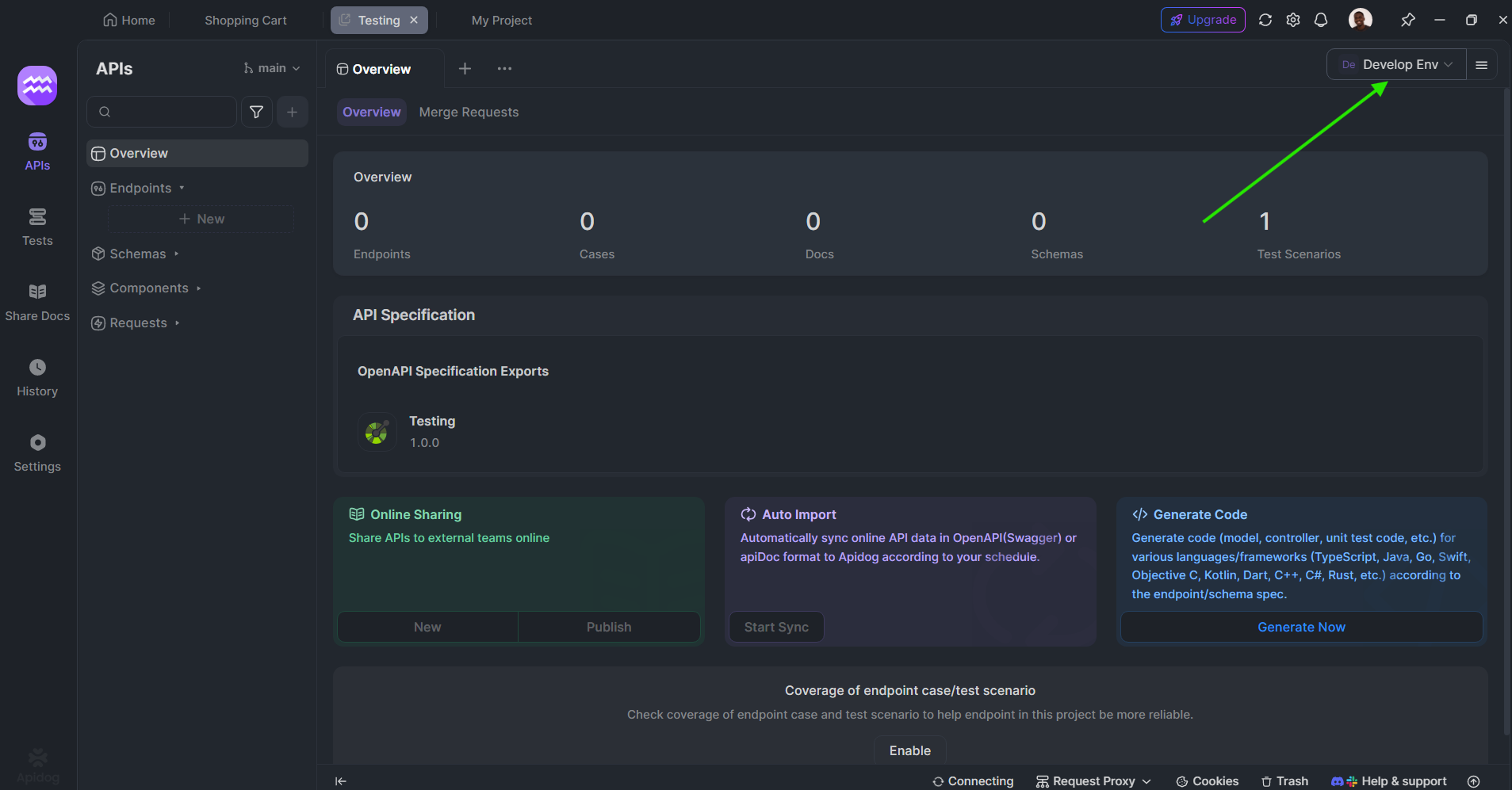

Apidog is a comprehensive API development platform that can significantly streamline your work with o1-pro and other OpenAI APIs. Here's how you can leverage Apidog for testing and integrating o1-pro into your workflow:

Setting Up o1-pro in Apidog

- Create an Account: Sign up for Apidog at apidog.com if you don't already have an account.

- Create a New API Collection: In your Apidog dashboard, create a new collection specifically for OpenAI APIs.

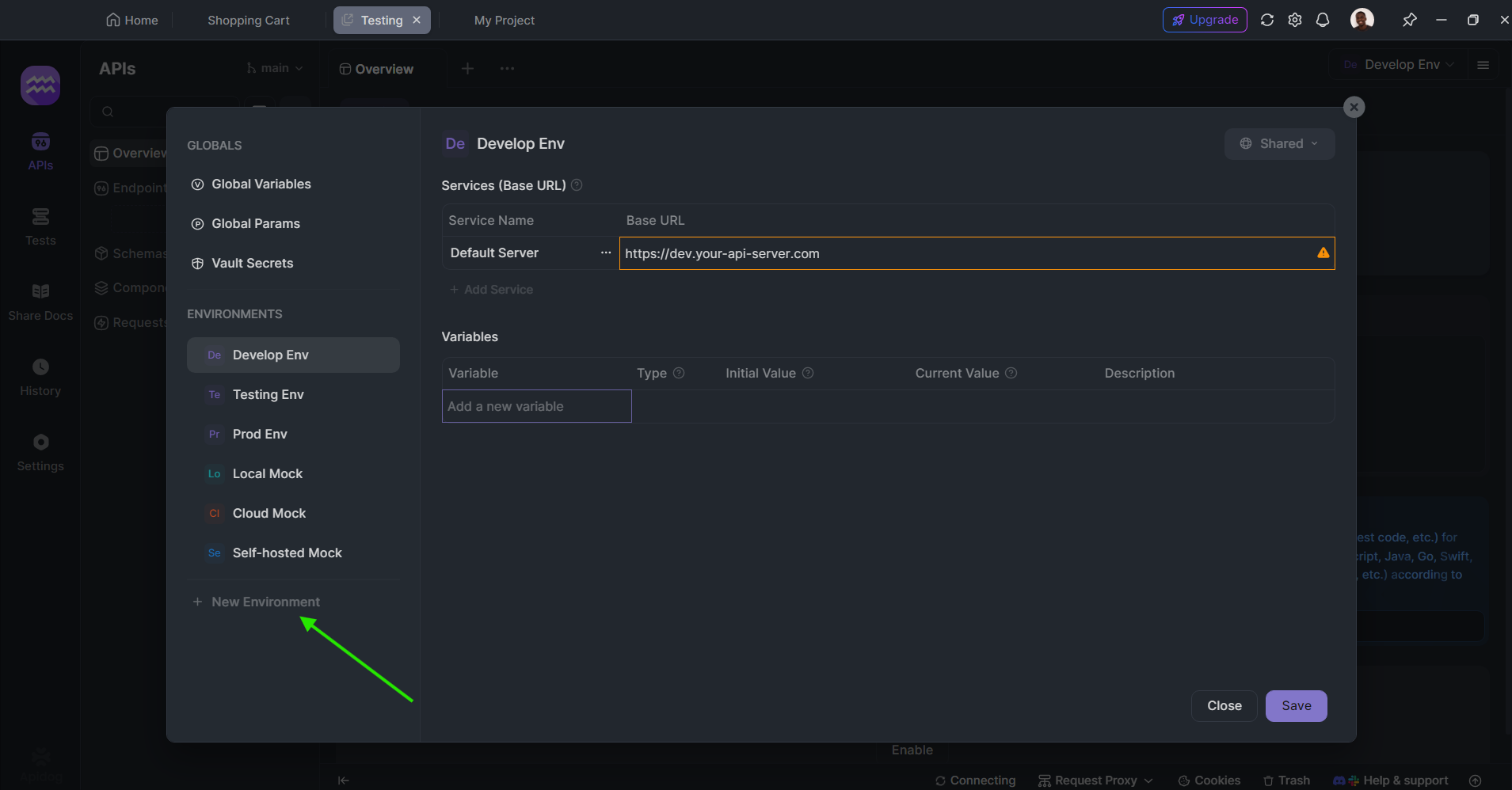

Set Environment Variables: Configure your OpenAI API key as an environment variable for secure and convenient access:

- Open Environments in the top-right corner

- Create a new environment called "OpenAI"

- Add a variable named "OPENAI_API_KEY" with your actual API key as the value

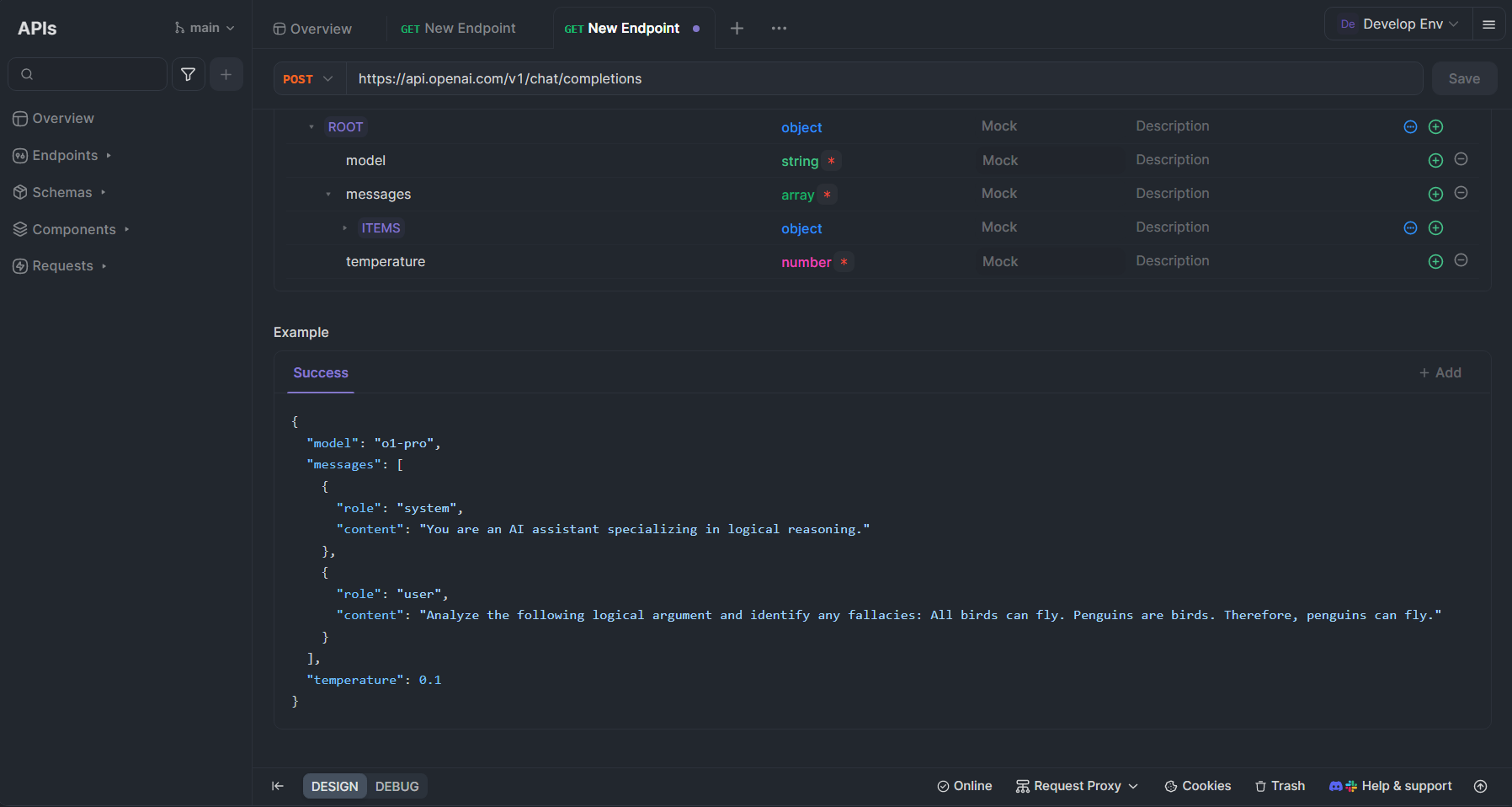

Create a Request for o1-pro:

- Create a new POST request

- Set the URL to https://api.openai.com/v1/responses (o1-pro is only available through the Responses API, not the Chat Completions endpoint)

- Add an Authorization header with the value Bearer {{OPENAI_API_KEY}}

- Add a Content-Type header with the value application/json

- In the request body (JSON), use the following. Sampling parameters such as temperature are not supported by o1-pro, so the body omits them:

```json
{
  "model": "o1-pro",
  "input": [
    {
      "role": "system",
      "content": "You are an AI assistant specializing in logical reasoning."
    },
    {
      "role": "user",
      "content": "Analyze the following logical argument and identify any fallacies: All birds can fly. Penguins are birds. Therefore, penguins can fly."
    }
  ]
}
```
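To reproduce the same request outside Apidog without installing the SDK, Python's standard library is enough. This is a minimal sketch: build_o1_pro_request, send, and extract_output_text are hypothetical helper names, the endpoint and body follow the Responses API format, and the parsing assumes the documented reply shape in which the answer arrives as output_text parts inside message items of the output array.

```python
import json
import urllib.request

RESPONSES_URL = "https://api.openai.com/v1/responses"


def build_o1_pro_request(api_key: str, system_prompt: str,
                         user_prompt: str) -> urllib.request.Request:
    """Build (but do not send) a POST request equivalent to the Apidog setup."""
    body = {
        "model": "o1-pro",
        "input": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    }
    return urllib.request.Request(
        RESPONSES_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 600.0) -> dict:
    """Send the request and decode the JSON reply (long timeout: o1-pro can be slow)."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)


def extract_output_text(data: dict) -> str:
    """Join the output_text parts of every message item in a Responses API reply."""
    texts = [
        part["text"]
        for item in data.get("output", [])
        if item.get("type") == "message"
        for part in item.get("content", [])
        if part.get("type") == "output_text"
    ]
    return "\n".join(texts)
```

Calling send on the built request and passing the result to extract_output_text prints the model's answer. The generous timeout is deliberate, since o1-pro requests can take several minutes to complete.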

Benefits of Using Apidog with o1-pro

Apidog offers several advantages for working with o1-pro:

- Interactive Testing: Test API calls in real-time and see formatted responses, making it easy to iterate on prompts.

- Automatic Documentation: Document your API implementations automatically, making it easier for team collaboration.

- API Mocking: Create mock responses for o1-pro API calls during development.

- Collaboration Features: Share API collections with team members for collaborative development.

- Environment Management: Easily switch between development, staging, and production environments with different API keys.

- Response Visualization: Get clear visual representation of token usage and costs for better budget management.

- Request History: Keep track of previous API calls for reference and optimization.

Best Practices for Using o1-pro APIs

To maximize the effectiveness of o1-pro and optimize your costs:

- Structure Your Prompts Clearly: For complex reasoning tasks, structure your prompts with clear instructions and expected outputs. Consider using bullet points or numbered instructions for multi-part problems.

- Use System Messages Effectively: Set the context with system messages to guide o1-pro toward the reasoning approach you want. Be specific about the type of reasoning (deductive, inductive, etc.) you're expecting.

- Control Output Length, Not Temperature: o1-pro, like other o-series reasoning models, does not support temperature or top_p. Use max_output_tokens to cap how much the model can generate, keeping in mind that the limit covers hidden reasoning tokens as well as the visible answer, so a budget that is too small can cut a response off before it finishes.

- Keep Prompts Direct: o1-pro reasons internally before it answers, and OpenAI's guidance for reasoning models is that instructions such as "think step by step" are generally unnecessary. State the problem and the output you expect clearly; if you need the reasoning steps in the final answer, ask for a worked solution explicitly.

- Monitor Token Usage: Keep track of input and output tokens to manage costs effectively. Reasoning tokens are not shown in the response but are billed as output tokens; each response's usage data reports them under output_tokens_details.reasoning_tokens. Consider implementing a token estimation function in your application.

- Batch Related Queries: When possible, combine related questions into a single API call rather than making multiple separate calls.

- Implement Caching: Cache common responses to avoid redundant API calls for frequently requested information.
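For the caching tip, a small in-memory cache is often enough during development. This is a sketch under stated assumptions: ResponseCache is a hypothetical class, the ask callable stands in for whatever function actually calls o1-pro, and exact-match keys only help when identical prompts recur. It suits prompts whose answers do not need to change between calls.

```python
import hashlib
import json
from typing import Callable, Dict


class ResponseCache:
    """In-memory cache of answers keyed by a hash of (model, prompt)."""

    def __init__(self, ask: Callable[[str, str], str]):
        self._ask = ask                   # function that performs the real API call
        self._store: Dict[str, str] = {}  # hash key -> cached answer text
        self.hits = 0                     # number of calls served from the cache

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        # Stable JSON encoding so equal inputs always hash to the same key
        payload = json.dumps({"model": model, "prompt": prompt}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get(self, model: str, prompt: str) -> str:
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        answer = self._ask(model, prompt)  # cache miss: pay for one API call
        self._store[key] = answer
        return answer
```

To wire it to the SDK, pass something like lambda model, prompt: client.responses.create(model=model, input=prompt).output_text as the ask argument. A persistent store would follow the same key scheme if answers need to survive restarts.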

Conclusion

o1-pro represents a significant advancement in AI reasoning capabilities, offering powerful tools for tasks requiring structured thinking and complex problem-solving. With transparent per-token pricing and a straightforward implementation, it's accessible to developers looking to enhance their applications with advanced reasoning capabilities.

By following the implementation guidelines and best practices outlined in this article, you can effectively integrate o1-pro into your workflows and take advantage of its sophisticated reasoning abilities while managing your API costs efficiently. Tools like Apidog further streamline the development and testing process, making it easier to harness the full potential of o1-pro for your specific use cases.

As AI reasoning capabilities continue to advance, models like o1-pro will increasingly become essential components in applications that require not just intelligence, but structured, logical thinking that users can follow and understand.