You’ve probably heard about OpenAI’s latest release: a set of new tools for building agents. These tools, available through the OpenAI API, are changing how developers create intelligent, responsive systems. Whether you’re a seasoned coder or just starting out, this blog post will walk you through what you need to know to build your own agents with OpenAI’s offerings.

Why OpenAI’s New Tools for Building Agents Are a Game-Changer

OpenAI announced the integration of web search capabilities into the OpenAI API. This update introduces fast, up-to-date answers with links to relevant web sources, all powered by the same model behind ChatGPT search. It’s a massive leap for developers looking to build agentic systems: think AI assistants that can handle complex tasks, fetch real-time data, and interact seamlessly with users.

OpenAI introduced the Responses API, web search capabilities, file search tools, computer use features, and the Agents SDK. Together, they form a robust framework for building agents that feel smarter and more autonomous than ever before. The new tools are designed to help developers create safe, efficient, and powerful agents.

What Are Agents, Anyway? A Quick Refresher

Agents, in the context of AI, are autonomous systems or programs that can perceive their environment, make decisions, and take actions to achieve specific goals. Think of them as your digital sidekicks, capable of answering questions, executing tasks, or even learning from interactions.

OpenAI’s new tools empower developers to build these agents using the OpenAI API, making them smarter, faster, and more connected to the web.

Getting Started: Understanding OpenAI’s Responses API

The Responses API is a game-changer that combines the best features of OpenAI’s Chat Completions and Assistants APIs into a simpler, more powerful tool. If you’re familiar with OpenAI’s previous APIs, you’ll appreciate how this streamlines the process of building agents.

To get started, head over to OpenAI’s developer documentation. The Responses API lets you integrate multiple tools and models to execute complex tasks, making it perfect for creating AI agents that can handle everything from answering questions to orchestrating multi-step workflows.

Here’s how you can begin:

The OpenAI API provides a simple interface to state-of-the-art AI models for text generation, natural language processing, computer vision, and more. This example generates text output from a prompt, as you might using ChatGPT.

    import OpenAI from "openai";

    const client = new OpenAI();

    const response = await client.responses.create({
      model: "gpt-4o",
      input: "Write a one-sentence bedtime story about a unicorn.",
    });

    console.log(response.output_text);

Analyze image inputs

You can provide image inputs to the model as well. Scan receipts, analyze screenshots, or find objects in the real world with computer vision.

    import OpenAI from "openai";

    const client = new OpenAI();

    const response = await client.responses.create({
      model: "gpt-4o",
      input: [
        { role: "user", content: "What two teams are playing in this photo?" },
        {
          role: "user",
          content: [
            {
              type: "input_image",
              image_url: "https://upload.wikimedia.org/wikipedia/commons/3/3b/LeBron_James_Layup_%28Cleveland_vs_Brooklyn_2018%29.jpg",
            },
          ],
        },
      ],
    });

    console.log(response.output_text);

Extend the model with tools

Give the model access to new data and capabilities using tools. You can either call your own custom code, or use one of OpenAI's powerful built-in tools. This example uses web search to give the model access to the latest information on the Internet.

    import OpenAI from "openai";

    const client = new OpenAI();

    const response = await client.responses.create({
      model: "gpt-4o",
      tools: [{ type: "web_search_preview" }],
      input: "What was a positive news story from today?",
    });

    console.log(response.output_text);

Deliver blazing fast AI experiences

Using either the new Realtime API or server-sent streaming events, you can build high performance, low-latency experiences for your users.

    import { OpenAI } from "openai";

    const client = new OpenAI();

    const stream = await client.responses.create({
      model: "gpt-4o",
      input: [
        {
          role: "user",
          content: "Say 'double bubble bath' ten times fast.",
        },
      ],
      stream: true,
    });

    for await (const event of stream) {
      console.log(event);
    }

Build agents

Use the OpenAI platform to build agents capable of taking action (like controlling computers) on behalf of your users. Use the Agents SDK for Python to create orchestration logic on the backend.

    from agents import Agent, Runner
    import asyncio

    spanish_agent = Agent(
        name="Spanish agent",
        instructions="You only speak Spanish.",
    )

    english_agent = Agent(
        name="English agent",
        instructions="You only speak English",
    )

    triage_agent = Agent(
        name="Triage agent",
        instructions="Handoff to the appropriate agent based on the language of the request.",
        handoffs=[spanish_agent, english_agent],
    )


    async def main():
        result = await Runner.run(triage_agent, input="Hola, ¿cómo estás?")
        print(result.final_output)


    if __name__ == "__main__":
        asyncio.run(main())

    # ¡Hola! Estoy bien, gracias por preguntar. ¿Y tú, cómo estás?

The API’s built-in tools execute these tasks seamlessly, saving you time and effort. Plus, it’s designed with safety and reliability in mind, which is a huge win for developers.

Web Search for Smarter Agents

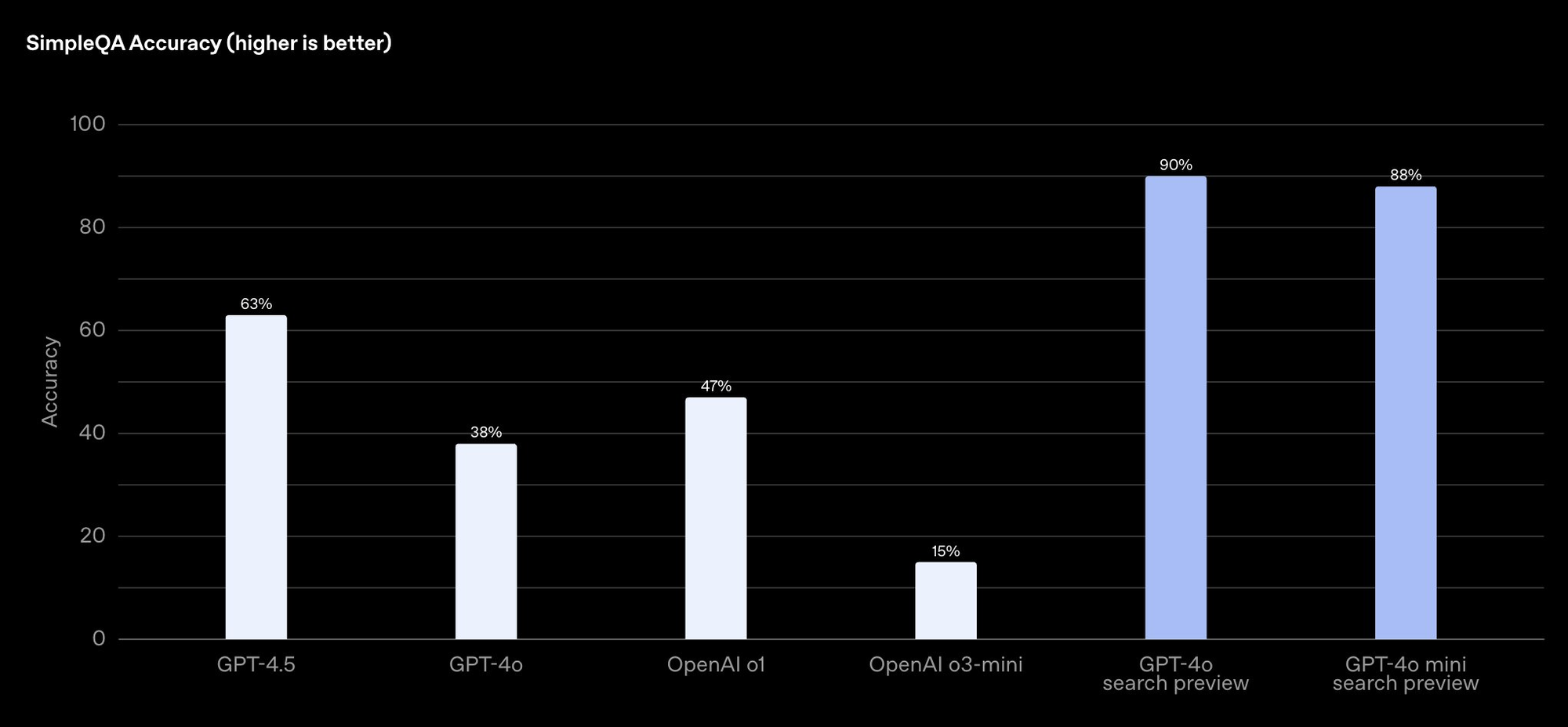

OpenAI’s web search tool, powered by models like GPT-4o search and GPT-4o mini search, allows your agents to fetch up-to-date information from the internet and cite sources. This is especially useful for building agents that need to provide accurate, real-time answers.

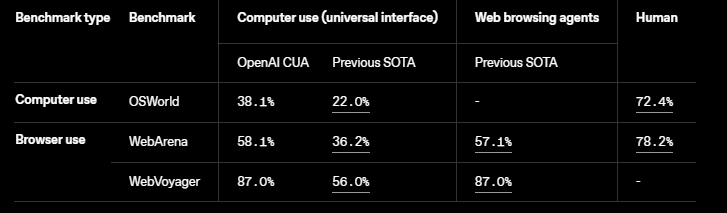

The web search tool is available in preview through the OpenAI API, and it boasts impressive accuracy. On OpenAI’s SimpleQA benchmark, GPT-4o search scores 90%, while GPT-4o mini search scores 88%. That’s some serious precision!

To implement web search in your agent, check out OpenAI’s guide. Here’s a quick rundown:

- Integrate the Tool: Use the Responses API to enable web search capabilities in your agent.

- Craft Queries: Design your agent to send specific queries to the web search tool, which then retrieves relevant results.

- Display Results: Your agent can present the findings to users, complete with links to sources for transparency.
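The "Display Results" step above can be sketched as a small helper that pulls cited links out of a response. This is a minimal sketch, not OpenAI's own code: it assumes the response has been converted to a plain dictionary, and that web search citations arrive as `url_citation` annotations on `output_text` content parts. The `extract_citations` name and the sample payload are made up for illustration, so check the current API reference before relying on the field names.

```python
from typing import Any


def extract_citations(response: dict[str, Any]) -> list[dict[str, str]]:
    """Collect unique title/url pairs from url_citation annotations (assumed shape)."""
    citations: list[dict[str, str]] = []
    seen: set[str] = set()
    for item in response.get("output", []):
        if item.get("type") != "message":
            continue  # skip web_search_call and other non-message items
        for part in item.get("content", []):
            if part.get("type") != "output_text":
                continue
            for annotation in part.get("annotations", []):
                if annotation.get("type") != "url_citation":
                    continue
                url = annotation.get("url", "")
                if url and url not in seen:
                    seen.add(url)
                    citations.append({"title": annotation.get("title", ""), "url": url})
    return citations


# Hypothetical payload for illustration only; this is not real search output.
sample_response = {
    "output": [
        {"type": "web_search_call", "id": "ws_example", "status": "completed"},
        {
            "type": "message",
            "role": "assistant",
            "content": [
                {
                    "type": "output_text",
                    "text": "Example answer with a source.",
                    "annotations": [
                        {"type": "url_citation", "url": "https://example.com/a", "title": "Example A"},
                        {"type": "url_citation", "url": "https://example.com/a", "title": "Example A"},
                    ],
                }
            ],
        },
    ]
}

for citation in extract_citations(sample_response):
    print(f"{citation['title']}: {citation['url']}")
```

Deduplicating by URL keeps the source list short when the model cites the same page several times in one answer.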

Imagine building a customer service bot that uses web search to answer questions about product availability or industry trends. With OpenAI’s web search, your agent can deliver timely, accurate responses, boosting user trust and satisfaction.

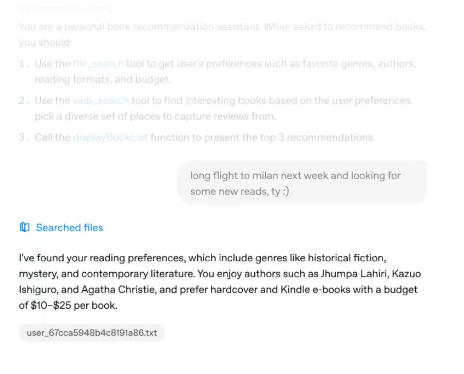

Mastering File Search for Efficient Data Access

Another powerful tool in OpenAI’s arsenal is file search. This feature allows your AI agents to quickly scan through files in a company’s databases to retrieve information. It’s ideal for enterprise applications where agents need to access internal documents, reports, or datasets.

OpenAI emphasizes that it won’t train its models on these files, ensuring privacy and security, a critical consideration for businesses. You can learn more about file search in the documentation.

Here’s how to incorporate file search into your agent:

- Upload Files: Use the OpenAI API to upload your files to the platform.

- Configure the Agent: Set up your agent to use the file search tool within the Responses API.

- Query the Data: Your agent can search for specific information within the files and return relevant results.
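The "Configure the Agent" and "Query the Data" steps above might look like the following. This is a hedged sketch rather than a definitive recipe: it assumes your files have already been uploaded into a vector store and that the `file_search` tool accepts `vector_store_ids` and `max_num_results`. The helper name `build_file_search_request` and the ID `vs_example123` are placeholders invented for this example. The block only builds the request, so it runs without network access.

```python
from typing import Any


def build_file_search_request(
    question: str,
    vector_store_ids: list[str],
    model: str = "gpt-4o",
    max_num_results: int = 5,
) -> dict[str, Any]:
    """Build keyword arguments for client.responses.create (assumed tool shape)."""
    if not vector_store_ids:
        raise ValueError("At least one vector store ID is required.")
    return {
        "model": model,
        "input": question,
        "tools": [
            {
                "type": "file_search",
                "vector_store_ids": list(vector_store_ids),
                "max_num_results": max_num_results,
            }
        ],
    }


# "vs_example123" is a placeholder, not a real vector store ID.
request = build_file_search_request(
    "How many vacation days do new employees get?",
    ["vs_example123"],
)
print(request)

# With the openai package installed and OPENAI_API_KEY set, the request could be sent as:
#   from openai import OpenAI
#   response = OpenAI().responses.create(**request)
#   print(response.output_text)
```

Keeping payload construction separate from the network call makes the configuration easy to inspect and unit test before any files are queried.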

For instance, you could build an HR agent that searches employee records to provide payroll details or vacation balances. This level of automation can save hours of manual work and improve efficiency across departments.

Automating Tasks with Computer Use Capabilities

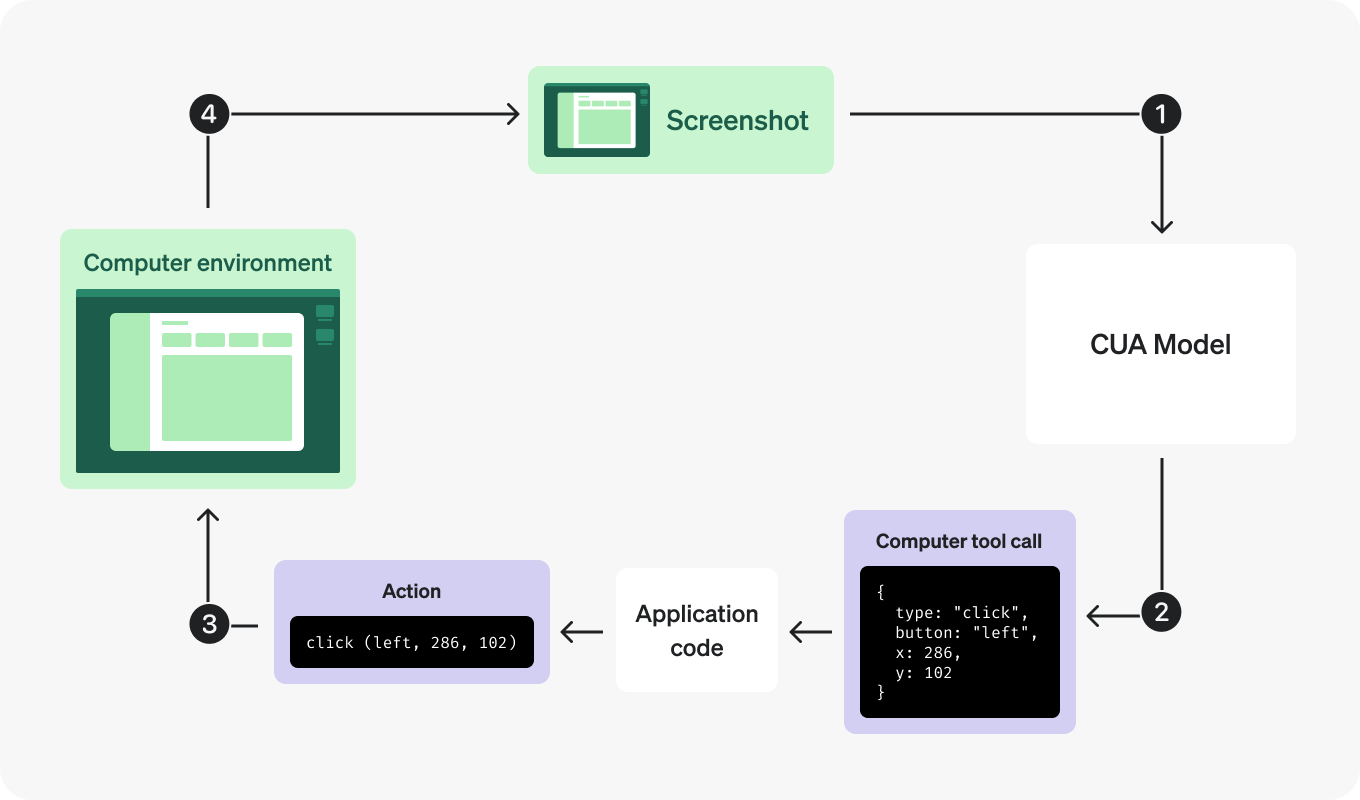

OpenAI’s Computer-Using Agent (CUA) model, which powers the Operator product, enables agents to generate mouse and keyboard actions. This means your agents can automate tasks like data entry, app workflows, and website navigation.

This tool is currently in research preview, but it’s already showing promise for developers. You can explore its capabilities in the documentation. The consumer version of CUA, available in Operator, focuses on web-based actions, but enterprises can run it locally for broader applications.

Here’s how to get started:

- Access the Preview: Sign up for the research preview to test the CUA model.

- Define Tasks: Program your agent to perform specific computer tasks, such as filling out forms or clicking buttons.

- Monitor Performance: Use OpenAI’s tools to debug and optimize your agent’s actions.

Imagine building an agent that automates repetitive office tasks, like updating spreadsheets or scheduling meetings. With computer use capabilities, your agent can handle these tasks autonomously, freeing up human workers for more creative endeavors.

1. Send a request to the model

First, you might want to set up the OpenAI API key:

    import openai
    import os

    # Set API key
    openai.api_key = os.environ.get("OPENAI_API_KEY")

Send a request to create a Response with the computer-use-preview model equipped with the computer_use_preview tool. This request should include details about your environment, along with an initial input prompt.

Optionally, you can include a screenshot of the initial state of the environment.

To be able to use the computer_use_preview tool, you need to set the truncation parameter to "auto" (by default, truncation is disabled).

    from openai import OpenAI

    client = OpenAI()

    response = client.responses.create(
        model="computer-use-preview",
        tools=[{
            "type": "computer_use_preview",
            "display_width": 1024,
            "display_height": 768,
            "environment": "browser",  # other possible values: "mac", "windows", "ubuntu"
        }],
        input=[
            {
                "role": "user",
                "content": "Check the latest OpenAI news on bing.com.",
            },
            # Optional: include a screenshot of the initial state of the environment
            # {
            #     "type": "input_image",
            #     "image_url": f"data:image/png;base64,{screenshot_base64}",
            # },
        ],
        truncation="auto",
    )

    print(response.output)

2. Receive a suggested action

The model returns an output that contains either a computer_call item, just text, or other tool calls, depending on the state of the conversation.

Examples of computer_call items are a click, a scroll, a key press, or any other event defined in the API reference. In our example, the item is a click action:

    "output": [
      {
        "type": "reasoning",
        "id": "rs_67cc...",
        "content": []
      },
      {
        "type": "computer_call",
        "id": "cu_67cc...",
        "call_id": "call_zw3...",
        "action": {
          "type": "click",
          "button": "left",
          "x": 156,
          "y": 50
        },
        "pending_safety_checks": [],
        "status": "completed"
      }
    ]

The model may return a reasoning item in the response output for some actions. If it does, always include those reasoning items when you send the next request to the CUA model.

The reasoning items are only compatible with the same model that produced them. If you implement a flow where you use several models with the same conversation history, you should filter these reasoning items out of the inputs array you send to other models.
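Filtering reasoning items out before handing the history to a different model can be sketched in a few lines. This is a minimal example, assuming you keep the conversation as a list of plain dictionaries shaped like the output shown above. The `strip_reasoning_items` helper and the placeholder IDs are hypothetical.

```python
from typing import Any


def strip_reasoning_items(items: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Return a new list of conversation items without reasoning entries."""
    return [item for item in items if item.get("type") != "reasoning"]


# Hypothetical conversation history for illustration; the IDs are placeholders.
history = [
    {"role": "user", "content": "Check the latest OpenAI news on bing.com."},
    {"type": "reasoning", "id": "rs_example", "content": []},
    {
        "type": "computer_call",
        "id": "cu_example",
        "call_id": "call_example",
        "action": {"type": "click", "button": "left", "x": 156, "y": 50},
        "pending_safety_checks": [],
        "status": "completed",
    },
]

for_other_model = strip_reasoning_items(history)
# Items without an explicit "type" (plain role/content messages) are labeled "message" here.
print([item.get("type", "message") for item in for_other_model])
# prints ['message', 'computer_call']
```

The original `history` list is left untouched, so the full version, including reasoning items, can still be sent back to the CUA model.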

3. Execute the action in your environment

Execute the corresponding actions on your computer or browser. How you map a computer call to actions through code depends on your environment. This code shows example implementations for the most common computer actions.

    import time


    def handle_model_action(page, action):
        """
        Given a computer action (e.g., click, double_click, scroll, etc.),
        execute the corresponding operation on the Playwright page.
        """
        action_type = action.type

        try:
            # The match statement requires Python 3.10 or newer.
            match action_type:

                case "click":
                    x, y = action.x, action.y
                    button = action.button
                    print(f"Action: click at ({x}, {y}) with button '{button}'")
                    # Not handling things like middle click, etc.
                    if button != "left" and button != "right":
                        button = "left"
                    page.mouse.click(x, y, button=button)

                case "scroll":
                    x, y = action.x, action.y
                    scroll_x, scroll_y = action.scroll_x, action.scroll_y
                    print(f"Action: scroll at ({x}, {y}) with offsets (scroll_x={scroll_x}, scroll_y={scroll_y})")
                    page.mouse.move(x, y)
                    page.evaluate(f"window.scrollBy({scroll_x}, {scroll_y})")

                case "keypress":
                    keys = action.keys
                    for k in keys:
                        print(f"Action: keypress '{k}'")
                        # A simple mapping for common keys; expand as needed.
                        if k.lower() == "enter":
                            page.keyboard.press("Enter")
                        elif k.lower() == "space":
                            page.keyboard.press(" ")
                        else:
                            page.keyboard.press(k)

                case "type":
                    text = action.text
                    print(f"Action: type text: {text}")
                    page.keyboard.type(text)

                case "wait":
                    print("Action: wait")
                    time.sleep(2)

                case "screenshot":
                    # Nothing to do as screenshot is taken at each turn
                    print("Action: screenshot")

                # Handle other actions here

                case _:
                    print(f"Unrecognized action: {action}")

        except Exception as e:
            print(f"Error handling action {action}: {e}")

4. Capture the updated screenshot

After executing the action, capture the updated state of the environment as a screenshot, which also differs depending on your environment.

    def get_screenshot(page):
        """
        Take a screenshot of the current viewport using Playwright and return the image bytes.
        """
        return page.screenshot()

5. Repeat

Once you have the screenshot, you can send it back to the model as a computer_call_output to get the next action. Repeat these steps as long as you get a computer_call item in the response.

import time

import base64

from openai import OpenAI

client = OpenAI()

def computer_use_loop(instance, response):

"""

Run the loop that executes computer actions until no 'computer_call' is found.

"""

while True:

computer_calls = [item for item in response.output if item.type == "computer_call"]

if not computer_calls:

print("No computer call found. Output from model:")

for item in response.output:

print(item)

break # Exit when no computer calls are issued.

# We expect at most one computer call per response.

computer_call = computer_calls[0]

last_call_id = computer_call.call_id

action = computer_call.action

# Execute the action (function defined in step 3)

handle_model_action(instance, action)

time.sleep(1) # Allow time for changes to take effect.

# Take a screenshot after the action (function defined in step 4)

screenshot_bytes = get_screenshot(instance)

screenshot_base64 = base64.b64encode(screenshot_bytes).decode("utf-8")

# Send the screenshot back as a computer_call_output

response = client.responses.create(

model="computer-use-preview",

previous_response_id=response.id,

tools=[

{

"type": "computer_use_preview",

"display_width": 1024,

"display_height": 768,

"environment": "browser"

}

],

input=[

{

"call_id": last_call_id,

"type": "computer_call_output",

"output": {

"type": "input_image",

"image_url": f"data:image/png;base64,{screenshot_base64}"

}

}

],

truncation="auto"

)

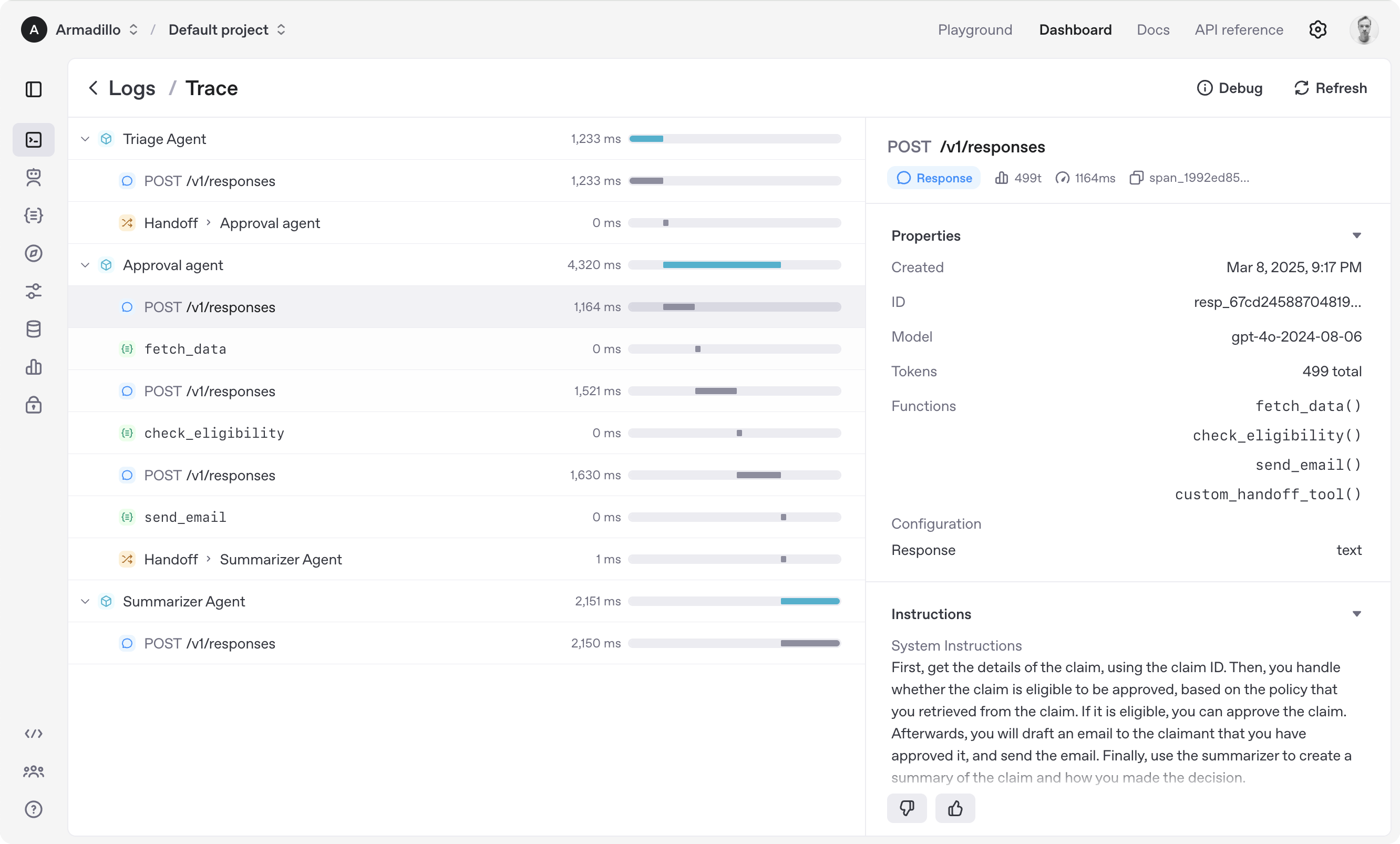

return responseOrchestrating Agents with the Agents SDK

The Agents SDK is OpenAI’s open-source toolkit for building and managing multi-agent workflows. This SDK builds on OpenAI’s earlier framework, Swarm, and offers developers free tools to integrate models, implement safeguards, and monitor agent activities.

The Agents SDK is Python-first and includes features like built-in agent loops and safety checks. It’s perfect for creating complex systems where multiple agents work together to solve problems.

Here’s how to use the Agents SDK:

- Download the SDK: Access the open-source code from OpenAI’s GitHub repository.

- Set Up Multi-Agent Workflows: Use the SDK to orchestrate tasks between agents, delegating based on their capabilities.

- Add Safeguards: Implement safety checks to ensure your agents operate responsibly and reliably.

For example, you could build a sales team of AI agents where one agent handles web research, another manages files, and a third automates computer tasks. The Agents SDK ties them together, creating a seamless, efficient system.
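To make the delegation-plus-safeguard idea concrete, here is a toy sketch in plain Python. It deliberately does not use the Agents SDK. `ToyAgent`, `triage`, `passes_safeguard`, and the keyword lists are all invented for illustration. In the real SDK the model decides which agent to hand off to, whereas this stand-in uses simple keyword matching so it can run anywhere.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToyAgent:
    """Stand-in for an agent: a name, the topics it covers, and a handler."""
    name: str
    keywords: tuple[str, ...]
    handle: Callable[[str], str]


BLOCKED_TERMS = ("password", "credit card")  # illustrative safeguard list


def passes_safeguard(request: str) -> bool:
    """Reject requests that mention any blocked term."""
    lowered = request.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)


def triage(request: str, agents: list[ToyAgent], fallback: ToyAgent) -> str:
    """Run the safeguard, then hand off to the first agent whose keywords match."""
    if not passes_safeguard(request):
        return "Request blocked by safeguard."
    lowered = request.lower()
    for agent in agents:
        if any(keyword in lowered for keyword in agent.keywords):
            return f"[{agent.name}] {agent.handle(request)}"
    return f"[{fallback.name}] {fallback.handle(request)}"


research = ToyAgent("Research agent", ("trend", "competitor"), lambda r: "Searching the web.")
files = ToyAgent("Files agent", ("contract", "report"), lambda r: "Searching internal files.")
computer = ToyAgent("Computer agent", ("spreadsheet", "form"), lambda r: "Automating the task.")
general = ToyAgent("General agent", (), lambda r: "Answering directly.")

team = [research, files, computer]
print(triage("Find the latest competitor pricing trends", team, general))
print(triage("Update the sales spreadsheet", team, general))
print(triage("What is the admin password?", team, general))
```

Running the safeguard before any handoff means a blocked request never reaches a specialist agent, which mirrors the "Add Safeguards" step in the list above.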

Conclusion

From the Responses API to web search, file search, computer use, and the Agents SDK, OpenAI has equipped developers with everything needed to create autonomous, intelligent systems. Whether you’re automating business tasks, enhancing customer service, or exploring new research frontiers, these tools open up a world of possibilities.

So, what are you waiting for? Dive into the OpenAI API, experiment with their new tools, and start building agents that wow your users. And don’t forget to download Apidog for free to streamline your API development and make your journey even smoother!