Ollama extends its local AI tooling with a new web search API and an MCP Server. Developers can now give models access to real-time information, and the update simplifies tool integration across several clients.

Ollama is a platform for running large language models locally, so engineers can deploy models without relying on cloud services. The new web search API expands that scope: it lets models query the internet directly, so applications can handle current events and dynamic data more effectively.

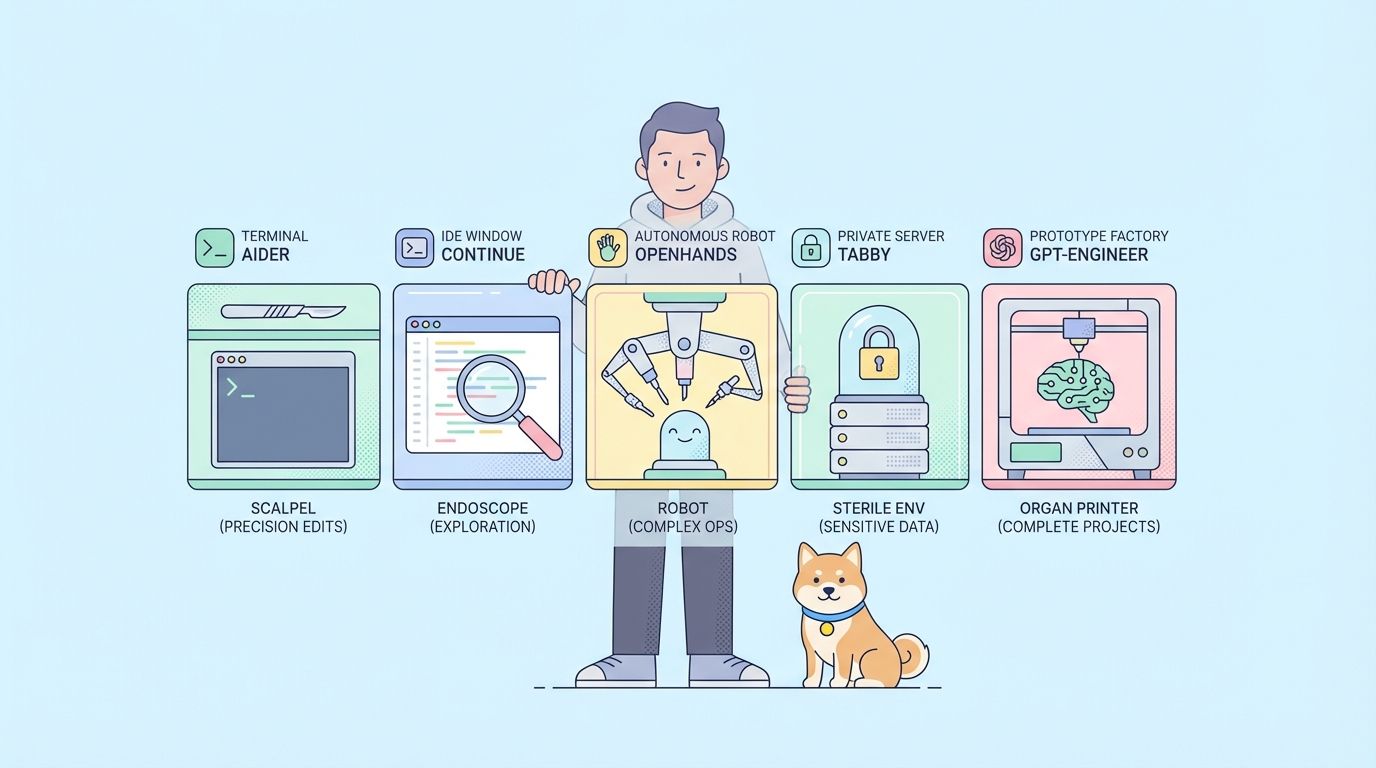

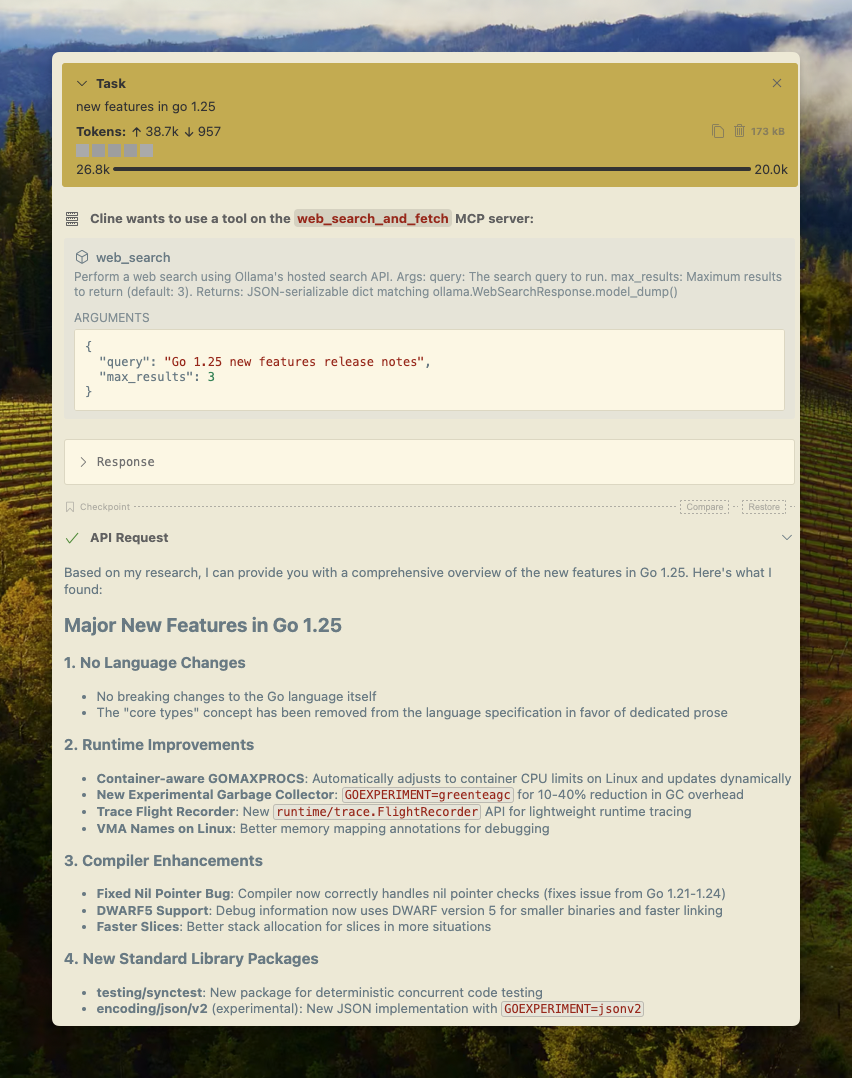

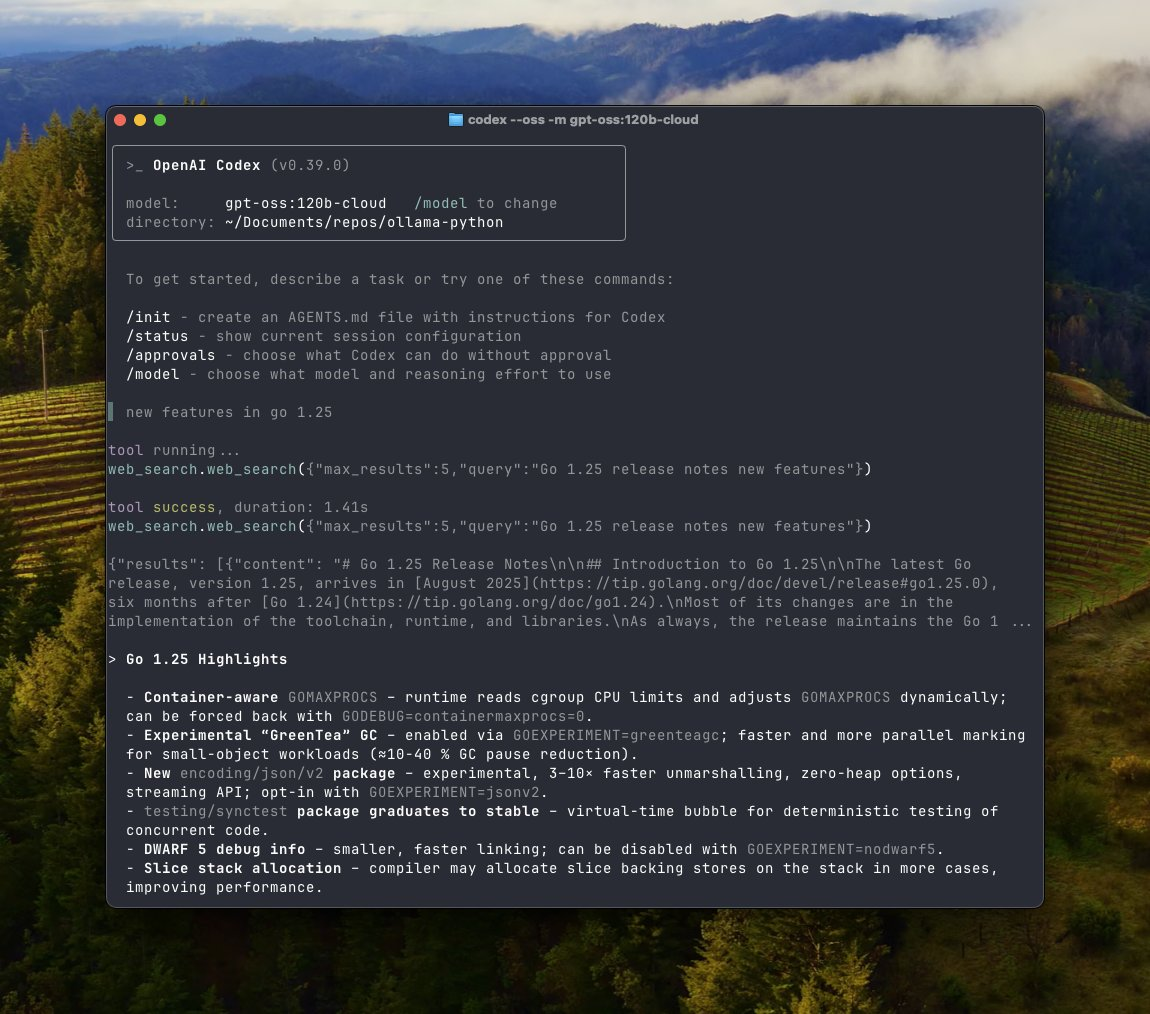

The MCP Server complements this by providing a standardized protocol for context exchange, so developers can connect models to external tools. For instance, it integrates with clients such as Cline, Codex, and Goose. This setup enables workflows in which models work with web search results in real time.

On the technical side, Ollama's web search API operates via REST endpoints. Users send POST requests to https://ollama.com/api/web_search with a query parameter. The system returns relevant results, capped at a maximum of 10 per request. The web fetch API at https://ollama.com/api/web_fetch retrieves content from specific URLs. Both require an API key from an Ollama account.
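The request described above can be sketched with only the Python standard library. The JSON field names (query, max_results), the default of 5 results, and the Bearer authorization scheme follow Ollama's published examples but are assumptions to check against the current documentation; build_search_request and web_search are hypothetical helper names.

```python
import json
import os
import urllib.request

SEARCH_URL = "https://ollama.com/api/web_search"


def build_search_request(query, api_key, max_results=5):
    """Build (but do not send) a POST request for the web search endpoint."""
    # Assumed JSON body fields: "query" and "max_results".
    body = json.dumps({"query": query, "max_results": max_results}).encode("utf-8")
    return urllib.request.Request(
        SEARCH_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed bearer-token scheme
            "Content-Type": "application/json",
        },
    )


def web_search(query, max_results=5):
    """Send the request and return the decoded JSON response."""
    api_key = os.environ["OLLAMA_API_KEY"]  # raises KeyError if the key is unset
    request = build_search_request(query, api_key, max_results)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

With OLLAMA_API_KEY set, calling web_search("what is ollama?") would send the request. Keeping request construction separate from sending makes the headers and body easy to inspect without network access.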

Ollama runs on all major platforms. On macOS, users can install via Homebrew. Windows users download the installer directly. On Linux, users install through the official install script or a distribution package. Regardless of the platform, the API behaves the same way.

What Developers Need to Know About Ollama

Ollama powers local inference for models like Llama and Qwen. It downloads quantized models efficiently. Users pull models with commands such as ollama pull qwen3:4b. At run time, Ollama takes advantage of hardware such as NVIDIA GPUs or Apple Silicon.

Ollama also supports multimodal tasks. For example, vision-capable models process images alongside text. The platform evolves rapidly, with updates improving scheduling and context handling.

Developers appreciate Ollama's open-source nature, which lets them customize models without vendor lock-in. The limitation is static knowledge: models trained on past data struggle with recent information. This is the gap the web search API fills.

The API augments responses with fresh data, which can reduce hallucinations on time-sensitive questions. Engineers can then build more reliable applications for research or automation.

The MCP Server standardizes these interactions. MCP, or Model Context Protocol, facilitates data exchange between models and external systems. Ollama provides its MCP Server as a Python script, which enables tool use from MCP-compatible clients.

For instance, MCP Server enables file operations, calculations, and web access. Developers configure it for local LLMs, extending capabilities beyond basic inference.

Exploring Ollama's Web Search API in Depth

Ollama's web search API delivers structured results. Users specify a query and an optional max_results value. Each result includes a content snippet, a URL, and metadata such as the title. This format makes the response straightforward for agents to parse.
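As a rough illustration of parsing that structure, the sketch below converts a decoded response into typed records. The field names (results, title, url, content) are assumptions based on Ollama's published examples, and SearchResult and parse_results are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class SearchResult:
    title: str
    url: str
    content: str


def parse_results(payload):
    """Convert a decoded search response into SearchResult objects."""
    results = []
    for item in payload.get("results", []):
        url = item.get("url")
        if not url:
            continue  # skip entries an agent could not cite or fetch
        results.append(
            SearchResult(
                title=item.get("title", ""),
                url=url,
                content=item.get("content", ""),
            )
        )
    return results


# Hand-written sample payload, not real API output.
sample = {
    "results": [
        {"title": "Ollama", "url": "https://ollama.com/", "content": "Run models locally."},
        {"title": "No link", "content": "Dropped because it has no URL."},
    ]
}
for result in parse_results(sample):
    print(f"{result.title}: {result.url}")
```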

To integrate from Python, install the official library with pip install ollama. Then call ollama.web_search(query="example"). The function reads the API key from the OLLAMA_API_KEY environment variable.

Similarly, JavaScript users can use ollama-js. Import the module and invoke new Ollama().webSearch({query: "example"}). Examples in repositories demonstrate error handling and retries.

cURL provides a low-level option. Craft the request with an Authorization header that carries the API key. This approach suits scripting or testing.

Apidog can streamline this process. As an API management tool, Apidog visualizes endpoints and parameters. It generates code snippets for Ollama's API, accelerating development.

Search and fetch results can be long, sometimes spanning thousands of tokens. Models with extended context windows therefore perform best, and Ollama recommends a context length of roughly 32,000 tokens.

The fetch endpoint complements search. It extracts content from a given URL without needing a browser. Combine both for comprehensive agents.
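One rough way to keep long results within a model's context budget is to cap each result by an estimated token count before adding it to the conversation. The 4-characters-per-token ratio and the helper name truncate_for_context are assumptions for illustration, not part of Ollama's API.

```python
def truncate_for_context(text, max_tokens=2000, chars_per_token=4):
    """Trim text to an approximate token budget using a characters-per-token estimate."""
    if max_tokens < 0 or chars_per_token <= 0:
        raise ValueError("max_tokens must be >= 0 and chars_per_token must be > 0")
    limit = max_tokens * chars_per_token
    return text if len(text) <= limit else text[:limit]
```

A real tokenizer would give exact counts; the character estimate is only a cheap guard against oversized tool results.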

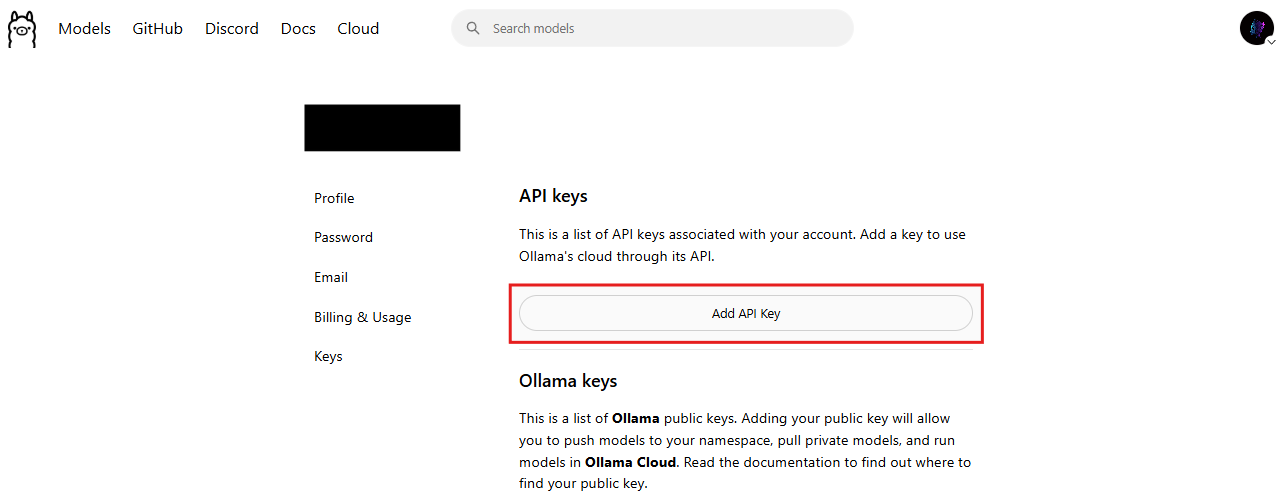

Security remains paramount. Ollama requires API keys, preventing unauthorized access. Users generate keys at https://ollama.com/settings/keys.

As a practical example, consider a research agent. The agent queries web search, fetches the most relevant pages, and synthesizes an answer. On questions about recent events, this workflow outperforms a model limited to its training data.
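The search, fetch, and synthesize steps can be sketched as a small pipeline. The function below is a hypothetical outline: the search, fetch, and synthesize callables are injected, so they could wrap Ollama's API and a chat model or simple stand-ins for testing.

```python
def research(question, search, fetch, synthesize, max_pages=3):
    """Search, fetch the top pages, then hand everything to a synthesis step.

    search(question) -> list of dicts with a "url" key
    fetch(url) -> page text
    synthesize(question, pages) -> final answer
    """
    pages = []
    for hit in search(question)[:max_pages]:
        try:
            pages.append({"url": hit["url"], "text": fetch(hit["url"])})
        except Exception as exc:  # one unreachable page should not abort the run
            pages.append({"url": hit["url"], "text": "", "error": str(exc)})
    return synthesize(question, pages)
```

Recording a failed fetch instead of raising lets the synthesis step decide how to treat missing sources.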

Demystifying MCP Server for Ollama Users

MCP Server bridges models and tools. It implements Model Context Protocol, a framework for context sharing. In Ollama, a Python script runs the server.

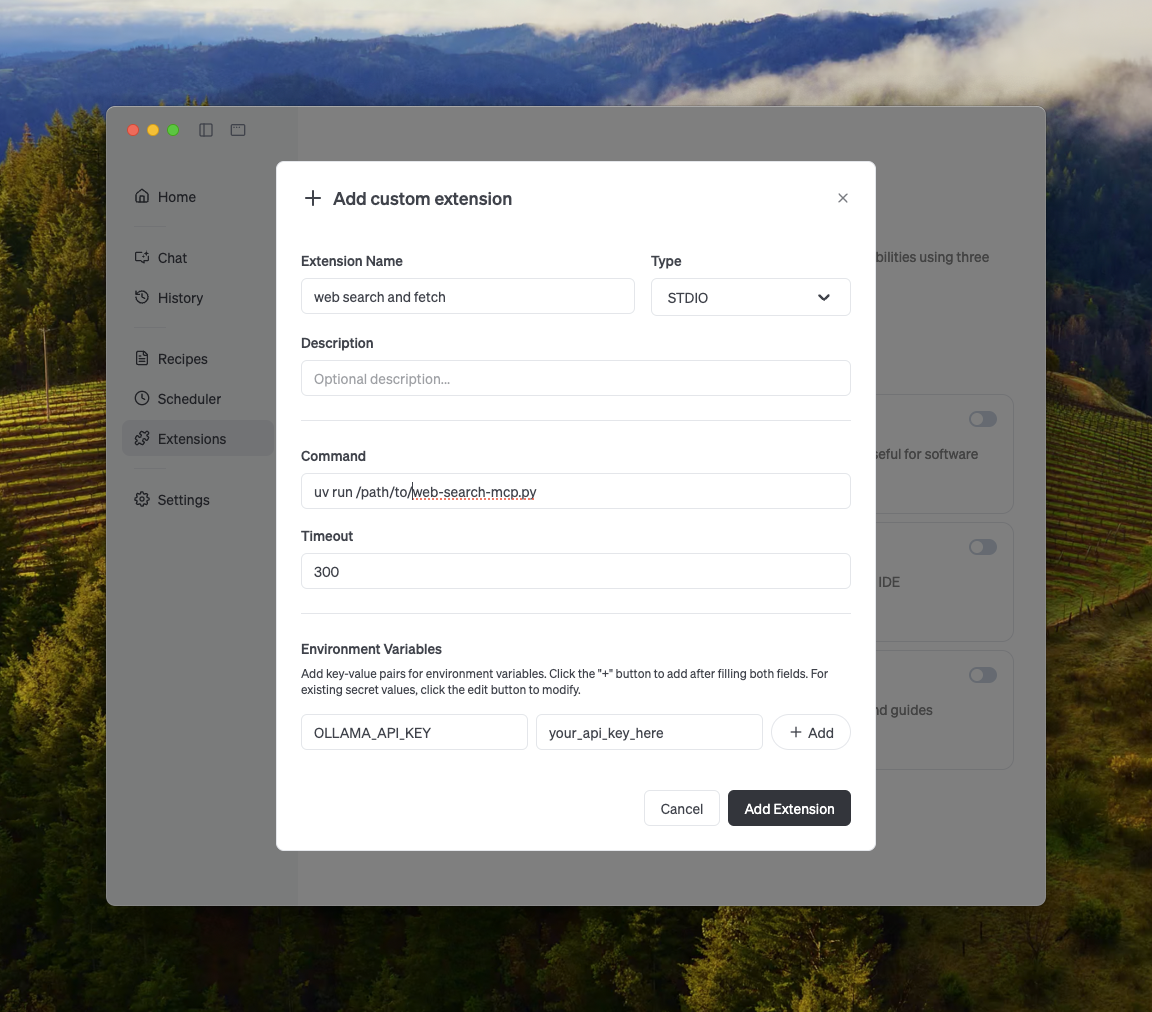

Setup involves cloning the example repository and setting environment variables. For example, uv run web-search-mcp.py launches the server. Clients connect via compatible interfaces.

In Cline, add the server command in the MCP settings and include OLLAMA_API_KEY in its environment. For Codex, edit the config.toml file. Goose follows a similar pattern.
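A client entry of this kind might look like the JSON produced by the sketch below. The mcpServers layout with command, args, and env keys is the convention commonly used by MCP clients such as Cline and is an assumption here, as are the server label and the placeholder path and key; Codex expresses the same command, args, and env values in TOML. Check each client's documentation for the exact schema.

```python
import json


def mcp_server_entry(script_path, api_key):
    """Return one stdio server entry that launches the script with uv."""
    return {
        "command": "uv",
        "args": ["run", script_path],
        "env": {"OLLAMA_API_KEY": api_key},
    }


config = {
    "mcpServers": {
        # "web_search_and_fetch" is an arbitrary, hypothetical server label.
        "web_search_and_fetch": mcp_server_entry(
            "path/to/web-search-mcp.py", "your_api_key_here"
        )
    }
}
print(json.dumps(config, indent=2))
```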

This integration unlocks web search in clients. Models call tools dynamically, enhancing interactivity.
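Under the hood, an MCP client asks a server to run a tool by sending a JSON-RPC 2.0 request with the tools/call method. The sketch below builds such a message; the tool name web_search and its query argument are assumptions about what the example server exposes, and tool_call_request is a hypothetical helper.

```python
import itertools
import json

_request_ids = itertools.count(1)  # each request needs a unique id


def tool_call_request(name, arguments):
    """Build a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


message = tool_call_request("web_search", {"query": "latest AI news"})
print(json.dumps(message))
```

Clients such as Cline construct and send these messages automatically; the sketch only shows the shape of the exchange.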

Moreover, MCP Server supports extensions. Developers add custom tools for email, GitHub, or images. This flexibility positions Ollama as infrastructure for agents.

Platform details vary. On Windows with NVIDIA GPUs, installations rely on CUDA drivers. Linux users can run the stack in Docker for isolation. macOS benefits from native acceleration on Apple Silicon.

For advanced setups, run multiple MCP Server instances to spread load across larger deployments.

How to Integrate Ollama's API and MCP Server

Integration starts with account creation. Sign up for free at Ollama's site. Generate an API key immediately.

Next, install Ollama locally. Run ollama serve to start the server. Pull models suited for tools, like gpt-oss.

For web search, set OLLAMA_API_KEY. Test with Python:

    import ollama

    # Requires OLLAMA_API_KEY to be set in the environment.
    response = ollama.web_search(query="latest AI news", max_results=5)
    print(response)

This prints the response, which contains the list of results.

To incorporate MCP Server, download examples from GitHub. Run the script and configure clients.

For Cline: edit the MCP configuration to point at the server, then test with prompts that invoke search.

Codex requires updates to config.toml. Specify the command and args that launch the server.

Goose integrates via its MCP settings, which enable the web tools.

Furthermore, build custom agents. Use loops to handle multi-turn interactions. Parse tool calls and feed back results.

Error handling proves crucial. Implement retries for rate limits. Monitor usage to stay within tiers.
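A minimal retry sketch with exponential backoff is shown below. RateLimitError is a hypothetical stand-in for whatever error a client raises on an HTTP 429 response, and with_retries is an illustrative helper rather than part of any Ollama library.

```python
import time


class RateLimitError(Exception):
    """Hypothetical error type standing in for an HTTP 429 response."""


def with_retries(call, attempts=4, base_delay=1.0, sleep=time.sleep):
    """Run call(), retrying on RateLimitError with exponential backoff."""
    for attempt in range(attempts):
        try:
            return call()
        except RateLimitError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the error to the caller
            sleep(base_delay * 2 ** attempt)  # 1s, 2s, 4s, ...


# Demo: a fake call that fails twice, with delays recorded instead of slept.
outcomes = iter([RateLimitError(), RateLimitError(), "ok"])
delays = []


def flaky_call():
    outcome = next(outcomes)
    if isinstance(outcome, Exception):
        raise outcome
    return outcome


result = with_retries(flaky_call, sleep=delays.append)
print(result, delays)
```

Passing sleep as a parameter keeps the helper testable without real waiting.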

Apidog assists here. It mocks responses, tests authentication, and documents workflows. Download Apidog to prototype Ollama integrations quickly.

Building Powerful Search Agents with Ollama

Agents represent a core use case. Ollama provides examples with Qwen 3.

Pull the model: ollama pull qwen3:4b.

In Python, define tools:

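    # Each tool declares its parameters so the model knows which arguments to supply.
    tools = [
        {"type": "function", "function": {"name": "web_search", "description": "Search the web",
            "parameters": {"type": "object", "properties": {"query": {"type": "string"}}, "required": ["query"]}}},
        {"type": "function", "function": {"name": "web_fetch", "description": "Fetch URL content",
            "parameters": {"type": "object", "properties": {"url": {"type": "string"}}, "required": ["url"]}}},
    ]

The chat loop processes messages, calls the requested tools, and appends their results to the conversation.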

This agent answers queries like "What's the current weather in Tokyo?" by searching and fetching.

Expand to vision: Analyze images via multimodal models, then search for context.

To optimize results, increase the context length. Ollama's cloud models run at their full context length.

Agents reduce costs by minimizing unnecessary calls. Cache results locally.
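Local caching could be as simple as the time-limited cache sketched below. TTLCache is a hypothetical helper; the fetcher argument stands for any function that performs the real search, and the 300-second default lifetime is an arbitrary assumption.

```python
import time


class TTLCache:
    """Cache results per query and expire them after ttl_seconds."""

    def __init__(self, fetcher, ttl_seconds=300, clock=time.monotonic):
        self._fetcher = fetcher
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # query -> (expiry time, cached result)

    def get(self, query):
        now = self._clock()
        entry = self._entries.get(query)
        if entry and entry[0] > now:
            return entry[1]  # still fresh: no API call
        result = self._fetcher(query)
        self._entries[query] = (now + self._ttl, result)
        return result


# Demo with a fake fetcher that records every real lookup.
calls = []
cache = TTLCache(lambda q: calls.append(q) or f"results for {q}", ttl_seconds=60)
cache.get("ollama")
cache.get("ollama")  # served from the cache
print(len(calls))
```

A short lifetime suits fast-moving topics such as news, while reference material can be cached for longer.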

Furthermore, combine with other APIs. Integrate databases or computation tools via MCP.

Pricing Details for Ollama Across Platforms

Ollama offers tiered pricing. The base is free, with generous search limits. This suits hobbyists and testing.

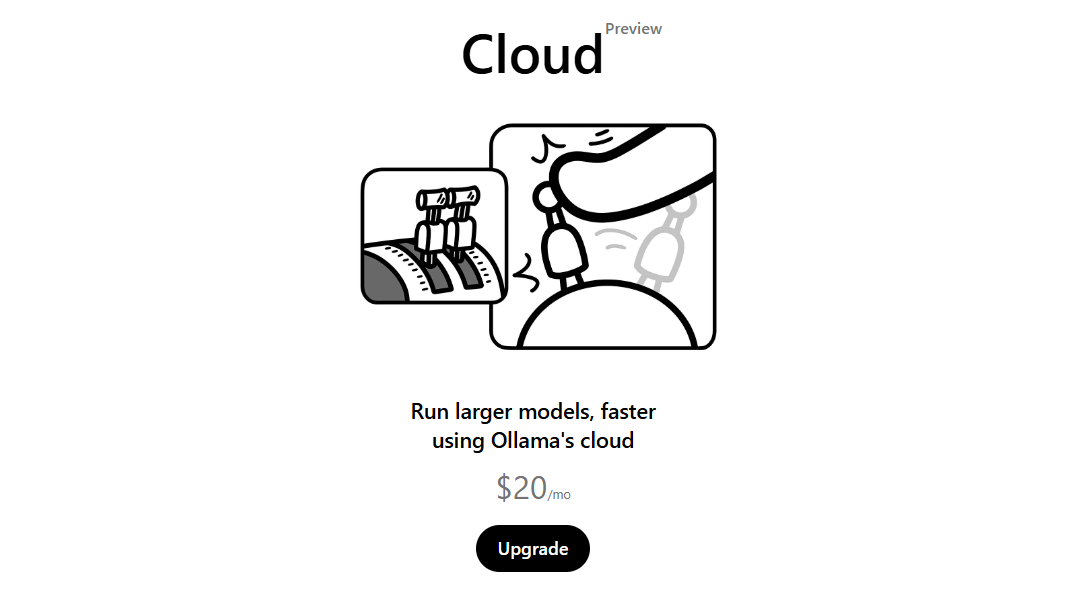

For production, upgrade subscriptions. Cloud access starts at approximately $20 monthly, based on community discussions. Higher tiers provide unlimited queries and priority support.

Platforms influence costs indirectly. Local runs on macOS, Windows, Linux incur no fees beyond hardware. Cloud models bill per usage.

Web search API charges per call in advanced plans. However, free tiers cover most needs.

Compare to alternatives: OpenAI's search costs $10 per 1k calls. Ollama undercuts this for local-first users.

Enterprises calculate ROI. Local inference saves on data transfer, while API adds minimal overhead.

For budgeting, monitor consumption through the usage statistics Ollama provides.

Real-World Use Cases and Examples

Developers apply this in chatbots. A bot searches the news, fetches articles, and summarizes them.

In education, tools look up facts before answering, which reduces errors.

Researchers build agents for literature reviews that search academic sites and fetch PDFs.

E-commerce teams integrate search for product recommendations, searching trends and fetching reviews.

Code example for agent:

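    import ollama

    # The library accepts plain functions as tools; this maps tool names back to them.
    available_tools = {"web_search": ollama.web_search, "web_fetch": ollama.web_fetch}

    def run_agent(prompt):
        messages = [{"role": "user", "content": prompt}]
        while True:
            response = ollama.chat(model="qwen3:4b", messages=messages, tools=list(available_tools.values()))
            messages.append(response.message)  # keep the assistant turn, including its tool calls
            if not response.message.tool_calls:
                return response.message.content
            for call in response.message.tool_calls:
                tool = available_tools.get(call.function.name)
                # Arguments arrive as a dict already, so json.loads is not needed.
                result = tool(**call.function.arguments) if tool else f"Unknown tool: {call.function.name}"
                messages.append({"role": "tool", "content": str(result), "tool_name": call.function.name})

The loop repeats until the model answers without requesting another tool call.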

Vision is another use: describe an image with a multimodal model, then search for matches.

Businesses automate reports. Agents compile data from web sources.

Benefits of Adopting Ollama's New Features

Ollama enhances privacy. Data stays local, with API calls optional.

Accuracy improves via real-time augmentation. Models handle evolving topics.

Scalability follows. MCP Server distributes tasks.

Cost-efficiency stands out. Free tiers minimize expenses.

Developer productivity rises. Integrations like Apidog speed workflows.

The community is active, and forums discuss optimizations.

The ecosystem is growing. Tools like OpenWebUI interface with Ollama.

Potential Challenges and Solutions

Challenges include rate limits. Solution: Upgrade subscriptions.

Hardware constraints limit models. Use cloud variants.

Integration complexity arises. Follow docs and examples.

Security: Rotate API keys regularly.

Debugging agents requires logging. Implement verbose modes.
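One possible shape for such logging is a thin wrapper around each tool call, sketched below with Python's standard logging module. logged_tool_call and its verbose flag are hypothetical, and the 200-character preview length is an arbitrary choice.

```python
import logging

logger = logging.getLogger("agent")


def logged_tool_call(name, func, arguments, verbose=False):
    """Run a tool and log its name, arguments, and a preview of the result."""
    logger.info("tool call: %s args=%r", name, arguments)
    try:
        result = func(**arguments)
    except Exception:
        logger.exception("tool %s failed", name)  # includes the traceback
        raise
    text = str(result)
    # Verbose mode logs the full result; otherwise only the first 200 characters.
    logger.debug("tool %s result: %s", name, text if verbose else text[:200])
    return result
```

Calling logging.basicConfig(level=logging.DEBUG) before running the agent makes these messages visible on the console.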

Furthermore, test across platforms for consistency.

Wrapping Up Ollama's Advancements

Ollama's web search API and MCP Server mark significant progress. Developers harness these for powerful applications. With free tiers and cross-platform support, adoption accelerates. Explore further, integrate with Apidog, and build the next generation of AI tools.