OpenAI’s O3 Model Gets 80% Cheaper: Key Insights for API Developers

OpenAI has cut the price of its O3 model by 80%. The change matters to API developers, backend engineers, and product teams that rely on advanced reasoning models in their applications. This article breaks down the new O3 pricing, how it compares with competitors, and what it means for your projects.

💡 Looking for an API testing platform that generates beautiful API documentation and helps your team collaborate for maximum productivity? Apidog brings it all together, replacing Postman at a more affordable price.

O3 Pricing Update: What’s Changed?

OpenAI’s O3 model is known for its advanced reasoning and flexible API integration. Previously, its cost limited adoption: $10 per million input tokens and $40 per million output tokens. OpenAI has now dropped prices to $2 per million input tokens and $8 per million output tokens, an 80% reduction on both rates that opens O3 to a wider range of teams and projects.

Key Details of the New O3 Pricing

- Input Tokens: Now $2 per million, down from $10.

- Output Tokens: Now $8 per million, down from $40.

- Cached Input Discount: Cached (previously processed) input is billed at $0.50 per million tokens, a 75% discount on the standard input rate.

This new pricing makes high-volume tasks such as NLP, analytics, or automated support much more accessible to API-centric teams. For teams running heavily used endpoints, the cached input discount can cut operational costs further.
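To make the arithmetic concrete, here is a minimal sketch that compares a monthly bill at the old and new rates. The per-million prices come from this article; the workload (one million requests a month, 1,500 input and 500 output tokens each) is a hypothetical figure chosen only for illustration.

```python
from dataclasses import dataclass

TOKENS_PER_MILLION = 1_000_000


@dataclass(frozen=True)
class Pricing:
    """Prices in USD per million tokens."""
    input_per_m: float
    output_per_m: float


# Rates quoted in this article.
OLD_O3 = Pricing(input_per_m=10.0, output_per_m=40.0)
NEW_O3 = Pricing(input_per_m=2.0, output_per_m=8.0)


def request_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request at the given rates."""
    total = input_tokens * pricing.input_per_m + output_tokens * pricing.output_per_m
    return total / TOKENS_PER_MILLION


# Hypothetical workload, for illustration only.
monthly_requests = 1_000_000
old_monthly = monthly_requests * request_cost(OLD_O3, 1_500, 500)
new_monthly = monthly_requests * request_cost(NEW_O3, 1_500, 500)
reduction = 1 - new_monthly / old_monthly

print(f"Old monthly cost: ${old_monthly:,.2f}")
print(f"New monthly cost: ${new_monthly:,.2f}")
print(f"Reduction: {reduction:.0%}")
```

Because input and output rates both fell by the same factor, the reduction is 80% regardless of the input/output mix.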

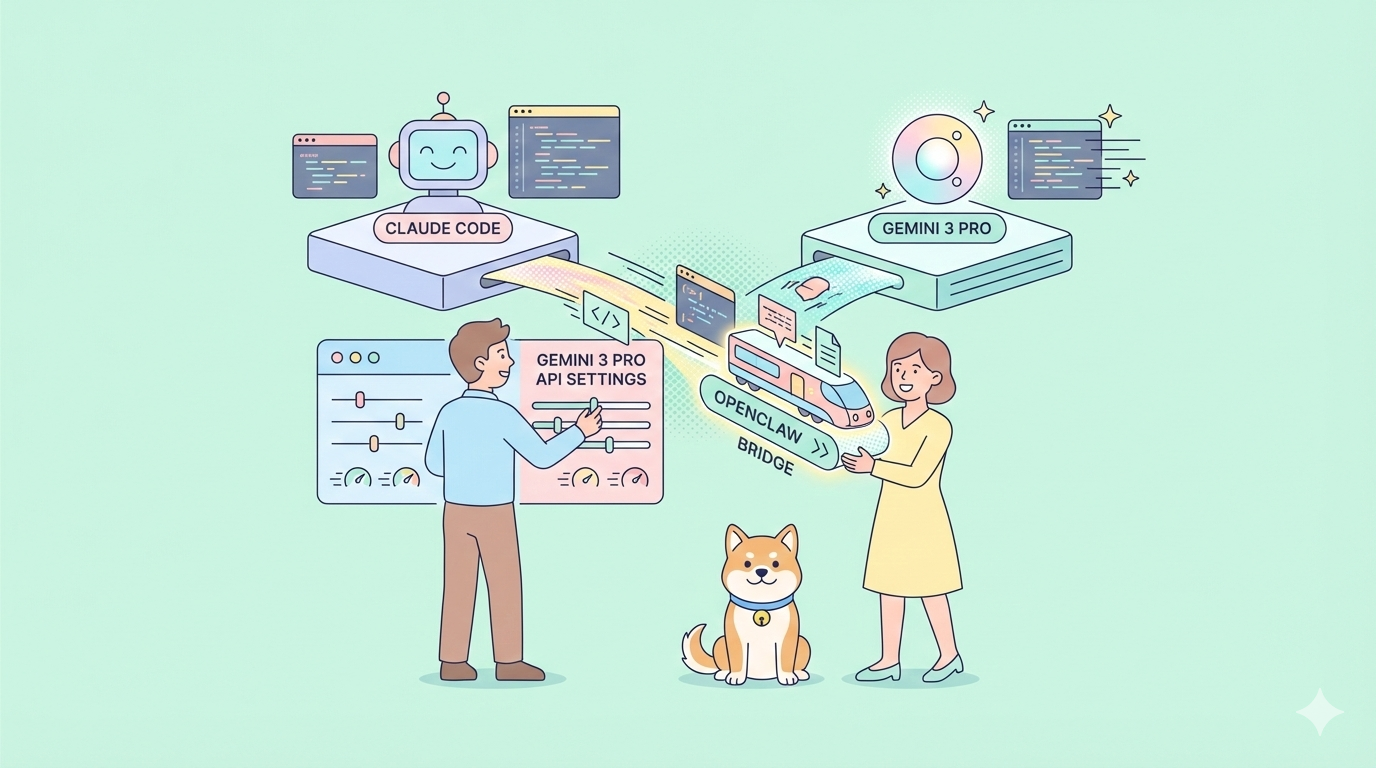

O3 vs. Competitors: How Does It Stack Up?

OpenAI’s new rates put O3 ahead of many rivals in both affordability and value. Let’s see how O3 compares to major players:

Competitive Comparison: Gemini 2.5 Pro & Claude 4

- Gemini 2.5 Pro: O3 is now in the same per-token price range and often beats it in reasoning tasks.

- Claude 4 Sonnet: O3 outperforms it in intelligence metrics, and Claude 4 Opus remains roughly 8x pricier per token.

- GPT-4o: O3 now undercuts it, making advanced reasoning more budget-friendly.

For example, running the Artificial Analysis Intelligence Index now costs $390 with O3, compared to $971 for Gemini 2.5 Pro. That is about 60% less, a meaningful saving for research and production workloads.

Technical Impact of the O3 Price Cut

This price drop does more than save money; it changes what is practical to build. With lower rates, you can:

- Process More Data: Run larger batch jobs, analytics, or document summarization without worrying about cost overruns.

- Scale Automated Support: Power customer chatbots or ticket triage systems with advanced reasoning at scale.

- Optimize with Cached Inputs: Use the cached input discount to lower repeat query costs.
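As a rough illustration of the cached-input point, the sketch below estimates input cost for different cache hit rates. It assumes cached tokens are billed at $0.50 per million against the $2 standard rate; treat that cached rate, the 100M-token volume, and the hit rates as assumptions and check the current pricing page before relying on them.

```python
STANDARD_INPUT_PER_M = 2.00  # USD per million input tokens, from this article
CACHED_INPUT_PER_M = 0.50    # ASSUMPTION: rate billed for cached input tokens


def input_cost(total_input_tokens: int, cached_fraction: float) -> float:
    """Estimate USD input cost when a fraction of input tokens hits the cache."""
    if not 0.0 <= cached_fraction <= 1.0:
        raise ValueError("cached_fraction must be between 0 and 1")
    cached = total_input_tokens * cached_fraction
    uncached = total_input_tokens - cached
    return (uncached * STANDARD_INPUT_PER_M + cached * CACHED_INPUT_PER_M) / 1_000_000


# Hypothetical monthly volume of 100 million input tokens.
monthly_input_tokens = 100_000_000
for fraction in (0.0, 0.5, 0.9):
    cost = input_cost(monthly_input_tokens, fraction)
    print(f"{fraction:.0%} cached: ${cost:,.2f}")
```

Caching generally applies to a repeated prompt prefix, so keeping static instructions and shared context at the start of the prompt, with per-request data at the end, tends to raise the hit rate.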

OpenAI confirms O3’s architecture and reasoning power remain unchanged despite the lower price. You still get the same high-quality results trusted for mission-critical APIs.

What This Means for API Teams and Startups

For smaller teams, the old O3 pricing was often a dealbreaker. With costs cut, startups and independent developers can experiment with cutting-edge reasoning in real-world apps in areas such as education, healthcare, finance, or automation.

Lowering the barrier for advanced AI means more innovation. API teams can now prototype, iterate, and deploy with fewer budget constraints.

O3 Technical Performance: Does Cheaper Mean Worse?

A common concern after a price cut: is the model still as good? Independent benchmarks, including Artificial Analysis, show O3 remains strong in reasoning and efficiency:

- Output Token Efficiency: O3 generates concise, relevant results. It is more token-efficient than Gemini 2.5 Pro and competitive with Claude 4 Opus.

- Flex Mode: Developers can choose a flex mode ($5 input, $20 output per million tokens) for greater control over latency and cost, ideal for synchronous APIs.

Introducing O3-Pro: Premium Features for Power Users

Alongside the pricing update, OpenAI launched O3-Pro for users needing deeper analysis. Priced at $20 per million input tokens and $80 per million output tokens, O3-Pro is available via ChatGPT Pro and the API. It’s slower but offers enhanced reasoning, suitable for complex data work.

O3 and O3-Pro now serve both mainstream and advanced needs, letting you pick the right balance between speed, depth, and cost for your API workflows.
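The trade-off between the two tiers can be sketched as a cost comparison plus a simple routing rule. The prices are the ones quoted in this article; the `pick_model` rule and the job size are hypothetical and only illustrate one way a team might reserve O3-Pro for deep, non-interactive work.

```python
# USD per million tokens as (input, output), as quoted in this article.
PRICES = {
    "o3": (2.0, 8.0),
    "o3-pro": (20.0, 80.0),
}


def job_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of a job on the given model."""
    input_rate, output_rate = PRICES[model]
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


def pick_model(needs_deep_analysis: bool, latency_sensitive: bool) -> str:
    """Hypothetical routing rule: use o3-pro only for deep, non-interactive work."""
    if needs_deep_analysis and not latency_sensitive:
        return "o3-pro"
    return "o3"


# Hypothetical job: 200,000 input tokens and 50,000 output tokens.
o3_cost = job_cost("o3", 200_000, 50_000)
pro_cost = job_cost("o3-pro", 200_000, 50_000)
print(f"o3:     ${o3_cost:.2f}")
print(f"o3-pro: ${pro_cost:.2f}")
print(pick_model(needs_deep_analysis=True, latency_sensitive=False))
```

At these rates the same job costs ten times more on O3-Pro, so routing only the requests that need the extra depth keeps the overall bill close to the O3 baseline.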

Strategic Implications: The Start of an AI Price War?

OpenAI’s aggressive pricing could spark a wider price war. Google and Anthropic may be forced to follow suit to retain API customers, especially as O3’s accessibility draws in developers at scale.

More Room for Experimentation

Lower costs mean more room to test new ideas. Sam Altman has said he is excited to see what users will build with cheaper O3 access, which opens up experimentation in fields like code generation, diagnostics, and research.

Real-World Use Cases: Where O3’s New Pricing Makes a Difference

With the cost barrier removed, here’s where O3 can deliver major value:

- Education APIs: Adaptive tutoring, content generation, and student support at scale.

- Healthcare: Automated data review and decision support for clinics or research.

- Business Automation: Intelligent customer service bots and workflow automation.

- Coding Assistants: Tools like Cursor use O3 for smarter code completion and suggestions.

For API-focused teams, integrating O3 is now a practical way to deliver smarter endpoints without blowing the budget.

Considerations and Best Practices

- Token Usage Still Matters: O3’s advanced reasoning may use more tokens per query, and reasoning tokens are billed as output tokens, so prompt engineering remains important for cost control.

- O3-Pro Latency: The premium model is slower, so choose it for depth, not for real-time needs.

- Monitor Your Usage: Track token consumption with the usage data returned in each API response and the usage dashboard in your OpenAI account, then optimize input/output patterns.
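One way to act on the monitoring advice is to aggregate the token counts that come back with each response. The sketch below is a hypothetical in-process tracker, not an official tool. It assumes usage arrives as a dict with `prompt_tokens` and `completion_tokens` keys, which is the shape of the Chat Completions `usage` object, and it prices tokens at the rates quoted in this article.

```python
from collections import defaultdict

# USD per million tokens as (input, output), as quoted in this article.
PRICES = {"o3": (2.0, 8.0), "o3-pro": (20.0, 80.0)}


class UsageTracker:
    """Hypothetical helper that accumulates token usage per model."""

    def __init__(self) -> None:
        self.totals = defaultdict(lambda: {"prompt_tokens": 0, "completion_tokens": 0})

    def record(self, model: str, usage: dict) -> None:
        """Add one response's usage counts to the running totals."""
        totals = self.totals[model]
        totals["prompt_tokens"] += usage["prompt_tokens"]
        totals["completion_tokens"] += usage["completion_tokens"]

    def cost(self, model: str) -> float:
        """Return the estimated USD spend for a model so far."""
        input_rate, output_rate = PRICES[model]
        totals = self.totals[model]
        spend = totals["prompt_tokens"] * input_rate + totals["completion_tokens"] * output_rate
        return spend / 1_000_000


tracker = UsageTracker()
# Sample usage dicts; in practice these would come from real API responses.
tracker.record("o3", {"prompt_tokens": 1_200, "completion_tokens": 800})
tracker.record("o3", {"prompt_tokens": 300, "completion_tokens": 200})
print(dict(tracker.totals["o3"]))
print(f"Estimated o3 spend: ${tracker.cost('o3'):.4f}")
```

Logging these totals per endpoint or per customer makes it easier to spot prompts whose output, including reasoning tokens, dominates the bill.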

Conclusion: A New Era for AI-Powered APIs

OpenAI’s 80% price cut on O3 fundamentally changes what’s possible for API development. With greater affordability, more teams can leverage world-class reasoning for their applications—whether building internal tools, customer-facing platforms, or research systems.

Now is the time for API developers to experiment with O3 and O3-Pro. Tools like Apidog help you integrate, test, and document your AI-driven APIs efficiently—enabling rapid deployment and seamless teamwork.

Stay tuned for more updates as the market adapts to this pricing shift. Affordable, powerful AI models like O3 are shaping the future of API innovation.
