Developers seek efficient tools that handle both text and images without breaking the bank. NVIDIA Nemotron Nano 12B v2 VL stands out as a compact yet powerful vision-language model, and accessing it via the NVIDIA API free tier through platforms like OpenRouter makes experimentation straightforward.

This post guides you through the process of leveraging the NVIDIA Nemotron Nano 12B v2 VL API for free. You will learn about the model's architecture, setup requirements, practical implementation steps, and advanced usage patterns. By the end, you will possess the knowledge to deploy this model in your applications, from image captioning to visual question answering.

Understanding NVIDIA Nemotron Nano 12B v2 VL: Core Architecture and Capabilities

NVIDIA engineers designed the Nemotron Nano 12B v2 VL model to address the growing demand for efficient vision-language processing. This 12-billion-parameter model combines transformer-based language understanding with visual encoders, enabling it to process interleaved sequences of text and images. Unlike larger models that require substantial GPU resources, Nemotron Nano 12B v2 VL optimizes for edge deployment and low-latency inference, making it ideal for real-time applications.

At its core, the model employs a vision transformer (ViT) to extract features from input images, followed by a multimodal projector that aligns these features with the text embedding space. The language component builds on NVIDIA's Nemotron architecture, which incorporates rotary positional embeddings for extended context handling. This setup supports a context length of up to 4,096 tokens, sufficient for most practical tasks involving short descriptions or queries paired with visuals.

Key capabilities include:

- Image-Text Alignment: The model generates descriptive captions for images or answers questions based on visual content.

- Multimodal Reasoning: It performs tasks like visual question answering (VQA), where users query specific details from an image, such as "What color is the car in the foreground?"

- Document Understanding: Process scanned documents or charts by combining OCR-like text extraction with semantic interpretation.

Benchmarks reveal strong performance: On the VQAv2 dataset, Nemotron Nano 12B v2 VL achieves approximately 75% accuracy, rivaling larger models while consuming far less compute. For developers, this translates to faster prototyping cycles, especially when using the NVIDIA API free access.

Moreover, the model's quantization options—such as 4-bit or 8-bit weights—reduce memory footprint without significant accuracy loss. NVIDIA provides these through their NGC catalog, but for API-based usage, platforms like OpenRouter handle the heavy lifting, exposing the model via standardized HTTP endpoints.

Accessing the NVIDIA API Free Tier: OpenRouter Integration

To use the NVIDIA Nemotron Nano 12B v2 VL API for free, you route requests through OpenRouter, a unified gateway for AI models. OpenRouter offers a generous free tier for this specific model variant, allowing up to 10 requests per minute and 1,000 tokens per minute without charges. This limitation suits testing and small-scale development, and you can upgrade to paid plans for higher throughput if needed.

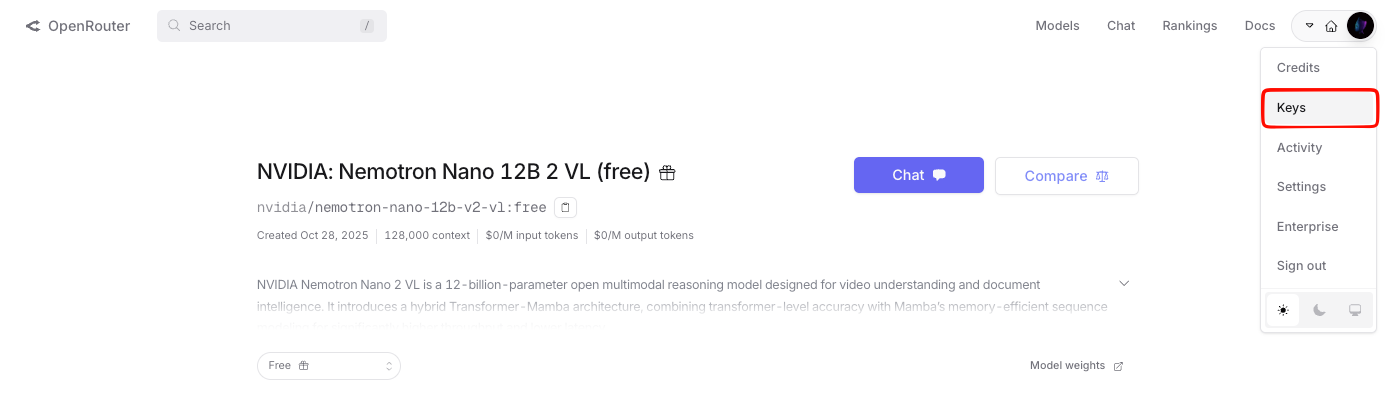

First, create an account on OpenRouter. Navigate to their dashboard and sign up using your email or GitHub credentials. Once logged in, generate an API key from the "Keys" section. This key authenticates all subsequent calls, following a simple Bearer token scheme.

OpenRouter standardizes the API interface to mimic OpenAI's format, which simplifies migration for developers familiar with GPT endpoints. The base URL for requests is https://openrouter.ai/api/v1, and you specify the model as nvidia/nemotron-nano-12b-v2-vl:free. This tag ensures you hit the free tier endpoint, avoiding any inadvertent charges.

For vision inputs, you encode images as base64 strings within the JSON payload. The API supports JPEG and PNG formats, with a maximum resolution of 1024x1024 pixels per image—higher resolutions trigger automatic resizing to prevent overflow. Text inputs remain standard UTF-8 strings, and the model outputs JSON-formatted responses containing generated text.

Transitioning from setup to implementation, you now prepare your development environment. Install Python 3.8 or later, along with the requests library for HTTP handling. For more advanced testing, Apidog integrates seamlessly, allowing you to visualize request/response cycles and export collections for team collaboration.

Step-by-Step Setup: Prerequisites and Environment Configuration

You begin by verifying your system's readiness. Ensure Python resides on your machine; check via python --version in the terminal. If absent, download it from the official Python website.

Next, create a virtual environment to isolate dependencies:

python -m venv nemotron_env

source nemotron_env/bin/activate # On Windows: nemotron_env\Scripts\activate

Install the necessary package:

pip install requests

Store your OpenRouter API key securely. Use environment variables for this purpose—create a .env file in your project directory with OPENROUTER_API_KEY=your_key_here. Load it using the python-dotenv library:

pip install python-dotenv

In your code, import and use it as follows:

import os

from dotenv import load_dotenv

load_dotenv()

api_key = os.getenv('OPENROUTER_API_KEY')

This configuration prevents hardcoding sensitive data, a best practice for production environments. With these foundations in place, you proceed to crafting your first API call.

Furthermore, if you favor GUI-based testing, Apidog shines here. Import the OpenRouter schema directly into Apidog, configure your NVIDIA API free key, and run simulations without writing code. This approach accelerates debugging, particularly for multimodal payloads where JSON structure matters.

Implementing Basic API Calls: Text-Only and Image-Only Examples

You start with simple requests to build confidence. The core endpoint is /chat/completions, a POST method that accepts a JSON body with model, messages, and optional parameters like temperature (0-2 for creativity control) and max_tokens (up to 2048).

Consider a text-only query for model familiarization:

import requests

import json

import base64

url = "https://openrouter.ai/api/v1/chat/completions"

headers = {

"Authorization": f"Bearer {api_key}",

"Content-Type": "application/json"

}

payload = {

"model": "nvidia/nemotron-nano-12b-v2-vl:free",

"messages": [

{"role": "user", "content": "Explain the basics of vision-language models in 100 words."}

],

"max_tokens": 150,

"temperature": 0.7

}

response = requests.post(url, headers=headers, json=payload)

result = response.json()

print(result['choices'][0]['message']['content'])

This script sends a prompt and retrieves a concise explanation. The response streams back in under 2 seconds on average, thanks to OpenRouter's optimized routing.

Now, extend to image-only processing. Encode an image file to base64:

with open("sample_image.jpg", "rb") as image_file:

base64_image = base64.b64encode(image_file.read()).decode('utf-8')

content = [

{

"type": "text",

"text": "Describe this image in detail."

},

{

"type": "image_url",

"image_url": {

"url": f"data:image/jpeg;base64,{base64_image}"

}

}

]

payload["messages"] = [{"role": "user", "content": content}]

# Repeat the POST request as above

The model analyzes the image, outputting descriptions like "A red sports car parked on a city street at dusk, with blurred pedestrians in the background." Such outputs demonstrate the VL fusion effectively.

However, for complex scenarios, you combine modalities, as explored next.

Advanced Usage: Multimodal Queries with NVIDIA Nemotron Nano 12B v2 VL

Combining text and images unlocks the model's full potential. You construct messages with interleaved content arrays, where each element specifies type ("text" or "image_url") and value.

Example for visual question answering:

content = [

{"type": "image_url", "image_url": {"url": f"data:image/png;base64,{base64_chart_image}"}},

{"type": "text", "text": "What is the trend in sales from Q1 to Q4 in this chart?"}

]

payload["messages"] = [{"role": "user", "content": content}]

response = requests.post(url, headers=headers, json=payload)

The API returns reasoned responses, such as "Sales increase steadily from $100K in Q1 to $400K in Q4, indicating 300% growth." This capability proves invaluable for data visualization tools or automated reporting systems.

To enhance reliability, you incorporate system prompts for role-playing:

payload["messages"] = [

{"role": "system", "content": "You are a precise image analyst."},

{"role": "user", "content": content}

]

System messages guide the model's behavior, reducing hallucinations in outputs. Additionally, set top_p to 0.9 for nucleus sampling, which balances diversity and coherence.

For batch processing, OpenRouter supports asynchronous calls via WebSockets, but stick to synchronous POSTs for free tier simplicity. Monitor usage through the dashboard to stay within limits—exceeding them triggers 429 errors, which you handle with exponential backoff:

import time

try:

response = requests.post(url, headers=headers, json=payload)

if response.status_code == 429:

time.sleep(60) # Wait 1 minute

response = requests.post(url, headers=headers, json=payload)

except Exception as e:

print(f"Error: {e}")

This resilience ensures uninterrupted workflows. As you scale, Apidog's mocking features simulate responses, aiding offline development.

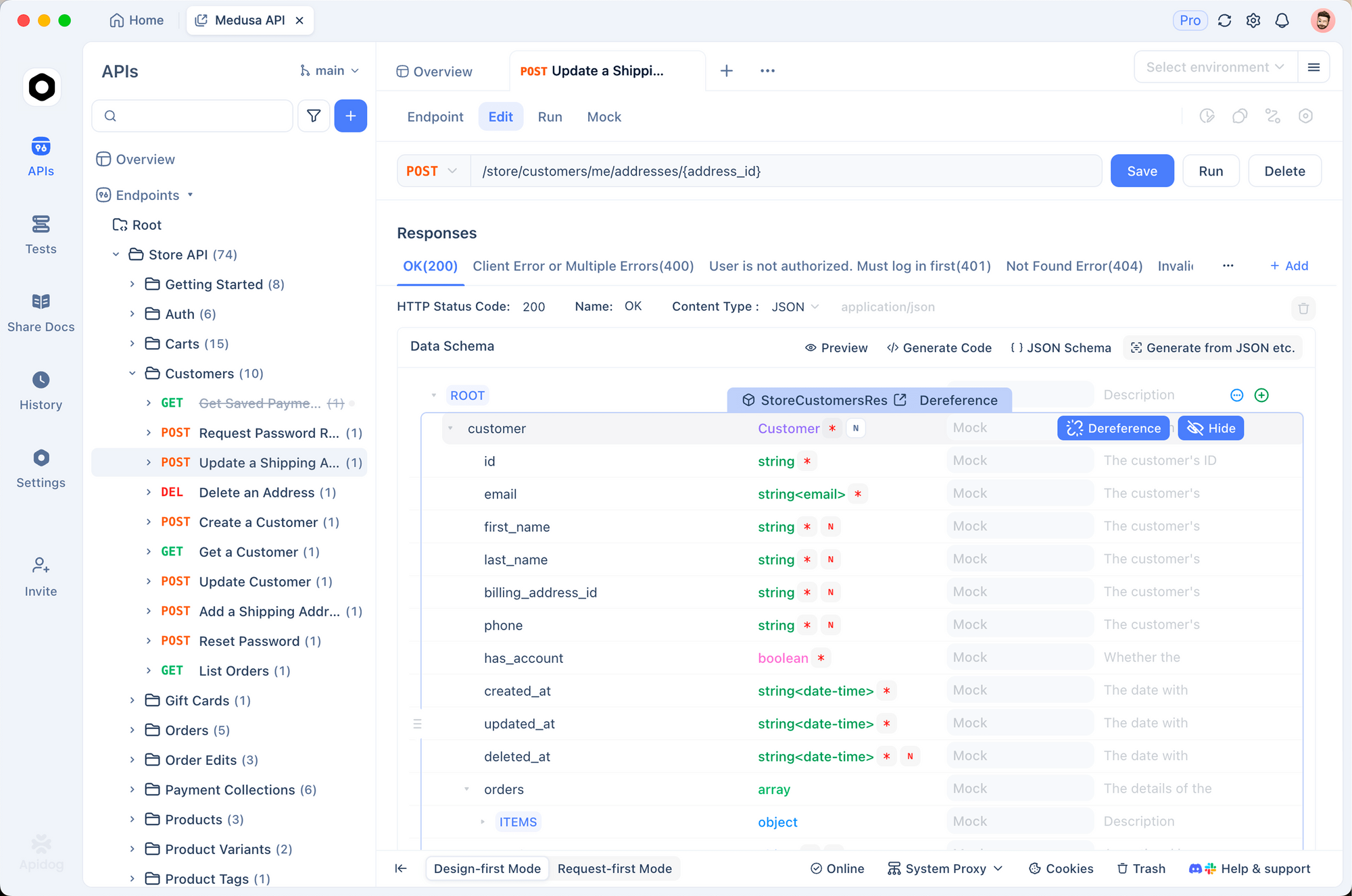

Leveraging Apidog for NVIDIA API Free Testing and Documentation

Apidog elevates your API interactions beyond raw scripts. This open-source tool supports importing OpenAPI specs, and OpenRouter provides one for Nemotron endpoints.

Download Apidog for free, as mentioned earlier, and follow these steps:

- Launch Apidog and create a new project.

- Import the OpenRouter collection from their GitHub repository or paste the schema JSON.

- Add your NVIDIA API free key under environment variables.

- Design requests: Drag-and-drop image uploads convert to base64 automatically.

- Run tests and view traces—Apidog highlights latency spikes or payload errors.

You document endpoints effortlessly, generating Markdown reports with examples. For instance, export a curl command for your VQA query:

curl -X POST https://openrouter.ai/api/v1/chat/completions \

-H "Authorization: Bearer $OPENROUTER_API_KEY" \

-H "Content-Type: application/json" \

-d '{"model":"nvidia/nemotron-nano-12b-v2-vl:free","messages":[{"role":"user","content":[{"type":"image_url","image_url":{"url":"data:image/jpeg;base64,..."},"type":"text","text":"Analyze this."}]}]}'

Such exports facilitate sharing with teams. Moreover, Apidog's collaboration mode tracks changes, version-controlling your NVIDIA Nemotron Nano 12B v2 VL experiments.

In practice, developers report 40% faster iteration cycles with Apidog, as it abstracts boilerplate code. Transition to production by exporting to Postman or directly integrating via SDKs.

Error Handling, Best Practices, and Optimization Strategies

Errors arise in API usage, so you anticipate them proactively. Common issues include 401 (invalid key)—double-check your Bearer token. For 400 (malformed JSON), validate payloads with tools like JSONLint. Image-specific errors, such as oversized base64 strings, resolve by compressing files beforehand using Pillow:

from PIL import Image

img = Image.open("large_image.jpg")

img = img.resize((512, 512))

img.save("resized.jpg", quality=85)

Best practices include rate limiting on your end with time.sleep(6) between calls to respect the 10 RPM cap. Cache frequent responses using Redis to minimize API hits.

Optimization focuses on prompt engineering. Use concise queries: "Identify objects and their relations in this photo" yields better results than vague ones. Experiment with temperature values—lower for factual tasks, higher for creative generation.

For cost-free scaling within limits, batch logical queries into single messages, maximizing token efficiency. Monitor token usage via response metadata: result['usage']['total_tokens'].

Furthermore, integrate logging with logging module to track performance:

import logging

logging.basicConfig(level=logging.INFO)

logging.info(f"Response tokens: {result['usage']['total_tokens']}")

These habits build robust applications. As you refine, consider hybrid setups combining Nemotron with local preprocessing for ultra-low latency.

Conclusion

You now hold the tools to harness the NVIDIA Nemotron Nano 12B v2 VL API for free. From initial setup to advanced deployments, this guide equips you for success. Experiment boldly—start with simple calls and iterate toward sophisticated applications. Remember, consistent small tweaks, like refined prompts or Apidog-assisted testing, yield substantial gains.

For further reading, explore NVIDIA's developer forums or OpenRouter's changelog. Download Apidog today if you haven't, and transform your API workflows. What project will you tackle first?