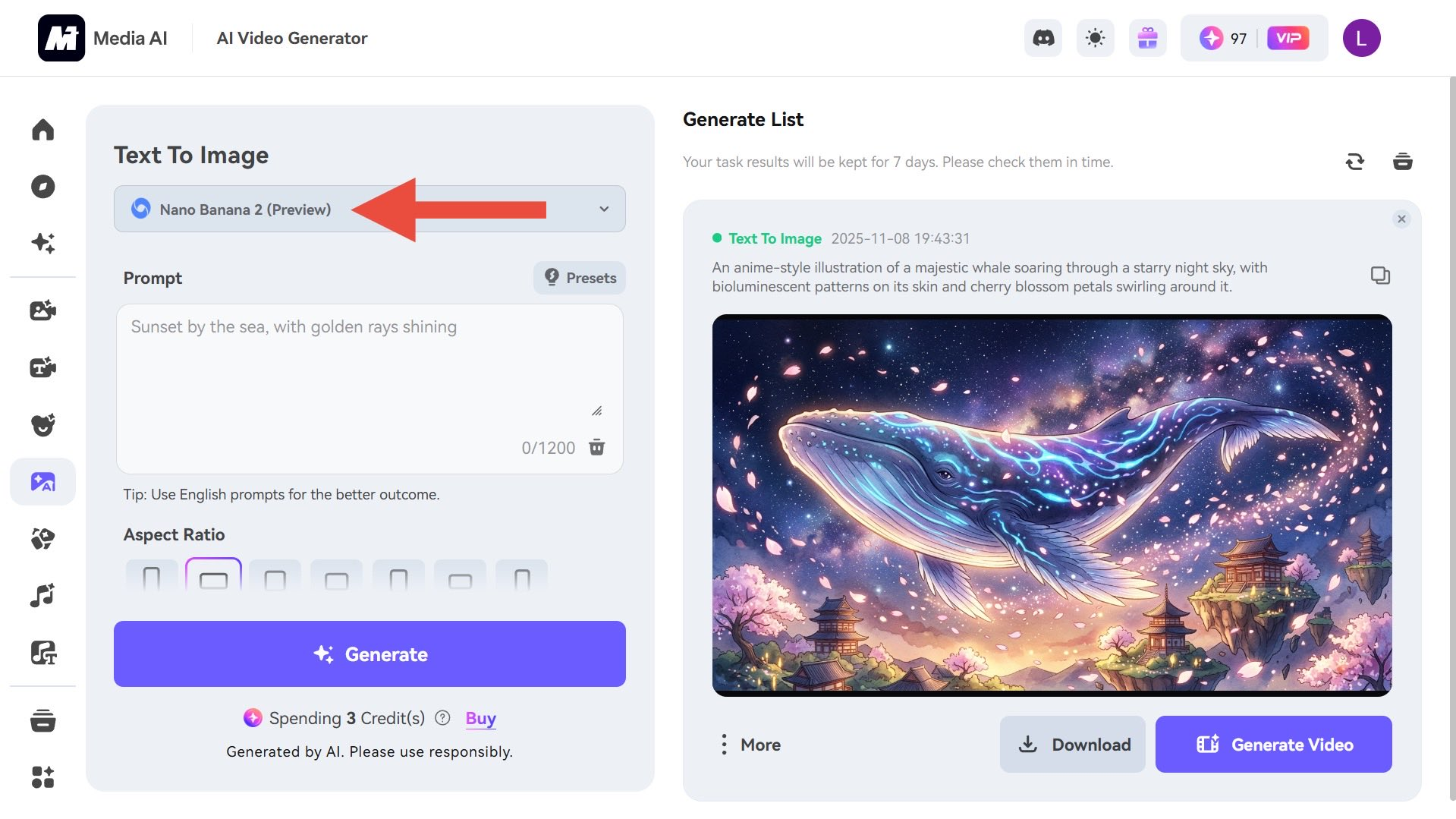

Google pushes boundaries in artificial intelligence, and Nano Banana 2 emerges as a key player in that evolution. This rumored successor to the original Nano Banana model promises advancements in image generation that could transform how users create visuals on mobile devices. Engineers at Google are reportedly refining the technology to handle complex prompts with precision and to integrate it into ecosystems like Gemini. As developers prepare to build applications around such models, API testing tools become essential for validating integrations efficiently.

Nano Banana 2 builds on foundations that captivated millions. The first Nano Banana generated figurine-style portraits and cinematic recreations, drawing over 10 million new users to the Gemini app. Now, rumors suggest Google enhances this with higher fidelity and smarter processing. Analysts predict these upgrades address common pain points in AI image tools, such as inconsistent subjects or blurry text. Moreover, the model's potential on-device deployment means faster, privacy-focused generation on devices like the Pixel 9 Pro.

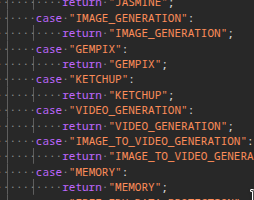

Sources from developer notes and leaked previews indicate that Nano Banana 2, codenamed GEMPIX2 (now KETCHUP), leverages Gemini 3 Pro as its backbone.

This integration allows for multimodal reasoning, where the system processes text, images, and contextual data simultaneously. Consequently, users might generate images that not only look realistic but also convey narrative depth, such as emotional tones or cultural nuances.

What Is Nano Banana 2? Understanding the Basics

Google develops Nano Banana 2 as an advanced AI image generator, evolving from its predecessor. The original Nano Banana, often linked to Gemini 2.5 Flash, specialized in creating stylized images like action figures set in real-world scenes. It processed prompts in 20-30 seconds, producing 1MP outputs with upscaling. In contrast, Nano Banana 2 aims to elevate this to professional levels.

Essentially, Nano Banana 2 functions as a hybrid system. It combines large language model (LLM) reasoning from Gemini 3 Pro with diffusion-based rendering. The LLM handles high-level planning, interpreting prompts for intent, cause, and effect. Then, the diffusion component renders the visuals, guided by shared latent representations. This architecture marks a shift from traditional models, which map text to visuals without deeper comprehension.
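The two-stage flow described above can be sketched as a toy pipeline. Nothing here reflects Google's actual implementation: `Plan`, `plan_prompt`, and `render` are hypothetical names, the "planner" only derives a deterministic pseudo-embedding from the prompt text, and the "renderer" merely interpolates from noise toward that vector rather than running a real diffusion sampler. The point is the control flow: plan first, then render conditioned on the plan.

```python
import random
from dataclasses import dataclass


@dataclass
class Plan:
    """Hypothetical output of the planning stage: a conditioning vector."""
    prompt: str
    latent: list[float]


def plan_prompt(prompt: str, dim: int = 8) -> Plan:
    # Stand-in for LLM planning: derive a deterministic pseudo-embedding
    # from the prompt text. A real planner would encode intent and layout.
    rng = random.Random(prompt)
    return Plan(prompt, [rng.uniform(-1.0, 1.0) for _ in range(dim)])


def render(plan: Plan, steps: int = 50, seed: int = 0) -> list[float]:
    # Stand-in for diffusion rendering: start from Gaussian noise and
    # move a fraction of the way toward the conditioning latent each step.
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in plan.latent]
    for _ in range(steps):
        x = [xi + 0.2 * (ti - xi) for xi, ti in zip(x, plan.latent)]
    return x


plan = plan_prompt("a family picnic in Tokyo")
output = render(plan)
```

Because the renderer only ever sees the plan's latent, both stages share one representation, which is the property the rumored architecture is said to rely on.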

Within Google's ecosystem, Nano Banana 2 is expected to integrate with existing services. It could power features in Google Photos for automatic edits, Workspace for slide templates, or Search for visual results. As a result, everyday users would gain access to studio-quality tools without specialized software.

Rumored Features of Nano Banana 2: A Technical Breakdown

Rumors highlight several features that set Nano Banana 2 apart. First, it offers sharper fidelity and improved text integration. The model renders legible typography and clean edges, supporting native 2K resolutions with 4K upscaling. This upgrade stems from enhanced prompt understanding, where the system parses complex descriptions accurately.

Additionally, global context awareness stands out. Nano Banana 2 incorporates cultural and geographic data, generating authentic details. For example, a prompt like "a family picnic in Tokyo during cherry blossom season" yields visuals with accurate flora, attire, and atmosphere. This feature relies on expanded training datasets, enabling the model to avoid generic outputs.

Moreover, subject consistency improves dramatically. The original model occasionally warped faces or altered outfits across iterations. Nano Banana 2 addresses this through scene memory, preserving lighting, geometry, and elements in multi-image sequences. It extends to narrative coherence, treating generations like film frames.

Creative editing modes add versatility. Users select "Edit with Gemini" to refine images by highlighting areas for changes, such as swapping backgrounds or adjusting lighting. This operates via image-to-image pipelines, fusing user inputs with AI suggestions.

Faster iterations represent another key enhancement. Nano Banana 2 completes complex prompts in under 10 seconds, rivaling tools like Midjourney. This speed comes from optimized sampling schedulers and hybrid processing, where on-device hardware accelerates routine tasks.

Self-correcting generation introduces intelligence. The model plans an image, checks the result for errors such as anatomical inconsistencies or prompt mismatches, and iterates internally. This mimics human workflows, reducing the need for manual refinements.
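A plan, check, and retry loop of this kind might look like the following minimal sketch. The function names and the toy generator and critic are assumptions made for illustration; how the real model detects and repairs errors is not public.

```python
from typing import Callable


def generate_with_self_correction(
    prompt: str,
    generate: Callable[[str], str],
    critique: Callable[[str, str], list[str]],
    max_rounds: int = 3,
) -> tuple[str, int]:
    """Generate, check for problems, and retry with feedback appended."""
    image = generate(prompt)
    for round_index in range(max_rounds):
        issues = critique(prompt, image)
        if not issues:
            return image, round_index
        # Fold the detected issues back into the next attempt.
        image = generate(prompt + " | fix: " + "; ".join(issues))
    return image, max_rounds


def toy_generate(prompt: str) -> str:
    # Toy generator: only draws hands correctly when told to.
    return "image(hands=ok)" if "hands" in prompt else "image(hands=warped)"


def toy_critique(prompt: str, image: str) -> list[str]:
    # Toy critic: flags one kind of anatomical error.
    return ["hands look warped"] if "warped" in image else []


result, rounds = generate_with_self_correction("a pianist", toy_generate, toy_critique)
# result == "image(hands=ok)", rounds == 1
```

Capping the loop with `max_rounds` keeps latency bounded when the critic keeps finding issues.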

Multimodal architecture broadens applications. Nano Banana 2 supports text-to-image, image-to-image, and multi-image fusion. It even hints at video diffusion through temporal coherence mapping, potentially generating short clips.

In practical terms, these features enable diverse use cases. Marketers generate banner concepts in consistent styles, game developers prototype environments, and casual users create personalized wallpapers. However, challenges remain, such as ensuring ethical outputs and managing computational demands.

Technical Specifications: Under the Hood of Nano Banana 2

Engineers design Nano Banana 2 with a sophisticated technical foundation. At its core, Gemini 3 Pro Image provides the multimodal LLM, handling reasoning and structure. This LLM processes inputs to create "intent vectors," embeddings that capture emotion, narrative, and context.

The diffusion head then renders based on these vectors. Unlike standalone diffusion models, this setup uses shared latents for seamless integration. Rumors suggest 16-bit depth for richer colors and gradients, enhancing photorealism.

Resolution capabilities impress: native 2K with AI-driven 4K upscaling. This involves super-resolution techniques, possibly convolutional neural networks fine-tuned on high-res datasets.

For on-device deployment, quantization reduces model size. Reduced-precision formats such as INT8 or FP16 shrink the model while preserving most of its accuracy, letting it fit mobile hardware such as the TPU in the Tensor chips of Pixel phones.
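To make the idea concrete, here is a minimal sketch of symmetric per-tensor INT8 quantization in plain Python. It is a generic textbook scheme, not the method Google uses; the weight values are made up, and the input list is assumed to be non-empty.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization to the range [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Map the stored integers back to approximate float weights."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 0.3333, 1.0]  # illustrative values only
q, scale = quantize_int8(weights)          # q == [50, -127, 0, 33, 100]
restored = dequantize_int8(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight is stored in one byte instead of four, and the round-trip error per weight is bounded by half the scale (here about 0.005).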

Power consumption also factors in. Nano Banana 2 reportedly optimizes for battery life, offloading heavy computations to the cloud when needed. Developers can test such hybrid setups using Apidog, which simulates API endpoints to exercise latency and error handling.
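A hybrid device/cloud split comes down to a routing decision per request. The sketch below shows one plausible policy; the thresholds, the `Request` fields, and the function name are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Request:
    prompt: str
    megapixels: float
    battery_percent: int


# Hypothetical thresholds chosen only for illustration.
ON_DEVICE_MAX_MEGAPIXELS = 1.0
LOW_BATTERY_PERCENT = 20


def choose_backend(req: Request, network_available: bool) -> str:
    """Return "device" or "cloud" for a generation request."""
    heavy = req.megapixels > ON_DEVICE_MAX_MEGAPIXELS
    low_battery = req.battery_percent < LOW_BATTERY_PERCENT
    if network_available and (heavy or low_battery):
        return "cloud"
    # Without a network, or for light work, run locally.
    return "device"


backend = choose_backend(Request("a banner concept", 4.0, 80), network_available=True)
# backend == "cloud"
```

When testing an integration, both branches are worth exercising, including the offline case where a heavy request falls back to the device.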

Security features include built-in safeguards. The model detects and avoids harmful content, aligning with Google's AI principles. Watermarking embeds metadata for traceability.

Scalability extends to cloud versions via Vertex AI. Here, Nano Banana 2 handles batch processing for enterprise needs, supporting APIs for integration.

Comparatively, the original Nano Banana used simpler diffusion without LLM guidance, limiting reasoning. Nano Banana 2's hybrid approach bridges this gap, potentially achieving higher PSNR (Peak Signal-to-Noise Ratio) scores in benchmarks.
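For reference, PSNR is defined as 10 · log10(MAX² / MSE), where MAX is the largest possible pixel value and MSE is the mean squared error against a reference image. The sketch below computes it for two tiny made-up pixel lists. Note that PSNR requires a reference image, so it applies more naturally to upscaling or reconstruction than to open-ended generation.

```python
import math


def psnr(reference: list[int], candidate: list[int], max_value: int = 255) -> float:
    """Peak Signal-to-Noise Ratio in decibels for two equal-length pixel lists."""
    if len(reference) != len(candidate):
        raise ValueError("images must have the same number of pixels")
    mse = sum((r - c) ** 2 for r, c in zip(reference, candidate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


reference = [0, 64, 128, 255]   # illustrative 8-bit pixel values
candidate = [1, 63, 129, 254]   # each pixel is off by one, so MSE == 1
score = psnr(reference, candidate)  # about 48.13 dB
```

Higher values mean the candidate is closer to the reference.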

Release Date Rumors and Rollout Strategy

Sources predict Nano Banana 2 launches in mid-November 2025. Leaks from the Gemini website and developer previews suggest an imminent reveal, possibly within days. This timing aligns with Google's pattern of rapid iterations in AI.

Initially, a limited release targets beta users in the Gemini app. Full rollout could follow by early 2026, integrating into Android and web services.

Google likely employs a phased strategy. First, on-device for Pixel devices, then cloud access via APIs. This allows iterative feedback, refining features based on user data.

Potential announcements tie to events like Google I/O extensions or AI-focused updates. However, surprises like the original Nano Banana's sudden drop remain possible.

Post-launch, updates might introduce "Nano Banana Pro" for premium tasks, as hinted in code references.

Comparisons to Predecessors and Competitors

If the rumors hold, Nano Banana 2 surpasses the original in speed, resolution, and consistency. The first version excelled in stylized outputs but lagged in speed and resolution. Now, with under-10-second generations and upscaled 4K output, it competes directly with Midjourney and Adobe Firefly.

Midjourney offers artistic versatility but requires subscriptions. Nano Banana 2, integrated into free Gemini, provides accessibility. Firefly emphasizes ethical training; Google matches this with robust datasets.

Against DALL-E 3, Nano Banana 2's self-correction gives an edge, reducing iterations. OpenAI's model shines in creativity, but Google's on-device focus prioritizes mobility.

Broader comparisons include Stable Diffusion variants. Nano Banana 2's closed ecosystem ensures consistency, unlike open-source alternatives prone to variability.

In benchmarks, expect lower (better) FID (Fréchet Inception Distance) scores due to advanced reasoning.
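For readers unfamiliar with the metric, FID compares the statistics of Inception-network features extracted from real and generated images. With feature means $\mu_r, \mu_g$ and covariances $\Sigma_r, \Sigma_g$ for the real and generated sets, the standard definition is:

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left(\Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2}\right)
$$

A score of 0 means the two feature distributions match in mean and covariance, so lower is better.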

Implications for Developers and Industries

Developers gain powerful tools with Nano Banana 2. APIs enable embedding in apps, from photo editors to e-commerce visualizers. Apidog facilitates this by offering free downloads for API mocking and testing, ensuring reliable integrations.
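Independent of any particular tool, the idea behind API mocking can be sketched with Python's standard library alone. The `/generate` path, the JSON fields, and the `mock://` URL below are invented for illustration; no public Nano Banana 2 API has been documented, so treat this as a stand-in to develop client code against.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class MockImageHandler(BaseHTTPRequestHandler):
    """Serves a canned response for a hypothetical image-generation endpoint."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        if self.path != "/generate" or "prompt" not in payload:
            self._send(400, {"error": "expected POST /generate with a prompt"})
            return
        self._send(200, {"prompt": payload["prompt"], "image_url": "mock://image-1.png"})

    def _send(self, status, body):
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, format, *args):
        pass  # keep console output quiet


def call_mock(prompt: str) -> dict:
    """Start the mock server, send one request, and return the parsed reply."""
    server = HTTPServer(("127.0.0.1", 0), MockImageHandler)  # port 0: pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        request = urllib.request.Request(
            f"http://127.0.0.1:{server.server_port}/generate",
            data=json.dumps({"prompt": prompt}).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        # Bypass any system proxy so the request stays on localhost.
        opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
        with opener.open(request, timeout=5) as response:
            return json.loads(response.read())
    finally:
        server.shutdown()
        server.server_close()
```

Calling `call_mock("a banana figurine")` returns the canned JSON, which lets client code and error handling be written before a real endpoint exists.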

Industries transform: marketing automates campaigns, education visualizes concepts, and healthcare simulates scenarios.

However, ethical concerns arise. Bias in training data requires mitigation, and over-reliance on AI might stifle human creativity.

Economically, it boosts Google's ecosystem, attracting more users and developers.

Potential Challenges and Future Directions

Challenges include computational costs. High-res generation demands efficient hardware, limiting accessibility.

Privacy concerns persist whenever heavy computations are offloaded to the cloud, though on-device execution helps by keeping prompts and images local.

Future directions point to video and multimodal expansions. Rumors of "Audio Papaya" suggest audio integration.

Google might open-source elements, fostering community contributions.

Conclusion: Preparing for Nano Banana 2's Impact

Nano Banana 2 positions Google at AI's forefront. Its features promise transformative image generation, blending speed, intelligence, and accessibility.

As rumors solidify, stakeholders watch closely. Developers, download Apidog for free to gear up for API-driven innovations.