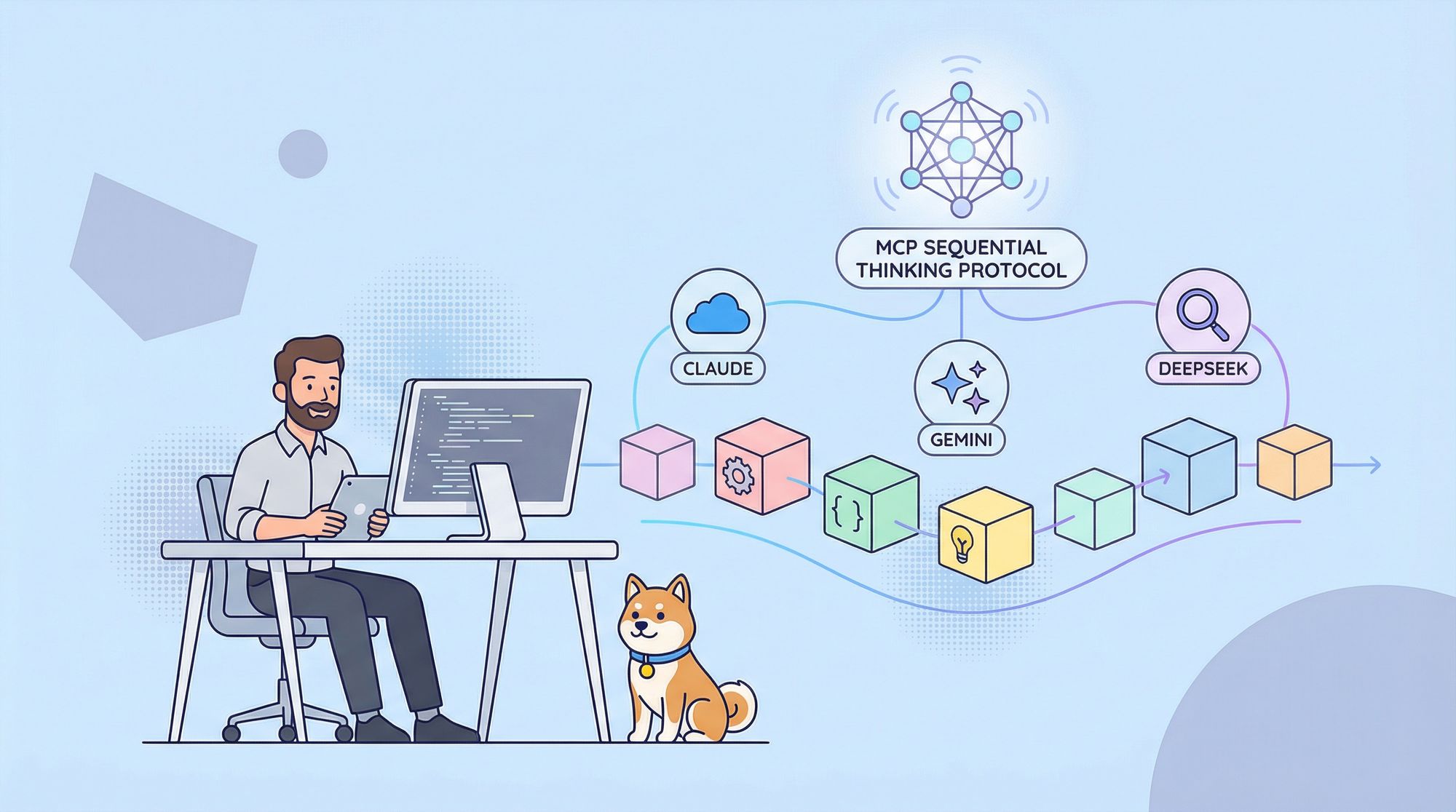

Imagine a development environment where your code is produced by a team of specialized AI models: Claude designs the architecture, Gemini writes the tests, and DeepSeek implements the features, each handing its work to the next. This isn't a futuristic fantasy; you can set it up today by combining MCP Sequential Thinking with OpenRouter. In this guide we'll show you how to make it your secret weapon for tackling complex projects.

What is MCP Sequential Thinking?

MCP Sequential Thinking builds on the Model Context Protocol (MCP), an open standard that lets AI assistants call external tools. The Sequential Thinking MCP server gives the assistant a tool for working through a problem as a series of numbered, revisable thoughts. This breaks complex coding challenges down into clear, logical, interconnected steps. It's about more than just coding: it's about structuring your thinking and using the strengths of different AI models at each stage of development. Instead of diving headfirst into implementation, MCP Sequential Thinking emphasizes:

1. Precise Problem Definition: Clearly articulating the problem you're trying to solve, leaving no room for ambiguity.

2. Atomic Sub-Task Decomposition: Breaking the problem down into smaller, manageable, self-contained sub-tasks.

3. Dependency Sequencing: Identifying and organizing the dependencies between these sub-tasks to ensure a logical execution flow.

4. Optimized Execution Flow: Streamlining the execution of these sub-tasks for maximum efficiency and effectiveness.
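Dependency sequencing is essentially a topological sort of the sub-tasks. Below is a minimal sketch using Python's standard-library graphlib module (Python 3.9+). The sub-task names are hypothetical, and this is one way to compute an order by hand, not something the Sequential Thinking server does for you.

```python
from graphlib import TopologicalSorter

# Hypothetical sub-tasks for a small dashboard feature. Each key maps to the
# set of sub-tasks it depends on (its predecessors).
subtasks = {
    "define_types": set(),
    "fetch_prices": {"define_types"},
    "price_table": {"define_types", "fetch_prices"},
    "layout": {"define_types"},
    "dashboard_page": {"price_table", "layout"},
    "tests": {"dashboard_page"},
}


def execution_order(graph: dict[str, set[str]]) -> list[str]:
    """Return one valid order in which every sub-task follows its dependencies.

    Raises graphlib.CycleError if the dependencies are circular, which
    usually means the decomposition needs another look.
    """
    return list(TopologicalSorter(graph).static_order())


def parallel_batches(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group sub-tasks into batches whose members have no dependencies on each other."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        batches.append(ready)
        sorter.done(*ready)
    return batches


if __name__ == "__main__":
    print(execution_order(subtasks))
    print(parallel_batches(subtasks))
```

The batch view is the "optimized execution flow" part. Sub-tasks in the same batch, such as fetch_prices and layout here, don't depend on each other and could be handed to different models at the same time.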

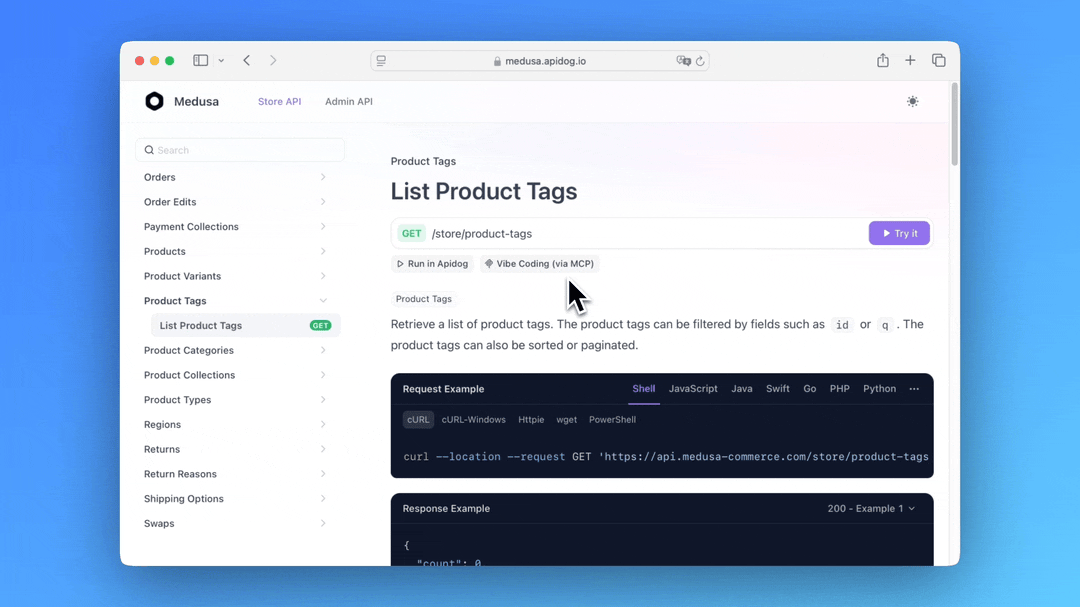

If your project is built around an API specification, the Apidog MCP Server is another MCP server worth adding. Here's a quick rundown of what it offers:

- Generates or modifies code based on your API specifications

- Searches through API specification content

- Creates data models and DTOs that align seamlessly with your API design

- Adds relevant comments and documentation based on API specifications

By acting as a bridge between your Apidog projects and Cursor, the Apidog MCP Server ensures your AI assistant has access to the most up-to-date API designs. This integration enhances the Memory Bank feature by providing structured API information that Cursor can reference during development.

To learn more, see the Apidog MCP Server documentation or its npm page.

Also, consider trying Apidog, an integrated, powerful, and cost-effective alternative to Postman!

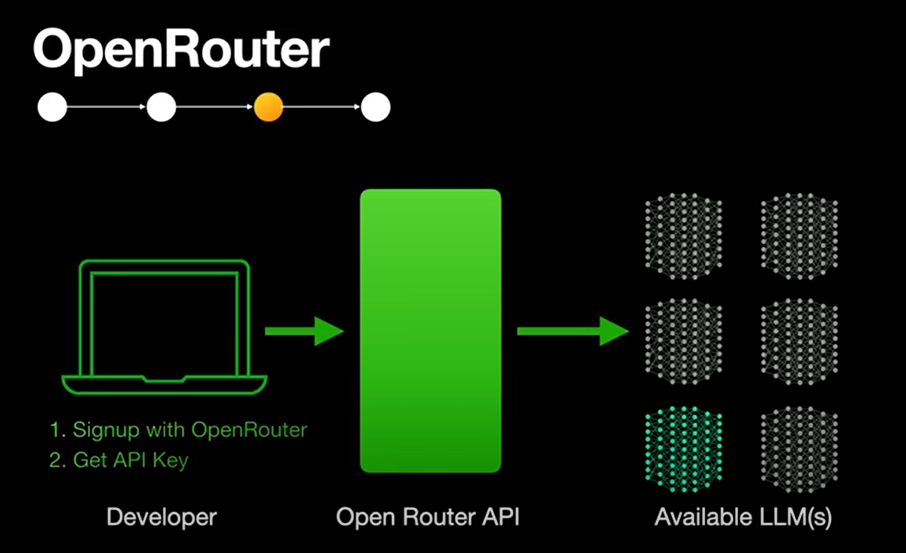

How OpenRouter AI Improves This Process

OpenRouter AI plays a crucial role in enhancing MCP Sequential Thinking. It acts as a unified API gateway, giving you access to a wide range of AI models (GPT-4, Claude, Mistral, and more) through a single API key and endpoint. This lets you use the model best suited to each step of the sequence. Through the models it exposes, OpenRouter improves the process by:

- Automating Repetitive Coding Steps: Frees you from tedious tasks such as setting up project structures and configuring development environments.

- Generating Boilerplate Code: Cuts development time by creating the basic code structure and initial components for each task.

- Suggesting Optimizations: Offers suggestions for improving code efficiency, performance, and security.

- Speeding Up Debugging: Lets you hand errors and stack traces to a model that can analyze the code and suggest fixes.
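OpenRouter's API is OpenAI-compatible, so each step boils down to a POST to its chat completions endpoint with the model ID of your choice. Below is a minimal stdlib-only Python sketch. It assumes your key is stored in an OPENROUTER_API_KEY environment variable, a name chosen here rather than one OpenRouter requires.

```python
import json
import os
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build a chat-completions request for one model via OpenRouter."""
    body = {
        "model": model,  # e.g. "deepseek/deepseek-chat-v3-0324:free"
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(model: str, prompt: str) -> str:
    """Send the prompt and return the first choice's text (needs network access)."""
    api_key = os.environ["OPENROUTER_API_KEY"]  # assumed env var name
    with urllib.request.urlopen(build_request(model, prompt, api_key), timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

Switching models only requires a different model string. For example, ask("deepseek/deepseek-chat-v3-0324:free", "Suggest optimizations for this function: ...") sends the same kind of request to a free DeepSeek model.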

MCP Sequential Thinking 101

What Makes This Different?

Traditional AI coding often involves single-model conversations, where a single AI is responsible for the entire task. In contrast, Sequential Thinking is like an AI assembly line:

Task --> [Planner] --> [Researcher] --> [Coder] --> [Reviewer]

A real-world example from a forum thread:

User: "Build a React dashboard showing real-time crypto prices"

1. Claude-3.5-Sonnet: Creates architecture plan

2. Gemini-2.0-Flash-Thinking: Researches best WebSocket APIs

3. DeepSeek-R1: Implements React components

4. GPT-4-Omni: Reviews code for security flaws

Key Components

1. MCP Server Network: Manages the handoff of tasks between different AI models.

2. OpenRouter Gateway: Sends each task to the model assigned to it, so you can pick the most cost-effective and appropriate model for every step.

3. Cursor IDE Integration: Provides native workflow control directly within your development environment.
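The assembly-line idea can be expressed in a few lines of code. Below is a minimal sketch that assumes a call_model(model_id, prompt) function you supply, for example a wrapper around OpenRouter's chat completions endpoint. Stage, run_pipeline, and the model IDs are illustrative assumptions; check OpenRouter's model list for current IDs. In the Cursor setup described here, the agent and MCP servers handle this handoff rather than a script like this.

```python
from dataclasses import dataclass
from typing import Callable

# call_model(model_id, prompt) -> text; plug in a real OpenRouter client here.
ModelCall = Callable[[str, str], str]


@dataclass
class Stage:
    role: str         # e.g. "planner", "coder"
    model: str        # OpenRouter model ID chosen for this role
    instruction: str  # what this stage should do


def run_pipeline(task: str, stages: list[Stage], call_model: ModelCall) -> dict[str, str]:
    """Run stages in order, handing each one the task plus the previous stage's output."""
    outputs: dict[str, str] = {}
    previous = ""
    for stage in stages:
        prompt = f"{stage.instruction}\n\nTask: {task}"
        if previous:
            prompt += f"\n\nInput from the previous step:\n{previous}"
        previous = call_model(stage.model, prompt)
        outputs[stage.role] = previous
    return outputs


# Hypothetical line-up mirroring the forum example above (illustrative model IDs).
STAGES = [
    Stage("planner", "anthropic/claude-3.5-sonnet", "Create an architecture plan."),
    Stage("researcher", "google/gemini-2.0-flash-thinking-exp:free", "Pick suitable WebSocket APIs."),
    Stage("coder", "deepseek/deepseek-r1", "Implement the React components."),
    Stage("reviewer", "openai/gpt-4o", "Review the code for security flaws."),
]
```

Passing call_model in as a parameter keeps the handoff logic separate from any particular API client. The same pipeline can also be dry-run with a fake function that simply echoes its prompt.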

Set Up MCP Sequential Thinking and OpenRouter Like a Pro

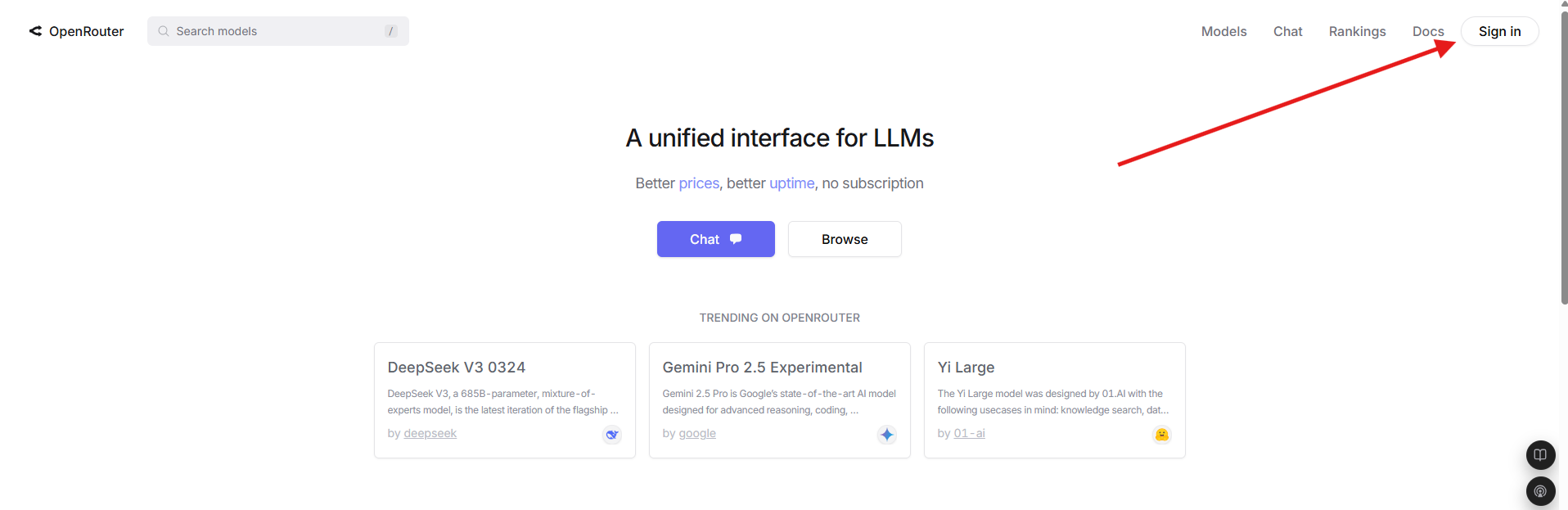

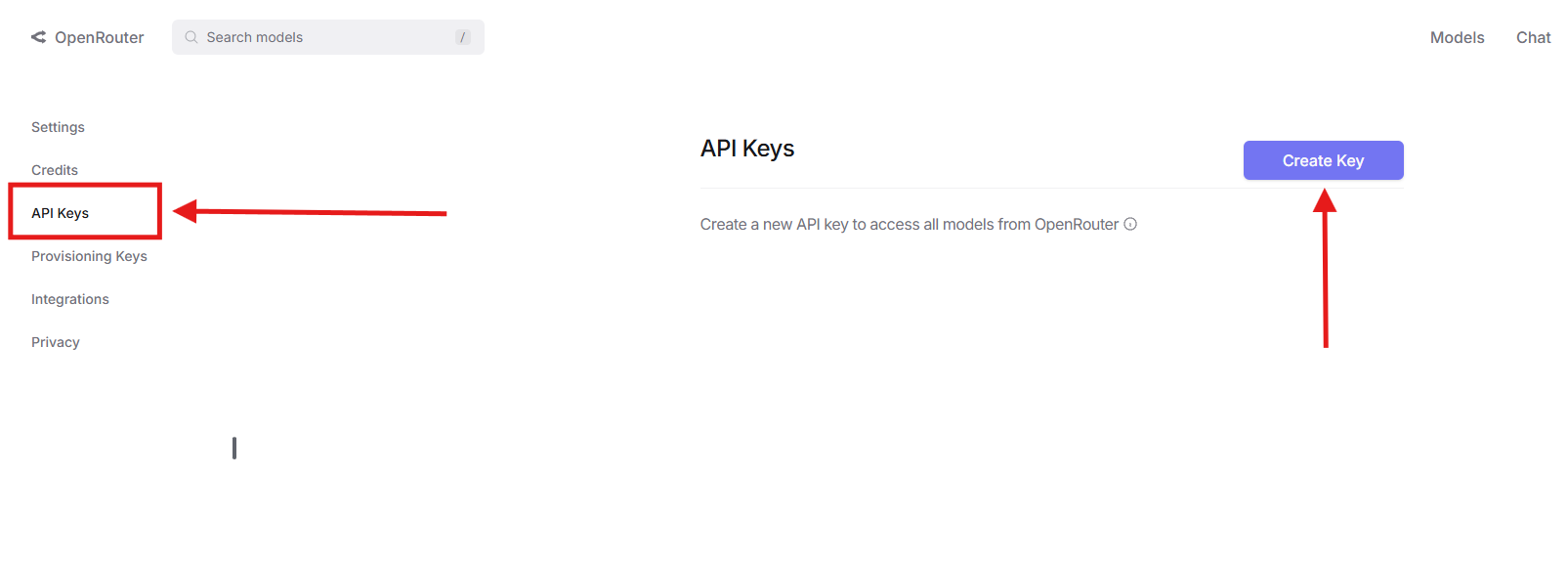

Step 1: Get Your OpenRouter API Key

1. Visit OpenRouter.ai

2. Sign up → Go to Account Settings → API Keys

3. Click Create Key → Copy it to your clipboard

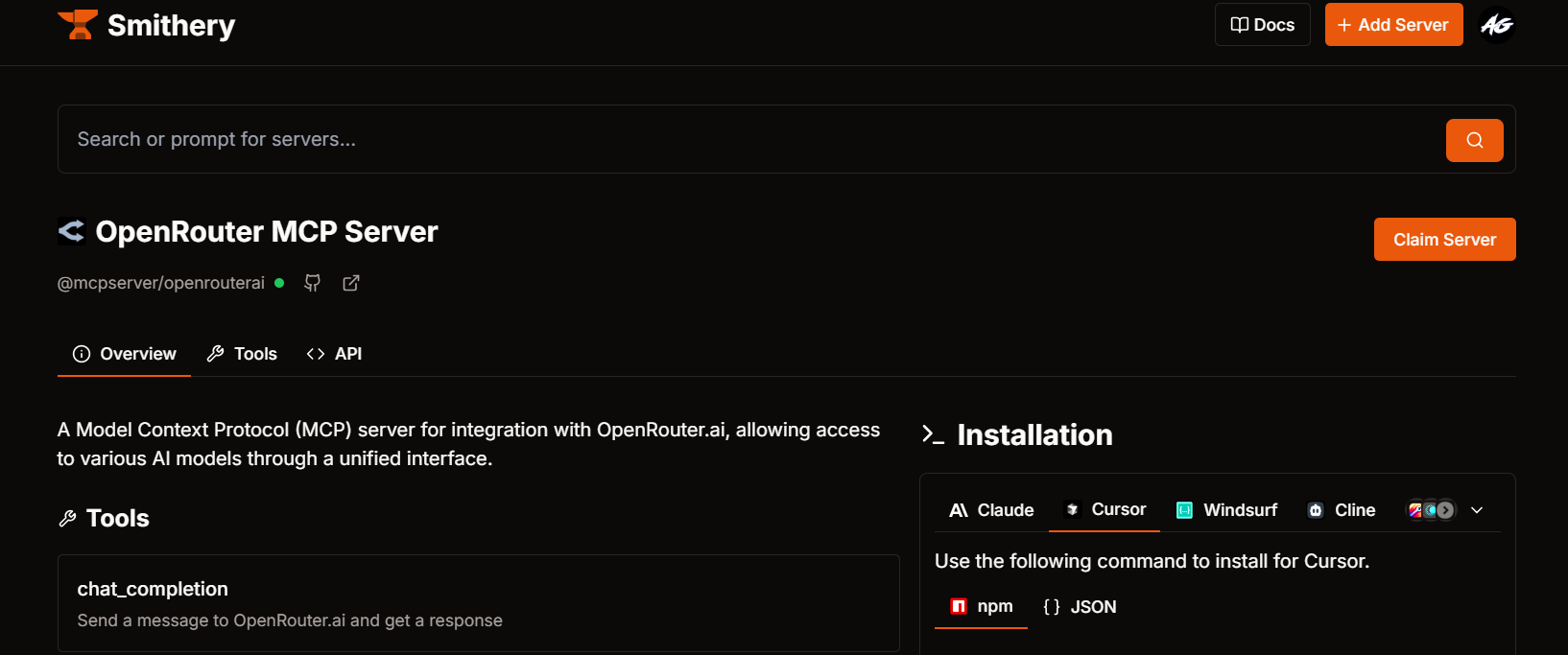

Step 2: Configure OpenRouter in Smithery AI

1. Open Smithery AI

2. Search for "OpenRouter MCP"

3. In the config panel, enter your key and a default model:

```json
{
  "openrouterApiKey": "YOUR_API_KEY",
  "openrouterDefaultModel": "deepseek/deepseek-chat-v3-0324:free"
}
```

A free model (OpenRouter model IDs ending in :free) is the recommended default while you experiment.

4. Copy either:

- NPM Command:

```bash
npx -y @smithery/cli@latest install @mcpserver/openrouterai --client cursor --config "{\"openrouterApiKey\":\"YOUR_API_KEY\",\"openrouterDefaultModel\":\"deepseek/deepseek-chat-v3-0324:free\"}"
```

- or JSON Config (for manual setup):

```json
{
  "mcpServers": {
    "openrouterai": {
      "command": "npx",
      "args": [
        "-y",
        "@smithery/cli@latest",
        "run",
        "@mcpserver/openrouterai",
        "--config",
        "{\"openrouterApiKey\":\"YOUR_API_KEY\",\"openrouterDefaultModel\":\"deepseek/deepseek-chat-v3-0324:free\"}"
      ]
    }
  }
}
```
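In both forms, the --config value is a JSON object serialized into a single string, which is why its quotes are backslash-escaped. Instead of escaping by hand, you can generate it. Below is a minimal Python sketch with placeholder values. The shlex quoting targets POSIX shells (macOS/Linux), and none of this is a Smithery feature.

```python
import json
import shlex

# Values for the Smithery OpenRouter server; the key is a placeholder.
config = {
    "openrouterApiKey": "YOUR_API_KEY",
    "openrouterDefaultModel": "deepseek/deepseek-chat-v3-0324:free",
}

# Compact inner JSON string passed as the --config argument.
config_arg = json.dumps(config, separators=(",", ":"))

# Dumping this dict to JSON escapes the inner quotes as \" automatically.
server_entry = {
    "mcpServers": {
        "openrouterai": {
            "command": "npx",
            "args": ["-y", "@smithery/cli@latest", "run",
                     "@mcpserver/openrouterai", "--config", config_arg],
        }
    }
}

# POSIX-shell-safe install command (single-quotes the JSON argument).
install_cmd = shlex.join([
    "npx", "-y", "@smithery/cli@latest", "install", "@mcpserver/openrouterai",
    "--client", "cursor", "--config", config_arg,
])

if __name__ == "__main__":
    print(json.dumps(server_entry, indent=2))
    print(install_cmd)  # Windows cmd needs the double-quoted, escaped form instead
```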

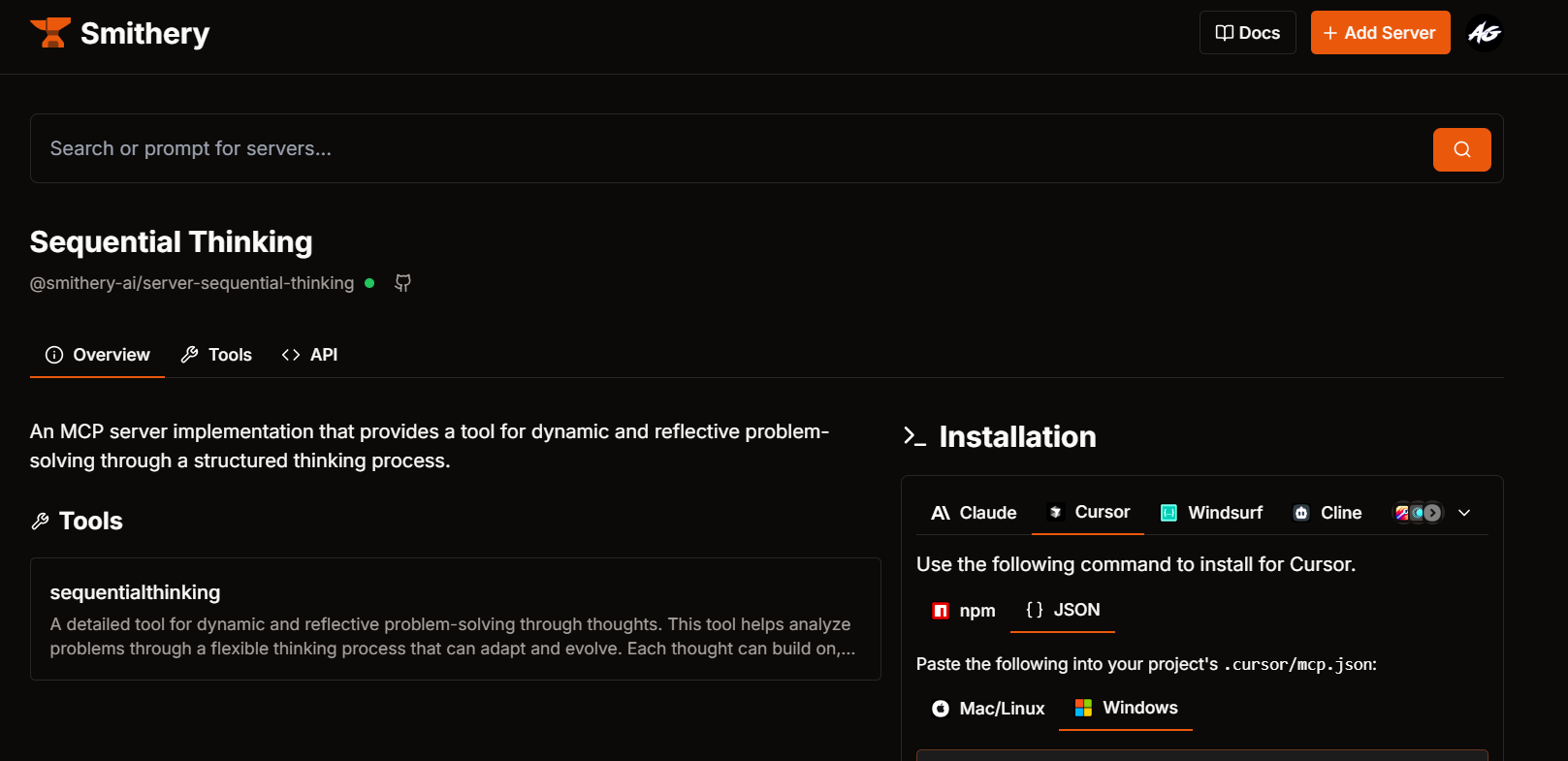

Step 3: Add Sequential Thinking MCP

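- In Smithery AI, search for "Sequential Thinking MCP Server"

- Choose your preferred format:

- NPM Quick Install:

```bash
npx -y @smithery/cli@latest install @smithery-ai/server-sequential-thinking --client cursor --key YOUR_API_KEY
```

- or JSON Config (Advanced):

```json
{
  "mcpServers": {
    "server-sequential-thinking": {
      "command": "cmd",
      "args": [
        "/c",
        "npx",
        "-y",
        "@smithery/cli@latest",
        "run",
        "@smithery-ai/server-sequential-thinking",
        "--key",
        "YOUR_API_KEY"
      ]
    }
  }
}
```

The "cmd" command and "/c" argument launch npx through the Windows command interpreter. On macOS or Linux, use "command": "npx" and drop "/c" from args, as in the OpenRouter config. In both forms, YOUR_API_KEY is the key from your Smithery account, not your OpenRouter key.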
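If you keep one setup script for several machines, it can choose the launcher per platform: cmd /c npx on Windows and plain npx elsewhere. Below is a minimal sketch. The function name is hypothetical, and the Windows branch reflects the common reason for the wrapper: npx is installed as a .cmd script there, so it is started through cmd.

```python
import json
import sys


def sequential_thinking_entry(smithery_key: str, platform: str = sys.platform) -> dict:
    """Build the mcpServers entry, wrapping npx in `cmd /c` only on Windows."""
    npx_args = ["-y", "@smithery/cli@latest", "run",
                "@smithery-ai/server-sequential-thinking", "--key", smithery_key]
    if platform.startswith("win"):
        # Windows: launch the npx .cmd script through the command interpreter.
        command, args = "cmd", ["/c", "npx", *npx_args]
    else:
        # macOS/Linux: npx can be executed directly.
        command, args = "npx", npx_args
    return {"mcpServers": {"server-sequential-thinking": {"command": command, "args": args}}}


if __name__ == "__main__":
    print(json.dumps(sequential_thinking_entry("YOUR_API_KEY"), indent=2))
```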

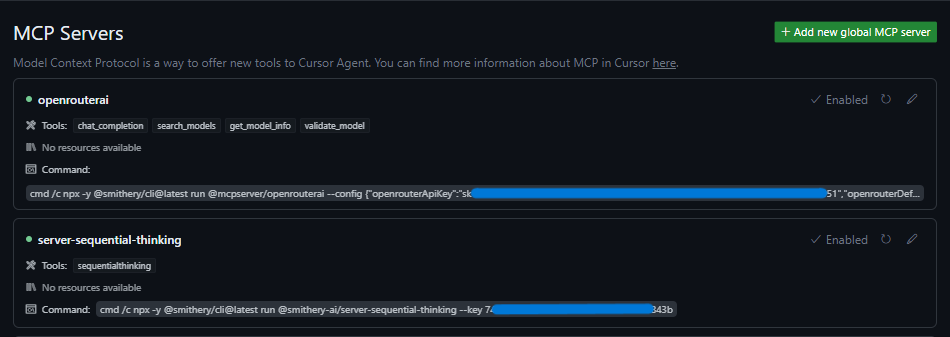

Step 4: Activate in Cursor IDE

For NPM Users:

- Open Cursor → Terminal (Ctrl + J)

- Paste the commands one at a time

- Wait for the ✅ success confirmation

For JSON Config Users:

- Open Cursor Settings (Ctrl + Shift + J)

- Navigate to MCP → Servers

- Click Add Server → Paste the JSON

- Save → Look for the green ● Connected status
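If you configure both servers through JSON, note that each snippet has its own top-level "mcpServers" object. Pasting one after the other into a single file produces invalid JSON; both entries belong under one "mcpServers" key. Below is a minimal Python sketch that merges new entries into an existing config file. It assumes Cursor's project-level MCP config at .cursor/mcp.json (a global ~/.cursor/mcp.json also exists), and merge_mcp_servers is a hypothetical helper, not a Cursor or Smithery tool.

```python
import json
from pathlib import Path


def merge_mcp_servers(config_path: Path, new_servers: dict) -> dict:
    """Add or replace entries under "mcpServers", keeping servers already configured."""
    existing = json.loads(config_path.read_text()) if config_path.exists() else {}
    servers = existing.setdefault("mcpServers", {})
    servers.update(new_servers)  # same-named servers are overwritten
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(existing, indent=2) + "\n")
    return existing
```

For example, call merge_mcp_servers(Path(".cursor/mcp.json"), {"openrouterai": {...}, "server-sequential-thinking": {...}}) with the inner entries from Steps 2 and 3. Then reopen the MCP settings to check that both servers connect.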

Verify the Installation:

After adding the server, look for a green dot next to "Sequential Thinking" in the MCP Servers list, indicating a successful connection.

By following these steps, you'll effectively integrate OpenRouter and Sequential Thinking MCP servers into Cursor, enhancing your development environment with advanced AI-driven tools.

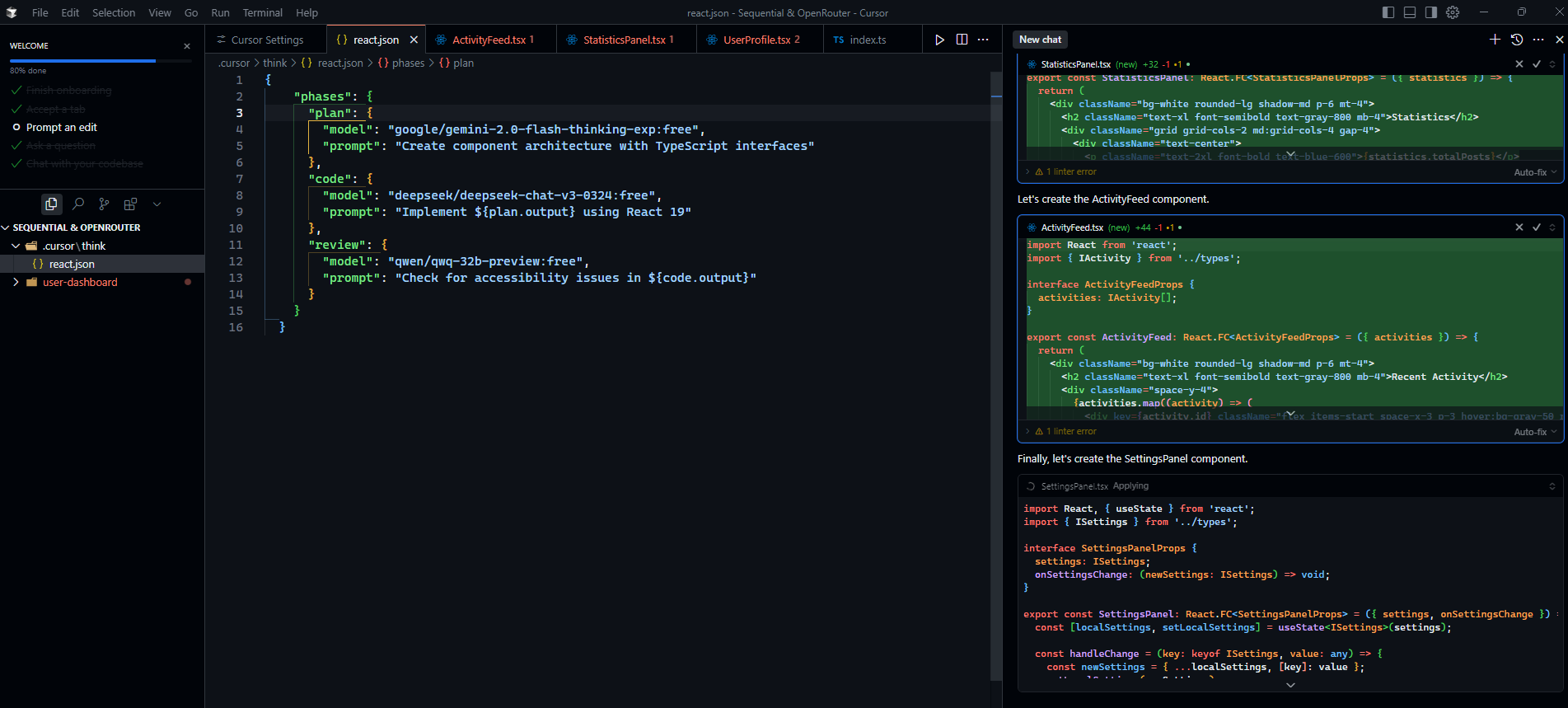

Crafting Your First OpenRouter and MCP Sequential Thinking Workflow

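Creating a Template for React Projects:

Open a new project in Cursor and create a file named .cursor/think/react.json with the following content. Note that .cursor/think/ is a convention of this workflow rather than a documented Cursor configuration directory.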

```json
{
  "phases": {
    "plan": {
      "model": "google/gemini-2.0-flash-thinking-exp:free",
      "prompt": "Create component architecture with TypeScript interfaces"
    },
    "code": {
      "model": "deepseek/deepseek-chat-v3-0324:free",
      "prompt": "Implement ${plan.output} using React 19"
    },
    "review": {
      "model": "qwen/qwq-32b-preview:free",
      "prompt": "Check for accessibility issues in ${code.output}"
    }
  }
}
```

Usage: To execute this workflow, run the following command:

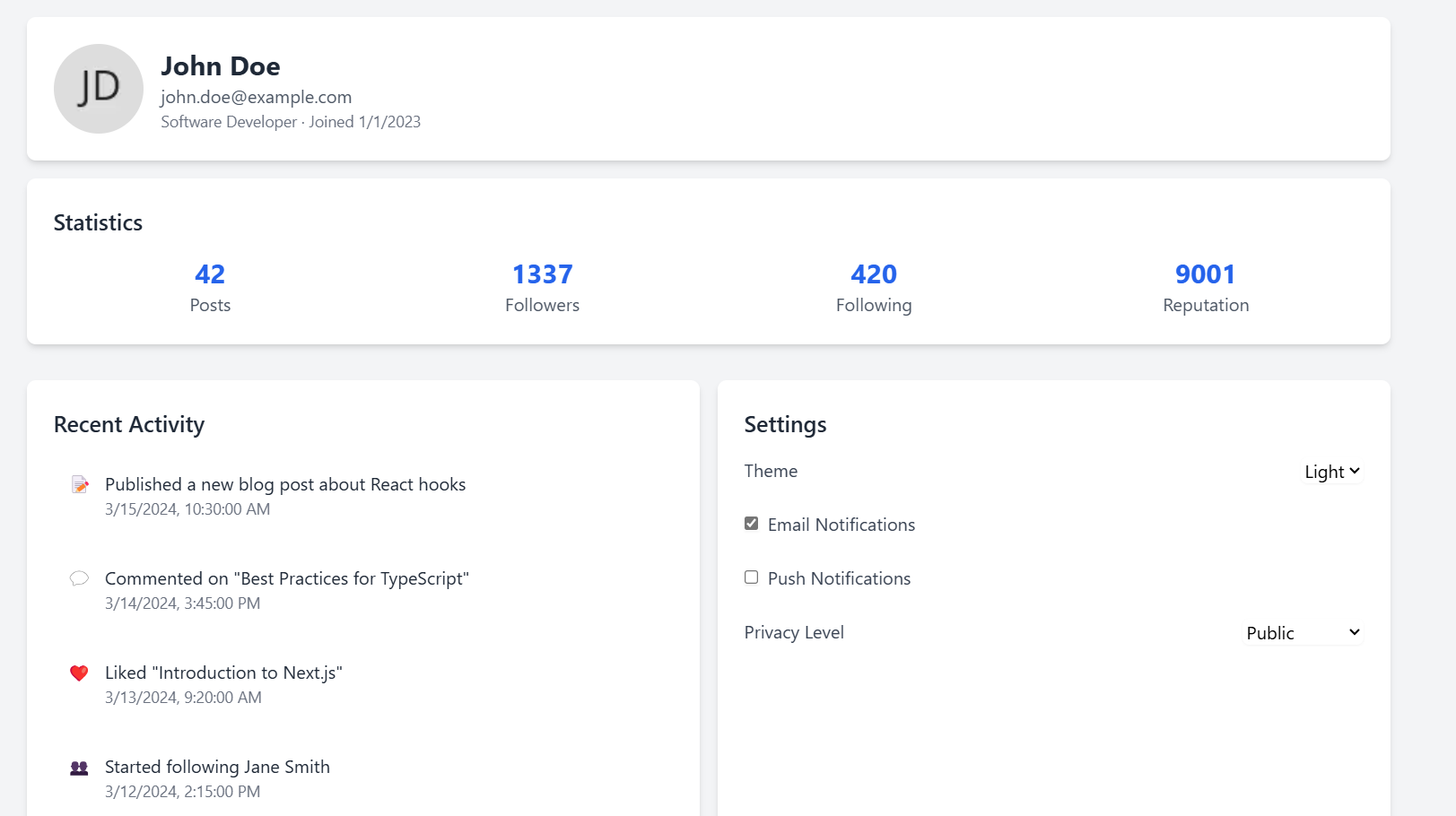

```text
/think react "Simple User profile dashboard"
```

Workflow Breakdown:

- Planning Phase with Gemini 2.0 Flash Thinking: Utilize the Gemini 2.0 Flash Thinking model to create a detailed component architecture with TypeScript interfaces. This step ensures a well-structured foundation for your React project.

- Coding Phase with DeepSeek Chat V3: Implement the planned architecture using React 19 by leveraging the DeepSeek Chat V3 model. This phase focuses on translating the design into functional code components.

- Review Phase with QwQ 32B Preview: Conduct a thorough review of the implemented code to identify and address accessibility issues, so the application is user-friendly and meets accessibility standards.
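The ${plan.output} and ${code.output} placeholders imply that each phase receives the result of an earlier one. How /think resolves them isn't specified here. Below is a hypothetical Python runner that interprets the template that way. load_template, run_template, and the call_model callback are assumptions, not part of Cursor or OpenRouter.

```python
import json
import re
from typing import Callable

# Matches placeholders like ${plan.output} and captures the phase name.
PLACEHOLDER = re.compile(r"\$\{(\w+)\.output\}")


def load_template(path: str) -> dict:
    """Read a phase template such as .cursor/think/react.json."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def run_template(template: dict, task: str,
                 call_model: Callable[[str, str], str]) -> dict[str, str]:
    """Run phases in file order, replacing ${phase.output} with earlier results."""
    outputs: dict[str, str] = {}

    def fill(match: re.Match) -> str:
        name = match.group(1)
        if name not in outputs:
            raise KeyError(f"phase '{name}' has not run yet")
        return outputs[name]

    for name, phase in template["phases"].items():  # dicts keep JSON key order
        prompt = PLACEHOLDER.sub(fill, phase["prompt"])
        outputs[name] = call_model(phase["model"], f"{prompt}\n\nTask: {task}")
    return outputs
```

Raising an error for a placeholder that points at a phase that hasn't run yet catches ordering mistakes in the template early, instead of sending an unresolved ${...} string to a model.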

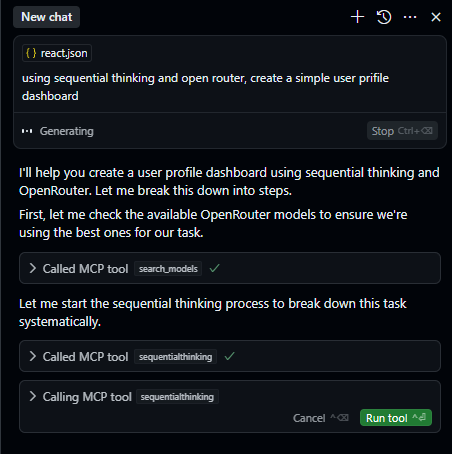

Code Execution: When the workflow runs, Cursor engages the specified MCP (Model Context Protocol) tools, Sequential Thinking and OpenRouter AI, one after another to perform each designated task.

During this process, Cursor prompts for your explicit permission before each MCP server is utilized, ensuring you maintain control over the integration of these tools into your development workflow.
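Under the hood, each Sequential Thinking step is an MCP tool call. Below is a sketch of the JSON-RPC tools/call requests an agent would send. It assumes the Smithery-hosted server exposes the same sequentialthinking tool as the reference @modelcontextprotocol/server-sequential-thinking package, with required fields thought, thoughtNumber, totalThoughts, and nextThoughtNeeded (optional revision and branching fields are omitted). Cursor builds and sends these for you; the helpers are only for illustration.

```python
import json


def thought_call(request_id: int, thought: str, number: int, total: int, more: bool) -> dict:
    """Build an MCP JSON-RPC `tools/call` request for one sequential-thinking step."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {
            "name": "sequentialthinking",
            "arguments": {
                "thought": thought,
                "thoughtNumber": number,
                "totalThoughts": total,
                "nextThoughtNeeded": more,
            },
        },
    }


def thought_sequence(thoughts: list[str]) -> list[dict]:
    """Turn an ordered list of thoughts into one call per step; only the last ends the chain."""
    total = len(thoughts)
    return [
        thought_call(i, text, i, total, more=i < total)
        for i, text in enumerate(thoughts, start=1)
    ]


if __name__ == "__main__":
    steps = [
        "Define the profile data the dashboard must show.",
        "Plan components: header, details card, activity list.",
        "Decide which accessibility checks the review phase needs.",
    ]
    for call in thought_sequence(steps):
        print(json.dumps(call))
```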

Result: A working first version of the User Profile dashboard in a few minutes, compared with the hours or days you might spend building it manually.

Final Thoughts: The Future of Coding is Here

MCP Sequential Thinking is more than a trend; it's a shift in how we approach software development. By combining multiple AI models in a structured, efficient way, you can boost productivity, improve code quality, and free up time for innovation. The key takeaways: break complex projects into smaller, manageable tasks, assign each task to the AI model best suited to it, and streamline the development lifecycle as a whole. Embrace AI-assisted development with MCP Sequential Thinking and unlock your team's full potential. As AI and tools like OpenRouter and Cursor keep advancing, structured multi-model workflows like MCP Sequential Thinking are becoming an essential skill for any forward-thinking developer. 🚀