Want to use Meta's Llama language models without paying for access? This guide walks through two practical ways to call the Llama API for free: OpenRouter and Together AI.

What Is the Llama API?

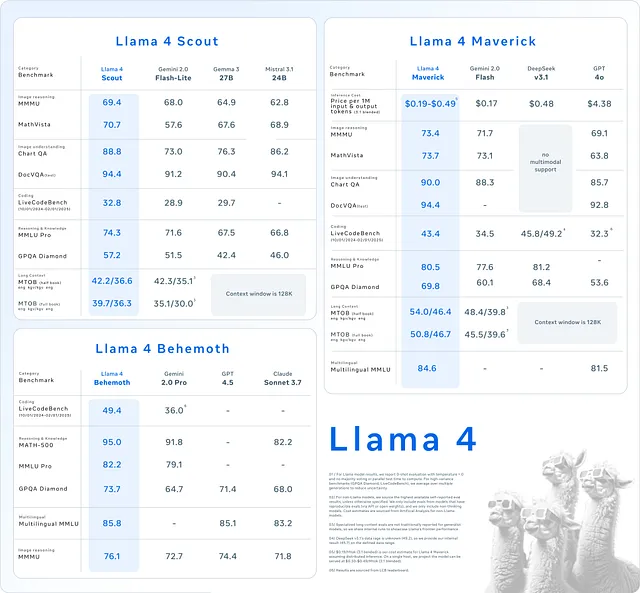

The Llama API provides access to Meta’s family of language models, including Llama 3 and Llama 4. These models excel at tasks like text generation, contextual understanding, and even multimodal applications involving images. Developers use the Llama API to power applications ranging from chatbots to content generation tools, all without needing to train models from scratch.

While these models are impressive, accessing them typically involves costs—unless you know where to look. Fortunately, platforms like OpenRouter and Together AI offer free access to certain Llama models. In this guide, we will cover both methods, complete with step-by-step instructions and code examples to get you started.

Why Choose the Llama API?

Before we jump into the methods, let’s consider why the Llama API stands out. First, it delivers top-tier language processing, enabling applications to produce coherent, human-like text. Next, newer versions like Llama 4 introduce multimodal features, allowing the model to handle both text and images—a game-changer for innovative projects. Finally, using the API saves time and resources compared to building custom models.

With that in mind, let’s explore how to access the Llama API for free.

Method 1: Using OpenRouter to Access Llama API for Free

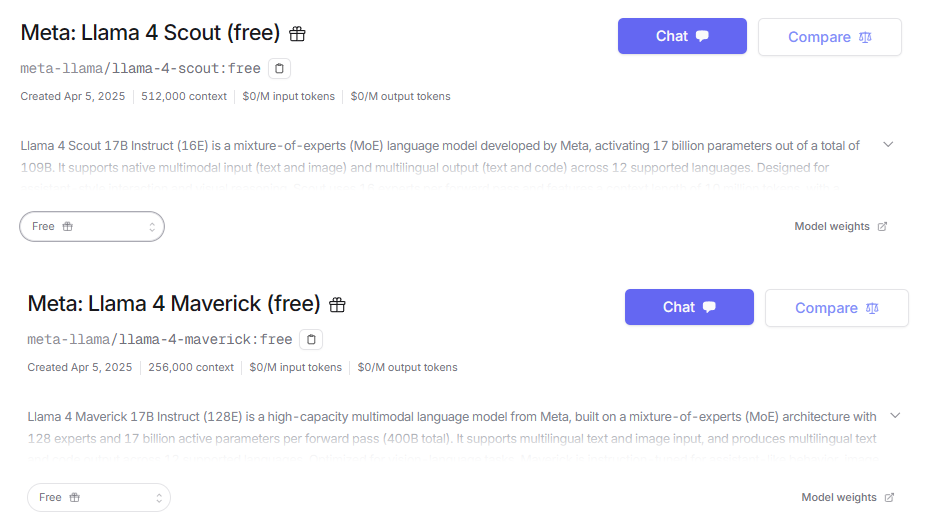

OpenRouter serves as a unified API platform, granting access to over 300 AI models, including Meta’s Llama series. Best of all, it offers a free tier that includes models like Llama 4 Maverick and Llama 4 Scout. This makes OpenRouter an ideal starting point for developers seeking cost-free access to cutting-edge AI.

Step 1: Sign Up and Obtain an API Key

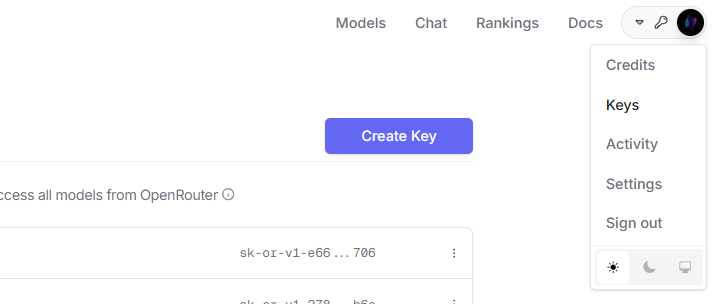

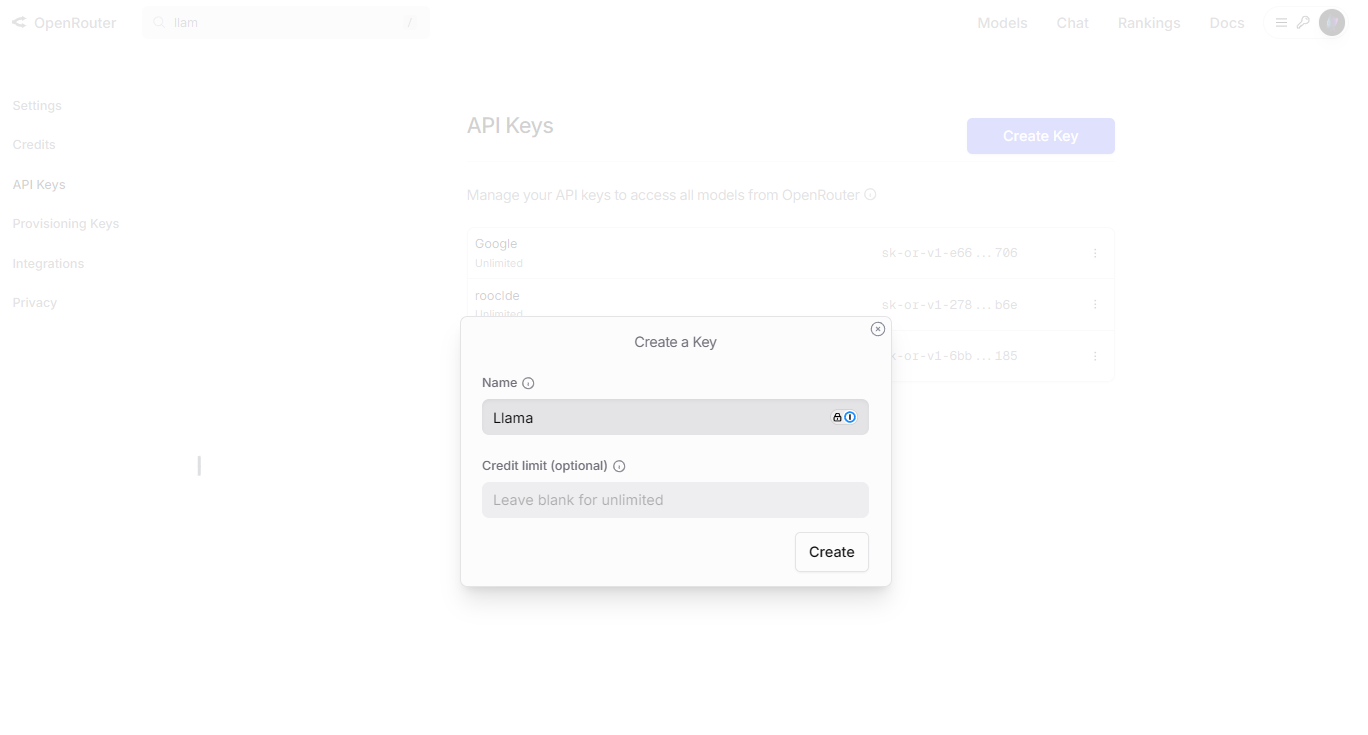

To begin, you need an OpenRouter account and an API key. Follow these steps:

- Navigate to the OpenRouter website and click "Sign Up."

- Log in, then open the "API Keys" section of your dashboard.

- Click "Create Key," name it (e.g., "Llama Free Key"), and store the key somewhere secure.
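One way to keep the key out of your source code is to read it from an environment variable. The sketch below assumes a variable named OPENROUTER_API_KEY; that name and both helper functions are illustrative choices, not something OpenRouter requires.

```python
import os


def load_api_key(env_var: str = "OPENROUTER_API_KEY") -> str:
    """Return the API key stored in the given environment variable.

    The variable name is an assumption made for this example.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {env_var} environment variable before running this script."
        )
    return key


def auth_headers(api_key: str) -> dict:
    """Build bearer-token headers for a JSON API request."""
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
```

Set the variable in your shell (for example, export OPENROUTER_API_KEY=... on Linux or macOS), then call load_api_key() instead of pasting the key into a script.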

Step 2: Set Up Your Environment

You’ll need a programming language and an HTTP client library to interact with the API. We’ll use Python with the requests library. Install it if you haven’t already:

pip install requests

Step 3: Send Your First Request

Now, let’s write a Python script to generate text using the Llama 4 Scout model via OpenRouter:

    import requests

    # Define the endpoint and your API key
    API_URL = "https://openrouter.ai/api/v1/chat/completions"
    API_KEY = "your_api_key_here"  # Replace with your actual key

    # Set headers for authentication
    headers = {
        "Authorization": f"Bearer {API_KEY}",
        "Content-Type": "application/json",
    }

    # Create the payload with model and prompt
    payload = {
        "model": "meta-llama/llama-4-scout:free",
        "messages": [
            {"role": "user", "content": "Describe the Llama API in one sentence."}
        ],
        "max_tokens": 50,
    }

    # Send the request; json= serializes the payload to a JSON body
    response = requests.post(API_URL, headers=headers, json=payload, timeout=30)

    # Handle the response
    if response.status_code == 200:
        result = response.json()
        print(result["choices"][0]["message"]["content"])
    else:
        print(f"Error: Request failed with status {response.status_code}")

This script sends a prompt to the Llama 4 Scout model and prints the response. Adjust max_tokens to control the output length.
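To judge whether max_tokens is set too low, it can help to inspect the parsed response body. The sketch below assumes the body follows the OpenAI-style chat completion shape, with a usage object and a finish_reason of "length" when the reply was cut off by the token cap. The sample dictionary is hand-written for illustration and is not real API output.

```python
def summarize_usage(result: dict) -> dict:
    """Pull token counts out of a parsed chat-completion response body."""
    usage = result.get("usage") or {}
    names = ("prompt_tokens", "completion_tokens", "total_tokens")
    return {name: int(usage.get(name, 0)) for name in names}


def was_truncated(result: dict) -> bool:
    """Return True if the first choice stopped because it hit max_tokens."""
    choices = result.get("choices") or []
    return bool(choices) and choices[0].get("finish_reason") == "length"


# Hand-written example body (assumed shape, not captured from the API)
sample = {
    "choices": [
        {
            "message": {"role": "assistant", "content": "The Llama API is"},
            "finish_reason": "length",
        }
    ],
    "usage": {"prompt_tokens": 12, "completion_tokens": 50, "total_tokens": 62},
}

print(summarize_usage(sample))
print(was_truncated(sample))
```

If was_truncated returns True for your requests, raising max_tokens is the likely fix.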

Step 4: Experiment with Features

OpenRouter supports customization. For instance, tweak the temperature parameter to adjust response creativity, or explore multimodal capabilities with models like Llama 4 Maverick. Check the OpenRouter models page for details on available options.
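As a rough illustration of these options, the helpers below build two payloads: one that sets temperature, and one that attaches an image using the OpenAI-style content-parts message format. The Maverick model ID is assumed by analogy with the Scout ID used earlier, and the image URL is a placeholder, so confirm both against the OpenRouter models page before relying on them.

```python
def build_text_payload(
    prompt: str,
    model: str = "meta-llama/llama-4-scout:free",
    temperature: float = 0.7,
    max_tokens: int = 50,
) -> dict:
    """Build a text-only chat payload with an explicit temperature."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }


def build_image_payload(
    prompt: str,
    image_url: str,
    model: str = "meta-llama/llama-4-maverick:free",  # assumed ID, verify it
    max_tokens: int = 100,
) -> dict:
    """Build a chat payload whose user message mixes text and an image."""
    content = [
        {"type": "text", "text": prompt},
        {"type": "image_url", "image_url": {"url": image_url}},
    ]
    return {
        "model": model,
        "messages": [{"role": "user", "content": content}],
        "max_tokens": max_tokens,
    }


# Placeholder URL used only to show the payload shape
payload = build_image_payload(
    "What is in this picture?", "https://example.com/llama.png"
)
print(payload["messages"][0]["content"][1]["type"])
```

Either payload can be passed to the same requests.post call shown in Step 3.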

Method 2: Using Together AI to Access Llama API for Free

Together AI is another platform hosting Llama models, offering free access to Llama 3 models like Llama 3.2 11B. However, note that Llama 4 is not free on Together AI at this time—only paid plans unlock it. If Llama 3 meets your needs, this method works well.

Step 1: Register and Get an API Key

Start by setting up a Together AI account:

- Visit the Together AI website and create an account or log in.

- Open the "API Keys" section and generate a new key.

- Store the key somewhere secure for use in your requests.

Step 2: Prepare Your Environment

As with OpenRouter, we’ll use Python and requests. Install it if needed:

pip install requests

Step 3: Make an API Call

Here’s a Python script to generate text with the Llama 3 model on Together AI:

    import requests

    # Define the endpoint and your API key
    # (Together AI's OpenAI-compatible completions endpoint)
    API_URL = "https://api.together.xyz/v1/completions"
    API_KEY = "your_api_key_here"  # Replace with your actual key

    # Set headers for authentication
    headers = {
        "Authorization": f"Bearer {API_KEY}",
        "Content-Type": "application/json",
    }

    # Create the payload with model and prompt
    payload = {
        # Placeholder ID: replace it with the exact free Llama 3 model ID
        # listed on the Together AI models page
        "model": "llama-3-2-11b-free",
        "prompt": "What does the Llama API do?",
        "max_tokens": 50,
        "temperature": 0.7,
    }

    # Send the request; json= serializes the payload to a JSON body
    response = requests.post(API_URL, headers=headers, json=payload, timeout=30)

    # Handle the response
    if response.status_code == 200:
        result = response.json()
        print(result["choices"][0]["text"])
    else:
        print(f"Error: Request failed with status {response.status_code}")

This script queries the Llama 3 model and displays the output. The temperature setting controls randomness: lower values give more predictable text, while higher values give more varied text.

Step 4: Know the Limits

Together AI’s free tier has constraints, such as rate limits and no access to Llama 4. Review the Together AI models page to understand what’s available and plan accordingly.
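Rate limits usually surface as HTTP 429 responses, and a common way to cope is to retry with exponential backoff. The helper below is a minimal sketch of that idea. The send callable, the retry count, and the delays are all illustrative assumptions rather than values recommended by either provider.

```python
import time
from typing import Callable, Tuple


def call_with_backoff(
    send: Callable[[], Tuple[int, dict]],
    max_retries: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[int, dict]:
    """Call send(); while it returns 429, wait and retry with doubling delays.

    send must return a (status_code, parsed_body) tuple. After max_retries
    retries the last result is returned, even if it is still a 429.
    """
    status, body = send()
    for attempt in range(max_retries):
        if status != 429:
            break
        sleep(base_delay * (2 ** attempt))  # 1s, 2s, 4s with the defaults
        status, body = send()
    return status, body


# Simulated responses so the example runs without a network connection
fake_responses = iter([(429, {}), (429, {}), (200, {"ok": True})])
delays = []
result = call_with_backoff(lambda: next(fake_responses), sleep=delays.append)
print(result, delays)
```

With requests, send could be a small function that posts the payload and returns the response's status code together with its parsed JSON body.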

Optimizing Your Llama API Experience

To maximize your success with the Llama API, adopt these practices:

- Craft Precise Prompts: Clear prompts yield better results. Test variations to refine outputs.

- Track Usage: Monitor your API calls via OpenRouter or Together AI dashboards to stay within free-tier limits.

- Handle Errors: Add try-except blocks in your code to manage failures gracefully.

- Cache Responses: Store frequent query results to reduce API usage and speed up your app.

These steps ensure efficiency and reliability in your projects.
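The caching practice can be sketched with a small in-memory store keyed by a hash of the request payload. The ResponseCache class and its fetch parameter are hypothetical names for this example; fetch stands in for whatever function actually sends the request.

```python
import hashlib
import json
from typing import Callable


class ResponseCache:
    """In-memory cache keyed by a hash of the request payload."""

    def __init__(self) -> None:
        self._store: dict = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(payload: dict) -> str:
        # sort_keys makes logically equal payloads hash to the same key
        canonical = json.dumps(payload, sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def get_or_fetch(self, payload: dict, fetch: Callable[[dict], dict]) -> dict:
        """Return a cached result, or call fetch(payload) and store it."""
        key = self._key(payload)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        result = fetch(payload)  # if fetch raises, nothing is cached
        self._store[key] = result
        return result


# Stand-in for a real API call so the example runs offline
calls = []


def fake_fetch(payload: dict) -> dict:
    calls.append(payload)
    return {"text": "cached answer"}


cache = ResponseCache()
question = {"model": "meta-llama/llama-4-scout:free", "prompt": "Hi"}
cache.get_or_fetch(question, fake_fetch)
cache.get_or_fetch(question, fake_fetch)
print(len(calls), cache.hits, cache.misses)
```

Caching suits prompts where a repeated answer is acceptable. With a nonzero temperature, a fresh call could return different text each time.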

Troubleshooting Common Problems

Issues can arise when using the Llama API. Here’s how to fix them:

- 401 Unauthorized: Verify your API key is correct and included in the headers.

- 429 Too Many Requests: You’ve hit the rate limit—wait or upgrade your plan.

- Invalid Model: Confirm the model name matches the provider’s documentation.

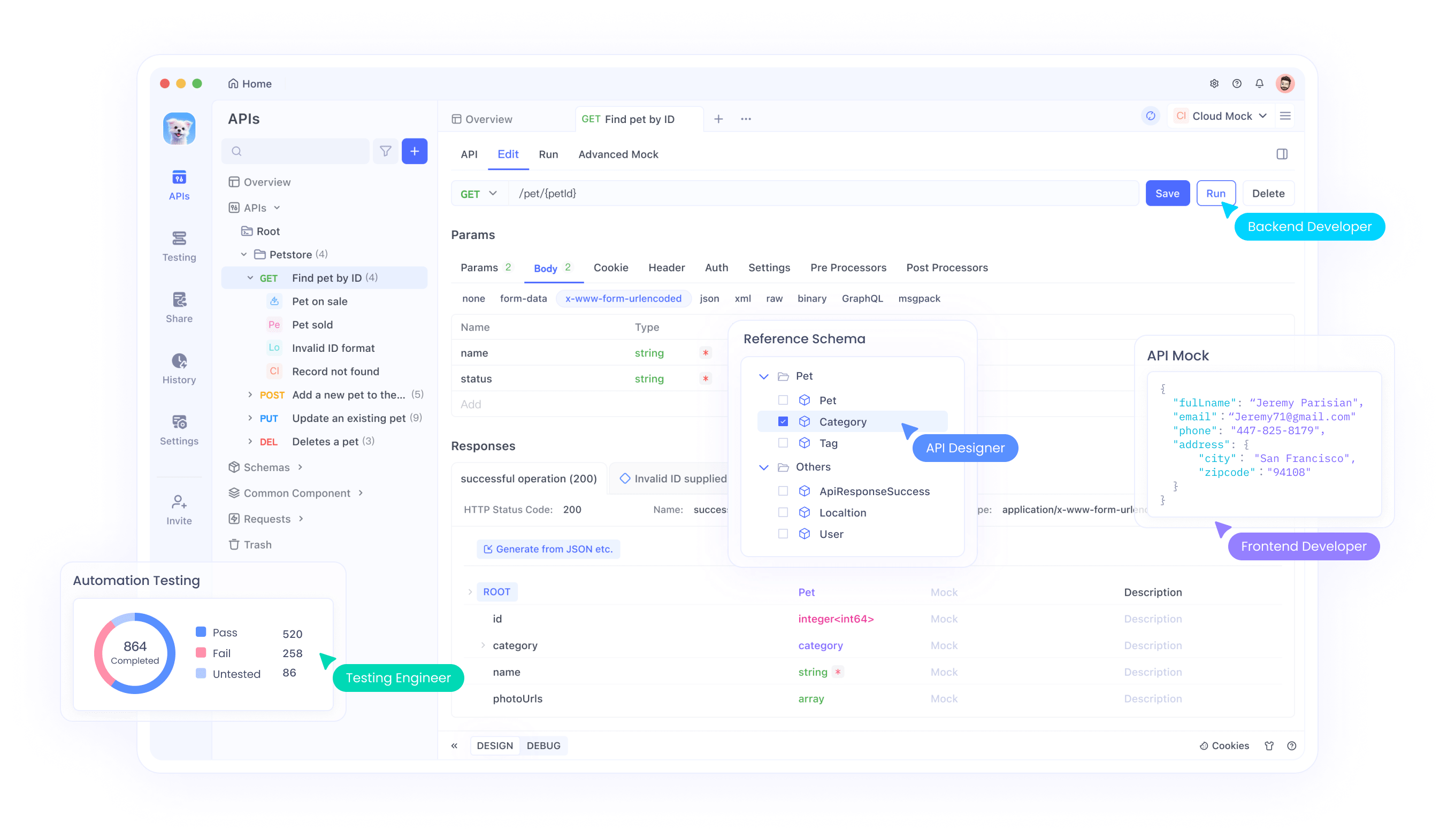

- Bad Payload: Check your JSON syntax. Tools like Apidog can help debug this.

Speaking of tools, download Apidog for free to simplify testing and troubleshooting your API calls—it’s a must-have for developers.
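The status codes in the list above can also be turned into readable errors in code. This sketch maps 401 and 429 to the hints given in this guide and checks that a successful body has the chat-completion shape used in the OpenRouter example. LlamaAPIError and extract_reply are hypothetical names.

```python
class LlamaAPIError(Exception):
    """Raised when a response cannot be turned into reply text."""


# Hints taken from the troubleshooting list in this guide
HINTS = {
    401: "Verify your API key is correct and included in the headers.",
    429: "You have hit the rate limit. Wait or upgrade your plan.",
}


def extract_reply(status_code: int, body: dict) -> str:
    """Return the reply text, or raise LlamaAPIError with a helpful hint."""
    if status_code != 200:
        hint = HINTS.get(
            status_code,
            "Check the model name and JSON payload against the provider's docs.",
        )
        raise LlamaAPIError(f"HTTP {status_code}: {hint}")
    try:
        return body["choices"][0]["message"]["content"]
    except (KeyError, IndexError, TypeError) as exc:
        raise LlamaAPIError(
            "Response did not contain choices[0].message.content"
        ) from exc


# Hand-written bodies used only to demonstrate the behavior
good = {"choices": [{"message": {"role": "assistant", "content": "Hello!"}}]}
print(extract_reply(200, good))

try:
    extract_reply(401, {})
except LlamaAPIError as err:
    print(err)
```

In a real script, pass response.status_code and the parsed body to extract_reply, and wrap the network call itself in a try-except for requests.exceptions.RequestException.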

Comparing OpenRouter and Together AI

Both platforms offer free access, but they differ:

- Model Availability: OpenRouter includes Llama 4 for free; Together AI limits free access to Llama 3.

- Ease of Use: Both provide straightforward APIs, but OpenRouter’s broader model selection stands out.

- Scalability: Together AI reserves Llama 4 and other advanced features for paid plans, while OpenRouter’s free tier covers more models.

Choose based on your project’s needs—Llama 4 access leans toward OpenRouter, while Together AI suits Llama 3 users.

Use Cases for the Llama API

The Llama API shines in various scenarios:

- Chatbots: Build conversational agents with natural responses.

- Content Creation: Generate articles, summaries, or captions.

- Multimodal Apps: Combine text and image processing with Llama 4 (via OpenRouter).

These applications showcase the API’s versatility, all achievable for free with the right setup.
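For the chatbot case, the main extra work is keeping track of the conversation so each request carries earlier turns. The class below is a minimal sketch using the same messages format as the OpenRouter example. Conversation, its methods, and the 20-message default are illustrative choices.

```python
from typing import Dict, List, Optional


class Conversation:
    """Keep chat history and build payloads in the messages format."""

    def __init__(
        self,
        model: str,
        system_prompt: Optional[str] = None,
        max_messages: int = 20,
    ) -> None:
        if max_messages < 1:
            raise ValueError("max_messages must be at least 1")
        self.model = model
        self.system_prompt = system_prompt
        self.max_messages = max_messages
        self._messages: List[Dict[str, str]] = []

    def add_user(self, text: str) -> None:
        self._messages.append({"role": "user", "content": text})

    def add_assistant(self, text: str) -> None:
        self._messages.append({"role": "assistant", "content": text})

    def to_payload(self, max_tokens: int = 50) -> dict:
        """Build a request payload from the most recent messages."""
        messages: List[Dict[str, str]] = []
        if self.system_prompt:
            messages.append({"role": "system", "content": self.system_prompt})
        # Drop the oldest turns so the request stays small
        messages.extend(self._messages[-self.max_messages:])
        return {
            "model": self.model,
            "messages": messages,
            "max_tokens": max_tokens,
        }


chat = Conversation(
    "meta-llama/llama-4-scout:free", system_prompt="Be brief.", max_messages=2
)
chat.add_user("What is a llama?")
chat.add_assistant("A South American camelid.")
chat.add_user("Where do they live?")
print(chat.to_payload()["messages"])
```

After each API call, pass the model's reply to add_assistant so the next request includes it.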

Conclusion

In this guide, we’ve demonstrated how to use the Llama API for free through OpenRouter and Together AI. OpenRouter grants access to Llama 4 models, while Together AI offers Llama 3 on its free tier. With detailed instructions and code snippets, you’re now equipped to integrate these models into your projects. Start experimenting today, and don’t forget to leverage tools like Apidog to enhance your workflow. The power of Llama is yours—use it wisely!