Have you ever found managing your Kubernetes (K8s) deployments to be a challenging task? With the help of AI, you can efficiently manage containers while maintaining control and security. This is where the Kubernetes MCP Server (K8s MCP) comes into play.

This tutorial will guide you through understanding and using a K8s MCP server, a server that implements the Model Context Protocol (MCP) for interacting with your Kubernetes clusters. This unlocks exciting possibilities for automating tasks, gaining insights, and even using AI agents to manage your deployments. Let's dive into the world of intelligent cloud management!

What is a Kubernetes MCP Server (K8s MCP)?

A Kubernetes MCP Server is a bridge between your AI-powered tools and your Kubernetes cluster. It implements the Model Context Protocol (MCP) to provide a secure and structured way for AI to interact with your deployments.

Think of it this way: Kubernetes is the operating system for your cloud, and the K8s MCP is the translator that allows AI to understand and manage that operating system.

Instead of directly exposing your cluster to potentially untrusted AI models, the K8s MCP server acts as a gatekeeper, providing a controlled interface that allows AI to:

- List available resources in your cluster (pods, deployments, services, etc.).

- Get detailed information about specific resources.

- Execute commands to manage your deployments (scale, update, restart, etc.).

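All of these actions run with the credentials of your current kubectl context. The cluster's RBAC rules therefore still decide what is allowed, and the API server's audit logging (if it is enabled on your cluster) records what was done.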
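To make the gatekeeper idea concrete, here is a minimal Python sketch of the pattern. Only a fixed allowlist of tool names is exposed, each tool is translated into a kubectl argument list, and every call is logged. The tool names and the `build_kubectl_args` helper are hypothetical and do not reflect the actual tools in mcp-server-kubernetes. The kubectl commands themselves are standard.

```python
import logging
from typing import Callable, Dict, List

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("k8s-mcp-audit")

# Hypothetical allowlist: each exposed tool name maps to a function that
# turns the tool's arguments into a kubectl argument list.
ALLOWED_TOOLS: Dict[str, Callable[[dict], List[str]]] = {
    "list_pods": lambda a: [
        "kubectl", "get", "pods", "-n", a.get("namespace", "default"), "-o", "json",
    ],
    "describe_deployment": lambda a: [
        "kubectl", "describe", "deployment", a["name"], "-n", a.get("namespace", "default"),
    ],
    "scale_deployment": lambda a: [
        "kubectl", "scale", f"deployment/{a['name']}",
        f"--replicas={int(a['replicas'])}", "-n", a.get("namespace", "default"),
    ],
}


def build_kubectl_args(tool: str, args: dict) -> List[str]:
    """Translate a tool call into a kubectl argv, refusing anything not allowlisted."""
    if tool not in ALLOWED_TOOLS:
        audit_log.warning("rejected tool=%s args=%s", tool, args)
        raise PermissionError(f"tool {tool!r} is not exposed by this server")
    argv = ALLOWED_TOOLS[tool](args)
    audit_log.info("allowed tool=%s argv=%s", tool, argv)
    return argv
```

For example, `build_kubectl_args("scale_deployment", {"name": "web", "replicas": 3})` yields `["kubectl", "scale", "deployment/web", "--replicas=3", "-n", "default"]`. Because the result is an argument list rather than a shell string, it can be passed to `subprocess.run` without any shell interpretation. This layer only narrows what the AI can ask for. Kubernetes RBAC on your kubeconfig credentials still decides what actually succeeds.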

Kubernetes MCP Server Prerequisites

Before we get started, let's make sure you have everything you need:

1. A Kubernetes Cluster: You'll need access to a running Kubernetes cluster. This could be a local cluster (using Minikube or Rancher Desktop), a cloud-based cluster (on AWS, Azure, or Google Cloud), or any other Kubernetes environment.

2. kubectl Installed and Configured: The kubectl command-line tool is essential for interacting with your Kubernetes cluster.

- Make sure you have kubectl installed and in your PATH (your operating system's list of directories where executable programs are searched for).

- You must have a valid kubeconfig file with at least one context configured. This file typically lives in ~/.kube/config.

- Verify that kubectl can connect to your cluster without any credential errors. Run kubectl get pods in a standard terminal. If this command fails, the K8s MCP server will also fail to connect.

3. Node.js and Bun: This specific K8s MCP implementation runs on Node.js and uses Bun, a JavaScript runtime and package manager, for its development workflow. Make sure you have both installed.

4. Helm v3 (Optional): Helm v3 is only needed if you plan to use the server's Helm features. If you do, install it and make sure it is in your PATH. You can download it from the official Helm website.
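You can check these prerequisites in one pass before moving on. The following Python script is a minimal sketch and is not part of the project. It does three things:

- Looks for `kubectl`, `node`, `bun`, and (optionally) `helm` on your PATH.
- Prints the context kubectl will use, via `kubectl config current-context`.
- Runs `kubectl get pods` to confirm that your credentials work.

The function names are illustrative.

```python
import shutil
import subprocess
from typing import Callable, Dict, List, Optional

REQUIRED = ["kubectl", "node", "bun"]
OPTIONAL = ["helm"]  # only needed for Helm features


def missing_tools(which: Callable[[str], Optional[str]] = shutil.which) -> Dict[str, List[str]]:
    """Return the required and optional tools that are not found on PATH."""
    return {
        "required": [tool for tool in REQUIRED if which(tool) is None],
        "optional": [tool for tool in OPTIONAL if which(tool) is None],
    }


def current_context(run=subprocess.run) -> Optional[str]:
    """Return the kubeconfig context kubectl (and therefore the server) will use."""
    result = run(["kubectl", "config", "current-context"], capture_output=True, text=True)
    return result.stdout.strip() if result.returncode == 0 else None


def cluster_reachable(run=subprocess.run) -> bool:
    """Run `kubectl get pods` and report whether it exited successfully."""
    try:
        result = run(["kubectl", "get", "pods"], capture_output=True, text=True, timeout=30)
    except subprocess.TimeoutExpired:
        return False
    return result.returncode == 0


def main() -> None:
    """Print a short prerequisite report; stops early if a required tool is missing."""
    report = missing_tools()
    if report["required"]:
        print("Missing required tools:", ", ".join(report["required"]))
        return
    if report["optional"]:
        print("Optional tools not found:", ", ".join(report["optional"]))
    print("Current context:", current_context())
    print("kubectl get pods succeeded:", cluster_reachable())
```

To use it, save the script under any name (for example `preflight.py`) and run `python -c "import preflight; preflight.main()"`. If the reported context is not the cluster you want the server to manage, switch it with `kubectl config use-context <name>`.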

Installation and Configuration: Setting Up Your K8s MCP Server

1. Clone the Repository:

Open your terminal and run the following command:

```sh
git clone https://github.com/Flux159/mcp-server-kubernetes.git
cd mcp-server-kubernetes
```

This will download the code from GitHub and move you into the project directory.

2. Install Dependencies with Bun:

This project utilizes Bun, a fast JavaScript runtime, as its package manager. Make sure you have Bun installed, and then run:

```sh
bun install
```

This command reads the package.json file and installs all the necessary dependencies for the project.

3. Run the K8s MCP Server:

```sh
bun run dev # for development and file watching
```

This will start the K8s MCP server. It automatically connects to the kubectl context you currently have configured, so make sure your connection to the K8s cluster is working. Otherwise, the server will have connection issues.

Important Note: Because the server connects to your current kubectl context automatically, make sure Helm is properly configured for that same context if you plan to work with Helm charts.

4. Local Testing with MCP Inspector:

For quick local testing of the K8s MCP server, use the Model Context Protocol Inspector (@modelcontextprotocol/inspector). This tool helps you visualize and interact with the MCP server's capabilities. The command below runs the compiled server from dist/index.js, which is generated by the project's build step (bun run build):

```sh
npx @modelcontextprotocol/inspector node dist/index.js
```

Follow the on-screen instructions in the terminal to open the Inspector link in your browser. From there you can explore the available MCP resources and test commands.
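Under the hood, the Inspector (like Claude Desktop) launches the server as a child process. It talks to the server over stdin/stdout using JSON-RPC 2.0 messages, one JSON object per line, as the MCP specification's stdio transport describes. The Python sketch below performs that handshake: an `initialize` request, then the `notifications/initialized` notification, then a `tools/list` request. Some caveats:

- The protocol version string is one published MCP revision, and the server may negotiate a different one.
- The helper names are illustrative.
- For anything beyond experimentation, the official MCP SDKs are a better choice.

```python
import json
import subprocess
from typing import Callable, List

PROTOCOL_VERSION = "2024-11-05"  # a published MCP revision; servers may answer with another


def encode(message: dict) -> bytes:
    """Serialize one JSON-RPC message as a single newline-terminated line."""
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def handshake_messages(client_name: str = "tutorial-client") -> List[dict]:
    """The initialize request, the initialized notification, and a tools/list request."""
    return [
        {"jsonrpc": "2.0", "id": 1, "method": "initialize",
         "params": {"protocolVersion": PROTOCOL_VERSION, "capabilities": {},
                    "clientInfo": {"name": client_name, "version": "0.1.0"}}},
        {"jsonrpc": "2.0", "method": "notifications/initialized"},  # notifications carry no id
        {"jsonrpc": "2.0", "id": 2, "method": "tools/list"},
    ]


def read_response(readline: Callable[[], bytes], want_id: int) -> dict:
    """Read lines until the response with the given id arrives, skipping notifications."""
    while True:
        line = readline()
        if not line:
            raise RuntimeError("server closed its stdout")
        if not line.strip():
            continue
        message = json.loads(line)
        if message.get("id") == want_id:
            if "error" in message:
                raise RuntimeError(message["error"].get("message", "unknown error"))
            return message


def list_tools(server_cmd: List[str]) -> List[str]:
    """Start the server, do the handshake, and return the first page of tool names."""
    proc = subprocess.Popen(server_cmd, stdin=subprocess.PIPE, stdout=subprocess.PIPE)
    try:
        init, initialized, tools_request = handshake_messages()
        proc.stdin.write(encode(init))
        proc.stdin.flush()
        read_response(proc.stdout.readline, 1)
        proc.stdin.write(encode(initialized) + encode(tools_request))
        proc.stdin.flush()
        reply = read_response(proc.stdout.readline, 2)
        return [tool["name"] for tool in reply["result"]["tools"]]
    finally:
        proc.terminate()
        proc.wait()
```

For example, `list_tools(["node", "/path/to/your/mcp-server-kubernetes/dist/index.js"])` should return the same tool names the Inspector shows.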

Local Testing K8s MCP Server with Claude Desktop

To integrate this K8s MCP with Claude Desktop (or another AI tool), you'll need to configure Claude to communicate with the server.

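1. Locate Claude Desktop Configuration:

Open Claude Desktop's settings and go to the Developer section, where "Edit Config" opens the "claude_desktop_config.json" file. On macOS the file is located at ~/Library/Application Support/Claude/claude_desktop_config.json, and on Windows at %APPDATA%\Claude\claude_desktop_config.json.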

2. Add the MCP Server Configuration:

Add a new entry to the "mcpServers" section of the "claude_desktop_config.json" file. The key ("k8s-mcp" below) is only a label, so you can choose any descriptive name:

```json
{
  "mcpServers": {
    "k8s-mcp": {
      "command": "node",
      "args": ["/path/to/your/mcp-server-kubernetes/dist/index.js"]
    }
  }
}
```

Important: Replace "/path/to/your/mcp-server-kubernetes/dist/index.js" with the actual absolute path to the "dist/index.js" file in your cloned repository.

3. Test with Claude Desktop:

Restart Claude Desktop. You should now be able to interact with your Kubernetes cluster through Claude using natural language commands. Start by asking Claude to list your pods or create a test deployment to confirm that the connection to the server works. If these requests succeed, the integration is set up correctly. What else Claude can do is still limited by the permissions of your kubectl context.
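If you would rather not hand-edit the JSON, the following Python sketch can make the change for you. It is a hypothetical helper, not part of the project. It adds or updates the entry, keeps any other MCP servers that are already configured, and writes the absolute path to dist/index.js. Pass it the location of the claude_desktop_config.json file you located earlier and the path to your built dist/index.js. Consider backing up the file first.

```python
import json
from pathlib import Path


def add_k8s_server(config_path: Path, server_js: Path, name: str = "k8s-mcp") -> dict:
    """Add or replace one mcpServers entry, preserving everything else in the file."""
    text = config_path.read_text(encoding="utf-8") if config_path.exists() else ""
    config = json.loads(text) if text.strip() else {}
    servers = config.setdefault("mcpServers", {})
    # Claude Desktop launches this command itself, so the path must be absolute.
    servers[name] = {"command": "node", "args": [str(server_js.expanduser().resolve())]}
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config
```

On macOS, for example, you could call `add_k8s_server(Path("~/Library/Application Support/Claude/claude_desktop_config.json").expanduser(), Path("mcp-server-kubernetes/dist/index.js"))` from the directory that contains your clone, then restart Claude Desktop.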

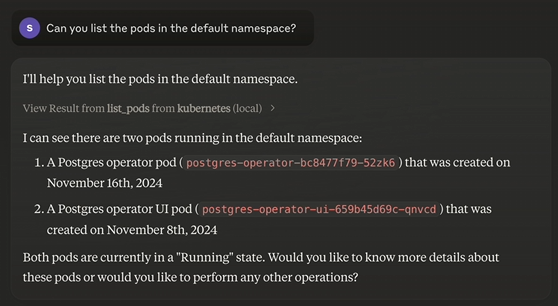

Example 1: "Can you list the pods in the default namespace"

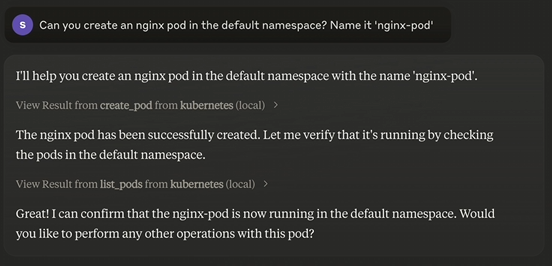

Example 2: "Can you create an nginx pod in the default namespace and name it nginx-pod"

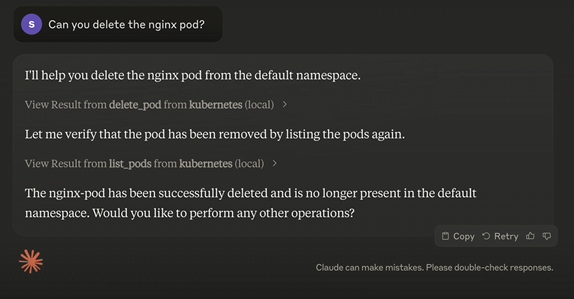

Example 3: "Can you delete the nginx pod"
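Behind each of these prompts, Claude picks one of the tools the server advertised in its `tools/list` response and sends a JSON-RPC `tools/call` request. The server replies with a list of content items, typically text. The Python sketch below builds such a request and extracts the text from a reply. The tool names and argument shapes in `EXAMPLE_CALLS` (`list_pods`, `create_pod`, `delete_pod`) are hypothetical placeholders. The real names depend on the version of mcp-server-kubernetes you run, so check them with the MCP Inspector.

```python
from itertools import count
from typing import Any, Dict

_request_ids = count(start=3)  # ids 1 and 2 are taken by initialize and tools/list

# Hypothetical mapping from the example prompts to tool calls; confirm the
# real tool names and argument schemas with tools/list before using them.
EXAMPLE_CALLS = {
    "list pods": ("list_pods", {"namespace": "default"}),
    "create nginx pod": ("create_pod", {"name": "nginx-pod", "namespace": "default", "image": "nginx"}),
    "delete nginx pod": ("delete_pod", {"name": "nginx-pod", "namespace": "default"}),
}


def tool_call(name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Build a JSON-RPC tools/call request for the given tool."""
    return {"jsonrpc": "2.0", "id": next(_request_ids), "method": "tools/call",
            "params": {"name": name, "arguments": arguments}}


def result_text(response: Dict[str, Any]) -> str:
    """Join the text items of a tools/call result, raising if the call failed."""
    if "error" in response:  # protocol-level error, e.g. an unknown tool name
        raise RuntimeError(response["error"].get("message", "unknown error"))
    result = response["result"]
    text = "\n".join(item["text"] for item in result.get("content", []) if item.get("type") == "text")
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError(text)
    return text
```

In this framing, the model's job is only to choose the tool and fill in the arguments. The server decides how that call turns into Kubernetes API operations.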

Security Considerations: Protecting Your Cluster

Security is paramount when integrating AI with your Kubernetes cluster.

- Verify Functionality and Scope: Before trusting the AI to manage critical aspects of your cluster, carefully verify what actions it's actually capable of performing. Understand the permissions associated with your kubectl context or Service Account.
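One way to do that check is `kubectl auth can-i`, which asks the API server whether the current credentials may perform a given verb on a resource. `kubectl auth can-i --list` prints everything at once. The Python sketch below runs a handful of such checks against the context the server will use. The verb/resource pairs in `CHECKS` are only examples, so adjust them to the operations you care about.

```python
import subprocess
from typing import Dict, Iterable, Tuple

# Example checks; extend with the operations you want to audit.
CHECKS: Tuple[Tuple[str, str], ...] = (
    ("get", "pods"),
    ("create", "pods"),
    ("delete", "pods"),
    ("patch", "deployments"),
    ("get", "secrets"),
)


def can_i(verb: str, resource: str, namespace: str = "default", run=subprocess.run) -> bool:
    """Ask the API server whether the current context may perform verb on resource."""
    # --quiet suppresses the yes/no output; the exit code is 0 only when allowed.
    # Connection errors also produce a non-zero exit, so they count as "not allowed".
    result = run(["kubectl", "auth", "can-i", verb, resource, "-n", namespace, "--quiet"])
    return result.returncode == 0


def permission_report(checks: Iterable[Tuple[str, str]] = CHECKS,
                      namespace: str = "default", run=subprocess.run) -> Dict[str, bool]:
    """Map 'verb resource' to whether it is allowed in the given namespace."""
    return {f"{verb} {resource}": can_i(verb, resource, namespace, run) for verb, resource in checks}
```

If the report shows permissions you did not intend the AI to have, such as `delete pods` or `get secrets`, consider pointing the server at a context that uses a more restricted Service Account.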

Conclusion

You've now taken the first steps towards exploring the possibilities of using AI to interact with your Kubernetes cluster! By setting up a K8s MCP server, you've opened the door for AI tools to potentially assist with management and provide insights. Remember to focus on security and carefully validate the functionality before relying on the AI for critical tasks.